I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

Opening an issue or submitting a pull request on GitHub: https://github.com/isaactpetersen/Principles-Psychological-Assessment

Chapter 10 Clinical Judgment Versus Algorithmic Prediction

10.1 Approaches to Prediction

There are two primary approaches to prediction: human (i.e., clinical) judgment and the actuarial (i.e., statistical) method.

10.1.1 Human/Clinical Judgment

Using the clinical judgment method of prediction, all gathered information is collected and formulated into a diagnosis or prediction in the clinician’s mind. The clinician selects, measures, and combines risk factors and produces a risk estimate solely according to clinical experience and judgment.

10.1.2 Actuarial/Statistical Method

In the actuarial or statistical method of prediction (i.e., statistical prediction rules), information is gathered and combined systematically in an evidence-based statistical prediction formula, and established cutoffs are used. The method is based on equations and data, so both are needed.

An example of a statistical method of prediction is the Violence Risk Appraisal Guide (Rice et al., 2013). The Violence Risk Appraisal Guide is used in an attempt to predict violence and is used for parole decisions. For instance, the equation might be something like Equation (10.1):

\[\begin{equation} \scriptsize \text{Violence risk} = \beta_1 \cdot \text{conduct disorder} + \beta_2 \cdot \text{substance use} + \beta_3 \cdot \text{suspended from school} + \beta_4 \cdot \text{childhood aggression} + ... \tag{10.1} \end{equation}\]

Then, based on their score and the established cutoffs, a person is given a “low risk”, “medium risk”, or “high risk” designation.
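As a minimal sketch of how a formula and its cutoffs turn risk factors into a designation, the R code below computes a weighted sum and bins it into categories. The weights, cutoffs, and variable names are hypothetical and chosen only for illustration; they are not the actual items or weights of the Violence Risk Appraisal Guide.

```r
# Hypothetical weights for illustration only (not the actual instrument's weights)
weights <- c(
  conductDisorder = 2,
  substanceUse = 1,
  suspendedFromSchool = 1,
  childhoodAggression = 3
)

# Hypothetical cutoffs: below 2 = low risk; 2 to below 5 = medium risk; 5 or above = high risk
cutoffs <- c(-Inf, 2, 5, Inf)
riskLabels <- c("low risk", "medium risk", "high risk")

# Risk factors for three hypothetical people (1 = present, 0 = absent)
riskFactors <- rbind(
  personA = c(0, 1, 0, 0),
  personB = c(1, 1, 1, 0),
  personC = c(1, 1, 1, 1)
)
colnames(riskFactors) <- names(weights)

# Weighted sum of the risk factors for each person
riskScore <- as.vector(riskFactors %*% weights)
names(riskScore) <- rownames(riskFactors)

# Apply the cutoffs to assign a designation
riskCategory <- cut(riskScore, breaks = cutoffs, labels = riskLabels, right = FALSE)

data.frame(riskScore, riskCategory)
```

Because the weights and cutoffs are fixed in advance, the same set of risk factors always receives the same designation.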

10.1.3 Combining Human Judgment and Statistical Algorithms

There are numerous ways in which humans and statistical algorithms could be involved. On one extreme, humans make all judgments (consistent with the clinical judgment approach). On the other extreme, although humans may be involved in data collection, a statistical formula makes all decisions based on the input data, consistent with an actuarial approach. However, the clinical judgment and actuarial approaches can be combined in a hybrid way (J. Dana & Thomas, 2006). For example, to save time and money, a clinician might use an actuarial approach in all cases, but might only use a clinical approach when the actuarial approach gives a “positive” test. Or, the clinician might use both human judgment and an actuarial approach independently to see whether they agree. That is, the clinician may make a prediction based on their judgment and might also generate a prediction from an actuarial approach.

The challenge is what to do when the human and the algorithm disagree. Hypothetically, humans reviewing and adjusting the results from the statistical algorithm could lead to more accurate predictions. However, human input could also introduce or exacerbate bias in the predictions. In general, with very few exceptions, actuarial approaches are as accurate as or more accurate than clinical judgment (Ægisdóttir et al., 2006; Baird & Wagner, 2000; Dawes et al., 1989; Grove et al., 2000; Grove & Meehl, 1996). Moreover, the superiority of actuarial approaches to clinical judgment tends to hold even when the clinician is given more information than the actuarial approach (Dawes et al., 1989). In addition, actuarial predictions outperform human judgment even when the human is given the result of the actuarial prediction (Kahneman, 2011). Allowing clinicians to override actuarial predictions consistently leads to lower predictive accuracy (Garb & Wood, 2019).

In general, validity tends to increase with greater structure (e.g., structured or semi-structured interviews as opposed to free-flowing, unstructured interviews), in terms of administration, responses, scoring, interpretation, etc. Unstructured interviews are susceptible to confirmatory bias, such as exploring only the diagnoses that confirm the clinician’s hypotheses rather than attempting to explore diagnoses that disconfirm the clinician’s hypotheses, which leads to fewer diagnoses. Unstructured interviews are also susceptible to bias relating to race, gender, class, etc. (Garb, 1997, 2005).

There is sometimes a misconception that formulas cannot account for qualitative information. However, that is not true. Qualitative information can be scored or coded to be quantified so that it can be included in statistical formulas. That said, the quality of predictions rests on the quality and relevance of the assessment information for the particular prediction/judgment decision. If the assessment data are lousy, it is unlikely that a statistical algorithm (or a human for that matter) will make an accurate prediction: “Garbage in, garbage out”. A statistical formula cannot rescue inaccurate assessment data.

10.2 Errors in Clinical Judgment

Clinical judgment is naturally subject to errors. Below, I describe a few errors to which clinical judgment seems particularly prone.

When operating freely, clinicians tend to over-estimate exceptions to the established rules (i.e., the broken leg syndrome). Meehl (1957) acknowledged that there may be some situations where it is glaringly obvious that the statistical formula would be incorrect because it fails to account for an important factor. He called these special cases “broken leg” cases, in which the human should deviate from the formula (i.e., broken leg countervailing). The example goes like this:

“If a sociologist were predicting whether Professor X would go to the movies on a certain night, he might have an equation involving age, academic specialty, and introversion score. The equation might yield a probability of .90 that Professor X goes to the movie tonight. But if the family doctor announced that Professor X had just broken his leg, no sensible sociologist would stick with the equation. Why didn’t the factor of ‘broken leg’ appear in the formula? Because broken legs are very rare, and in the sociologist’s entire sample of 500 criterion cases plus 250 cross-validating cases, he did not come upon a single instance of it. He uses the broken leg datum confidently, because ‘broken leg’ is a subclass of a larger class we may crudely denote as ‘relatively immobilizing illness or injury,’ and movie-attending is a subclass of a larger class of ‘actions requiring moderate mobility.’” (Meehl, 1957, pp. 269–270)

However, people too often think that cases where they disagree with the statistical algorithm are broken leg cases. People too often think their case is an exception to the rule. As a result, they too often change the result of the statistical algorithm and are more likely to be wrong than right in doing so. Because actuarial methods are based on actual population levels (i.e., base rates), unique exceptions are not over-estimated.

Actuarial predictions are perfectly reliable: they will always return the same conclusion given an identical set of data. By contrast, human judges are likely to disagree both with others and with themselves when given the same set of symptoms.

A clinician’s decision is likely to be influenced by past experiences, and given the sample of humanity that the clinician is exposed to, the clinician is likely (based on prior experience) to over-estimate the likelihood of occurrence of infrequent phenomena. Actuarial methods are based on objective algorithms, and past personal experience and personal biases do not factor into any decisions. Clinicians give weight to less relevant information, and often give too much weight to singular variables (e.g., Graduate Record Examination scores). Actuarial formulas do a better job of focusing on relevant variables. Computers are good at factoring in base rates, inverse conditional probabilities, etc. Humans ignore base rates (base rate neglect), and tend to show confusion of the inverse.

Computers are better at accurately weighing risk factors and calculating unbiased risk estimates. In an actuarial formula, the relevant risk factors are weighted according to their predictive power. This stands in contrast to the Diagnostic and Statistical Manual of Mental Disorders (DSM), in which each symptom is equally weighted.

Humans are typically given no feedback on their judgments. To improve accuracy of judgments, it is important for feedback to be clear, consistent, and timely. Feedback in clinical psychology is especially unlikely to be timely because the outcomes of predictions often take a long time to unfold. The feedback that clinicians receive regarding their clients’ long-term outcomes tends to be sparse, delayed, or nonexistent, and too ambiguous to support learning from experience (Kahneman, 2011). Moreover, it is important to distinguish short-term anticipation in a therapy session (e.g., what the client will say next) from long-term predictions. Although clinicians may have strong intuitions dealing with short-term anticipation in therapy sessions, their long-term predictions are not accurate (Kahneman, 2011).

Clinicians are susceptible to representative schema biases (Dawes, 1986). Clinicians are exposed to a skewed sample of humanity, and they make judgments based on a prototype from their (biased) experiences. This is known as the representativeness heuristic. Different clinicians may have different prototypes, leading to lower inter-rater reliability.

Intuition is a form of recognition-based judgment (i.e., recognizing cues that provide access to information in memory). Development of strong intuition depends on the quality and speed of feedback, in addition to having adequate opportunities to practice [i.e., sufficient opportunities to learn the cues; Kahneman (2011)]. The quality and speed of the feedback tend to benefit anesthesiologists who often quickly learn the results of their actions. By contrast, radiologists tend not to receive quality feedback about the accuracy of their diagnoses, including their false-positive and false-negative decisions (Kahneman, 2011).

In general, many so-called experts are “pseudo-experts” who do not know the boundaries of their competence—that is, they do not know what they do not know; they have the illusion of validity of their predictions and are overconfident about their predictions (Kahneman, 2011). Yet, many people arrogantly proclaim to have predictive powers, including in low-validity environments such as clinical psychology. True experts know their limits in terms of knowledge and ability to predict.

Intuitions tend to be skilled when a) the environment is regular and predictable, and b) there is opportunity to learn the regularities, cues, and contingencies through extensive practice (Kahneman, 2011). Example domains that meet these conditions supporting intuition include activities such as chess, bridge, and poker, and occupations such as medical providers, athletes, and firefighters. By contrast, clinical psychology and other domains such as stock-picking and other long-term forecasts are low-validity environments that are irregular and unpredictable. In environments that do not have stable regularities, intuition cannot be trusted (Kahneman, 2011).

10.3 Humans Versus Computers

10.3.1 Advantages of Computers

Here are some advantages of computers over humans, including “experts”:

- Computers can process lots of information simultaneously. So can humans, but computers can do so to an even greater degree.

- Computers are faster at making calculations.

- Given the same input, a formula will give the exact same result every time. Humans’ judgment tends to be inconsistent both across raters and within the same rater across time, when trying to make judgments or predictions from complex information (Kahneman, 2011). As noted in Section 5.5, reliability sets the upper bound for validity, so unreliable judgments cannot be valid.

- Computations by computers are error-free (as long as the computations are programmed correctly).

- Computers’ judgments will not be biased by fatigue or emotional responses.

- Computers’ judgments will tend not to be biased in the way that humans’ cognitive biases are, such as with anchoring bias, representativeness bias, confirmation bias, or recency bias. Computers are less likely to be over-confident in their judgments.

- Computers can more accurately weight the set of predictors based on large data sets. Humans tend to give too much weight to singular predictors. Experts may attempt to be clever and to consider complex combinations of predictors, but doing so often reduces validity (Kahneman, 2011). Simple combinations of predictors often outperform more complex combinations (Kahneman, 2011).

10.3.2 Advantages of Humans

Computers are bad at some things too. Here are some advantages of humans over computers (as of now):

- Humans can be better at identifying patterns in data (but also can mistakenly identify patterns where there are none).

- Humans can be flexible and take a different approach if a given approach is not working.

- Humans are better at tasks requiring creativity and imagination, such as developing theories that explain phenomena.

- Humans have the ability to reason, which is especially important when dealing with complex, abstract, or open-ended problems, or problems that have not been faced before (or for which we have insufficient data).

- Humans are better able to learn.

- Humans are better at holistic, gestalt processing, including facial and linguistic processing.

There may be situations in which a clinical judgment would do better than an actuarial judgment. One situation where clinical judgment would be important is when no actuarial method exists for the judgment or prediction. For instance, when no actuarial method exists for the diagnosis or disorder (e.g., suicide), it is up to the clinical judge. However, we could collect data on the outcomes or on clinicians’ judgments to develop an actuarial method that will be more reliable than the clinicians’ judgments. That is, an actuarial method developed based on clinicians’ judgments will be more accurate than clinicians’ judgments. In other words, we do not necessarily need clients’ outcome data to develop an actuarial method. We could use the client’s data as predictors of the clinicians’ judgments to develop a structured approach to prediction that weighs factors similarly to clinicians, but with more reliable predictions.
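The idea of building an actuarial method from clinicians’ judgments can be illustrated with a small simulation. This is a sketch under assumed conditions, not an analysis of real data: the simulated clinician weighs two hypothetical cues (`cue1` and `cue2`) sensibly but applies those weights inconsistently from case to case. A regression that predicts the clinician’s judgments from the cues captures the clinician’s weighting policy without the inconsistency.

```r
set.seed(52242)

n <- 5000

# Two hypothetical cues (e.g., scored assessment findings)
cue1 <- rnorm(n)
cue2 <- rnorm(n)

# Simulated client outcome: depends on the cues plus unpredictable influences
outcome <- 0.5 * cue1 + 0.3 * cue2 + rnorm(n)

# Simulated clinician: uses reasonable weights but applies them inconsistently
clinicianJudgment <- 0.4 * cue1 + 0.4 * cue2 + rnorm(n, sd = 0.8)

# Model of the clinician: predict the judgments (not the outcomes) from the cues
modelOfClinician <- lm(clinicianJudgment ~ cue1 + cue2)
modelPrediction <- fitted(modelOfClinician)

# Validity of the clinician versus the model of the clinician
validityClinician <- cor(clinicianJudgment, outcome)
validityModel <- cor(modelPrediction, outcome)

c(clinician = validityClinician, modelOfClinician = validityModel)
```

In this simulation, no outcome data are used to fit the model; any advantage of the model over the clinician comes from removing the inconsistency with which the weights are applied.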

Another situation in which clinical judgment could outperform a statistical algorithm is in true “broken leg” cases, e.g., important and rare events (edge cases) that are not yet accounted for by the algorithm.

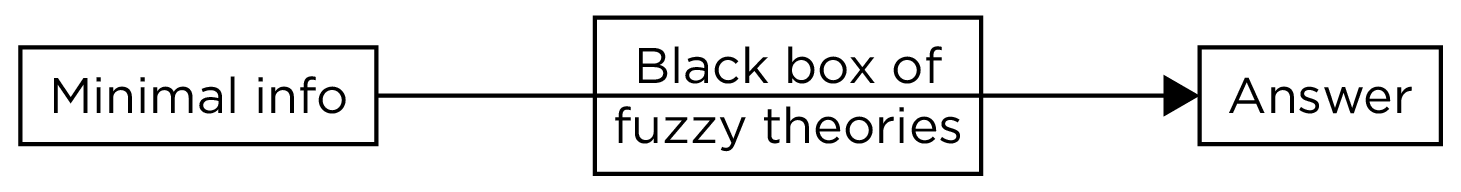

Another situation in which clinical judgment could be preferable is if advanced, complex theories exist. Computers have a difficult time adhering to complex theories, so clinicians may be better suited. However, we do not have any of these complex theories in psychology that are accurate. We would need strong theory informed by data regarding causal influences, and accurate measures to assess them. If theories alone were true and could explain everything, the psychoanalytic tradition would give us all the answers, as depicted in Figure 10.1. However, no theories in psychology are that good. Nevertheless, predictive accuracy can be improved when considering theory (Garb & Wood, 2019; Silver, 2012).

Figure 10.1: Conceptual Depiction of the Psychoanalytic Tradition.

If the diagnosis/prediction requires complex configural relations that a computer will have a difficult time replicating, a clinician’s judgment may be preferred. Although it is theoretically possible that the clinician can accurately work through these complex relations, it is highly unlikely. Humans tend to be better than computers at holistic pattern recognition (such as recognizing language and faces). But computers are getting better at holistic pattern recognition through machine learning. An example of a simple decision (as opposed to a complex decision) would be whether to provide treatment A (e.g., cognitive behavior therapy) or treatment B (e.g., medication) for someone who shows melancholy.

In sum, the clinician seeks to integrate all information to make a decision, but is biased.

10.3.3 Comparison of Evidence

Hundreds of studies have examined clinical versus actuarial prediction methods across many disciplines. Findings consistently show that actuarial methods are as accurate as or more accurate than clinical prediction methods (Ægisdóttir et al., 2006; Baird & Wagner, 2000; Dawes et al., 1989; Grove et al., 2000; Grove & Meehl, 1996). “There is no controversy in social science that shows such a large body of qualitatively diverse studies coming out so uniformly…as this one” (Meehl, 1986, pp. 373–374). In general, actuarial methods tend to be about 10% more accurate than clinical judgment (Grove et al., 2000). Thus, actuarial methods have incremental validity over clinical judgment. As noted earlier, the superiority of actuarial approaches to clinical judgment tends to hold even when the clinician is given more information than the actuarial approach (Dawes et al., 1989). In addition, actuarial predictions outperform human judgment even when the human is given the result of the actuarial prediction (Kahneman, 2011). Allowing clinicians to override actuarial predictions consistently leads to lower predictive accuracy (Garb & Wood, 2019).

Actuarial methods are particularly valuable for criterion-referenced assessment tasks, in which the aim is to predict specific events or outcomes (Garb & Wood, 2019). For instance, actuarial methods have shown promise in predicting violence, criminal recidivism, psychosis onset, course of mental disorders, treatment selection, treatment failure, suicide attempts, and suicide (Garb & Wood, 2019). Actuarial methods are especially important to use in low-validity environments (like clinical psychology) in which there is considerable uncertainty and unpredictability (Kahneman, 2011). By contrast, for norm-referenced assessment tasks, different approaches may be preferred over actuarial methods and statistical prediction rules. For instance, for norm-referenced assessment tasks such as describing personality and psychopathology (Garb & Wood, 2019), where the criterion is a latent construct (derived from the internal pattern of associations among the items) rather than a specific event or outcome, psychometric methods of scale construction such as factor analysis may be preferred (Garb & Wood, 2019)—i.e., the inductive approach to scale construction.

Another benefit of actuarial methods is that they are explicit; they can be transparent and lead to informed scientific criticism to improve them. By contrast, clinical judgment methods are not typically transparent; clinical judgment relies on mental processes that are often difficult to specify.

10.4 Accuracy of Different Statistical Models

It is important to evaluate the accuracy of different types of statistical models to provide guidance on which types of models may be most accurate for a given prediction problem. Simpler statistical formulas are more likely to generalize to new samples than complex statistical models due to model over-fitting. As described in Section 5.3.1.3.3.3, over-fitting occurs when the statistical model accounts for error variance (an overly specific prediction), which will not generalize to future samples.

Youngstrom et al. (2018) discussed the benefits of the probability nomogram. The probability nomogram combines the risk ratio with the base rate (according to Bayes’ theorem) to generate a prediction. The risk ratio (diagnostic likelihood ratio) is an index of the predictive validity of an instrument: it is the ratio of the probability that a test result is correct to the probability that the test result is incorrect. There are two types of diagnostic likelihood ratios: the positive likelihood ratio and the negative likelihood ratio. As described in Equation (9.61), the positive likelihood ratio is the probability that a person with the disease tested positive for the disease (true positive rate) divided by the probability that a person without the disease tested positive for the disease (false positive rate). As described in Equation (9.62), the negative likelihood ratio is the probability that a person with the disease tested negative for the disease (false negative rate) divided by the probability that a person without the disease tested negative for the disease (true negative rate). Using a probability nomogram, you start with the base rate, then plot the diagnostic likelihood ratio corresponding to a second source of information (e.g., positive test), then connect the base rate (pretest probability) to the posttest probability through the likelihood ratio. These approaches are described in greater detail in Section 12.5.
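In symbols, the calculation that the probability nomogram performs graphically can be written as follows. This is a standard restatement of Bayes’ theorem in odds form; the notation used here (LR+ and LR−) is introduced for illustration and may differ from the notation in Equations (9.61) and (9.62).

```latex
\begin{aligned}
\text{LR}+ &= \frac{\text{true positive rate}}{\text{false positive rate}} = \frac{\text{sensitivity}}{1 - \text{specificity}} \\
\text{LR}- &= \frac{\text{false negative rate}}{\text{true negative rate}} = \frac{1 - \text{sensitivity}}{\text{specificity}} \\
\text{pretest odds} &= \frac{\text{base rate}}{1 - \text{base rate}} \\
\text{posttest odds} &= \text{pretest odds} \times \text{likelihood ratio} \\
\text{posttest probability} &= \frac{\text{posttest odds}}{1 + \text{posttest odds}}
\end{aligned}
```

For example, with a hypothetical base rate of .10, sensitivity of .80, and specificity of .90, the positive likelihood ratio is .80/.10 = 8, the pretest odds are .10/.90 ≈ .11, the posttest odds after a positive test are ≈ .89, and the posttest probability is ≈ .47.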

Youngstrom et al. (2018) ordered actuarial approaches to diagnostic classification from less to more complex:

- Predicting from the base rate

- Take the best

- The probability nomogram

- Naïve Bayesian algorithms

- Logistic regression with one predictor

- Logistic regression with multiple predictors

- Least absolute shrinkage and selection operator (LASSO)

As described in Section 9.1.2.4, predicting from the base rate is selecting the most likely outcome in every case. “Take the best” refers to focusing on the single variable with the largest validity coefficient, and making a decision based on it (based on some threshold). The probability nomogram combines the base rate (prior probability) with the information offered by an assessment finding to revise the probability. Naïve Bayesian algorithms use the probability nomogram with multiple assessment findings. Basically, you continue to calculate a new posttest probability based on each assessment finding, revising the pretest probability for the next assessment finding based on the posttest probability of the last assessment finding. However, this approach assumes that all predictors are uncorrelated, which is probably not true in practice.
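The sequential updating described above can be sketched in a few lines of R. The base rate and likelihood ratios below are hypothetical values chosen for illustration, and the function names are not from any package.

```r
# Convert between probability and odds
probToOdds <- function(p) p / (1 - p)
oddsToProb <- function(odds) odds / (1 + odds)

# Update a pretest probability with one diagnostic likelihood ratio
posttestProbability <- function(pretestProb, likelihoodRatio) {
  oddsToProb(probToOdds(pretestProb) * likelihoodRatio)
}

# Hypothetical values for illustration
baseRate <- 0.10
likelihoodRatios <- c(finding1 = 8, finding2 = 2, finding3 = 0.5)

# Sequential updating: each posttest probability becomes the next pretest probability
currentProb <- baseRate
probabilityAfterEachFinding <- numeric(length(likelihoodRatios))
names(probabilityAfterEachFinding) <- names(likelihoodRatios)

for (i in seq_along(likelihoodRatios)) {
  currentProb <- posttestProbability(currentProb, likelihoodRatios[[i]])
  probabilityAfterEachFinding[i] <- currentProb
}

probabilityAfterEachFinding

# Equivalent shortcut: multiply the pretest odds by the product of the likelihood ratios
shortcutProb <- oddsToProb(probToOdds(baseRate) * prod(likelihoodRatios))
shortcutProb
```

Multiplying the likelihood ratios in this way is only justified under the assumption noted above, that the assessment findings do not provide overlapping information.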

Logistic regression is useful for classification problems because it deals with a dichotomous dependent variable, but one could extrapolate it to a continuous dependent variable with multiple regression. The effect size from logistic regression is equivalent to receiver operating characteristic (ROC) curve analysis. Logistic regression with multiple predictors combines multiple predictors into one regression model. Including multiple predictors in the same model optimizes the weights of multiple predictors, but it is important for predictors not to be collinear.

LASSO is a form of regression that can handle multiple predictors (like multiple regression). However, it can handle more predictors than multiple regression. For instance, LASSO can accommodate situations where there are more predictors than there are participants. Moreover, unlike multiple regression, it can handle collinear predictors. LASSO uses internal cross-validation to avoid over-fitting.

Youngstrom et al. (2018) found that model complexity improves accuracy but only to a point; some of the simpler models (Naïve Bayesian and Logistic regression) did just as well and in some cases better than LASSO models in classifying bipolar disorder. Although LASSO models showed the highest discrimination accuracy in the internal sample, they showed the largest shrinkage in the external sample. Moreover, the LASSO models showed poor calibration in the internal sample; they over-diagnosed bipolar disorder. By contrast, simpler models showed better calibration in the internal sample. The probability nomogram with one or multiple assessment findings showed better calibration than LASSO models because they accounted for the original base rate, so they did not over-diagnose bipolar disorder.

In summary, the best models are those that are relatively simple (parsimonious), that can account for one or several of the most important predictors and their optimal weightings, and that account for the base rate of the phenomenon. Multiple regression and/or prior literature can be used to identify the weights of various predictors. Even unit-weighted formulas (formulas whose predictors are equally weighted with a weight of one) can sometimes generalize better to other samples than complex weightings (Garb & Wood, 2019; Wainer, 1976). Differential weightings sometimes capture random variance and over-fit the model, thus leading to predictive accuracy shrinkage in cross-validation samples (Garb & Wood, 2019), as described below. The choice of predictive variables often matters more than their weighting.
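One way to explore the comparison between differential weights and unit weights is with a simulation such as the sketch below. All values are hypothetical: the true weights (0.4, 0.3, 0.2), the small training sample size (30), and the variable names are assumptions chosen for illustration. The results depend on these choices and on the random seed, so they should not be read as a general finding.

```r
set.seed(52242)

# Simulate three predictors and an outcome; all values are hypothetical
simulateData <- function(n) {
  x1 <- rnorm(n)
  x2 <- rnorm(n)
  x3 <- rnorm(n)
  y <- 0.4 * x1 + 0.3 * x2 + 0.2 * x3 + rnorm(n)
  data.frame(y, x1, x2, x3)
}

trainingData <- simulateData(30)   # small derivation sample
testData <- simulateData(10000)    # large cross-validation sample

# Differential weights estimated in the small training sample
regressionModel <- lm(y ~ x1 + x2 + x3, data = trainingData)
regressionPrediction <- predict(regressionModel, newdata = testData)

# Unit weights: standardize each predictor and sum them with a weight of one
unitPrediction <- rowSums(scale(testData[, c("x1", "x2", "x3")]))

# Predictive accuracy (validity coefficient) in the cross-validation sample
validity <- c(
  regressionWeights = cor(regressionPrediction, testData$y),
  unitWeights = cor(unitPrediction, testData$y)
)

validity
```

Rerunning the sketch with a larger training sample, or with true weights that differ more from one another, shows how the balance between the two weighting schemes can shift.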

In general, there is often shrinkage of estimates from a training data set to a test data set. Shrinkage is when variables with stronger predictive power in the original data set tend to show somewhat smaller predictive power (smaller validity coefficients) when applied to new groups. Shrinkage reflects model over-fitting (i.e., fitting to error by capitalizing on chance). Shrinkage is especially likely when the original sample is small and/or unrepresentative and the number of variables considered for inclusion is large. Cross-validation with large, representative samples can help evaluate the amount of shrinkage of estimates, particularly for more complex models such as machine learning models (Ursenbach et al., 2019). Ideally, cross-validation would be conducted with a separate sample (external cross-validation) to see the generalizability of estimates. However, you can also do internal cross-validation. For example, you can perform k-fold cross-validation, where you:

- split the data set into k groups

- for each unique group:

  - take the group as a hold-out data set (also called a test data set)

  - take the remaining groups as a training data set

  - fit a model on the training data set and evaluate it on the test data set

- after all k-folds have been used as the test data set, and all models have been fit, you average the estimates across the models, which presumably yields more robust, generalizable estimates
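The steps above can be sketched in base R as follows. The data are simulated, and the variable names, sample size, and choice of k = 5 are assumptions made for illustration.

```r
set.seed(52242)

# Simulated data for illustration; names and values are hypothetical
n <- 200
predictor <- rnorm(n)
outcome <- 0.5 * predictor + rnorm(n)
mydata <- data.frame(predictor, outcome)

k <- 5

# Split the data set into k groups by randomly assigning each row to a fold
fold <- sample(rep(1:k, length.out = n))

# For each fold: hold it out, fit the model on the rest, evaluate on the hold-out
rSquaredByFold <- sapply(1:k, function(i) {
  trainingData <- mydata[fold != i, ]
  testData <- mydata[fold == i, ]

  model <- lm(outcome ~ predictor, data = trainingData)
  predicted <- predict(model, newdata = testData)

  # Squared correlation between predicted and observed values in the hold-out fold
  cor(predicted, testData$outcome)^2
})

rSquaredByFold

# Average the estimates across the k folds
mean(rSquaredByFold)
```

The caret package automates this kind of resampling; for instance, `trainControl(method = "cv", number = 5)` requests 5-fold cross-validation when passed to `train()`.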

An emerging technique that holds promise for increasing predictive accuracy of actuarial methods is machine learning (Garb & Wood, 2019). However, one challenge of some machine learning techniques is that they are like a “black box” and are not transparent, which raises ethical issues (Garb & Wood, 2019). Machine learning may be most valuable when the data available are complex and there are many predictive variables (Garb & Wood, 2019).

10.5 Getting Started

10.5.1 Load Libraries

library("petersenlab") #to install: install.packages("remotes"); remotes::install_github("DevPsyLab/petersenlab")

library("pROC")

library("caret")

library("MASS")

library("randomForest")

library("e1071")

library("ranger")

library("ordinalForest")

library("elasticnet")

library("LiblineaR")

library("glmnet")

library("viridis")

library("MOTE")

library("tidyverse")

library("here")

library("tinytex")

library("rmarkdown")

10.5.2 Prepare Data

10.5.2.1 Load Data

aSAH is a data set from the pROC package (Robin et al., 2021) that contains test scores (s100b) and clinical outcomes (outcome) for patients.
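The data preparation code is not shown here. As a hedged sketch, the data could be loaded along the following lines. The object name `mydataActuarial` and the variable name `disorder` are taken from the model output later in this chapter; coding `disorder` as 1 for a “Poor” outcome and 0 for a “Good” outcome is an assumption. The ordinal variables that appear in later output (`ordinal` and `ordinalFactor`) are not reconstructed here because their derivation is not described.

```r
library("pROC")

# Load the aSAH data set that ships with the pROC package
data(aSAH)

# Object name assumed from the model output later in the chapter
mydataActuarial <- aSAH

# Assumption: code the dichotomous outcome as 1 = "Poor", 0 = "Good"
mydataActuarial$disorder <- ifelse(mydataActuarial$outcome == "Poor", 1, 0)

# Inspect the structure of the data and the distribution of the outcome
str(mydataActuarial)
table(mydataActuarial$disorder)
```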

10.6 Fitting the Statistical Models

You can find an array of statistical models for classification and prediction available in the caret package (Kuhn, 2022) at the following link: https://topepo.github.io/caret/available-models.html (archived at https://perma.cc/E45U-YE6S)

10.6.1 Regression with One Predictor
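The output for the dichotomous outcome in this section reports a gaussian family, which is what `glm()` uses when no `family` argument is supplied; the result is a linear probability model rather than a logistic regression. The sketch below shows the difference using simulated stand-in data. The variable names mirror the output in this section, but the values are hypothetical, and the logistic model is offered only as a point of comparison.

```r
set.seed(52242)

# Simulated stand-in data; names mirror the output in this section, values are hypothetical
n <- 113
s100b <- runif(n, min = 0, max = 1)
disorder <- rbinom(n, size = 1, prob = plogis(-2 + 4 * s100b))
simulatedData <- data.frame(disorder, s100b)

# glm() without a family argument uses the gaussian family (a linear probability model)
linearModel <- glm(disorder ~ s100b, data = simulatedData)

# Specifying the binomial family fits a logistic regression instead
logisticModel <- glm(disorder ~ s100b, family = binomial, data = simulatedData)

# Predicted probabilities from the logistic model fall between 0 and 1
predictedProbability <- predict(logisticModel, type = "response")

summary(linearModel)
summary(logisticModel)
range(predictedProbability)
```

Coefficients from the gaussian model are on the probability scale (change in predicted probability per unit of the predictor), whereas coefficients from the binomial model are on the log-odds scale.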

Dichotomous Outcome:

Call:

glm(formula = disorder ~ s100b, data = mydataActuarial)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.1796 0.0561 3.202 0.00178 **

s100b 0.7417 0.1530 4.848 4.09e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 0.1942322)

Null deviance: 26.124 on 112 degrees of freedom

Residual deviance: 21.560 on 111 degrees of freedom

AIC: 139.49

Number of Fisher Scoring iterations: 2

Ordinal Outcome:

Call:

polr(formula = ordinalFactor ~ s100b, data = mydataActuarial)

Coefficients:

Value Std. Error t value

s100b 0.8485 0.6905 1.229

Intercepts:

Value Std. Error t value

0|1 -0.6055 0.2570 -2.3565

1|2 0.8514 0.2621 3.2480

Residual Deviance: 246.405

AIC: 252.405

Logistic Regression Model

lrm(formula = ordinal ~ s100b, data = mydataActuarial, x = TRUE,

y = TRUE)

Model Likelihood Discrimination Rank Discrim.

Ratio Test Indexes Indexes

Obs 113 LR chi2 1.59 R2 0.016 C 0.525

0 35 d.f. 1 R2(1,113)0.005 Dxy 0.050

1 39 Pr(> chi2) 0.2067 R2(1,100.4)0.006 gamma 0.051

2 39 Brier 0.213 tau-a 0.033

max |deriv| 1e-09

Coef S.E. Wald Z Pr(>|Z|)

y>=1 0.6055 0.2570 2.36 0.0184

y>=2 -0.8514 0.2621 -3.25 0.0012

s100b 0.8485 0.6905 1.23 0.2191

Ordered Logistic or Probit Regression

113 samples

1 predictor

3 classes: '0', '1', '2'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

method Accuracy Kappa

cauchit 0.2999569 0.002188742

cloglog 0.3092742 0.014863850

logistic 0.3070254 0.011181887

loglog 0.3050676 0.008781209

probit 0.3090156 0.014176878

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was method = cloglog.

Continuous Outcome:

Call:

lm(formula = s100b ~ ndka, data = mydataActuarial)

Residuals:

Min 1Q Median 3Q Max

-0.37261 -0.14000 -0.09024 0.10732 0.76057

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.1705939 0.0234559 7.273 5.28e-11 ***

ndka 0.0038861 0.0005259 7.390 2.94e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.2238 on 111 degrees of freedom

Multiple R-squared: 0.3298, Adjusted R-squared: 0.3237

F-statistic: 54.61 on 1 and 111 DF, p-value: 2.936e-11

10.6.2 Regression with Multiple Predictors

Dichotomous Outcome:

Call:

glm(formula = disorder ~ s100b + ndka + gender + age + wfns,

data = mydataActuarial)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.1888383 0.1527337 1.236 0.219099

s100b 0.1537913 0.2338580 0.658 0.512231

ndka 0.0006123 0.0012259 0.499 0.618515

genderFemale -0.1713339 0.0824500 -2.078 0.040168 *

age 0.0052547 0.0028551 1.840 0.068550 .

wfns.L 0.4182090 0.1078207 3.879 0.000184 ***

wfns.Q 0.0261311 0.1295000 0.202 0.840479

wfns.C 0.1669874 0.0834770 2.000 0.048064 *

wfns^4 -0.0932508 0.1550179 -0.602 0.548784

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for gaussian family taken to be 0.1549053)

Null deviance: 26.124 on 112 degrees of freedom

Residual deviance: 16.110 on 104 degrees of freedom

AIC: 120.56

Number of Fisher Scoring iterations: 2

Ordinal Outcome:

Call:

polr(formula = ordinalFactor ~ s100b + ndka + gender + age +

wfns, data = mydataActuarial)

Coefficients:

Value Std. Error t value

s100b 1.8489542 1.164022 1.58842

ndka 0.0003385 0.006903 0.04903

genderFemale -0.6508492 0.398431 -1.63353

age 0.0015384 0.013144 0.11705

wfns.L -0.6994631 0.530547 -1.31838

wfns.Q -1.3813985 0.613950 -2.25002

wfns.C -0.1869263 0.429721 -0.43499

wfns^4 0.6399225 0.731127 0.87526

Intercepts:

Value Std. Error t value

0|1 -0.9359 0.7340 -1.2751

1|2 0.6024 0.7295 0.8257

Residual Deviance: 238.5788

AIC: 258.5788

Logistic Regression Model

lrm(formula = ordinal ~ s100b + ndka + gender + age + wfns, data = mydataActuarial, x = TRUE, y = TRUE)

Model Likelihood Discrimination Rank Discrim.

Ratio Test Indexes Indexes

Obs 113 LR chi2 9.42 R2 0.090 C 0.637

0 35 d.f. 8 R2(8,113)0.012 Dxy 0.275

1 39 Pr(> chi2) 0.3080 R2(8,100.4)0.014 gamma 0.275

2 39 Brier 0.205 tau-a 0.184

max |deriv| 3e-10

Coef S.E. Wald Z Pr(>|Z|)

y>=1 0.4489 0.8968 0.50 0.6167

y>=2 -1.0894 0.9016 -1.21 0.2269

s100b 1.8487 1.1637 1.59 0.1122

ndka 0.0003 0.0069 0.05 0.9609

gender=Female -0.6508 0.3984 -1.63 0.1024

age 0.0015 0.0131 0.12 0.9068

wfns 0.3266 0.4408 0.74 0.4587

wfns=3 0.7044 1.1576 0.61 0.5428

wfns=4 -0.8591 1.2771 -0.67 0.5011

wfns=5 -2.3094 1.6651 -1.39 0.1655

Ordered Logistic or Probit Regression

113 samples

5 predictor

3 classes: '0', '1', '2'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

method Accuracy Kappa

cauchit 0.2051282 -0.194664032

cloglog 0.3177155 0.019400327

logistic 0.3105615 0.005977777

loglog 0.3080244 0.007859259

probit 0.3074942 0.005253176

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was method = cloglog.

Continuous Outcome:

Call:

lm(formula = s100b ~ ndka + gender + age + wfns, data = mydataActuarial)

Residuals:

Min 1Q Median 3Q Max

-0.45724 -0.08047 -0.00746 0.03834 0.60901

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.0870834 0.0631673 1.379 0.1709

ndka 0.0033368 0.0003945 8.458 1.72e-13 ***

genderFemale 0.0333867 0.0342521 0.975 0.3319

age 0.0017750 0.0011788 1.506 0.1351

wfns.L 0.3124368 0.0330875 9.443 1.09e-15 ***

wfns.Q 0.1156324 0.0528496 2.188 0.0309 *

wfns.C -0.0125489 0.0348138 -0.360 0.7192

wfns^4 -0.0779965 0.0642403 -1.214 0.2274

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.1642 on 105 degrees of freedom

Multiple R-squared: 0.6586, Adjusted R-squared: 0.6358

F-statistic: 28.93 on 7 and 105 DF, p-value: < 2.2e-16

10.6.3 Least Absolute Shrinkage and Selection Operator (LASSO)

The least absolute shrinkage and selection operator (LASSO) models were fit in the caret package (Kuhn, 2022).

Dichotomous Outcome:

glmnet

113 samples

5 predictor

2 classes: 'Good', 'Poor'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

alpha lambda Accuracy Kappa

0.10 0.0005284466 0.7355782 0.4287611

0.10 0.0052844664 0.7287405 0.4099028

0.10 0.0528446638 0.7218622 0.3770501

0.55 0.0005284466 0.7375306 0.4322519

0.55 0.0052844664 0.7286740 0.4091351

0.55 0.0528446638 0.7227115 0.3738905

1.00 0.0005284466 0.7344793 0.4259979

1.00 0.0052844664 0.7336155 0.4208213

1.00 0.0528446638 0.7221166 0.3723118

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were alpha = 0.55 and lambda = 0.0005284466.

Continuous Outcome:

The lasso

113 samples

4 predictor

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

fraction RMSE Rsquared MAE

0.1 0.2429079 0.2539792 0.1738286

0.5 0.2108372 0.3378498 0.1324253

0.9 0.2166231 0.3055272 0.1267198

RMSE was used to select the optimal model using the smallest value.

The final value used for the model was fraction = 0.5.

10.6.4 Ridge Regression

The ridge regression models were fit in the caret package (Kuhn, 2022).
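
To make the idea of a ridge penalty concrete, the following is a minimal base R sketch of ridge regression for a continuous outcome using its closed-form solution. It is not the caret code that produced the output in this section; the simulated data, the helper name `ridge_fit`, and the penalty values are assumptions chosen only for illustration.

```r
# Hypothetical helper: closed-form ridge estimates on standardized predictors
ridge_fit <- function(X, y, lambda) {
  X <- scale(X)       # put predictors on a common scale
  y <- y - mean(y)    # center the outcome so no intercept is needed
  p <- ncol(X)
  # beta = (X'X + lambda * I)^(-1) X'y
  drop(solve(crossprod(X) + lambda * diag(p), crossprod(X, y)))
}

# Simulated (not real) data
set.seed(1)
n <- 200
X <- matrix(rnorm(n * 3), ncol = 3,
            dimnames = list(NULL, c("x1", "x2", "x3")))
y <- 0.5 * X[, "x1"] + 0.2 * X[, "x2"] + rnorm(n)

beta_ols   <- ridge_fit(X, y, lambda = 0)   # no penalty: ordinary least squares
beta_ridge <- ridge_fit(X, y, lambda = 50)  # larger penalty: more shrinkage

round(rbind(ols = beta_ols, ridge = beta_ridge), 3)
```

With a penalty of zero, the estimates match ordinary least squares on the standardized predictors; as the penalty grows, the coefficients shrink toward zero. Note that the scale of the penalty in this sketch need not correspond to the `lambda` values reported by caret.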

Dichotomous Outcome:

Regularized Logistic Regression

113 samples

5 predictor

2 classes: 'Good', 'Poor'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

cost loss epsilon Accuracy Kappa

0.5 L1 0.001 0.7325252 0.4103154

0.5 L1 0.010 0.7295235 0.4051628

0.5 L1 0.100 0.7277705 0.3960880

0.5 L2_dual 0.001 0.7248420 0.3938821

0.5 L2_dual 0.010 0.7326213 0.4044132

0.5 L2_dual 0.100 0.7345136 0.4173344

0.5 L2_primal 0.001 0.7345607 0.4114534

0.5 L2_primal 0.010 0.7323873 0.4078716

0.5 L2_primal 0.100 0.6439091 0.1596625

1.0 L1 0.001 0.7355483 0.4187553

1.0 L1 0.010 0.7374291 0.4240174

1.0 L1 0.100 0.7358523 0.4188055

1.0 L2_dual 0.001 0.7259651 0.4116438

1.0 L2_dual 0.010 0.7290618 0.4028366

1.0 L2_dual 0.100 0.7236890 0.3806801

1.0 L2_primal 0.001 0.7436326 0.4349872

1.0 L2_primal 0.010 0.7334694 0.4118764

1.0 L2_primal 0.100 0.6446988 0.1637100

2.0 L1 0.001 0.7452451 0.4415898

2.0 L1 0.010 0.7435028 0.4363186

2.0 L1 0.100 0.7363747 0.4247123

2.0 L2_dual 0.001 0.7400012 0.4239179

2.0 L2_dual 0.010 0.7279734 0.3936125

2.0 L2_dual 0.100 0.7177376 0.3990560

2.0 L2_primal 0.001 0.7485614 0.4486697

2.0 L2_primal 0.010 0.7436215 0.4330285

2.0 L2_primal 0.100 0.6466046 0.1685345

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were cost = 2, loss = L2_primal and epsilon = 0.001.

Continuous Outcome:

Ridge Regression

113 samples

4 predictor

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

lambda RMSE Rsquared MAE

0e+00 0.2334776 0.3049999 0.1386310

1e-04 0.2330332 0.3068559 0.1380783

1e-01 0.2364670 0.2965017 0.1388237

RMSE was used to select the optimal model using the smallest value.

The final value used for the model was lambda = 1e-04.

10.6.5 Elastic Net

The elastic net models were fit in the caret package (Kuhn, 2022).

Continuous Outcome:

Elasticnet

113 samples

4 predictor

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

lambda fraction RMSE Rsquared MAE

0e+00 0.050 0.2731440 0.2621499 0.1863863

0e+00 0.525 0.2336049 0.3631764 0.1389417

0e+00 1.000 0.2385990 0.3368319 0.1364719

1e-04 0.050 0.2731444 0.2621501 0.1863868

1e-04 0.525 0.2336066 0.3631744 0.1389431

1e-04 1.000 0.2386016 0.3368277 0.1364723

1e-01 0.050 0.2734316 0.2622984 0.1867288

1e-01 0.525 0.2348413 0.3605836 0.1397894

1e-01 1.000 0.2412255 0.3321961 0.1369182

RMSE was used to select the optimal model using the smallest value.

The final values used for the model were fraction = 0.525 and lambda = 0.

10.6.6 Random Forest Machine Learning

The random forest models were fit in the caret package (Kuhn, 2022).

Dichotomous Outcome:

Random Forest

113 samples

5 predictor

2 classes: 'Good', 'Poor'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

mtry Accuracy Kappa

2 0.7369067 0.3953884

5 0.7347013 0.4032376

8 0.7284303 0.3899586

Accuracy was used to select the optimal model using the largest value.

The final value used for the model was mtry = 2.

Ordinal Outcome:

Random Forest

113 samples

5 predictor

3 classes: '0', '1', '2'

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

nsets ntreeperdiv ntreefinal Accuracy Kappa

50 50 200 0.3211389 -0.005466516

50 50 400 0.3022129 -0.031700266

50 50 600 0.3053906 -0.026771454

50 100 200 0.3079423 -0.024898546

50 100 400 0.3067742 -0.025865855

50 100 600 0.3104021 -0.020939333

50 150 200 0.3086011 -0.025645459

50 150 400 0.3040616 -0.029628515

50 150 600 0.3020118 -0.033196001

100 50 200 0.3290558 0.003086972

100 50 400 0.3042518 -0.027140626

100 50 600 0.3138210 -0.014979737

100 100 200 0.3070572 -0.029330252

100 100 400 0.3142540 -0.017303057

100 100 600 0.3048068 -0.034920067

100 150 200 0.3163612 -0.013654494

100 150 400 0.3058112 -0.031364175

100 150 600 0.3091778 -0.025260742

150 50 200 0.3080989 -0.023783202

150 50 400 0.3097436 -0.022047402

150 50 600 0.3088715 -0.027163376

150 100 200 0.3076460 -0.027799395

150 100 400 0.3090770 -0.023510219

150 100 600 0.3130607 -0.018028479

150 150 200 0.3058690 -0.028026053

150 150 400 0.2998348 -0.039127889

150 150 600 0.3062674 -0.031066605

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were nsets = 100, ntreeperdiv = 50 and ntreefinal = 200.

Continuous Outcome:

Random Forest

113 samples

4 predictor

No pre-processing

Resampling: Bootstrapped (25 reps)

Summary of sample sizes: 113, 113, 113, 113, 113, 113, ...

Resampling results across tuning parameters:

mtry RMSE Rsquared MAE

2 0.2217943 0.3596668 0.1278765

4 0.2219191 0.3547089 0.1278610

7 0.2222394 0.3476888 0.1318543

RMSE was used to select the optimal model using the smallest value.

The final value used for the model was mtry = 2.

10.6.7 k-Fold Cross-Validation

The k-fold cross-validation models were fit in the caret package (Kuhn, 2022).
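
As a rough illustration of what k-fold cross-validation does, here is a minimal base R sketch that performs 10-fold cross-validation of a linear regression by hand. It is not the caret code behind the output in this section; the simulated data and all variable names are assumptions for illustration.

```r
# Simulated (not real) data
set.seed(1)
n <- 120
dat <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
dat$y <- 0.4 * dat$x1 + 0.2 * dat$x2 + rnorm(n)

k <- 10
fold <- sample(rep(seq_len(k), length.out = n))  # random fold assignment

pred <- numeric(n)
for (i in seq_len(k)) {
  test_rows <- fold == i
  # Fit the model on the other k - 1 folds
  fit <- lm(y ~ x1 + x2, data = dat[!test_rows, ])
  # Predict the held-out fold
  pred[test_rows] <- predict(fit, newdata = dat[test_rows, ])
}

# Error on held-out cases versus error on the cases used for fitting
rmse_cv  <- sqrt(mean((dat$y - pred)^2))
rmse_fit <- sqrt(mean(residuals(lm(y ~ x1 + x2, data = dat))^2))
c(cross_validated = rmse_cv, in_sample = rmse_fit)
```

The cross-validated error is at least as large as the in-sample error because each prediction comes from a model that never saw that case. This sketch pools the held-out predictions before computing RMSE, whereas caret summarizes performance within each fold and then averages, so the two approaches can give slightly different values.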

Dichotomous Outcome:

Generalized Linear Model

113 samples

5 predictor

2 classes: 'Good', 'Poor'

No pre-processing

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 102, 102, 102, 102, 102, 102, ...

Resampling results:

Accuracy Kappa

0.7780303 0.5046079

Continuous Outcome:

Linear Regression

113 samples

4 predictor

No pre-processing

Resampling: Cross-Validated (10 fold)

Summary of sample sizes: 102, 103, 101, 102, 101, 102, ...

Resampling results:

RMSE Rsquared MAE

0.2074522 0.3819308 0.1293988

Tuning parameter 'intercept' was held constant at a value of TRUE

10.6.8 Leave-One-Out (LOO) Cross-Validation

The leave-one-out (LOO) cross-validation models were fit in the caret package (Kuhn, 2022).
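
Leave-one-out cross-validation is k-fold cross-validation with k equal to the sample size. The base R sketch below, which uses simulated data and is not the caret code behind the output in this section, refits a linear regression once per case and compares the result with a well-known shortcut for linear regression based on leverage values. All names are assumptions for illustration.

```r
# Simulated (not real) data
set.seed(1)
n <- 60
dat <- data.frame(x = rnorm(n))
dat$y <- 0.5 * dat$x + rnorm(n)

# Explicit LOO: refit the model n times, leaving out one case each time
pred_loo <- numeric(n)
for (i in seq_len(n)) {
  fit_i <- lm(y ~ x, data = dat[-i, ])
  pred_loo[i] <- predict(fit_i, newdata = dat[i, , drop = FALSE])
}
rmse_loop <- sqrt(mean((dat$y - pred_loo)^2))

# Shortcut for linear regression: LOO residual = residual / (1 - leverage)
fit <- lm(y ~ x, data = dat)
rmse_shortcut <- sqrt(mean((residuals(fit) / (1 - hatvalues(fit)))^2))

c(loop = rmse_loop, shortcut = rmse_shortcut)
```

The two values agree (up to rounding error), which shows that for ordinary linear regression the n refits are not strictly necessary. The shortcut does not carry over to models such as logistic regression, where explicit refitting is needed.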

Dichotomous Outcome:

Generalized Linear Model

113 samples

5 predictor

2 classes: 'Good', 'Poor'

No pre-processing

Resampling: Leave-One-Out Cross-Validation

Summary of sample sizes: 112, 112, 112, 112, 112, 112, ...

Resampling results:

Accuracy Kappa

0.7699115 0.4747944

Continuous Outcome:

Linear Regression

113 samples

4 predictor

No pre-processing

Resampling: Leave-One-Out Cross-Validation

Summary of sample sizes: 112, 112, 112, 112, 112, 112, ...

Resampling results:

RMSE Rsquared MAE

0.2313417 0.2764679 0.1274925

Tuning parameter 'intercept' was held constant at a value of TRUE

10.7 Examples of Using Actuarial Methods

There are many examples of actuarial methods used in research and practice. As just a few examples, actuarial methods have been used to predict violence risk (Rice et al., 2013), to predict treatment outcome (for the treatment of depression; DeRubeis et al., 2014; Z. D. Cohen & DeRubeis, 2018), and to screen for attention-deficit/hyperactivity disorder (Lindhiem et al., 2015). Machine learning is increasingly used as another actuarial approach (Dwyer et al., 2018). As an empirical example, we have examined actuarial methods to predict arrests, illegal drug use, and drunk driving (Petersen et al., 2015).

10.8 Why Clinical Judgment is More Widely Used Than Statistical Formulas

Although actuarial methods are generally more accurate than clinical judgment, clinical judgment is much more widely used. There are several reasons why actuarial methods have not caught on; one reason is professional tradition. Experts in any field do not like to think that a computer could outperform them. Some practitioners argue that judgment/prediction is an “art form” and that using a statistical formula treats people like numbers. However, using an approach (i.e., clinical judgment) that systematically leads to less accurate decisions and predictions is an ethical problem.

Some clinicians do not think that group averages (e.g., in terms of which treatment is most effective) apply to an individual client. This invokes the distinction between nomothetic (group-level) inferences and idiographic (individual-level) inferences. However, the scientific evidence and probability theory strongly favor the nomothetic approach to clinical prediction: it is better to generalize from group-level evidence than to throw out all the evidence and take the approach of “anything goes.” Clinicians frequently commit the broken leg fallacy, i.e., believing that their client is an exception to the algorithmic prediction. In most cases, deviating from the statistical formula will result in less accurate predictions. People tend to over-estimate the probability of low base rate conditions and events, so genuine exceptions are rarer than they appear.
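
A short worked example can show why low base rate events are easy to over-estimate. The base R sketch below applies Bayes' theorem to compute the probability that a client actually has a condition given a positive indicator. The base rate, sensitivity, and specificity are hypothetical values chosen for illustration, not estimates from any study.

```r
# Hypothetical values (assumptions for illustration only)
base_rate   <- 0.02  # 2% of clients have the condition
sensitivity <- 0.80  # P(positive indicator | condition)
specificity <- 0.90  # P(negative indicator | no condition)

# Bayes' theorem: P(condition | positive indicator)
true_pos  <- sensitivity * base_rate
false_pos <- (1 - specificity) * (1 - base_rate)
ppv <- true_pos / (true_pos + false_pos)

round(ppv, 2)  # 0.14
```

Even with a reasonably accurate indicator, only about 14% of clients who show the indicator would have the condition under these assumptions, because the condition is rare to begin with.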

Another reason why actuarial methods have not caught on is the belief that receiving a treatment is the only thing that matters. But it is an empirical question which treatment is most effective for whom. What if we could do better? For example, we could potentially use a formula to identify the most effective treatment for a client. Some treatments are no better than placebo; other treatments are actually harmful (Lilienfeld, 2007; Williams et al., 2021).

Another reason why clinical methods are more widely used than actuarial methods is clinicians’ over-confidence in their predictions: clinicians think they are more accurate than they actually are. We see this when examining their calibration; their predictions tend to be mis-calibrated. For example, events they predict with 80% confidence occur less than 80% of the time. Clinicians will sometimes be correct by chance, and they tend to mis-attribute that to their assessment; clinicians tend to remember the successes and forget the failures. Note, however, that it is not just clinicians who are over-confident; humans in general tend to be over-confident in their predictions.
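
Calibration can be checked by comparing stated confidence with how often the predictions actually come true. The base R sketch below uses a small set of made-up judgments (not real data) to show the computation; the data frame and column names are assumptions for illustration.

```r
# Hypothetical judgments: stated confidence and whether the prediction came true
judgments <- data.frame(
  confidence = c(rep(0.6, 5), rep(0.8, 10)),
  correct    = c(1, 0, 1, 0, 1,
                 1, 1, 0, 1, 0, 1, 1, 0, 1, 0)
)

# Observed accuracy at each level of stated confidence
calibration <- aggregate(correct ~ confidence, data = judgments, FUN = mean)
names(calibration)[2] <- "observed_accuracy"

# Positive values indicate over-confidence
calibration$overconfidence <-
  calibration$confidence - calibration$observed_accuracy
calibration
```

In these made-up data, the predictions made with 60% confidence are well calibrated (60% came true), whereas the predictions made with 80% confidence came true only 60% of the time, an over-confidence of 20 percentage points.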

Another argument against using actuarial methods is that “no methods exist”. In some cases, that is true: actuarial methods do not yet exist for some prediction problems. However, one can always build a statistical model of the clinicians’ judgments, even if one does not have access to the clients’ outcome information. A model of clinicians’ responses tends to be more accurate than the clinicians’ judgments themselves because the model gives the same output for the same input data; i.e., it is perfectly reliable.
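
The following base R simulation sketches how a model of a clinician's judgments can outperform the clinician. It assumes, purely for illustration, that the clinician weights two valid cues sensibly but applies those weights inconsistently from case to case; the data, cue names, and weights are all hypothetical.

```r
# Simulated (not real) data
set.seed(1)
n <- 1000
cue1 <- rnorm(n)
cue2 <- rnorm(n)
signal <- 0.5 * cue1 + 0.3 * cue2

outcome   <- signal + rnorm(n)  # what actually happens to each client
clinician <- signal + rnorm(n)  # judgments: valid cues, applied inconsistently

# Model of the clinician: predicts the judgments, not the outcome
judge_model <- lm(clinician ~ cue1 + cue2)
model_pred  <- fitted(judge_model)

# Accuracy (correlation with the outcome) of clinician versus model
c(clinician          = cor(clinician, outcome),
  model_of_clinician = cor(model_pred, outcome))

# The model is perfectly reliable: same input, same output
new_client <- data.frame(cue1 = 1, cue2 = -0.5)
identical(predict(judge_model, new_client), predict(judge_model, new_client))
```

The model never sees the outcome, yet its predictions correlate more strongly with the outcome than the clinician's own judgments do, because it retains the clinician's cue weights while removing the case-to-case inconsistency.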

Another argument from some clinicians is that “My job is to understand, not to predict”. But what kind of understanding does not involve predictions? Accurate predictions help in understanding. Knowing how people would perform in different conditions is the same thing as good understanding.

Another potential reason clinicians may not use actuarial methods is time. This can be a legitimate challenge to using actuarial models in some contexts. Actuarial methods can take time to apply: they require assessing the relevant criteria, entering the assessment scores into an actuarial formula, and interpreting the output score. In some clinical situations, such as assessing whether someone is imminently suicidal or homicidal and deciding how to proceed, clinicians must make immediate in-session decisions (Jacinto et al., 2018). Thus, it can be challenging or impractical in some situations to conduct all of the relevant assessments needed to apply actuarial approaches. Moreover, in some workplace settings, such as health care settings, clinicians may be pushed beyond their limits in terms of productivity expectations. In these situations, one possible approach is to exercise one’s best possible judgment in the moment based on the available evidence (i.e., intuitive decision-making processes; Jacinto et al., 2018) and to check it against an actuarial model after the fact. This is like comparing your decisions in a chess match, after the game ends, to how a computer would have moved in each situation. It is valuable to receive feedback on the accuracy of one’s judgments and, when time permits, to evaluate one’s intuitive decision-making against analytical decision-making. That said, there are structured approaches to assessing suicide risk that are quick to administer (e.g., Posner et al., 2011).

10.9 Conclusion

In general, it is better to develop and use structured, actuarial approaches than informal approaches that rely on human or clinical judgment. Actuarial approaches to prediction tend to be as accurate as or more accurate than clinical judgment. Nevertheless, clinical judgment tends to be much more widely used than actuarial approaches, which is a major ethical problem.