9 Prediction

“It is very difficult to predict—especially the future.”

– Niels Bohr

9.1 Overview of Prediction

In psychology, we are often interested in predicting behavior. Behavior is complex. Behavior is multiply determined—it is probabilistically influenced by many processes, including processes internal to the person in addition to external processes. In addition, the same behavior can occur for different reasons—that is, behavior can be considered overdetermined. Moreover, people’s behavior occurs in the context of a dynamic system with nonlinear, probabilistic, and cascading influences that change across time. The ever-changing system makes behavior challenging to predict. And, similar to chaos theory (or the butterfly effect), one small change in the system can lead to large differences later on. Another example of a chaotic system is the atmosphere and resulting weather (archived at https://perma.cc/248N-9CW5).

When something is overdetermined, it has multiple causes, any of which could be sufficient to result in the outcome. In other words, the same behavior can occur for different reasons, consistent with the principle of developmental psychopathology known as equifinality—i.e., multiple causes can result in the same outcome. For instance, aggression could be caused by either excessively low anxiety for some children or excessively high anxiety for others; or for other children, it could be caused by deficient social information processing or another cognitive process. Multifinality refers to the idea that the same cause can result in different outcomes. For instance, substance use might lead to aggressive behavior for some people, whereas it might lead to depressive behavior for others. In contrast to overdetermination, underdetermination refers to the idea that the identified causes are insufficient for explaining the resulting outcome. All of these challenges make behavior difficult to predict.

Predictions can come in different types. Some predictions involve categorical data, whereas other predictions involve continuous data. When dealing with categorical data, we can evaluate predictions using a 2x2 table known as a confusion matrix (see Figure 9.4), or with logistic regression models. When dealing with continuous data, we can evaluate predictions using multiple regression or similar variants such as structural equation modeling and mixed models.

Let’s consider a prediction example, assuming the following probabilities:

- The probability of contracting HIV is .3%

- The probability of a positive test for HIV is 1%

- The probability of a positive test if you have HIV is 95%

What is the probability of HIV if you have a positive test?

As we will see, the probability is: \(\frac{95\% \times .3\%}{1\%} = 28.5\%\). So based on the above probabilities, if you have a positive test, the probability that you have HIV is 28.5%. Most people tend to vastly over-estimate the likelihood that the person has HIV in this example. Why? Because they do not pay enough attention to the base rate (in this example, the base rate of HIV is .3%).

9.1.1 Issues Around Probability

9.1.1.1 Types of Probabilities

It is important to distinguish between different types of probabilities: marginal probabilities, joint probabilities, and conditional probabilities.

9.1.1.1.1 Base Rate (Marginal Probability)

A base rate is the probability of an event. Base rates are marginal probabilities. A marginal probability is the probability of an event irrespective of the outcome of another variable. For instance, we can consider the following marginal probabilities:

\(P(C_i)\) is the probability (i.e., base rate) of a classification, \(C\), independent of other things. A base rate is often used as the “prior probability” in a Bayesian model. In our example above, \(P(C_i)\) is the base rate (i.e., prevalence) of HIV in the population: \(P(\text{HIV}) = .3\%\). \(P(R_i)\) is the probability (base rate) of a response, \(R\), independent of other things. In the example above, \(P(R_i)\) is the base rate of a positive test for HIV: \(P(\text{positive test}) = 1\%\). The base rate of a positive test is known as the positivity rate or selection ratio.

9.1.1.1.2 Joint Probability

A joint probability is the probability of two (or more) events occurring simultaneously. For instance, the probability of events \(A\) and \(B\) both occurring together is \(P(A, B)\). If the two events are independent, a joint probability can be calculated by multiplying the marginal probability of each event, as in Equation 9.1:

\[ P(A, B) = P(A) \cdot P(B) \tag{9.1}\]

More generally, whether or not the events are independent, a joint probability can be calculated using the conditional probability and marginal probability (rearranging the terms of the formula for conditional probability in Equation 9.3), as in Equation 9.2:

\[ P(A, B) = P(A | B) \cdot P(B) \tag{9.2}\]

9.1.1.1.3 Conditional Probability

A conditional probability is the probability of one event occurring given the occurrence of another event. Conditional probabilities are written as: \(P(A | B)\). This is read as the probability that event \(A\) occurs given that event \(B\) occurred. For instance, we can consider the following conditional probabilities:

\(P(C | R)\) is the probability of a classification, \(C\), given a response, \(R\). In other words, \(P(C | R)\) is the probability of having HIV given a positive test: \(P(\text{HIV} | \text{positive test})\). \(P(R | C)\) is the probability of a response, \(R\), given a classification, \(C\). In the example above, \(P(R | C)\) is the probability of having a positive test given that a person has HIV: \(P(\text{positive test} | \text{HIV}) = 95\%\).

A conditional probability can be calculated using the joint probability and marginal probability (base rate), as in Equation 9.3:

\[ P(A | B) = \frac{P(A, B)}{P(B)} \tag{9.3}\]
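As a minimal sketch, the following applies Equations 9.2 and 9.3 to the HIV example. It is written in plain Python, which is an assumption of this sketch, and it does not use the petersenlab package. The variable names are hypothetical. The last calculation shows what multiplying the two base rates (Equation 9.1) would give; because test results and HIV status are not independent, that product differs greatly from the actual joint probability.

```python
# Probabilities from the HIV example in the text
p_hiv = 0.003            # P(HIV): base rate of HIV
p_pos = 0.01             # P(positive test): base rate of a positive test
p_pos_given_hiv = 0.95   # P(positive test | HIV)

# Equation 9.2: joint probability from a conditional and a marginal probability
p_pos_and_hiv = p_pos_given_hiv * p_hiv

# Equation 9.3: conditional probability from the joint and a marginal probability
p_hiv_given_pos = p_pos_and_hiv / p_pos

# Equation 9.1 applies only if the events are independent; shown for contrast
p_joint_if_independent = p_pos * p_hiv

print(round(p_pos_and_hiv, 5))           # 0.00285
print(round(p_hiv_given_pos, 3))         # 0.285
print(round(p_joint_if_independent, 5))  # 3e-05
```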

9.1.1.2 Confusion of the Inverse

A conditional probability is not the same thing as its reverse (or inverse) conditional probability. Unless the base rates of the two events (\(C\) and \(R\)) are the same, \(P(C | R) \neq P(R | C)\). However, people frequently make the mistake of thinking that two inverse conditional probabilities are the same. This mistake is known as the “confusion of the inverse”, the “inverse fallacy”, or the “conditional probability fallacy”. The confusion of inverse probabilities is the logical error of representative thinking that leads people to assume that the probability of \(C\) given \(R\) is the same as the probability of \(R\) given \(C\), even though this is not true. As a few examples to demonstrate the logical fallacy, if 93% of breast cancers occur in high-risk women, this does not mean that 93% of high-risk women will eventually get breast cancer. As another example, if 77% of car accidents take place within 15 miles of a driver’s home, this does not mean that you will get in an accident 77% of the times you drive within 15 miles of your home.

Which car is the most frequently stolen? It is often the Honda Accord or Honda Civic, probably because they are among the most popular/commonly available cars. The probability that the car is a Honda Accord given that a car was stolen (\(P(\text{Honda Accord } | \text{ Stolen})\)) is what the media reports and what the police care about. However, that is not what buyers and car insurance companies should care about. Instead, they care about the probability that the car will be stolen given that it is a Honda Accord (\(P(\text{Stolen } | \text{ Honda Accord})\)).

9.1.1.3 Bayes’ Theorem

An alternative way of calculating a conditional probability is using the inverse conditional probability (instead of the joint probability). This is known as Bayes’ theorem. Bayes’ theorem can help us calculate a conditional probability of some classification, \(C\), given some response, \(R\), if we know the inverse conditional probability and the base rate (marginal probability) of each. Bayes’ theorem is in Equation 9.4:

\[ \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R_i)} \end{aligned} \tag{9.4}\]

Or, equivalently (rearranging the terms):

\[ \frac{P(C | R)}{P(R | C)} = \frac{P(C_i)}{P(R_i)} \tag{9.5}\]

Or, equivalently (rearranging the terms):

\[ \frac{P(C | R)}{P(C_i)} = \frac{P(R | C)}{P(R_i)} \tag{9.6}\]

More generally, Bayes’ theorem has been described as:

\[ \begin{aligned} P(H | E) &= \frac{P(E | H) \cdot P(H)}{P(E)} \\ \text{posterior probability} &= \frac{\text{likelihood} \times \text{prior probability}}{\text{model evidence}} \\ \end{aligned} \tag{9.7}\]

where \(H\) is the hypothesis, and \(E\) is the evidence—the new information that was not used in computing the prior probability.

In Bayesian terms, the posterior probability is the conditional probability of one event occurring given another event—it is the updated probability after the evidence is considered. In this case, the posterior probability is the probability of the classification occurring (\(C\)) given the response (\(R\)). The likelihood is the inverse conditional probability—the probability of the response (\(R\)) occurring given the classification (\(C\)). The prior probability is the marginal probability of the event (i.e., the classification) occurring, before we take into account any new information. The model evidence is the marginal probability of the other event occurring—i.e., the marginal probability of seeing the evidence.

In the HIV example above, we can calculate the conditional probability of HIV given a positive test using three terms: the conditional probability of a positive test given HIV (i.e., the sensitivity of the test), the base rate of HIV, and the base rate of a positive test for HIV. The conditional probability of HIV given a positive test is in Equation 9.8:

\[ \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R_i)} \\ P(\text{HIV} | \text{positive test}) &= \frac{P(\text{positive test} | \text{HIV}) \cdot P(\text{HIV})}{P(\text{positive test})} \\ &= \frac{\text{sensitivity of test} \times \text{base rate of HIV}}{\text{base rate of positive test}} \\ &= \frac{95\% \times .3\%}{1\%} = \frac{.95 \times .003}{.01}\\ &= 28.5\% \end{aligned} \tag{9.8}\]

The petersenlab package (Petersen, 2025) contains the pAgivenB() function that estimates the probability of one event, \(A\), given another event, \(B\).

Thus, assuming the probabilities in the example above, the conditional probability of having HIV if a person has a positive test is 28.5%. That is, even given a positive test, it is more likely than not that the person does not have HIV.
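The calculation in Equation 9.8 can be sketched in a few lines of Python. The function name `p_a_given_b` and its arguments are hypothetical names chosen for this illustration; they are not the interface of the petersenlab function mentioned above.

```python
def p_a_given_b(p_b_given_a: float, p_a: float, p_b: float) -> float:
    """Bayes' theorem (Equation 9.4): P(A | B) = P(B | A) * P(A) / P(B)."""
    if not 0 < p_b <= 1:
        raise ValueError("p_b must be greater than 0 and no larger than 1")
    return p_b_given_a * p_a / p_b


# HIV example: sensitivity = .95, base rate of HIV = .003,
# base rate of a positive test = .01
posterior = p_a_given_b(p_b_given_a=0.95, p_a=0.003, p_b=0.01)
print(round(posterior, 3))  # 0.285
```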

Bayes’ theorem can be depicted visually (Ballesteros-Pérez et al., 2018). If we have 100,000 people in our population, we would be able to fill out a 2-by-2 confusion matrix, as depicted in Figure 9.1.

We know that .3% of the population contracts HIV, so 300 people in the population of 100,000 would contract HIV. Therefore, we put 300 in the marginal sum of those with HIV (\(.003 \times 100,000 = 300\)), i.e., the base rate of HIV. That means 99,700 people do not contract HIV (\(100,000 - 300 = 99,700\)). We know that 1% of the population tests positive for HIV, so we put 1,000 in the marginal sum of those who test positive (\(.01 \times 100,000 = 1,000\)), i.e., the marginal probability of a positive test (the selection ratio). That means 99,000 people test negative for HIV (\(100,000 - 1,000 = 99,000\)). We also know that 95% of those who have HIV test positive for HIV. Three hundred people have HIV, so 95% of them (i.e., 285 people; \(.95 \times 300 = 285\)) tested positive for HIV (true positives). Because we know that 300 people have HIV and that 285 of those with HIV tested positive, that means that 15 people with HIV tested negative (\(300 - 285 = 15\); false negatives). We know that 1,000 people tested positive for HIV, and 285 with HIV tested positive, so that means that 715 people without HIV tested positive (\(1,000 - 285 = 715\); false positives). We know that 99,000 people tested negative for HIV, and 15 with HIV tested negative, so that means that 98,985 people without HIV tested negative (\(99,000 - 15 = 98,985\); true negatives). So, to answer the question of what is the probability of having HIV if you have a positive test, we divide the number of people with HIV who had a positive test (285) by the total number of people who had a positive test (1,000), which leads to a probability of 28.5%.
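The same bookkeeping can be sketched in Python, filling in the four cells of the 2-by-2 table from the three known probabilities. The variable names are hypothetical, and counts are rounded to whole people.

```python
population = 100_000
p_hiv = 0.003        # base rate of HIV
p_pos = 0.01         # base rate of a positive test (selection ratio)
sensitivity = 0.95   # P(positive test | HIV)

# Marginal sums
with_hiv = round(p_hiv * population)            # 300
without_hiv = population - with_hiv             # 99,700
tested_positive = round(p_pos * population)     # 1,000
tested_negative = population - tested_positive  # 99,000

# Cells of the confusion matrix
true_positives = round(sensitivity * with_hiv)       # 285
false_negatives = with_hiv - true_positives          # 15
false_positives = tested_positive - true_positives   # 715
true_negatives = tested_negative - false_negatives   # 98,985

# P(HIV | positive test): true positives among all positive tests
p_hiv_given_pos = true_positives / tested_positive
print(p_hiv_given_pos)  # 0.285
```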

This can be depicted visually in Figures 9.2 and 9.3.

Now let’s see what happens if the person tests positive a second time. We would revise our “prior probability” for HIV from the general prevalence in the population (0.3%) to be the “posterior probability” of HIV given a first positive test (28.5%). This is known as Bayesian updating. We would also update the “evidence” to be the marginal probability of getting a second positive test.

If we do not know a marginal probability (i.e., base rate) of an event (e.g., getting a second positive test), we can calculate a marginal probability with the law of total probability using conditional probabilities and the marginal probability of another event (e.g., having HIV). According to the law of total probability, the probability of getting a positive test is the probability that a person with HIV gets a positive test (i.e., sensitivity) times the base rate of HIV plus the probability that a person without HIV gets a positive test (i.e., false positive rate) times the base rate of not having HIV, as in Equation 9.9:

\[ \begin{aligned} P(\text{not } C_i) &= 1 - P(C_i) \\ P(R_i) &= P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot P(\text{not } C_i) \\ 1\% &= 95\% \times .3\% + P(R | \text{not } C) \times 99.7\% \\ \end{aligned} \tag{9.9}\]

In this case, we know the marginal probability (\(P(R_i)\)), and we can use that to solve for the unknown conditional probability that reflects the false positive rate (\(P(R | \text{not } C)\)), as in Equation 9.10:

\[ \scriptsize \begin{aligned} P(R_i) &= P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot P(\text{not } C_i) && \\ P(R_i) - [P(R | \text{not } C) \cdot P(\text{not } C_i)] &= P(R | C) \cdot P(C_i) && \text{Move } P(R | \text{not } C) \text{ to the left side} \\ - [P(R | \text{not } C) \cdot P(\text{not } C_i)] &= P(R | C) \cdot P(C_i) - P(R_i) && \text{Move } P(R_i) \text{ to the right side} \\ P(R | \text{not } C) \cdot P(\text{not } C_i) &= P(R_i) - [P(R | C) \cdot P(C_i)] && \text{Multiply by } -1 \\ P(R | \text{not } C) &= \frac{P(R_i) - [P(R | C) \cdot P(C_i)]}{P(\text{not } C_i)} && \text{Divide by } P(\text{not } C_i) \\ &= \frac{1\% - [95\% \times .3\%]}{99.7\%} = \frac{.01 - [.95 \times .003]}{.997}\\ &= .7171515\% \\ \end{aligned} \tag{9.10}\]

We can then estimate the marginal probability of the event, substituting in \(P(R | \text{not } C)\), using the law of total probability. The petersenlab package (Petersen, 2025) contains the pA() function that estimates the marginal probability of one event, \(A\).

The petersenlab package (Petersen, 2025) contains the pBgivenNotA() function that estimates the probability of one event, \(B\), given that another event, \(A\), did not occur.
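As a hedged illustration of Equations 9.9 and 9.10, the Python sketch below solves the law of total probability for the false positive rate and then checks that plugging it back in recovers the base rate of a positive test. The function names are hypothetical and are not the petersenlab functions named above.

```python
def p_b_given_not_a(p_b: float, p_b_given_a: float, p_a: float) -> float:
    """Solve the law of total probability for P(B | not A) (Equation 9.10)."""
    return (p_b - p_b_given_a * p_a) / (1 - p_a)


def p_b_total(p_b_given_a: float, p_a: float, p_b_given_not_a_value: float) -> float:
    """Law of total probability (Equation 9.9): marginal probability of B."""
    return p_b_given_a * p_a + p_b_given_not_a_value * (1 - p_a)


# HIV example: P(positive test) = .01, sensitivity = .95, P(HIV) = .003
false_positive_rate = p_b_given_not_a(p_b=0.01, p_b_given_a=0.95, p_a=0.003)
print(round(false_positive_rate, 9))  # 0.007171515

# Plugging the false positive rate back in recovers P(positive test)
recovered = p_b_total(0.95, 0.003, false_positive_rate)
print(round(recovered, 6))  # 0.01
```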

With this conditional probability (\(P(R | \text{not } C)\)), the updated marginal probability of having HIV (\(P(C_i)\)), and the updated marginal probability of not having HIV (\(P(\text{not } C_i)\)), we can now calculate an updated estimate of the marginal probability of getting a second positive test. The probability of getting a second positive test is the probability that a person with HIV gets a second positive test (i.e., sensitivity) times the updated probability of HIV plus the probability that a person without HIV gets a second positive test (i.e., false positive rate) times the updated probability of not having HIV, as in Equation 9.11:

\[ \begin{aligned} P(R_{i}) &= P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot P(\text{not } C_i) \\ &= 95\% \times 28.5\% + .7171515\% \times 71.5\% = .95 \times .285 + .007171515 \times .715 \\ &= 27.58776\% \end{aligned} \tag{9.11}\]

The petersenlab package (Petersen, 2025) contains the pB() function that estimates the marginal probability of one event, \(B\).

We then substitute the updated marginal probability of HIV (\(P(C_i)\)) and the updated marginal probability of getting a second positive test (\(P(R_i)\)) into Bayes’ theorem to get the probability that the person has HIV if they have a second positive test (assuming the errors of each test are independent, i.e., uncorrelated), as in Equation 9.12:

\[ \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R_i)} \\ P(\text{HIV} | \text{a second positive test}) &= \frac{P(\text{a second positive test} | \text{HIV}) \cdot P(\text{HIV})}{P(\text{a second positive test})} \\ &= \frac{\text{sensitivity of test} \times \text{updated base rate of HIV}}{\text{updated base rate of positive test}} \\ &= \frac{95\% \times 28.5\%}{27.58776\%} \\ &= 98.14\% \end{aligned} \tag{9.12}\]

The petersenlab package (Petersen, 2025) contains the pAgivenB() function that estimates the probability of one event, \(A\), given another event, \(B\).

Thus, a second positive test greatly increases the posterior probability that the person has HIV from 28.5% to over 98%.
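The two rounds of updating can be traced step by step in a short Python sketch. It follows Equations 9.8 and 9.10 to 9.12 and, like the text, assumes the errors of the two tests are independent. The variable names are hypothetical.

```python
sensitivity = 0.95        # P(positive test | HIV)
base_rate = 0.003         # P(HIV) before any testing
p_positive_first = 0.01   # P(positive test) in the general population

# Equation 9.10: false positive rate implied by the three values above
false_positive_rate = (p_positive_first - sensitivity * base_rate) / (1 - base_rate)

# Equation 9.8: posterior probability after the first positive test
posterior_first = sensitivity * base_rate / p_positive_first

# Bayesian updating: the first posterior becomes the prior for the second test
prior_second = posterior_first

# Equation 9.11: updated marginal probability of a (second) positive test
p_positive_second = (
    sensitivity * prior_second + false_positive_rate * (1 - prior_second)
)

# Equation 9.12: posterior probability after the second positive test
posterior_second = sensitivity * prior_second / p_positive_second

print(round(posterior_first, 4))    # 0.285
print(round(p_positive_second, 4))  # 0.2759
print(round(posterior_second, 4))   # 0.9814
```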

As seen in the rearranged formula in Equation 9.5, the ratio of the conditional probabilities is equal to the ratio of the base rates. Thus, it is important to consider base rates. People have a strong tendency to ignore (or give insufficient weight to) base rates when making predictions. The failure to consider the base rate when given specific information about a case is a cognitive bias known as the base-rate fallacy or base-rate neglect. For example, when given specific information about a case, people tend to judge a rare event to be more likely than it actually is.

As seen in the rearranged formula in Equation 9.6, the inverse conditional probabilities (\(P(C | R)\) and \(P(R | C)\)) are not equal unless the base rates of \(C\) and \(R\) are the same. If the base rates are not equal, we are making at least some prediction errors. If \(P(C_i) > P(R_i)\), our predictions must include some false negatives. If \(P(R_i) > P(C_i)\), our predictions must include some false positives.

Using the law of total probability, we can substitute the calculation of the marginal probability (\(P(R_i)\)) into Bayes’ theorem to get an alternative formulation of Bayes’ theorem, as in Equation 9.13:

\[ \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R_i)} \\ &= \frac{P(R | C) \cdot P(C_i)}{P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot P(\text{not } C_i)} \\ &= \frac{P(R | C) \cdot P(C_i)}{P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot [1 - P(C_i)]} \end{aligned} \tag{9.13}\]

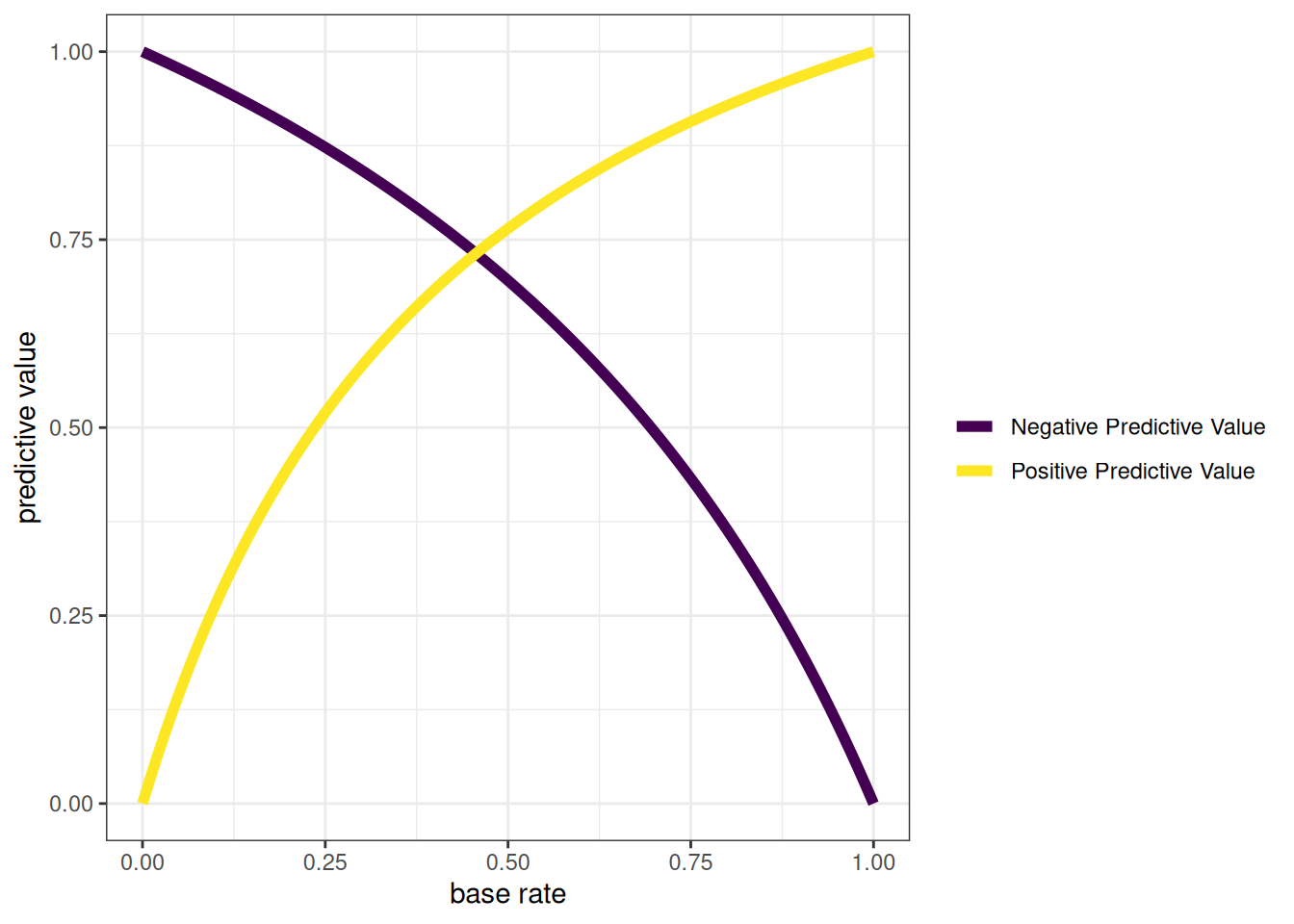

Instead of using the marginal probability (base rate) of \(R\), as in the original formulation of Bayes’ theorem, this formulation uses the conditional probability, \(P(R|\text{not } C)\). Thus, it uses three terms: two conditional probabilities, \(P(R|C)\) and \(P(R|\text{not } C)\), and one marginal probability, \(P(C_i)\). This alternative formulation of Bayes’ theorem can be used to calculate positive predictive value, based on sensitivity, specificity, and the base rate, as presented in Equation 9.51.

Let us see how the alternative formulation of Bayes’ theorem applies to the HIV example above. We can calculate the probability of HIV given a positive test using three terms: the conditional probability that a person with HIV gets a positive test (i.e., sensitivity), the conditional probability that a person without HIV gets a positive test (i.e., false positive rate), and the base rate of HIV. Using the \(P(R|\text{not } C)\) calculated in Equation 9.10, the conditional probability of HIV given a single positive test is in Equation 9.14:

\[ \small \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot [1 - P(C_i)]} \\ &= \frac{\text{sensitivity of test} \times \text{base rate of HIV}}{\text{sensitivity of test} \times \text{base rate of HIV} + \text{false positive rate of test} \times (1 - \text{base rate of HIV})} \\ &= \frac{95\% \times .3\%}{95\% \times .3\% + .7171515\% \times (1 - .3\%)} = \frac{.95 \times .003}{.95 \times .003 + .007171515 \times (1 - .003)}\\ &= 28.5\% \end{aligned} \tag{9.14}\]
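A minimal Python sketch of the alternative formulation follows. The function name `posterior_from_rates` is hypothetical, and the false positive rate is the rounded value from Equation 9.10, so the result matches the original formulation only up to rounding.

```python
def posterior_from_rates(
    sensitivity: float, false_positive_rate: float, base_rate: float
) -> float:
    """Alternative formulation of Bayes' theorem (Equation 9.13)."""
    true_positive_mass = sensitivity * base_rate
    false_positive_mass = false_positive_rate * (1 - base_rate)
    return true_positive_mass / (true_positive_mass + false_positive_mass)


# HIV example, using the rounded false positive rate from Equation 9.10
posterior_alternative = posterior_from_rates(
    sensitivity=0.95, false_positive_rate=0.007171515, base_rate=0.003
)

# Original formulation (Equation 9.8), which needs the base rate of a positive test
posterior_original = 0.95 * 0.003 / 0.01

print(round(posterior_alternative, 3))  # 0.285
print(round(posterior_original, 3))     # 0.285
```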

The petersenlab package (Petersen, 2025) contains the pAgivenB() function that estimates the probability of one event, \(A\), given another event, \(B\).

To calculate the conditional probability of HIV given a second positive test, we update our priors because the person has now tested positive for HIV. We update the prior probability of HIV (\(P(C_i)\)) based on the posterior probability of HIV after a positive test (\(P(C | R)\)) that we calculated above. We can calculate the conditional probability of HIV given a second positive test using three terms: the conditional probability that a person with HIV gets a positive test (i.e., sensitivity; which stays the same), the conditional probability that a person without HIV gets a positive test (i.e., false positive rate; which stays the same), and the updated marginal probability of HIV. The conditional probability of HIV given a second positive test is in Equation 9.15:

\[ \scriptsize \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R | C) \cdot P(C_i) + P(R | \text{not } C) \cdot [1 - P(C_i)]} \\ &= \frac{\text{sensitivity of test} \times \text{updated base rate of HIV}}{\text{sensitivity of test} \times \text{updated base rate of HIV} + \text{false positive rate of test} \times (1 - \text{updated base rate of HIV})} \\ &= \frac{95\% \times 28.5\%}{95\% \times 28.5\% + .7171515\% \times (1 - 28.5\%)} = \frac{.95 \times .285}{.95 \times .285 + .007171515 \times (1 - .285)}\\ &= 98.14\% \end{aligned} \tag{9.15}\]

The petersenlab package (Petersen, 2025) contains the pAgivenB() function that estimates the probability of one event, \(A\), given another event, \(B\).

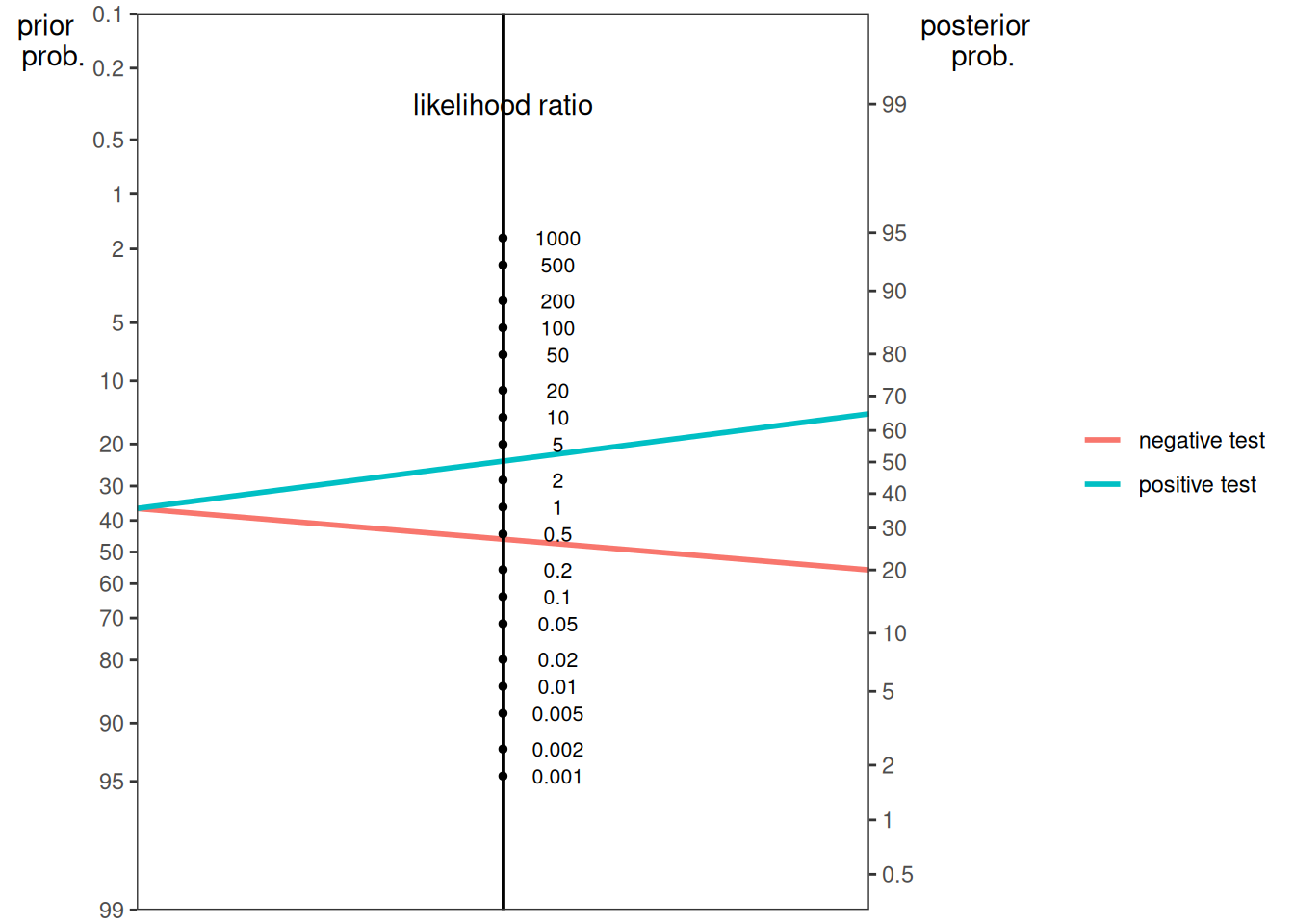

If we want to compare the relative probability of two outcomes, we can use the odds form of Bayes’ theorem, as in Equation 9.16:

\[ \begin{aligned} P(C | R) &= \frac{P(R | C) \cdot P(C_i)}{P(R_i)} \\ P(\text{not } C | R) &= \frac{P(R | \text{not } C) \cdot P(\text{not } C_i)}{P(R_i)} \\ \frac{P(C | R)}{P(\text{not } C | R)} &= \frac{\frac{P(R | C) \cdot P(C_i)}{P(R_i)}}{\frac{P(R | \text{not } C) \cdot P(\text{not } C_i)}{P(R_i)}} \\ &= \frac{P(R | C) \cdot P(C_i)}{P(R | \text{not } C) \cdot P(\text{not } C_i)} \\ &= \frac{P(C_i)}{P(\text{not } C_i)} \times \frac{P(R | C)}{P(R | \text{not } C)} \\ \text{posterior odds} &= \text{prior odds} \times \text{likelihood ratio} \end{aligned} \tag{9.16}\]
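To make the odds form concrete, here is a small Python sketch applied to the HIV example. The helper names are hypothetical, and the false positive rate is the rounded value from Equation 9.10. Converting the posterior odds back to a probability should reproduce the 28.5% obtained earlier.

```python
def probability_to_odds(p: float) -> float:
    """Convert a probability to odds: p / (1 - p)."""
    return p / (1 - p)


def odds_to_probability(odds: float) -> float:
    """Convert odds back to a probability: odds / (1 + odds)."""
    return odds / (1 + odds)


base_rate = 0.003                  # P(HIV)
sensitivity = 0.95                 # P(positive test | HIV)
false_positive_rate = 0.007171515  # P(positive test | no HIV), Equation 9.10

prior_odds = probability_to_odds(base_rate)
likelihood_ratio = sensitivity / false_positive_rate

# Equation 9.16: posterior odds = prior odds x likelihood ratio
posterior_odds = prior_odds * likelihood_ratio

print(round(posterior_odds, 4))                       # 0.3986
print(round(odds_to_probability(posterior_odds), 3))  # 0.285
```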

In sum, the marginal probability, including the prior probability or base rate, should be weighed heavily in predictions unless there are sufficient data to indicate otherwise, i.e., to update the posterior probability based on new evidence. Bayes’ theorem provides a powerful tool to anchor predictions to the base rate unless sufficient evidence changes the posterior probability (by updating the evidence and prior probability).

9.1.2 Prediction Accuracy

9.1.2.1 Decision Outcomes

To consider how we can evaluate the accuracy of predictions, consider an example adapted from Meehl & Rosen (1955). The military conducts a test of its prospective members to screen out applicants who would likely fail basic training. To evaluate the accuracy of our predictions using the test, we can examine a confusion matrix. A confusion matrix is a matrix that presents the predicted outcome on one dimension and the actual outcome (truth) on the other dimension. If the predictions and outcomes are dichotomous, the confusion matrix is a 2x2 matrix with two rows and two columns that represent four possible predicted-actual combinations (decision outcomes): true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

When discussing the four decision outcomes, “true” means an accurate judgment, whereas “false” means an inaccurate judgment. “Positive” means that the judgment was that the person has the characteristic of interest, whereas “negative” means that the judgment was that the person does not have the characteristic of interest. A true positive is a correct judgment (or prediction) where the judgment was that the person has (or will have) the characteristic of interest, and, in truth, they actually have (or will have) the characteristic. A true negative is a correct judgment (or prediction) where the judgment was that the person does not have (or will not have) the characteristic of interest, and, in truth, they actually do not have (or will not have) the characteristic. A false positive is an incorrect judgment (or prediction) where the judgment was that the person has (or will have) the characteristic of interest, and, in truth, they actually do not have (or will not have) the characteristic. A false negative is an incorrect judgment (or prediction) where the judgment was that the person does not have (or will not have) the characteristic of interest, and, in truth, they actually do have (or will have) the characteristic.

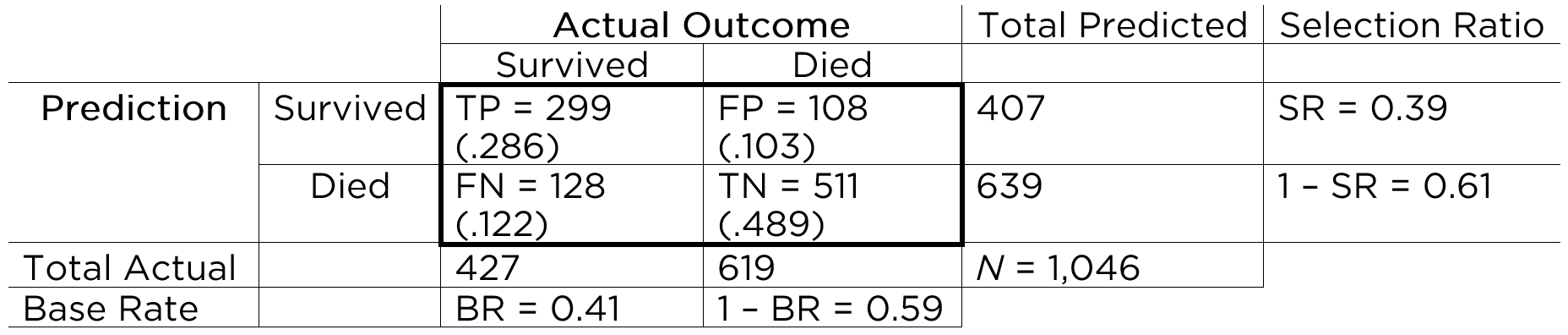

An example of a confusion matrix is in Figure 9.4.

With the information in the confusion matrix, we can calculate the marginal sums and the proportion of people in each cell (in parentheses), as depicted in Figure 9.5.

That is, we can sum across the rows and columns to identify how many people actually showed poor adjustment (\(n = 100\)) versus good adjustment (\(n = 1,900\)), and how many people were selected to reject (\(n = 508\)) versus retain (\(n = 1,492\)). If we sum the column of predicted marginal sums (\(508 + 1,492\)) or the row of actual marginal sums (\(100 + 1,900\)), we get the total number of people (\(N = 2,000\)).

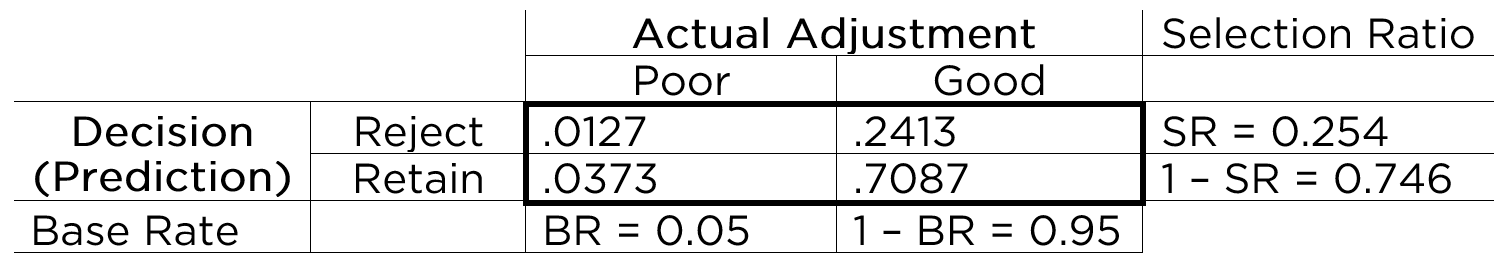

Based on the marginal sums, we can compute the marginal probabilities, as depicted in Figure 9.6.

The marginal probability of the person having the characteristic of interest (i.e., showing poor adjustment) is called the base rate (BR). That is, the base rate is the proportion of people who have the characteristic. It is calculated by dividing the number of people with poor adjustment (\(n = 100\)) by the total number of people (\(N = 2,000\)): \(BR = \frac{FN + TP}{N}\). Here, the base rate reflects the prevalence of poor adjustment. In this case, the base rate is .05, so there is a 5% chance that an applicant will be poorly adjusted. The marginal probability of good adjustment is equal to 1 minus the base rate of poor adjustment.

The marginal probability of predicting that a person has the characteristic (i.e., rejecting a person) is called the selection ratio (SR). The selection ratio is the proportion of people who will be selected (in this case, rejected rather than retained); i.e., the proportion of people who are identified as having the characteristic. The selection ratio is calculated by dividing the number of people selected to reject (\(n = 508\)) by the total number of people (\(N = 2,000\)): \(SR = \frac{TP + FP}{N}\). In this case, the selection ratio is .254, so 25.4% of people are rejected. The marginal probability of not selecting someone to reject (i.e., the marginal probability of retaining) is equal to 1 minus the selection ratio.
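The base rate and selection ratio can be computed directly from the marginal sums reported above (100 of 2,000 applicants showed poor adjustment; 508 of 2,000 were selected to reject). This Python sketch uses hypothetical variable names.

```python
n_total = 2000            # N: all applicants
n_poor_adjustment = 100   # actual positives (FN + TP)
n_rejected = 508          # predicted positives (TP + FP)

base_rate = n_poor_adjustment / n_total   # BR = (FN + TP) / N
selection_ratio = n_rejected / n_total    # SR = (TP + FP) / N

p_good_adjustment = 1 - base_rate         # marginal probability of good adjustment
p_retain = 1 - selection_ratio            # marginal probability of retaining

print(base_rate)                   # 0.05
print(selection_ratio)             # 0.254
print(round(p_good_adjustment, 3)) # 0.95
print(round(p_retain, 3))          # 0.746
```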

The selection ratio might be something that the test dictates according to its cutoff score. Or, the selection ratio might be imposed by external factors that place limits on how many people you can assign a positive test value. For instance, when deciding whether to treat a client, the selection ratio may depend on how many therapists are available and how many cases can be treated.

9.1.2.2 Percent Accuracy

Based on the confusion matrix, we can calculate the prediction accuracy based on the percent accuracy of the predictions. The percent accuracy is the number of correct predictions divided by the total number of predictions, and multiplied by 100. In the context of a confusion matrix, this is calculated as: \(100\% \times \frac{\text{TP} + \text{TN}}{N}\). In this case, our percent accuracy was 78%—that is, 78% of our predictions were accurate, and 22% of our predictions were inaccurate.

9.1.2.3 Percent Accuracy by Chance

78% sounds pretty accurate. And it is much higher than 50%, so we are doing a pretty good job, right? Well, it is important to compare our accuracy to what accuracy we would expect to get by chance alone, if predictions were made by a random process rather than using a test’s scores. Our selection ratio was 25.4%. How accurate would we be if we randomly selected 25.4% of people to reject? To determine what accuracy we could get by chance alone given the selection ratio and the base rate, we can calculate the chance probability of true positives and the chance probability of true negatives. The probability of a given cell in the confusion matrix is a joint probability, that is, the probability of two events occurring simultaneously. If predictions are made by chance, the prediction and the actual outcome are independent, so we can calculate the joint probability by multiplying the marginal probability of each event.

So, to get the chance expectancies of true positives, we would multiply the respective marginal probabilities, as in Equation 9.17:

\[ \begin{aligned} P(TP) &= P(\text{Poor adjustment}) \times P(\text{Reject})\\ &= BR \times SR \\ &= .05 \times .254 \\ &= .0127 \end{aligned} \tag{9.17}\]

To get the chance expectancies of true negatives, we would multiply the respective marginal probabilities, as in Equation 9.18:

\[ \begin{aligned} P(TN) &= P(\text{Good adjustment}) \times P(\text{Retain})\\ &= (1 - BR) \times (1 - SR) \\ &= .95 \times .746 \\ &= .7087 \end{aligned} \tag{9.18}\]

To get the percent accuracy by chance, we sum the chance expectancies for the correct predictions (TP and TN): \(.0127 + .7087 = .7214\). Thus, the percent accuracy you can get by chance alone is 72%. This is because most of our predictions are to retain people, and the base rate of poor adjustment is quite low (.05). Our measure with 78% accuracy provides an increment of only about 6 percentage points in correct predictions over chance. Thus, you cannot judge how good your judgment or prediction is until you know how you would do by random chance.

The chance expectancies for each cell of the confusion matrix are in Figure 9.7.
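As a sketch of how such chance expectancies can be computed, the Python code below multiplies the marginal probabilities for each of the four cells, using the base rate (.05) and selection ratio (.254) from the example. The dictionary keys are hypothetical labels.

```python
base_rate = 0.05         # P(poor adjustment)
selection_ratio = 0.254  # P(reject)

# Under chance, each cell is the product of its two marginal probabilities
chance = {
    "TP": base_rate * selection_ratio,              # poor adjustment, rejected
    "FP": (1 - base_rate) * selection_ratio,        # good adjustment, rejected
    "FN": base_rate * (1 - selection_ratio),        # poor adjustment, retained
    "TN": (1 - base_rate) * (1 - selection_ratio),  # good adjustment, retained
}

# Percent accuracy by chance: proportion of correct predictions (TP + TN)
accuracy_by_chance = chance["TP"] + chance["TN"]

for cell, probability in chance.items():
    print(cell, round(probability, 4))
print("accuracy by chance:", round(accuracy_by_chance, 4))  # 0.7214
```

With these inputs, the four cells come out to roughly .0127 (TP), .2413 (FP), .0373 (FN), and .7087 (TN), which sum to 1.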

9.1.2.4 Predicting from the Base Rate

Now, let us consider how well you would do if you were to predict from the base rate. Predicting from the base rate is also called “betting from the base rate”, and it involves setting the selection ratio by taking advantage of the base rate so that you go with the most likely outcome in every prediction. Because the base rate is quite low (.05), we could predict from the base rate by selecting no one to reject (i.e., setting the selection ratio at zero). Our percent accuracy by chance if we predict from the base rate would be calculated by multiplying the marginal probabilities, as we did above, but with a new selection ratio, as in Equation 9.19:

\[ \begin{aligned} P(TP) &= P(\text{Poor adjustment}) \times P(\text{Reject})\\ &= BR \times SR \\ &= .05 \times 0 \\ &= 0 \\ \\ P(TN) &= P(\text{Good adjustment}) \times P(\text{Retain})\\ &= (1 - BR) \times (1 - SR) \\ &= .95 \times 1 \\ &= .95 \end{aligned} \tag{9.19}\]

We sum the chance expectancies for the correct predictions (TP and TN): \(0 + .95 = .95\). Thus, our percent accuracy by predicting from the base rate is 95%. This is damning to our measure because it is a much higher accuracy than the accuracy of our measure. That is, we can be much more accurate than our measure simply by predicting from the base rate and selecting no one to reject.
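To see how the selection ratio changes the accuracy expected by chance, the sketch below wraps the calculation in a small function and evaluates it at several selection ratios. `accuracyByChance()` is a hypothetical helper written for this illustration; the selection ratios other than 0 and .254 are arbitrary values chosen for comparison.

```r
# Hypothetical helper: percent accuracy expected by chance for a given
# base rate and selection ratio (sum of the TP and TN chance expectancies)
accuracyByChance <- function(baseRate, selectionRatio) {
  baseRate * selectionRatio + (1 - baseRate) * (1 - selectionRatio)
}

selectionRatios <- c(0, .05, .254, 1)

chanceTable <- data.frame(
  selectionRatio = selectionRatios,
  accuracy = accuracyByChance(baseRate = .05, selectionRatio = selectionRatios)
)

chanceTable
```

With a base rate of .05, the accuracy expected by chance is highest when the selection ratio is zero (i.e., when we predict the most likely outcome for everyone) and falls as we reject more people.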

Going with the most likely outcome in every prediction (predicting from the base rate) can be highly accurate (in terms of percent accuracy) as noted by Meehl & Rosen (1955), especially when the base rate is very low or very high. This should serve as an important reminder that we need to compare the accuracy of our measures to the accuracy by (1) random chance and (2) predicting from the base rate. There are several important implications of the impact of base rates on prediction accuracy. One implication is that using the same test in different settings with different base rates will markedly change the accuracy of the test. Oftentimes, using a test will actually decrease the predictive accuracy when the base rate deviates greatly from .50. But percent accuracy is not everything. Percent accuracy treats different kinds of errors as if they are equally important. However, the value we place on different kinds of errors may be different, as described next.

9.1.2.5 Different Kinds of Errors Have Different Costs

Some errors have a high cost, and some errors have a low cost. Among the four decision outcomes, there are two types of errors: false positives and false negatives. The extent to which false positives and false negatives are costly depends on the prediction problem. So, even though you can often be most accurate by going with the base rate, it may be advantageous to use a screening instrument despite lower overall accuracy because of the huge difference in costs of false positives versus false negatives in some cases.

Consider the example of a screening instrument for HIV. False positives would be cases where we said that someone is at high risk of HIV when they are not, whereas false negatives are cases where we said that someone is not at high risk when they actually are. The costs of false positives include a shortage of blood, some follow-up testing, and potentially some anxiety, but that is about it. The costs of false negatives may be people getting HIV. In this case, the costs of false negatives greatly outweigh the costs of false positives, so we use a screening instrument to try to identify the cases at high risk for HIV because of the important consequences of failing to do so, even though using the screening instrument will lower our overall accuracy level.

Another example is when the Central Intelligence Agency (CIA) used a screen for prospective typists during wartime to try to detect spies. False positives would be cases where the CIA believes that a person is a spy when they are not, and the CIA does not hire them. False negatives would be cases where the CIA believes that a person is not a spy when they actually are, and the CIA hires them. In this case, a false positive would be fine, but a false negative would be really bad.

How you weigh the costs of different errors depends considerably on the domain and context. Possible costs of false positives to society include: unnecessary and costly treatment with side effects and sending an innocent person to jail (despite our presumption of innocence in the United States criminal justice system that a person is innocent until proven guilty). Possible costs of false negatives to society include: setting a guilty person free, failing to detect a bomb or tumor, and preventing someone from getting treatment who needs it.

The differential costs of different errors also depend on how much flexibility you have in the selection ratio in being able to set a stringent versus loose selection ratio. Consider if there is a high cost of getting rid of people during the selection process. For example, if you must hire 100 people and only 100 people apply for the position, you cannot lose people, so you need to hire even high-risk people. However, if you do not need to hire many people, then you can hire more conservatively.

Any time the selection ratio differs from the base rate, you will make errors. For example, if you reject 25% of applicants, and the base rate of poor adjustment is 5%, then you are making errors of over-rejecting (false positives). By contrast, if you reject 1% of applicants and the base rate of poor adjustment is 5%, then you are making errors of under-rejecting or over-accepting (false negatives).

A low base rate makes it harder to make predictions and tends to lead to less accurate predictions. For instance, it is very challenging to predict low base rate behaviors, including suicide (Kessler et al., 2020). The difficulty in predicting events with a low base rate is apparent with the true score formula from classical test theory: \(X = T + e\). As described in Equation 4.10, reliability is the ratio of true score variance to observed score variance. As true score variance increases, reliability increases. If the base rate is .05, the variance of a dichotomous true score is at most \(BR \times (1 - BR) = .05 \times .95 \approx .05\), far below the maximum of .25 that is possible when the base rate is .50. The lower true score variance makes the measure less reliable and makes accurate predictions harder.

9.1.2.6 Sensitivity, Specificity, PPV, and NPV

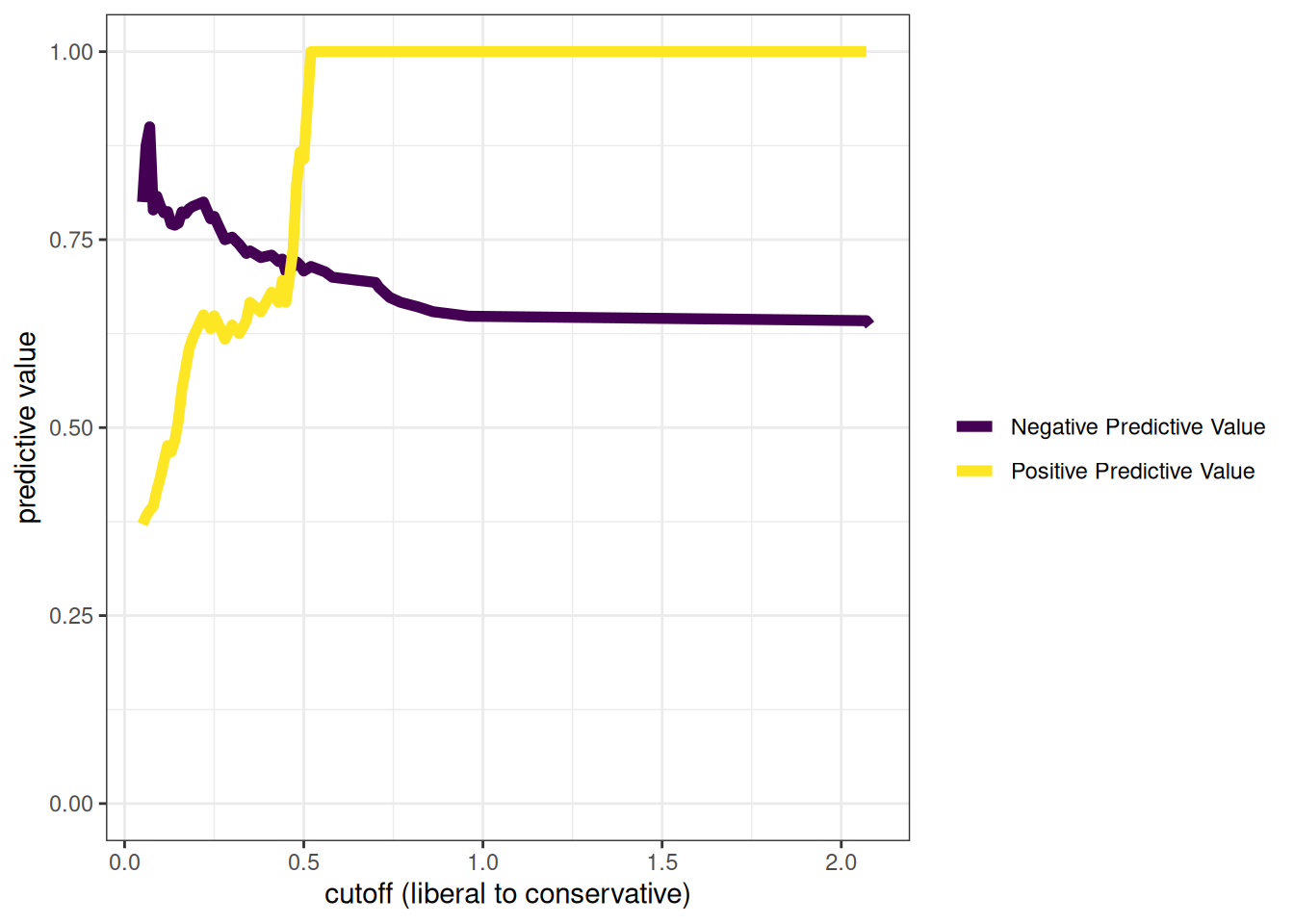

As described earlier, percent accuracy is not the only important aspect of accuracy. Percent accuracy can be misleading because it is highly influenced by base rates. You can have a high percent accuracy by predicting from the base rate and saying that no one has the condition (if the base rate is low) or that everyone has the condition (if the base rate is high). Thus, it is also important to consider other aspects of accuracy, including sensitivity (SN), specificity (SP), positive predictive value (PPV), and negative predictive value (NPV). We want our predictions to be sensitive so that we detect the characteristic when it is present, but also to be specific so that we classify only people who actually have the characteristic as having the characteristic.

Let us return to the confusion matrix in Figure 9.8. If we know the frequency of each of the four predicted-actual combinations of the confusion matrix (TP, TN, FP, FN), we can calculate sensitivity, specificity, PPV, and NPV.

Sensitivity is the proportion of those with the characteristic (\(\text{TP} + \text{FN}\)) that we identified with our measure (\(\text{TP}\)): \(\frac{\text{TP}}{\text{TP} + \text{FN}} = \frac{86}{86 + 14} = .86\). Specificity is the proportion of those who do not have the characteristic (\(\text{TN} + \text{FP}\)) that we correctly classify as not having the characteristic (\(\text{TN}\)): \(\frac{\text{TN}}{\text{TN} + \text{FP}} = \frac{1,478}{1,478 + 422} = .78\). PPV is the proportion of those who we classify as having the characteristic (\(\text{TP} + \text{FP}\)) who actually have the characteristic (\(\text{TP}\)): \(\frac{\text{TP}}{\text{TP} + \text{FP}} = \frac{86}{86 + 422} = .17\). NPV is the proportion of those we classify as not having the characteristic (\(\text{TN} + \text{FN}\)) who actually do not have the characteristic (\(\text{TN}\)): \(\frac{\text{TN}}{\text{TN} + \text{FN}} = \frac{1,478}{1,478 + 14} = .99\).
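The four estimates can be computed directly from the cell counts of the confusion matrix. The sketch below uses the counts from the text; the object names are illustrative and are not taken from any package.

```r
# Cell counts of the confusion matrix used in the text
TP <- 86   # true positives
FN <- 14   # false negatives
TN <- 1478 # true negatives
FP <- 422  # false positives

sensitivity <- TP / (TP + FN) # of those with the characteristic, proportion detected
specificity <- TN / (TN + FP) # of those without it, proportion correctly cleared
PPV <- TP / (TP + FP)         # of positive classifications, proportion correct
NPV <- TN / (TN + FN)         # of negative classifications, proportion correct

# Percent accuracy, for comparison
percentAccuracy <- (TP + TN) / (TP + TN + FP + FN)

round(c(sensitivity = sensitivity, specificity = specificity,
        PPV = PPV, NPV = NPV, percentAccuracy = percentAccuracy), 2)
```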

Sensitivity, specificity, PPV, and NPV are proportions, and their values therefore range from 0 to 1, where higher values reflect greater accuracy. With sensitivity, specificity, PPV, and NPV, we have a good snapshot of how accurate the measure is at a given cutoff. In our case, our measure is good at finding whom to reject (high sensitivity), but it is rejecting too many people who do not need to be rejected (lower PPV due to many FPs). Most people whom we classify as having the characteristic do not actually have the characteristic. However, the fact that we are over-rejecting could be okay depending on our goals, for instance, if we do not care about over-dropping (i.e., the PPV being low).

9.1.2.6.1 Some Accuracy Estimates Depend on the Cutoff

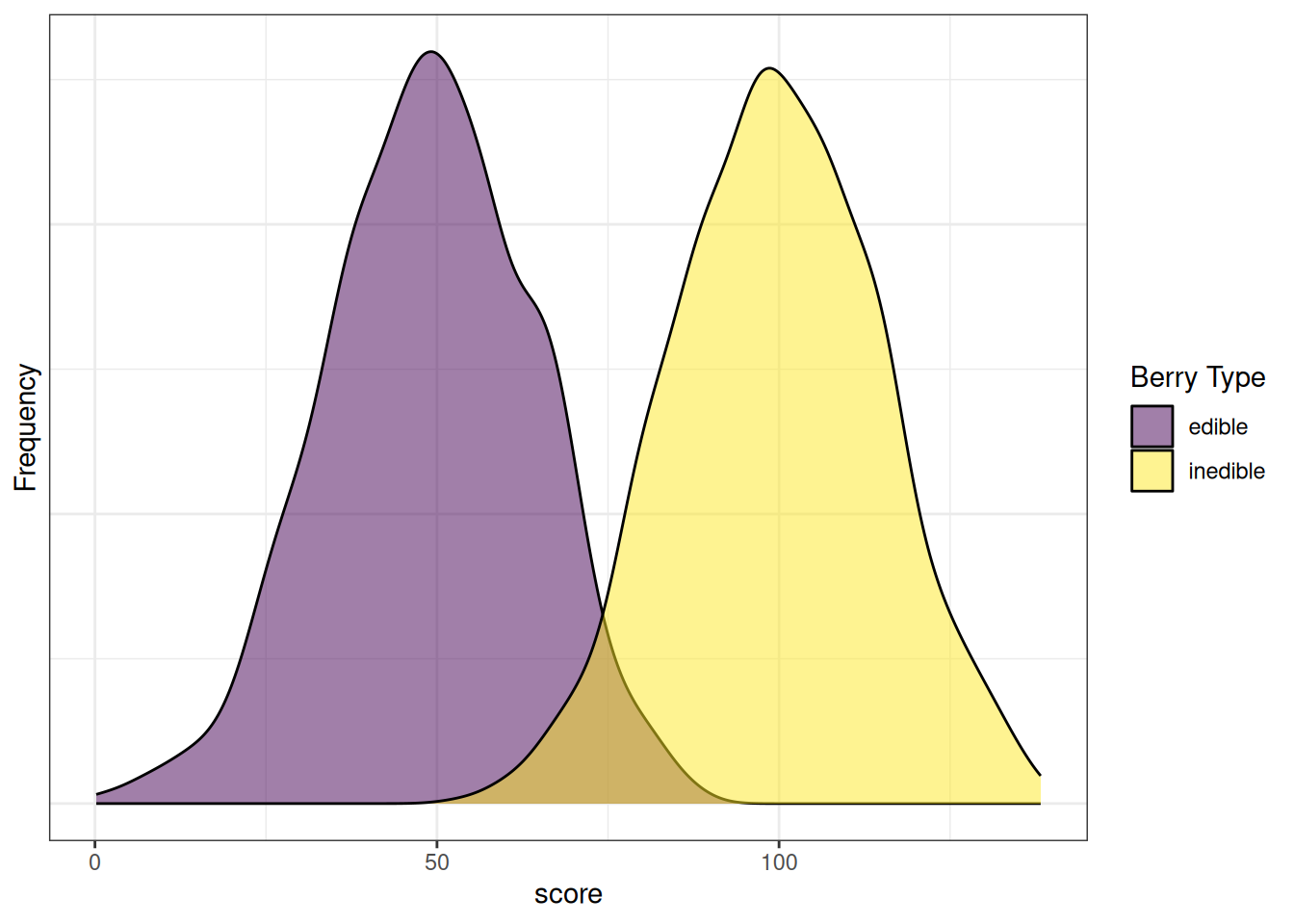

Sensitivity, specificity, PPV, and NPV differ based on the cutoff (i.e., threshold) for classification. Consider the following example. Aliens visit Earth, and they develop a test to determine whether a berry is edible or inedible.

Figure 9.9 depicts the distributions of scores by berry type. Note how there are clearly two distinct distributions. However, the distributions overlap to some degree. Thus, any cutoff will have at least some inaccurate classifications. The extent of overlap of the distributions reflects the amount of measurement error of the measure with respect to the characteristic of interest.

Code

#No Cutoff

ggplot(data = edibleData, aes(x = score, ymin = 0, fill = type)) +

geom_density(alpha = .5) +

scale_fill_manual(name = "Berry Type", values = c(viridis(2)[1], viridis(2)[2])) +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(axis.text.y = element_blank(),

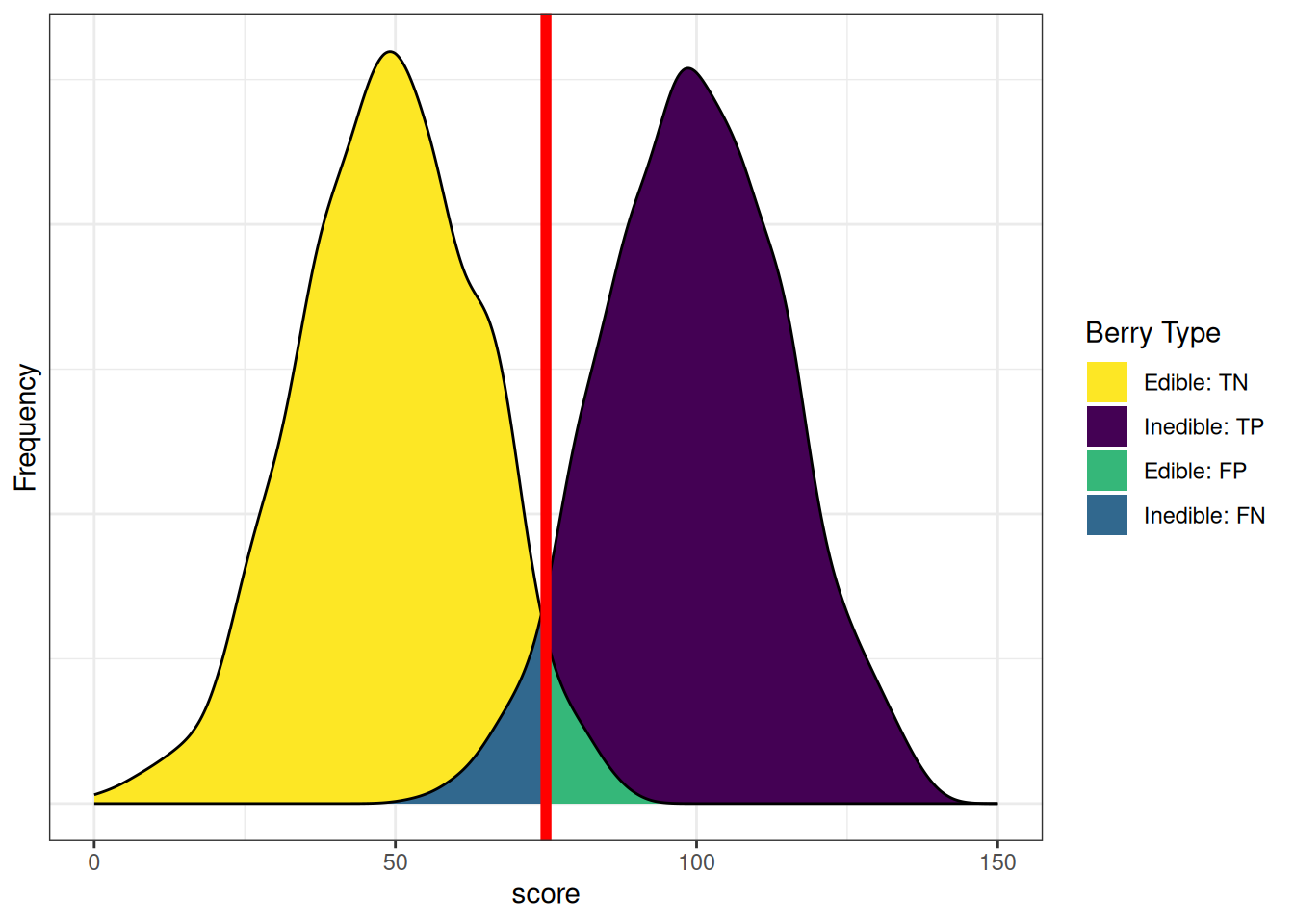

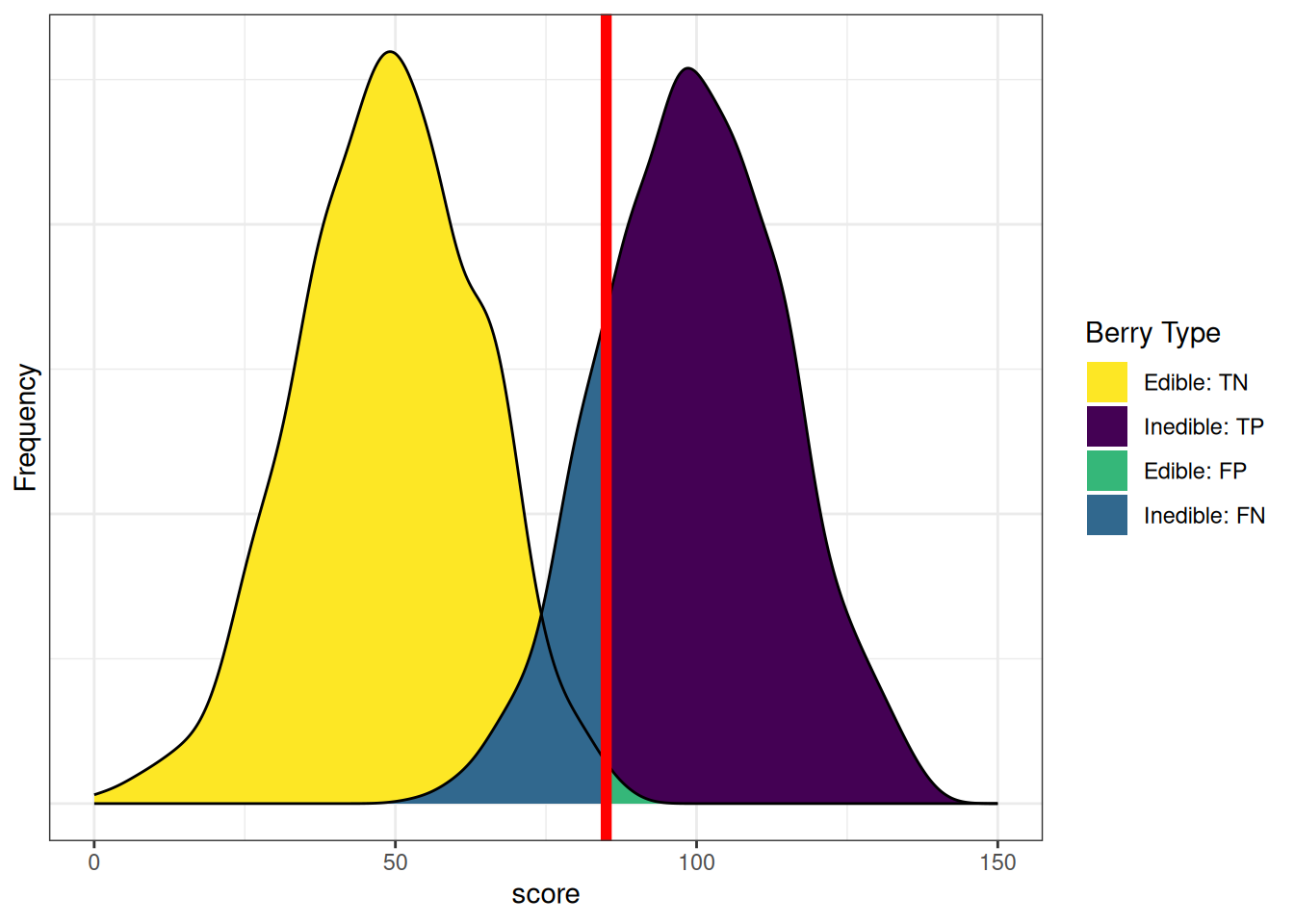

axis.ticks.y = element_blank())Figure 9.10 depicts the distributions of scores by berry type with a cutoff. The red line indicates the cutoff—the level above which berries are classified by the test as inedible. There are errors on each side of the cutoff. Below the cutoff, there are some false negatives (blue): inedible berries that are inaccurately classified as edible. Above the cutoff, there are some false positives (green): edible berries that are inaccurately classified as inedible. Costs of false negatives could include sickness or death from eating the inedible berries. Costs of false positives could include taking longer to find food, finding insufficient food, and starvation.

Code

#Standard Cutoff

ggplot(data = density_data, aes(x = x, ymin = 0, ymax = y, fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(name = "Berry Type",

values = c(viridis(4)[4], viridis(4)[1], viridis(4)[3], viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(xintercept = cutoff, color = "red", linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(axis.text.y = element_blank(),

axis.ticks.y = element_blank())

Based on our assessment goals, we might use a different selection ratio by changing the cutoff. Figure 9.11 depicts the distributions of scores by berry type when we raise the cutoff. There are now more false negatives (blue) and fewer false positives (green). If we raise the cutoff (to be more conservative), the number of false negatives increases and the number of false positives decreases. Consequently, as the cutoff increases, sensitivity and NPV decrease (because we have more false negatives), whereas specificity and PPV increase (because we have fewer false positives). A higher cutoff could be optimal if the costs of false positives are considered greater than the costs of false negatives, for instance, if the aliens cannot risk missing edible berries because edible berries are in short supply relative to the size of the alien colony, and eating the inedible berries would, at worst, lead to minor, temporary discomfort.

Code

#Raise the cutoff

cutoff <- 85

hist_edible <- density(edibleScores, from = 0, to = 150) %$%

data.frame(x = x, y = y) %>%

mutate(area = x >= cutoff)

hist_edible$type[hist_edible$area == TRUE] <- "edible_FP"

hist_edible$type[hist_edible$area == FALSE] <- "edible_TN"

hist_inedible <- density(inedibleScores, from = 0, to = 150) %$%

data.frame(x = x, y = y) %>%

mutate(area = x < cutoff)

hist_inedible$type[hist_inedible$area == TRUE] <- "inedible_FN"

hist_inedible$type[hist_inedible$area == FALSE] <- "inedible_TP"

density_data <- bind_rows(hist_edible, hist_inedible)

density_data$type <- factor(density_data$type, levels = c("edible_TN","inedible_TP","edible_FP","inedible_FN"))

ggplot(data = density_data, aes(x = x, ymin = 0, ymax = y, fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(name = "Berry Type",

values = c(viridis(4)[4], viridis(4)[1], viridis(4)[3], viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(xintercept = cutoff, color = "red", linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(axis.text.y = element_blank(),

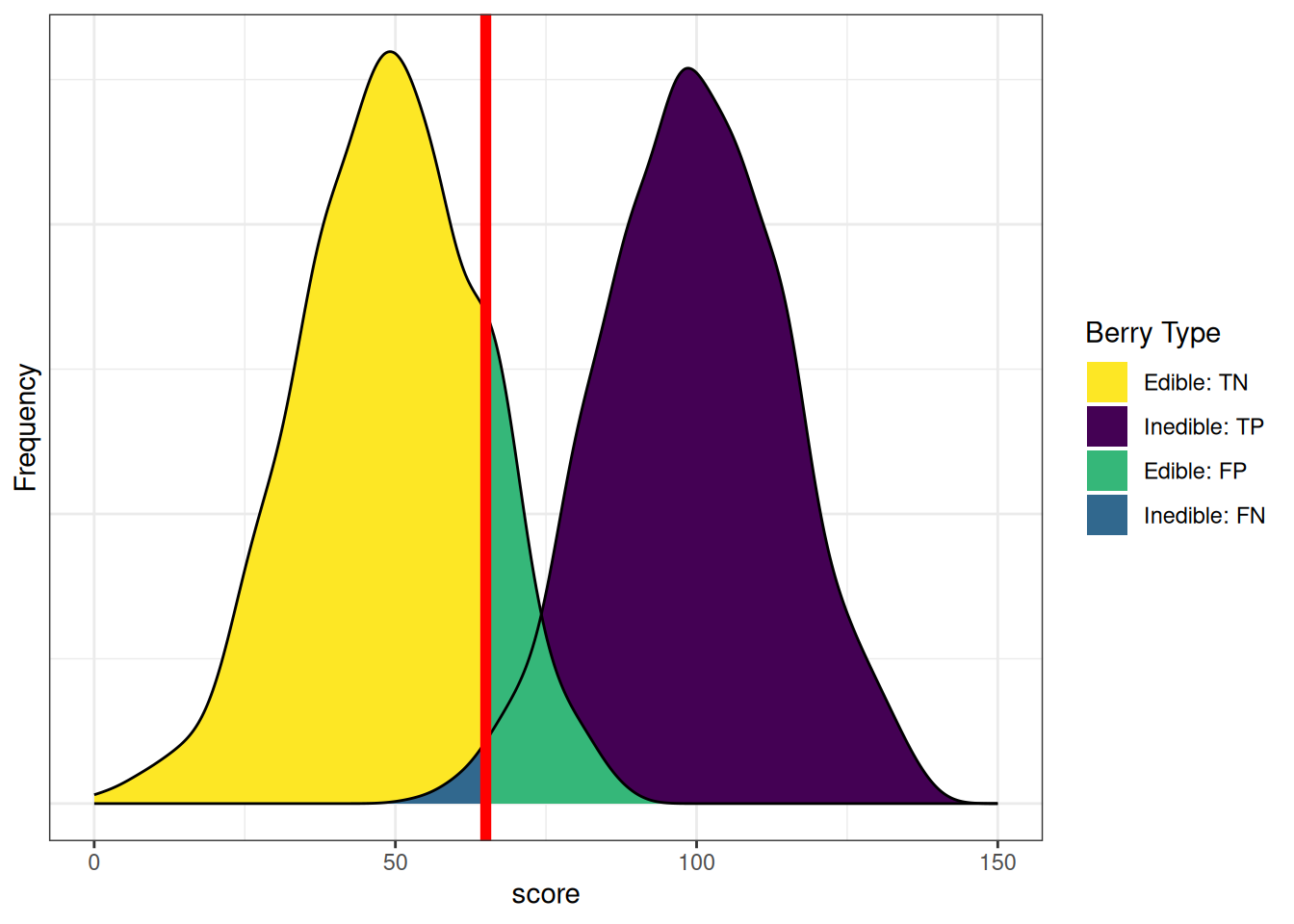

axis.ticks.y = element_blank())Figure 9.12 depicts the distributions of scores by berry type when we lower the cutoff. There are now fewer false negatives (blue) and more false positives (green). If we lower the cutoff (to be more liberal), the number of false negatives decreases and the number of false positives increases. Consequently, as the cutoff decreases, sensitivity and NPV increase (because we have fewer false negatives), whereas specificity and PPV decrease (because we have more false positives). A lower cutoff could be optimal if the costs of false negatives are considered greater than the costs of false positives. For instance, if the aliens cannot risk missing edible berries because they are in short supply relative to the size of the alien colony, and eating the inedible berries would, at worst, lead to minor, temporary discomfort.

Code

#Lower the cutoff

cutoff <- 65

hist_edible <- density(edibleScores, from = 0, to = 150) %$%

data.frame(x = x, y = y) %>%

mutate(area = x >= cutoff)

hist_edible$type[hist_edible$area == TRUE] <- "edible_FP"

hist_edible$type[hist_edible$area == FALSE] <- "edible_TN"

hist_inedible <- density(inedibleScores, from = 0, to = 150) %$%

data.frame(x = x, y = y) %>%

mutate(area = x < cutoff)

hist_inedible$type[hist_inedible$area == TRUE] <- "inedible_FN"

hist_inedible$type[hist_inedible$area == FALSE] <- "inedible_TP"

density_data <- bind_rows(hist_edible, hist_inedible)

density_data$type <- factor(density_data$type, levels = c("edible_TN","inedible_TP","edible_FP","inedible_FN"))

ggplot(data = density_data, aes(x = x, ymin = 0, ymax = y, fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(name = "Berry Type",

values = c(viridis(4)[4], viridis(4)[1], viridis(4)[3], viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(xintercept = cutoff, color = "red", linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(axis.text.y = element_blank(),

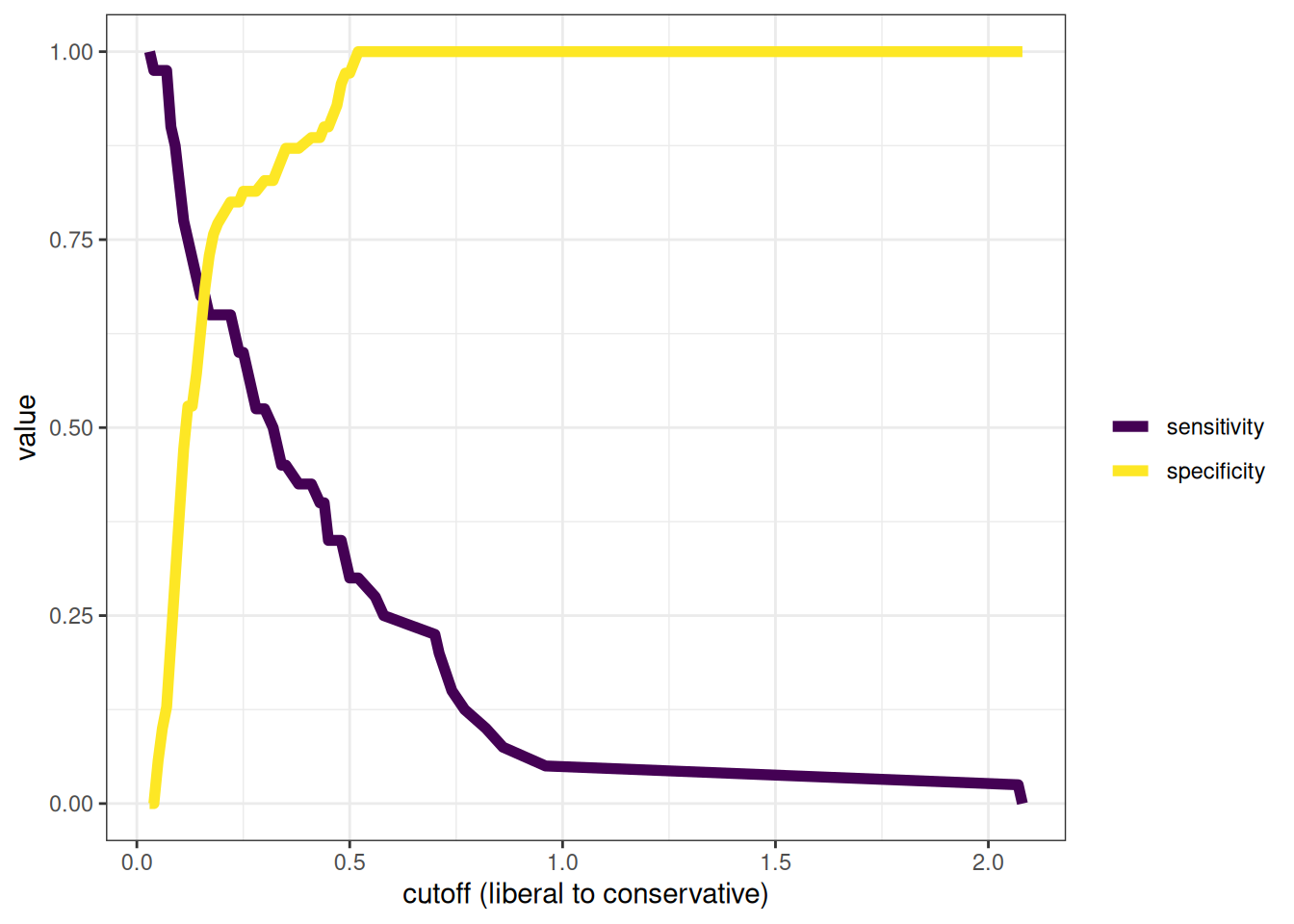

axis.ticks.y = element_blank())In sum, sensitivity and specificity differ based on the cutoff for classification. if we raise the cutoff, sensitivity and PPV increase (due to fewer false positives), whereas and sensitivity and NPV decrease (due to more false negatives). If we lower the cutoff, sensitivity and NPV increase (due to fewer false negatives), whereas specificity and PPV decrease (due to more false positives). Thus, the optimal cutoff depends on how costly each type of error is: false negatives and false positives. If false negatives are more costly than false positives, we would set a low cutoff. If false positives are more costly than false negatives, we would set a high cutoff.
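The effect of the cutoff can also be checked numerically. The sketch below assumes hypothetical normal score distributions for edible and inedible berries (means of 60 and 90, a common standard deviation of 15, and equal numbers of each berry type); these values are illustrative assumptions and are not the values used to draw the figures. `accuracyAtCutoff()` is a hypothetical helper, not a function from any package.

```r
# Hypothetical helper: accuracy estimates at one or more cutoffs, where berries
# scoring at or above the cutoff are classified as inedible (a positive)
accuracyAtCutoff <- function(cutoff, meanEdible = 60, meanInedible = 90,
                             sdScore = 15, baseRate = .5) {
  # P(classified inedible | actually inedible)
  sensitivity <- 1 - pnorm(cutoff, mean = meanInedible, sd = sdScore)
  # P(classified edible | actually edible)
  specificity <- pnorm(cutoff, mean = meanEdible, sd = sdScore)
  # Predictive values combine sensitivity, specificity, and the base rate
  PPV <- (sensitivity * baseRate) /
    (sensitivity * baseRate + (1 - specificity) * (1 - baseRate))
  NPV <- (specificity * (1 - baseRate)) /
    (specificity * (1 - baseRate) + (1 - sensitivity) * baseRate)
  data.frame(cutoff, sensitivity, specificity, PPV, NPV)
}

# Lowered, intermediate, and raised cutoffs
results <- accuracyAtCutoff(cutoff = c(65, 75, 85))

round(results, 2)
```

Reading down the rows, sensitivity and NPV fall as the cutoff rises, whereas specificity and PPV climb.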

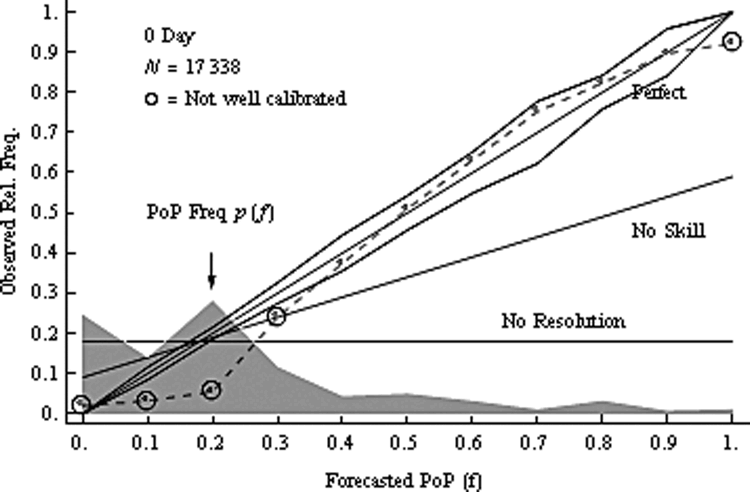

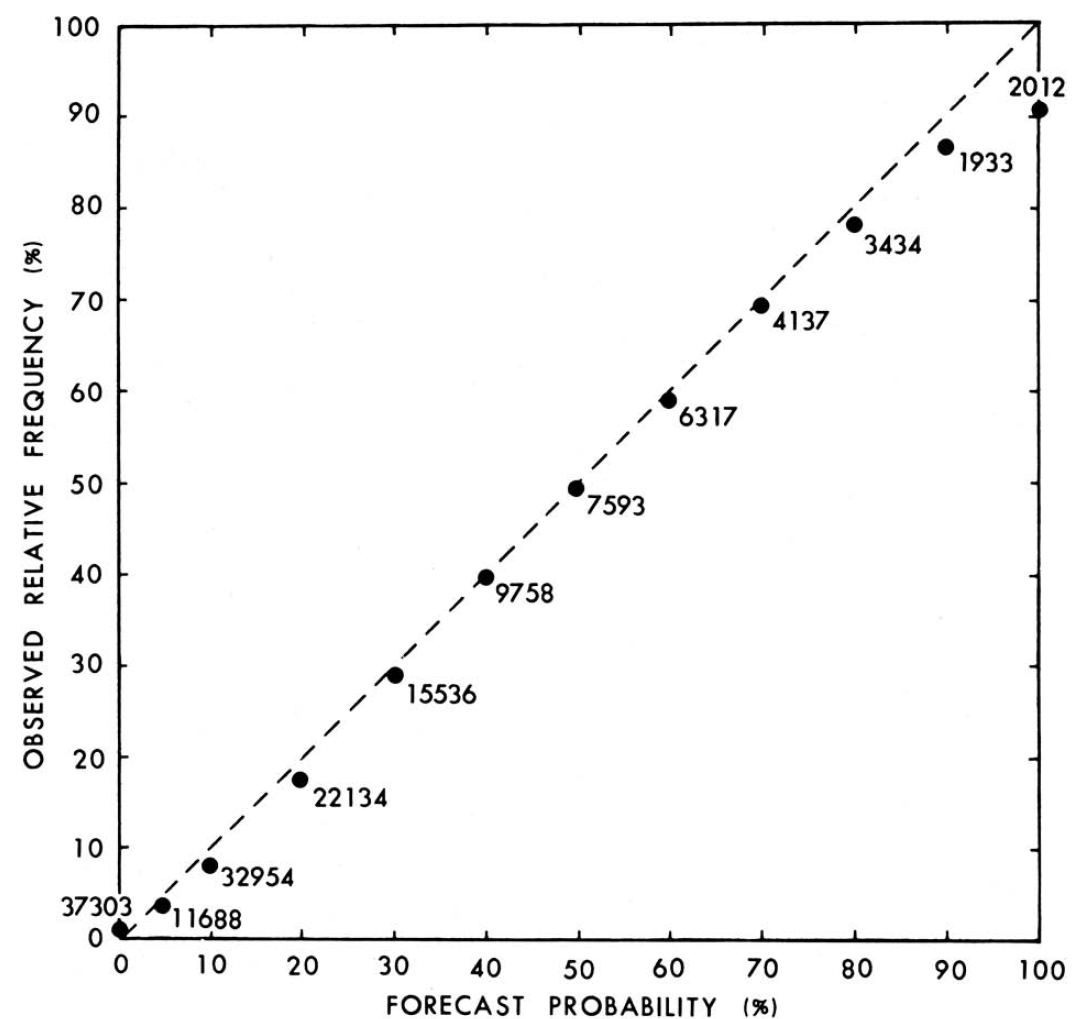

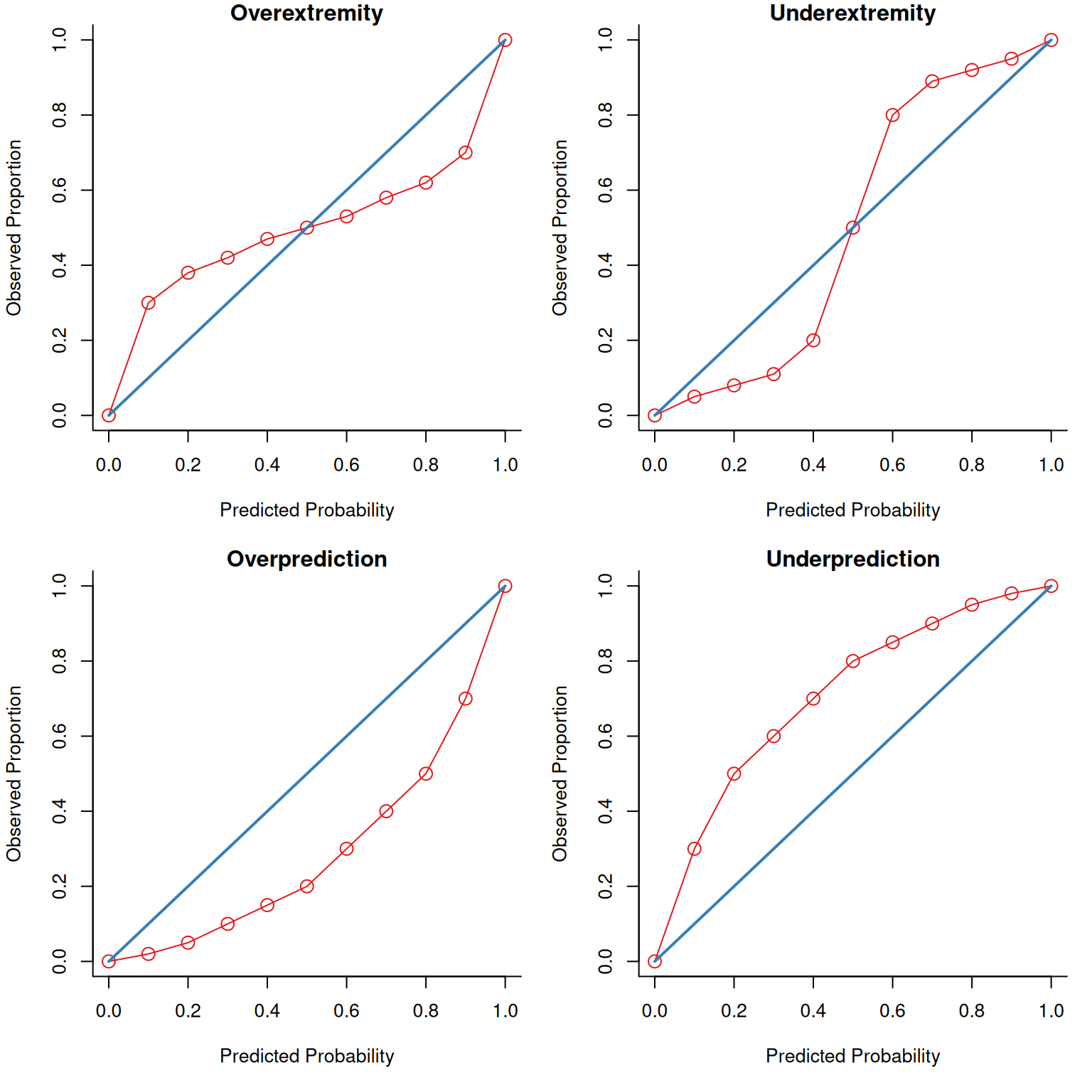

9.1.2.7 Signal Detection Theory

Signal detection theory (SDT) is a probability-based theory for the detection of a given stimulus (signal) from a stimulus set that includes non-target stimuli (noise). SDT arose through the development of radar (RAdio Detection And Ranging) and sonar (SOund Navigation And Ranging) in World War II, building on research on sensory perception. The military wanted to determine which objects on radar/sonar were enemy aircraft/submarines, and which were noise (e.g., different objects in the environment or even just the weather itself). SDT allowed determining how many errors operators made (how accurate they were) and decomposing errors into different kinds of errors. SDT distinguishes between sensitivity and bias. In SDT, sensitivity (or discriminability) is how well an assessment distinguishes between a target stimulus and non-target stimuli (i.e., how well the assessment detects the target stimulus amid non-target stimuli). Bias is the extent to which the probability of a selection decision from the assessment is higher or lower than the true rate of the target stimulus.

Some radar/sonar operators were not as sensitive to the differences between signal and noise, due to factors such as age, ability to distinguish gradations of a signal, etc. People who showed low sensitivity (i.e., who were not as successful at distinguishing between signal and noise) were screened out because the military perceived sensitivity as a skill that was not easily taught. By contrast, other operators could distinguish signal from noise, but their threshold was too low or too high: they could take in information, but their decisions tended to be wrong due to systematic bias or poor calibration. That is, they systematically over-rejected or under-rejected stimuli. Over-rejecting leads to many false negatives (i.e., saying that a stimulus is safe when it is not). Under-rejecting leads to many false positives (i.e., saying that a stimulus is harmful when it is not). A person who showed good sensitivity but systematic bias was considered more teachable than a person who showed low sensitivity. Thus, radar and sonar operators were selected based on their sensitivity to distinguish signal from noise, and then were trained to improve their calibration so that they reduce their systematic bias and do not systematically over- or under-reject.

Although SDT was originally developed for use in World War II, it now plays an important role in many areas of science and medicine. A medical application of SDT is tumor detection in radiology. SDT also plays an important role in psychology, especially cognitive psychology. For instance, research on social perception of sexual interest has shown that men tend to show a lack of sensitivity to differences in women’s affect (i.e., they have relative difficulties discriminating between friendliness and sexual interest; Farris et al., 2008). Men also tend to show systematic bias (poor calibration) such that they tend to over-estimate women’s sexual interest in them (i.e., men tend to have too low of a threshold for determining that a woman is showing sexual interest in them; Farris et al., 2006).

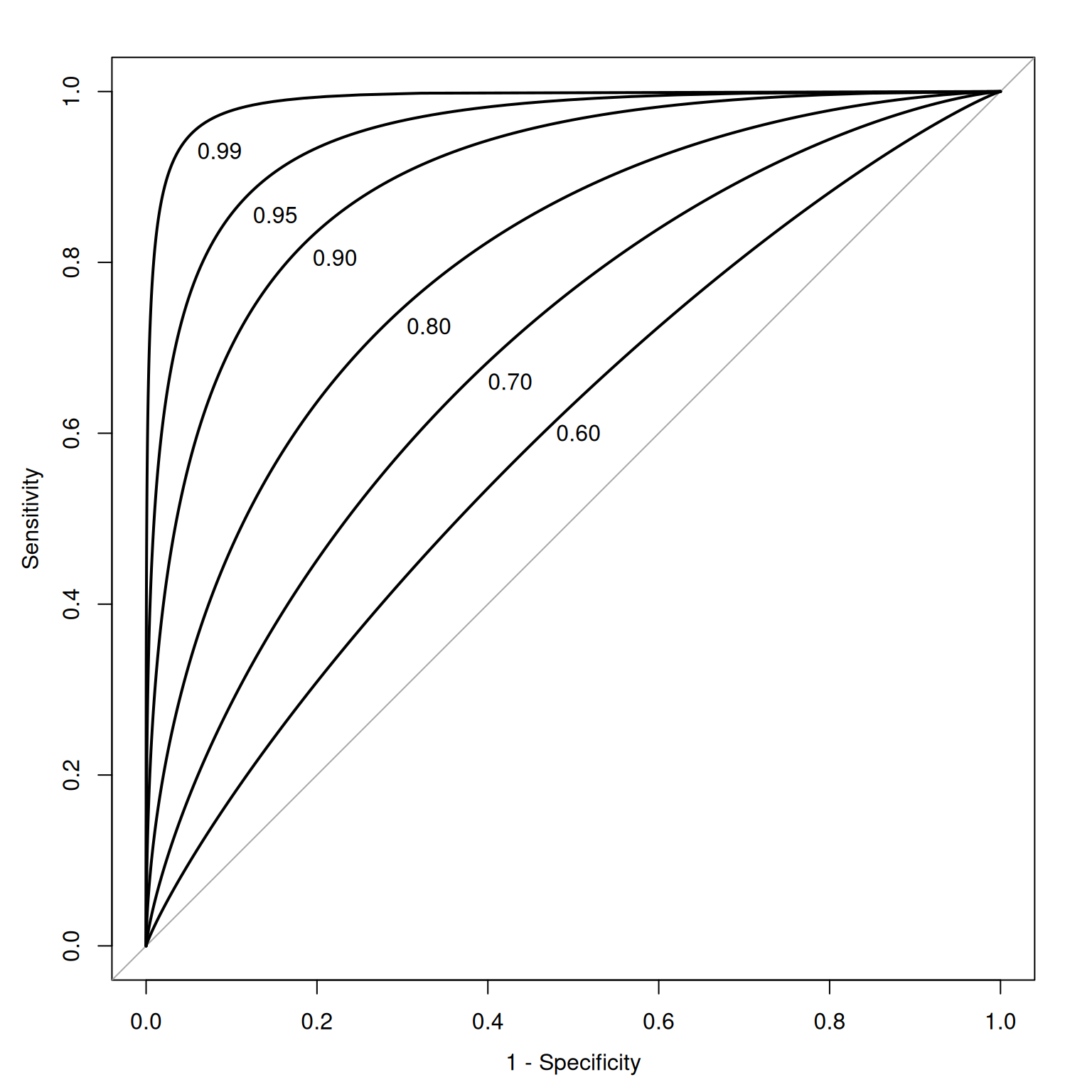

SDT metrics of sensitivity include \(d'\) (“\(d\)-prime”), \(A\) (or \(A'\)), and the area under the receiver operating characteristic (ROC) curve. SDT metrics of bias include \(\beta\) (beta), \(c\), and \(b\).
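As an illustration of how sensitivity and bias are separated, the sketch below computes \(d'\), \(c\), and \(\beta\) from a hit rate (\(H\)) and a false alarm rate (\(FA\)) using the standard equal-variance Gaussian formulas: \(d' = z(H) - z(FA)\), \(c = -\frac{z(H) + z(FA)}{2}\), and \(\beta = e^{d' \times c}\). The equal-variance assumption and the object names are assumptions made for this example; the hit and false alarm rates come from the confusion matrix used earlier (86/100 and 422/1,900).

```r
# Hit rate and false alarm rate from the confusion matrix used earlier
hitRate <- 86 / (86 + 14)            # sensitivity
falseAlarmRate <- 422 / (422 + 1478) # 1 - specificity

# Convert the rates to z scores (quantiles of the standard normal distribution)
zHit <- qnorm(hitRate)
zFalseAlarm <- qnorm(falseAlarmRate)

# Sensitivity (discriminability): separation of the signal and noise distributions
dPrime <- zHit - zFalseAlarm

# Bias: c is 0 for an unbiased criterion; negative values reflect a liberal
# criterion (a tendency to respond "signal"), positive values a conservative one
criterionC <- -(zHit + zFalseAlarm) / 2

# Bias expressed as a likelihood ratio at the criterion (equal-variance model)
betaBias <- exp(dPrime * criterionC)

round(c(dPrime = dPrime, c = criterionC, beta = betaBias), 2)
```

Here, \(d'\) is positive (the measure discriminates better than chance) and \(c\) is negative, which is consistent with a liberal criterion.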

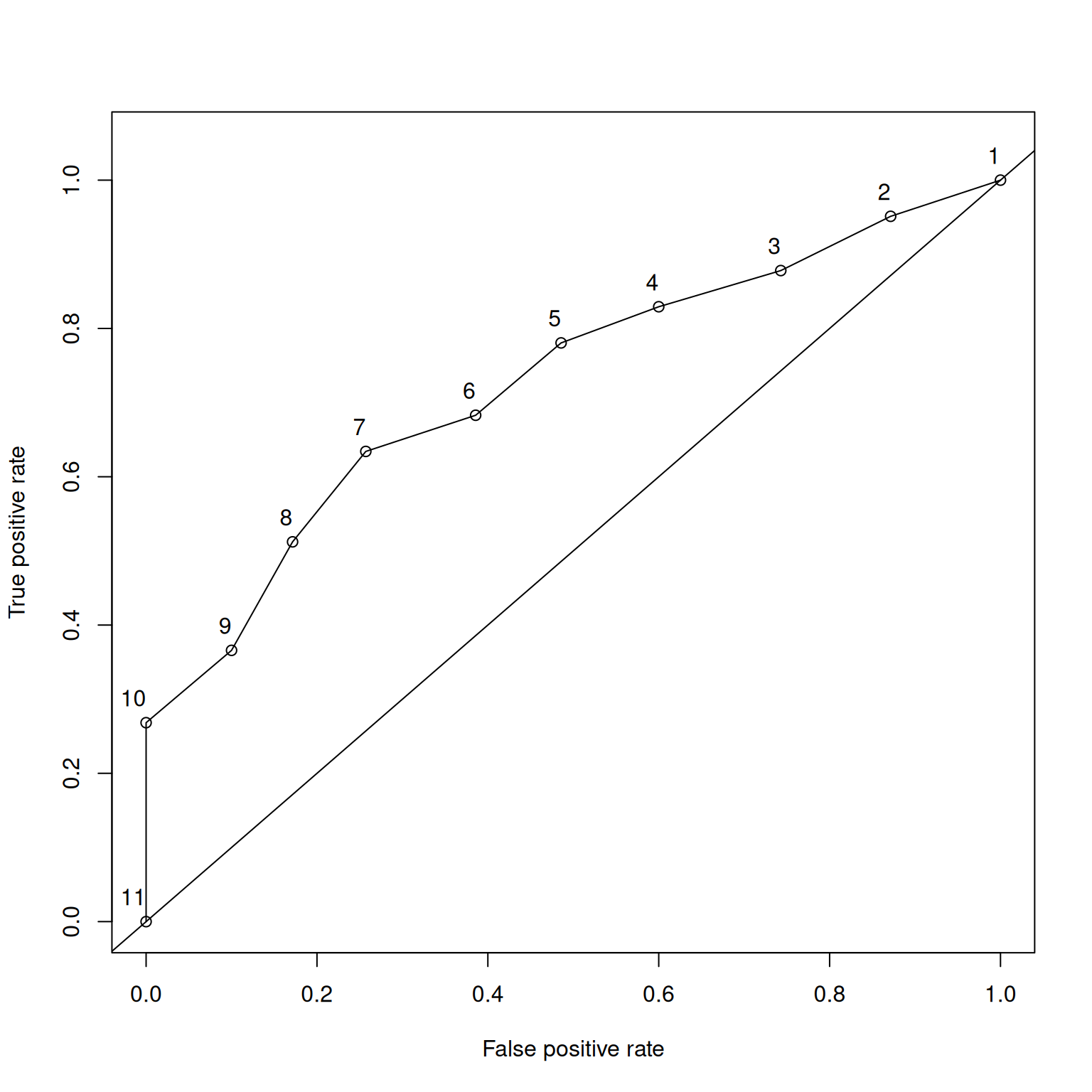

9.1.2.7.1 Receiver Operating Characteristic (ROC) Curve

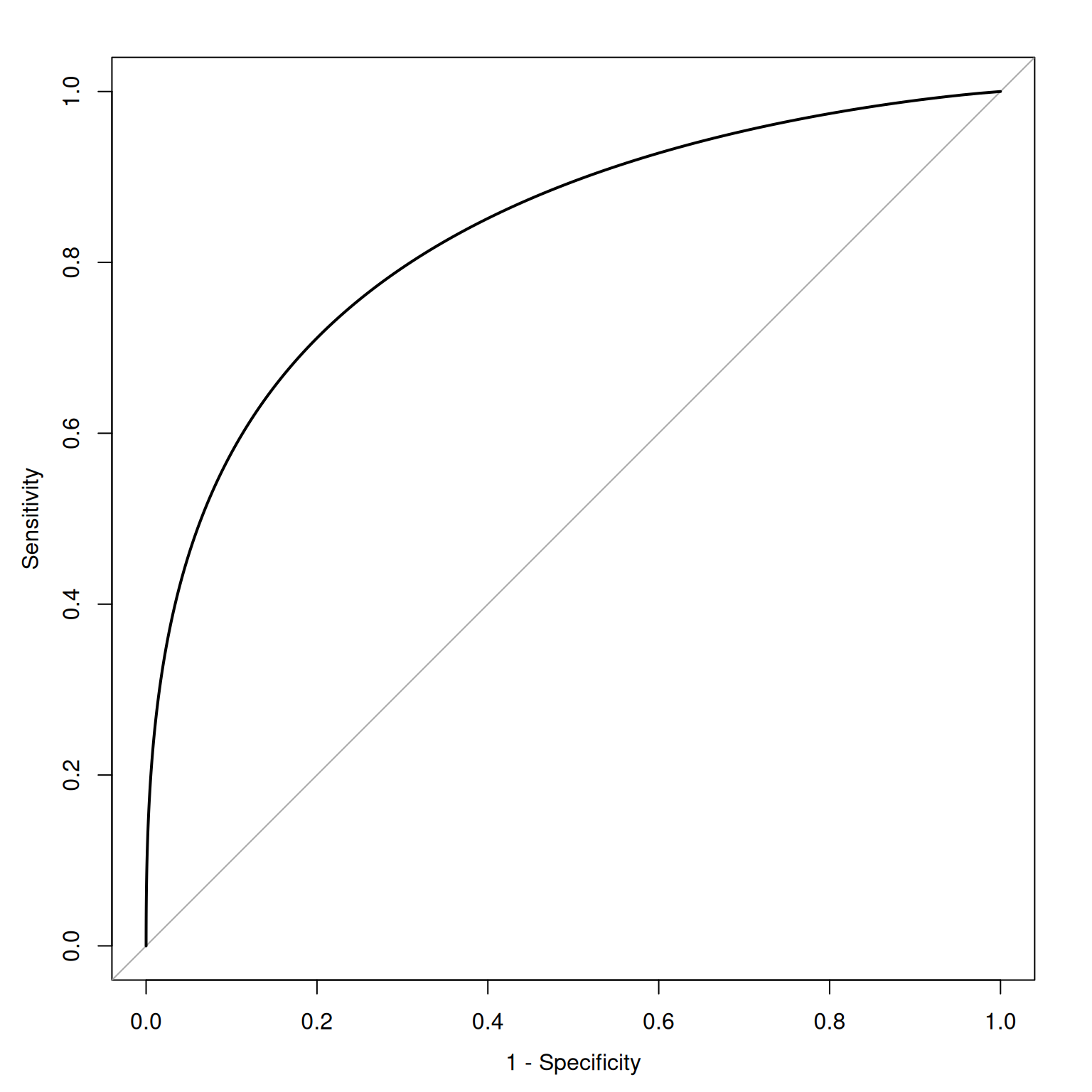

The x-axis of the ROC curve is the false alarm rate or false positive rate (\(1 -\) specificity). The y-axis is the hit rate or true positive rate (sensitivity). We can trace the ROC curve as the combination of sensitivity and specificity at every possible cutoff. At a cutoff of zero (top right of ROC curve), we calculate sensitivity (1.0) and specificity (0) and plot the resulting point. At a cutoff of zero, the assessment tells us to make an action for every stimulus (i.e., it is the most liberal). We then gradually increase the cutoff, and plot sensitivity and specificity at each cutoff. As the cutoff increases, sensitivity decreases and specificity increases. We end at the highest possible cutoff, where the sensitivity is 0 and the specificity is 1.0 (i.e., we never make an action; i.e., it is the most conservative). Each point on the ROC curve corresponds to a pair of hit and false alarm rates (sensitivity and \(1 -\) specificity) resulting from a specific cutoff value. Then, we can draw lines or a curve to connect the points.
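The tracing procedure can be sketched in base R. The scores and outcomes below are hypothetical values made up for illustration, and the object names are not from any package. A case is classified as positive when its score is at or above the cutoff.

```r
# Hypothetical test scores and true status (1 = target, 0 = non-target)
score <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
status <- c(0, 0, 0, 1, 0, 0, 1, 1, 0, 1)

# Candidate cutoffs, from the most liberal (everyone is classified positive)
# to the most conservative (Inf: no one is classified positive)
cutoffs <- c(sort(unique(score)), Inf)

rocPoints <- data.frame(
  cutoff = cutoffs,
  # Hit rate (sensitivity): proportion of targets at or above the cutoff
  hitRate = sapply(cutoffs, function(k) mean(score[status == 1] >= k)),
  # False alarm rate (1 - specificity): proportion of non-targets at or above it
  falseAlarmRate = sapply(cutoffs, function(k) mean(score[status == 0] >= k))
)

rocPoints

# Connect the points; the dashed diagonal marks chance-level discrimination
plot(rocPoints$falseAlarmRate, rocPoints$hitRate, type = "b",
     xlim = c(0, 1), ylim = c(0, 1),
     xlab = "False alarm rate (1 - specificity)",
     ylab = "Hit rate (sensitivity)")
abline(a = 0, b = 1, lty = 2)
```

The first row is the top-right corner of the plot (hit rate and false alarm rate of 1), and the last row is the bottom-left corner (both 0).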

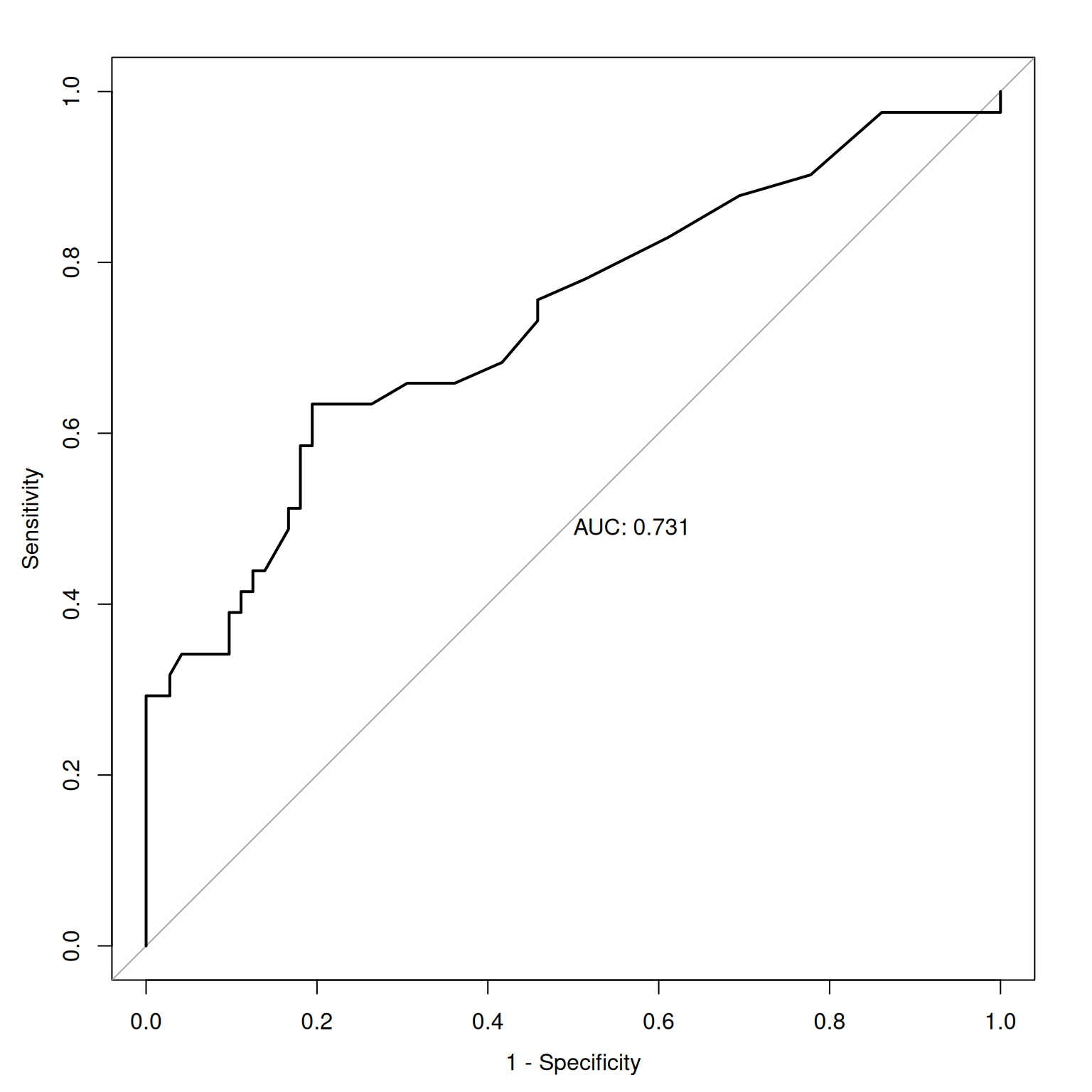

Figure 9.13 depicts an empirical ROC plot where lines are drawn to connect the hit and false alarm rates.

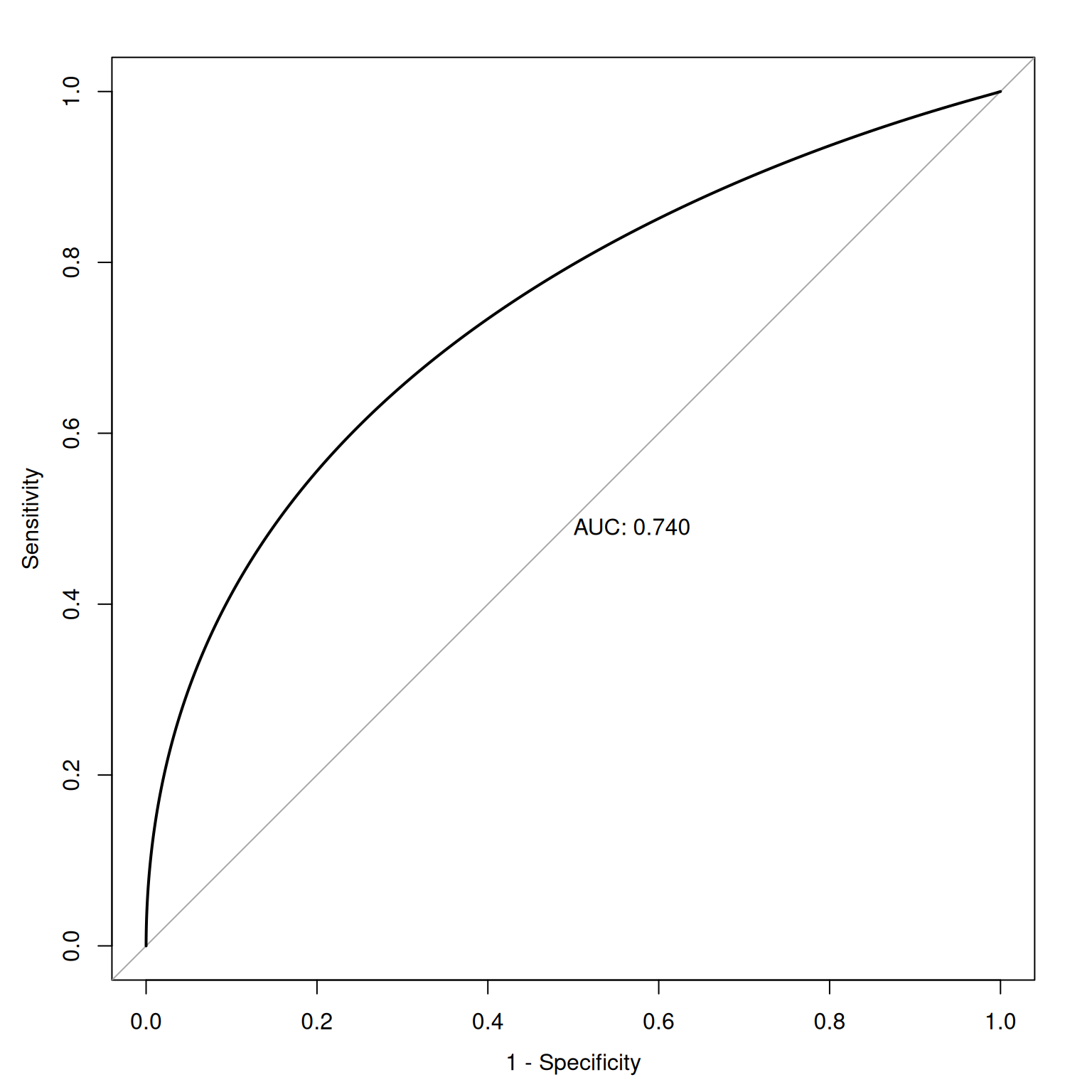

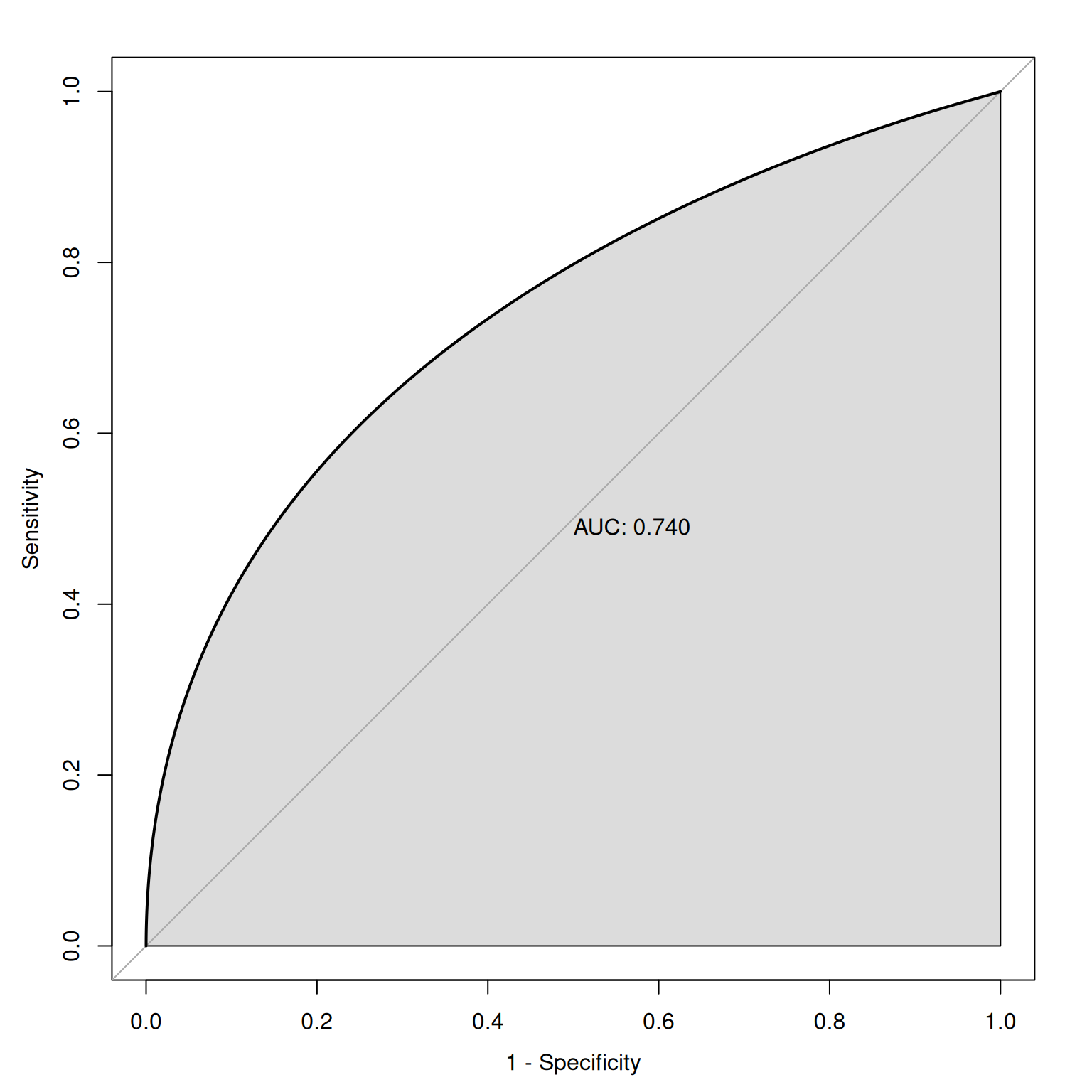

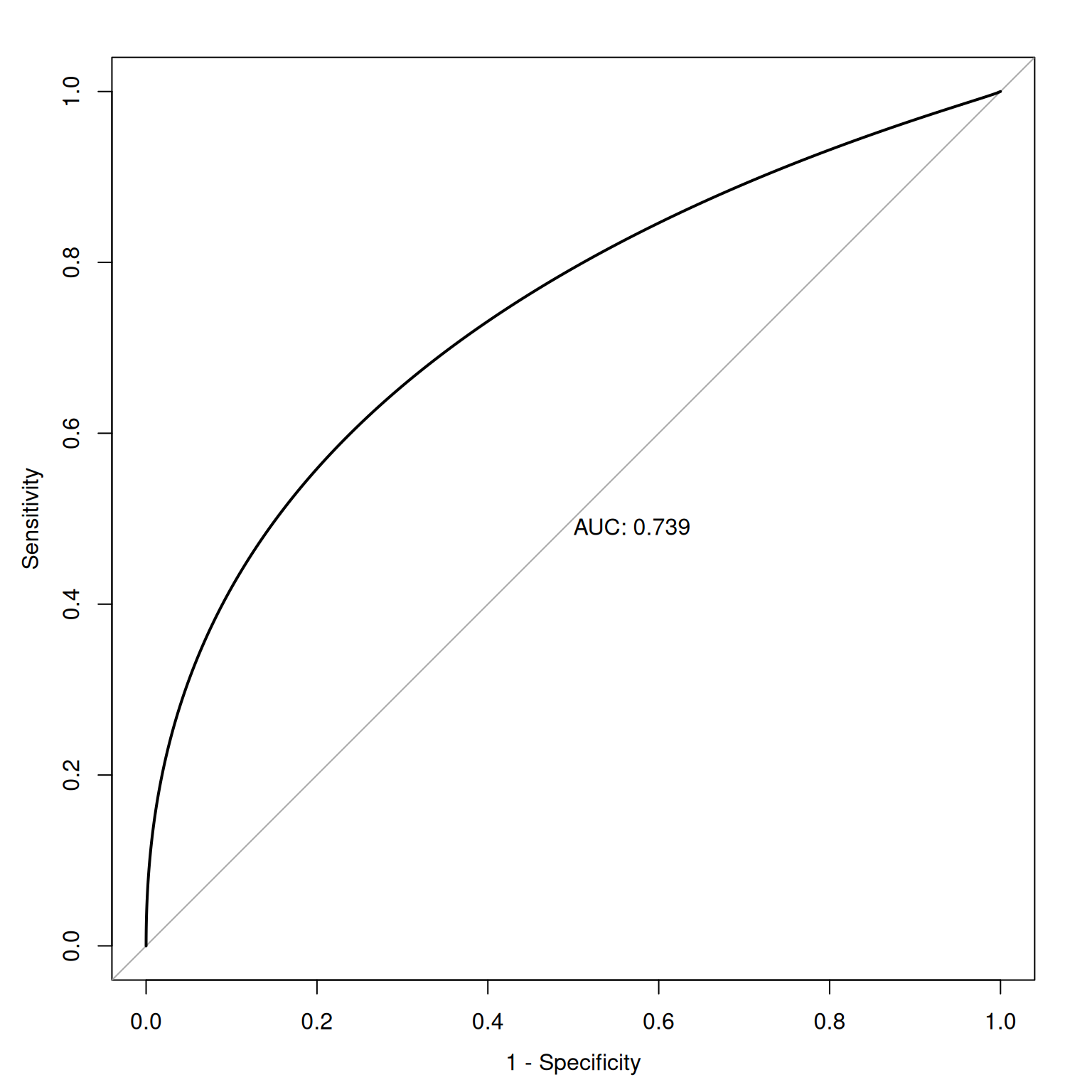

Figure 9.14 depicts an ROC curve where a smoothed and fitted curve is drawn to connect the hit and false alarm rates.

9.1.2.7.1.1 Area Under the ROC Curve

ROC methods can be used to compute and compare the discriminative power of measurement devices free from the influence of selection ratios, base rates, and costs and benefits. An ROC analysis yields a quantitative index of how well a measure predicts a signal of interest or can discriminate between different signals. ROC analysis can help tell us how often our assessment would be correct. If we randomly pick two observations, and we were right once and wrong once, we were 50% accurate. But this would be a useless measure because it reflects chance responding.

The geometrical area under the ROC curve reflects the discriminative accuracy of the measure. The index is called the area under the curve (AUC) of an ROC curve. AUC quantifies the discriminative power of an assessment. AUC is the probability that a randomly selected target and a randomly selected non-target are ranked correctly by the assessment method. AUC values range from 0.0 to 1.0, where chance accuracy is 0.5, as indicated by the diagonal line in the ROC curve. That is, a measure can be useful to the extent that its ROC curve is above the diagonal line (i.e., its discriminative accuracy is above chance).

Code

#From here: https://stats.stackexchange.com/questions/422926/generate-synthetic-data-given-auc/424213; archived at https://perma.cc/F6F9-VG2K

simulateDataFromAUC <- function(auc, n){

t <- sqrt(log(1/(1-auc)**2))

z <- t-((2.515517 + 0.802853*t + 0.0103328*t**2) / (1 + 1.432788*t + 0.189269*t**2 + 0.001308*t**3))

d <- z*sqrt(2)

x <- c(rnorm(n/2, mean = 0), rnorm(n/2, mean = d))

y <- c(rep(0, n/2), rep(1, n/2))

data <- data.frame(x = x, y = y)

return(data)

}

set.seed(52242)

auc60 <- simulateDataFromAUC(.60, 50000)

auc70 <- simulateDataFromAUC(.70, 50000)

auc80 <- simulateDataFromAUC(.80, 50000)

auc90 <- simulateDataFromAUC(.90, 50000)

auc95 <- simulateDataFromAUC(.95, 50000)

auc99 <- simulateDataFromAUC(.99, 50000)

plot(roc(y ~ x, auc60, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .52, print.auc.y = .61, print.auc.pattern = "%.2f")

plot(roc(y ~ x, auc70, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .6, print.auc.y = .67, print.auc.pattern = "%.2f", add = TRUE)

plot(roc(y ~ x, auc80, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .695, print.auc.y = .735, print.auc.pattern = "%.2f", add = TRUE)

plot(roc(y ~ x, auc90, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .805, print.auc.y = .815, print.auc.pattern = "%.2f", add = TRUE)

plot(roc(y ~ x, auc95, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .875, print.auc.y = .865, print.auc.pattern = "%.2f", add = TRUE)

plot(roc(y ~ x, auc99, smooth = TRUE), legacy.axes = TRUE, print.auc = TRUE, print.auc.x = .94, print.auc.y = .94, print.auc.pattern = "%.2f", add = TRUE)

As an example, given an AUC of .75, this says that the overall score of an individual who has the characteristic in question will be higher 75% of the time than the overall score of an individual who does not have the characteristic. In lay terms, AUC provides the probability that we will classify correctly based on our instrument if we were to randomly pick one good and one bad outcome. AUC is a stronger index of accuracy than percent accuracy, because you can have high percent accuracy just by going with the base rate. AUC tells us how much better than chance a measure is at discriminating outcomes. AUC is useful as a measure of general discriminative accuracy, and it tells us how accurate a measure is at all possible cutoffs. Knowing the accuracy of a measure at all possible cutoffs can be helpful for selecting the optimal cutoff, given the goals of the assessment. In reality, however, we may not be interested in all cutoffs because not all errors are equal in their costs.
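The interpretation of AUC as the probability of a correct ranking can be verified directly. The sketch below uses a small set of hypothetical scores (not from the data used in this chapter) and compares every target with every non-target; ties, if any, count as half. It also shows that the same value can be obtained from the Wilcoxon rank-sum (Mann-Whitney) statistic.

```r
# Hypothetical scores for cases with (targets) and without (non-targets)
# the characteristic
targetScores <- c(4, 7, 8, 10)
nonTargetScores <- c(1, 2, 3, 5, 6, 9)

# Compare every target with every non-target (4 x 6 = 24 pairs)
targetHigher <- outer(targetScores, nonTargetScores, ">")
tied <- outer(targetScores, nonTargetScores, "==")

# AUC: proportion of pairs in which the target has the higher score
aucFromPairs <- mean(targetHigher + 0.5 * tied)

# The same quantity from the Wilcoxon rank-sum statistic
W <- wilcox.test(targetScores, nonTargetScores)$statistic
aucFromWilcoxon <- unname(W) / (length(targetScores) * length(nonTargetScores))

c(pairs = aucFromPairs, wilcoxon = aucFromWilcoxon)
```

In this example, the target has the higher score in 19 of the 24 pairs, so the AUC is \(19/24 \approx .79\).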

If we lower the base rate, we would need a larger sample to get enough people to classify into each group. SDT/ROC methods are traditionally about dichotomous decisions (yes/no), not graded judgments. SDT/ROC methods can get messy with ordinal data that are more graded because you would have an ROC curve for each ordinal grouping.

9.2 Getting Started

9.2.1 Load Libraries

Code

library("petersenlab") #to install: install.packages("remotes"); remotes::install_github("DevPsyLab/petersenlab")

library("pROC")

library("ROCR")

library("rms")

library("ResourceSelection")

library("PredictABEL")

library("uroc") #to install: install.packages("remotes"); remotes::install_github("evwalz/uroc")

library("magrittr") #provides the %$% pipe used in the plotting code

library("gridExtra")

library("grid")

library("ggpubr")

library("msir")

library("car")

library("viridis")

library("ggrepel")

library("caret")

library("MOTE")

library("tidyverse")

library("here")

library("tinytex")

library("rmarkdown")

library("knitr")

9.2.2 Prepare Data

9.2.2.1 Load Data

aSAH is a data set from the pROC package (Robin et al., 2021) that contains test scores (s100b) and clinical outcomes (outcome) for patients.

9.2.2.2 Simulate Data

For reproducibility, I set the seed below. Using the same seed will yield the same answer every time. There is nothing special about this particular seed.

Code

set.seed(52242)

mydataSDT$testScore <- mydataSDT$s100b

mydataSDT <- mydataSDT %>%

mutate(testScoreSimple = ntile(testScore, 10))

mydataSDT$predictedProbability <-

(mydataSDT$s100b - min(mydataSDT$s100b, na.rm = TRUE)) /

(max(mydataSDT$s100b, na.rm = TRUE) - min(mydataSDT$s100b, na.rm = TRUE))

mydataSDT$continuousOutcome <- mydataSDT$testScore +

rnorm(nrow(mydataSDT), mean = 0.20, sd = 0.20)

mydataSDT$disorder <- NA

mydataSDT$disorder[mydataSDT$outcome == "Good"] <- 0

mydataSDT$disorder[mydataSDT$outcome == "Poor"] <- 1

9.2.2.3 Add Missing Data

Adding missing data to dataframes makes examples more closely resemble real-life data and helps you get in the habit of programming to account for missing data.

9.3 Receiver Operating Characteristic (ROC) Curve

The receiver operating characteristic (ROC) curve shows the combination of hit rate (sensitivity) and false alarm rate (\(1 - \text{specificity}\)) at every possible cutoff. It depicts that, as the cutoff increases (i.e., becomes more conservative), sensitivity decreases and specificity increases. It also depicts that, as the cutoff decreases (i.e., becomes more liberal), sensitivity increases and specificity decreases.

Receiver operating characteristic (ROC) curves were generated using the pROC package (Robin et al., 2021). The examples depict ROC curves that demonstrate that the measure is moderately accurate—the measure is more accurate than chance but there remains considerable room for improvement in predictive accuracy.

9.3.1 Empirical ROC Curve

The syntax used to generate an empirical ROC plot is below, and the plot is in Figure 9.13.

An empirical ROC plot with cutoffs overlaid is in Figure 9.18.

Code

9.3.2 Smooth ROC Curve

9.3.3 Youden’s J Statistic

The threshold at the Youden’s J statistic is the threshold where the test has the maximum combination (i.e., sum) of sensitivity and specificity: \(\text{max}(\text{sensitivity} + \text{specificity} - 1)\)

Code

For this test, the Youden’s J Statistic is at a threshold of \(0.205\), where sensitivity is \(0.65\) and specificity is \(0.8\).
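The logic of finding the threshold at Youden's J can be sketched in base R. The scores and outcomes below are hypothetical values made up for illustration (they are not the data analyzed in this chapter), and a case is classified as positive when its score is at or above the cutoff.

```r
# Hypothetical test scores and true status (1 = target, 0 = non-target)
score <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
status <- c(0, 0, 0, 1, 0, 0, 1, 1, 0, 1)

# Sensitivity and specificity at each candidate cutoff
cutoffs <- sort(unique(score))
sensitivity <- sapply(cutoffs, function(k) mean(score[status == 1] >= k))
specificity <- sapply(cutoffs, function(k) mean(score[status == 0] < k))

# Youden's J at each cutoff, and the cutoff that maximizes it
youdenJ <- sensitivity + specificity - 1
bestIndex <- which.max(youdenJ)

data.frame(
  cutoff = cutoffs[bestIndex],
  sensitivity = sensitivity[bestIndex],
  specificity = specificity[bestIndex],
  youdenJ = youdenJ[bestIndex]
)
```

Note that `which.max()` returns the first maximum, so if several cutoffs tie, only the lowest of them is reported.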

9.3.4 The point closest to the top-left part of the ROC curve with perfect sensitivity and specificity

The point closest to the top-left part of the ROC plot with perfect sensitivity and specificity: \(\text{min}[(1 - \text{sensitivity})^2 + (1 - \text{specificity})^2]\)

Code

For this test, the combination of sensitivity and specificity is closest to the top left of the ROC plot at a threshold of \(0.205\), where sensitivity is \(0.65\) and specificity is \(0.8\).
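The closest-to-top-left criterion can be sketched the same way. The example below again uses hypothetical scores and outcomes (made up for illustration), computes the squared distance from each cutoff's point on the ROC plot to the top-left corner, and picks the cutoff with the smallest distance.

```r
# Hypothetical test scores and true status (1 = target, 0 = non-target)
score <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
status <- c(0, 0, 0, 1, 0, 0, 1, 1, 0, 1)

# Sensitivity and specificity at each candidate cutoff
# (a case is classified as positive when its score is at or above the cutoff)
cutoffs <- sort(unique(score))
sensitivity <- sapply(cutoffs, function(k) mean(score[status == 1] >= k))
specificity <- sapply(cutoffs, function(k) mean(score[status == 0] < k))

# Squared distance to the top-left corner (sensitivity = 1, specificity = 1)
distanceToTopLeft <- (1 - sensitivity)^2 + (1 - specificity)^2
closestIndex <- which.min(distanceToTopLeft)

data.frame(
  cutoff = cutoffs[closestIndex],
  sensitivity = sensitivity[closestIndex],
  specificity = specificity[closestIndex],
  distance = distanceToTopLeft[closestIndex]
)
```

For these hypothetical data, the selected cutoff is the same one that maximizes \(\text{sensitivity} + \text{specificity} - 1\), but the two criteria do not have to agree in general.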

9.4 Prediction Accuracy Across Cutoffs

There are two primary dimensions of accuracy: (1) discrimination (e.g., sensitivity, specificity, area under the ROC curve) and (2) calibration. Some general indexes of accuracy combine discrimination and calibration, as described in Section 9.4.1. This section (Section 9.4) describes indexes of accuracy that span all possible cutoffs. That is, each index of accuracy described in this section provides a single numerical index of accuracy that aggregates the accuracy across all possible cutoffs. Aspects of accuracy at a particular cutoff are described in Section 9.5.

The petersenlab package (Petersen, 2025) contains the accuracyOverall() function that estimates the prediction accuracy across cutoffs.

Code

[,1]

ME -0.11

MAE 0.34

MdAE 0.17

MSE 0.21

RMSE 0.46

MPE -Inf

MAPE Inf

sMAPE 82.46

MASE 0.74

RMSLE 0.30

rsquared 0.10

rsquaredAsPredictor 0.18

rsquaredAdjAsPredictor 0.17

rsquaredPredictiveAsPredictor 0.12

Code

[,1]

ME -0.11

MAE 0.34

MdAE 0.17

MSE 0.21

RMSE 0.46

MPE 59.62

MAPE 64.97

sMAPE 82.46

MASE 0.74

RMSLE 0.30

rsquared 0.10

rsquaredAsPredictor 0.18

rsquaredAdjAsPredictor 0.17

rsquaredPredictiveAsPredictor 0.12

9.4.1 General Prediction Accuracy

There are many metrics of general prediction accuracy. When thinking about which metric(s) may be best for a given problem, it is important to consider the purpose of the assessment. The estimates of general prediction accuracy are separated below into scale-dependent and scale-independent accuracy estimates.

9.4.1.1 Scale-Dependent Accuracy Estimates

The estimates of prediction accuracy described in this section are scale-dependent. These accuracy estimates depend on the unit of measurement and therefore cannot be compared across measures with different scales or across data sets.

9.4.1.1.1 Mean Error

Here, “error” (\(e\)) is the difference between the predicted and observed value for a given individual (\(i\)). Mean error (ME; also known as bias, see Section 4.5.1.3.2) is the mean difference between the predicted and observed values across individuals (\(i\)), that is, the mean of the errors across individuals (\(e_i\)). Values closer to zero reflect greater accuracy. If mean error is above zero, it indicates that predicted values are, on average, greater than observed values (i.e., over-estimating errors). If mean error is below zero, it indicates that predicted values are, on average, less than observed values (i.e., under-estimating errors). If both over-estimating and under-estimating errors are present, however, they can cancel each other out. As a result, even with a mean error of zero, there can still be considerable error present. Thus, although mean error can be helpful for examining whether predictions systematically under- or over-estimate the actual scores, other forms of accuracy are necessary to examine the extent of error. The formula for mean error is in Equation 9.20:

\[ \begin{aligned} \text{mean error} &= \frac{\sum\limits_{i = 1}^n(\text{predicted}_i - \text{observed}_i)}{n} \\ &= \text{mean}(e_i) \end{aligned} \tag{9.20}\]

In this case, the mean error is negative, so the predictions systematically under-estimate the actual scores.
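To illustrate how over- and under-estimating errors can cancel each other out, consider the hypothetical predicted and observed values below (made up for this example). Every prediction is off by 2 points, yet the mean error is zero.

```r
# Hypothetical predicted and observed values
predicted <- c(2, 4, 6, 8)
observed <- c(4, 2, 8, 6)

# Error for each individual: predicted minus observed
errors <- predicted - observed
errors

# Mean error: the under- and over-estimates cancel out
meanError <- mean(errors)

# Mean of the absolute errors: reveals that every prediction is off by 2
meanAbsoluteError <- mean(abs(errors))

c(meanError = meanError, meanAbsoluteError = meanAbsoluteError)
```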

9.4.1.1.2 Mean Absolute Error (MAE)

Mean absolute error (MAE) is the mean of the absolute value of differences between the predicted and observed values across individuals, that is, the mean of the absolute value of errors. Smaller MAE values (closer to zero) reflect greater accuracy. MAE is preferred over root mean squared error (RMSE) when you want to give equal weight to all errors and when outliers would otherwise have considerable impact on the estimate. The formula for MAE is in Equation 9.21:

\[ \begin{aligned} \text{mean absolute error (MAE)} &= \frac{\sum\limits_{i = 1}^n|\text{predicted}_i - \text{observed}_i|}{n} \\ &= \text{mean}(|e_i|) \end{aligned} \tag{9.21}\]

9.4.1.1.3 Mean Squared Error (MSE)

Mean squared error (MSE) is the mean of the square of the differences between the predicted and observed values across individuals, that is, the mean of the squared value of errors. Smaller MSE values (closer to zero) reflect greater accuracy. MSE penalizes larger errors more heavily than smaller errors (unlike MAE). However, MSE is sensitive to outliers and can be impacted if the errors are skewed. The formula for MSE is in Equation 9.22:

\[ \begin{aligned} \text{mean squared error (MSE)} &= \frac{\sum\limits_{i = 1}^n(\text{predicted}_i - \text{observed}_i)^2}{n} \\ &= \text{mean}(e_i^2) \end{aligned} \tag{9.22}\]

9.4.1.1.4 Root Mean Squared Error (RMSE)

Root mean squared error (RMSE) is the square root of the mean of the square of the differences between the predicted and observed values across individuals, that is, the root mean squared value of errors. Smaller RMSE values (closer to zero) reflect greater accuracy. RMSE penalizes larger errors more heavily than smaller errors (unlike MAE). However, RMSE is sensitive to outliers and can be impacted if the errors are skewed. The formula for RMSE is in Equation 9.23:

\[ \begin{aligned} \text{root mean squared error (RMSE)} &= \sqrt{\frac{\sum\limits_{i = 1}^n(\text{predicted}_i - \text{observed}_i)^2}{n}} \\ &= \sqrt{\text{mean}(e_i^2)} \end{aligned} \tag{9.23}\]
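The sketch below, using made-up scores, illustrates how squaring changes the picture. Two hypothetical sets of predictions have the same MAE, but the set with a single large miss has a much larger MSE and RMSE. The helper name `accuracySketch` is an assumption for illustration.

```r
# Hypothetical observed scores and two sets of predictions (illustrative only)
observed   <- c(10, 12, 14, 16, 18)
predictedA <- c(12, 14, 16, 18, 20)  # every prediction is off by 2
predictedB <- c(10, 12, 14, 16, 28)  # one prediction is off by 10

# Compute MAE, MSE, and RMSE from the errors (Equations 9.21 to 9.23)
accuracySketch <- function(predicted, observed){
  errors <- predicted - observed
  c(
    MAE  = mean(abs(errors)),
    MSE  = mean(errors^2),
    RMSE = sqrt(mean(errors^2))
  )
}

resultA <- accuracySketch(predictedA, observed)
resultB <- accuracySketch(predictedB, observed)

resultA  # MAE = 2, MSE = 4,  RMSE = 2
resultB  # MAE = 2, MSE = 20, RMSE = sqrt(20)
```

RMSE is on the original scale of the scores, whereas MSE is in squared units, which is one reason RMSE is often easier to interpret.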

9.4.1.2 Scale-Independent Accuracy Estimates

The estimates of prediction accuracy described in this section are intended to be scale-independent (unit-free) so the accuracy estimates can be compared across measures with different scales or across data sets (Hyndman & Athanasopoulos, 2018).

9.4.1.2.1 Mean Percentage Error (MPE)

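Mean percentage error (MPE) values closer to zero reflect greater accuracy. Note that percentage error is computed as observed minus predicted, which is the reverse of the order used for mean error in Equation 9.20. Thus, when observed values are positive, positive percentage errors indicate under-estimation and negative percentage errors indicate over-estimation. The formula for percentage error is in Equation 9.24: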

\[ \begin{aligned} \text{percentage error }(p_i) = \frac{100\% \times (\text{observed}_i - \text{predicted}_i)}{\text{observed}_i} \end{aligned} \tag{9.24}\]

We then take the mean of the percentage errors to get MPE. The formula for MPE is in Equation 9.25:

\[ \begin{aligned} \text{mean percentage error (MPE)} &= \frac{100\%}{n} \sum\limits_{i = 1}^n \frac{\text{observed}_i - \text{predicted}_i}{\text{observed}_i} \\ &= \text{mean(percentage error)} \\ &= \text{mean}(p_i) \end{aligned} \tag{9.25}\]

Note: MPE is undefined when one or more of the observed values equals zero, due to division by zero. I provide the option in the function to drop undefined values so you can still generate an estimate of accuracy despite undefined values, but use this option at your own risk.
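The following is a minimal sketch of Equations 9.24 and 9.25, including one possible way to handle undefined values. The function name `meanPercentageErrorSketch` and the scores are hypothetical; the `dropUndefined` argument mirrors the option described above but is an assumption about how such an option might work, not necessarily how it is implemented elsewhere.

```r
# Hypothetical helper that follows Equations 9.24 and 9.25 (name is illustrative)
meanPercentageErrorSketch <- function(predicted, observed, dropUndefined = FALSE){
  # percentage error for each individual: observed minus predicted, over observed
  percentageError <- 100 * (observed - predicted) / observed

  if(dropUndefined){
    # division by zero yields Inf, -Inf, or NaN; treat these as missing
    percentageError[!is.finite(percentageError)] <- NA
    return(mean(percentageError, na.rm = TRUE))
  }

  mean(percentageError)
}

# Made-up scores with no observed values of zero
mpeValue <- meanPercentageErrorSketch(
  predicted = c(90, 110, 45),
  observed = c(100, 100, 50))

# Made-up scores in which one observed value is zero
mpeUndefined <- meanPercentageErrorSketch(
  predicted = c(90, 110, 5),
  observed = c(100, 100, 0))

mpeDropped <- meanPercentageErrorSketch(
  predicted = c(90, 110, 5),
  observed = c(100, 100, 0),
  dropUndefined = TRUE)
```

In this sketch, `mpeUndefined` is not finite because of the division by zero, whereas `mpeDropped` is based only on the two cases with a defined percentage error.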

9.4.1.2.2 Mean Absolute Percentage Error (MAPE)

Smaller mean absolute percentage error (MAPE) values (closer to zero) reflect greater accuracy. MAPE is asymmetric because it overweights overestimates and underweights underestimates: because the error is divided by the observed value, a miss of a given absolute size yields a larger percentage error when the observed value is the smaller of the two values (i.e., when the prediction is too high). MAPE can be preferable to symmetric mean absolute percentage error (sMAPE) if there are no observed values of zero and if you want to emphasize the importance of overestimates (relative to underestimates). The formula for MAPE is in Equation 9.26:

\[ \begin{aligned} \text{mean absolute percentage error (MAPE)} &= \frac{100\%}{n} \sum\limits_{i = 1}^n \Bigg|\frac{\text{observed}_i - \text{predicted}_i}{\text{observed}_i}\Bigg| \\ &= \text{mean(|percentage error|)} \\ &= \text{mean}(|p_i|) \end{aligned} \tag{9.26}\]

Note: MAPE is undefined when one or more of the observed values equals zero, due to division by zero. I provide the option in the function to drop undefined values so you can still generate an estimate of accuracy despite undefined values, but use this option at your own risk.
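As an illustration of the asymmetry of MAPE, the sketch below applies Equation 9.26 to a single made-up pair of scores and then swaps which score is the prediction and which is the observed value. The function name `meanAbsolutePercentageErrorSketch` is hypothetical.

```r
# Hypothetical helper that follows Equation 9.26 (name is illustrative)
meanAbsolutePercentageErrorSketch <- function(predicted, observed){
  100 * mean(abs((observed - predicted) / observed))
}

# The same 50-point miss, with the roles of the two scores swapped
mapePredictedHigher <- meanAbsolutePercentageErrorSketch(predicted = 150, observed = 100)
mapePredictedLower  <- meanAbsolutePercentageErrorSketch(predicted = 100, observed = 150)

mapePredictedHigher  # 50
mapePredictedLower   # 33.33 (rounded)
```

The absolute size of the miss is identical in both cases, but the percentage differs because the error is divided by the observed value.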

9.4.1.2.3 Symmetric Mean Absolute Percentage Error (sMAPE)

Unlike MAPE, symmetric mean absolute percentage error (sMAPE) is symmetric because it equally weights underestimates and overestimates. Smaller sMAPE values (closer to zero) reflect greater accuracy. The formula for sMAPE is in Equation 9.27:

\[ \small \begin{aligned} \text{symmetric mean absolute percentage error (sMAPE)} = \frac{100\%}{n} \sum\limits_{i = 1}^n \frac{|\text{predicted}_i - \text{observed}_i|}{|\text{predicted}_i| + |\text{observed}_i|} \end{aligned} \tag{9.27}\]

Note: sMAPE is undefined when one or more of the individuals has a prediction–observed combination such that the sum of the absolute value of the predicted value and the absolute value of the observed value equals zero (\(|\text{predicted}_i| + |\text{observed}_i|\)), due to division by zero. I provide the option in the function to drop undefined values so you can still generate an estimate of accuracy despite undefined values, but use this option at your own risk.

Code

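symmetricMeanAbsolutePercentageError <- function(predicted, actual, dropUndefined = FALSE){
  # relative error for each case; it is NaN (0/0) when predicted and actual are both zero
  relativeError <- abs(predicted - actual)/(abs(predicted) + abs(actual))
  # optionally set undefined (non-finite) values to missing
  if(dropUndefined == TRUE){
    relativeError[!is.finite(relativeError)] <- NA
  }
  # average the relative errors and convert to a percentage;
  # na.rm = TRUE removes NA and NaN values before averaging
  value <- 100 * mean(abs(relativeError), na.rm = TRUE)
  return(value)
}

9.4.1.2.4 Mean Absolute Scaled Error (MASE)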

Mean absolute scaled error (MASE) is described by Hyndman and Athanasopoulos (2018). Values closer to zero reflect greater accuracy.

The adapted formula for MASE with non-time series data is described here (https://stats.stackexchange.com/a/108963/20338) (archived at https://perma.cc/G469-8NAJ). Scaled errors are calculated using Equation 9.28:

\[ \begin{aligned} \text{scaled error}(q_i) &= \frac{\text{observed}_i - \text{predicted}_i}{\text{scaling factor}} \\ &= \frac{\text{observed}_i - \text{predicted}_i}{\frac{1}{n} \sum\limits_{i = 1}^n |\text{observed}_i - \overline{\text{observed}}|} \end{aligned} \tag{9.28}\]

Then, we calculate the mean of the absolute value of the scaled errors to get MASE, as in Equation 9.29:

\[ \begin{aligned} \text{mean absolute scaled error (MASE)} &= \frac{1}{n} \sum\limits_{i = 1}^n |q_i| \\ &= \text{mean(|scaled error|)} \\ &= \text{mean}(|q_i|) \end{aligned} \tag{9.29}\]

Note: MASE is undefined when the scaling factor is zero, due to division by zero. With non-time series data, the scaling factor is the average of the absolute value of individuals’ observed scores minus the average observed score (\(\frac{1}{n} \sum\limits_{i = 1}^n |\text{observed}_i - \overline{\text{observed}}|\)).
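To show how the scaling factor in Equation 9.28 works, here is a small worked sketch with made-up scores. Because the scaling factor is the mean absolute deviation of the observed scores from their mean, predicting the mean for everyone yields a MASE of 1, so values below 1 indicate predictions that outperform that baseline in terms of absolute error.

```r
# Made-up observed and predicted scores (illustrative only)
observed  <- c(2, 4, 6, 8, 10)
predicted <- c(3, 3, 7, 7, 11)

# Scaling factor: mean absolute deviation of observed scores from their mean
scalingFactor <- mean(abs(observed - mean(observed)))
scalingFactor
# 2.4

# Scaled errors (Equation 9.28) and MASE (Equation 9.29)
scaledErrors <- (observed - predicted) / scalingFactor
maseValue <- mean(abs(scaledErrors))

# Baseline: predict the mean of the observed scores for every case
baselineErrors <- (observed - mean(observed)) / scalingFactor
maseBaseline <- mean(abs(baselineErrors))
maseBaseline
# 1
```

Here, `maseValue` is below 1 because the predictions miss by 1 point on average, whereas the mean-only baseline misses by 2.4 points on average.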

Code

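meanAbsoluteScaledError <- function(predicted, actual){
  # keep only cases with both a predicted and an actual value
  mydata <- data.frame(na.omit(cbind(predicted, actual)))
  # errors: actual minus predicted
  errors <- mydata$actual - mydata$predicted
  # scaling factor: mean absolute deviation of the actual values from their mean
  scalingFactor <- mean(abs(mydata$actual - mean(mydata$actual)))
  scaledErrors <- errors/scalingFactor
  # MASE: mean of the absolute scaled errors
  value <- mean(abs(scaledErrors))
  return(value)
}

9.4.1.2.5 Root Mean Squared Log Error (RMSLE)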

The squared log of the accuracy ratio is described by Tofallis (2015). The accuracy ratio is in Equation 9.30:

\[ \begin{aligned} \text{accuracy ratio} &= \frac{\text{predicted}_i}{\text{observed}_i} \end{aligned} \tag{9.30}\]

However, the accuracy ratio is undefined with observed or predicted values of zero, so it is common to modify it by adding 1 to the numerator and denominator, as in Equation 9.31:

\[ \begin{aligned} \text{accuracy ratio} &= \frac{\text{predicted}_i + 1}{\text{observed}_i + 1} \end{aligned} \tag{9.31}\]

We then take the log of the accuracy ratio and square it. Squaring the log values keeps the values from being negative, such that smaller values (values closer to zero) reflect greater accuracy. Next, we take the mean of the squared log values and calculate the square root of that mean to put the estimate back on the (pre-squared) log metric. This is known as the root mean squared log error (RMSLE). Because the log of a quotient equals the difference between the logs of the numerator and denominator, the formula can be rewritten as the subtraction of two logs, as in Equation 9.32:

\[ \begin{aligned} \text{root mean squared log error (RMSLE)} &= \sqrt{\frac{1}{n}\sum\limits_{i = 1}^n \Bigg[\log\bigg(\frac{\text{predicted}_i + 1}{\text{observed}_i + 1}\bigg)\Bigg]^2} \\ &= \sqrt{\text{mean}\Bigg\{\bigg[\log\bigg(\frac{\text{predicted}_i + 1}{\text{observed}_i + 1}\bigg)\bigg]^2\Bigg\}} \\ &= \sqrt{\text{mean}\Big\{\big[\log(\text{accuracy ratio})\big]^2\Big\}} \\ &= \sqrt{\text{mean}\Big\{\big[\log(\text{predicted}_i + 1) - \log(\text{observed}_i + 1)\big]^2\Big\}} \end{aligned} \tag{9.32}\]

RMSLE can be preferable when the scores have a wide range of values and are skewed. RMSLE can help to reduce the impact of outliers. RMSLE gives more weight to smaller errors in the prediction of small observed values, while also penalizing larger errors in the prediction of larger observed values. It overweights underestimates and underweights overestimates.

There are other variations of prediction accuracy metrics that use the log of the accuracy ratio. One variation makes it similar to median symmetric percentage error (Morley et al., 2018).

Note: Root mean squared log error is undefined when one or more predicted values or actual values equals -1. When predicted or actual values are -1, this leads to \(log(0)\), which is undefined. I provide the option in the function to drop undefined values so you can still generate an estimate of accuracy despite undefined values, but use this option at your own risk.
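Below is a minimal sketch of Equation 9.32 in R. The function name `rootMeanSquaredLogErrorSketch`, the `dropUndefined` argument, and the scores are assumptions for illustration and may differ from the function referred to above.

```r
# Hypothetical helper that follows Equation 9.32 (name is illustrative)
rootMeanSquaredLogErrorSketch <- function(predicted, observed, dropUndefined = FALSE){
  # difference of logs is equivalent to the log of the accuracy ratio
  logError <- log(predicted + 1) - log(observed + 1)

  if(dropUndefined){
    # log(0) is -Inf; treat non-finite values as missing
    logError[!is.finite(logError)] <- NA
    return(sqrt(mean(logError^2, na.rm = TRUE)))
  }

  sqrt(mean(logError^2))
}

# The same 50-point miss as an underestimate versus an overestimate
rmsleUnder <- rootMeanSquaredLogErrorSketch(predicted = 50, observed = 100)
rmsleOver  <- rootMeanSquaredLogErrorSketch(predicted = 150, observed = 100)

# Equivalent calculation using the log of the modified accuracy ratio
rmsleRatio <- sqrt(mean(log((50 + 1) / (100 + 1))^2))
```

In this sketch, `rmsleUnder` is larger than `rmsleOver`, which illustrates that RMSLE penalizes an underestimate more heavily than an overestimate of the same absolute size.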

9.4.1.2.6 Coefficient of Determination (\(R^2\))

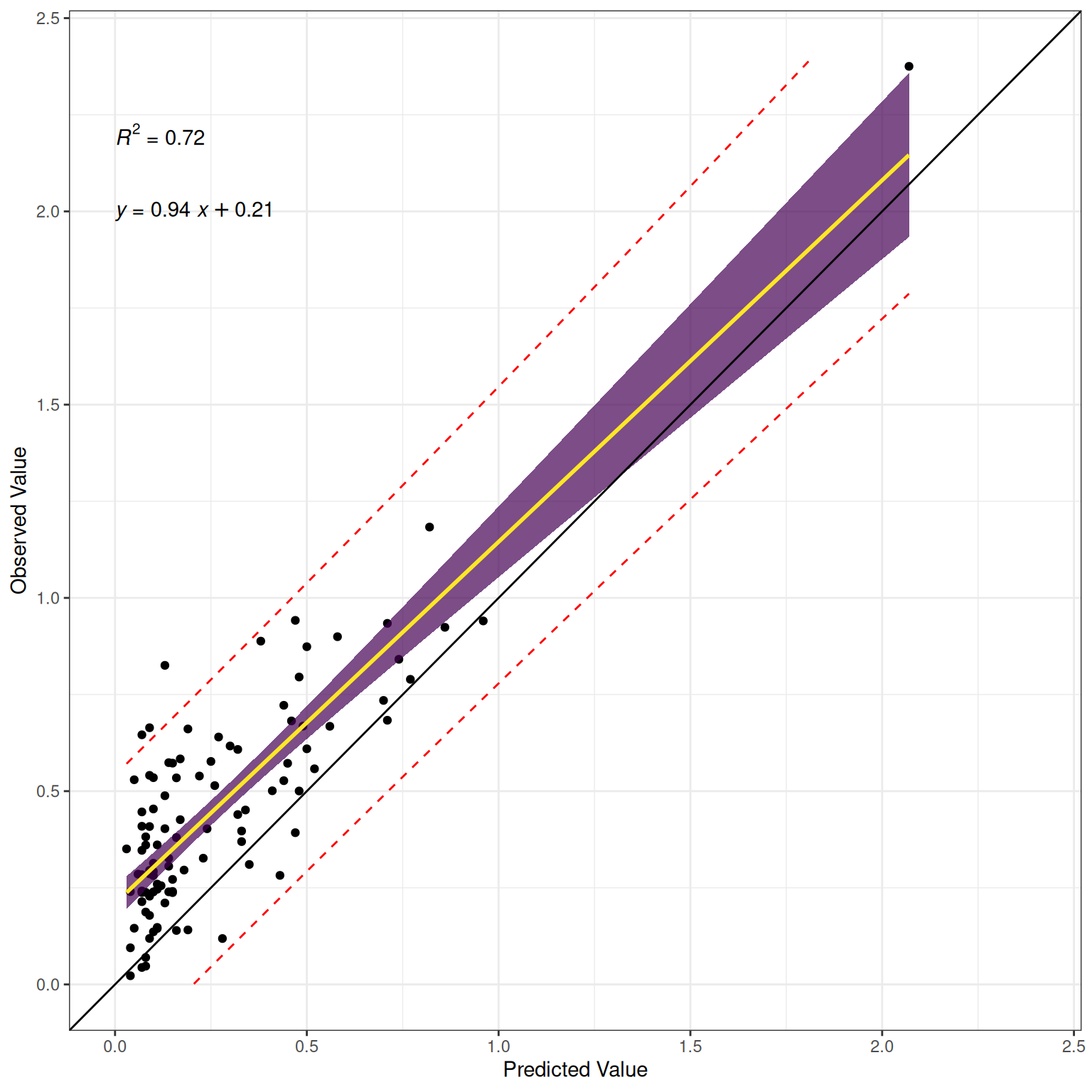

The coefficient of determination (\(R^2\)) is a general index of accuracy that combines both discrimination and calibration. It reflects the proportion of variance in the outcome (dependent) variable that is explained by the model predictions: \(R^2 = \frac{\text{variance explained in }Y}{\text{total variance in }Y}\). Larger values indicate greater accuracy. The formula for the coefficient of determination is in Equation 9.33:

\[ \begin{aligned} R^2 &= 1 - \frac{\sum (y_i - \hat{y}_i)^2}{\sum (y_i - \bar{y})^2} \\ &= 1 - \frac{SS_{\text{residual}}}{SS_{\text{total}}} \\ &= 1 - \frac{\text{sum of squared residuals}}{\text{total sum of squares}} \\ &= \frac{\text{variance explained in }y}{\text{total variance in }y} \end{aligned} \tag{9.33}\]

where \(y\) is the outcome variable, \(y_i\) is the observed value of the outcome variable for the \(i\)th observation, \(\hat{y}_i\) is the model predicted value for the \(i\)th observation, and \(\bar{y}\) is the mean of the observed values of the outcome variable. The total sum of squares is an index of the total variation in the outcome variable.

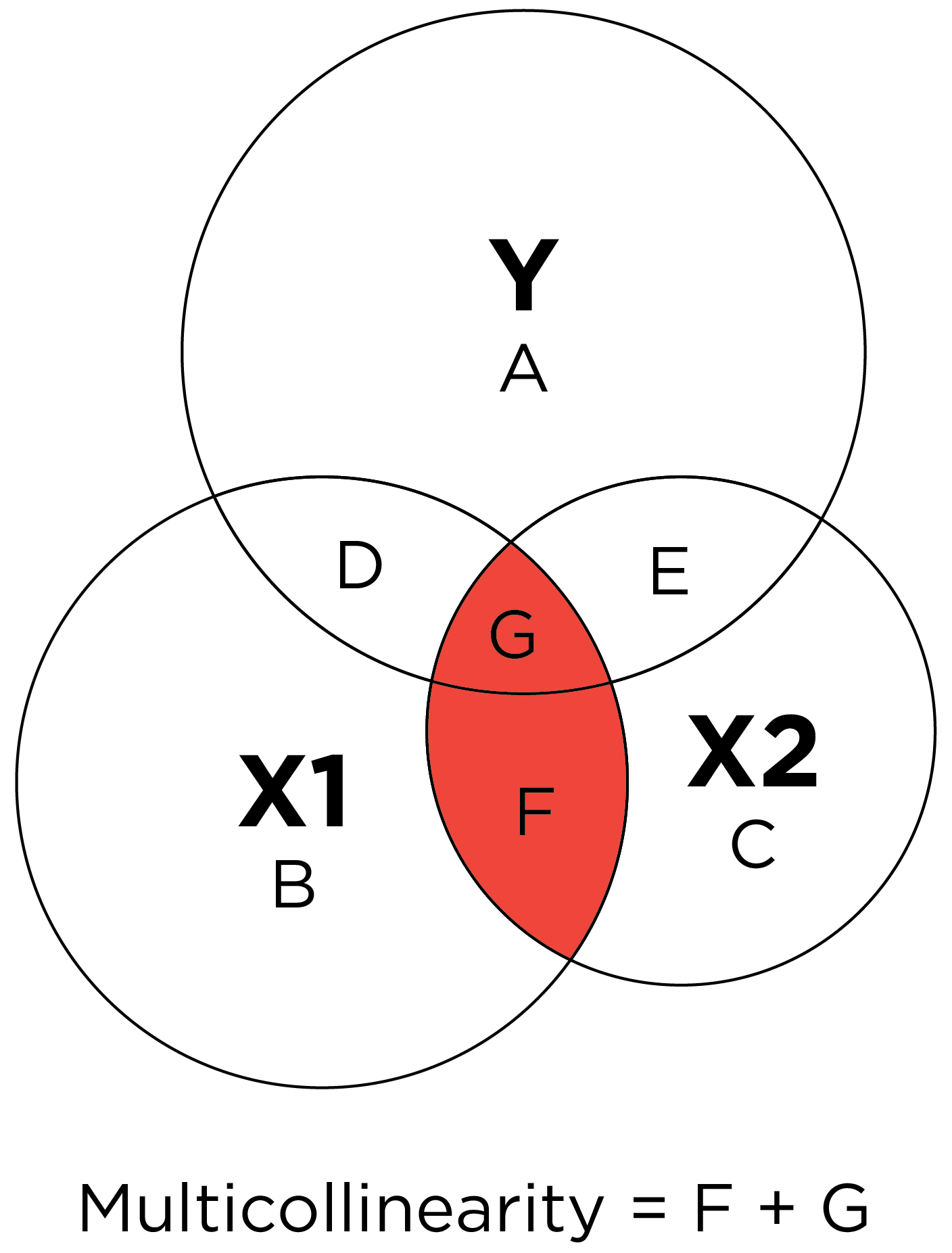

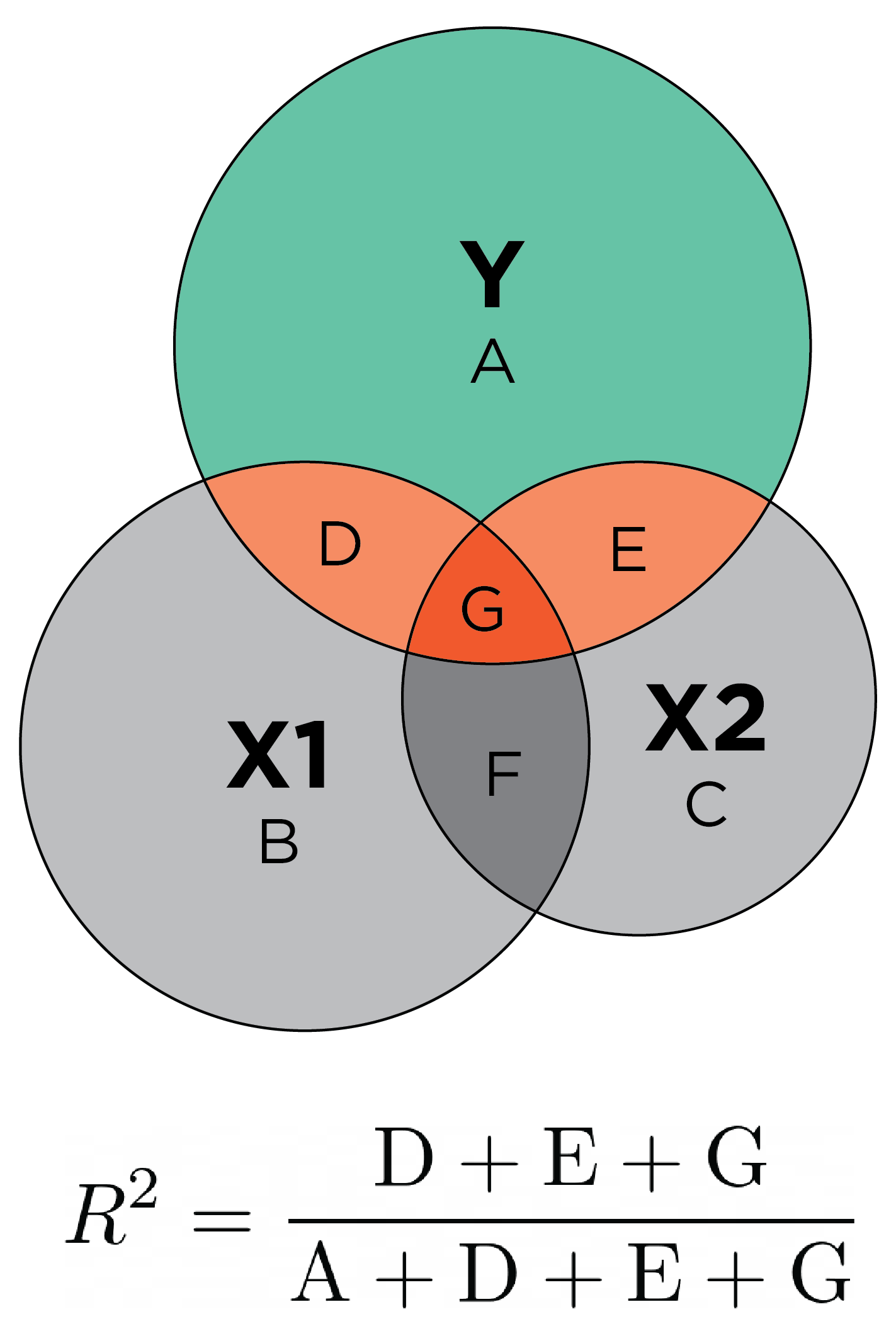

\(R^2\) is commonly estimated in multiple regression, in which multiple predictors are allowed to predict one outcome. Multiple regression can be conceptualized with overlapping circles in what is called a Ballantine graph (archived at https://perma.cc/C7CU-KFVG). \(R^2\) in multiple regression is depicted conceptually with a Ballantine graph in Figure 9.20.

\(R^2\):

The predictor (testScore) explains \(17.79\)% of the variance \((R^2 = .1779)\) in the outcome (disorder status).

The coefficient of determination typically ranges from 0 to 1, but it can be negative. A negative coefficient of determination indicates that the predicted values are less accurate than using the mean of the actual values as the predicted values (i.e., predicting the mean of the observed values for every case).
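The sketch below computes \(R^2\) directly from Equation 9.33 using made-up scores, including a case in which it is negative. The function name `rSquaredSketch` is hypothetical.

```r
# Hypothetical helper that follows Equation 9.33 (name is illustrative)
rSquaredSketch <- function(predicted, observed){
  ssResidual <- sum((observed - predicted)^2)
  ssTotal <- sum((observed - mean(observed))^2)
  1 - ssResidual / ssTotal
}

# Made-up observed scores and three sets of predictions
observed <- c(1, 2, 3, 4, 5)
goodPredictions <- c(1.5, 2, 2.5, 4, 5)
meanPredictions <- rep(mean(observed), length(observed))
poorPredictions <- c(5, 4, 3, 2, 1)

r2Good <- rSquaredSketch(goodPredictions, observed)  # 0.95
r2Mean <- rSquaredSketch(meanPredictions, observed)  # 0
r2Poor <- rSquaredSketch(poorPredictions, observed)  # -3
```

Predicting the mean for every case gives an \(R^2\) of zero, so any set of predictions with a larger sum of squared residuals than that baseline yields a negative value.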

An issue with \(R^2\) is that it can be artificially inflated by adding more predictors to the model, even if those predictors do not improve the model fit. \(R^2\) never decreases when adding predictors to a model, even if the predictors do not provide any predictive power. Thus, to account for the number of predictors in the model, we can use adjusted \(R^2\).

9.4.1.2.6.1 Adjusted \(R^2\) (\(R^2_{adj}\))

Adjusted \(R^2\) is similar to the coefficient of determination, but it accounts for the number of predictors included in the regression model to penalize overfitting. Specifically, adjusted \(R^2\) penalizes the number of predictors in the model. Adjusted \(R^2\) reflects the proportion of variance in the outcome (dependent) variable that is explained by the model predictions over and above what would be expected to be accounted for by chance, given the number of predictors in the model. Thus, adjusted \(R^2\) will increase only if the new predictor improves the model (i.e., explains additional variance in the outcome variable) more than would be expected by chance. Larger values indicate greater accuracy. The formula for adjusted \(R^2\) is in Equation 9.34:

\[ R^2_{adj} = 1 - (1 - R^2) \frac{n - 1}{n - p - 1} \tag{9.34}\]

where \(p\) is the number of predictors in the model, and \(n\) is the sample size.

Adjusted \(R^2\) is described further in Section 9.9.
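As a quick sketch of Equation 9.34, the function below applies the adjustment to a given \(R^2\). The function name `adjustedRSquaredSketch` and the input values are hypothetical. As a check, the sketch also compares the result to the adjusted \(R^2\) that `summary()` reports for a linear model fit to R's built-in `mtcars` data, which are used here only for illustration.

```r
# Hypothetical helper that follows Equation 9.34 (name is illustrative)
adjustedRSquaredSketch <- function(rSquared, n, p){
  1 - (1 - rSquared) * (n - 1) / (n - p - 1)
}

# Same R-squared and sample size, but different numbers of predictors
adjustedFew  <- adjustedRSquaredSketch(rSquared = .30, n = 50, p = 2)   # about .27
adjustedMany <- adjustedRSquaredSketch(rSquared = .30, n = 50, p = 10)  # about .12

# Check against the value reported by summary() for a model with two predictors
fit <- lm(mpg ~ wt + hp, data = mtcars)
fitSummary <- summary(fit)

adjustedByHand <- adjustedRSquaredSketch(
  rSquared = fitSummary$r.squared,
  n = nrow(mtcars),
  p = 2)

all.equal(adjustedByHand, fitSummary$adj.r.squared)
```

Holding \(R^2\) and the sample size constant, the penalty grows as the number of predictors increases.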

9.4.1.2.6.2 Predictive \(R^2\)

Predictive \(R^2\) is described here: https://tomhopper.me/2014/05/16/can-we-do-better-than-r-squared/ (archived at https://perma.cc/BK8J-HFUK) and https://mfatihtuzen.netlify.app/posts/2025-04-30_rsquared/ (archived at https://perma.cc/EPP8-VG23). Predictive \(R^2\) penalizes overfitting, unlike traditional \(R^2\). Larger values indicate greater accuracy. The formula for predictive \(R^2\) is in Equation 9.35:

\[ R^2_{pred} = 1 - \frac{\text{PRESS}}{\text{SS}_\text{total}} \tag{9.35}\]

where PRESS is the predictive residual sum of squares, and \(\text{SS}_\text{total}\) is the total sum of squares.
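To build intuition for PRESS, the sketch below uses simulated data (an assumption for illustration) to compute it two ways: by refitting the model with each case left out in turn, and by the common shortcut that divides each residual by one minus its leverage (hat value). For a linear model, the two approaches give the same result.

```r
# Simulated data (illustrative only)
set.seed(1)
n <- 30
predicted <- rnorm(n)
actual <- 0.5 * predicted + rnorm(n)
toyData <- data.frame(predicted, actual)

fit <- lm(actual ~ predicted, data = toyData)

# Brute force: refit without case i, then predict case i
looErrors <- sapply(seq_len(n), function(i){
  fitWithoutI <- lm(actual ~ predicted, data = toyData[-i, ])
  toyData$actual[i] - predict(fitWithoutI, newdata = toyData[i, , drop = FALSE])
})
pressBruteForce <- sum(looErrors^2)

# Shortcut: residuals divided by (1 - leverage)
pressShortcut <- sum((residuals(fit) / (1 - hatvalues(fit)))^2)

all.equal(pressBruteForce, pressShortcut)

# Predictive R-squared (Equation 9.35) versus traditional R-squared
ssTotal <- sum((toyData$actual - mean(toyData$actual))^2)
predictiveR2 <- 1 - pressShortcut / ssTotal
traditionalR2 <- summary(fit)$r.squared
```

Because each leave-one-out residual is at least as large in absolute value as the corresponding ordinary residual, predictive \(R^2\) cannot exceed traditional \(R^2\) for the same model.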

Code

#predictive residual sum of squares (PRESS)
PRESS <- function(linear.model) {
  #calculate the predictive residuals
  pr <- residuals(linear.model)/(1-lm.influence(linear.model)$hat)
  #calculate the PRESS
  PRESS <- sum(pr^2)
  return(PRESS)
}

predictiveRSquared <- function(predicted, actual){
  #fit linear model
  linear.model <- lm(actual ~ predicted)
  #use anova() to get the sum of squares for the linear model
  lm.anova <- anova(linear.model)
  #calculate the total sum of squares
  tss <- sum(lm.anova$'Sum Sq')
  #calculate the predictive R^2
  value <- 1 - PRESS(linear.model)/(tss)
  return(value)
}

It is preferable to examine predictive \(R^2\) using new data that the model was not trained on (i.e., cross-validation), either in an independent sample (i.e., external cross-validation) or in a hold-out sample (i.e., internal cross-validation on test data).

For example, predictive \(R^2\) can be estimated with 10-fold cross-validation using the caret package (Kuhn, 2022).

Code

set.seed(52242)

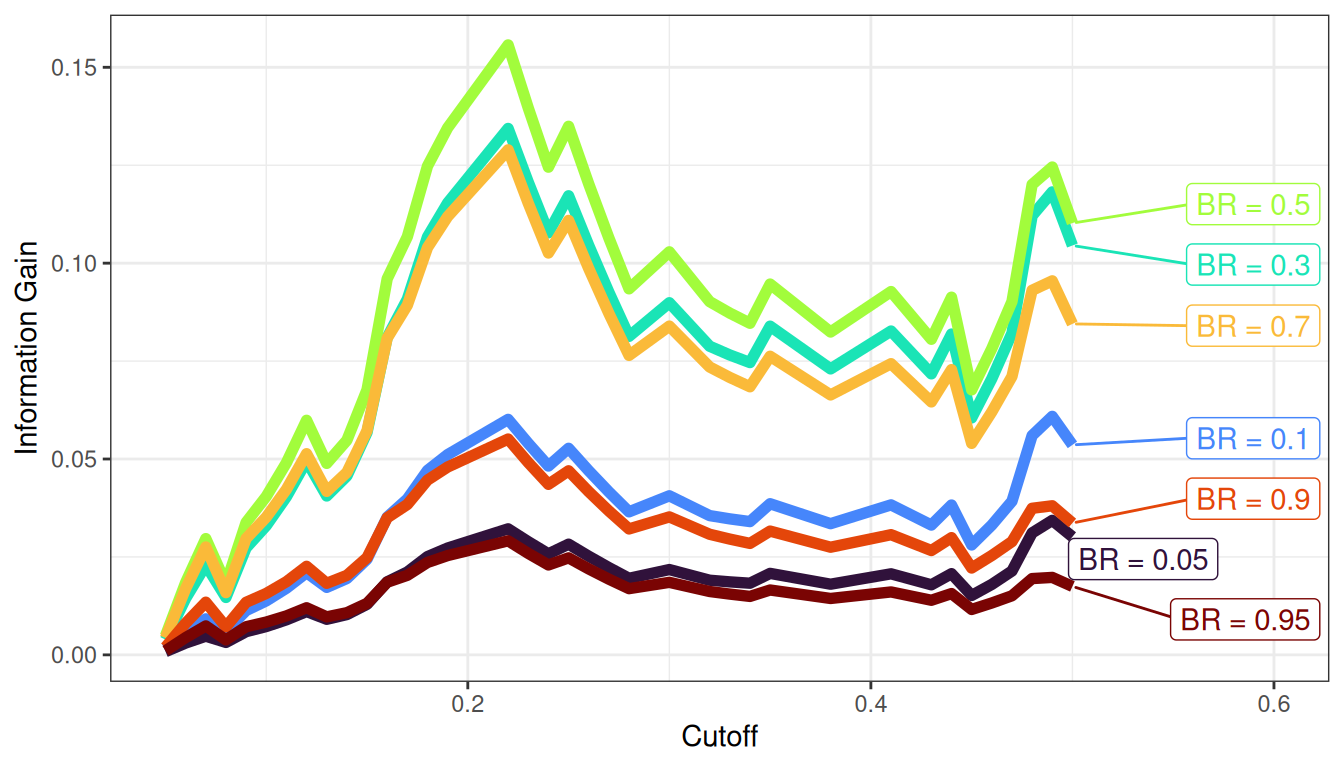

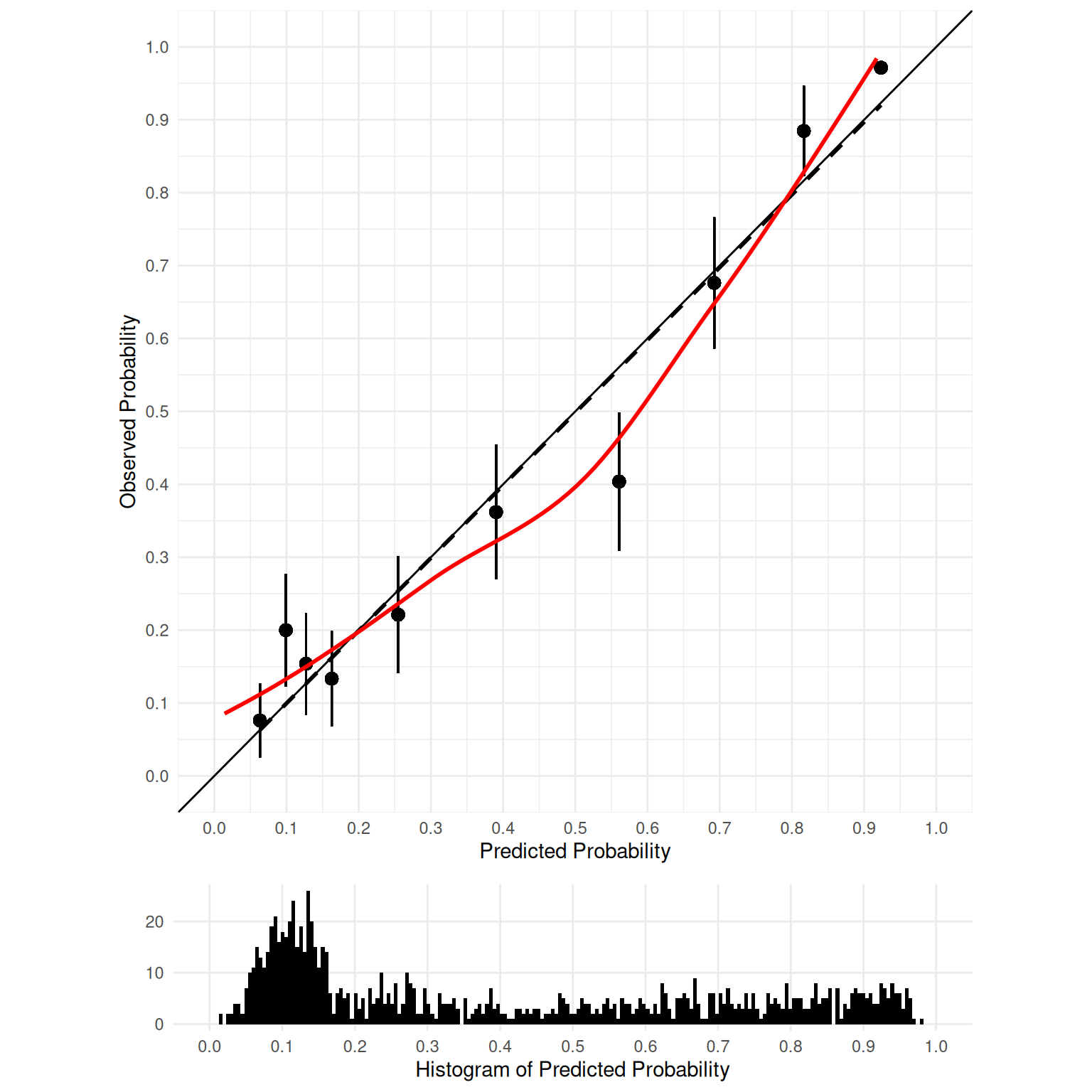

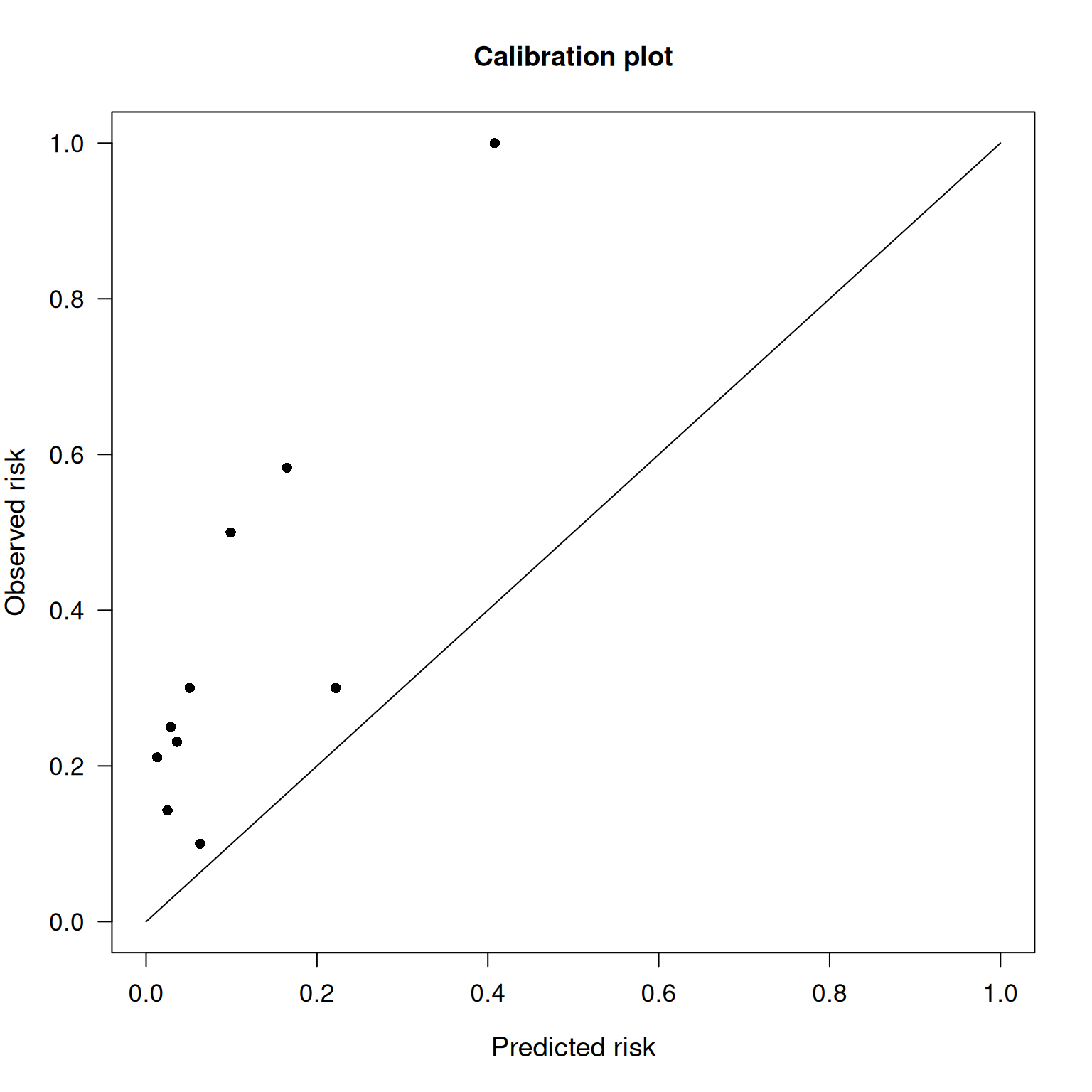

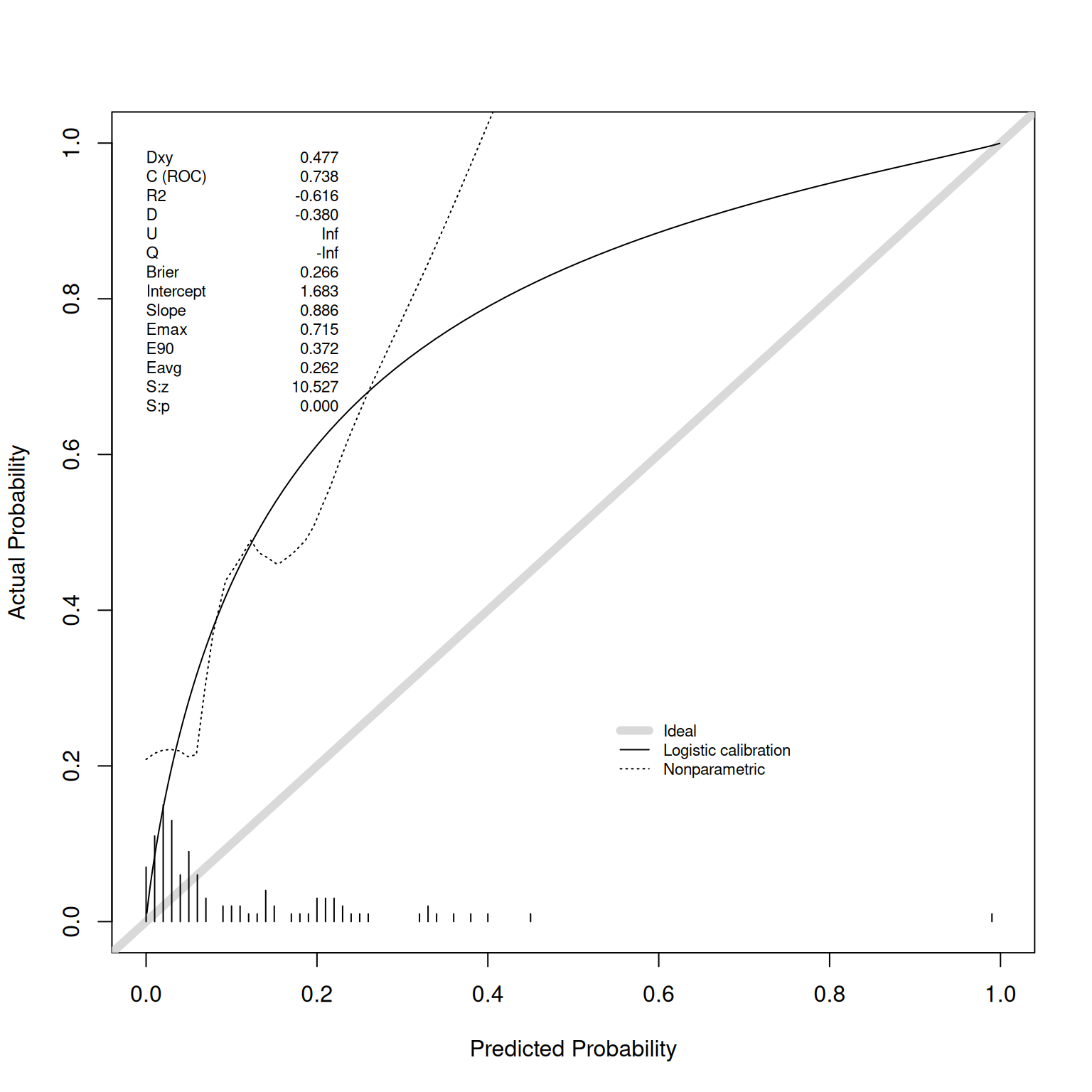

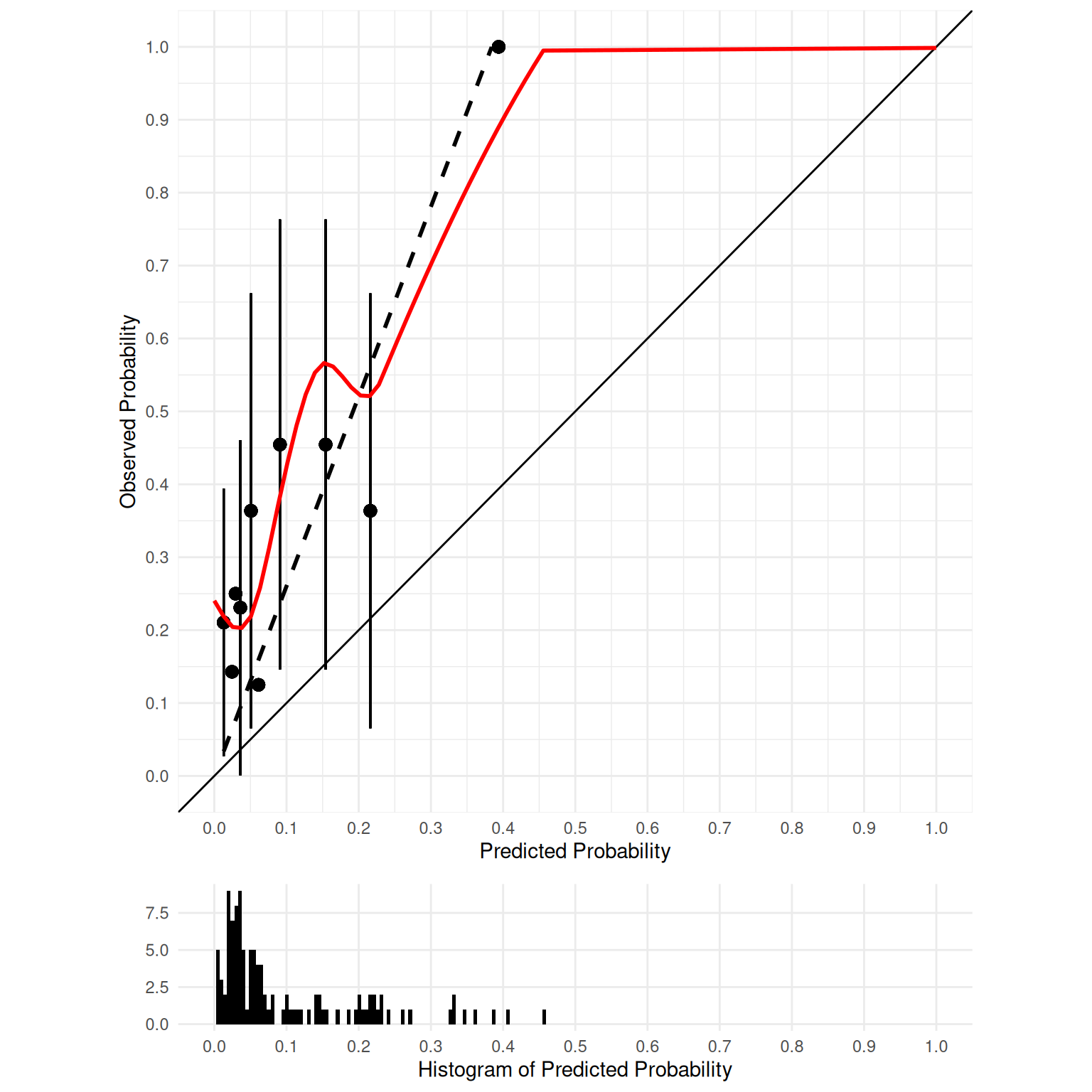

# Set up 10-fold cross-validation