I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

Opening an issue or submitting a pull request on GitHub: https://github.com/isaactpetersen/Principles-Psychological-Assessment

Adding an annotation using hypothes.is.

To add an annotation, select some text and then click the

symbol on the pop-up menu.

To see the annotations of others, click the

symbol in the upper right-hand corner of the page.

Chapter 16 Test Bias

16.1 Overview of Bias

There are multiple definitions of the term “bias” depending on the context. In general, bias is a systematic error (Reynolds & Suzuki, 2012). Mean error is an example of systematic error, and is sometimes called bias. Cognitive biases are systematic errors in thinking, including confirmation bias and hindsight bias. Method biases are a form of systematic error that involve the influence of measurement on a person’s score that is not due to the person’s level on the construct. Method biases include response biases or response styles, including acquiescence and social desirability bias. Attentional bias refers to the tendency to process some types of stimuli more than others.

Sometimes bias is used to refer in particular to systematic error (in measurement, prediction, etc.) as a function of group membership, where test bias refers to the same score having different meaning for different groups. Under this meaning, a test is unbiased if a given test score has the same meaning regardless of group membership. For example, a test is biased if there is differential validity of test scores for groups (e.g., age, education, culture, race, sex). Test bias would exist, for instance, if a test is a less valid predictor for racial minorities or linguistic minorities. Test bias would also exist if scores on the Scholastic Aptitude Test (SAT) under-estimate women’s grades in college, for instance.

There are some known instances of test bias, as described in Section 16.3. Research has not produced much empirical evidence of test bias (Brown et al., 1999; Hall et al., 1999; Jensen, 1980; Kuncel & Hezlett, 2010; Reynolds et al., 2021; Reynolds & Suzuki, 2012; Sackett et al., 2008; Sackett & Wilk, 1994), though some item-level bias is not uncommon. Moreover, where test bias has been observed, it is often small, unclear, and does not always generalize (N. S. Cole, 1981). However, just because there is not much empirical evidence of test bias does not mean that test bias does not exist. Moreover, just because a test does not show bias does not mean that it should be used. Furthermore, just because a test does not show bias does not mean that there are not race-, social class-, and gender-related biases in clinical judgment during the assessment process.

It is also worth pointing out that group differences in scores do not necessarily indicate bias. Group differences in scores could reflect true group differences in the construct. For instance, women have better verbal abilities, on average, compared to men. So, if women’s scores on a verbal ability test are higher on average than men’s scores, this would not be sufficient evidence for bias.

There are two broad categories of test bias:

Predictive bias refers to differences between groups in the relation between the test and criterion. As with all criterion-related validity tests, the findings depend on the strength and quality of the criterion. Test structure bias refers to differences in the internal test characteristics across groups.

16.2 Ways to Investigate/Detect Test Bias

16.2.1 Predictive Bias

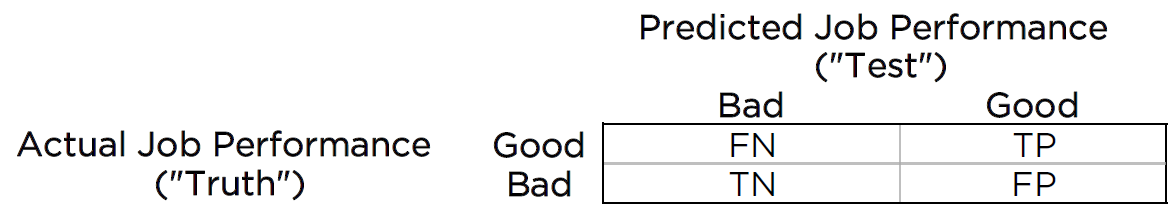

Predictive bias exists when differences emerge between groups in terms of predictive validity to a criterion. It is assessed by using a regression line looking at the association between test score and job performance. For instance, consider a 2x2 confusion matrix used for the standard prediction problem. A confusion matrix for whom to select for a job is depicted in Figure 16.1.

Figure 16.1: 2x2 Confusion Matrix for Job Selection. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

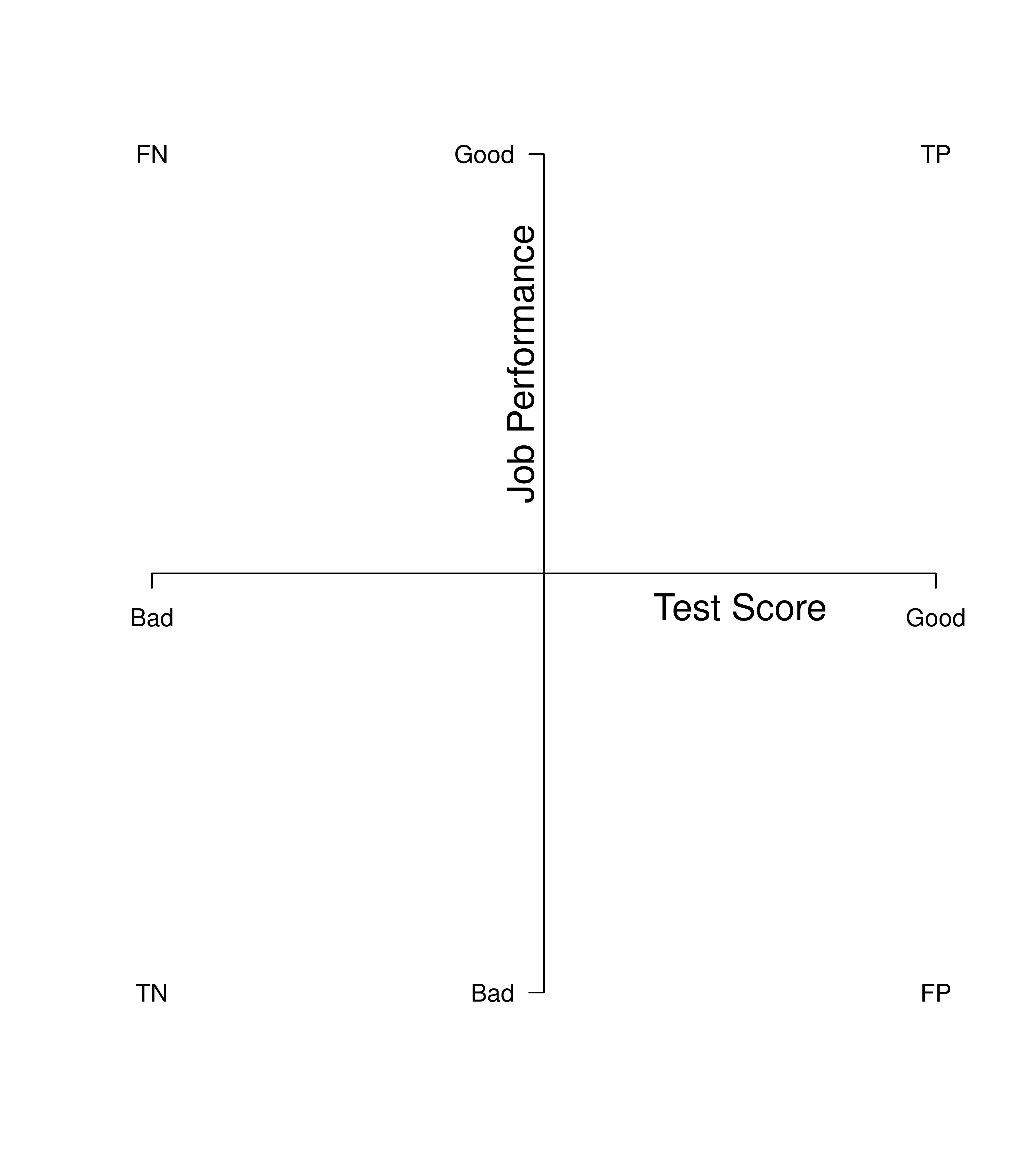

We can also visualize the confusion matrix in terms of a scatterplot of the test scores (i.e., predicted job performance) and the “truth” scores (i.e., actual job performance), as depicted in Figure 16.2. The predictor (test score) is on the x-axis. The criterion (job performance) is on the y-axis. The quadrants reflect the cutoffs (i.e., thresholds) imposed from the 2x2 confusion matrix. The vertical line reflects the cutoff for selecting someone for a job. The horizontal line reflects the cutoff for good job performance (i.e., people who should have been selected for the job).

Figure 16.2: 2x2 Confusion Matrix for Job Selection in the Form of a Graph With Predicted Performance on the x-Axis and Actual Job Performance on the y-Axis. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

The data points in the top right quadrant are true positives: people who the test predicted would do a good job and who did a good job. The data points in the bottom left quadrant are true negatives: people who the test predicted would do a poor job and who would have done a poor job. The data points in the bottom right quadrant are false positives: people who the test predicted would do a good job and who did a poor job. The data points in the top left quadrant are false negatives: people who the test predicted would do a poor job and who would have done a good job.

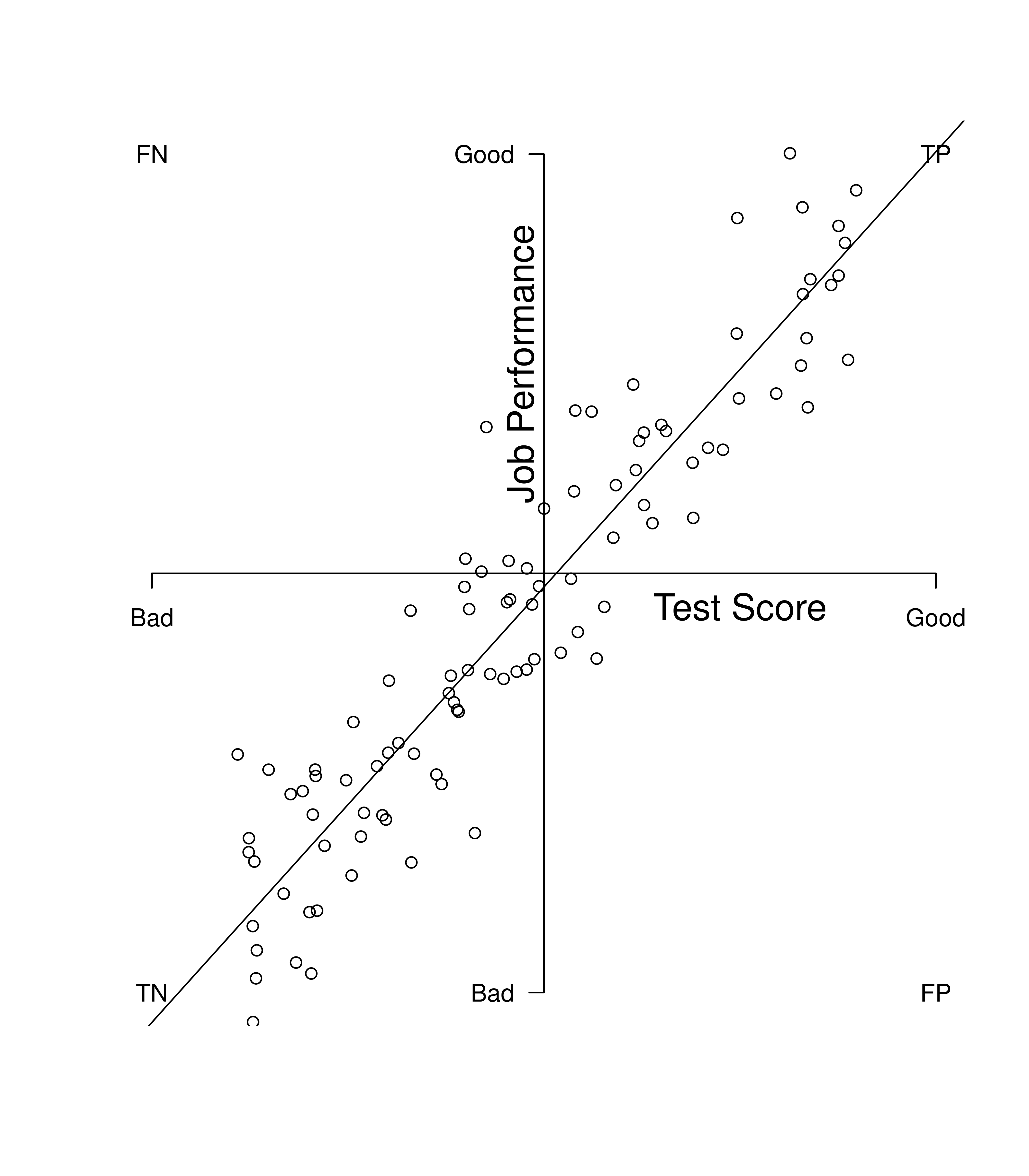

Figure 16.3 depicts a strong predictor. The best-fit regression line has a steep slope where there are lots of data points that are true positives and true negatives, with relatively few false positives and false negatives.

Figure 16.3: Example of a Strong Predictor. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

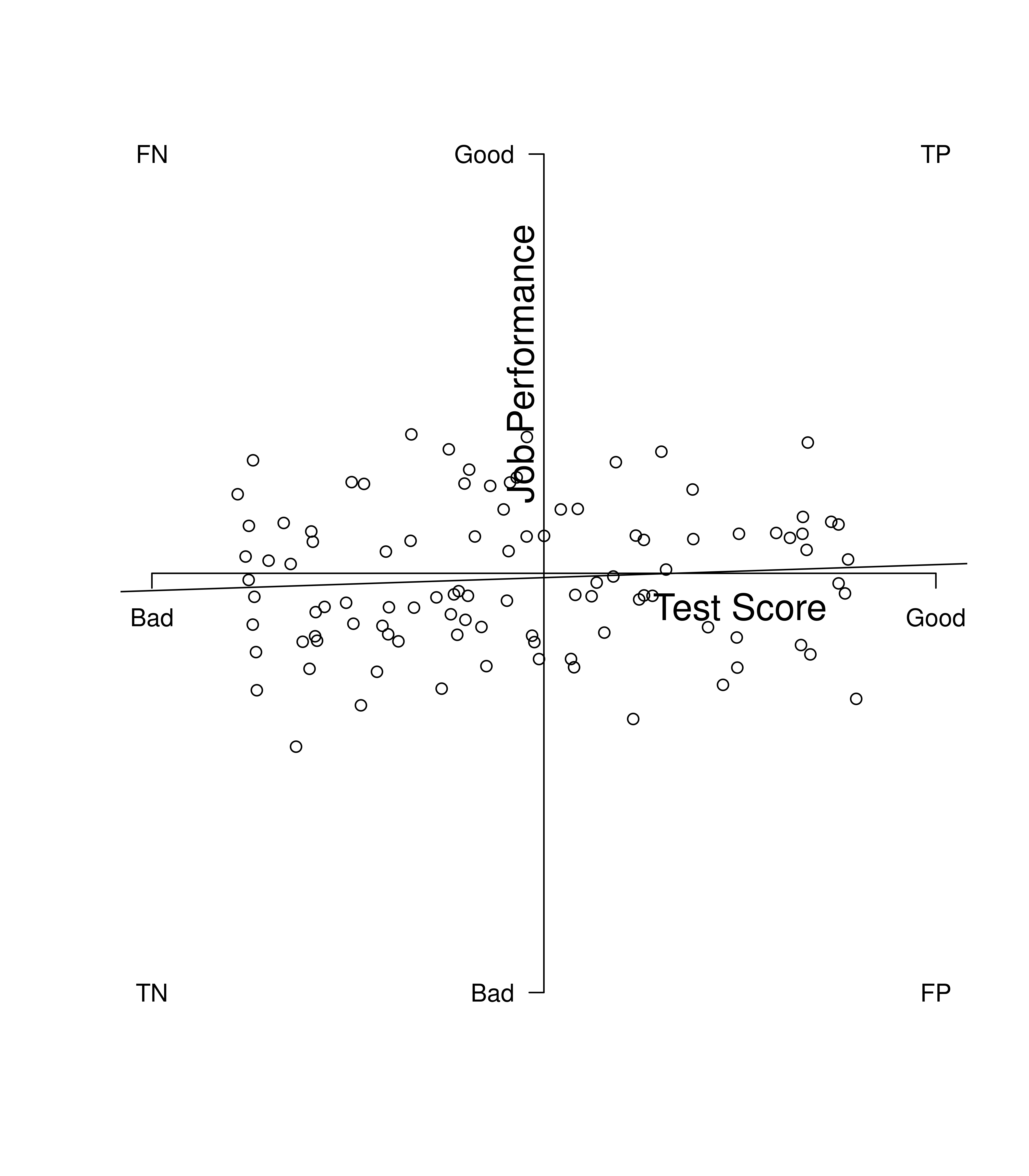

Figure 16.4 depicts a poor predictor. The best-fit regression line has a shallow slope where there are just as many data points that are in the false cells (false positives and false negatives) as there are in the true cells (true positives and true negatives). In general, the steeper the slope, the better the predictor.

Figure 16.4: Example of a Poor Predictor. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

We can evaluate predictive bias using a best-fit regression line between the predictor and criterion for each group.

16.2.1.1 Types of Predictive Bias

There are three types of predictive bias:

The slope of the regression line is the steepness of the line. The intercept of the regression line is the y-value of the point where the line crosses the y-axis (i.e., when \(x = 0\)). If a measure shows predictive test bias, when looking at the regression line for each group, the groups’ regression lines differ in either slopes and/or intercepts.

16.2.1.1.1 Different Slopes

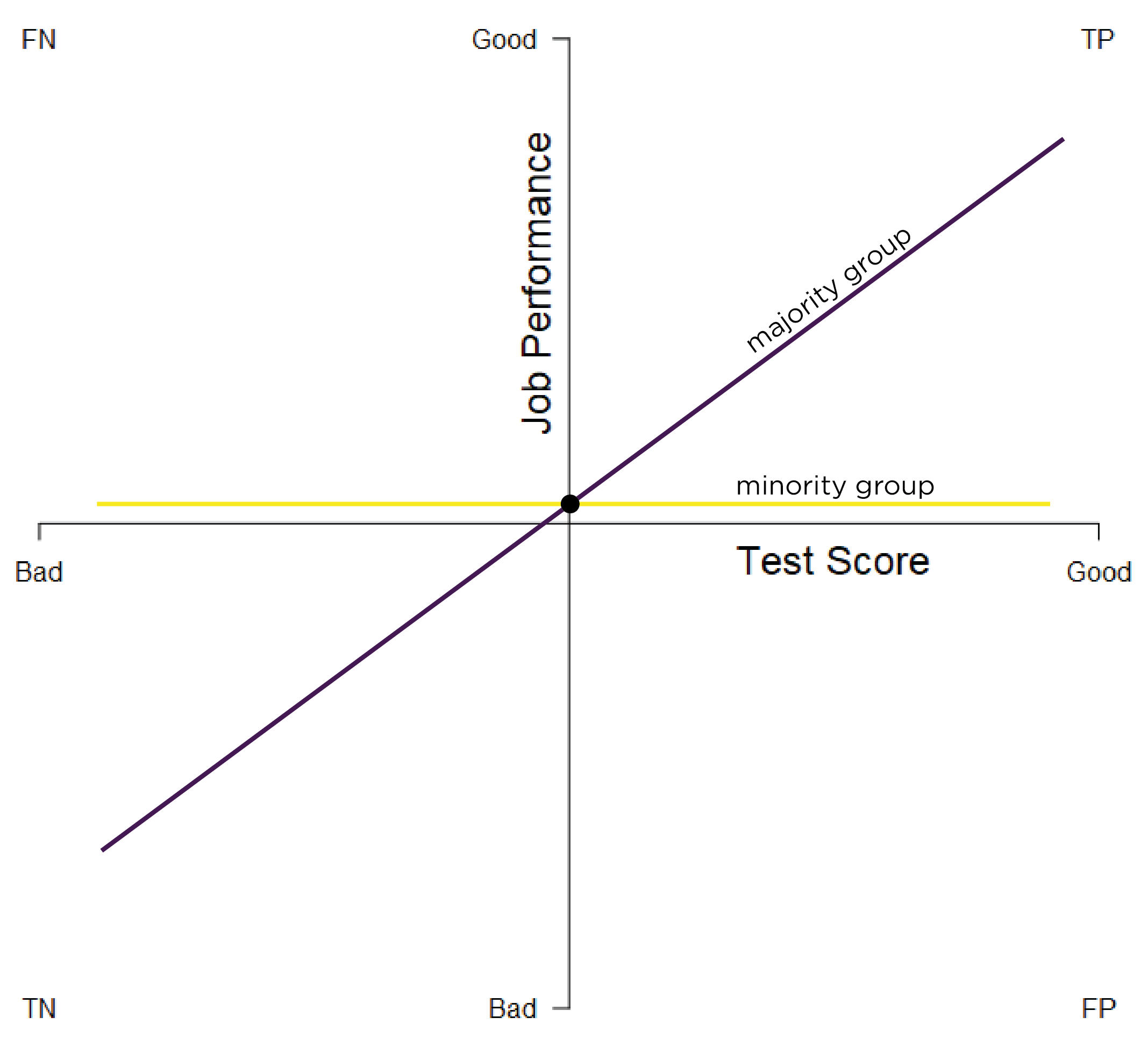

Predictive bias in terms of different slopes exists when there are differences in the slope of the regression line between minority and majority groups. The slope describes the direction and steepness of the regression line. The slope of a regression line is the amount of change in \(y\) for every unit change in \(x\) (i.e., rise over run). Differing slopes indicate differential predictive validity, in which the test is a more effective predictor of performance in one group over the other. Different slopes predictive bias is depicted in Figure 16.5. In the figure, the predictor performs well in the majority group. However, the slope is close to zero in the minority group, indicating that there is no association between the predictor and the criterion for the minority group.

Figure 16.5: Test Bias: Different Slopes. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

Different slopes can especially occur if we develop our measure and criterion based on the normative majority group. Not much evidence has found empirical evidence of different slopes across groups. However, samples often do not have the power to detect differing slopes (Aguinis et al., 2010). Theoretically, to fix biases related to different slopes, you should find another measure that is more predictive for the minority group. If the predictor is a strong predictor in both groups but shows slight differences in the slope, within-group norming could be used.

16.2.1.1.2 Different Intercepts

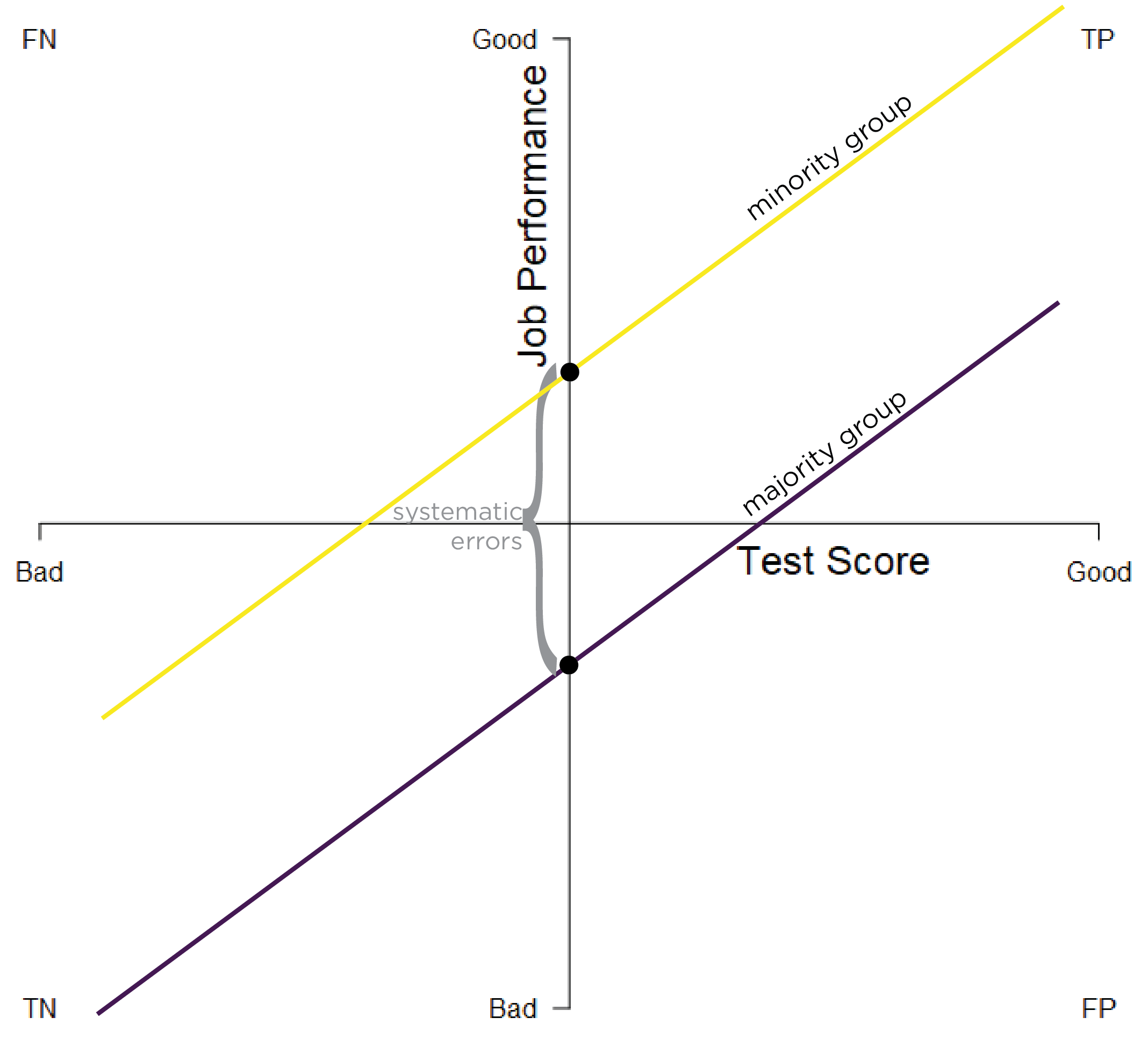

Predictive bias in terms of different intercepts exists when there are differences in the intercept of the regression line between minority and majority groups. The \(y\)-intercept describes the point on the \(y\)-axis that the line intersects with the \(y\)-axis (when \(x = 0\)). When the distributions have similar slopes, intercept differences suggest that the measure systematically under- or over-estimates group performance relative to the person’s ability. The same test score leads to systematically different predictions for the majority and minority groups. In other words, minority group members get different tests scores than majority group members with the same ability. Different intercepts predictive bias is depicted in Figure 16.6.

Figure 16.6: Test Bias: Different Intercepts. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

A higher intercept (relative to zero) indicates that the measure under-estimates a person’s ability (at that test score)—i.e., the person’s job performance is better than what the test score would suggest. A lower intercept (relative to zero) indicates that the measure over-estimates a person’s ability (at that test score)—i.e., the person’s job performance is worse than what the test score would suggest. Figure 16.6 indicates that the measure systematically under-estimates the job performance of the minority group.

Performance among members of a minority group could be under- or over-estimated. For example, historically, women’s grades in math and engineering classes tended to be under-estimated by the Scholastic Aptitude Test [SAT; M. J. Clark & Grandy (1984)]. However, where intercept differences have been observed, measures often show small over-estimation of school and job performance among minority groups (Reynolds & Suzuki, 2012). For example, women’s physical strength and endurance is over-estimated based on physical ability tests (Sackett & Wilk, 1994). In addition, over-estimation of African Americans’ and Hispanics’ school and job performance has been observed based on cognitive ability tests (N. S. Cole, 1981; Reynolds & Suzuki, 2012; Sackett et al., 2008; Sackett & Wilk, 1994). At the same time, the Black–White difference in job performance is less than the Black–White difference in test performance.

The over-prediction of lower-scoring groups is likely mostly an artifact of measurement error (L. S. Gottfredson, 1994). The over-estimation of African Americans’ and Hispanics’ school and job performance may be due to measurement error in the tests. Moreover, test scores explain only a portion of the variation in job performance. Black people are far less disadvantaged on the noncognitive determinants of job performance than on the cognitive ones. Nevertheless, the over-estimation that has been often observed is on average—the performance is not over-estimated for all individuals of the groups even if there is an average over-estimation effect. In addition, simulation findings indicate that lower intercepts (i.e., over-estimation) among minority groups compared to majority groups could be observed if there are different slopes but not different intercepts in the population, because different slopes are likely to go undetected due to low power (Aguinis et al., 2010). That is, if a test shows weaker validity for a minority group than the majority group, it could appear as different intercepts that favor the minority group when, in fact, it reflects shallower slopes of the minority group that go undetected.

Predictive biases in intercepts could especially occur if we develop tests that are based on the majority group, and the items assess constructs other than the construct of interest which are systematically biased in favor of the majority group or against the minority group. Arguments about reduced power to detect differences are less relevant for intercepts and means than for slopes.

To correct for a bias in intercepts, we could add bonus points to the scores for the minority group to correct for the amount of the systematic error, and to result in the same regression line. But if the minority group is over-predicted (as has often been the case where intercept differences have been observed), we would not want to use score adjustment to lower the minority group’s scores.

16.2.1.1.3 Different Intercepts and Slopes

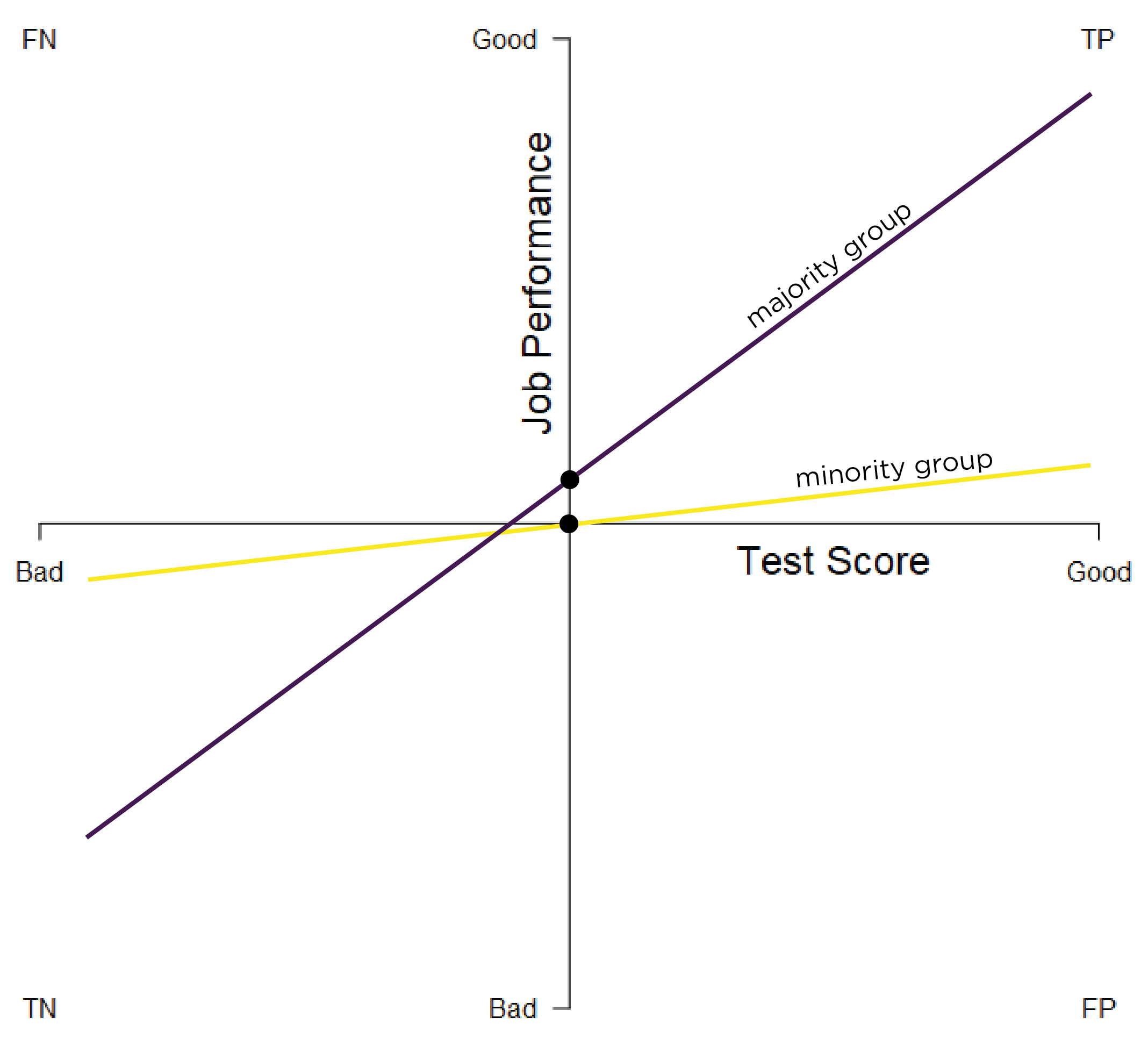

Predictive bias in terms of different intercepts and slopes exists when there are differences in the intercept and slope of the regression line between minority and majority groups. In cases of different intercepts and slopes, there is both differential validity (because the regression lines have different slopes), as well as varying under- and over-estimation of groups’ performance at particular scores. Different intercepts and slopes predictive bias is depicted in Figure 16.7.

Figure 16.7: Test Bias: Different Intercepts and Slopes. TP = true positive; TN = true negative; FP = false positive; FN = false negative.

In instances of different intercepts and slopes predictive bias, a measure can simultaneously over-estimate and under-estimate a person’s ability at different test scores. For instance, a measure can under-estimate a person’s ability at higher test scores and can over-estimate a person’s ability at lower test scores.

Different intercepts and slopes across groups is possibly more realistic than just different intercepts or just different slopes. However, different intercepts and slopes predictive bias is more complicated to study, represent, and resolve. It is difficult to examine because of complexity, and it is not easy to fix. Currently, we have nothing to address different intercepts and slopes predictive bias. We would need to use a different measure or measures for each group.

16.2.2 Test Structure Bias

In addition to predictive bias, another type of test bias is test structure bias. Test structure bias involves differences in internal test characteristics across groups. Examining test structure bias is different from examining the total score, as is used when examining predictive bias. Test structure bias can be identified empirically or based on theory/judgment.

Empirically, test structure bias can be examined in multiple ways.

16.2.2.1 Empirical Approaches to Identification

16.2.2.1.1 Item \(\times\) Group tests (ANOVA)

Item \(\times\) Group tests in analysis of variance (ANOVA) examine whether the difference between groups on the overall score match comparisons among smaller items sets between groups. Item \(\times\) Group tests are used to rule out that items are operating in different ways in different groups. If the items operate in different ways in different groups, they do not have the same meaning across groups. For example, if we are going to use a measure for multiple groups, we would expect its items to operate similarly across groups. So, if women show higher scores on a depression measure compared to men, would also expect them to show similar elevations on each item (e.g., sleep loss).

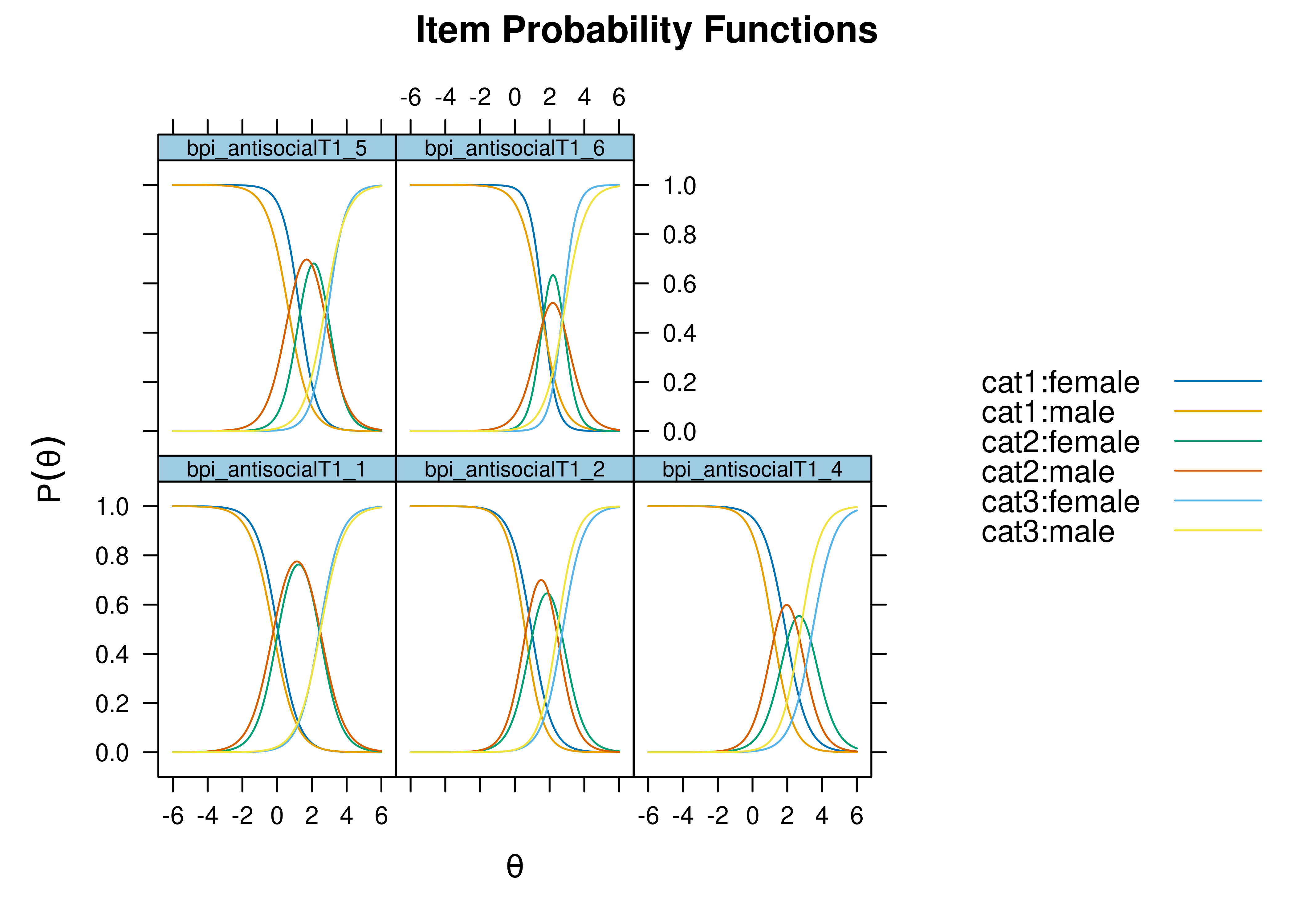

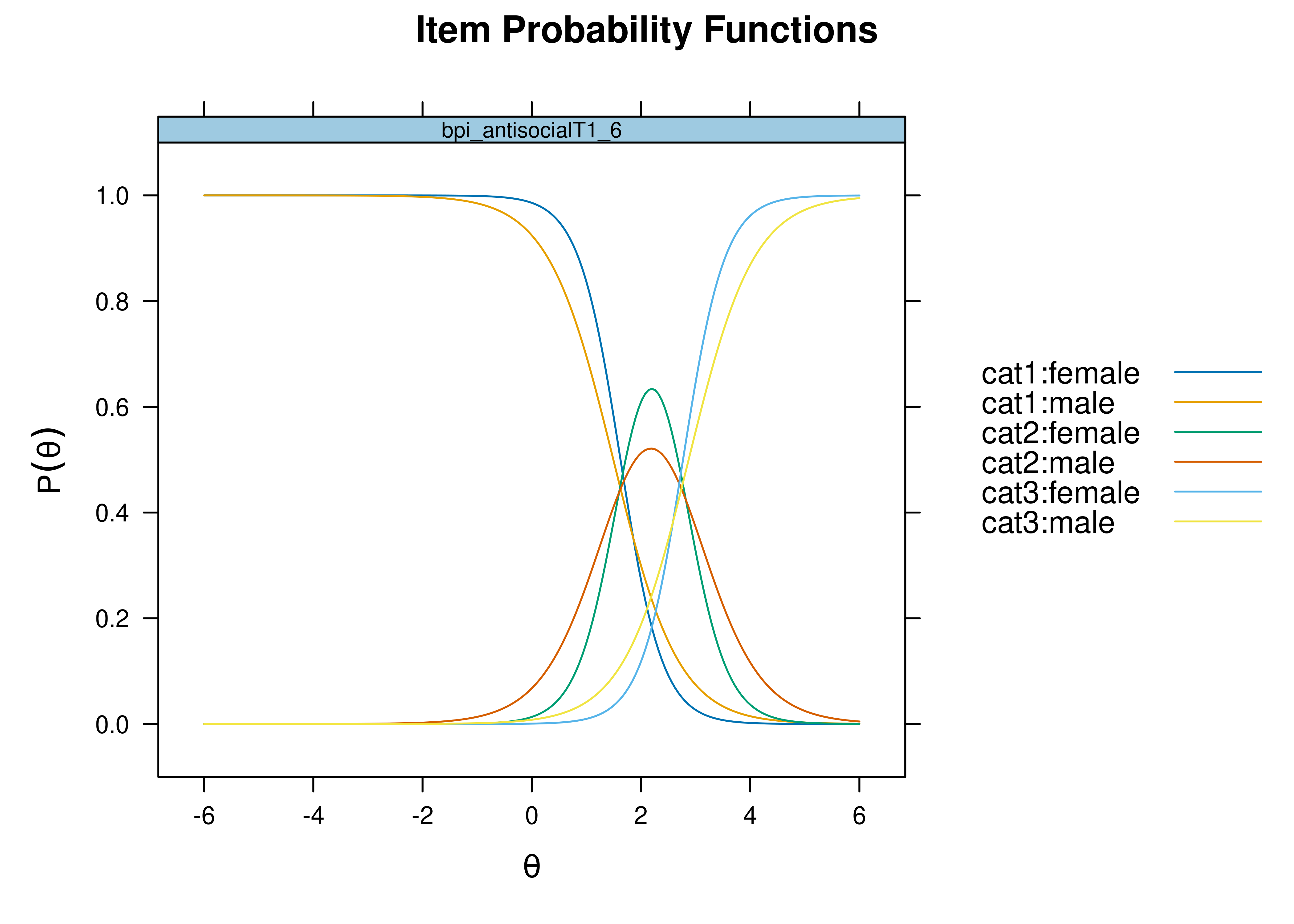

16.2.2.1.2 Item Response Theory

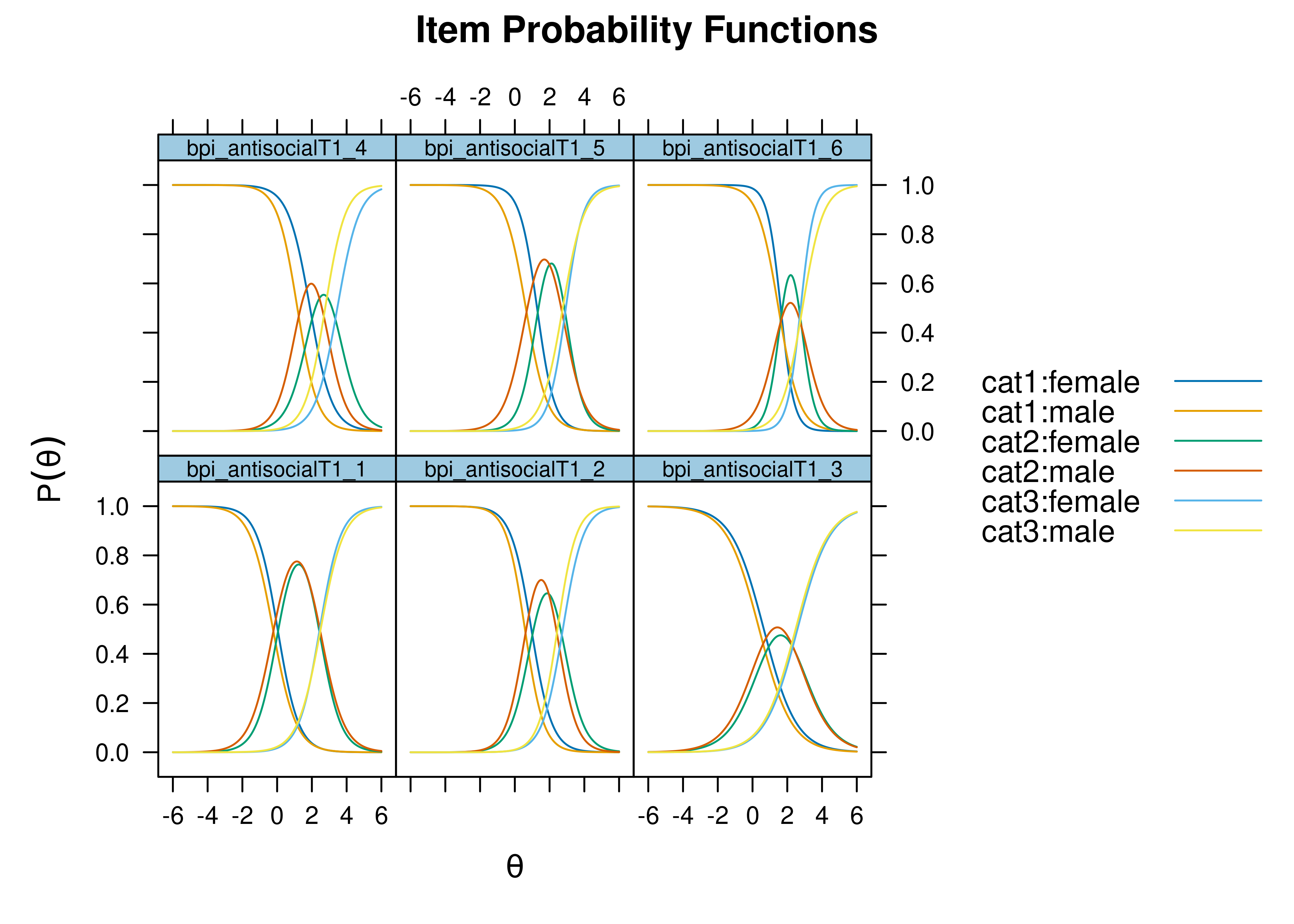

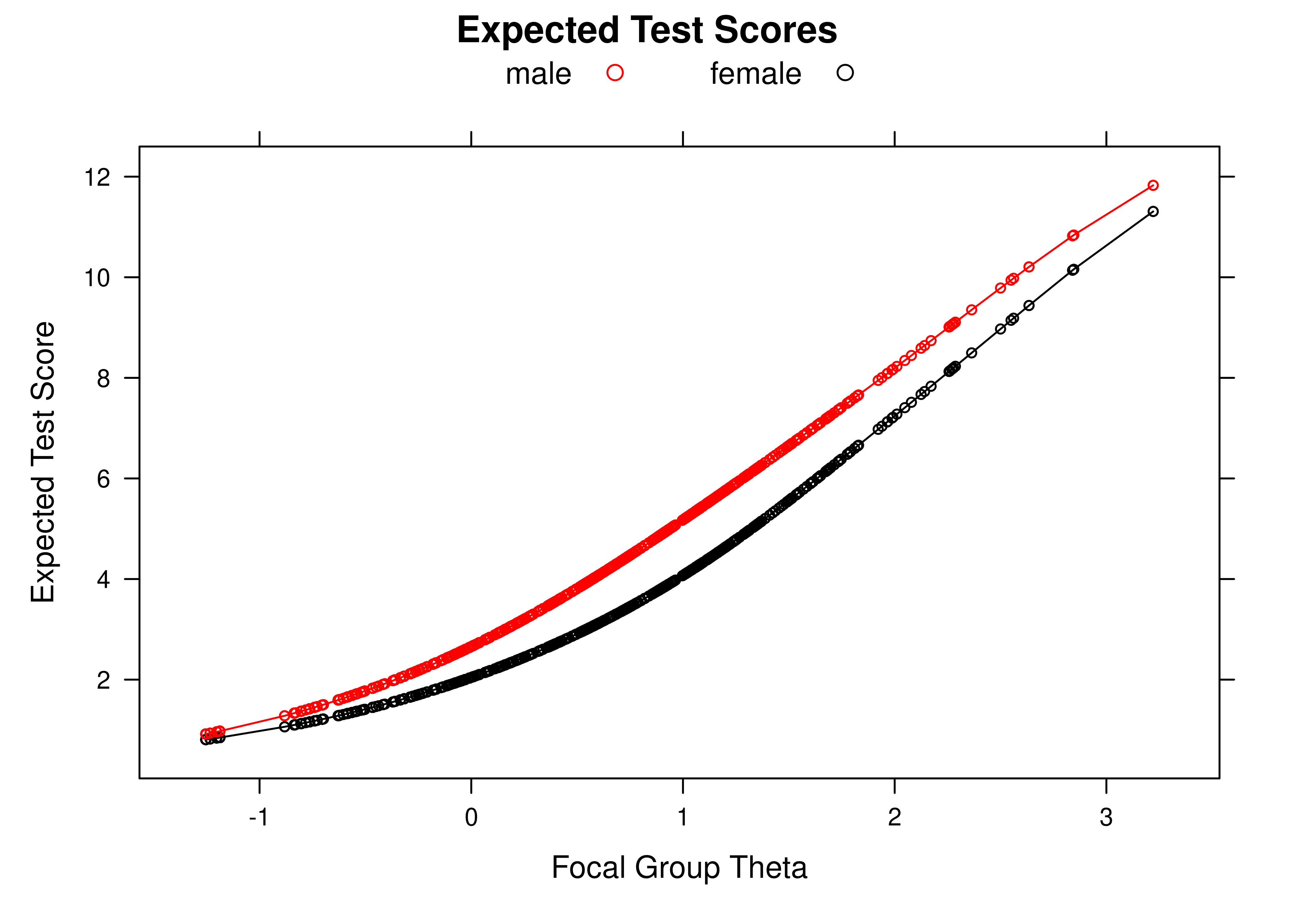

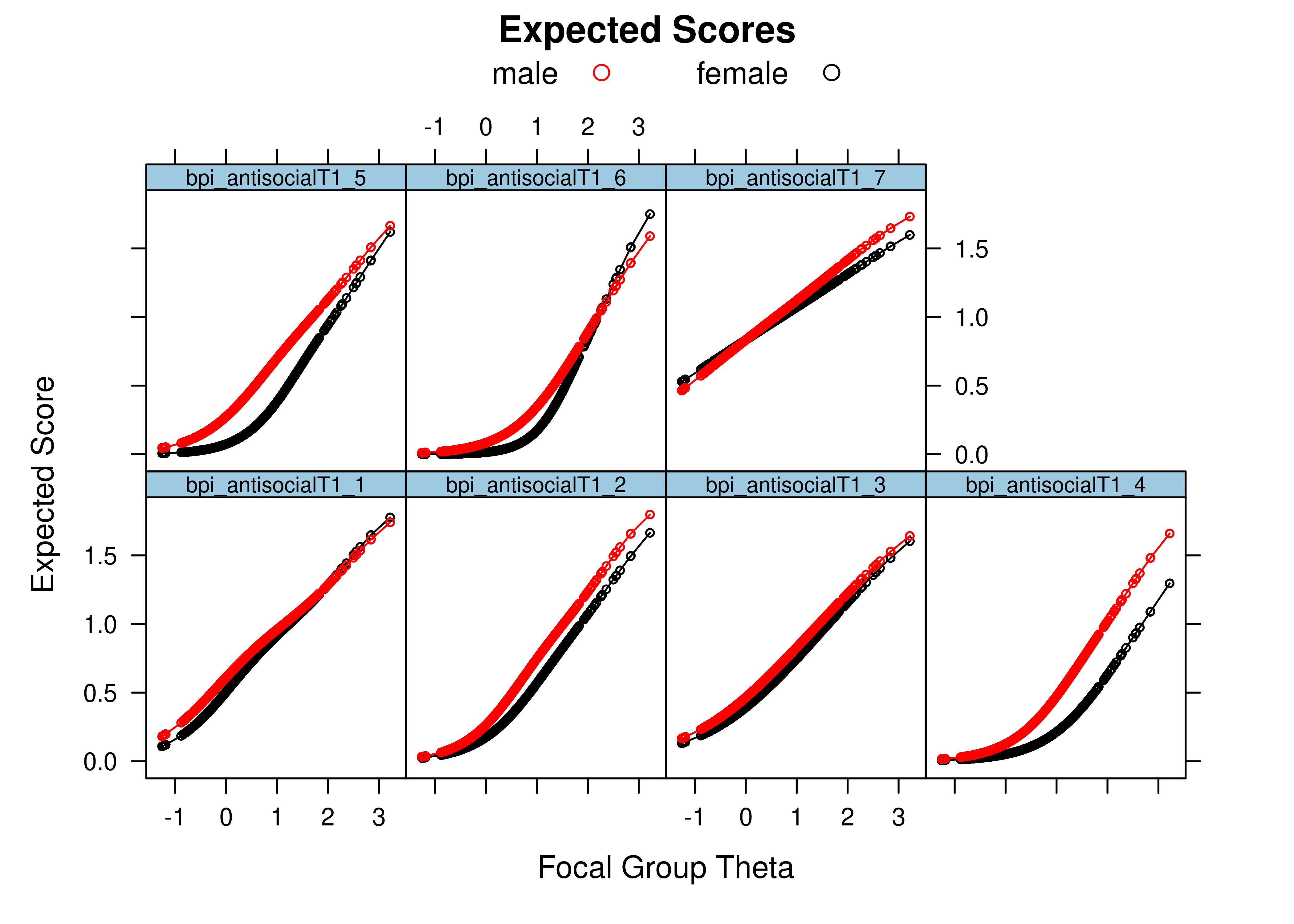

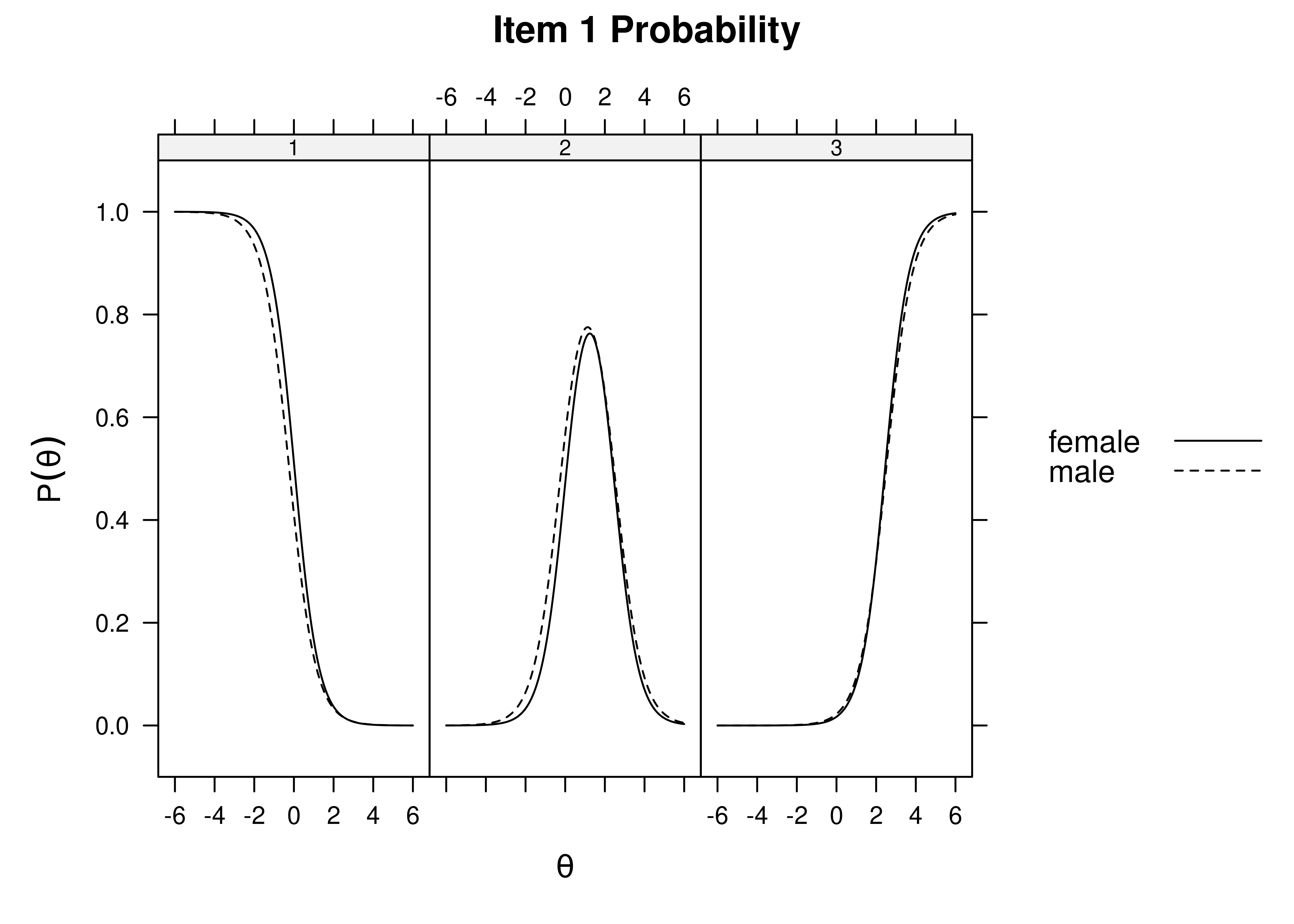

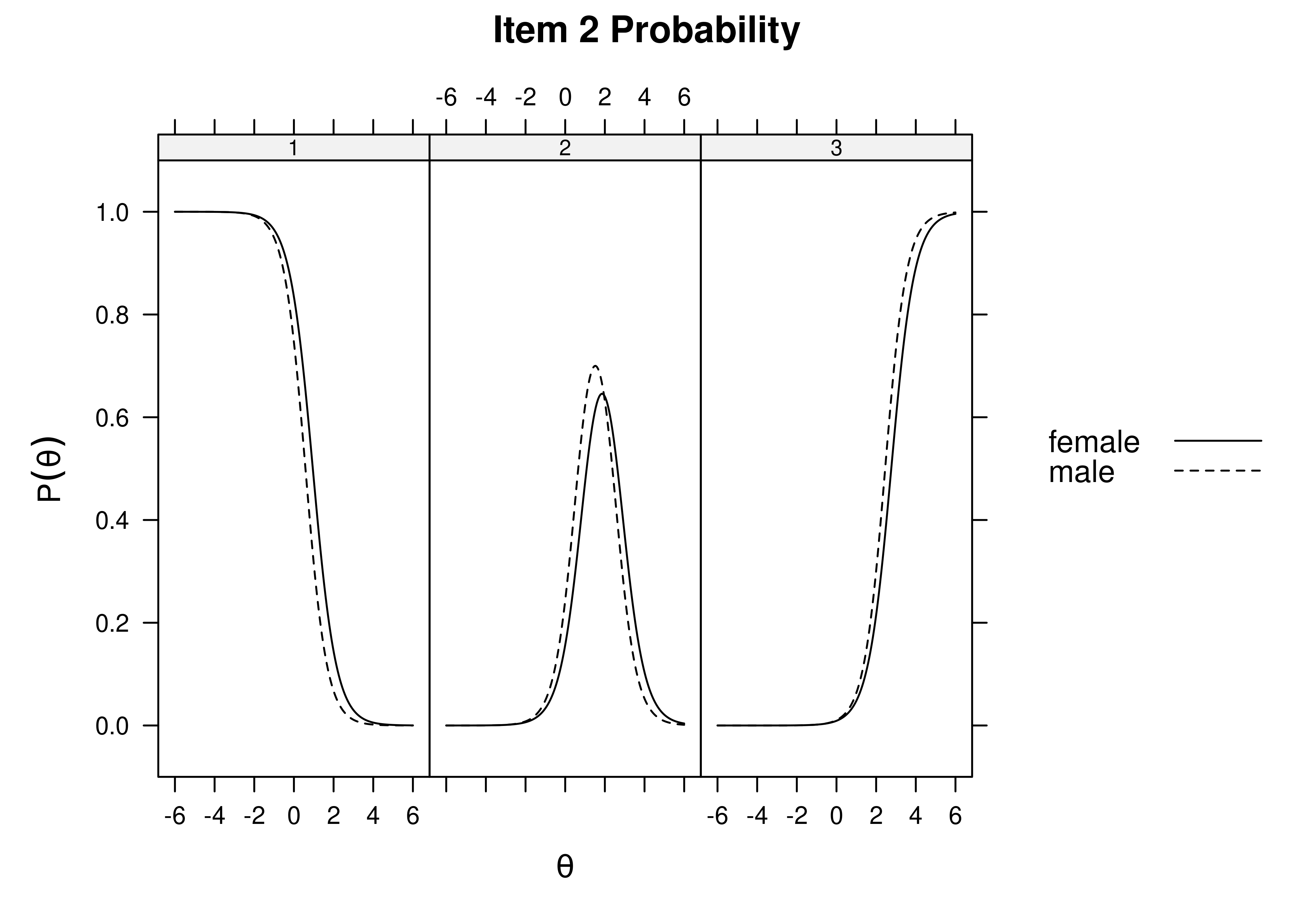

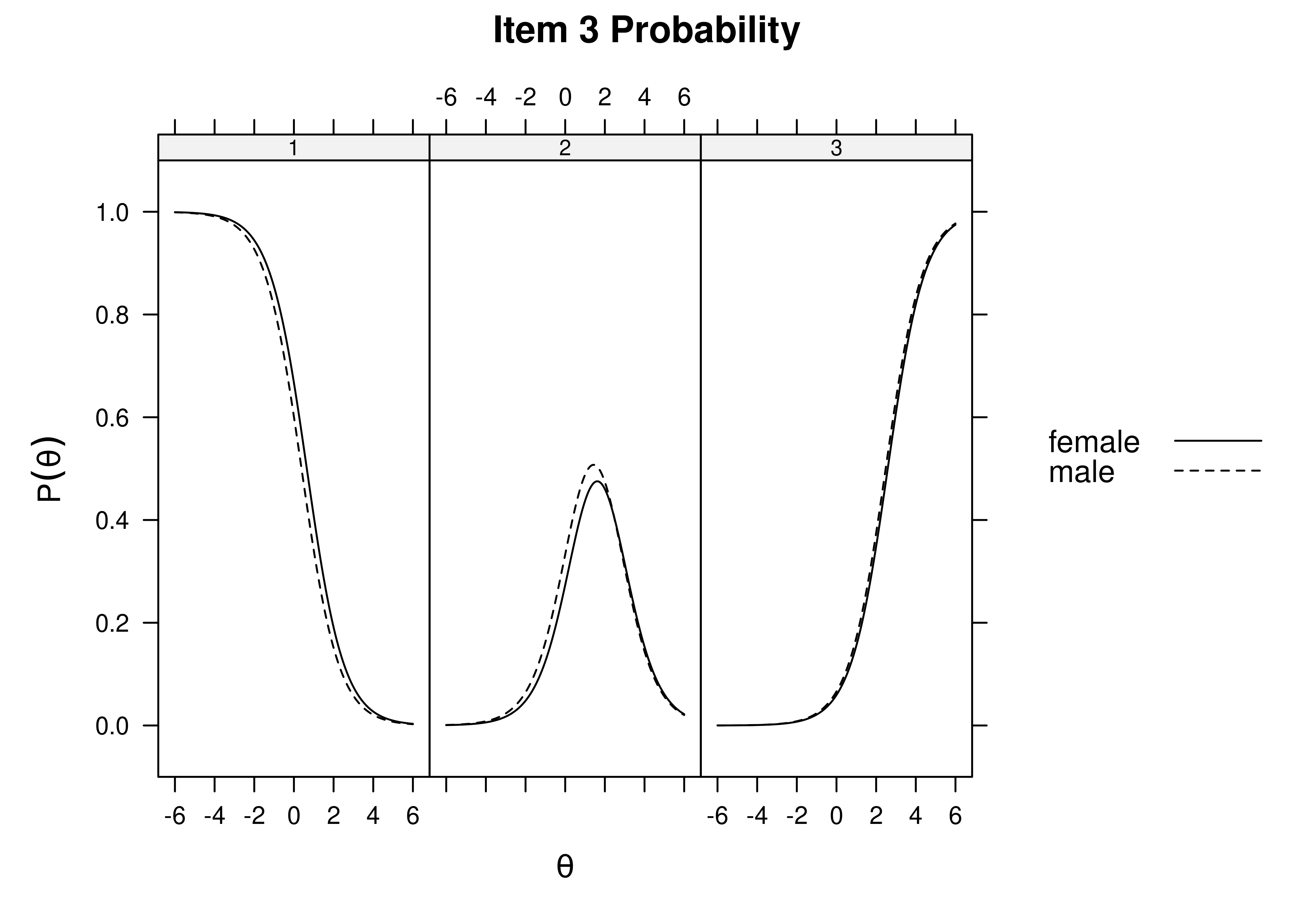

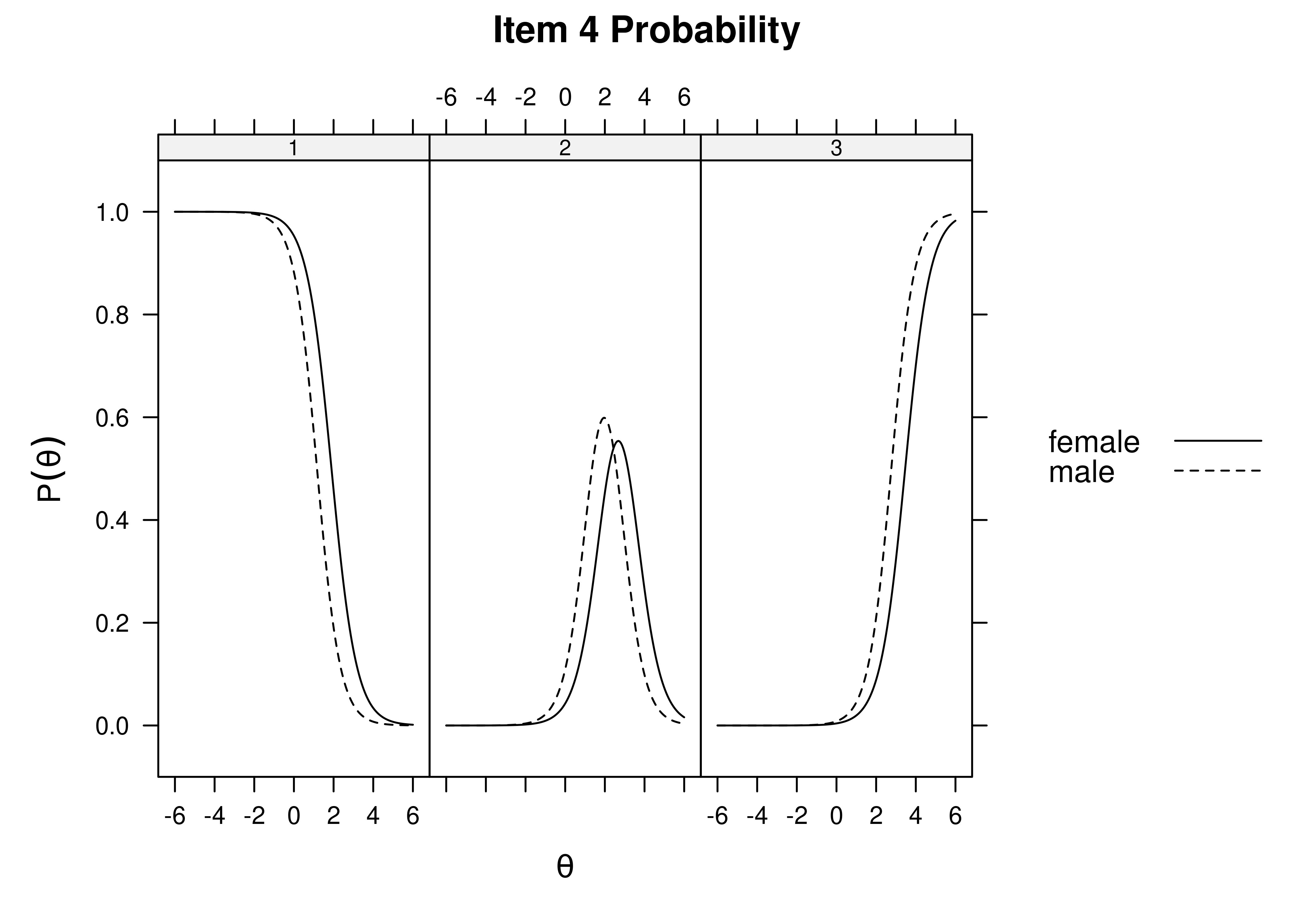

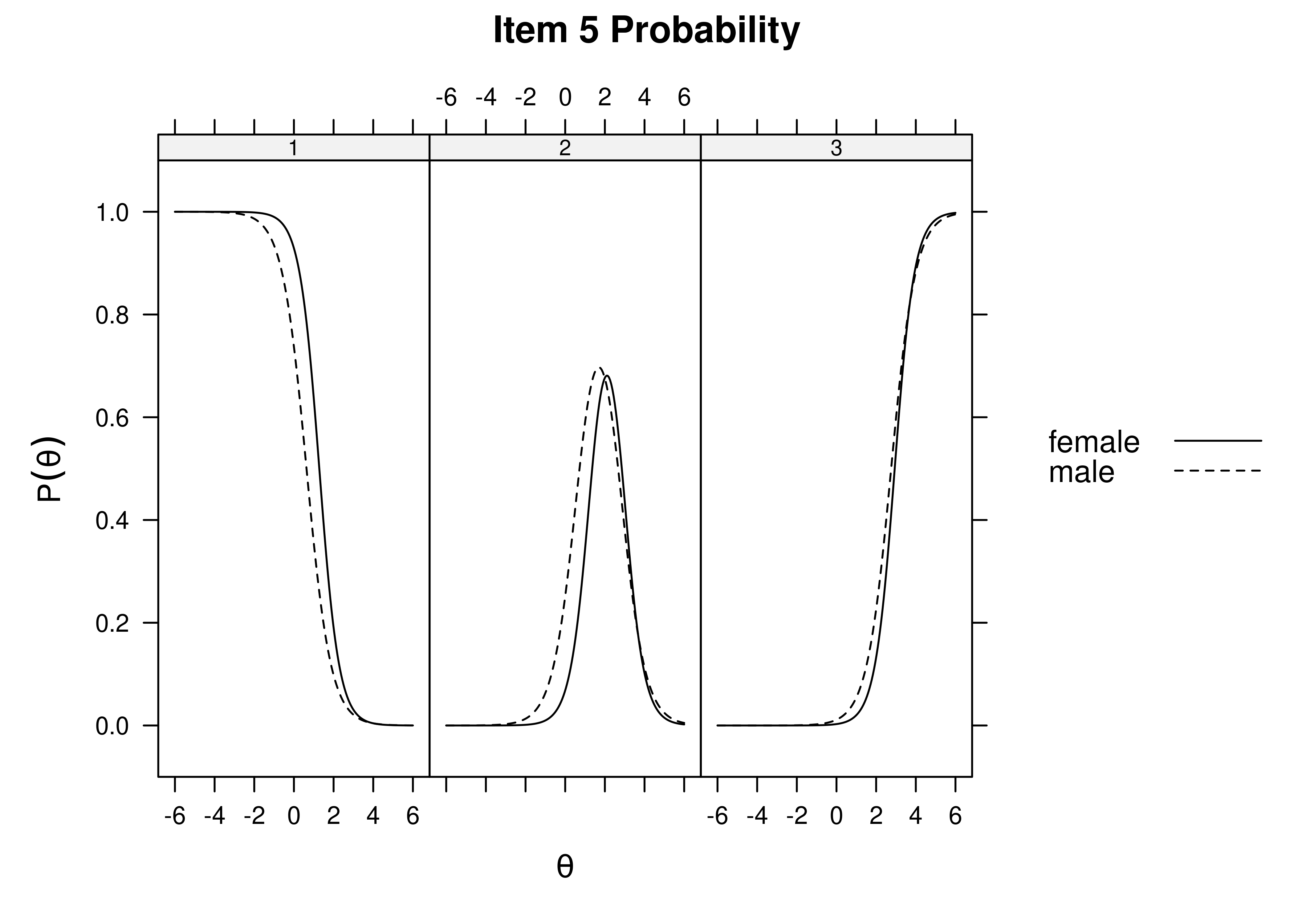

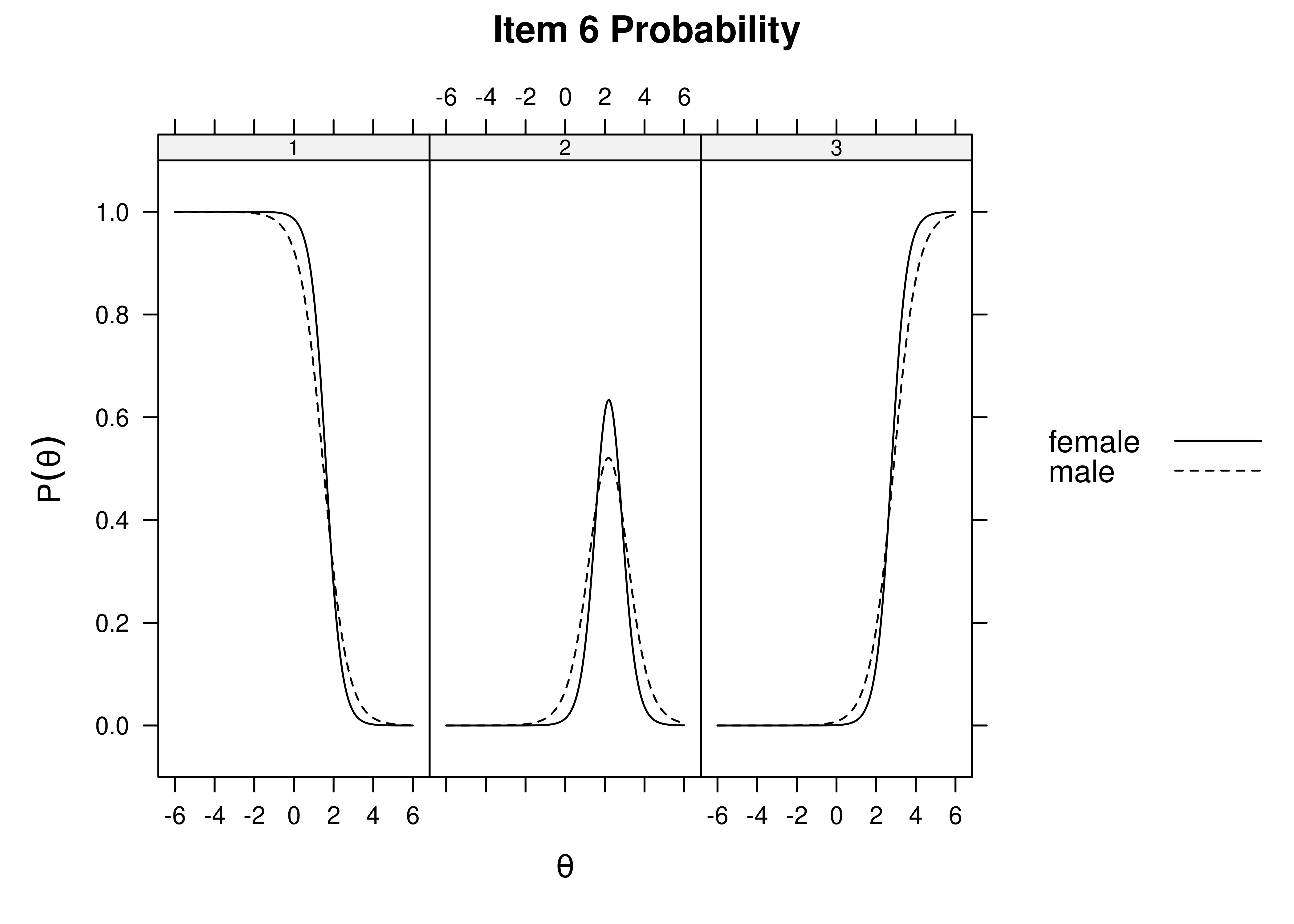

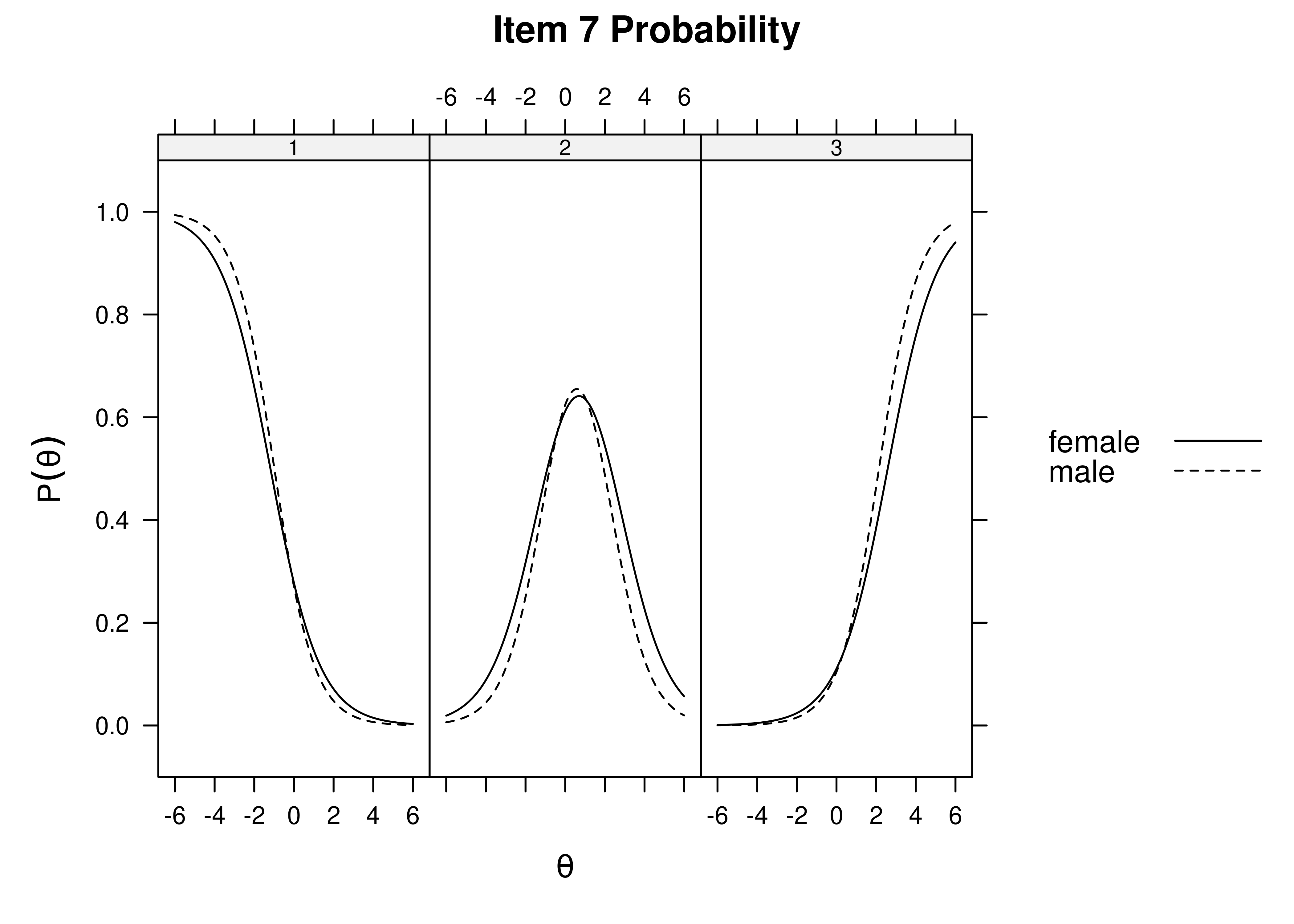

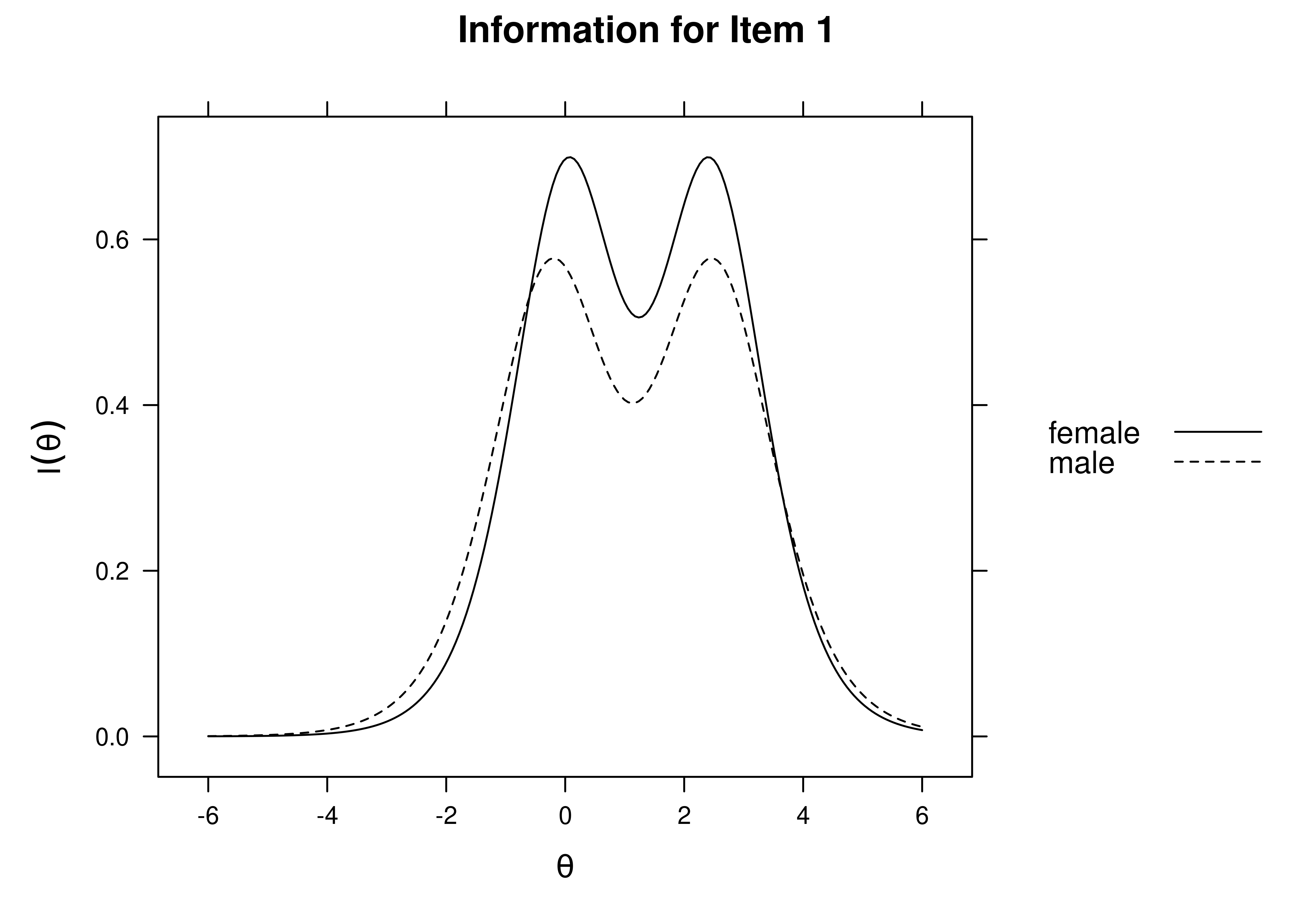

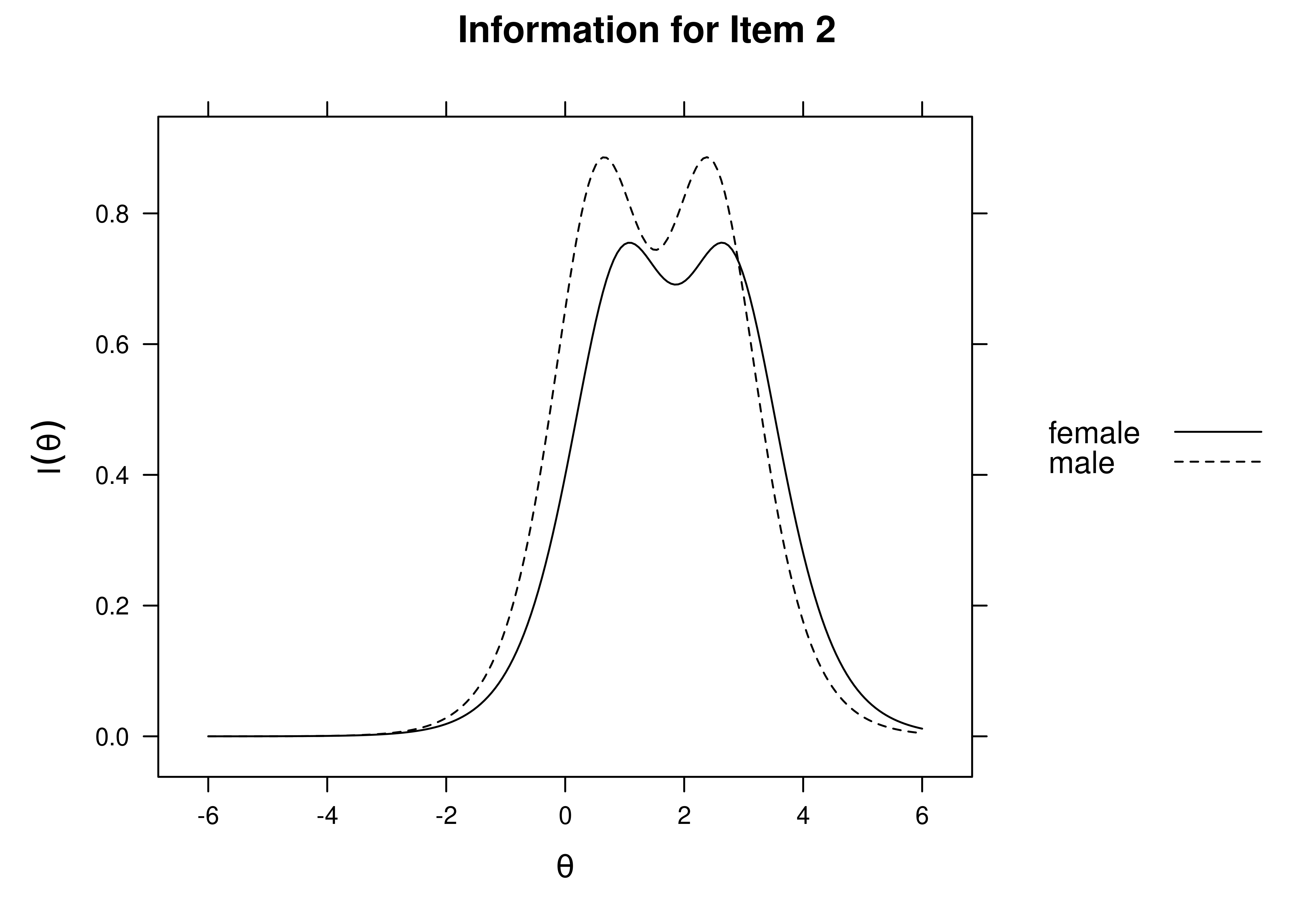

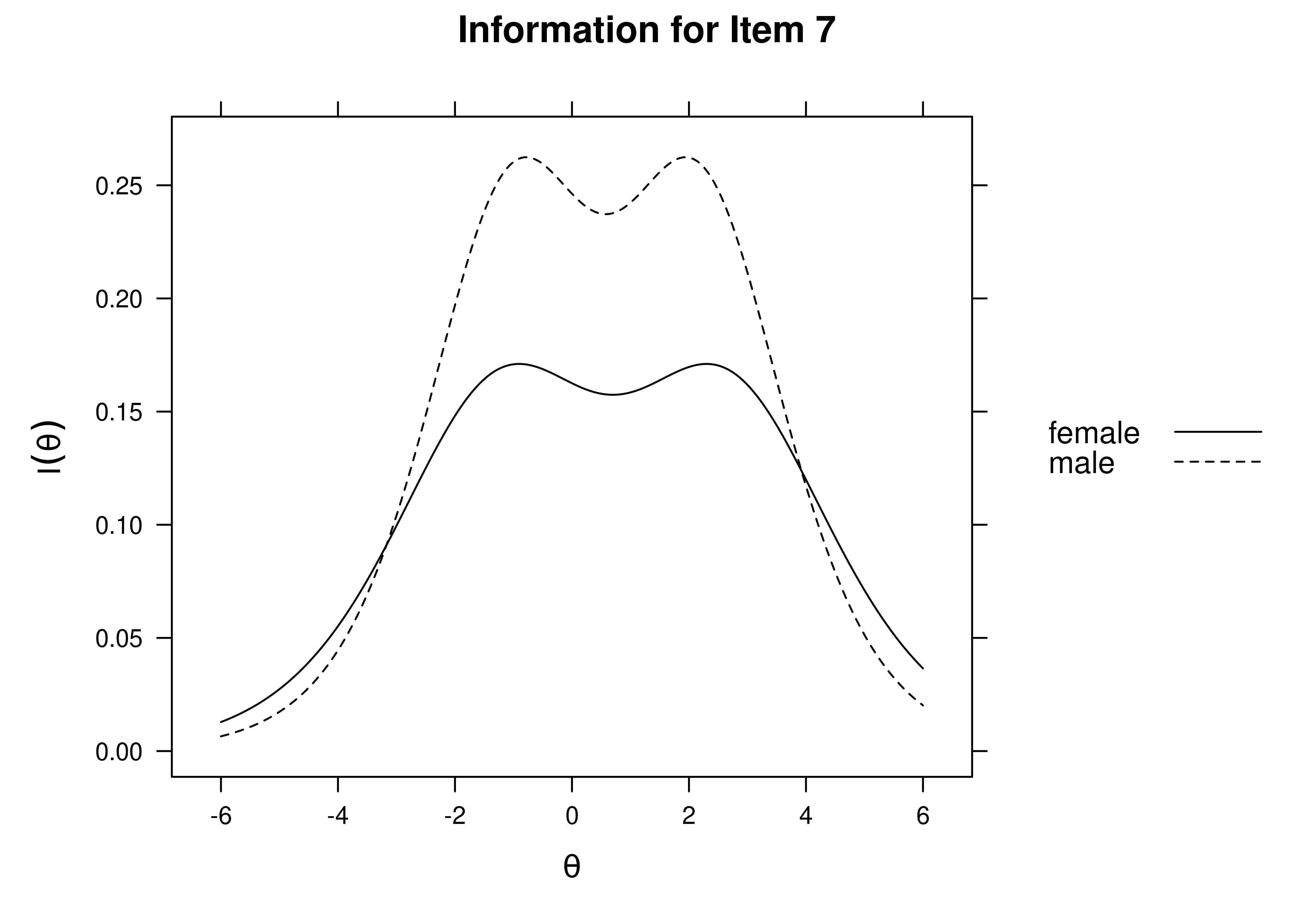

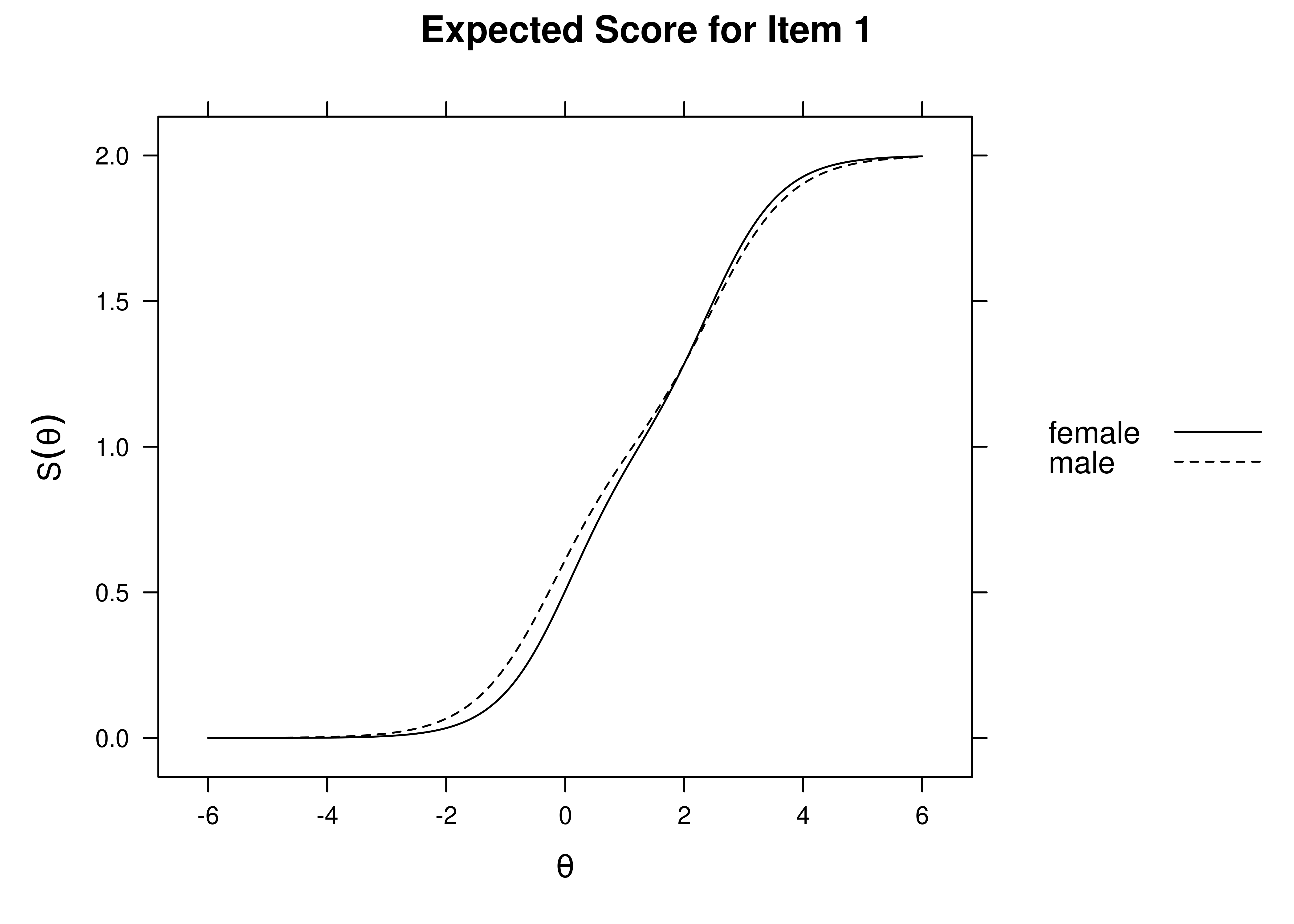

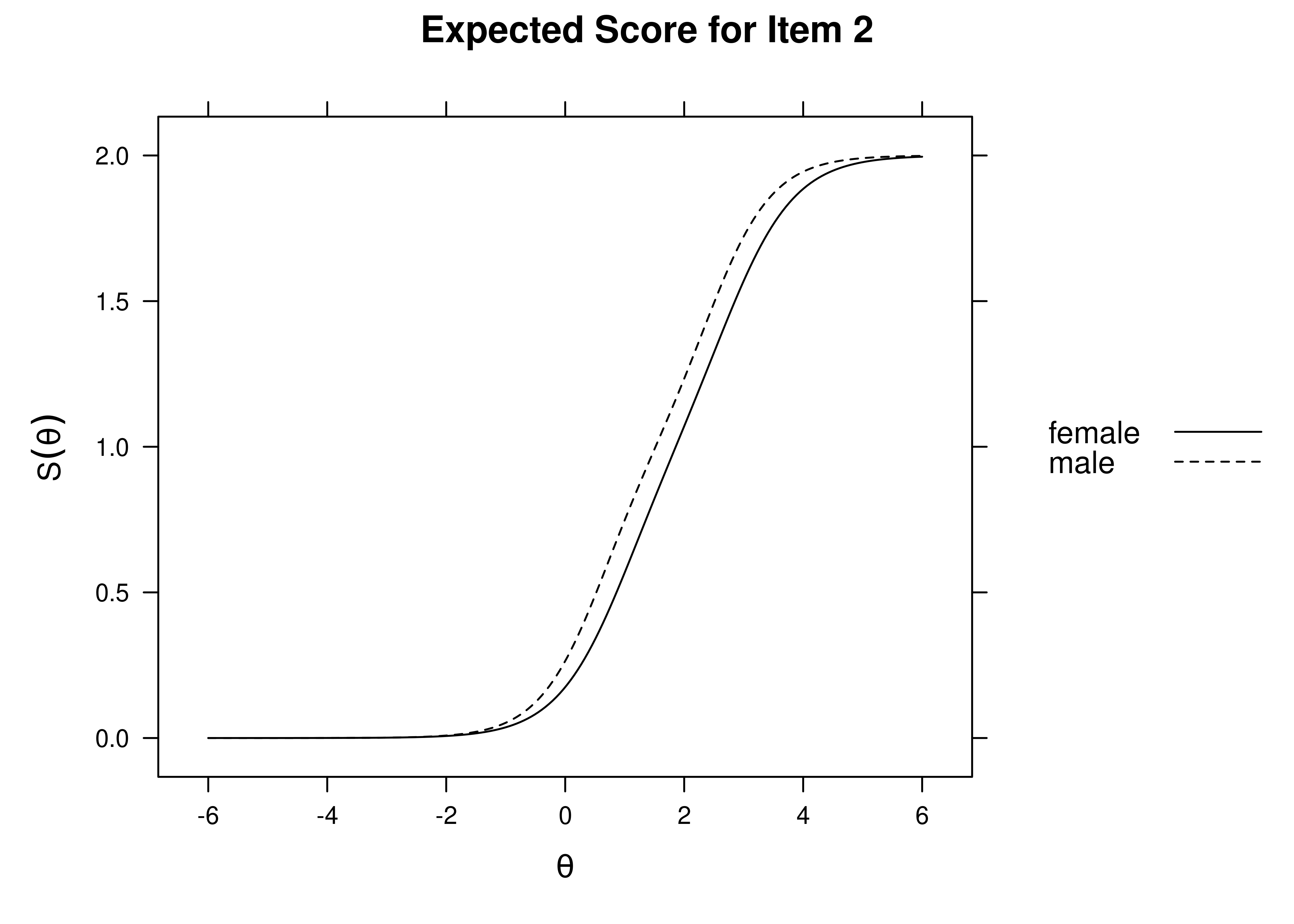

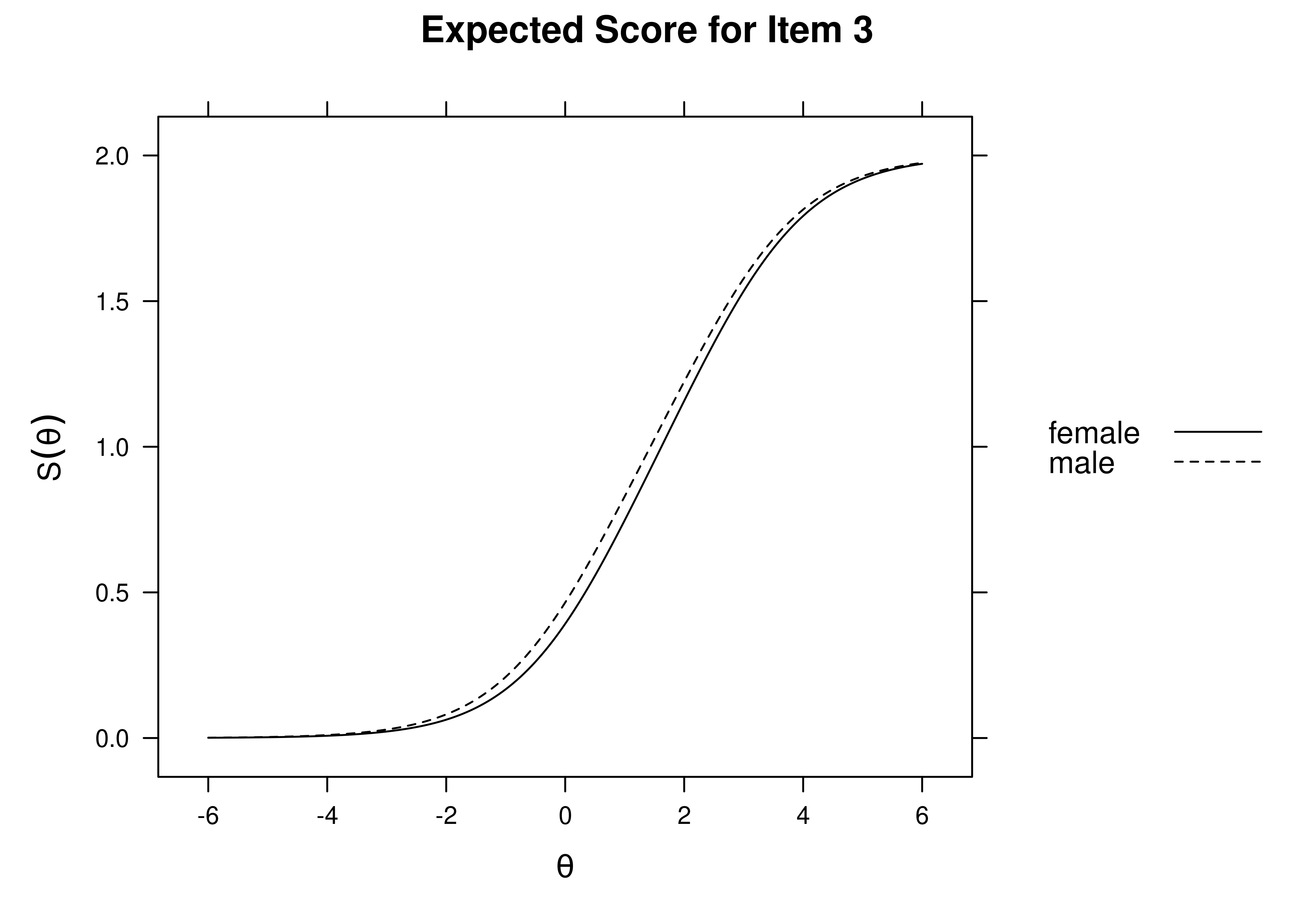

Using item response theory, we can examine differential item functioning (DIF). Evidence of DIF, indicates that there are differences between group in terms of discrimination and/or difficulty/severity of items. Differences between groups in terms of the item characteristic curve (which combines the item’s discrimination and severity) would be evidence against construct validity invariance between the groups and would provide evidence of bias. DIF examines stretching and compression of different groups. As an example, consider the item “bites others” in relation to externalizing problems. The item would be expected to show a weaker discrimination and higher severity in adults compared to children. DIF is discussed in Section 16.9.

16.2.2.1.3 Confirmatory Factor Analysis

Confirmatory factor analysis allows tests of measurement invariance (also called factorial invariance). Measurement invariance examines whether the factor structure of the underlying latent variables in the test is consistent across groups. It also examines whether the manifestation of the construct differs between groups. Measurement invariance is discussed in Section 16.10.

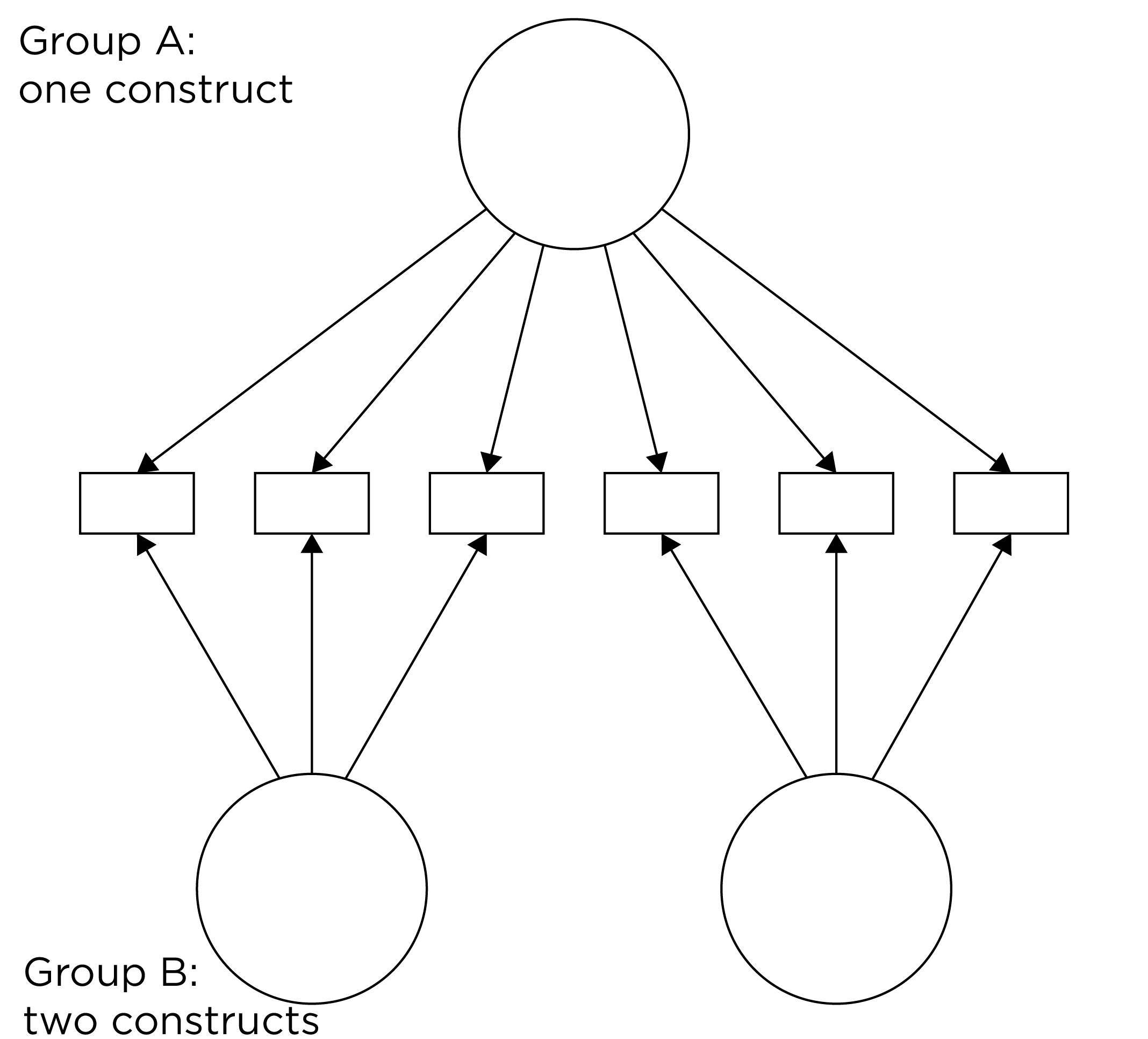

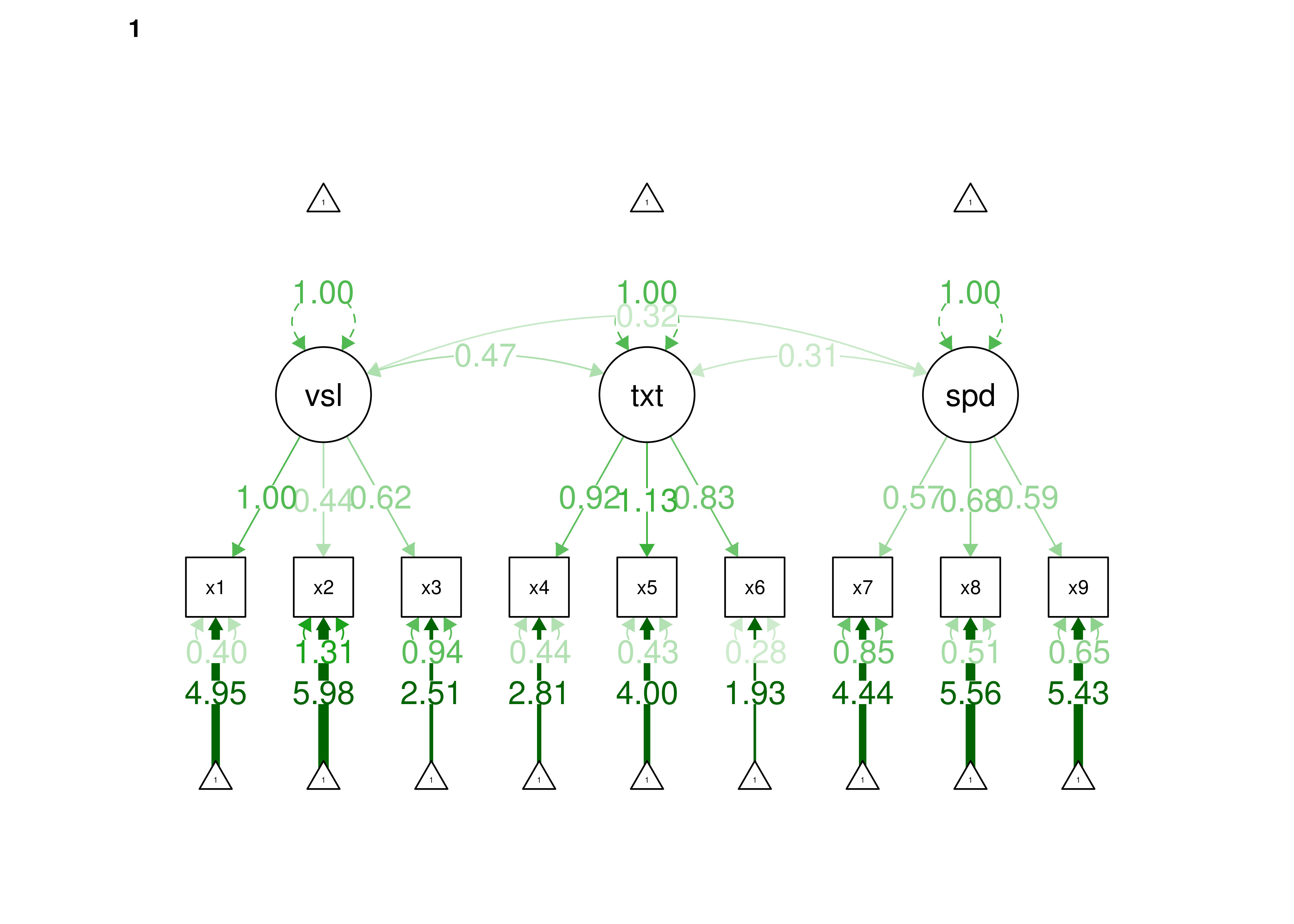

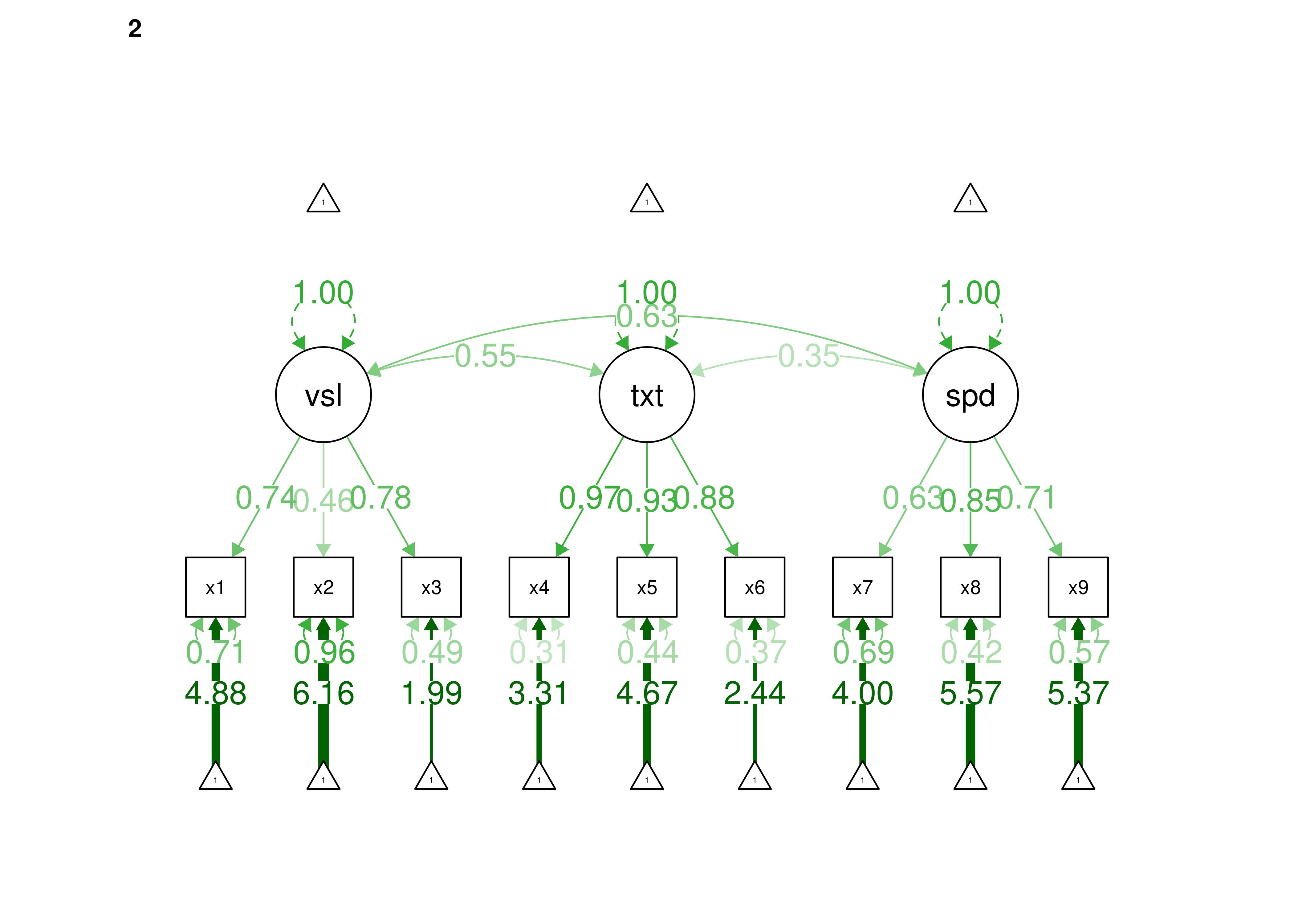

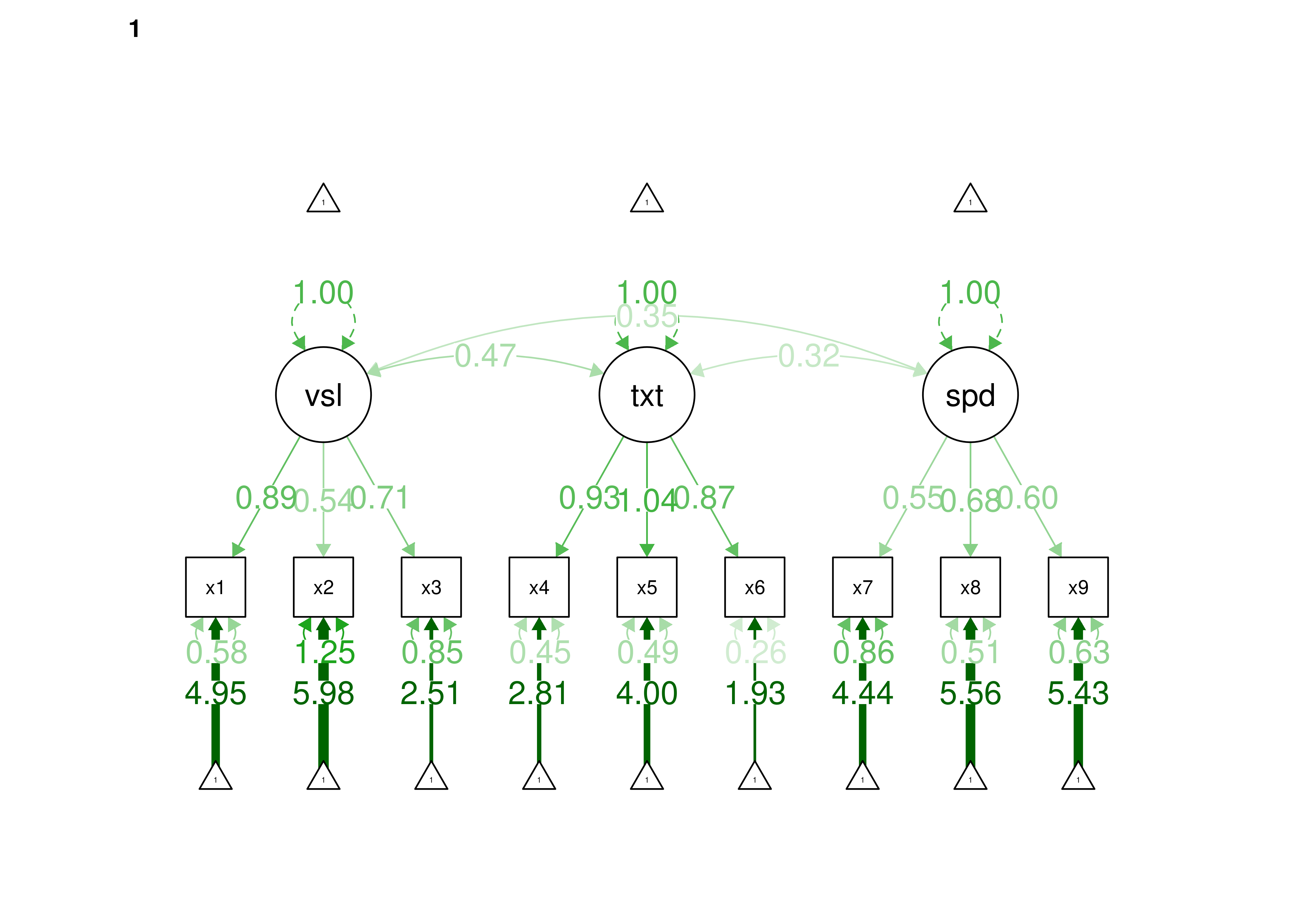

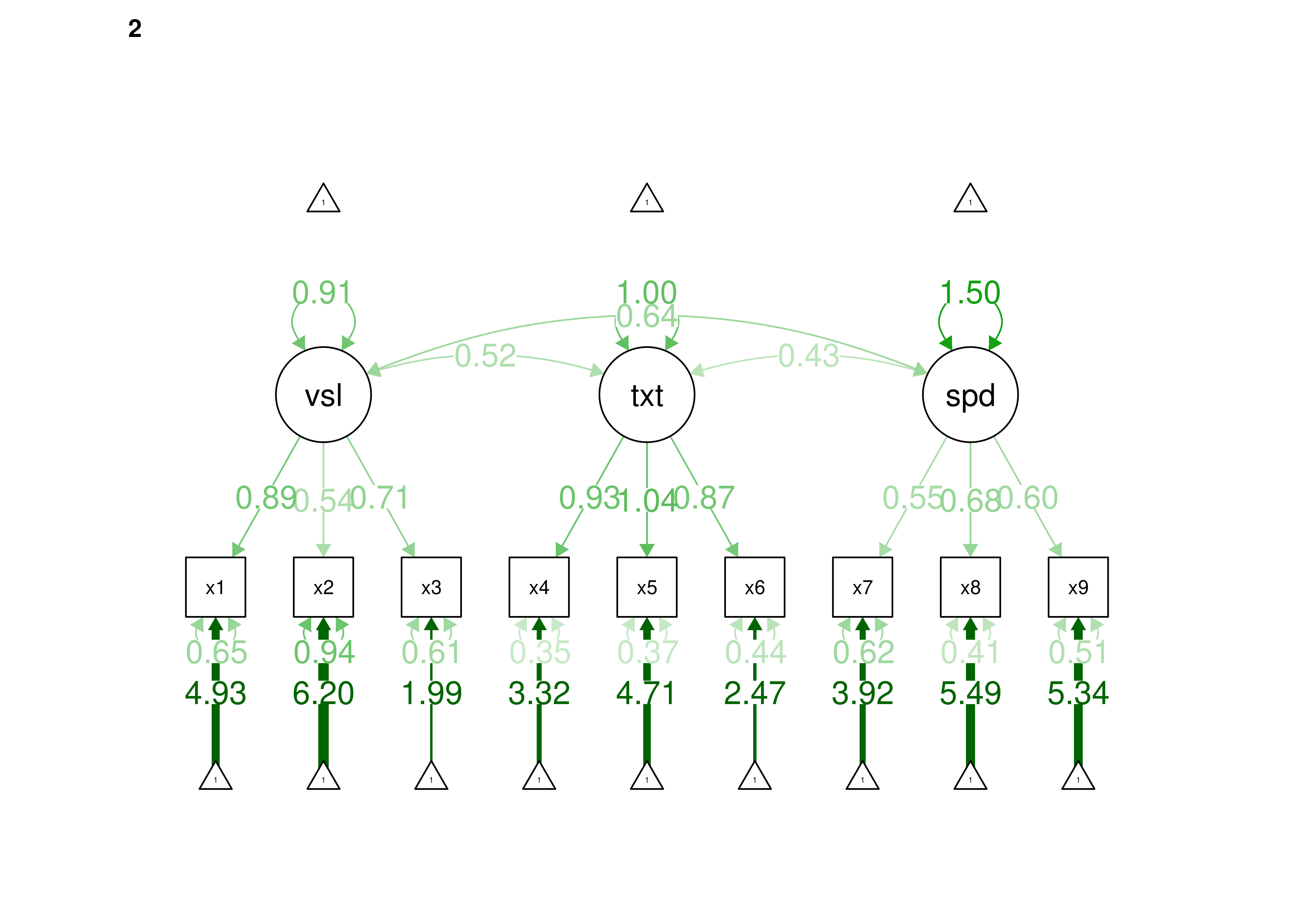

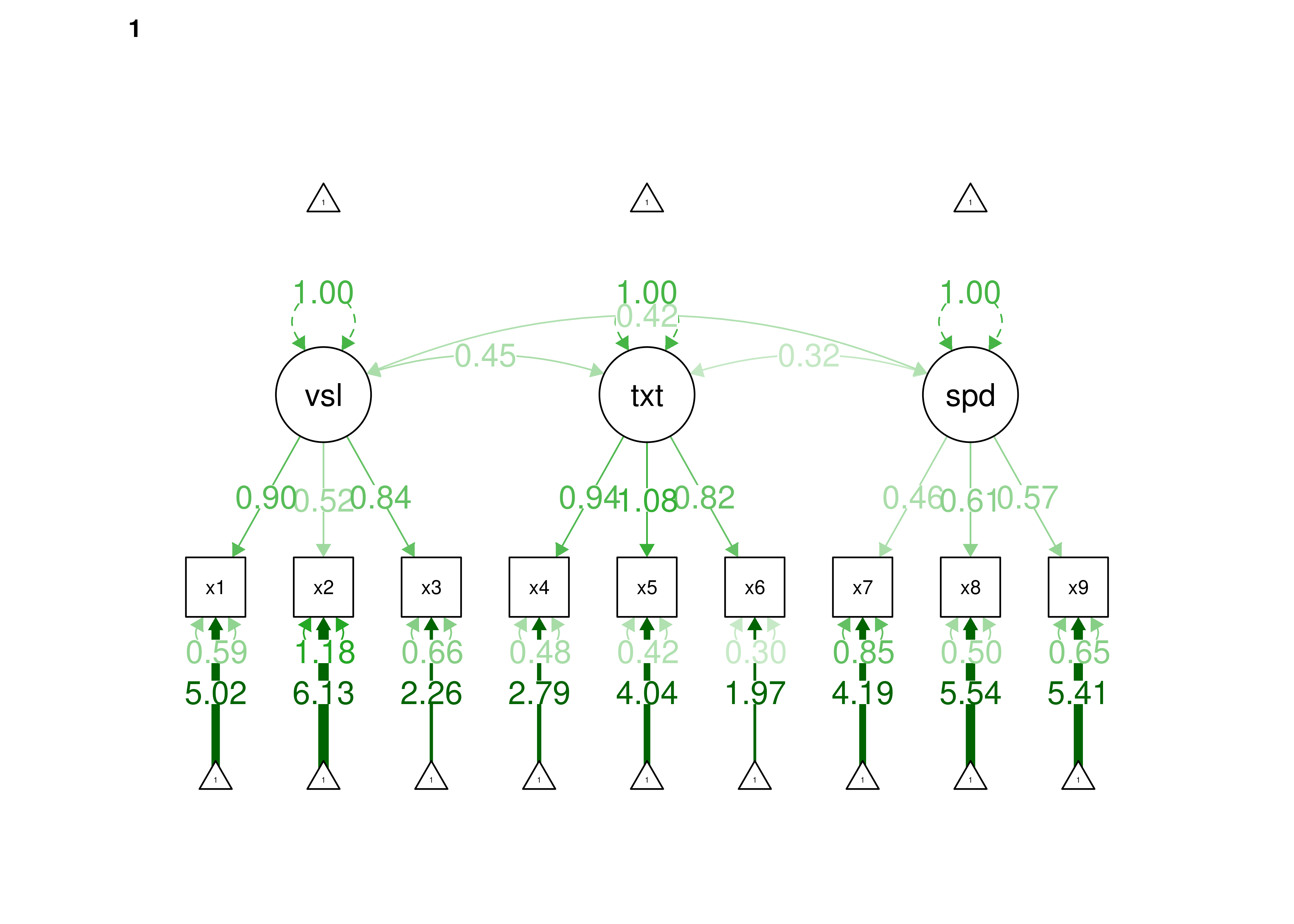

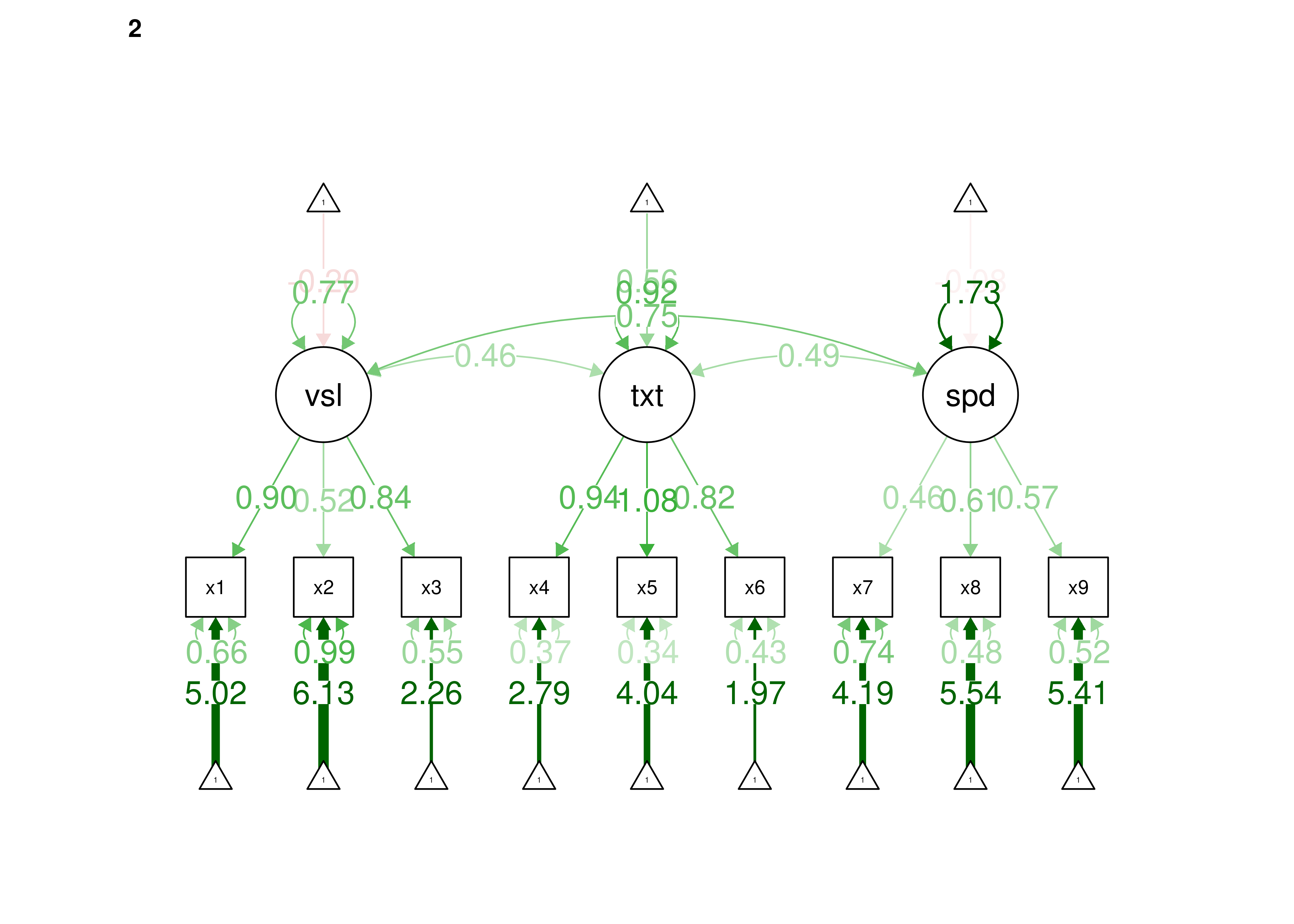

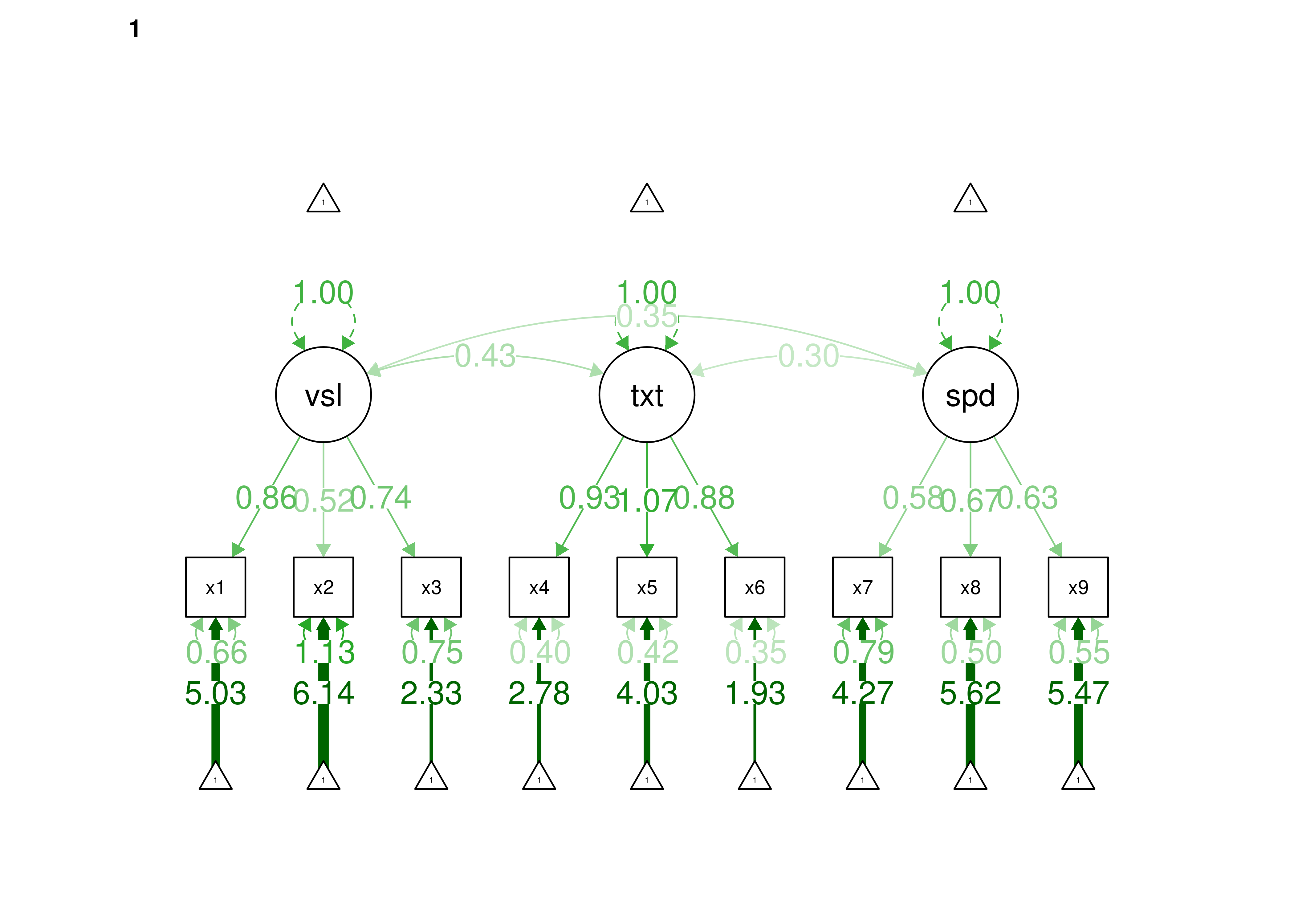

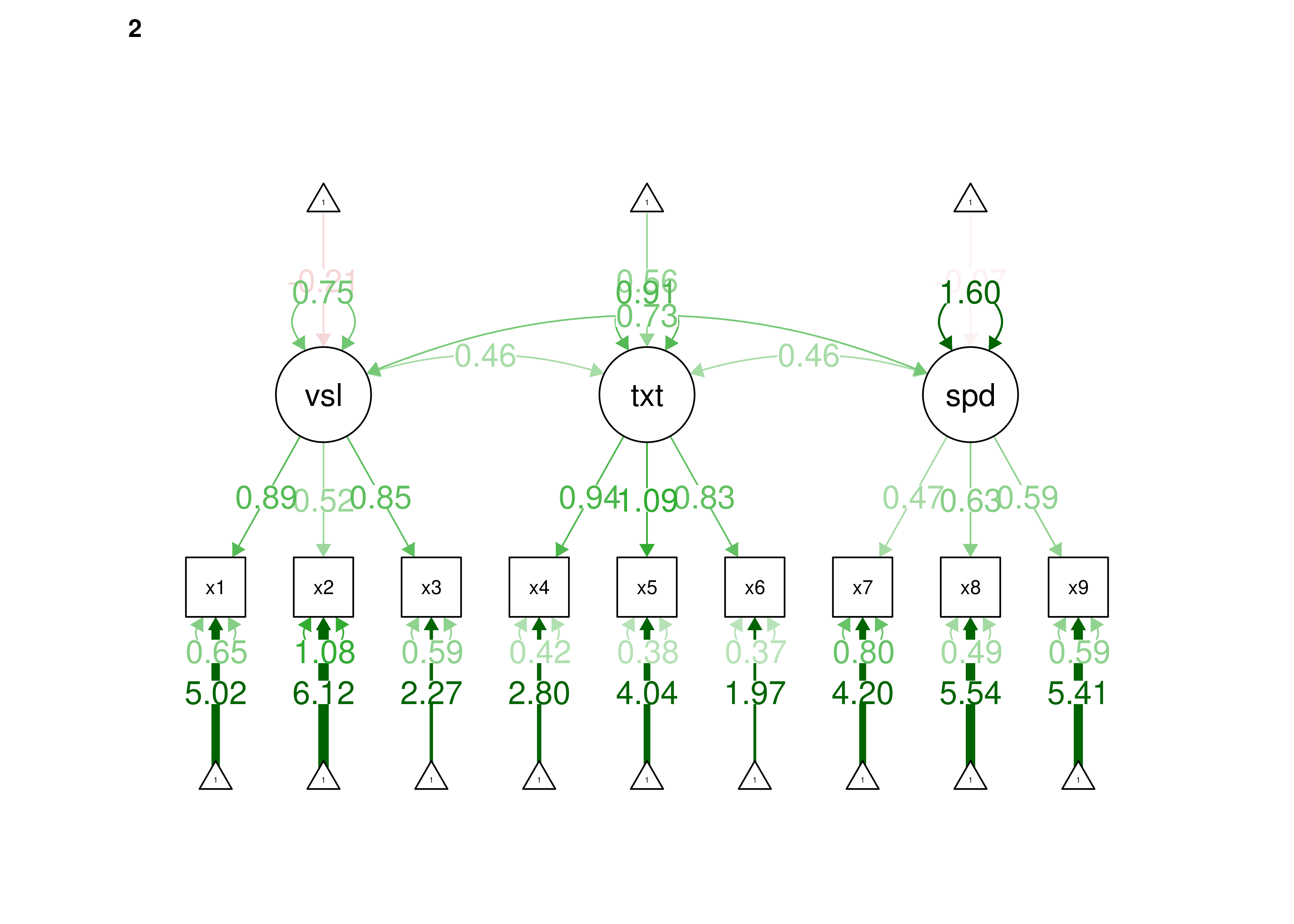

Even if you find the same slope and intercepts across groups in a prediction model, the measure would still be assessing different constructs across groups if the measure has a different factor structure between the groups. A different factor structure across groups is depicted in Figure 16.8.

Figure 16.8: Different Factor Structure Across Groups.

An example of a different factor structure across groups is the differentiation of executive functions from two factors to three factors (inhibition, working memory, cognitive flexibility) across childhood (K. Lee et al., 2013).

There are different degrees of measurement invariance (for a review, see Putnick & Bornstein, 2016):

- Configural invariance: same number of factors in each group, and which indicators load on which factors are the same in each group (i.e., the same pattern of significant loadings in each group).

- Metric (“weak factorial”) invariance: items have the same factor loadings (discrimination) in each group.

- Scalar (“strong factorial”) invariance: items have the same intercepts (difficulty/severity) in each group.

- Residual (“strict factorial”) invariance: items have the same residual/unique variances in each group.

16.2.2.1.4 Structural Equation Modeling

Structural equation modeling is a confirmatory factor analysis (CFA) model that incorporates prediction. Structural equation modeling allows examining differences in the underlying structure with differences in prediction in the same model.

16.2.2.1.5 Signal Detection Theory

Signal detection theory is a dynamic measure of bias. It allows examining the overall bias in selection systems, including both accuracy and errors at various cutoffs (sensitivity, specificity, positive predictive value, and negative predictive value), as well as accuracy across all possible cutoffs (the area under the receiver operating characteristic curve). While there may be similar predictive validity between groups, the type of errors we are making across groups might differ. It is important to decide which types of error to emphasize depending on the fairness goals and examining sensitivity/specificity to adjust cutoffs.

16.2.2.1.6 Empirical Evidence of Test Structure Bias

It is not uncommon to find items that show differences across groups in severity (intercepts) and/or discrimination (factor loadings). However, cross-group differences in item functioning tend to be small and not consistent across studies, suggesting that some of the differences may reflect Type I errors that result from sampling error and multiple testing. That said, some instances of cross-group differences in item parameters could reflect test structure bias that is real and important to address.

16.2.2.2 Theoretical/Judgmental Approaches to Identification

16.2.2.2.1 Facial Validity Bias

Facial validity bias considers the extent to which an average person thinks that an item is biased—i.e., the item has differing validity between minority and majority groups. If so, the item should be reconsidered. Does an item disfavor certain groups? Is the language specific to a particular group? Is it offensive to some people? This type of judgment moves into the realm of whether or not an item should be used.

16.2.2.2.2 Content Validity Bias

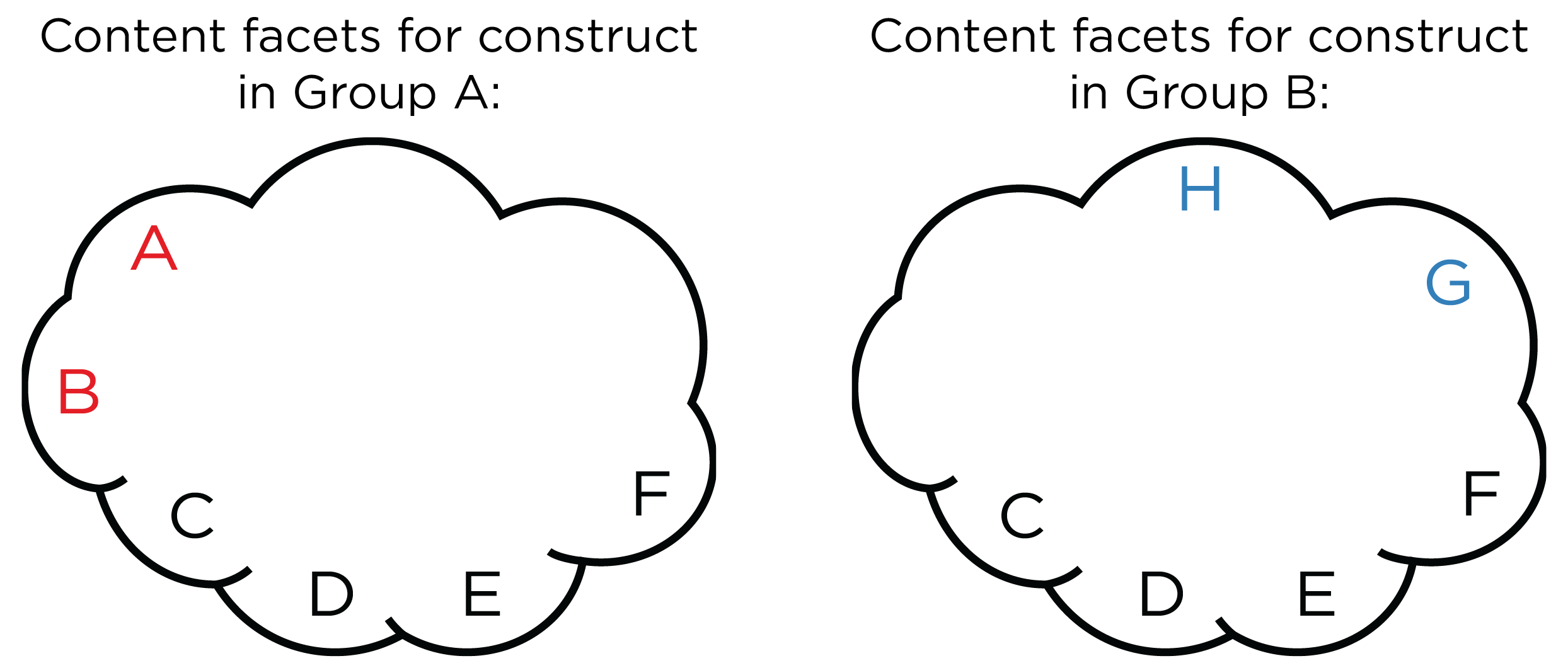

Content validity bias is determined by judgments of construct experts who look for items that do not do an adequate job assessing the construct between groups. A construct may include some content facets in one group, but may include different content facets in another group, as depicted in Figure 16.9.

Figure 16.9: Different Content Facets in a Given Construct for Two Groups.

Examples include information questions and vocabulary questions on the Wechsler Adult Intelligence Scale. If an item is linguistically complicated, grammatically complex or convoluted, or a double negative, it may be less valid or predictive for rural populations and those with less education.

Also, stereotype threat may contribute to content validity bias. Stereotype threat occurs when people are or feel at risk of conforming themselves to stereotypes about their social group, thus leading them to show poorer performance in ways that are consistent with the stereotype. Stereotype threat may partially explain why some women may perform more poorly on some math items than some men.

Another example of content validity bias is when the same measure is used to assess a construct across ages even though the construct shows heterotypic continuity. Heterotypic continuity occurs when a construct changes in its behavioral manifestation with development (Petersen et al., 2020). That is, the same construct may look different at different points in development. An example of a construct that shows heterotypic continuity is externalizing problems. In early childhood, externalizing problems often manifest in overt forms, including physical aggression (e.g., biting) and temper tantrums. By contrast, in adolescence and adulthood, externalizing problems more often manifest in covert ways, including relational aggression and substance use. Content validity and facial validity bias judgments are often related, but not always.

16.3 Examples of Bias

As described in the overview in Section 16.1, there is not much empirical evidence of test bias (Brown et al., 1999; Hall et al., 1999; Jensen, 1980; Kuncel & Hezlett, 2010; Reynolds et al., 2021; Reynolds & Suzuki, 2012; Sackett et al., 2008; Sackett & Wilk, 1994). That said, some item-level bias is not uncommon. One instance of test bias is that, historically, women’s grades in math and engineering classes tended to be under-estimated by the Scholastic Aptitude Test [SAT; M. J. Clark & Grandy (1984)]. Fernández & Abe (2018) review the evidence on other instances of test and item bias. For instance, test bias can occur if a subgroup is less familiar with the language, the stimulus material, or the response procedures, or if they have different response styles. In addition to test bias, there are known patterns of bias in clinical judgment, as described in Section 25.3.11.

16.4 Test Fairness

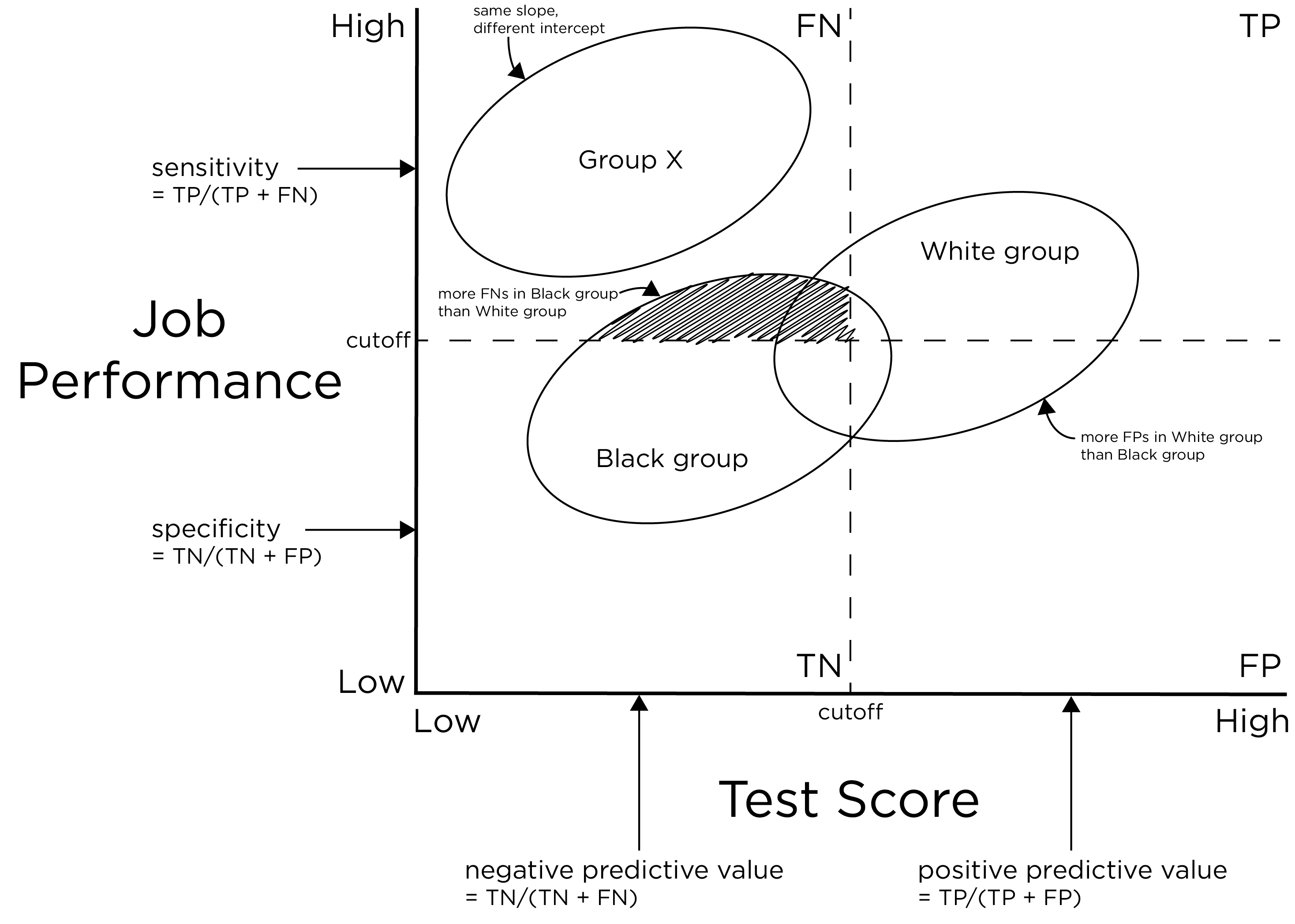

There is interest in examining more than just the accuracy of measures. It is also important to examine the errors being made and differentiate the weight or value of different kinds of errors (and correct decisions). Consider an example of an unbiased test, as depicted in Figure 16.10, adapted from L. S. Gottfredson (1994). Although the example is of a White group and a Black group, we could substitute any two groups into the example (e.g., males versus females).

Figure 16.10: Potential Unfairness in Testing. The ovals represent the distributions of individuals’ performance both on a test and a job performance criterion. TP = true positive; TN = true negative; FP = false positive; FN = false negative. (Adapted from L. S. Gottfredson (1994), Figure 1, p. 958. Gottfredson, L. S. (1994). The science and politics of race-norming. American Psychologist, 49(11), 955–963. https://doi.org/10.1037/0003-066X.49.11.955)

The example is of an unbiased test between White and Black job applicants. There are no differences between the two groups in terms of slope. If we drew a regression line, the line would go through the centroid of both ovals. Thus, the measure is equally predictive in both groups even though that the Black group failed the test at a higher rate than the White group. Moreover, there is no difference between the groups in terms of intercept. Thus, the performance of one group is not over-estimated relative to the performance of the other group. To demonstrate what a different intercept would look like, Group X shows a different intercept. In sum, there is no predictive validity bias between the two groups. But just because the test predicts just as well in both groups does not mean that the selection procedures are fair.

Although the test is unbiased, there are differences in the quality of prediction: there are more false negatives in the Black group compared to the White group. This gives the White group an advantage and the Black group additional disadvantages. If the measure showed the same quality of prediction, we would say the test is fair. The point of the example is that just because a test is unbiased does not mean that the test is fair.

There are two kinds of errors: false negatives and false positives. Each error type has very different implications. False negatives would be when the test predicts that an applicant would perform poorly and we do not give them the job even though they would have performed well. False negatives have a negative effect on the applicant. And, in this example, there are more false negatives in the Black group. By contrast, false positives would be when we predict that an applicant would do well, and we give them the job but they perform poorly. False positives are a benefit to the applicant but have a negative effect on the employer. In this example, there are more false positives in the White group, which is an undeserved benefit based on the selection ratio; therefore, the White group benefits.

In sum, equal accuracy of prediction (i.e., equal total number of errors) does not necessarily mean the test is fair; we must examine the types of errors. Merely ensuring accuracy does not ensure fairness!

16.4.1 Adverse Impact

Adverse impact is defined as rejecting members of one group at a higher rate than another group. Adverse impact is different from test validity. According to federal guidelines, adverse impact is present if the selection rate of one group is less than four-fifths (80%) the selection rate of the group with the highest selection rate.

There is much more evidence of adverse impact than test bias. Indeed, disparate impact of tests on personnel selection across groups is the norm rather than the exception, even when using valid tests that are unbiased, which in part reflect group-related differences in job-related skills (L. S. Gottfredson, 1994). Examples of adverse impact include:

- physical ability tests, which produce substantial adverse impact against women (despite over-estimation of women’s performance),

- cognitive ability tests, which produce substantial impact against some ethnic minority groups, especially Black and Hispanic people (despite over-estimation of Black and Hispanic people’s performance), even though cognitive ability tests tend to be among the strongest predictors of job performance (Sackett et al., 2008; Schmidt & Hunter, 1981), and

- personality tests, which produce higher estimates of dominance among men than women; it is unclear whether this has predictive bias.

16.4.2 Bias Versus Fairness

Whether a measure is accurate or shows test bias is a scientific question. By contrast, whether a test is fair and thus should be used for a given purpose is not just a scientific question; it is also an ethical question. It involves the consideration of the potential consequences of testing in terms of social values and consequential validity.

16.4.3 Operationalizing Fairness

There are many perspectives to what should be considered when evaluating test fairness (American Educational Research Association et al., 2014; Camilli, 2013; Committee on the General Aptitude Test Battery et al., 1989; Dorans, 2017; Fletcher et al., 2021; Gipps & Stobart, 2009; Helms, 2006; Jonson & Geisinger, 2022; Melikyan et al., 2019; Sackett et al., 2008; Thorndike, 1971; Zieky, 2006, 2013). As described in Fletcher et al. (2021), there are three primary ways of operationalizing fairness:

- Equal outcomes: the selection rate is the same across groups.

- Equal opportunity: the sensitivity (true positive rate; 1 \(-\) false negative rate) is the same across groups.

- Equal odds: the sensitivity is the same across groups and the specificity (true negative rate; 1 \(-\) false positive rate) is the same across groups.

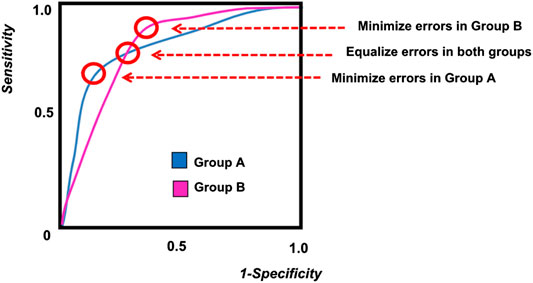

For example, the job selection procedure shows equal outcomes if the proportion of men selected is equal to the proportion of women selected. The job selection procedure shows equal opportunity if, among those who show strong job performance, the proportion of classification errors (false negatives) is the same for men and women. Receiver operating characteristic (ROC) curves are depicted for two groups in Figure 16.11. A cutoff that represents equal opportunity is depicted with a horizontal line (i.e., the same sensitivity) in Figure 16.11. The job selection procedure shows equal odds if (a), among those who show strong job performance, the proportion of classification errors (false negatives) is the same for men and women, and (b), among those who show poor job performance, the proportion of classification errors (false positives) is the same for men and women. A cutoff that represents equal odds is depicted where the ROC curve for Group A intersects with the ROC curve from Group B in Figure 16.11. The equal odds approach to fairness is consistent with a National Academy of Sciences committee on fairness (Committee on the General Aptitude Test Battery et al., 1989; L. S. Gottfredson, 1994). Approaches to operationalizing fairness in the context of prediction models are described by Paulus & Kent (2020).

Figure 16.11: Receiver Operating Characteristic (ROC) Curves for Two Groups. (Figure reprinted from Fletcher et al. (2021), Figure 2, p. 3. Fletcher, R. R., Nakeshimana, A., & Olubeko, O. (2021). Addressing fairness, bias, and appropriate use of artificial intelligence and machine learning in global health. Frontiers in Artificial Intelligence, 3(116). https://doi.org/10.3389/frai.2020.561802)

It is not possible to meet all three types of fairness simultaneously (i.e., equal selection rates, sensitivity, and specificity across groups) unless the base rates are the same across groups or the selection is perfectly accurate (Fletcher et al., 2021). In the medical context, equal odds is the most common approach to fairness. However, using the cutoff associated with equal odds typically reduces overall classification accuracy. And, changing the cutoff for specific groups can lead to negative consequences. In the case that equal odds results in a classification accuracy that is too low, it may be worth considering using separate assessment procedures/tests for each group. In general, it is best to follow one of these approaches to fairness. It is difficult to get right, so try to minimize negative impact. Many fairness supporters argue for simpler rules. In the 1991 Civil Rights Act, score adjustments based on race, gender, and ethnicity (e.g., within-race norming or race-conscious score adjustments) were made illegal in personnel selection (L. S. Gottfredson, 1994).

Another perspective to fairness is that selection procedures should predict job performance and if they are correlated with any group membership (e.g., race, socioeconomic status, or gender), the test should not be used (Helms, 2006). That is, according to Helms, we should not use any test that assesses anything other than the construct of interest (job performance). Unfortunately, however, no measures like this exist. Every measure assesses multiple things, and factors such as poverty can have long-lasting impacts across many domains.

Another perspective to fairness is to make the selection procedures equal the number of successes within each group (Thorndike, 1971). According to this perspective, if you want to do selection, you should hire all people, then look at job performance. If among successful employees, 60% are White and 40% are Black, then set this selection rate for each group (i.e., hiring 80% White individuals and 20% Black individuals is not okay). According to this perspective, a selection system is only fair if the majority–minority differences on the selection device used are equal in magnitude to majority–minority differences in job performance. Selection criteria should be made based on prior distributions of success rates. However, you likely will not ever really know the true base rate in these situations. No one uses this approach because you would have a period where you have to accept everyone to find the percent that works. Also, this would only work in a narrow window of time because the selection pool changes over time.

There are lots of groups and subgroups. Ensuring fairness is very complex, and there is no way to accomplish the goal of being equally fair to all people. Therefore, do the best you can and try to minimize negative impact.

16.5 Correcting For Bias

16.5.1 What to Do When Detecting Bias

When examining item bias (using differential item functioning/DIF or measurement non-invariance) with many items (or measures) across many groups, there can be many tests, which will make it likely that DIF/non-invariance will be detected, especially with a large sample. Some detected DIF may be artificial or trivial, but other DIF may be real and important to address. It is important to consider how you will proceed when detecting DIF/non-invariance. Considerations of effect size and theory can be important for evaluating the DIF/non-invariance and whether it is negligible or important to address.

When detecting bias, there are several steps to take. First, consider what the bias indicates. Does the bias present adverse impact for a minority group? For what reasons might the bias exist? Second, examine the effect size of the bias. If the effects are small, if the bias does not present adverse impact for a minority group, and if there is no compelling theoretical reason for the bias, the bias might not be sufficient to scrap the instrument for the population. Some detected bias may be artificial, but other bias may be real. Gender and cultural differences have shown a number of statistically significant effects for a number of different assessment purposes, but many of the observed effects are quite small and likely trivial, and they do not present compelling reasons to change the assessment (Youngstrom & Van Meter, 2016).

However, if you find bias, correct for it! There are a number of score adjustment and non-score adjustment approaches to correct for bias, as described in Sections 16.5.2 and 16.5.3. If the bias occurs at the item level (e.g., test structure bias), it is generally recommended to remove or resolve items that show non-negligible bias. There are three primary options: (1) drop the item for both groups, (2) drop the item for one group but keep it for the other group, or (3) freely estimate the parameters for the item across groups. Addressing items that show larger bias can also reduce artificial bias in other items (Hagquist & Andrich, 2017). Thus, researchers are encouraged to handle item bias sequentially from high to low in magnitude. If the bias occurs at the test score level (e.g., predictive bias), score adjustments may be considered.

There are situations measurement invariance across time may not be expected (and may thus be problematic to require). For instance, if there is a treatment that occurs between two measurement occasions, the factor structure might change due to the treatment rather than due to the mere passage of time. Thus, it would be helpful to know whether the instrument shows measurement invariance across a similar timeframe when no treatment is occurring. Another situation where measurement invariance across time may not be expected is when the construct shows heterotypic continuity (Petersen et al., 2020). If the construct changes in its behavioral manifestation with development, then measurement invariance may not be expected across wide spans of time.

If you do not correct for bias, consider the impact of the test, procedure, and selection procedure when interpreting scores. Interpret scores with caution and provide necessary caveats in resulting papers or reports regarding the interpretations in question. In sum, it is important to examine the possibility of bias—it is important to consider how much “erroneous junk” you are introducing into your research.

16.5.2 Score Adjustment to Correct for Bias

Score adjustment involves adjusting scores for a particular group or groups.

16.5.2.1 Why Adjust Scores?

There may be several reasons to adjust scores for various groups in a given situation. First, there may be social goals to adjust scores. For example, we may want our selection device to yield personnel that better represent the nation or region, including diversity of genders, races, majors, social classes, etc. Score adjustments are typically discussed with respect to racial minority differences due to historical and systemic inequities. Our society aims to provide equal opportunity, including the opportunity to gain a fair share (i.e., proportional representation) of jobs. A diversity of perspectives in a job is a strength; a diversity of perspectives can lead to greater creativity and improved problem-solving. A second potential reason that we may want to apply score adjustment is to correct for bias. A third potential reason that we may want to apply score adjustment is to improve the fairness of a test.

16.5.2.2 Types of Score Adjustment

There are a number of potential techniques that have been used in attempts to correct for bias, i.e., to reduce negative impact of the test on an under-represented group. What is considered an under-represented group may depend on the context. For instance, men are under-represented compared to women as nurses, preschool teachers, and college students. However, men may not face the same systemic challenges compared to women, so even though men may show under-representation in some domains, it is arguable whether scores should be adjusted to increase their representation. Techniques for score adjustment include:

- Bonus points

- Within-group norming

- Separate cutoffs

- Top-down selection from different lists

- Banding

- Banding with bonus points

- Sliding band

- Separate tests

- Item elimination based on group differences

16.5.2.2.1 Bonus Points

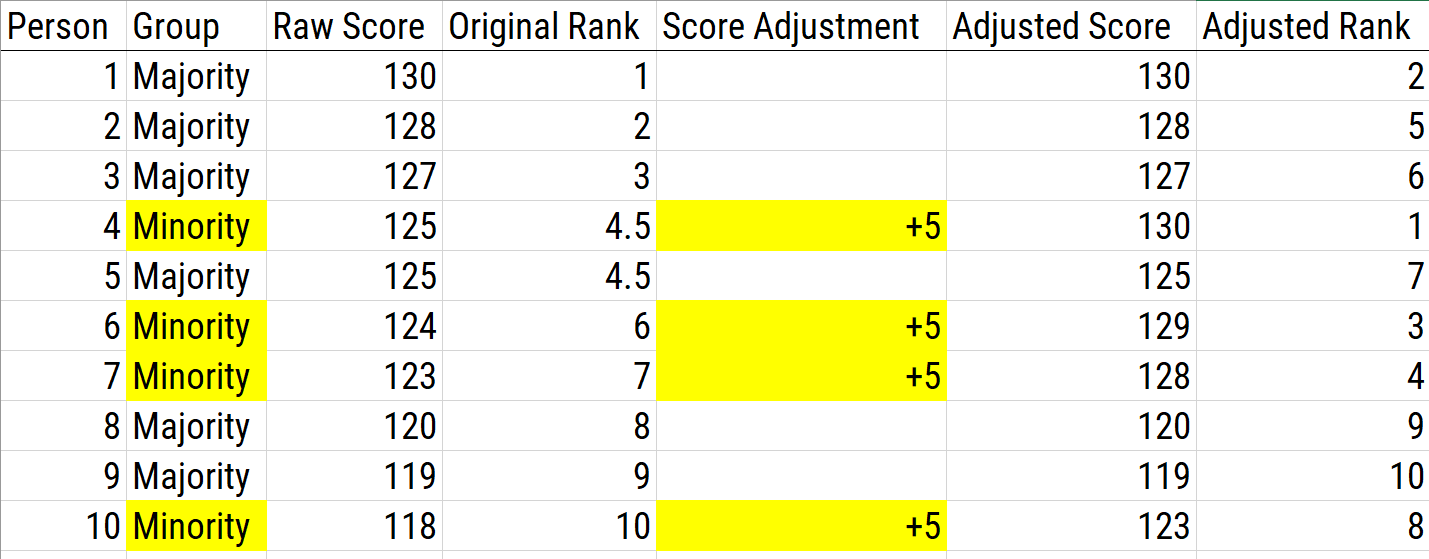

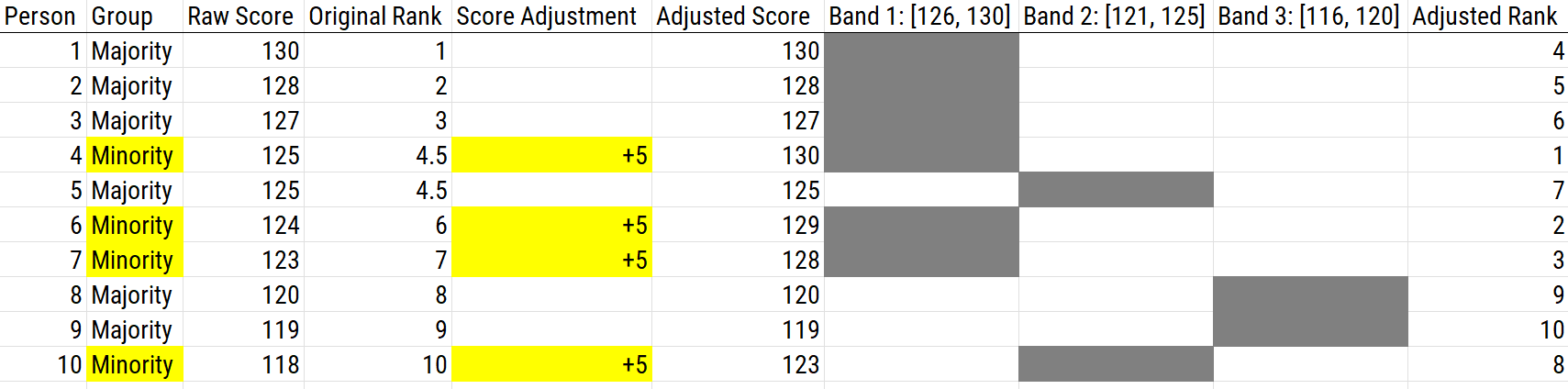

Providing bonus points involves adding a constant number of points to the scores of all individuals who are members of a particular group with the goal of eliminating or reducing group differences. Bonus points is used to correct for predictive bias differences in intercepts between groups. An example of bonus points is military veterans in placement for civil service jobs—points are added to the initial score for all veterans (e.g., add 5 points to test scores of all veterans). An example of using bonus points as a score adjustment is depicted in Figure 16.12.

Figure 16.12: Using Bonus Points as a Scoring Adjustment.

There are several pros of bonus points. If the distribution of each group is the same, this will effectively reduce group differences. Moreover, it is a simple way of impacting test selection and procedure without changing the test, which is therefore a great advantage. There are several cons of bonus points. If there are differences in group standard deviations, adding bonus points may not actually correct for bias. The use of bonus points also obscures what is actually being done to scores, so other methods like using separate cutoffs may be more explicit. In addition, the simplicity of bonus points is also a great disadvantage because it is easily understood and often not viewed as “fair” because some people are getting extra points that others do not.

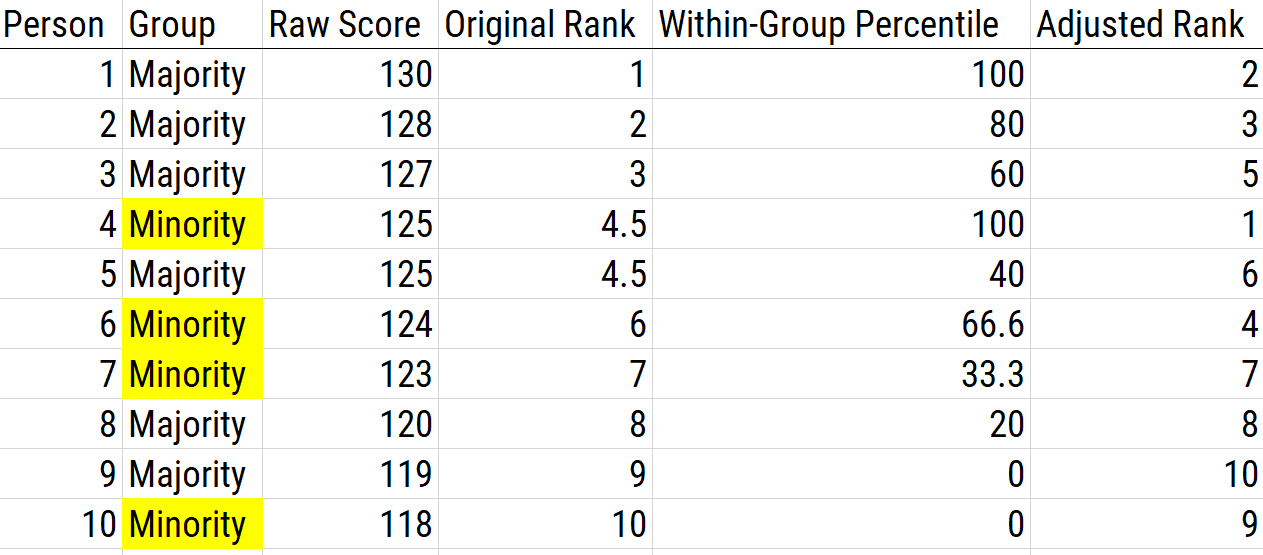

16.5.2.2.2 Within-Group Norming

A norm is the standard of performance that a person’s performance can be compared to. Within-group norming treats the person’s group in the sample as the norm. Within-group norming converts an individual’s score to standardized scores (e.g., T scores) or percentiles within one’s own group. Then, the people are selected based on the highest standard scores across groups. Withing-group norming is used to correct for predictive bias differences in slopes between groups. An example of using within-group norming as a score adjustment is depicted in Figure 16.13.

Figure 16.13: Using Within-Group Norming as a Scoring Adjustment.

There are several pros of within-group norming. First, it accounts for differences in group standard deviations and means, so it does not have the same problem as bonus points and is generally more effective at eliminating adverse impact compared to bonus points. Second, some general (non-group-specific) norms are clearly irrelevant for characterizing a person’s functioning. Group-specific norms aim to describe a person’s performance relative to people with a similar background, thus potentially reducing cultural bias. Third, group-specific norms may better reflect cultural, educational, socioeconomic, and other factors that may influence a person’s score (Burlew et al., 2019). Fourth, group-specific norms may increase specificity, and reduce over-pathologizing by preventing giving a diagnosis to people who might not show a condition (Manly & Echemendia, 2007).

There are several cons of within-group norming. First, group differences could be maintained if one decides to norm based on a reference sample or, when scores are skewed, a local sample, especially when using standardized scores. However, percentile scores will consistently eliminate adverse impact. Second, using group-specific norms may obscure background variables that explain underlying reasons for group-related differences in test performance (Manly, 2005; Manly & Echemendia, 2007). Third, group-specific norms do not address the problem if the measure shows test bias (Burlew et al., 2019). Fourth, group-specific norms may reduce sensitivity to detect conditions (Manly & Echemendia, 2007). For instance, they may prevent people from getting treatment who would benefit. It is worth noting that within-group norming on the basis of sex, gender, and ethnicity is illegal for the basis of personnel selection according to the 1991 Civil Rights Act.

As an example of within-group norming, the National Football League used to use race-norming for identification of concussions. The effect of race-norming, however, was that it lowered Black players’ concussion risk scores, which prevented many Black players from being identified as having sustained a concussion and from receiving needed treatment. Race-norming compared the Black football players cognitive test scores to group-specific norms: the cognitive test scores of Black people in the general population (not to common norms). Using Black-specific norms assumed that Black football players showed lower cognitive ability than other groups, so a low cognitive ability score for a Black player was less likely to be flagged as concerning. Thus, the race-specific norms led to lower identified rates of concussions among Black football players compared to White football players. Due to the adverse impact, Black players sued the National Football League, and the league stopped the controversial practice of race-norming for identification of concussion (https://www.washingtonpost.com/sports/2021/06/03/nfl-concussion-settlement-race-norming/; archived at https://perma.cc/KN3L-5Z7R).

A common question is whether to use group-specific norms or common norms. Group-specific norms are a controversial practice, and the answer depends. If you are interested in a person’s absolute functioning (e.g., for determining whether someone is concussed or whether they are suitable to drive), recommendations are to use common norms, not group-specific norms (Barrash et al., 2010; Silverberg & Millis, 2009). If, by contrast, you are interested in a person’s relative functioning compared to a specific group, within-group norming could make sense if there is an appropriate reference group. The question about which norms to use are complex, and psychologists should evaluate the cost and benefit of each norm, and use the norm with the greatest benefit and the least cost for the client (Manly & Echemendia, 2007).

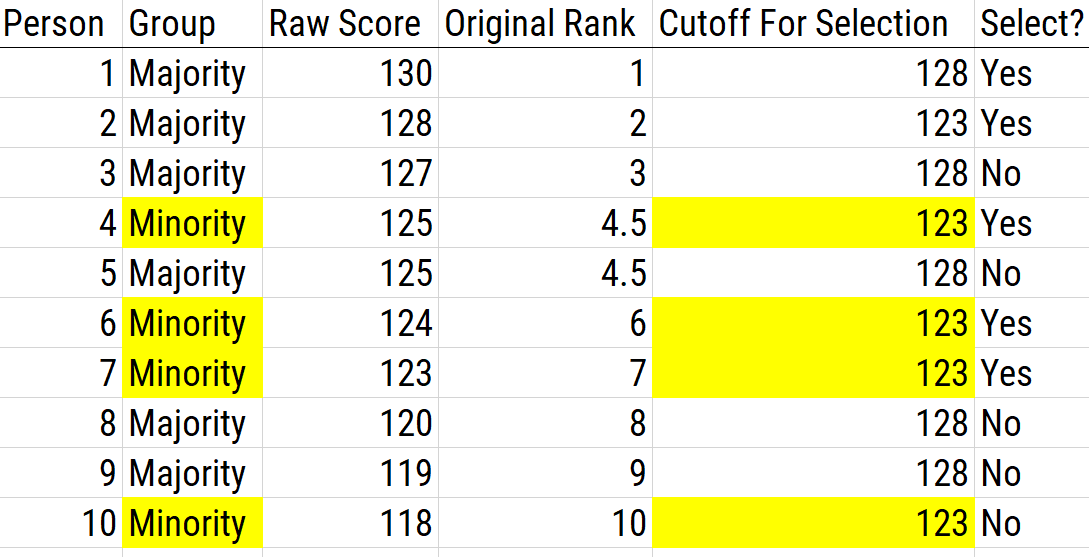

16.5.2.2.3 Separate Cutoffs

Using separate cutoffs involves using a separate cutoff score per group and selecting the top number from each group. That is, using separate cutoffs involves using different criteria for each group. Using separate cutoffs functions the same as adding bonus points, but it has greater transparency—i.e., you are lowering the standard for one group compared to another group. An example of using separate cutoffs as a score adjustment is depicted in Figure 16.14.

Figure 16.14: Using Separate Cutoffs as a Scoring Adjustment. In this example, the cutoff for the majority group is 128; the cutoff for the minority group is 123.

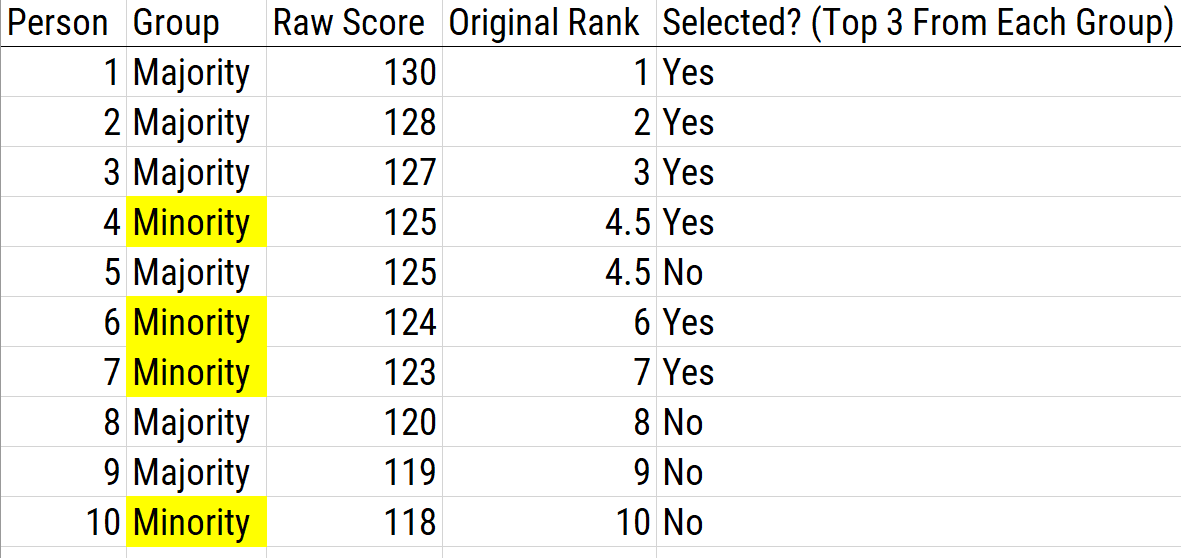

16.5.2.2.4 Top-Down Selection from Different Lists

Top-down selection from different lists involves taking the best from two different lists according to a preset rule as to how many to select from each group. Top-down selection from different lists functions the same as within-group norming. An example of using top-down selection from different lists as a score adjustment is depicted in Figure 16.15.

Figure 16.15: Using Top-Down Selection From Different Lists as a Scoring Adjustment. In this example, the top three candidates are selected from each group.

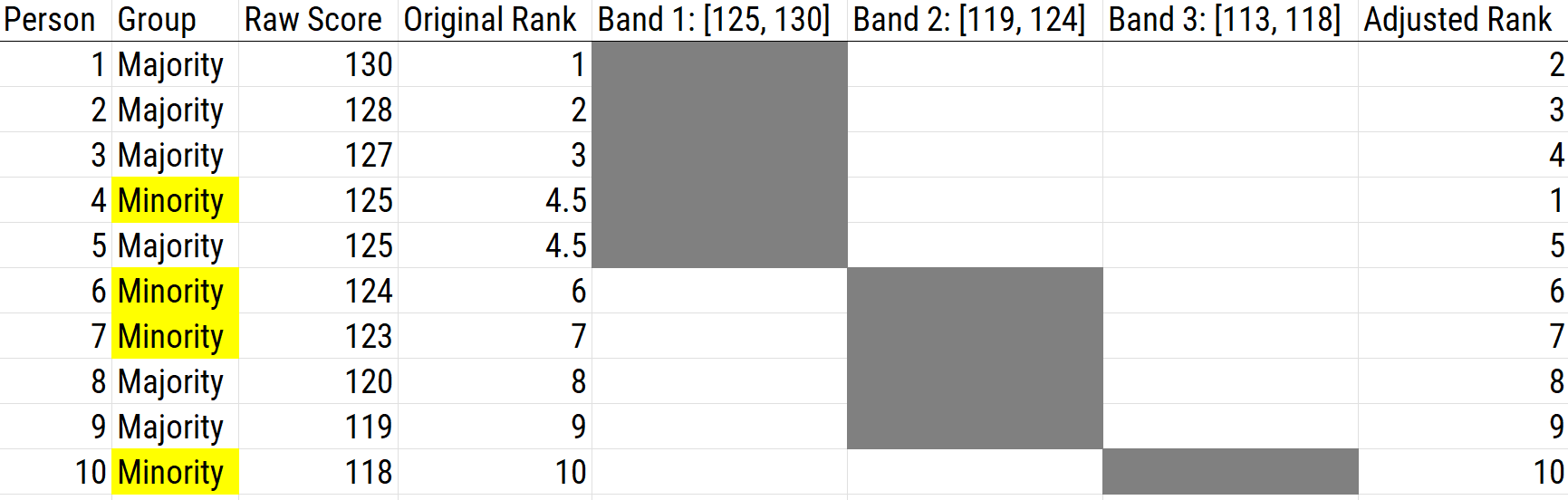

16.5.2.2.5 Banding

Banding uses a tier system that is based on the assumption that individuals within a specific score range are regarded as having equivalent scores. So that we do not over-estimate small score differences, scores within the same band are seen as equivalent—and the order of selection within the band can be modified depending on selection goals. The standard error of measurement (SEM) is used to estimate the precision (reliability) of the test scores, and it is used as the width of the band.

Consider an example: if a person received a score with confidence interval of 18–22, then scores between 18 to 22 are not necessarily different due to random fluctuation (measurement error). Therefore, scores in that range are considered the same, and we take a band of scores. However, banding by itself may not result in increased selection of lower scoring groups. The band provides a subsample of applicants so that we can use other criteria (other than the test) to select a candidate. Giving “minority preference” involves selecting members of minority group in a given band before selecting members of the majority group. An example of using banding as a score adjustment is depicted in Figure 16.16.

Figure 16.16: Using Banding as a Scoring Adjustment.

The problem with banding is that bands are set by the standard error of measurement: you can select the first group from the first band, but then whom do you select after the first band? There is no rationale where to “stop” the band because there are indistinguishable scores on the edges of each band to the next band. That is, 17 is indistinguishable from 18 (in terms of its confidence interval), 16 is indistinguishable from 17, and so on. Therefore, banding works okay for the top scores, but if you are going to hire a lot of candidates, it is a problem. A solution to this problem with banding is to use a sliding band, as described later.

16.5.2.2.6 Banding with Bonus Points

Banding is often used with bonus points to reduce the negative impact for minority groups. An example of using banding with bonus points as a score adjustment is depicted in Figure 16.17.

Figure 16.17: Using Banding With Bonus Points as a Scoring Adjustment.

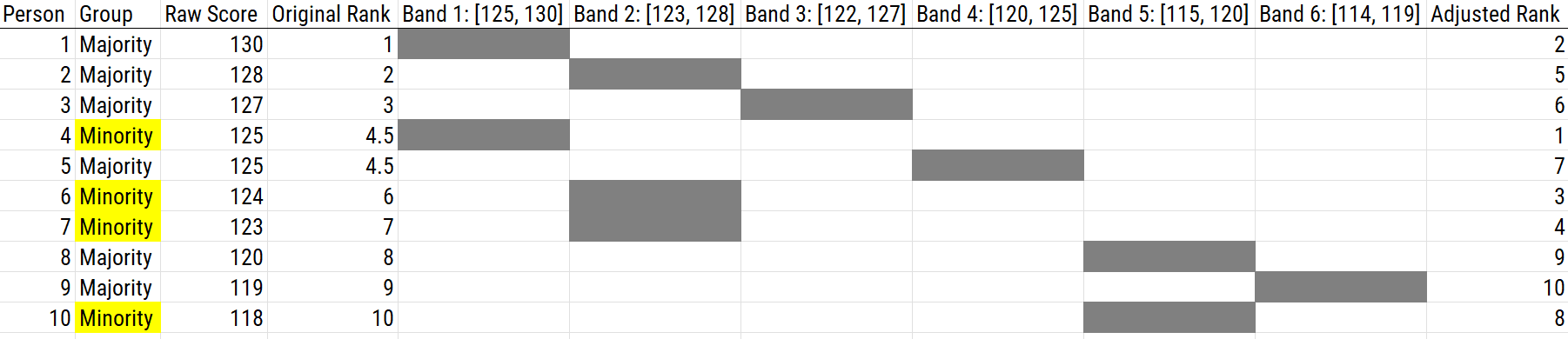

16.5.2.2.7 Sliding Band

Using a sliding band is a solution to the problem of which bands to use when using banding. Using a sliding band can help increase the number of minorities selected. Using the top band, you select all members of a minority group in the top band, then select members of the majority group with the top score of the band, then slide the band down (based on SEM), and repeat. You work your way down with bands though groups that are indistinguishable based on SEM, until getting a cell needed to select a relevant candidate.

For instance, if the top score is 22 and the SEM is 4 points, the first band would be: [18, 22]. Here is how you would proceed:

- Select the minority group members who have a score between 18 to 22.

- Select the majority group members who have a score of 22.

- Slide the band down based on the SEM to the next highest score: [17, 21].

- Select the minority group members who have a score between 17 to 21.

- Select the majority group members who have a score of 21.

- Slide the band down based on the SEM to the next highest score: [16, 20].

- …

- And so on

An example of using a sliding band as a score adjustment is depicted in Figure 16.18.

Figure 16.18: Using a Sliding Band as a Scoring Adjustment.

In sum, using a sliding band, scores that are not significantly lower than the highest remaining score should not be treated as different. Using a sliding band has the same effects on decisions as bonus points that are the width of the band. For example, if the SEM is 3, it has the same decisions as bonus points of 3; therefore, any scores within 3 of the highest score are now considered equal.

A sliding band is popular because of its scientific and statistical rationale. Also, it is more confusing and, therefore, preferred by some because it may be less likely to be sued. However, a sliding band may not always eliminate adverse impact. A sliding band has never been overturned in court (or at least, not yet).

16.5.2.2.8 Separate Tests

Using separate tests for each group is another option to reduce bias. For instance, you might use one test for the majority group and a different test for the minority group, making sure that each test is valid for the relevant group. Using separate tests is an extreme version of top-down selection and within-group norming. Using separate tests would be an option if a measure shows different slopes predictive bias.

One way of developing separate tests is to use empirical keying by group: different items for each group are selected based on each item’s association with the criterion in each group. Empirical keying is an example of dustbowl empiricism (i.e., relying on empiricism rather than theory). However, theory can also inform the item selection.

16.5.2.2.9 Item Elimination based on Group Differences

Items that show large group differences in scores can be eliminated from the test. If you remove enough items showing differences between groups, you can get similar scores between groups and can get equal group selection. A problem of item elimination based on group differences is that if you get rid of predictive items, then two goals, equal selection and predictive power, are not met. If you use this method, you often have to be willing for the measure to show decreases in predictive power.

16.5.2.3 Use of Score Adjustment

Score adjustment can be used in a number of different domains, including tests of aptitude and intelligence. Score adjustment also comes up in other areas. For example, the number of drinks it takes to be considered binge drinking differs between men (five) and women (four). Although the list of score adjustment options is long, they all really reduce to two ways:

Bonus points and within-group norming are the techniques that are most often used in the real world. These techniques differ in their degree of obscurity—i.e., confusion that is caused not for scientific reasons, but for social, political, and dissemination and implementation reasons. Often procedures that are hard to understand are preferred because it is hard to argue against, critique, or game the system. Basically, you have two options for score adjustment. One option is to adjust scores by raising scores in one group or lowering the criterion in one group. The second primary option is to renorm or change the scores. In sum, you can change the scores, or you can change the decisions you make based on the scores.

16.5.3 Other Ways to Correct for Bias

Because score adjustment is controversial, it is also important to consider other potential ways to correct for bias that do not involve score adjustment. Strategies other than score adjustment to correct for bias are described by Sackett et al. (2001).

16.5.3.1 Use Multiple Predictors

In general, high-stakes decisions should not be made based on the results from one test. So, for instance, do not make hiring decisions based just on aptitude assessments. For example, college admissions decisions are not made just based on SAT scores, but also one’s grades, personal statement, extracurricular activities, letters of recommendation, etc. Using multiple predictors works best when the predictors are not correlated with the assessment that has adverse impact, which is difficult to achieve.

There are larger majority–minority subgroup differences in verbal and cognitive ability tests than in noncognitive skills (e.g., motivation, personality, and interpersonal skills). So, it is important to include assessment of relevant noncognitive skills. Include as many relevant aspects of the construct as possible for content validity. For a job, consider as many factors as possible that are relevant for success, e.g., cognitive and noncognitive abilities.

16.5.3.2 Change the Criterion

Another option is to change the criterion so that the predictive validity of tests is less skewed. It may be that the selection instrument is not biased but the way in which we are thinking about selection procedures is biased. For example, for judging the quality of universities, there are many different criteria we could use. It could be valuable to examine the various criteria, and you might find what is driving adverse effects.

16.5.3.3 Remove Biased Items

Using item response theory or confirmatory factor analysis, you can identify items that function differently across groups (i.e., differential item functioning/DIF or measurement non-invariance). For instance, you can identify items that show different discrimination/factor loadings or difficulty/intercepts by group. You do not just want to remove items that show mean-level differences in scores (or different rates of endorsement) for one group than another, because there may be true group differences in their level on particular items. If an item is clearly invalid in one group but valid in another group, another option is to keep the item in one group, and to remove it in another group.

Be careful when removing items because removing items can lead to poorer content validity—i.e., items may no longer be a representative set of the content of the construct. Removing items also reduces a measure’s reliability and ability to detect individual differences (Hagquist, 2019; Hagquist & Andrich, 2017). DIF effects tend to be small and inconsistent; removing items showing DIF may not have a big impact.

16.5.3.4 Resolve Biased Items

Another option, for items identified that show differential item functioning using IRT or measurement non-invariance using CFA, is to resolve instead of remove items. Resolving items involves allowing an item to have a different discrimination/factor loading and/or difficulty/intercept parameter for each group. Allowing item parameters to differ across groups has a very small effect on reliability and person separation, so it can be preferable to removing items (Hagquist, 2019; Hagquist & Andrich, 2017).

16.5.3.5 Use Alternative Modes of Testing

Another option is to use alternative modes of testing. For example, you could use audio or video to present test items, rather than requiring a person to read the items, or write answers. Typical testing and computerized exams are oriented toward the upper-middle class, which is therefore a procedure problem! McClelland’s (1973) argument is that we need more real-life testing. Real-life testing could help address stereotype threats and the effects of learning disabilities. However, testing in different modalities could change the construct(s) being assessed.

16.5.3.6 Use Work Records

Using work records is based on McClelland’s (1973) argument to use more realistic and authentic assessments of job-relevant abilities. Evidence on the value of work records for personnel selection is mixed. In some cases, use of work records can actually increase adverse impact on under-represented groups because the primary group typically already has an idea of how to get into the relevant job or is already in the relevant job; therefore, they have a leg up. It would be acceptable to use work records if you trained people first and then tested, but no one spends the time to do this.

16.5.3.7 Increase Time Limit

Another option is to allot people more testing time, as long as doing so does not change the construct. Time limits often lead to greater measurement error because scores conflate pace and quality of work. Increasing time limits requires convincing stakeholders that job performance is typically not “how fast you do things” but “how well you do them”—i.e., that time does not correlate with outcome of interest. The utility of increasing time limits depends on the domain. In some domains, efficiency is crucial (e.g., medicine, pilot). Increasing time limits is not that effective in reducing group differences, and it may actually increase group differences.

16.5.3.8 Use Motivation Sets

Using motivation sets involves finding ways to increase testing motivation for minority groups. It is probably an error to think that a test assesses just aptitude; therefore, we should also consider an individual’s motivation to test. Thus, part of the score has to do with ability and some of the score has to do with motivation. You should try to maximize each examinee’s motivation, so that the person’s score on the measure better captures their true ability score. Motivation sets could include, for example, using more realistic test stimuli that are clearly applicable to the school or job requirements (i.e., that have face validity) to motivate all test takers.

16.5.3.9 Use Instructional Sets

Using instructional sets involves coaching and training. For instance, you could inform examinees about the test content, provide study materials, and recommend test-taking strategies. This could narrow the gap between groups because there is an implicit assumption that the primary group already has “light” training. Using instructional sets aims to reduce error variance due to test anxiety, unfamiliar test format, and poor test-taking skills.

Giving minority groups better access to test preparation is based on the assumption that group differences emerge because of different access to test preparation materials. This could theoretically help to systematically reduce test score differences across groups. Standardized tests like the SAT/GRE/LSAT/GMAT/MCAT, etc. embrace coaching/training. For instance, the organization ETS gives training materials for free. After training, scores on standardized tests show some but minimal improvement. In general, training yields some improvement on quantitative subscales but minimal change on verbal subscales. However, the improvements tend to apply across groups, and they do not seem to lessen group differences in scores.

16.6 Getting Started

16.6.1 Load Libraries

Code

library("petersenlab") #to install: install.packages("remotes"); remotes::install_github("DevPsyLab/petersenlab")

library("lavaan")

library("semTools")

library("semPlot")

library("mirt")

library("dmacs") #to install: install.packages("remotes"); remotes::install_github("ddueber/dmacs")

library("strucchange")

library("MOTE")

library("tidyverse")

library("here")

library("tinytex")16.6.2 Prepare Data

16.6.2.1 Load Data

cnlsy is a subset of a data set from the Children of the National Longitudinal Survey of Youth Survey (CNLSY).

The CNLSY is a publicly available longitudinal data set provided by the Bureau of Labor Statistics (https://perma.cc/EH38-HDRN).

The CNLSY data file for these examples is located on the book’s page of the Open Science Framework (https://osf.io/3pwza).

16.6.2.2 Simulate Data

For reproducibility, I set the seed below. Using the same seed will yield the same answer every time. There is nothing special about this particular seed.

Code

sampleSize <- 4000

set.seed(52242)

mydataBias <- data.frame(

ID = 1:sampleSize,

group = factor(c("male","female"),

levels = c("male","female")),

unbiasedPredictor1 = NA,

unbiasedPredictor2 = NA,

unbiasedPredictor3 = NA,

unbiasedCriterion1 = NA,

unbiasedCriterion2 = NA,

unbiasedCriterion3 = NA,

predictor = rnorm(sampleSize, mean = 100, sd = 15),

criterion1 = NA,

criterion2 = NA,

criterion3 = NA,

criterion4 = NA,

criterion5 = NA)

mydataBias$unbiasedPredictor1 <- rnorm(sampleSize, mean = 100, sd = 15)

mydataBias$unbiasedPredictor2[which(mydataBias$group == "male")] <-

rnorm(length(which(mydataBias$group == "male")), mean = 70, sd = 15)

mydataBias$unbiasedPredictor2[which(mydataBias$group == "female")] <-

rnorm(length(which(mydataBias$group == "female")), mean = 130, sd = 15)

mydataBias$unbiasedPredictor3[which(mydataBias$group == "male")] <-

rnorm(length(which(mydataBias$group == "male")), mean = 130, sd = 15)

mydataBias$unbiasedPredictor3[which(mydataBias$group == "female")] <-

rnorm(length(which(mydataBias$group == "female")), mean = 70, sd = 15)

mydataBias$unbiasedCriterion1 <- 1 * mydataBias$unbiasedPredictor1 +

rnorm(sampleSize, mean = 0, sd = 15)

mydataBias$unbiasedCriterion2 <- 1 * mydataBias$unbiasedPredictor2 +

rnorm(sampleSize, mean = 0, sd = 15)

mydataBias$unbiasedCriterion3 <- 1 * mydataBias$unbiasedPredictor3 +

rnorm(sampleSize, mean = 0, sd = 15)

mydataBias$criterion1[which(mydataBias$group == "male")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "male")] +

rnorm(length(which(mydataBias$group == "male")), mean = 0, sd = 5)

mydataBias$criterion1[which(mydataBias$group == "female")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "female")] +

rnorm(length(which(mydataBias$group == "female")), mean = 0, sd = 5)

mydataBias$criterion2[which(mydataBias$group == "male")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "male")] +

rnorm(length(which(mydataBias$group == "male")), mean = 10, sd = 5)

mydataBias$criterion2[which(mydataBias$group == "female")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "female")] +

rnorm(length(which(mydataBias$group == "female")), mean = 0, sd = 5)

mydataBias$criterion3[which(mydataBias$group == "male")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "male")] +

rnorm(length(which(mydataBias$group == "male")), mean = 0, sd = 5)

mydataBias$criterion3[which(mydataBias$group == "female")] <-

.3 * mydataBias$predictor[which(mydataBias$group == "female")] +

rnorm(length(which(mydataBias$group == "female")), mean = 0, sd = 5)

mydataBias$criterion4[which(mydataBias$group == "male")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "male")] +

rnorm(length(which(mydataBias$group == "male")), mean = 0, sd = 5)

mydataBias$criterion4[which(mydataBias$group == "female")] <-

.3 * mydataBias$predictor[which(mydataBias$group == "female")] +

rnorm(length(which(mydataBias$group == "female")), mean = 30, sd = 5)

mydataBias$criterion5[which(mydataBias$group == "male")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "male")] +

rnorm(length(which(mydataBias$group == "male")), mean = 0, sd = 30)

mydataBias$criterion5[which(mydataBias$group == "female")] <-

.7 * mydataBias$predictor[which(mydataBias$group == "female")] +

rnorm(length(which(mydataBias$group == "female")), mean = 0, sd = 5)16.6.2.3 Add Missing Data

Adding missing data to dataframes helps make examples more realistic to real-life data and helps you get in the habit of programming to account for missing data.

HolzingerSwineford1939 is a data set from the lavaan package (Rosseel et al., 2022) that contains mental ability test scores (x1–x9) for seventh- and eighth-grade children.

Code

varNames <- names(mydataBias)

dimensionsDf <- dim(mydataBias[,-c(1,2)])

unlistedDf <- unlist(mydataBias[,-c(1,2)])

unlistedDf[sample(

1:length(unlistedDf),

size = .01 * length(unlistedDf))] <- NA

mydataBias <- cbind(

mydataBias[,c("ID","group")],

as.data.frame(

matrix(

unlistedDf,

ncol = dimensionsDf[2])))

names(mydataBias) <- varNames

data("HolzingerSwineford1939")

varNames <- names(HolzingerSwineford1939)

dimensionsDf <- dim(HolzingerSwineford1939[,paste("x", 1:9, sep = "")])

unlistedDf <- unlist(HolzingerSwineford1939[,paste("x", 1:9, sep = "")])

unlistedDf[sample(

1:length(unlistedDf),

size = .01 * length(unlistedDf))] <- NA

HolzingerSwineford1939 <- cbind(

HolzingerSwineford1939[,1:6],

as.data.frame(matrix(

unlistedDf,

ncol = dimensionsDf[2])))

names(HolzingerSwineford1939) <- varNames16.7 Examples of Unbiased Tests (in Terms of Predictive Bias)

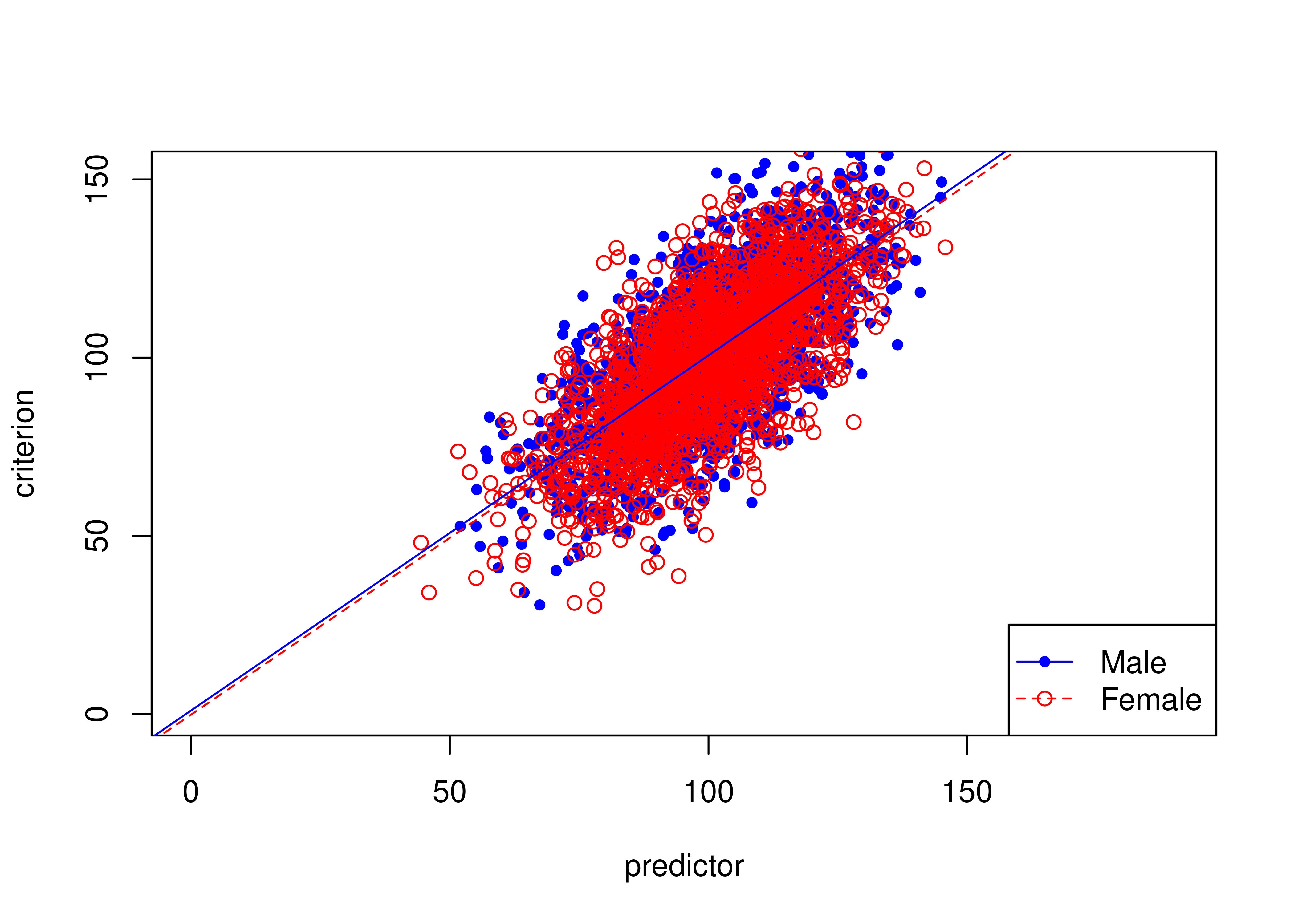

16.7.1 Unbiased test where males and females have equal means on predictor and criterion

Figure 16.19 depicts an example of an unbiased test where males and females have equal means on the predictor and criterion.

The test is unbiased because there are no significant differences in the regression lines (of predictor predicting criterion) between males and females.

Code

Call:

lm(formula = unbiasedCriterion1 ~ unbiasedPredictor1 + group +

unbiasedPredictor1:group, data = mydataBias)

Residuals:

Min 1Q Median 3Q Max

-54.623 -10.050 -0.025 10.373 66.811

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.930697 2.295058 0.406 0.685

unbiasedPredictor1 0.996976 0.022624 44.066 <2e-16 ***

groupfemale -1.165200 3.250481 -0.358 0.720

unbiasedPredictor1:groupfemale -0.004397 0.032115 -0.137 0.891

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 15.13 on 3913 degrees of freedom

(83 observations deleted due to missingness)

Multiple R-squared: 0.4963, Adjusted R-squared: 0.496

F-statistic: 1285 on 3 and 3913 DF, p-value: < 2.2e-16Code

plot(

unbiasedCriterion1 ~ unbiasedPredictor1,

data = mydataBias,

xlim = c(

0,

max(c(

mydataBias$unbiasedCriterion1,

mydataBias$unbiasedPredictor1),

na.rm = TRUE)),

ylim = c(

0,

max(c(

mydataBias$criterion1,

mydataBias$predictor),

na.rm = TRUE)),

type = "n",

xlab = "predictor",

ylab = "criterion")

points(

mydataBias$unbiasedPredictor1[which(mydataBias$group == "male")],

mydataBias$unbiasedCriterion1[which(mydataBias$group == "male")],

pch = 20,

col = "blue")

points(mydataBias$unbiasedPredictor1[which(mydataBias$group == "female")],

mydataBias$unbiasedCriterion1[which(mydataBias$group == "female")],

pch = 1,

col = "red")

abline(lm(

unbiasedCriterion1 ~ unbiasedPredictor1,

data = mydataBias[which(mydataBias$group == "male"),]),

lty = 1,

col = "blue")

abline(lm(

unbiasedCriterion1 ~ unbiasedPredictor1,

data = mydataBias[which(mydataBias$group == "female"),]),

lty = 2,

col = "red")

legend(

"bottomright",

c("Male","Female"),

lty = c(1,2),

pch = c(20,1),

col = c("blue","red"))

Figure 16.19: Unbiased Test Where Males and Females Have Equal Means on Predictor and Criterion.

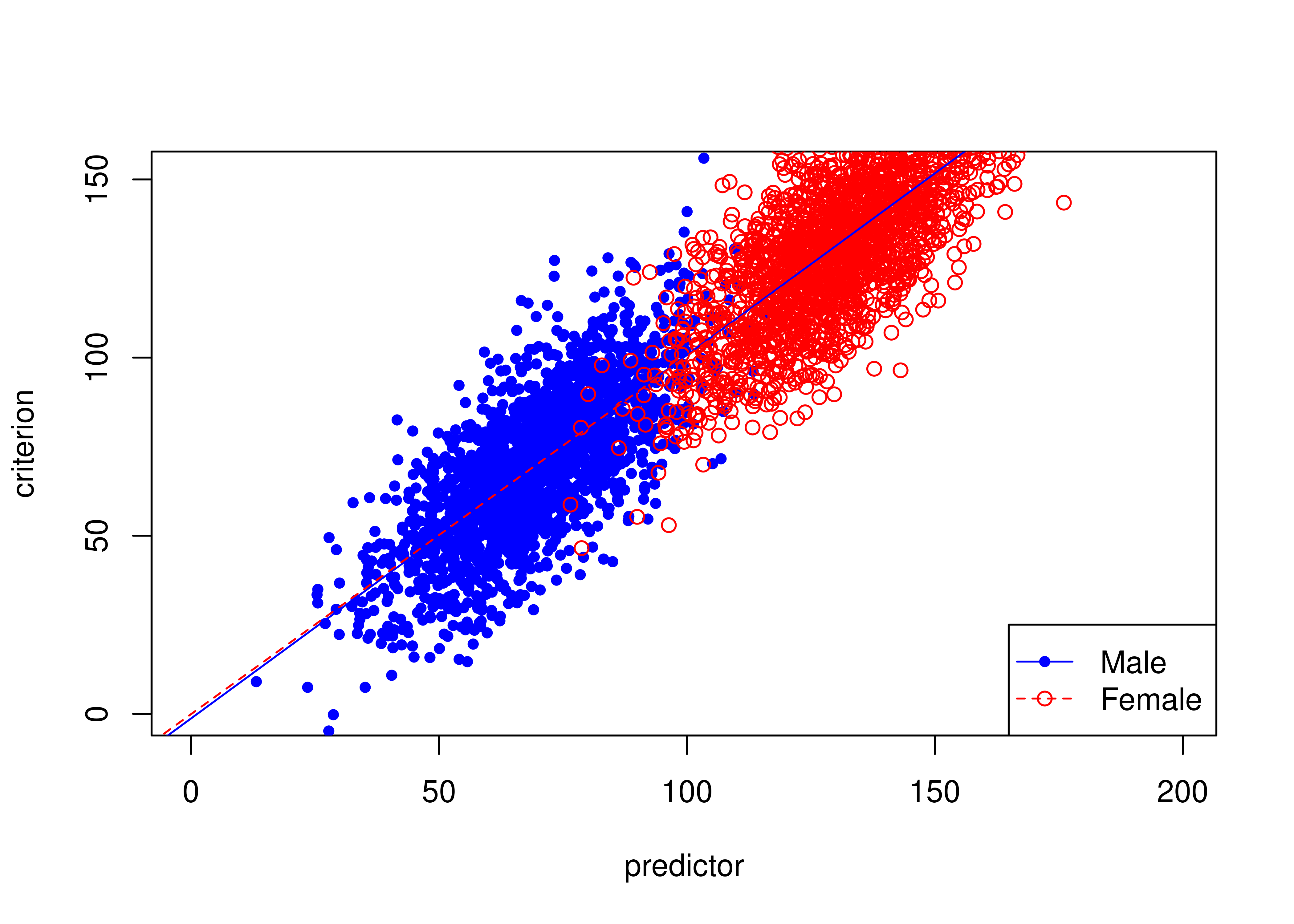

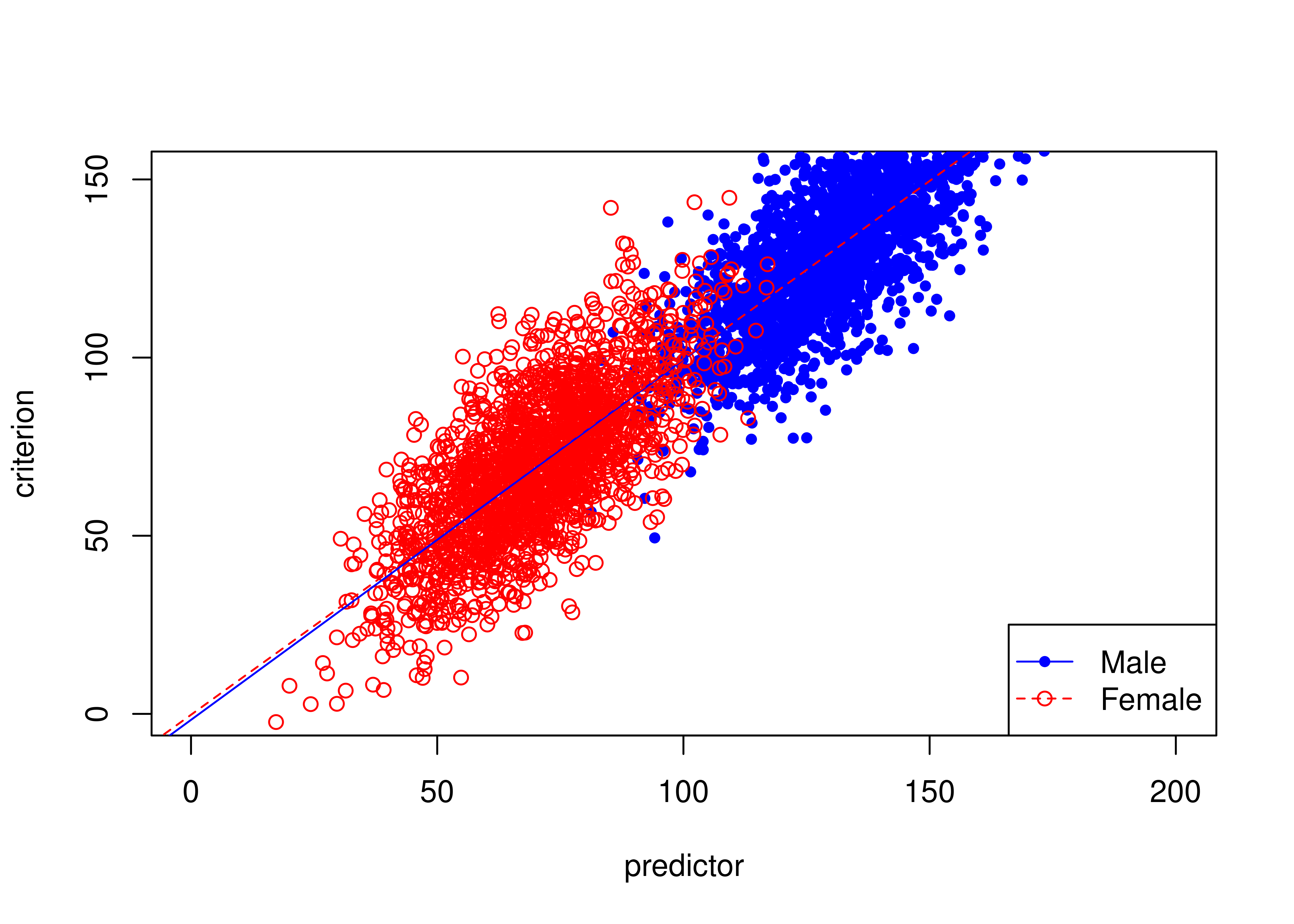

16.7.2 Unbiased test where females have higher means than males on predictor and criterion

Figure 16.20 depicts an example of an unbiased test where females have higher means than males on the predictor and criterion.

The test is unbiased because there are no differences in the regression lines (of predictor predicting criterion) between males and females.

Code

Call:

lm(formula = unbiasedCriterion2 ~ unbiasedPredictor2 + group +

unbiasedPredictor2:group, data = mydataBias)

Residuals:

Min 1Q Median 3Q Max

-47.302 -9.989 0.010 9.860 53.791

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1.29332 1.57773 -0.820 0.412

unbiasedPredictor2 1.02006 0.02218 46.000 <2e-16 ***

groupfemale 1.21294 3.28319 0.369 0.712

unbiasedPredictor2:groupfemale -0.01501 0.03125 -0.480 0.631

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 14.81 on 3919 degrees of freedom

(77 observations deleted due to missingness)

Multiple R-squared: 0.8412, Adjusted R-squared: 0.8411

F-statistic: 6921 on 3 and 3919 DF, p-value: < 2.2e-16Code

plot(

unbiasedCriterion2 ~ unbiasedPredictor2,

data = mydataBias,

xlim = c(

0,

max(c(

mydataBias$unbiasedCriterion2,

mydataBias$unbiasedPredictor2),

na.rm = TRUE)),

ylim = c(

0,

max(c(

mydataBias$criterion1,

mydataBias$predictor),

na.rm = TRUE)),

type = "n",

xlab = "predictor",

ylab = "criterion")

points(

mydataBias$unbiasedPredictor2[which(mydataBias$group == "male")],

mydataBias$unbiasedCriterion2[which(mydataBias$group == "male")],

pch = 20,

col = "blue")

points(

mydataBias$unbiasedPredictor2[which(mydataBias$group == "female")],

mydataBias$unbiasedCriterion2[which(mydataBias$group == "female")],

pch = 1,

col = "red")

abline(lm(

unbiasedCriterion2 ~ unbiasedPredictor2,

data = mydataBias[which(mydataBias$group == "male"),]),

lty = 1,

col = "blue")

abline(lm(

unbiasedCriterion2 ~ unbiasedPredictor2,

data = mydataBias[which(mydataBias$group == "female"),]),

lty = 2,

col = "red")

legend(

"bottomright",

c("Male","Female"),

lty = c(1,2),

pch = c(20,1),

col = c("blue","red"))

Figure 16.20: Unbiased Test Where Females Have Higher Means Than Males on Predictor and Criterion.

16.7.3 Unbiased test where males have higher means than females on predictor and criterion

Figure 16.21 depicts an example of an unbiased test where males have higher means than females on the predictor and criterion.

The test is unbiased because there are no differences in the regression lines (of predictor predicting criterion) between males and females.

Code

Call:

lm(formula = unbiasedCriterion3 ~ unbiasedPredictor3 + group +

unbiasedPredictor3:group, data = mydataBias)

Residuals:

Min 1Q Median 3Q Max

-48.613 -10.115 -0.068 9.598 57.126

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1.68352 2.84985 -0.591 0.555

unbiasedPredictor3 1.01072 0.02179 46.375 <2e-16 ***

groupfemale 1.42842 3.26227 0.438 0.662

unbiasedPredictor3:groupfemale -0.01187 0.03109 -0.382 0.703

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 14.82 on 3916 degrees of freedom

(80 observations deleted due to missingness)

Multiple R-squared: 0.8376, Adjusted R-squared: 0.8375

F-statistic: 6732 on 3 and 3916 DF, p-value: < 2.2e-16Code

plot(

unbiasedCriterion3 ~ unbiasedPredictor3,

data = mydataBias,

xlim = c(

0,

max(c(

mydataBias$unbiasedCriterion3,

mydataBias$unbiasedPredictor3),

na.rm = TRUE)),

ylim = c(0, max(c(

mydataBias$criterion1,

mydataBias$predictor),

na.rm = TRUE)),

type = "n",

xlab = "predictor",

ylab = "criterion")

points(

mydataBias$unbiasedPredictor3[which(mydataBias$group == "male")],

mydataBias$unbiasedCriterion3[which(mydataBias$group == "male")],

pch = 20,

col = "blue")

points(

mydataBias$unbiasedPredictor3[which(mydataBias$group == "female")],

mydataBias$unbiasedCriterion3[which(mydataBias$group == "female")],

pch = 1,

col = "red")

abline(lm(

unbiasedCriterion3 ~ unbiasedPredictor3,

data = mydataBias[which(mydataBias$group == "male"),]),

lty = 1,

col = "blue")

abline(lm(

unbiasedCriterion3 ~ unbiasedPredictor3,

data = mydataBias[which(mydataBias$group == "female"),]),

lty = 2,

col = "red")

legend(

"bottomright",

c("Male","Female"),

lty = c(1,2),

pch = c(20,1),

col = c("blue","red"))

Figure 16.21: Unbiased Test Where Males Have Higher Means Than Females on Predictor and Criterion.

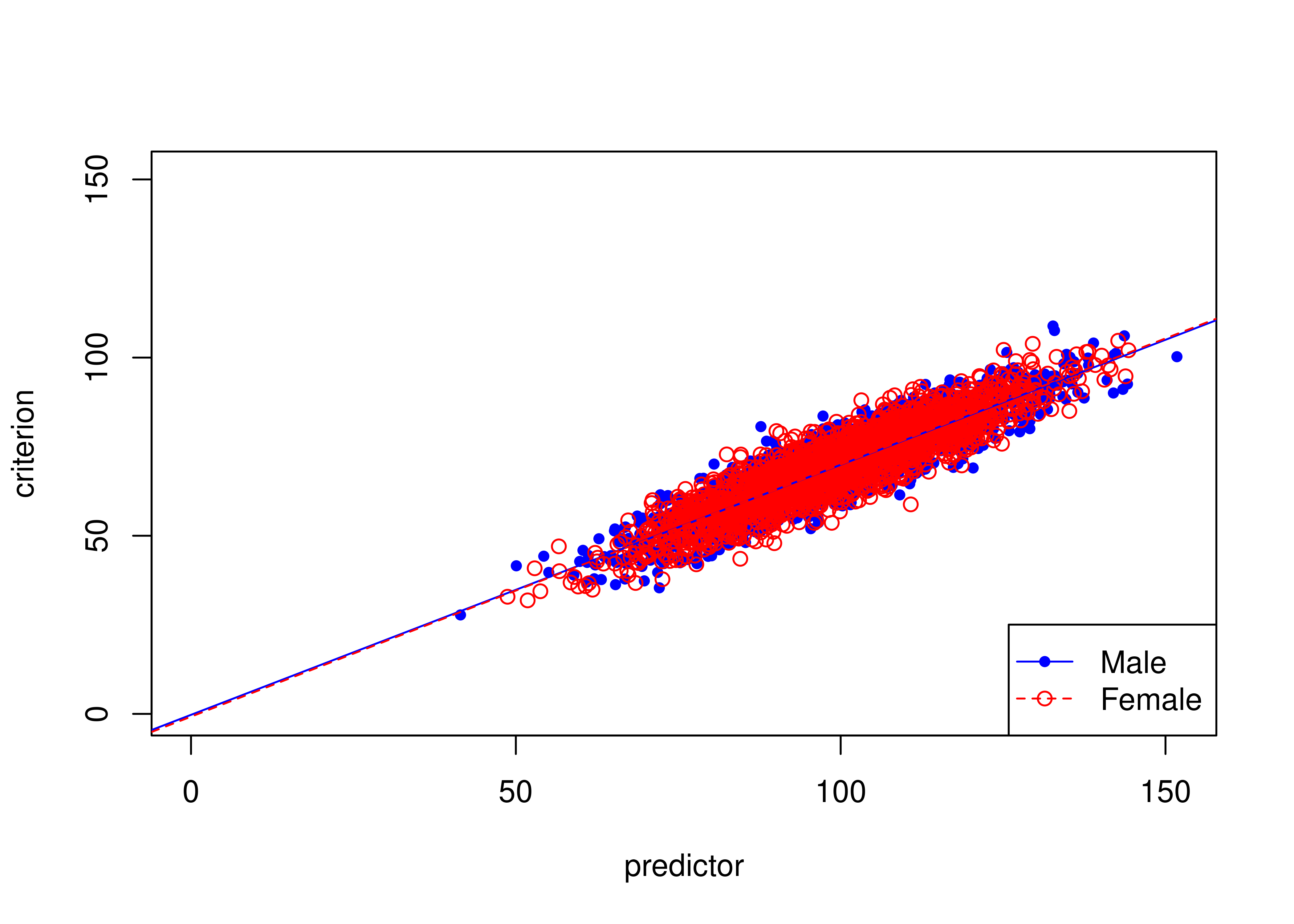

16.8 Predictive Bias: Different Regression Lines

16.8.1 Example of unbiased prediction (no differences in intercepts or slopes)

Figure 16.22 depicts an example of an unbiased test where males and females have equal means on the predictor and criterion.

The test is unbiased because there are no differences in the regression lines (of predictor predicting criterion1) between males and females.

Call:

lm(formula = criterion1 ~ predictor + group + predictor:group,

data = mydataBias)

Residuals:

Min 1Q Median 3Q Max

-18.831 -3.338 0.004 3.330 19.335

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.264357 0.747269 -0.354 0.724

predictor 0.701726 0.007370 95.207 <2e-16 ***

groupfemale -0.515860 1.059267 -0.487 0.626

predictor:groupfemale 0.006002 0.010476 0.573 0.567

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 4.952 on 3908 degrees of freedom

(88 observations deleted due to missingness)

Multiple R-squared: 0.8225, Adjusted R-squared: 0.8223

F-statistic: 6035 on 3 and 3908 DF, p-value: < 2.2e-16Code

plot(

criterion1 ~ predictor,

data = mydataBias,

xlim = c(

0,

max(c(

mydataBias$criterion1,

mydataBias$predictor),

na.rm = TRUE)),

ylim = c(

0,

max(c(

mydataBias$criterion1,

mydataBias$predictor),

na.rm = TRUE)),

type = "n",

xlab = "predictor",

ylab = "criterion")

points(

mydataBias$predictor[which(mydataBias$group == "male")],

mydataBias$criterion1[which(mydataBias$group == "male")],

pch = 20,

col = "blue")

points(

mydataBias$predictor[which(mydataBias$group == "female")],

mydataBias$criterion1[which(mydataBias$group == "female")],

pch = 1,

col = "red")

abline(lm(

criterion1 ~ predictor,

data = mydataBias[which(mydataBias$group == "male"),]),

lty = 1,

col = "blue")

abline(lm(

criterion1 ~ predictor,

data = mydataBias[which(mydataBias$group == "female"),]),

lty = 2,

col = "red")

legend(

"bottomright",

c("Male","Female"),

lty = c(1,2),

pch = c(20,1),

col = c("blue","red"))

Figure 16.22: Example of Unbiased Prediction (No Differences in Intercepts or Slopes Between Males and Females).

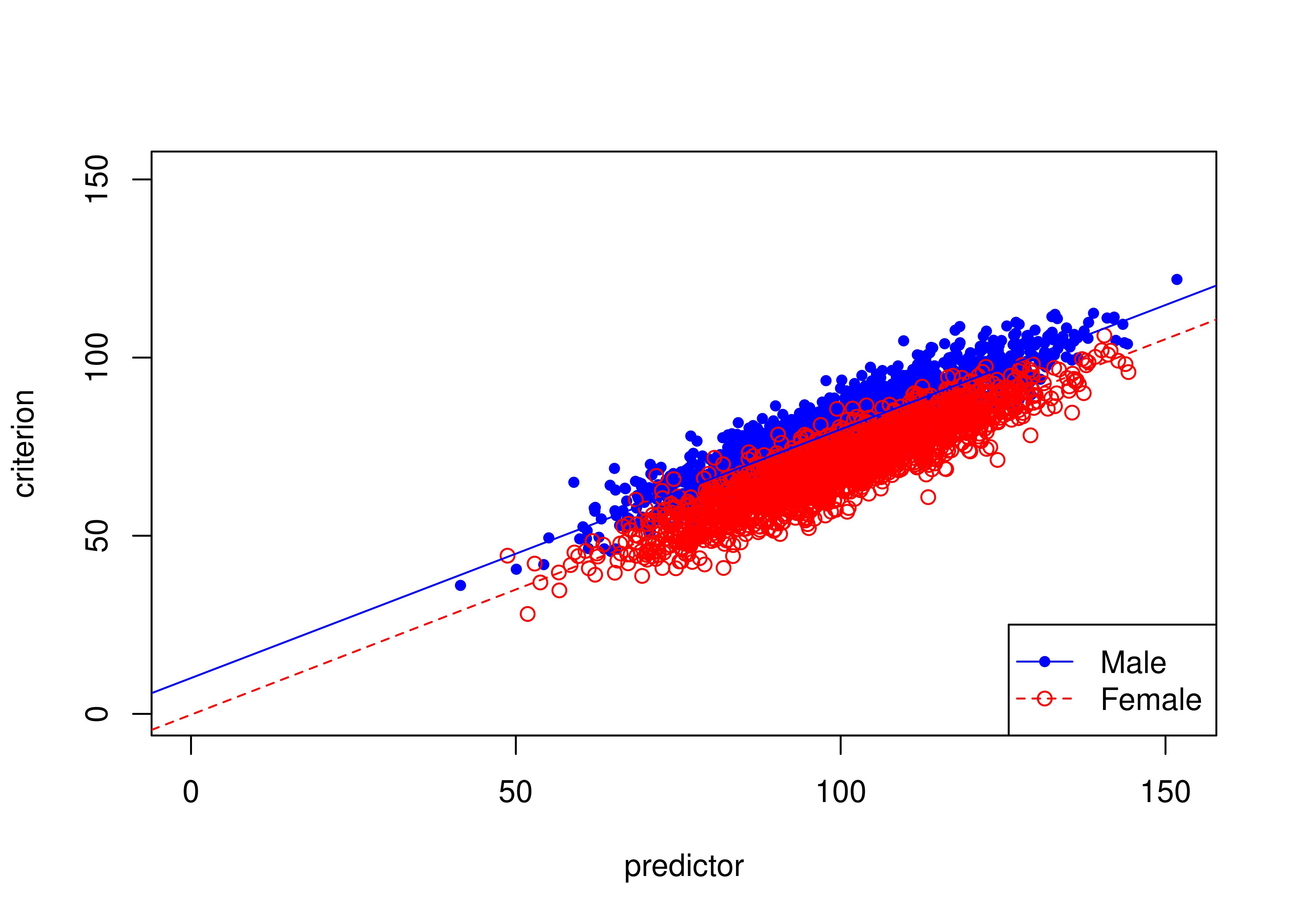

16.8.2 Example of intercept bias

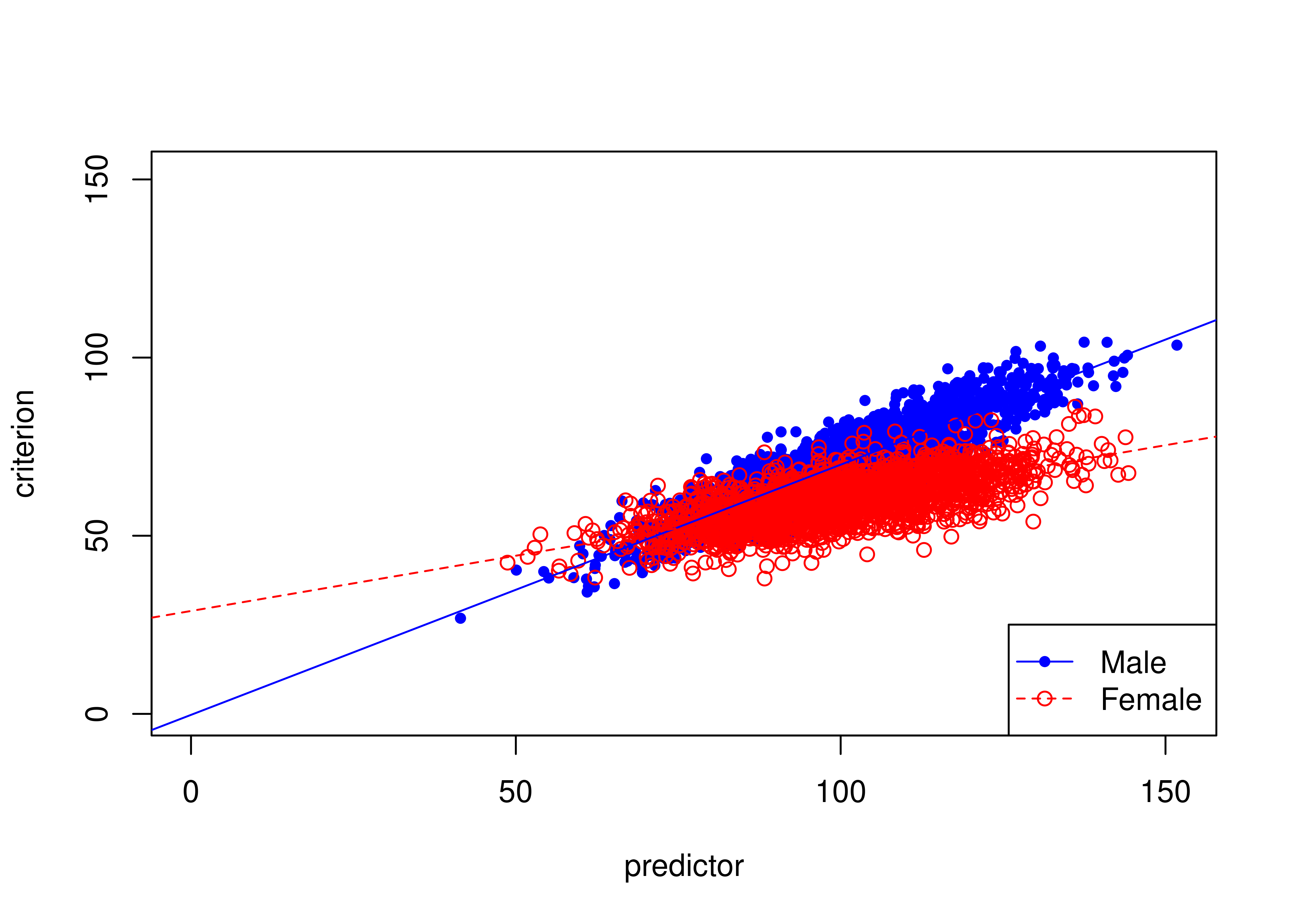

Figure 16.23 depicts an example of a biased test due to intercept bias.

There are differences in the intercepts of the regression lines (of predictor predicting criterion2) between males and females: males have a higher intercept than females.

That is, the same score on the predictor results in higher predictions for males than females.

Call:

lm(formula = criterion2 ~ predictor + group + predictor:group,

data = mydataBias)

Residuals:

Min 1Q Median 3Q Max

-18.6853 -3.4153 -0.0385 3.3979 18.0864

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 10.054048 0.760084 13.228 <2e-16 ***

predictor 0.697987 0.007499 93.075 <2e-16 ***

groupfemale -10.288893 1.074168 -9.578 <2e-16 ***

predictor:groupfemale 0.004748 0.010625 0.447 0.655

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 5.037 on 3913 degrees of freedom

(83 observations deleted due to missingness)

Multiple R-squared: 0.8452, Adjusted R-squared: 0.8451

F-statistic: 7124 on 3 and 3913 DF, p-value: < 2.2e-16Code

plot(

criterion2 ~ predictor,

data = mydataBias,

xlim = c(

0,

max(c(

mydataBias$criterion2,

mydataBias$predictor), na.rm = TRUE)),

ylim = c(

0,

max(c(

mydataBias$criterion2,

mydataBias$predictor),

na.rm = TRUE)),

type = "n",

xlab = "predictor",

ylab = "criterion")

points(

mydataBias$predictor[which(mydataBias$group == "male")],

mydataBias$criterion2[which(mydataBias$group == "male")],

pch = 20,

col = "blue")

points(

mydataBias$predictor[which(mydataBias$group == "female")],

mydataBias$criterion2[which(mydataBias$group == "female")],

pch = 1,

col = "red")

abline(lm(

criterion2 ~ predictor,

data = mydataBias[which(mydataBias$group == "male"),]),

lty = 1,

col = "blue")

abline(lm(

criterion2 ~ predictor,

data = mydataBias[which(mydataBias$group == "female"),]),

lty = 2,

col = "red")

legend(

"bottomright",

c("Male","Female"),

lty = c(1,2),

pch = c(20,1),

col = c("blue","red"))

Figure 16.23: Example of Intercept Bias in Prediction (Different Intercepts Between Males and Females).

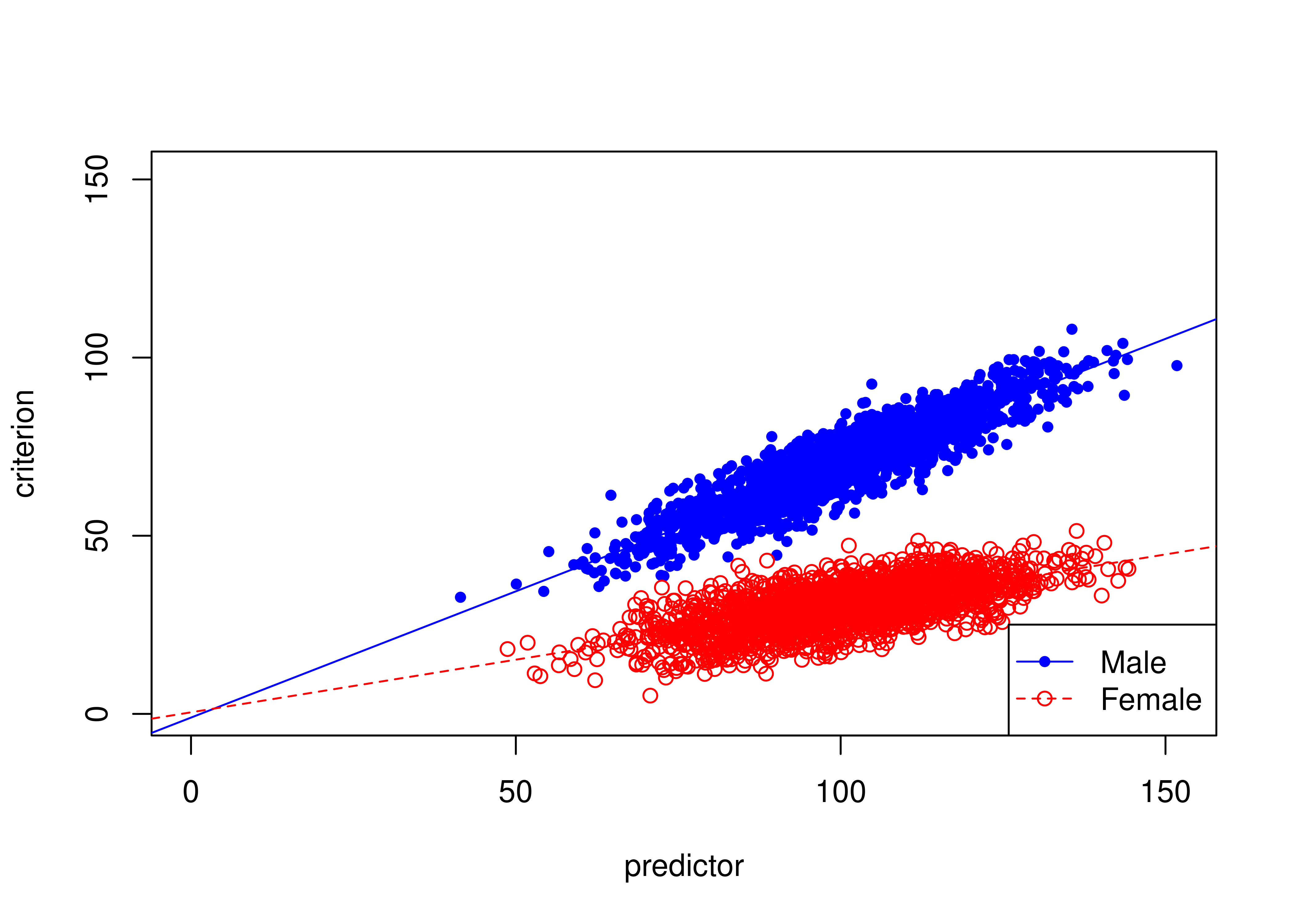

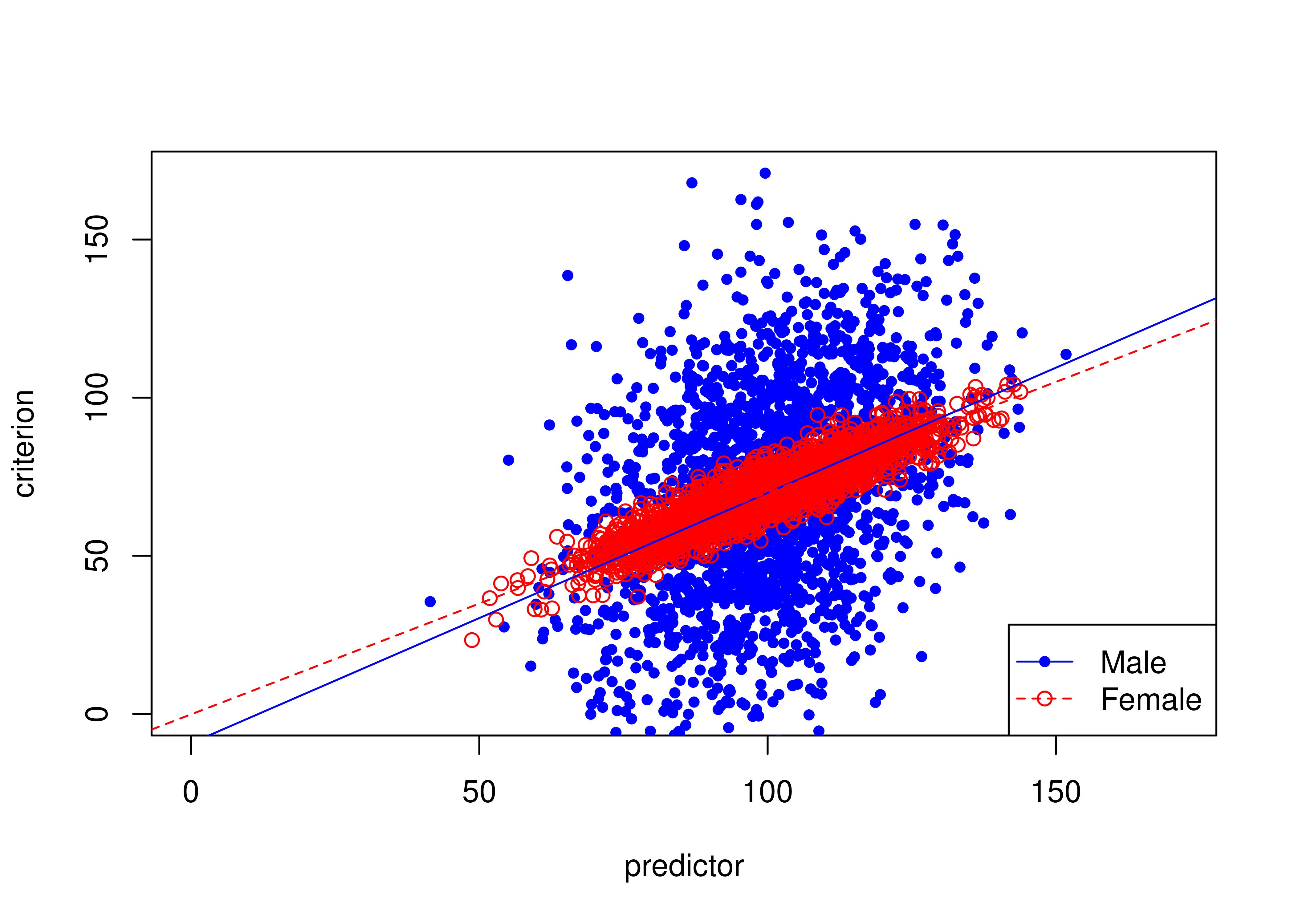

16.8.3 Example of slope bias

Figure 16.24 depicts an example of a biased test due to slope bias.

There are differences in the slopes of the regression lines (of predictor predicting criterion3) between males and females: males have a higher slope than females.