I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

Opening an issue or submitting a pull request on GitHub: https://github.com/isaactpetersen/Principles-Psychological-Assessment

Adding an annotation using hypothes.is.

To add an annotation, select some text and then click the

symbol on the pop-up menu.

To see the annotations of others, click the

symbol in the upper right-hand corner of the page.

Chapter 8 Item Response Theory

In the chapter on reliability, we introduced classical test theory. Classical test theory is a measurement theory of how test scores relate to a construct. Classical test theory provides a way to estimate the relation between the measure (or item) and the construct. For instance, with a classical test theory approach, to estimate the relation between an item and the construct, you would compute an item–total correlation. An item–total correlation is the correlation of an item with the total score on the measure (e.g., sum score). The item–total correlation approximates the relation between an item and the construct. However, the item–total correlation is a crude estimate of the relation between an item and the construct. And there are many other ways to characterize the relation between an item and a construct. One such way is with item response theory (IRT).

8.1 Overview of IRT

Unlike classical test theory, which is a measurement theory of how test scores relate to a construct, IRT is a measurement theory that describes how an item is related to a construct. For instance, given a particular person’s level on the construct, what is their chance of answering “TRUE” on a particular item?

IRT is an approach to latent variable modeling. In IRT, we estimate a person’s construct score (i.e., level on the construct) based on their item responses. The construct is estimated as a latent factor that represents the common variance among all items as in structural equation modeling or confirmatory factor analysis. The person’s level on the construct is called theta (\(\theta\)). When dealing with performance-based tests, theta is sometimes called “ability.”

8.1.1 Item Characteristic Curve

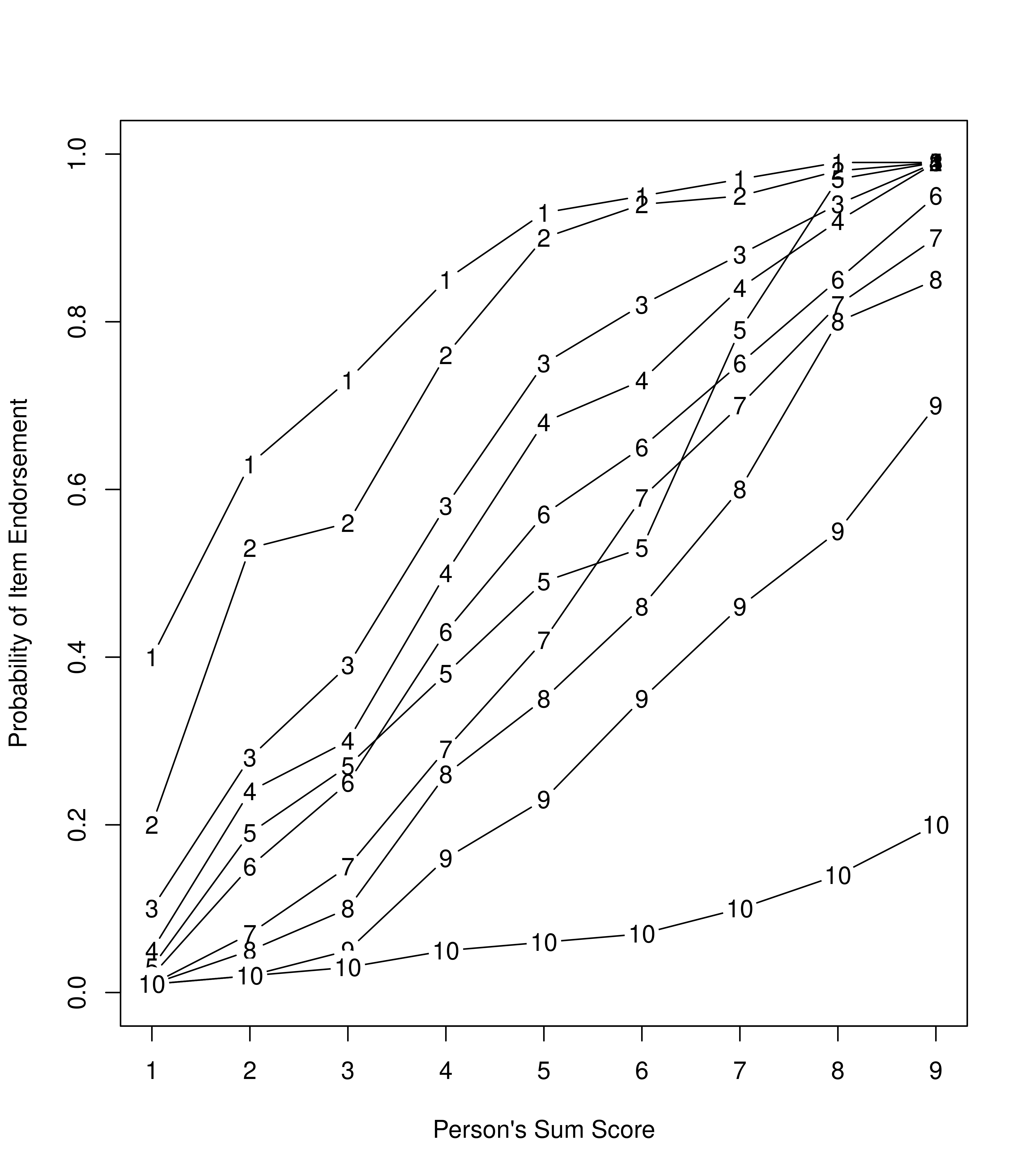

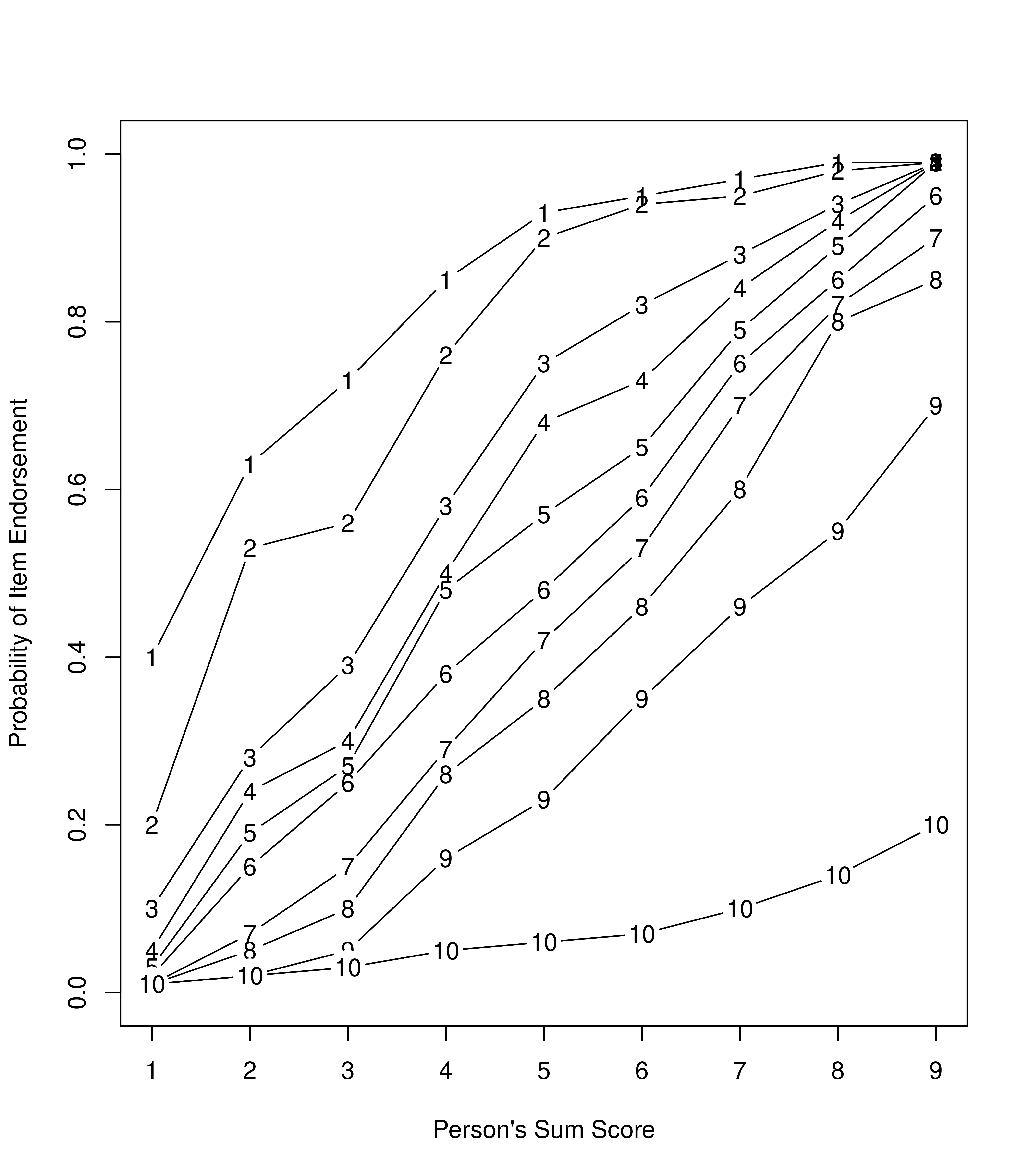

In IRT, we can plot an item characteristic curve (ICC). The ICC is a plot of the model-derived probability of a symptom being present (or a correct response) as a function of a person’s standing on a latent continuum. For instance, we can create empirical ICCs that can take any shape (see Figure 8.1).

Figure 8.1: Empirical Item Characteristic Curves of the Probability of Endorsement of a Given Item as a Function of the Person’s Sum Score.

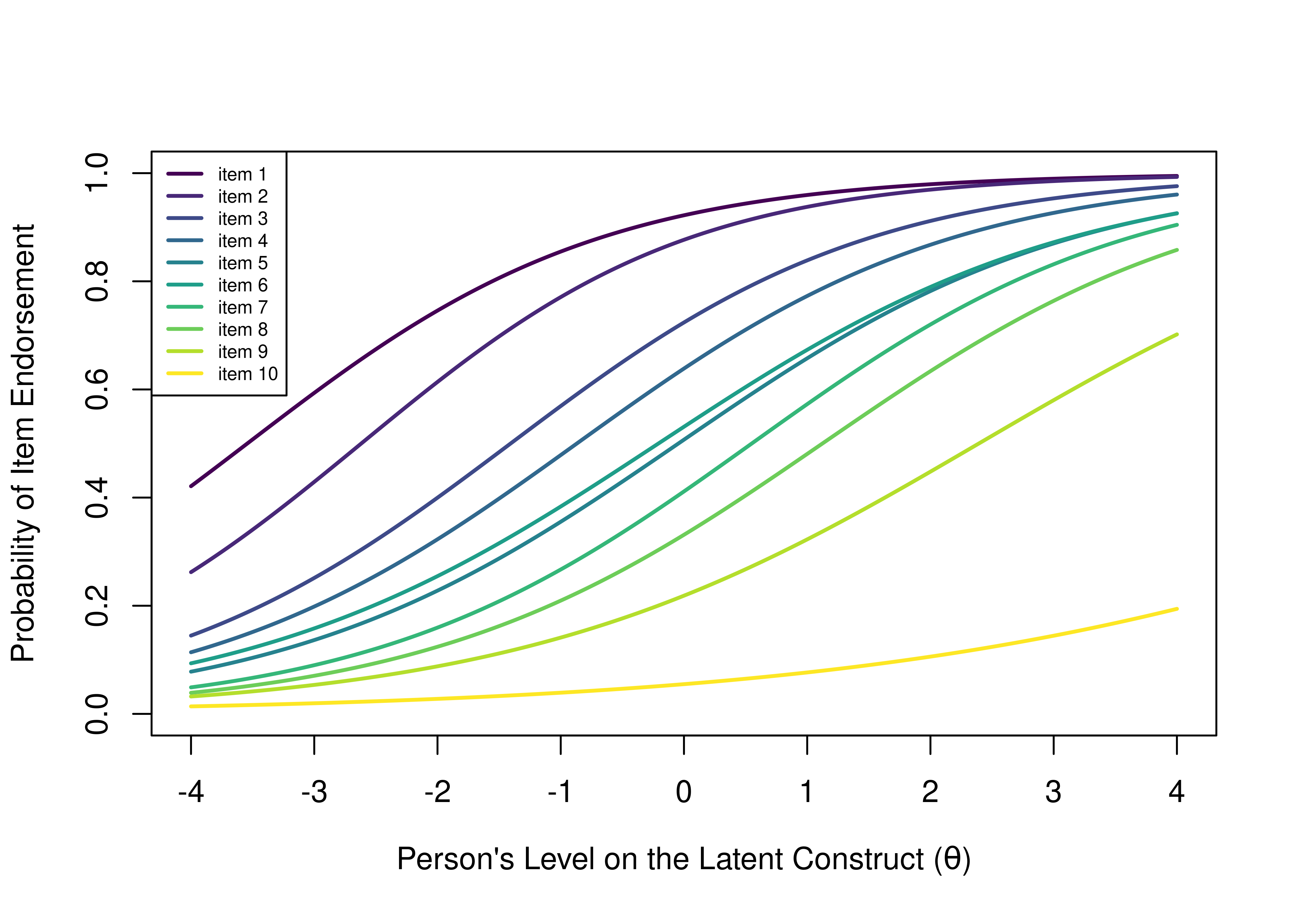

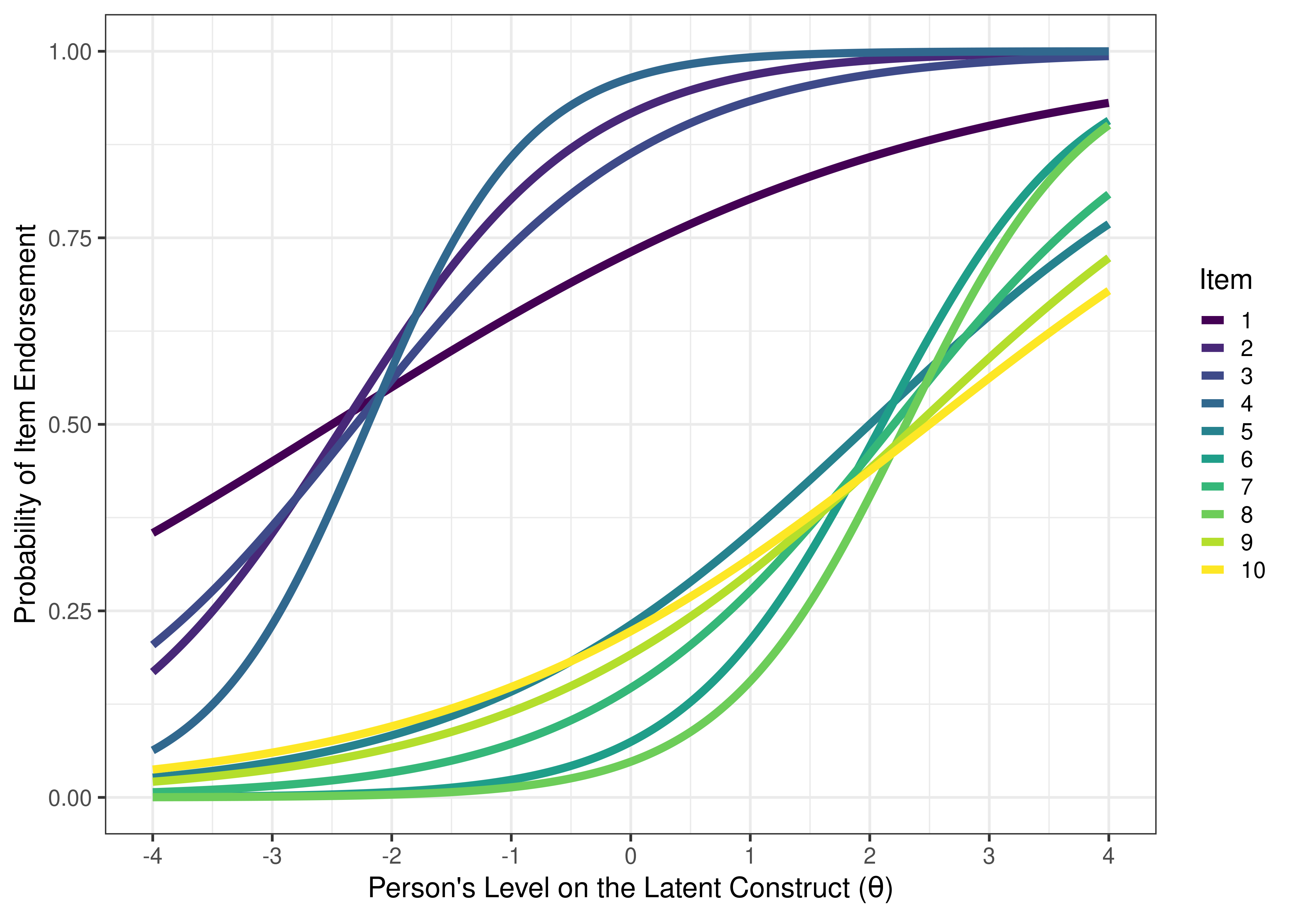

In a model-implied ICC, we fit a logistic (sigmoid) curve to each item’s probability of a symptom being present as a function of a person’s level on the latent construct. The model-implied ICCs for the same 10 items from Figure 8.1 are depicted in Figure 8.2.

Figure 8.2: Item Characteristic Curves of the Probability of Endorsement of a Given Item as a Function of the Person’s Level on the Latent Construct.

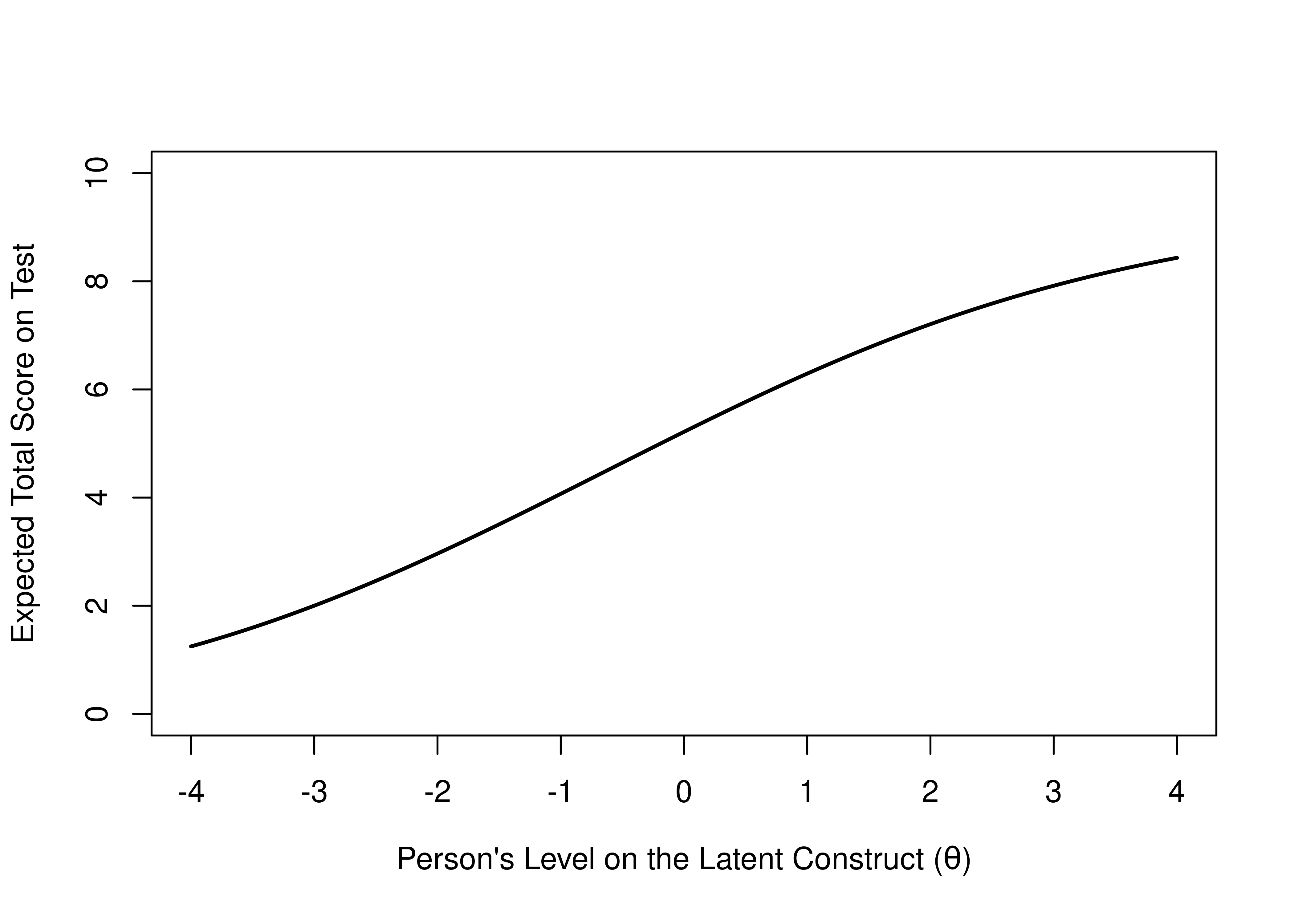

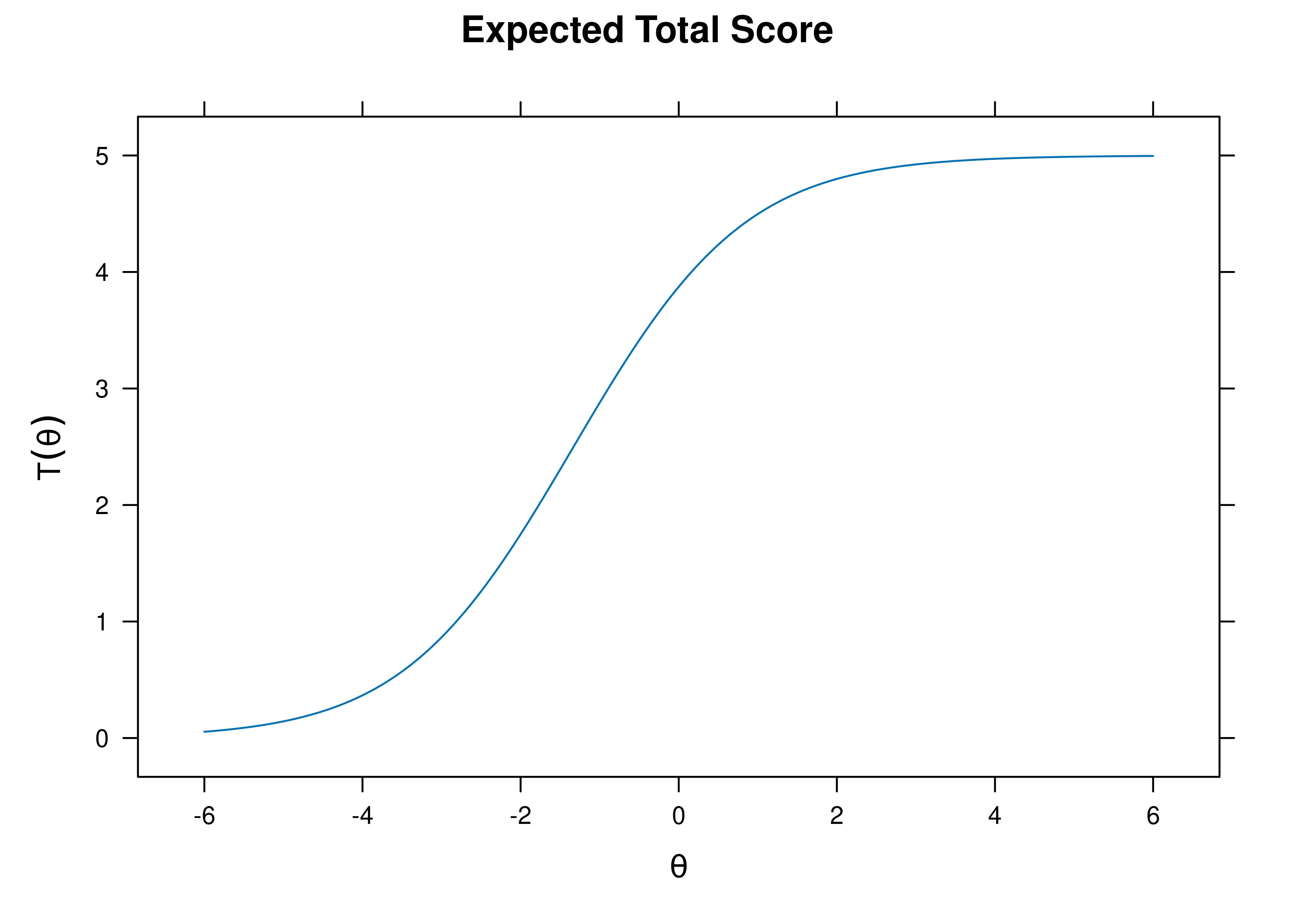

ICCs can be summed across items to get the test characteristic curve (TCC):

Figure 8.3: Test Characteristic Curve of the Expected Total Score on the Test as a Function of the Person’s Level on the Latent Construct.

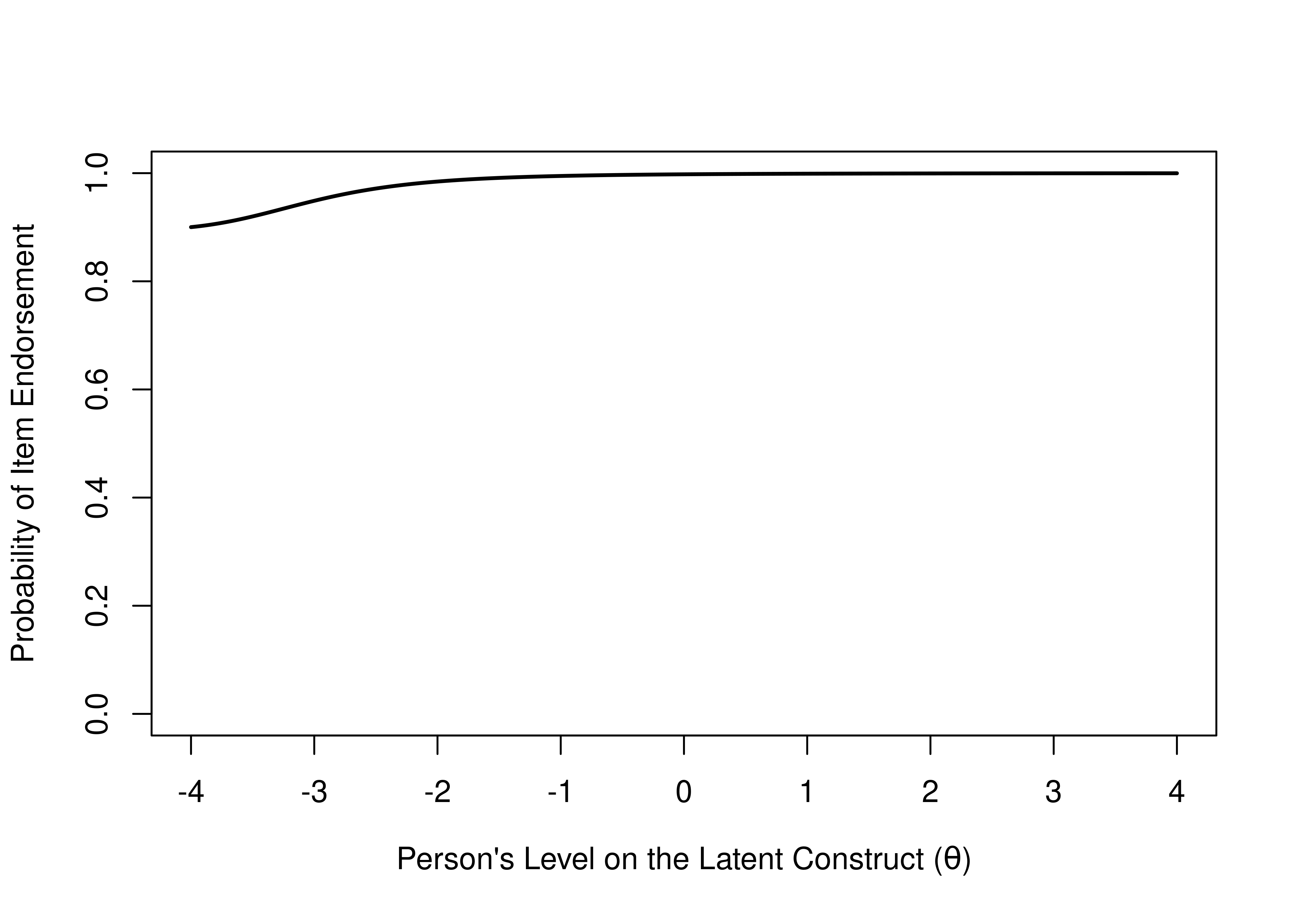

An ICC provides more information than an item–total correlation. Visually, we can see the utility of various items by looking at the items’ ICC plots. For instance, consider what might be a useless item for diagnostic purposes. For a particular item, among those with a low total score (level on the construct), 90% respond with “TRUE” to the item, whereas among everyone else, 100% respond with “TRUE” (see Figure 8.4). This item has a ceiling effect and provides only a little information about who would be considered above clinical threshold for a disorder. So, the item is not very clinically useful.

Figure 8.4: Item Characteristic Curve of an Item with a Ceiling Effect That is not Diagnostically Useful.

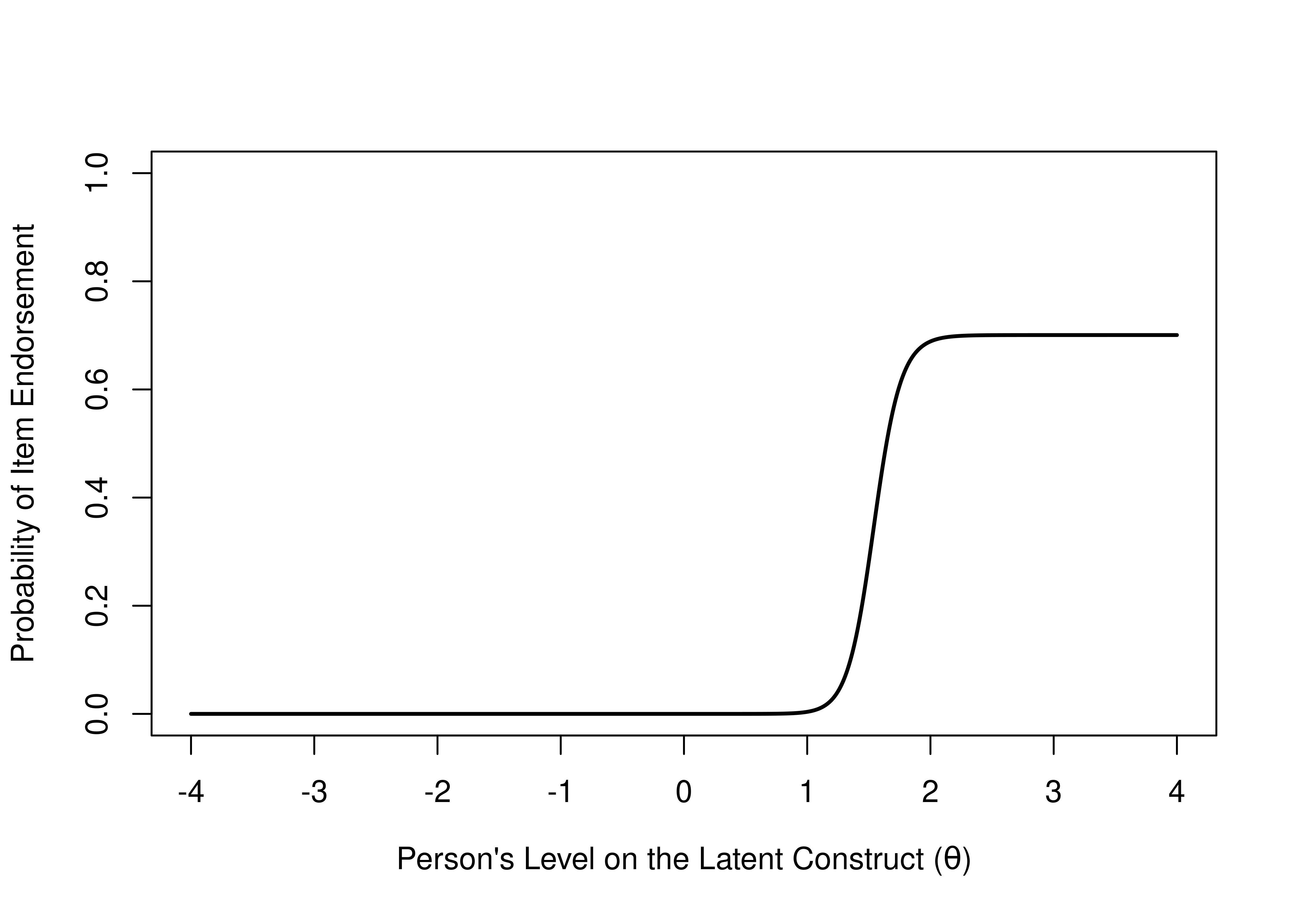

Now, consider a different item. For those with a low level on the construct, 0% respond with “TRUE”, so it has a floor effect and tells us nothing about the lower end of the construct. But for those with a higher level on the construct, 70% respond with true (see Figure 8.5). So, the item tells us something about the higher end of the distribution, and could be diagnostically useful. Thus, an ICC allows us to immediately tell the utility of items.

Figure 8.5: Item Characteristic Curve of an Item With a Floor Effect That is Diagnostically Useful.

8.1.2 Parameters

We can estimate up to four parameters in an IRT model and can glean up to four key pieces of information from an item’s ICC:

- Difficulty (severity)

- Discrimination

- Guessing

- Inattention/careless errors

8.1.2.1 Difficulty (Severity)

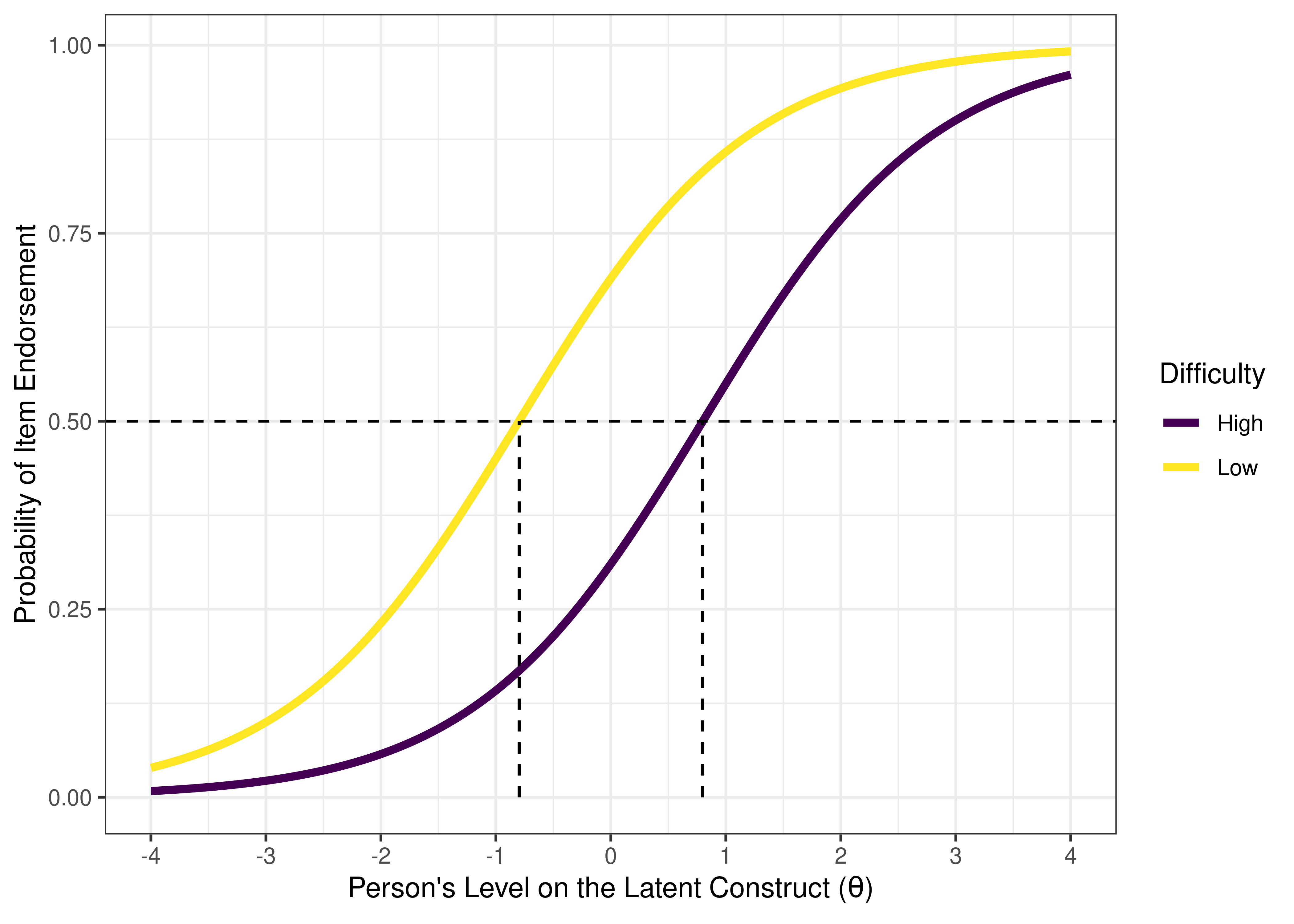

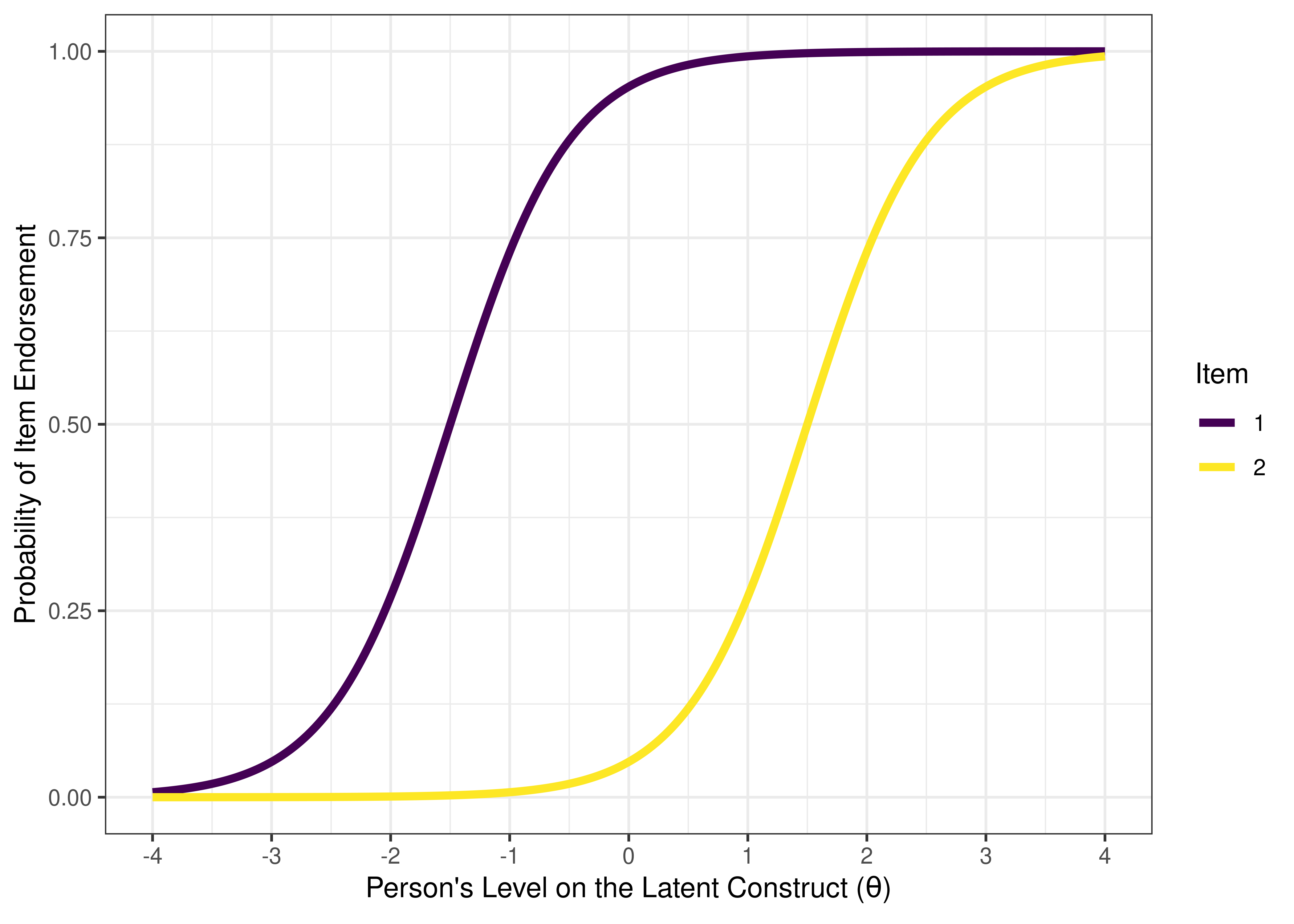

The item’s difficulty parameter is the item’s location on the latent construct. It is quantified by the intercept, i.e., the location on the x-axis of the inflection point of the ICC. In a 1- or 2-parameter model, the inflection point is where 50% of the sample endorses the item (or gets the item correct), that is, the point on the x-axis where the ICC crosses .5 probability on the y-axis (i.e., the level on the construct at which the probability of endorsing the item is equal to the probability of not endorsing the item). Item difficulty is similar to item means or intercepts in structural equation modeling or factor analysis. Some items are more useful at the higher levels of the construct, whereas other items are more useful at the lower levels of the construct. See Figure 8.6 for an example of an item with a low difficulty and an item with a high difficulty.

Figure 8.6: Item Characteristic Curves of an Item With Low Difficulty Versus High Difficulty. The dashed horizontal line indicates a probability of item endorsement of .50. The dashed vertical line is the item difficulty, i.e., the person’s level on the construct (the location on the x-axis) at the inflection point of the item characteristic curve. In a two-parameter logistic model, the inflection point corresponds to the probability of item endorsement is 50%. Thus, in a two-parameter logistic model, the difficulty of an item is the person’s level on the construct where the probability of endorsing the item is 50%.

When dealing with a measure of clinical symptoms (e.g., depression), the difficulty parameter is sometimes called severity, because symptoms that are endorsed less frequently tend to be more severe [e.g., suicidal behavior; Krueger et al. (2004)]. One way of thinking about the severity parameter of an item is: “How severe does your psychopathology have to be for half of people to endorse the symptom?”

When dealing with a measure of performance, aptitude, or intelligence, the parameter would be more likely to be called difficulty: “How high does your ability have to be for half of people to pass the item?” An item with a low difficulty would be considered easy, because even people with a low ability tend to pass the item. An item with a high difficulty would be considered difficult, because only people with a high ability tend to pass the item.

8.1.2.2 Discrimination

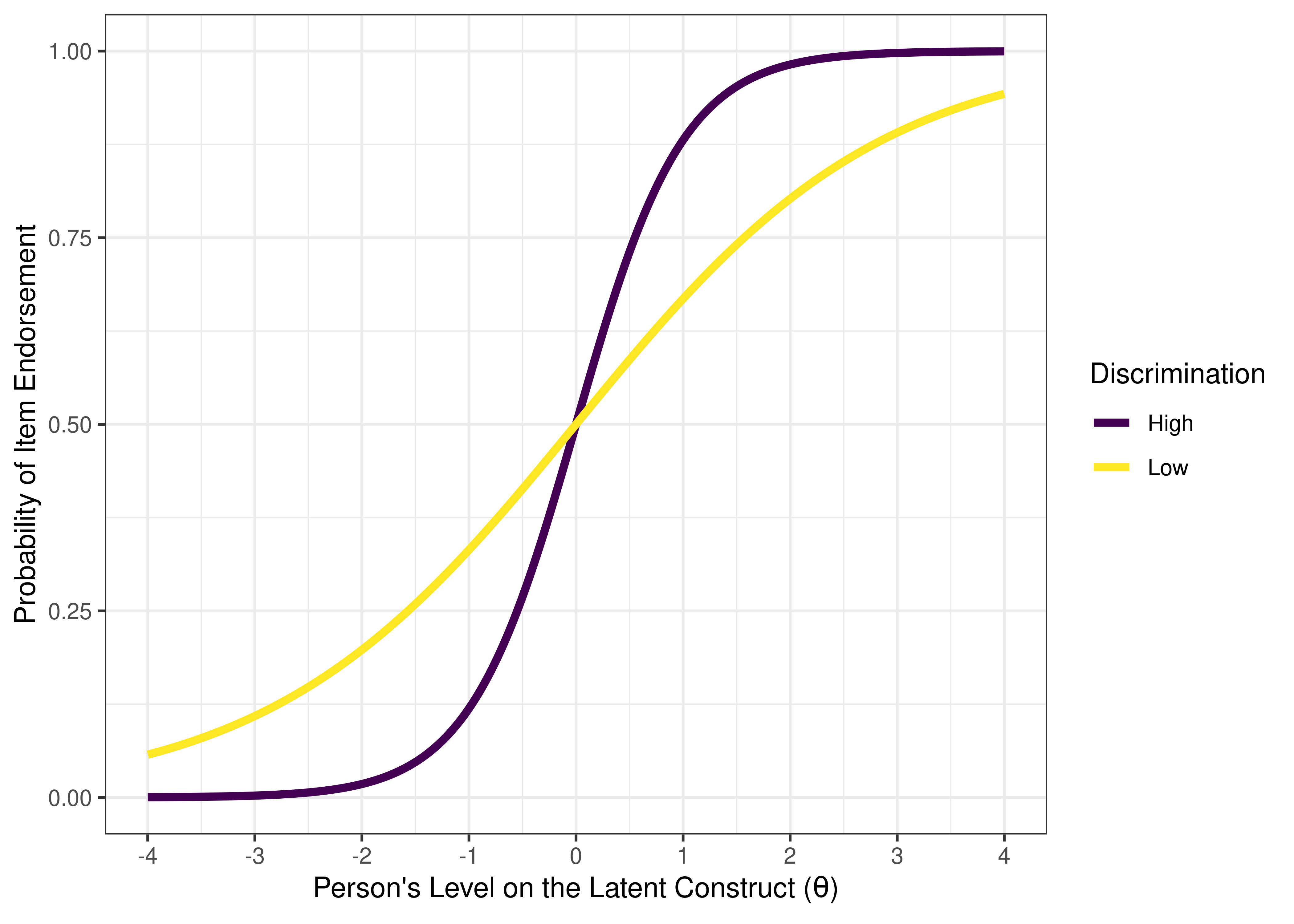

The item’s discrimination parameter is how well the item can distinguish between those who were higher versus lower on the construct, that is, how strongly the item is correlated with the construct (i.e., the latent factor). It is similar to the factor loading in structural equation modeling or factor analysis. It is quantified by the slope of the ICC, i.e., the steepness of the line at its steepest point. The slope reflects the inverse of how much range of construct levels it would take to flip 50/50 whether a person is likely to pass or fail an item.

Some items have ICCs that go up fast (have a steep slope). These items provide a fine distinction between people with lower versus higher levels on the construct and therefore have high discrimination. Some items go up gradually (less steep slope), so it provides less precision and information, and has a low discrimination. See Figure 8.7 for an example of an item with a low discrimination and an item with a high discrimination.

Figure 8.7: Item Characteristic Curves of an Item With Low Discrimination Versus High Discrimination. The discrimination of an item is the slope of the line at its inflection point.

8.1.2.3 Guessing

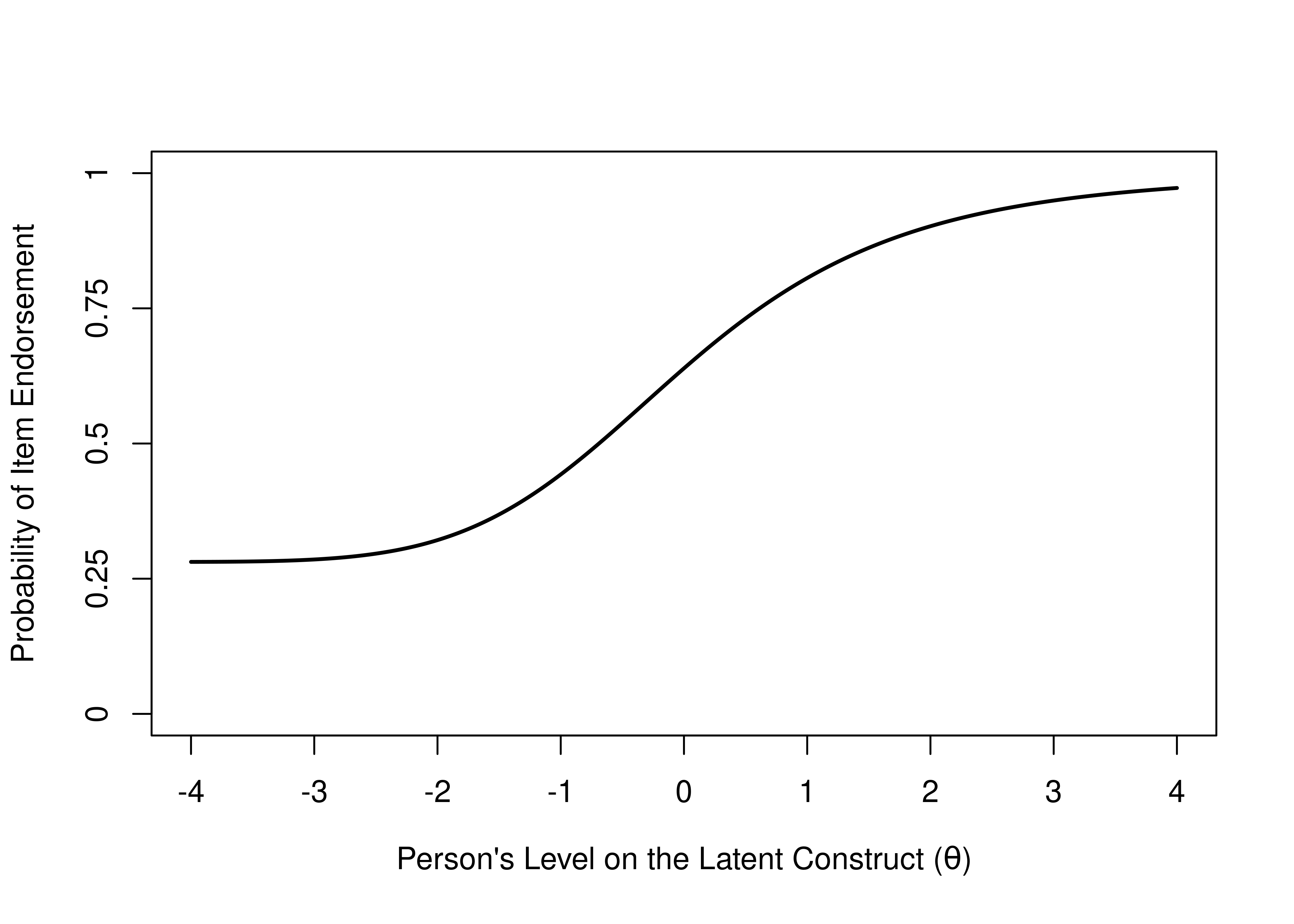

The item’s guessing parameter is reflected by the lower asymptote of the ICC. If the item has a lower asymptote above zero, it suggests that the probability of getting the item correct (or endorsing the item) never reaches zero, for any level of the construct. On an educational test, this could correspond to the person’s likelihood of being able to answer the item correctly by chance just by guessing. For example, for a 4-option multiple choice test, a respondent would be expected to get a given item correct 25% of the time just by guessing. See Figure 8.8 for an example of an item from a true/false exam and Figure 8.9 for an example of an item from a 4-option multiple choice exam.

Figure 8.8: Item Characteristic Curve of an Item from a True/False Exam, There Test Takers Get the Item Correct at Least 50% of the Time.

Figure 8.9: Item Characteristic Curve of an Item From a 4-Option Multiple Choice Exam, Where Test Takers Get the Item Correct at Least 25% of the Time.

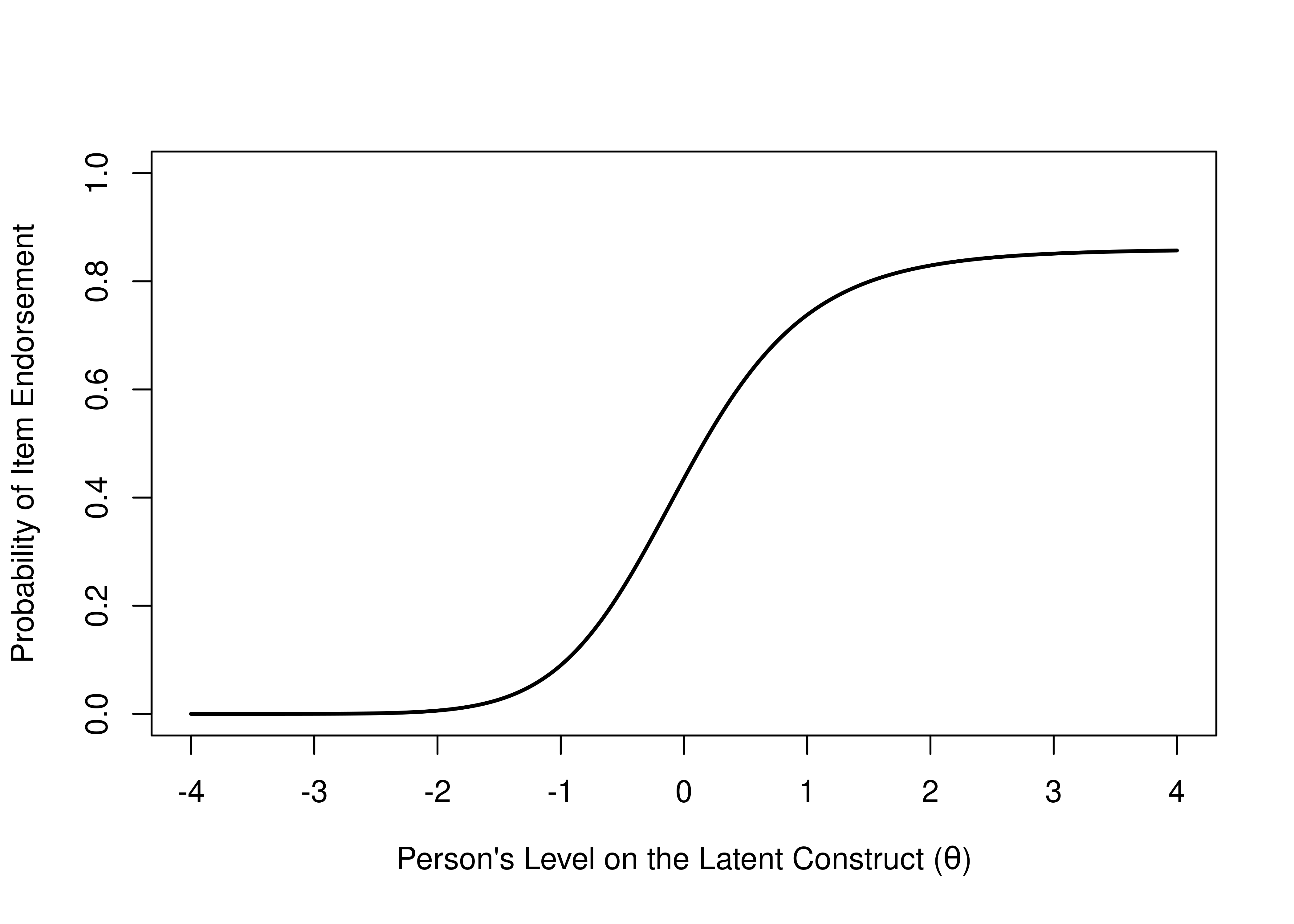

8.1.2.4 Inattention/Careless Errors

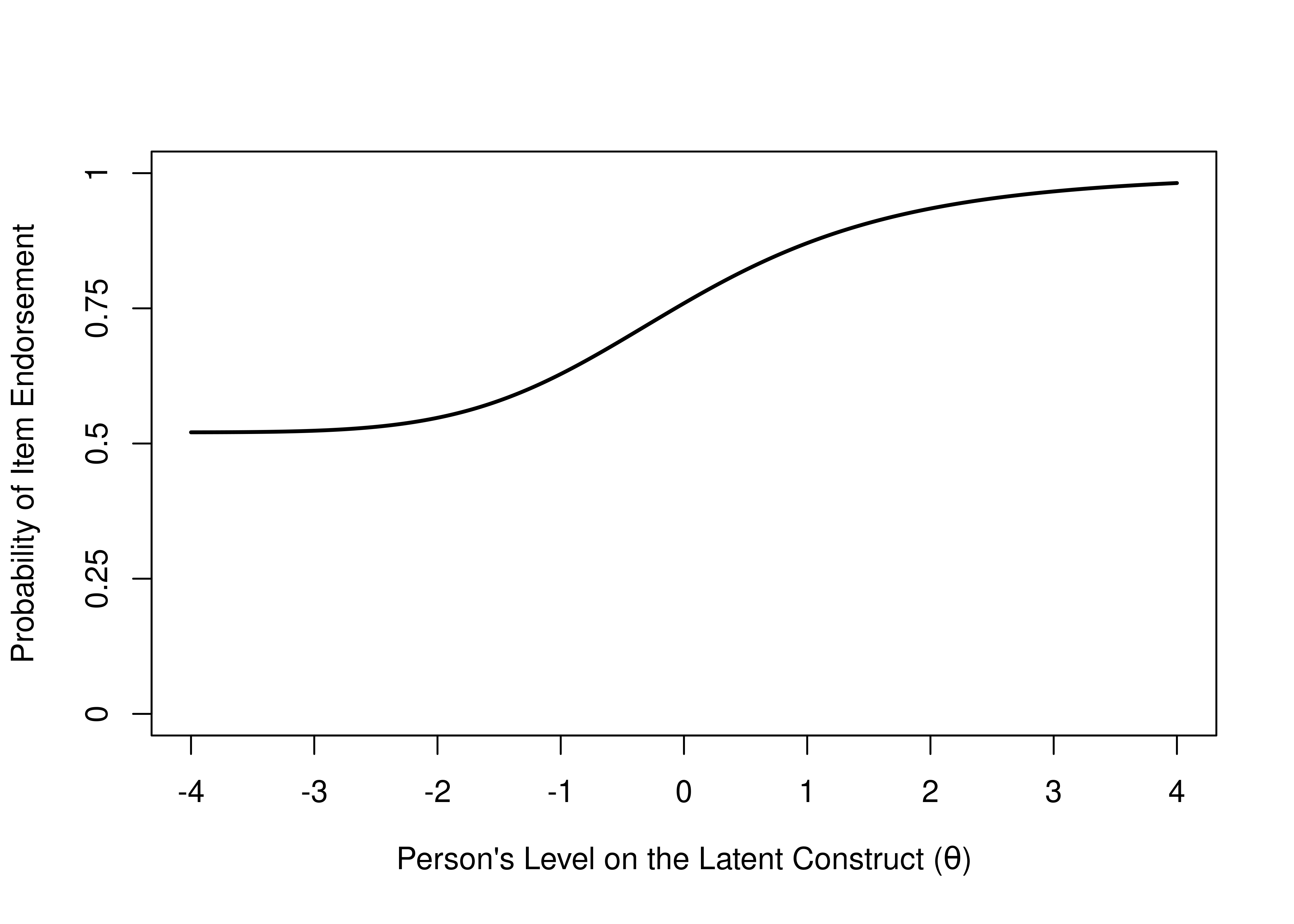

The item’s inattention (or careless error) parameter is the reflected by the upper asymptote of the ICC. If the item has an upper asymptote below one, it suggests that the probability of getting the item correct (or endorsing the item) never reaches one, for any level on the construct. See Figure 8.10 for an example of an item whose probability of endorsement (or getting it correct) exceeds .85.

Figure 8.10: Item Characteristic Curve of an Item Where the Probability of Getting an Item Correct Never Exceeds .85.

8.1.3 Models

IRT models can be fit that estimate one or more of these four item parameters.

8.1.3.1 1-Parameter and Rasch models

A Rasch model estimates the item difficulty parameter and holds everything else fixed across items. It fixes the item discrimination to be one for each item. In the Rasch model, the probability that a person \(j\) with a level on the construct of \(\theta\) gets a score of one (instead of zero) on item \(i\), based on the difficulty (\(b\)) of the item, is estimated using Equation (8.1):

\[\begin{equation} P(X = 1|\theta_j, b_i) = \frac{e^{\theta_j - b_i}}{1 + e^{\theta_j - b_i}} \tag{8.1} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the fourPL() function that estimates the probability of item endorsement as function of the item characteristics from the Rasch model and the person’s level on the construct (theta).

To estimate the probability of endorsement from the Rasch model, specify \(b\) and \(\theta\), while keeping the defaults for the other parameters.

Code

Code

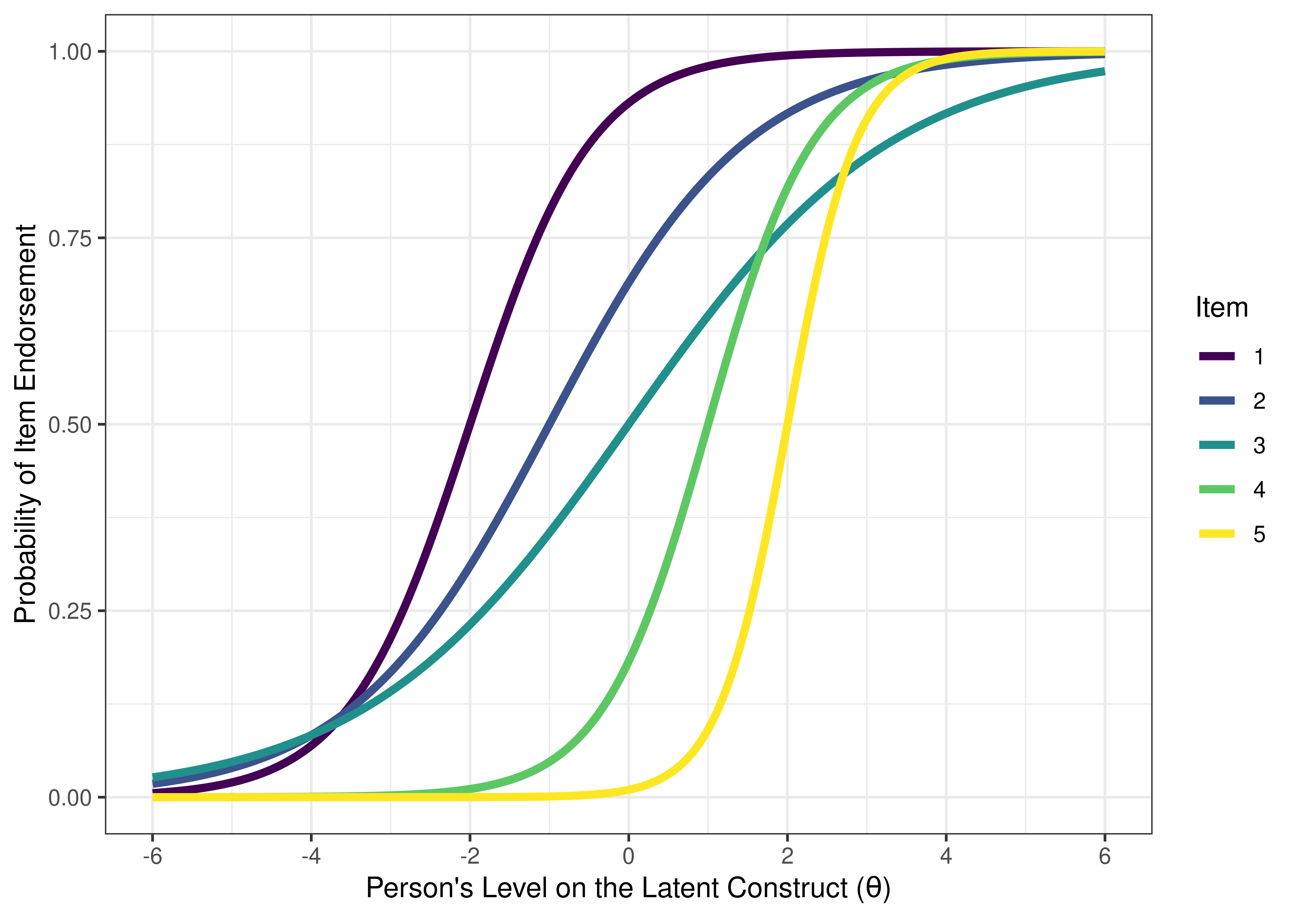

[1] 0.2689414A one-parameter logistic (1-PL) IRT model, similar to a Rasch model, estimates the item difficulty parameter, and holds everything else fixed across items (see Figure 8.11). The one-parameter logistic model holds the item discrimination fixed across items, but does not fix it to one, unlike the Rasch model.

In the one-parameter logistic model, the probability that a person \(j\) with a level on the construct of \(\theta\) gets a score of one (instead of zero) on item \(i\), based on the difficulty (\(b\)) of the item and the items’ (fixed) discrimination (\(a\)), is estimated using Equation (8.2):

\[\begin{equation} P(X = 1|\theta_j, b_i, a) = \frac{e^{a(\theta_j - b_i)}}{1 + e^{a(\theta_j - b_i)}} \tag{8.2} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the fourPL() function that estimates the probability of item endorsement as function of the item characteristics from the one-parameter logistic model and the person’s level on the construct (theta).

To estimate the probability of endorsement from the one-parameter logistic model, specify \(a\), \(b\), and \(\theta\), while keeping the defaults for the other parameters.

Rasch and one-parameter logistic models are common and are the easiest to fit. However, they make fairly strict assumptions. They assume that items have the same discrimination.

Figure 8.11: One-Parameter Logistic Model in Item Response Theory.

A one-parameter logistic model is only valid if there is not crossing of lines in empirical ICCs (see Figure 8.12).

Figure 8.12: Empirical Item Characteristic Curves of the Probability of Endorsement of a Given Item as a Function of the Person’s Sum Score. The empirical item characteristic curves of these items do not cross each other.

8.1.3.2 2-Parameter

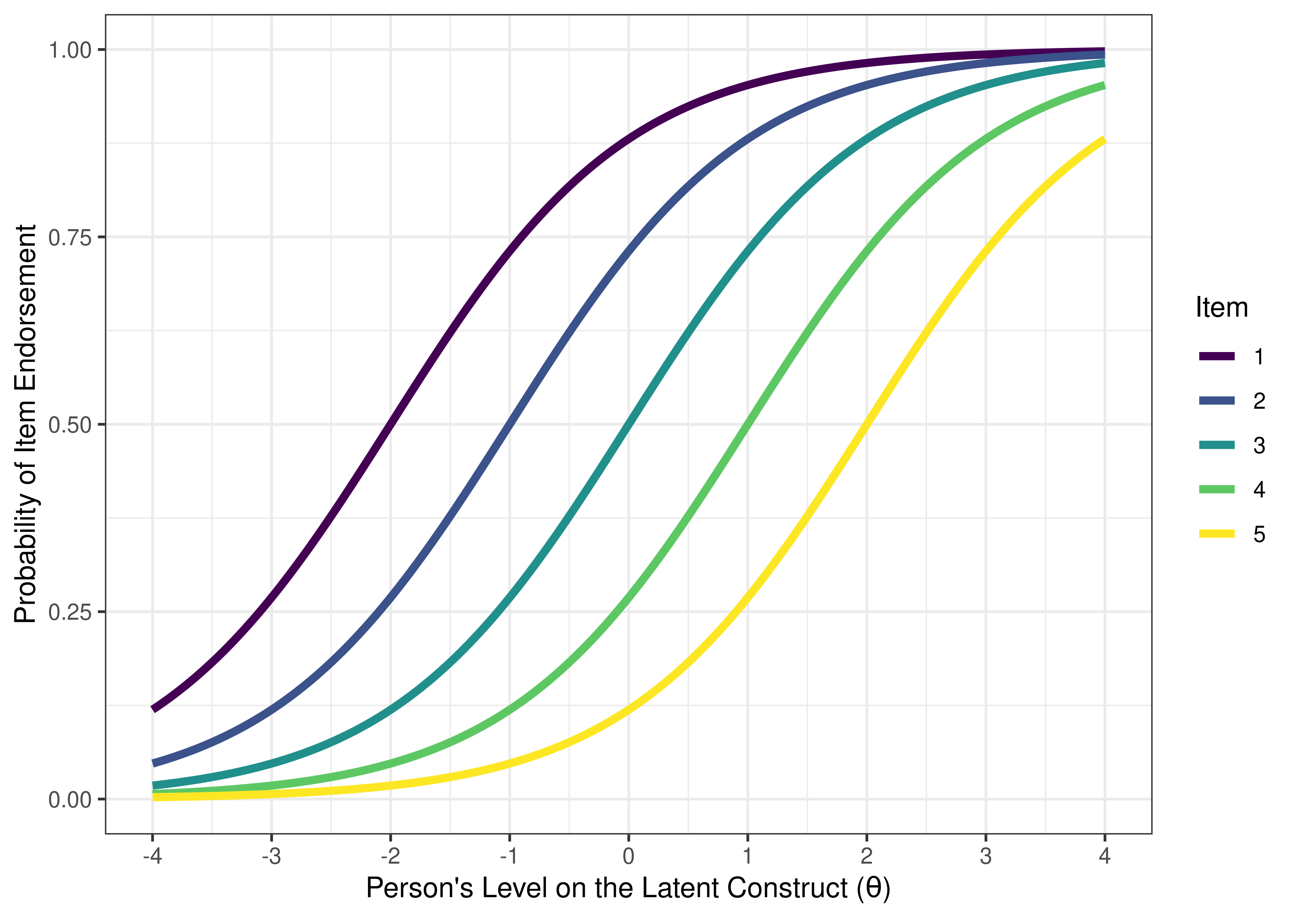

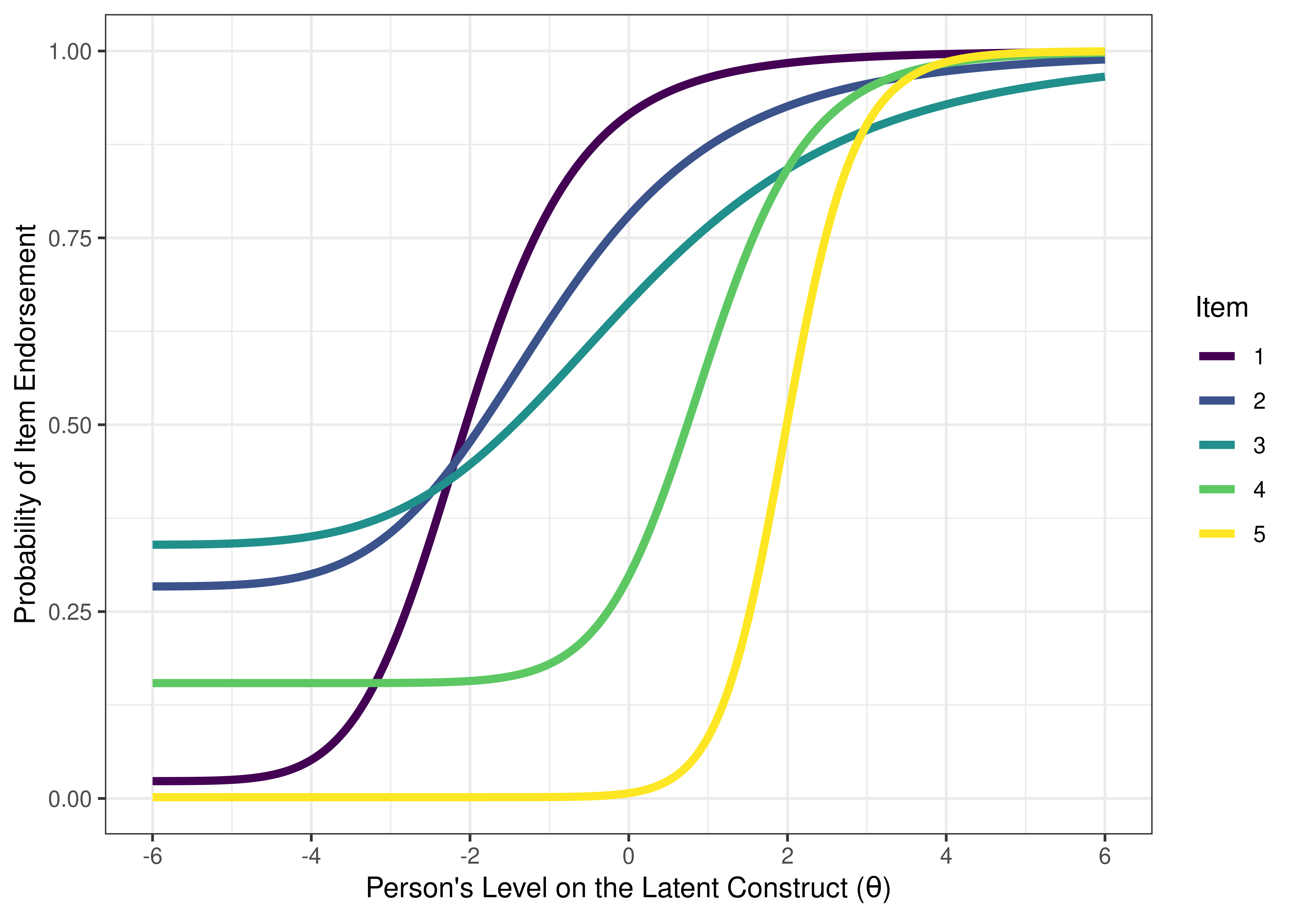

A two-parameter logistic (2-PL) IRT model estimates item difficulty and discrimination, and it holds the asymptotes fixed across items (see Figure 8.13). Two-parameter logistic models are also common.

In the two-parameter logistic model, the probability that a person \(j\) with a level on the construct of \(\theta\) gets a score of one (instead of zero) on item \(i\), based on the difficulty (\(b\)) and discrimination (\(a\)) of the item, is estimated using Equation (8.3):

\[\begin{equation} P(X = 1|\theta_j, b_i, a_i) = \frac{e^{a_i(\theta_j - b_i)}}{1 + e^{a_i(\theta_j - b_i)}} \tag{8.3} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the fourPL() function that estimates the probability of item endorsement as function of the item characteristics from the two-parameter logistic model and the person’s level on the construct (theta).

To estimate the probability of endorsement from the two-parameter logistic model, specify \(a\), \(b\), and \(\theta\), while keeping the defaults for the other parameters.

[1] 0.3543437

Figure 8.13: Two-Parameter Logistic Model in Item Response Theory.

8.1.3.3 3-Parameter

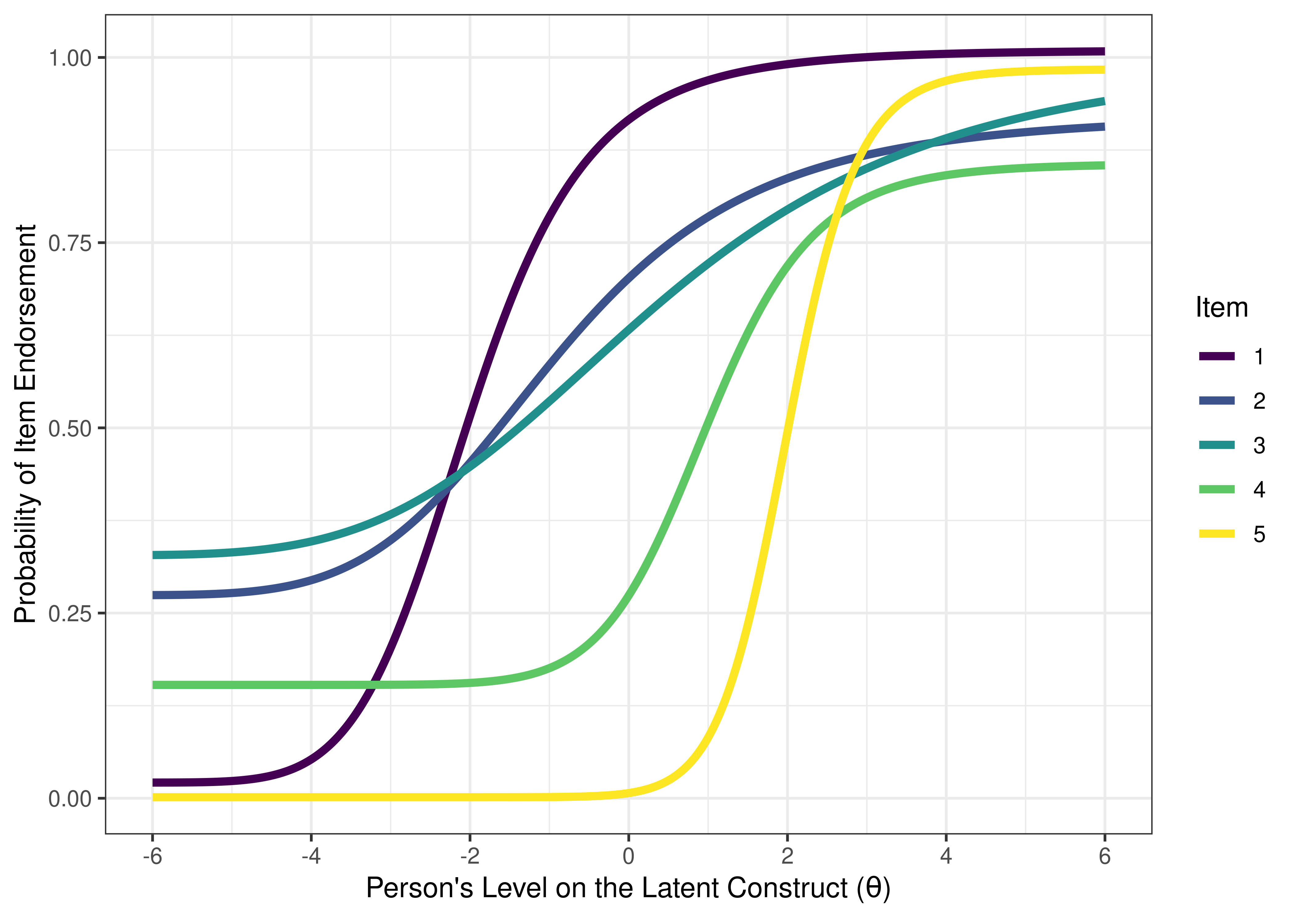

A three-parameter logistic (3-PL) IRT model estimates item difficulty, discrimination, and guessing (lower asymptote), and it holds the upper asymptote fixed across items (see Figure 8.14). This model would provide information about where an item drops out. Three-parameter logistic models are less common to estimate because it adds considerable computational complexity and requires a large sample size, and the guessing parameter is often not as important as difficulty and discrimination. Nevertheless, 3-parameter logistic models are sometimes estimated in the education literature to account for getting items correct by random guessing.

In the three-parameter logistic model, the probability that a person \(j\) with a level on the construct of \(\theta\) gets a score of one (instead of zero) on item \(i\), based on the difficulty (\(b\)), discrimination (\(a\)), and guessing parameter (\(c\)) of the item, is estimated using Equation (8.4):

\[\begin{equation} P(X = 1|\theta_j, b_i, a_i, c_i) = c_i + (1 - c_i) \frac{e^{a_i(\theta_j - b_i)}}{1 + e^{a_i(\theta_j - b_i)}} \tag{8.4} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the fourPL() function that estimates the probability of item endorsement as function of the item characteristics from the three-parameter logistic model and the person’s level on the construct (theta).

To estimate the probability of endorsement from the three-parameter logistic model, specify \(a\), \(b\), \(c\), and \(\theta\), while keeping the defaults for the other parameters.

[1] 0.625

Figure 8.14: Three-Parameter Logistic Model in Item Response Theory.

8.1.3.4 4-Parameter

A four-parameter logistic (4-PL) IRT model estimates item difficulty, discrimination, guessing, and careless errors (see Figure 8.15). The fourth parameter adds considerable computational complexity and is rare to estimate.

In the four-parameter logistic model, the probability that a person \(j\) with a level on the construct of \(\theta\) gets a score of one (instead of zero) on item \(i\), based on the difficulty (\(b\)), discrimination (\(a\)), guessing parameter (\(c\)), and careless error parameter (\(d\)) of the item, is estimated using Equation (8.5) (Magis, 2013):

\[\begin{equation} P(X = 1|\theta_j, b_i, a_i, c_i, d_i) = c_i + (d_i - c_i) \frac{e^{a_i(\theta_j - b_i)}}{1 + e^{a_i(\theta_j - b_i)}} \tag{8.5} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the fourPL() function that estimates the probability of item endorsement as function of the item characteristics from the four-parameter logistic model and the person’s level on the construct (theta).

To estimate the probability of endorsement from the four-parameter logistic model, specify \(a\), \(b\), \(c\), \(d\), and \(\theta\).

[1] 0.8168019

Figure 8.15: Four-Parameter Logistic Model in Item Response Theory.

8.1.3.5 Graded Response Model

Graded response models and generalized partial credit models can be estimated with one, two, three, or four parameters. However, they use polytomous data (not dichotomous data), as described in the section below.

The two-parameter graded response model takes the general form of Equation (8.6):

\[\begin{equation} P(X_{ji} = x_{ji}|\theta_j) = P^*_{x_{ji}}(\theta_j) - P^*_{x_{ji} + 1}(\theta_j) \tag{8.6} \end{equation}\]

where:

\[\begin{equation} P^*_{x_{ji}}(\theta_j) = P(X_{ji} \geq x_{ji}|\theta_j, b_{ic}, a_i) = \frac{1}{1 + e^{a_i(\theta_j - b_{ic})}} \tag{8.7} \end{equation}\]

In the model, \(a_i\) an item-specific discrimination parameter, \(b_{ic}\) is an item- and category-specific difficulty parameter, and \(θ_n\) is an estimate of a person’s standing on the latent variable. In the model, \(i\) represents unique items, \(c\) represents different categories that are rated, and \(j\) represents participants.

8.1.4 Type of Data

IRT models are most commonly estimated with binary or dichotomous data. For example, the measures have questions or items that can be considered collapsed into two groups (e.g., true/false, correct/incorrect, endorsed/not endorsed). IRT models can also be estimated with polytomous data (e.g., likert scale), which adds computational complexity. IRT models with polytomous data can be fit with a graded response model or generalized partial credit model.

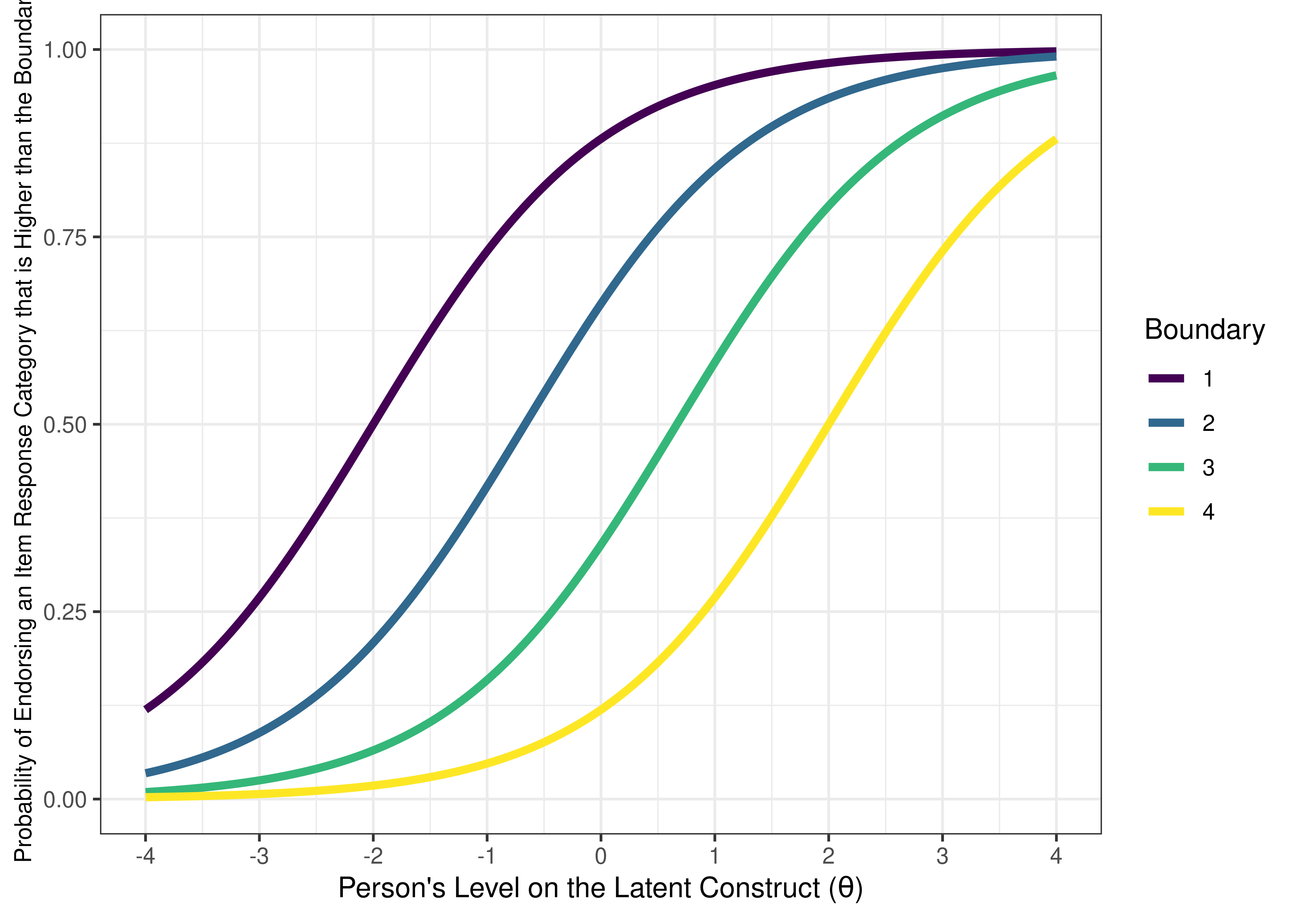

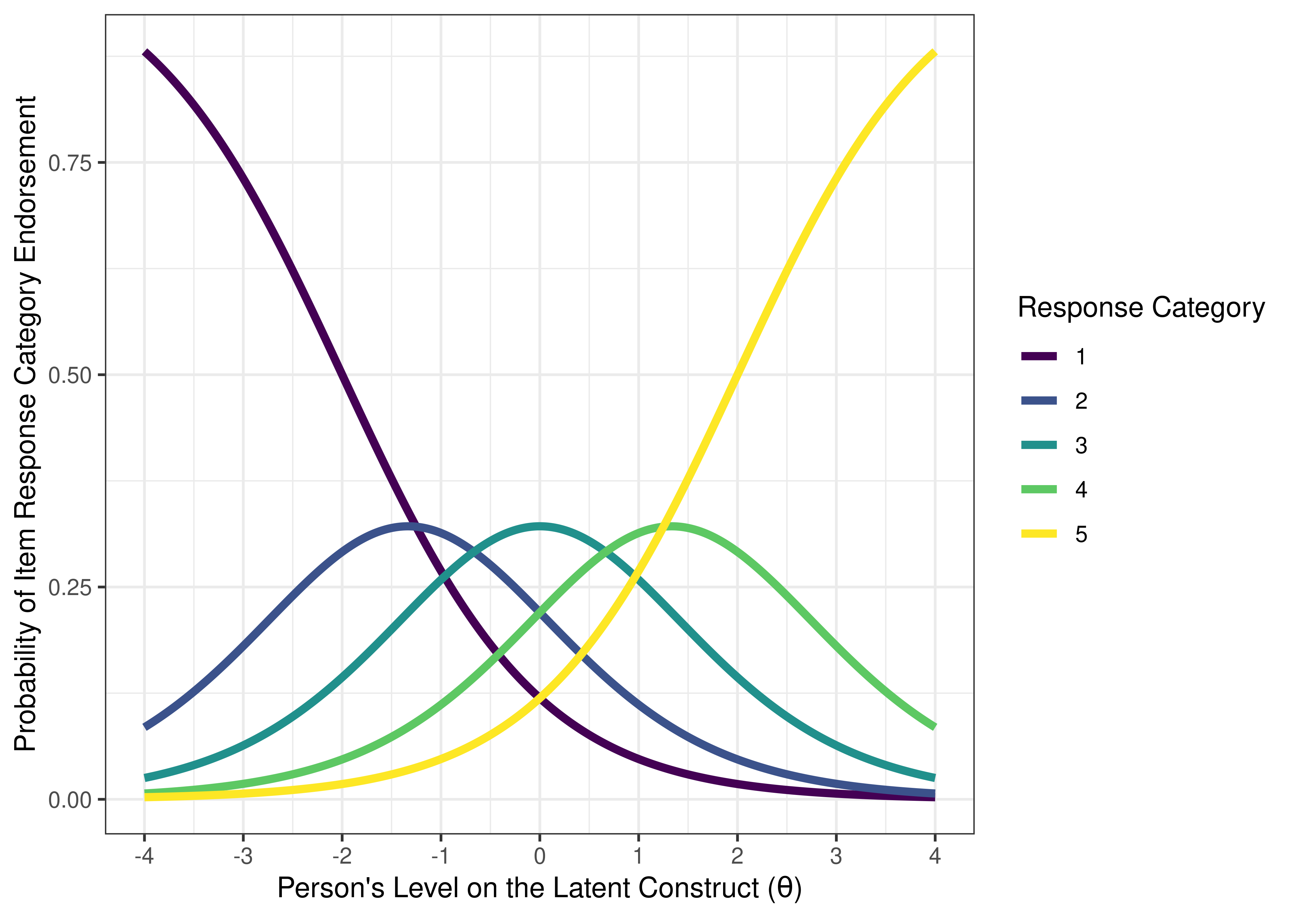

For example, see Figure 8.16 for an example of an item boundary characteristic curve for an item from a 5-level likert scale (based on a cumulative distribution). If an item has \(k\) response categories, it has \(k - 1\) thresholds. For example, an item with 5-level likert scale (1 = strongly disagree; 2 = disagree; 3 = neither agree nor disagree; 4 = agree; 5 = strongly agree) has 4 thresholds: one from 1–2, one from 2–3, one from 3–4, and one from 4–5. The item boundary characteristic curve is the probability that a person selects a response category higher than \(k\) of a polytomous item. As depicted, one likert scale item does equivalent work as 4 binary items. See Figure 8.17 for the same 5-level likert scale item plotted with an item response category characteristic curve (based on a static, non-cumulative distribution).

Figure 8.16: Item Boundary Characteristic Curves From Two-Parameter Graded Response Model in Item Response Theory.

Figure 8.17: Item Response Category Characteristic Curves From Two-Parameter Graded Response Model in Item Response Theory.

IRT does not handle continuous data well, with some exceptions (Y. Chen et al., 2019) such as in a Bayesian framework (Bürkner, 2021). If you want to use continuous data, you might consider moving to a factor analysis framework.

8.1.5 Sample Size

Sample size requirements depend on the complexity of the model. A 1-parameter model often requires ~100 participants. A 2-parameter model often requires ~1,000 participants. A 3-parameter model often requires ~10,000 participants.

8.1.6 Reliability (Information)

IRT conceptualizes reliability in a different way than classical test theory does. Both IRT and classical test theory conceptualize reliability as involving the precision of a measure’s scores. In classical test theory, (im)precision—as operationalized by the standard error of measurement—is estimated with a single index across the whole range of the construct. That is, in classical test theory, the same standard error of measurement applies to all scores in the population (Embretson, 1996). However, IRT estimates how much measurement precision (information) or imprecision (standard error of measurement) each item, and the test as a whole, has at different construct levels. This allows IRT to conceptualize reliability in such a way that precision/reliability can differ at different construct levels, unlike in classical test theory (Embretson, 1996). Thus, IRT does not have one index of reliability; rather, its estimate of reliability differs at different levels on the construct. As a result, from the perspective of IRT, it does not make sense to talk about the reliability of a scale, because the reliability of the scale depends on the person’s score on that scale.

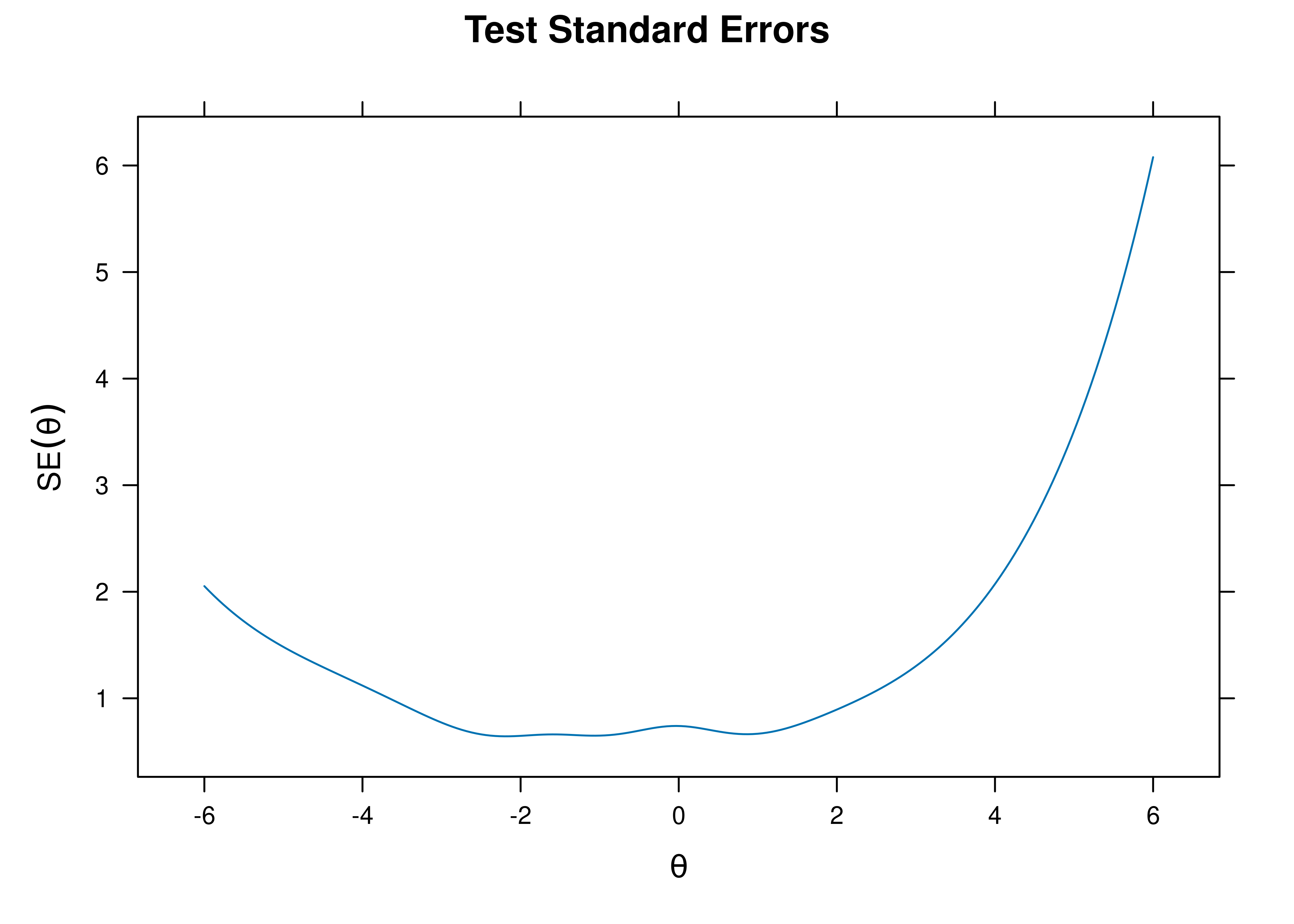

Based on an item’s difficulty and discrimination, we can calculate how much information each item provides. In IRT, information is how much measurement precision or consistency an item (or the measure) provides. In other words, information is the degree to which an item (or measure) reduces the standard error of measurement, that is, how much it reduces uncertainty of a person’s level on the construct. As a reminder (from Equation (4.11)), the standard error of measurement is calculated as:

\[ \text{standard error of measurement (SEM)} = \sigma_x \sqrt{1 - r_{xx}} \]

where \(\sigma_x = \text{standard deviation of observed scores on the item } x\), and \(r_{xx} = \text{reliability of the item } x\). The standard error of measurement is used to generate confidence intervals for people’s scores. In IRT, the standard error of measurement (at a given construct level) can be calculated as the inverse of the square root of the amount of test information at that construct level, as in Equation (8.8):

\[\begin{equation} \text{SEM}(\theta) = \frac{1}{\sqrt{\text{information}(\theta)}} \tag{8.8} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the standardErrorIRT() function that estimates the standard error of measurement at a person’s level on the construct (theta) from the amount of information that the item (or test) provides.

[1] 1.290994The standard error of measurement tends to be higher (i.e., reliability/information tends to be lower) at the extreme levels of the construct where there are fewer items.

The formula for information for item \(i\) at construct level \(\theta\) in a Rasch model is in Equation (8.9) (Baker & Kim, 2017):

\[\begin{equation} \text{information}_i(\theta) = P_i(\theta)Q_i(\theta) \tag{8.9} \end{equation}\]

where \(P_i(\theta)\) is the probability of getting a one instead of a zero on item \(i\) at a given level on the latent construct, and \(Q_i(\theta) = 1 - P_i(\theta)\).

The petersenlab package (Petersen, 2025) contains the itemInformation() function that estimates the amount of information an item provides as function of the item characteristics from the Rasch model and the person’s level on the construct (theta).

To estimate the amount of information an item provides in a Rasch model, specify \(b\) and \(\theta\), while keeping the defaults for the other parameters.

Code

[1] 0.1966119The formula for information for item \(i\) at construct level \(\theta\) in a two-parameter logistic model is in Equation (8.10) (Baker & Kim, 2017):

\[\begin{equation} \text{information}_i(\theta) = a^2_iP_i(\theta)Q_i(\theta) \tag{8.10} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the itemInformation() function that estimates the amount of information an item provides as function of the item characteristics from the two-parameter logistic model and the person’s level on the construct (theta).

To estimate the amount of information an item provides in a two-parameter logistic model, specify \(a\), \(b\), and \(\theta\), while keeping the defaults for the other parameters.

[1] 0.08236233The formula for information for item \(i\) at construct level \(\theta\) in a three-parameter logistic model is in Equation (8.11) (Baker & Kim, 2017):

\[\begin{equation} \text{information}_i(\theta) = a^2_i\bigg[\frac{Q_i(\theta)}{P_i(\theta)}\bigg]\bigg[\frac{(P_i(\theta) - c_i)^2}{(1 - c_i)^2}\bigg] \tag{8.11} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the itemInformation() function that estimates the amount of information an item provides as function of the item characteristics from the three-parameter logistic model and the person’s level on the construct (theta).

To estimate the amount of information an item provides in a three-parameter logistic model, specify \(a\), \(b\), \(c\), and \(\theta\), while keeping the defaults for the other parameters.

[1] 0.096The formula for information for item \(i\) at construct level \(\theta\) in a four-parameter logistic model is in Equation (8.12) (Magis, 2013):

\[\begin{equation} \text{information}_i(\theta) = \frac{a^2_i[P_i(\theta) - c_i]^2[d_i - P_i(\theta)^2]}{(d_i - c_i)^2 P_i(\theta)[1 - P_i(\theta)]} \tag{8.12} \end{equation}\]

The petersenlab package (Petersen, 2025) contains the itemInformation() function that estimates the amount of information an item provides as function of the item characteristics from the four-parameter logistic model and the person’s level on the construct (theta).

To estimate the amount of information an item provides in a four-parameter logistic model, specify \(a\), \(b\), \(c\), \(d\), and \(\theta\).

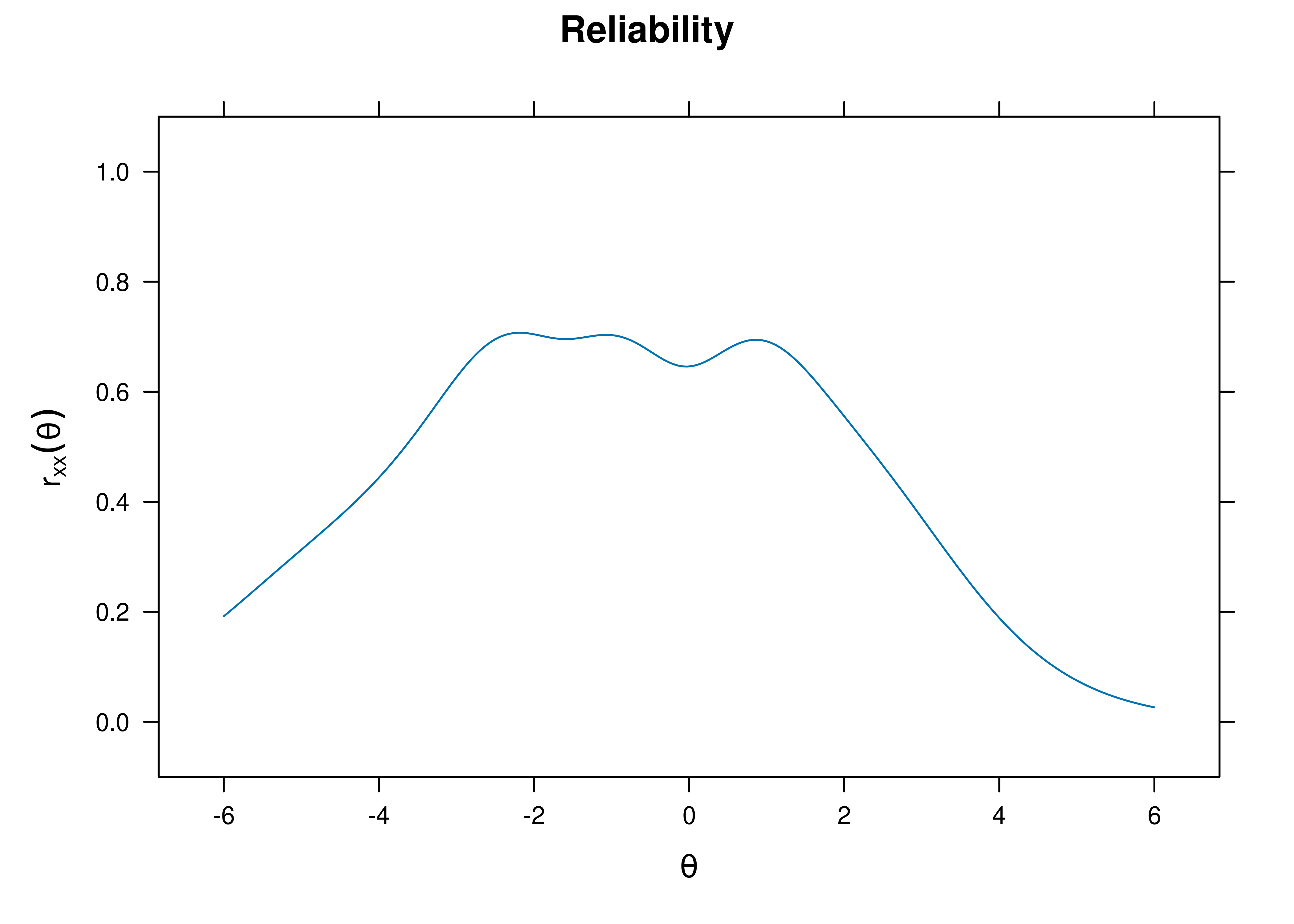

[1] 0.01503727Reliability at a given level of the construct (\(\theta\)) can be estimated as in Equation (8.13):

\[ \begin{aligned} \text{reliability}(\theta) &= \frac{\text{information}(\theta)}{\text{information}(\theta) + \sigma^2(\theta)} \\ &= \frac{\text{information}(\theta)}{\text{information}(\theta) + 1} \end{aligned} \tag{8.13} \]

where \(\sigma^2(\theta)\) is the variance of theta, which is fixed to one in most IRT models.

The petersenlab package (Petersen, 2025) contains the reliabilityIRT() function that estimates the amount of reliability an item or a measure provides as function of its information and the variance of people’s construct levels (\(\theta\)).

Code

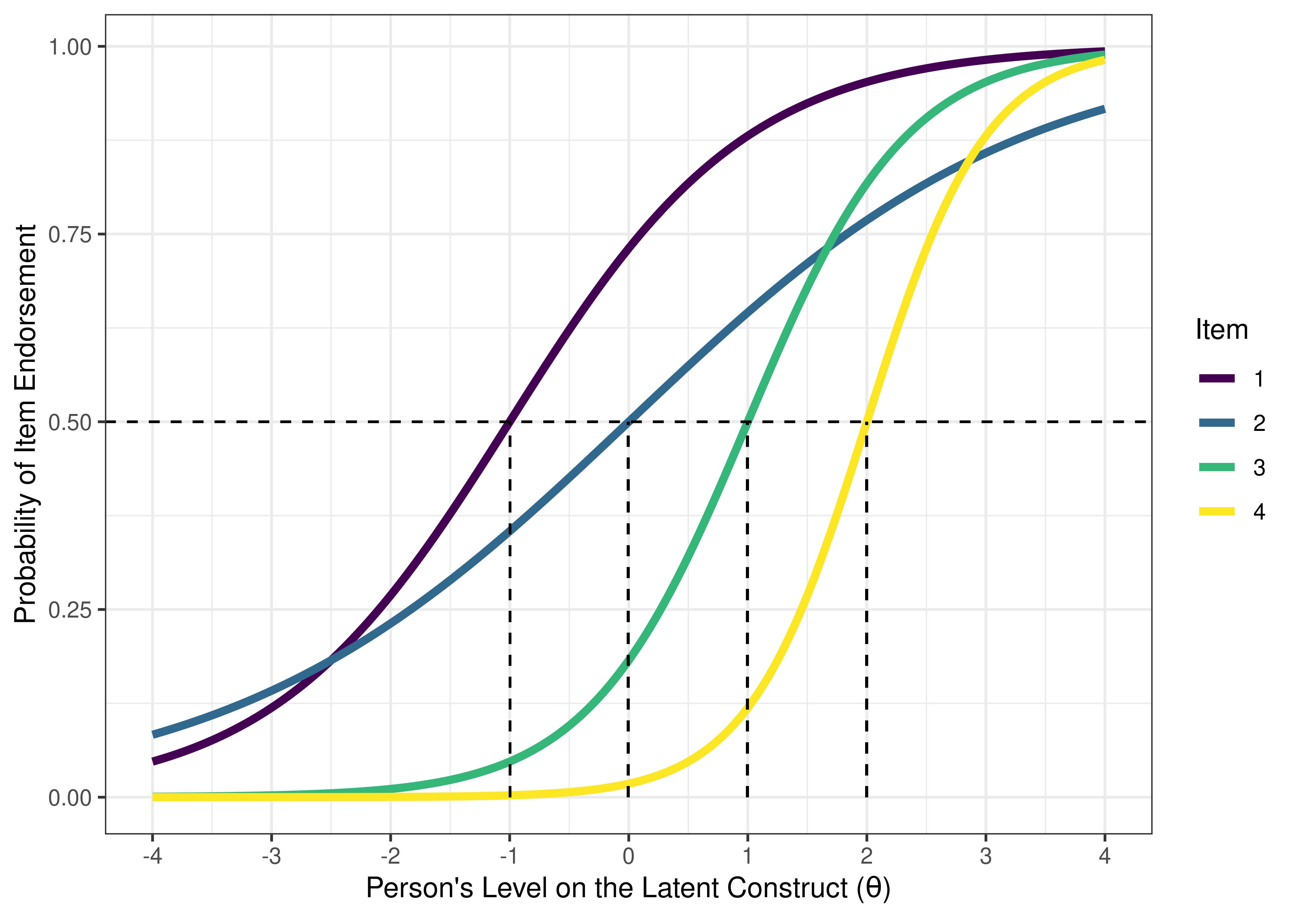

[1] 0.9090909Consider some hypothetical items depicted with ICCs in Figure 8.18.

Figure 8.18: Item Characteristic Curves From Two-Parameter Logistic Model in Item Response Theory. The dashed horizontal line indicates a probability of item endorsement of .50. The dashed vertical line is the item difficulty, i.e., the person’s level on the construct (the location on the x-axis) at the inflection point of the item characteristic curve. In a two-parameter logistic model, the inflection point corresponds to the probability of item endorsement is 50%. Thus, in a two-parameter logistic model, the difficulty of an item is the person’s level on the construct where the probability of endorsing the item is 50%.

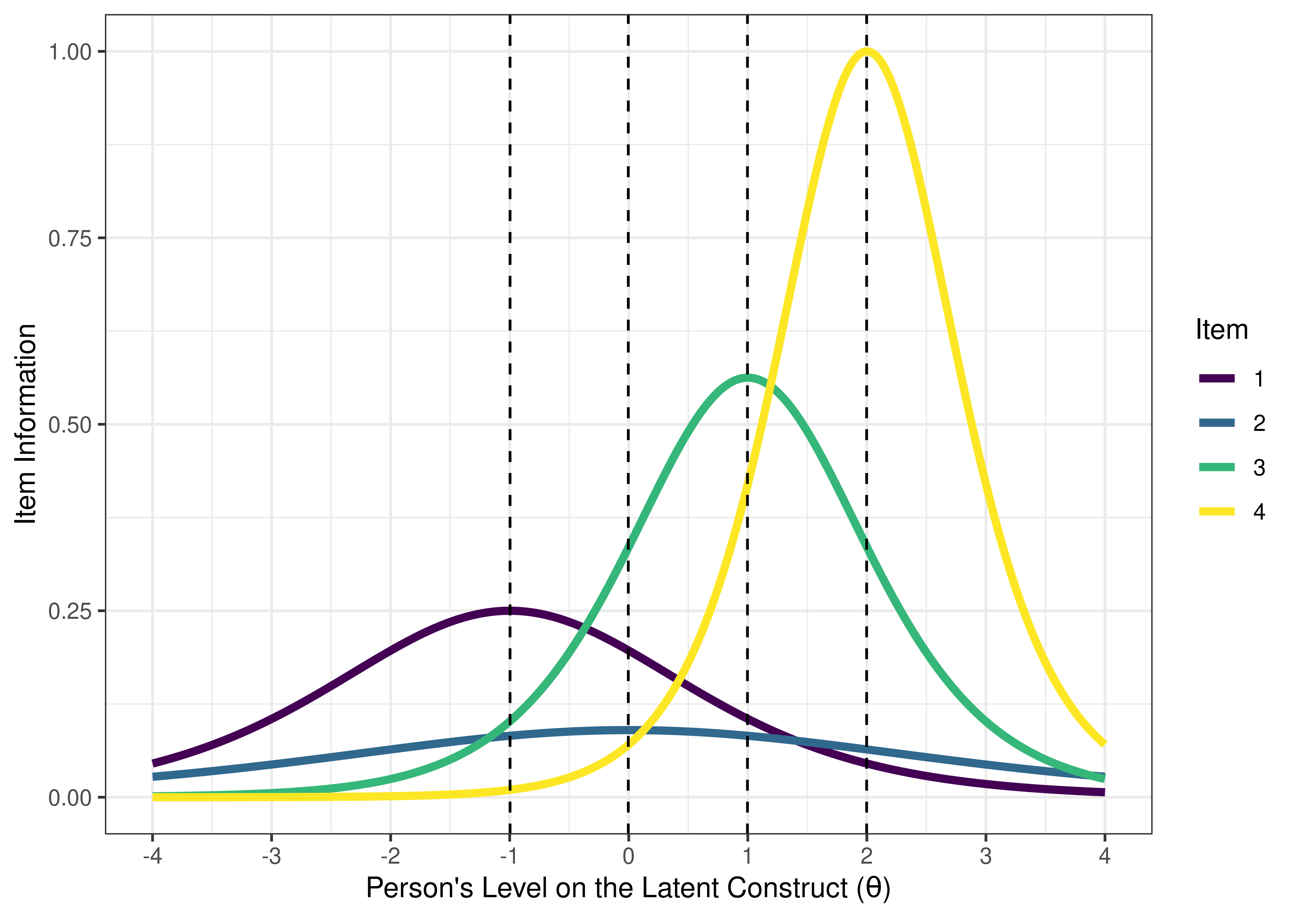

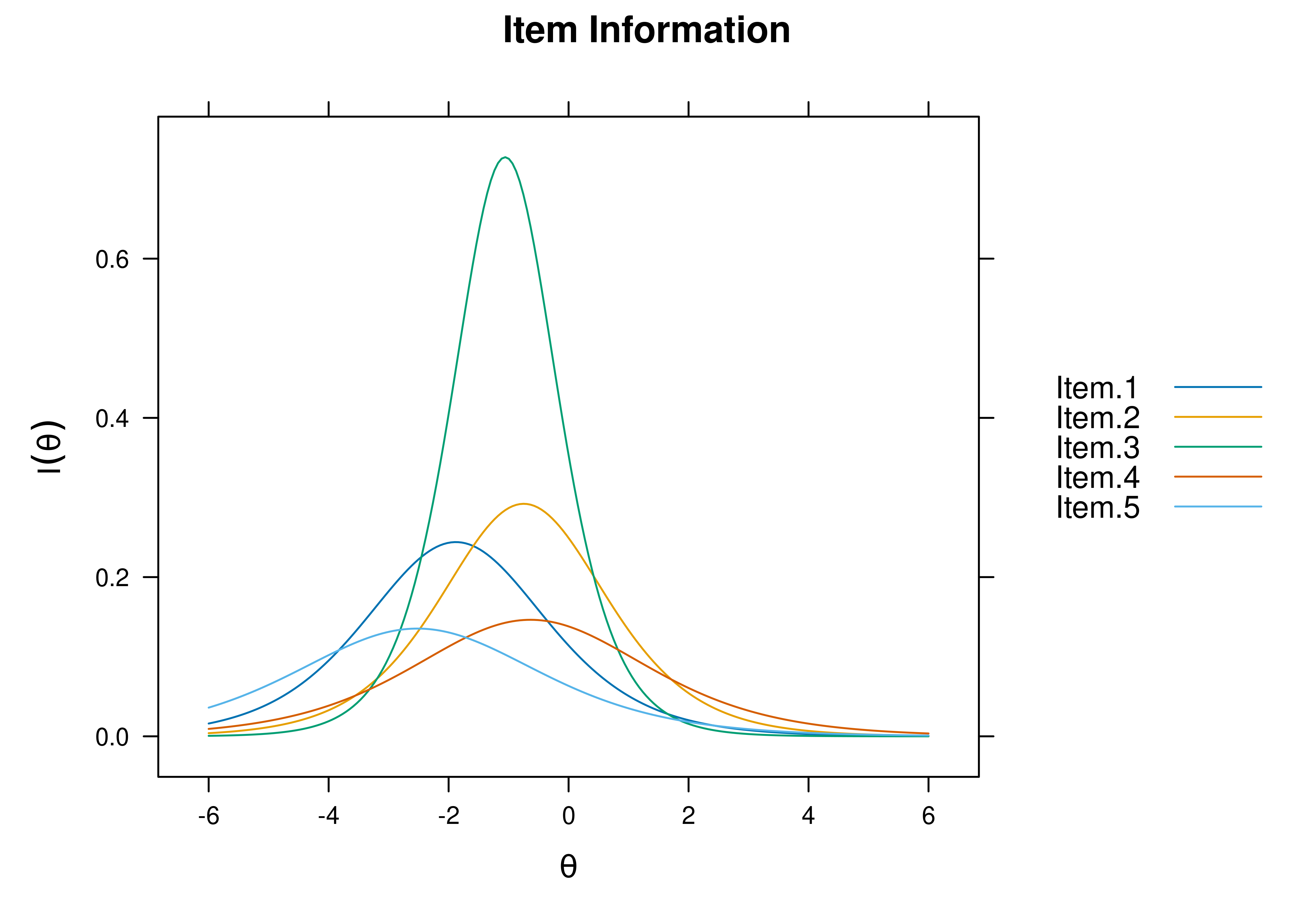

We can present the ICC in terms of an item information curve (see Figure 8.19). On the x-axis, the information peak is located at the difficulty/severity of the item. The higher the discrimination, the higher the information peak on the y-axis.

Figure 8.19: Item Information From Two-Parameter Logistic Model in Item Response Theory. The dashed vertical line is the item difficulty, which is located at the peak of the item information curve.

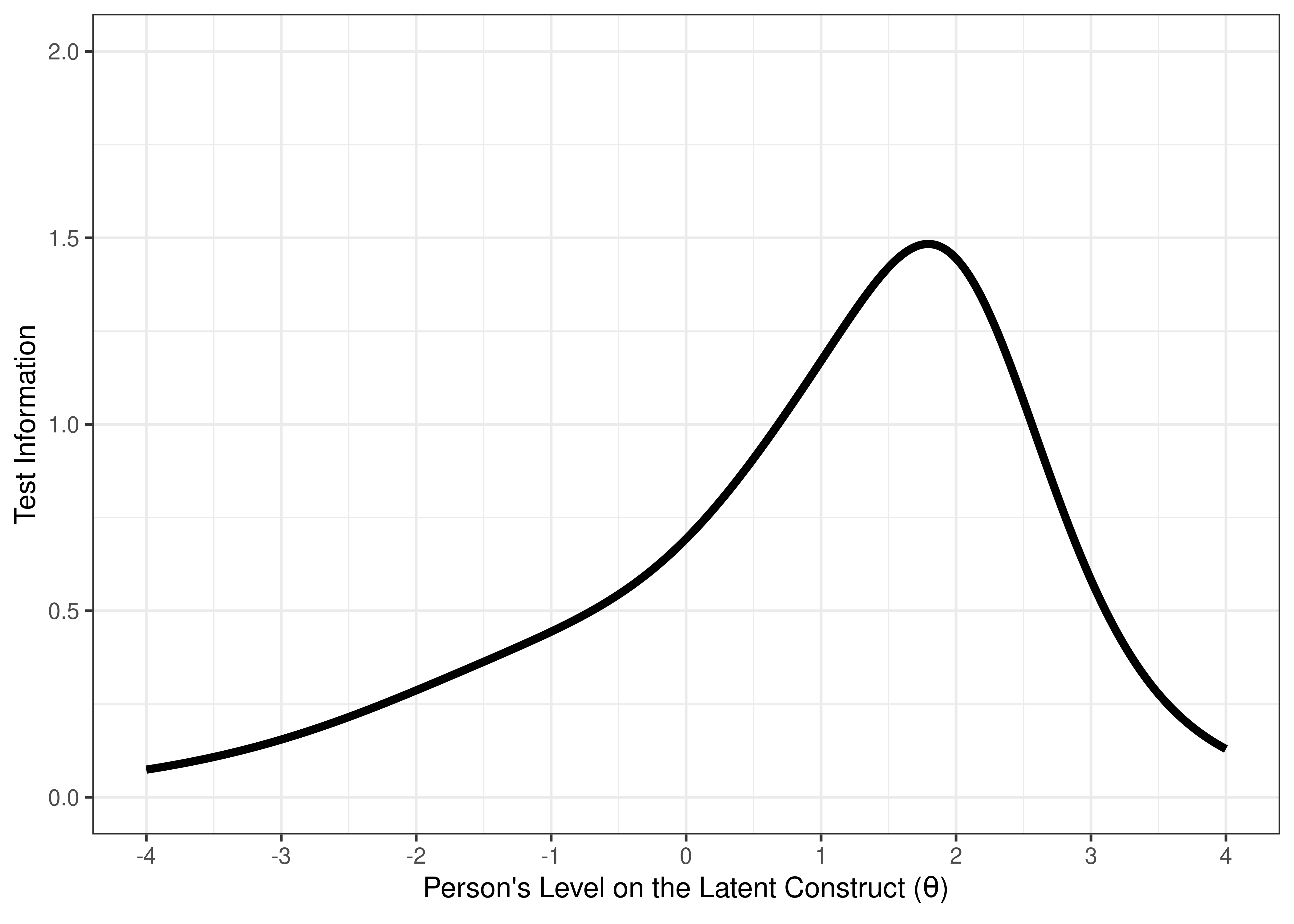

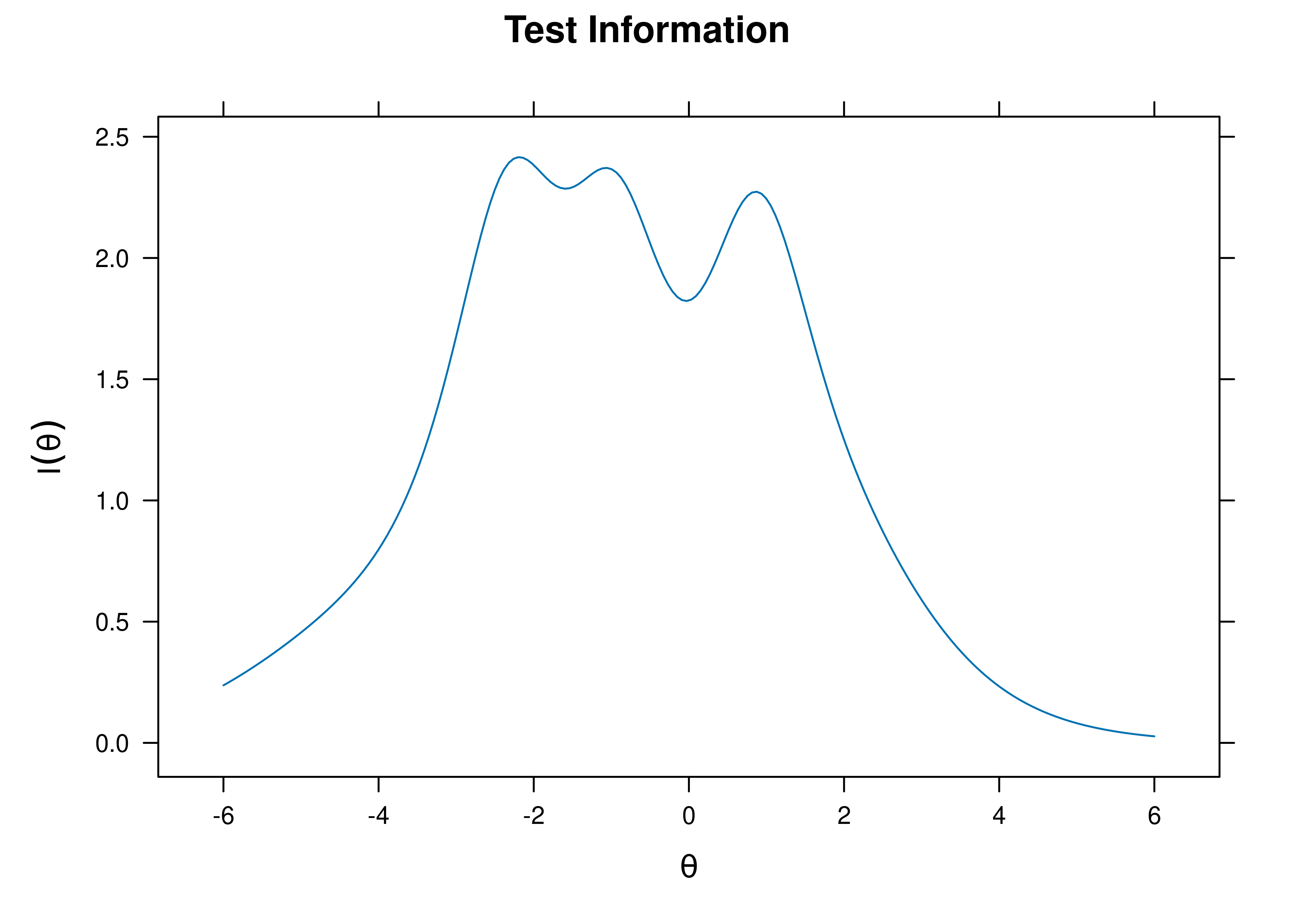

We can aggregate (sum) information across items to determine how much information the measure as a whole provides. This is called the test information curve (see Figure 8.20). Note that we get more information from likert/multiple response items compared to binary/dichotomous items. Having 10 items with a 5-level response scale yields as much information as 40 dichotomous items.

Figure 8.20: Test Information Curve From Two-Parameter Logistic Model in Item Response Theory.

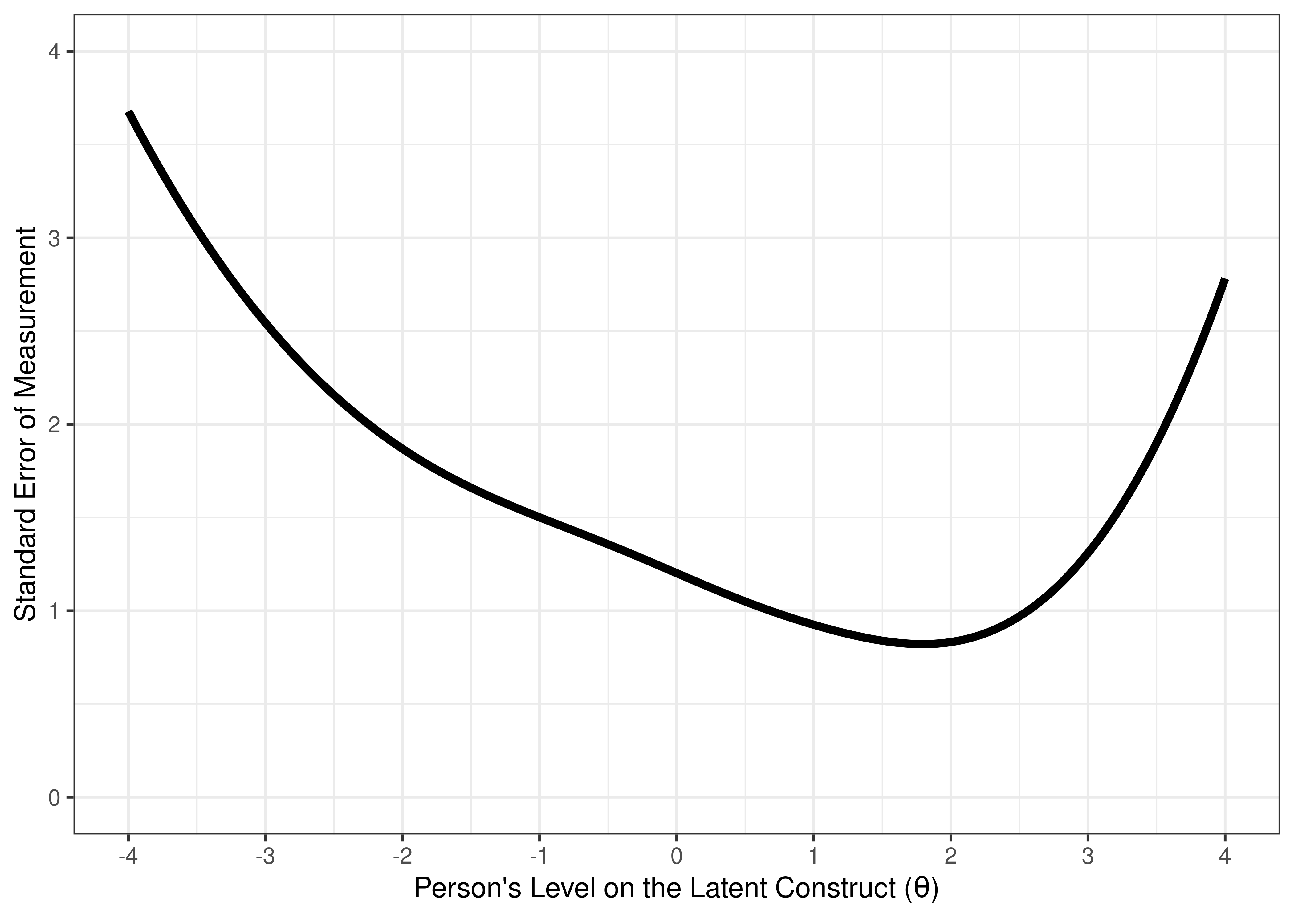

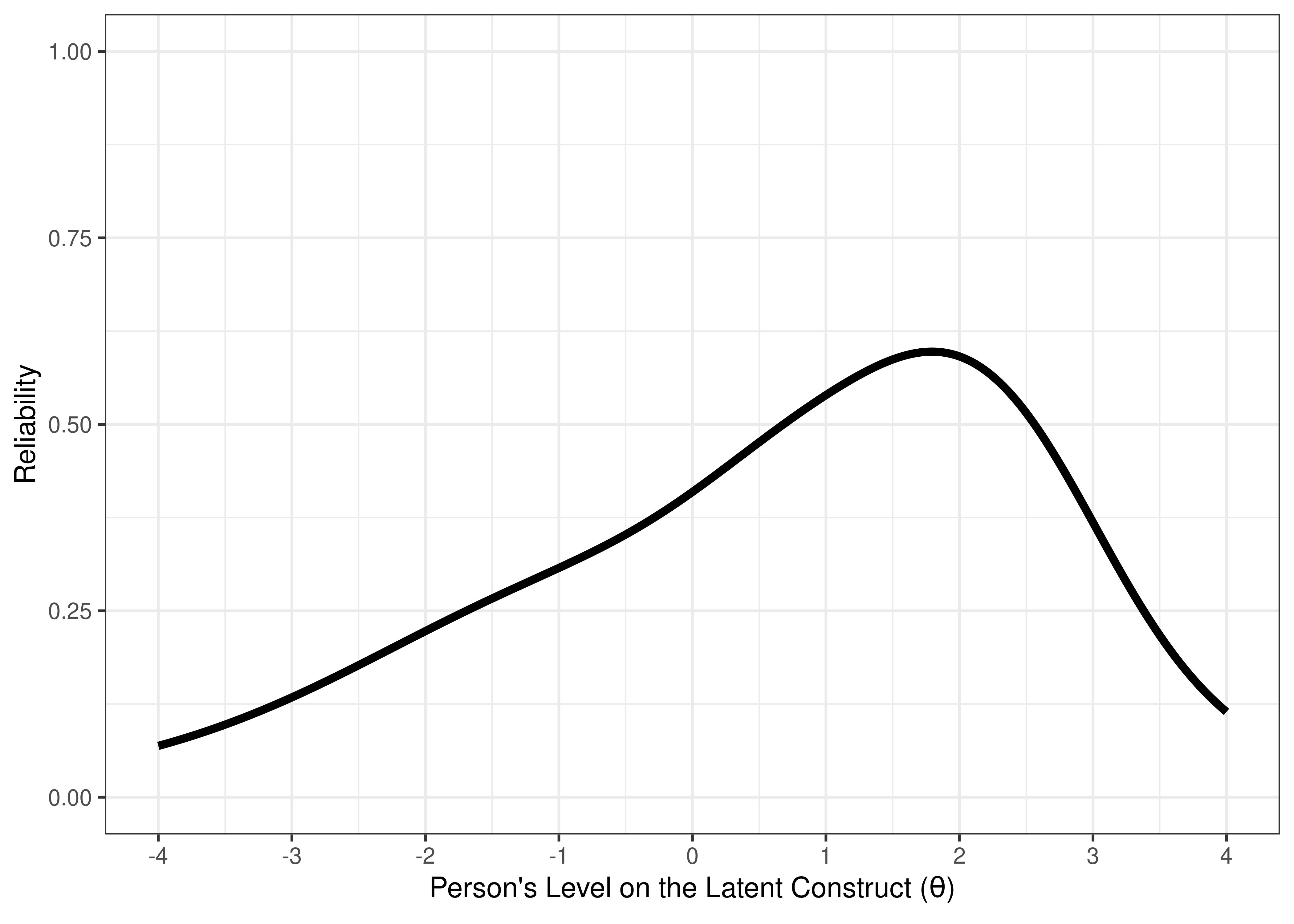

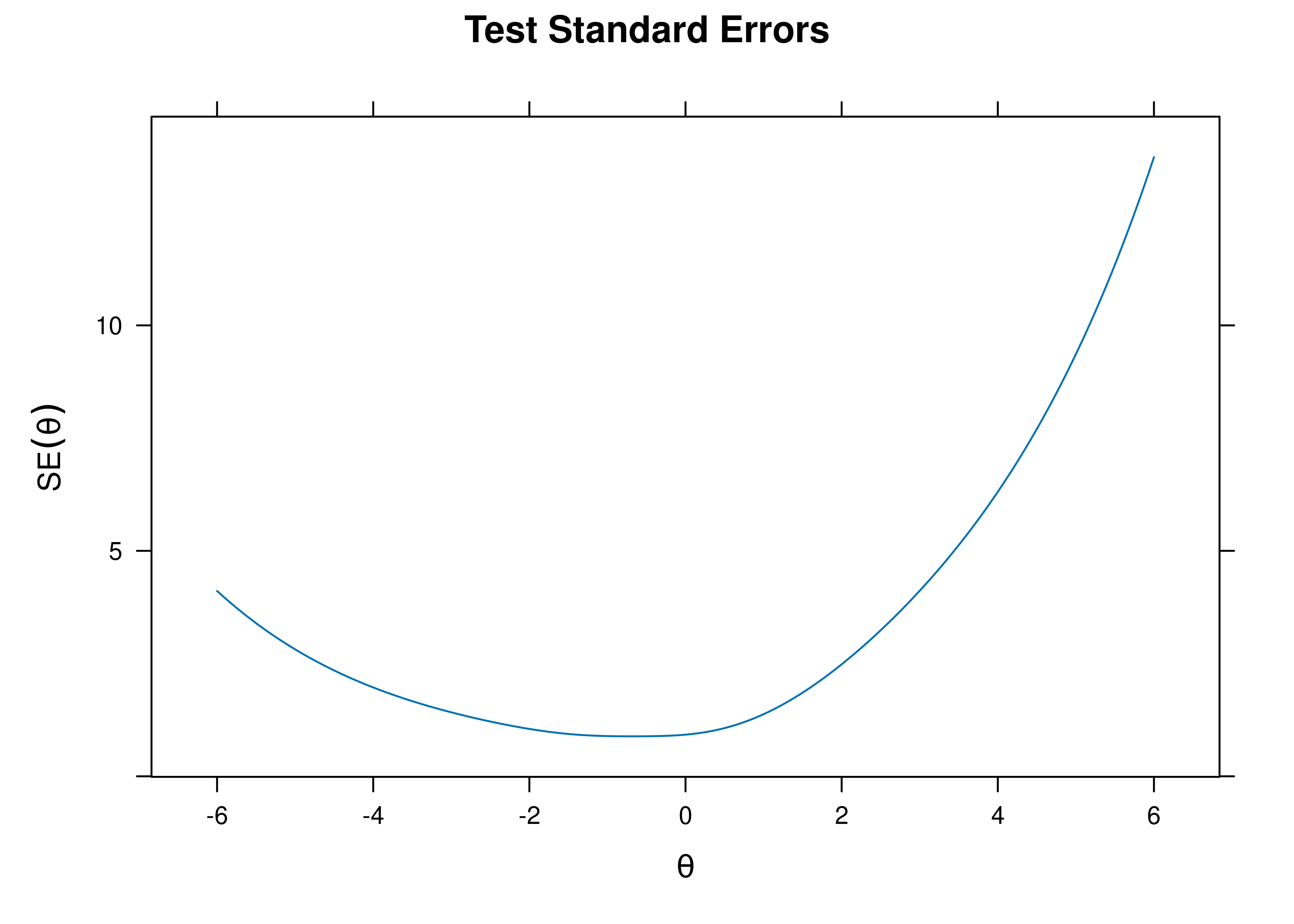

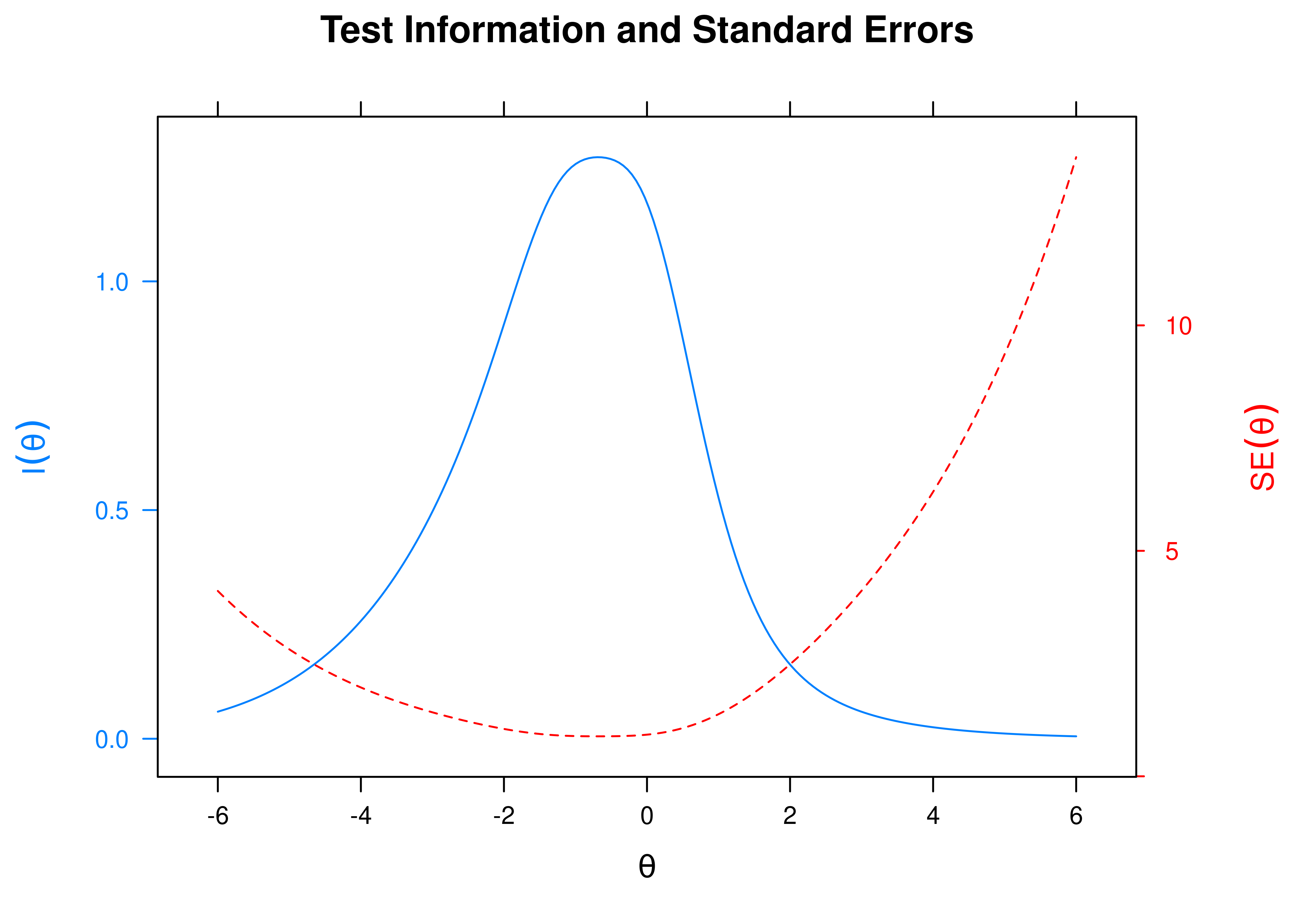

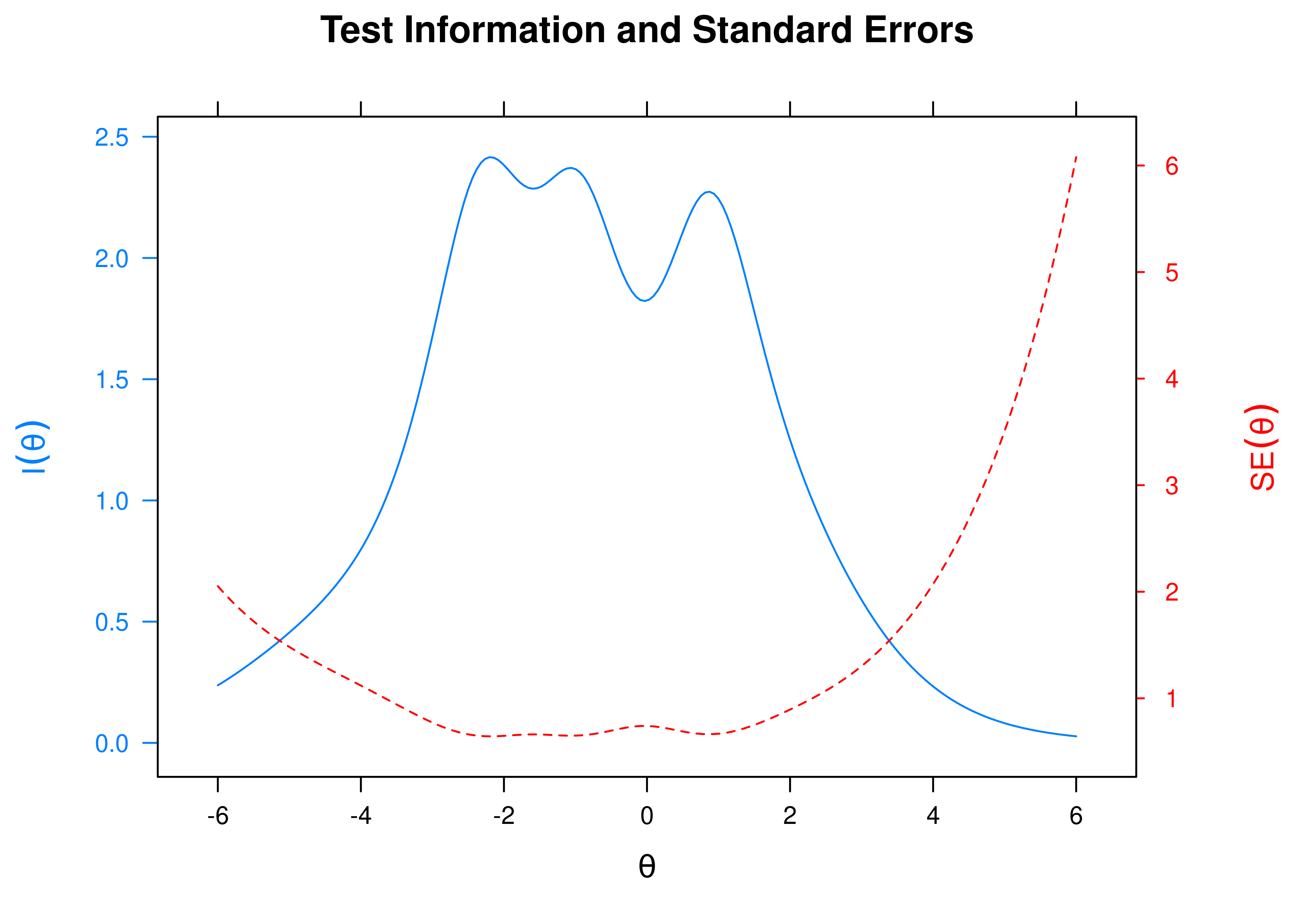

Based on test information, we can calculate the standard error of measurement (see Figure 8.21). Notice how the degree of (un)reliability differs at different construct levels.

Figure 8.21: Test Standard Error of Measurement From Two-Parameter Logistic Model in Item Response Theory.

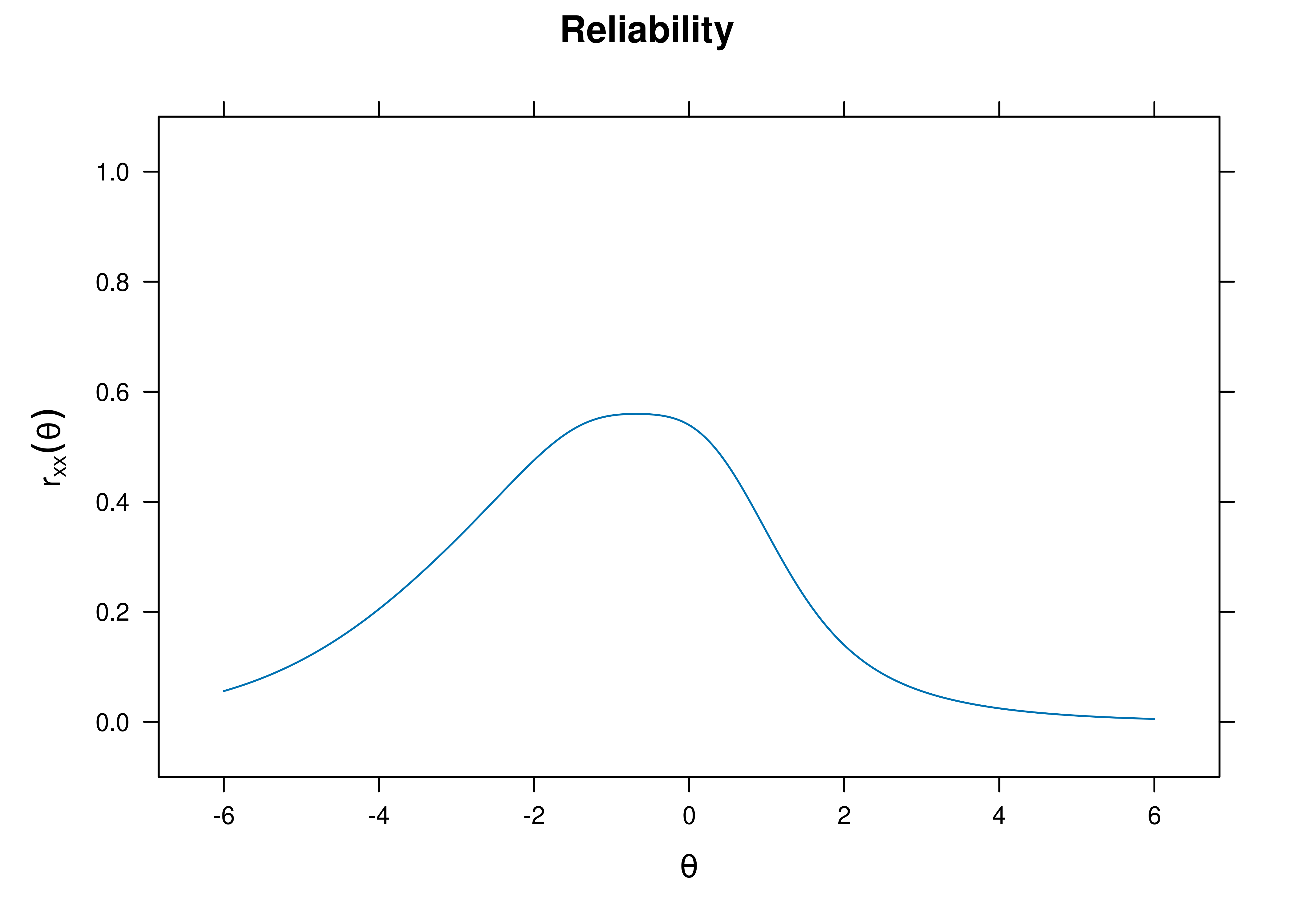

Based on test information, we can estimate the reliability (see Figure 8.22). Notice how the degree of (un)reliability differs at different construct levels.

Figure 8.22: Test Reliability From Two-Parameter Logistic Model in Item Response Theory.

8.1.7 Efficient Assessment

One of the benefits of IRT is for item selection to develop brief assessments. For instance, you could use two items to estimate where the person is on the construct: low, middle, or high (see Figure 8.23). If the responses to the two items do not meet expectations, for instance, the person passes the difficult item but fails the easy item, we would keep assessing additional items to determine their level on the construct. If two items perform similarly, that is, they have the same difficulty and discrimination, they are redundant, and we can sacrifice one of them. This leads to greater efficiency and better measurement in terms of reliability and validity. For more information on designing and evaluating short forms compared to their full-scale counterparts, see Smith et al. (2000).

Figure 8.23: Visual Representation of an Efficient Assessment Based on Item Characteristic Curves from Two-Parameter Logistic Model in Item Response Theory.

IRT forms the basis of computerized adaptive testing, which is discussed in Chapter 21. As discussed earlier, briefer measures can increase reliability and validity of measurement if the items are tailored to the ability level of the participant. The idea of adaptive testing is that, instead of having a standard scale for all participants, the items adapt to each person. An example of a measure that has used computerized adaptive testing is the Graduate Record Examination (GRE).

With adaptive testing, it is important to develop a comprehensive item bank that spans the difficulty range of interest. The starting construct level is the 50th percentile. If the respondent gets the first item correct, it moves to the next item that would provide the most information for the person, based on a split of the remaining sample (e.g., 75th percentile). And so on… The goal of adaptive testing is to find the construct level where the respondent keeps getting items right and wrong 50% of the time. Adaptive testing is a promising approach that saves time because it tailors which items are administered to which person (based on their construct level) to get the most reliable estimate in the shortest time possible. However, it assumes that if you get a more difficult item correct, that you would have gotten easier items correct, which might not be true in all contexts (especially for constructs that are not unidimensional).

Although most uses of IRT have been in cognitive and educational testing, IRT may also benefit other domains of assessment including clinical assessment (Gibbons et al., 2016; Reise & Waller, 2009; Thomas, 2019).

8.1.7.1 A Good Measure

According to IRT a good measure should:

- fit your goals of the assessment, in terms of the range of interest regarding levels on the construct,

- have good items that yield lots of information, and

- have a good set of items that densely cover the construct within the range of interest, without redundancy.

First, a good measure should fit your goals of the assessment, in terms of the “range of interest” or the “target range” of levels on the construct. For instance, if your goal is to perform diagnosis, you would only care about the high end of the construct (e.g., 1–3 standard deviations above the mean)—there is no use discriminating between “nothing”, “almost nothing”, and “a little bit.” For secondary prevention, i.e., early identification of risk to prevent something from getting worse, you would be interested in finding people with elevated risk—e.g., you would need to know who is 1 or more standard deviations above the mean, but you would not need to discriminate beyond that. For assessing individual differences, you would want items that discriminate across the full range, including at the lower end. The items’ difficulty should span the range of interest.

Second, a good measure should have good items that yield lots of information. For example, the items should have strong discrimination, that is, the items are strongly related to the construct. The items should have sufficient variability in responses. This can be achieved by having items with more response options (e.g., likert/multiple choice items, as opposed to binary items), items that differ in difficulty, and (at least some) items that are not too difficult or too easy (to avoid ceiling/floor effects).

Third, a good measure should have a good set of items that densely cover the construct within the range of interest, without redundancy. The items should not have the same difficulty or they would be considered redundant, and one of the redundant items could be dropped. The items’ difficulty should densely cover the construct within the range of interest. For instance, if the construct range of interest is 1–2 standard deviations above the mean, the items should have difficulty that densely cover this range (e.g., 1.0, 1.05, 1.10, 1.15, 1.20, 1.25, 1.30, …, 2.0).

With items that (1) span the range of interest, (2) have high discrimination and information, and (3) densely cover the range of interest without redundancy, the measure should have a high information in the range of interest. This would allow it to efficiently and accurately assess the construct for the intended purpose.

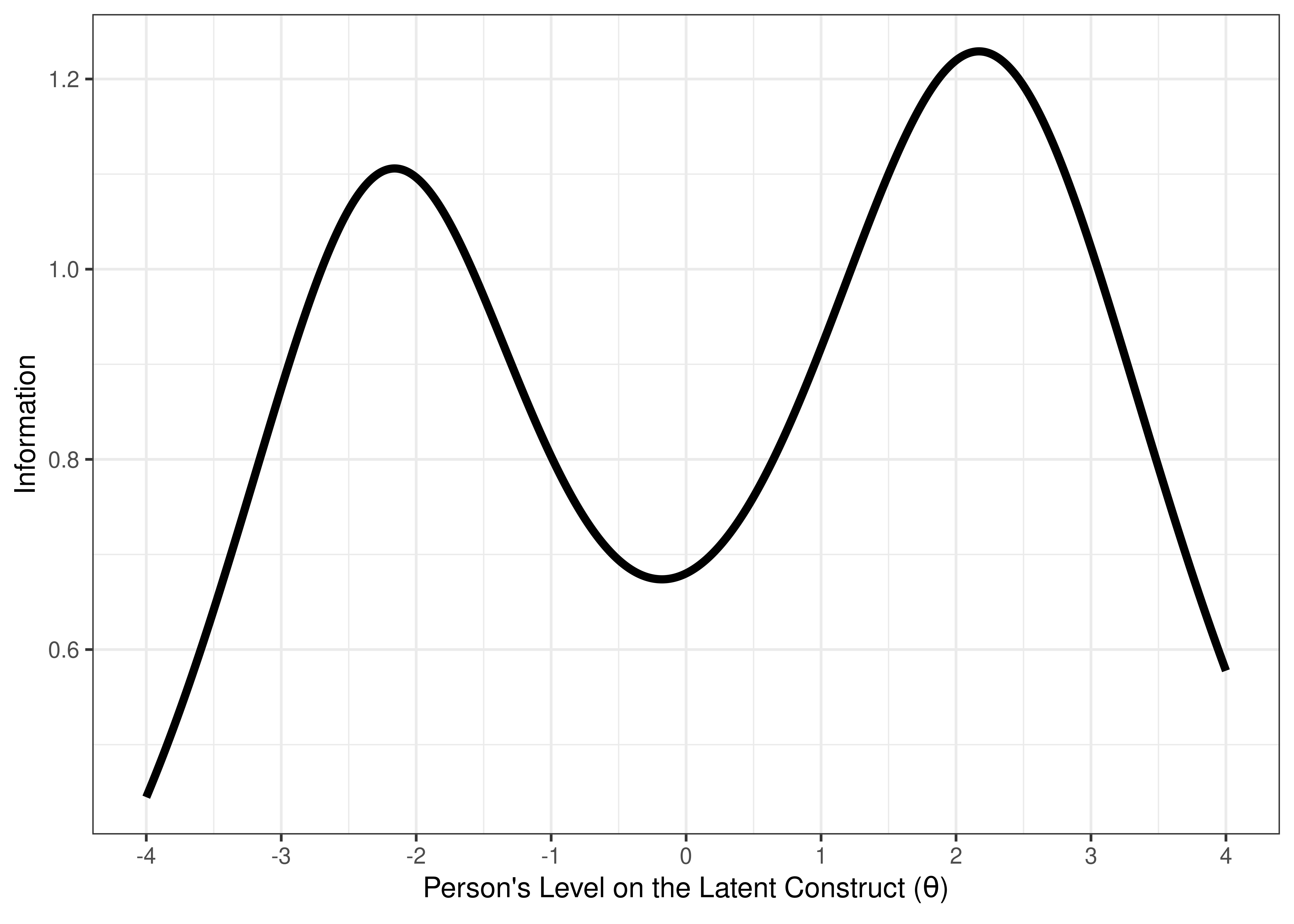

An example of a bad measure for assessing the full range of individual differences is depicted in terms of ICCs in Figure 8.24 and in terms of test information in Figure 8.25. The measure performs poorly for the intended purpose, because its items do not (a) span the range of interest (−3 to 3 standard deviations from the mean of the latent construct), (b) have high discrimination and information, and (c) densely cover the range of interest without redundancy.

Figure 8.24: Visual Representation of a Bad Measure Based on Item Characteristic Curves of Items From a Bad Measure Estimated from Two-Parameter Logistic Model in Item Response Theory.

Figure 8.25: Visual Representation of a Bad Measure Based on the Test Information Curve.

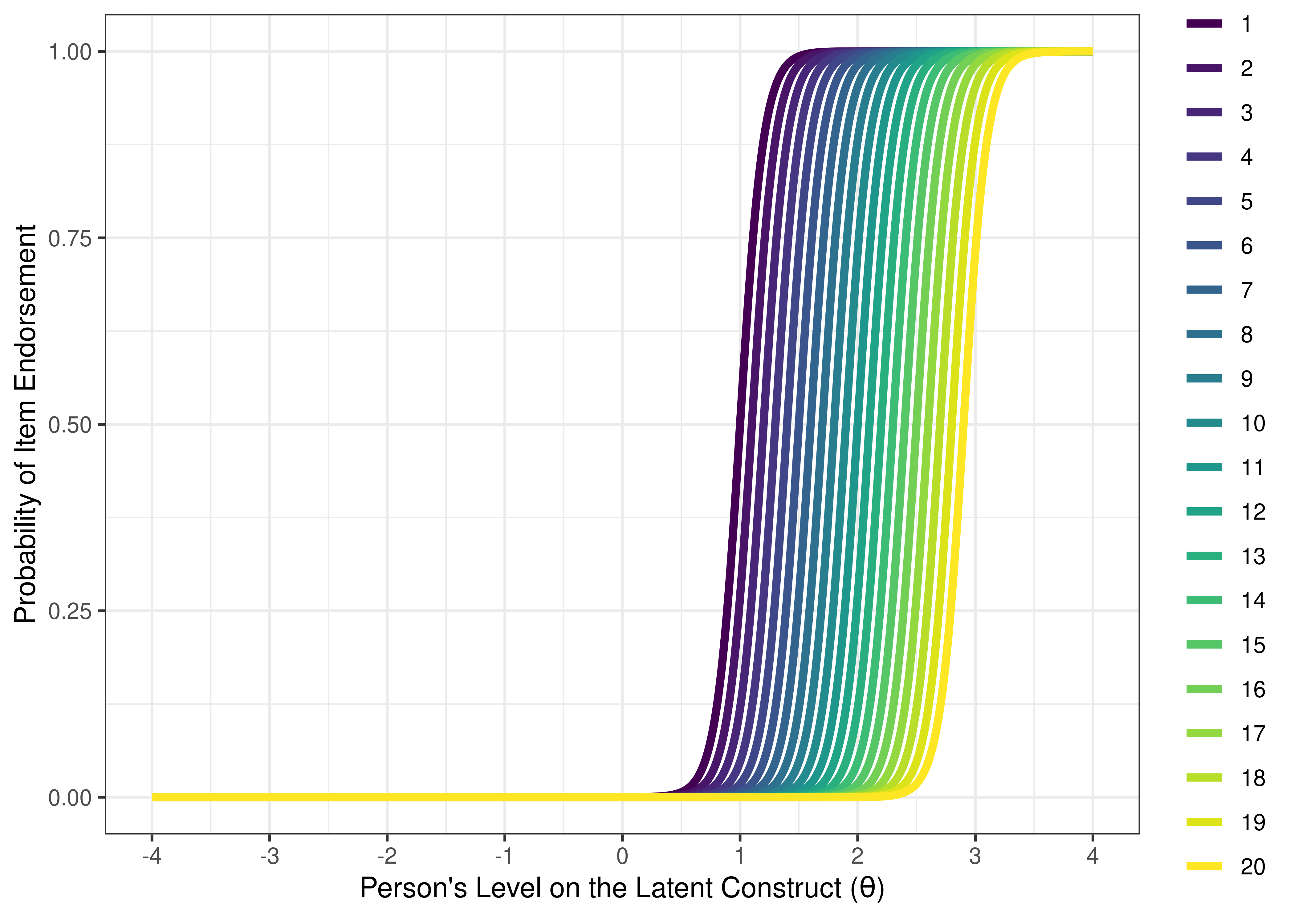

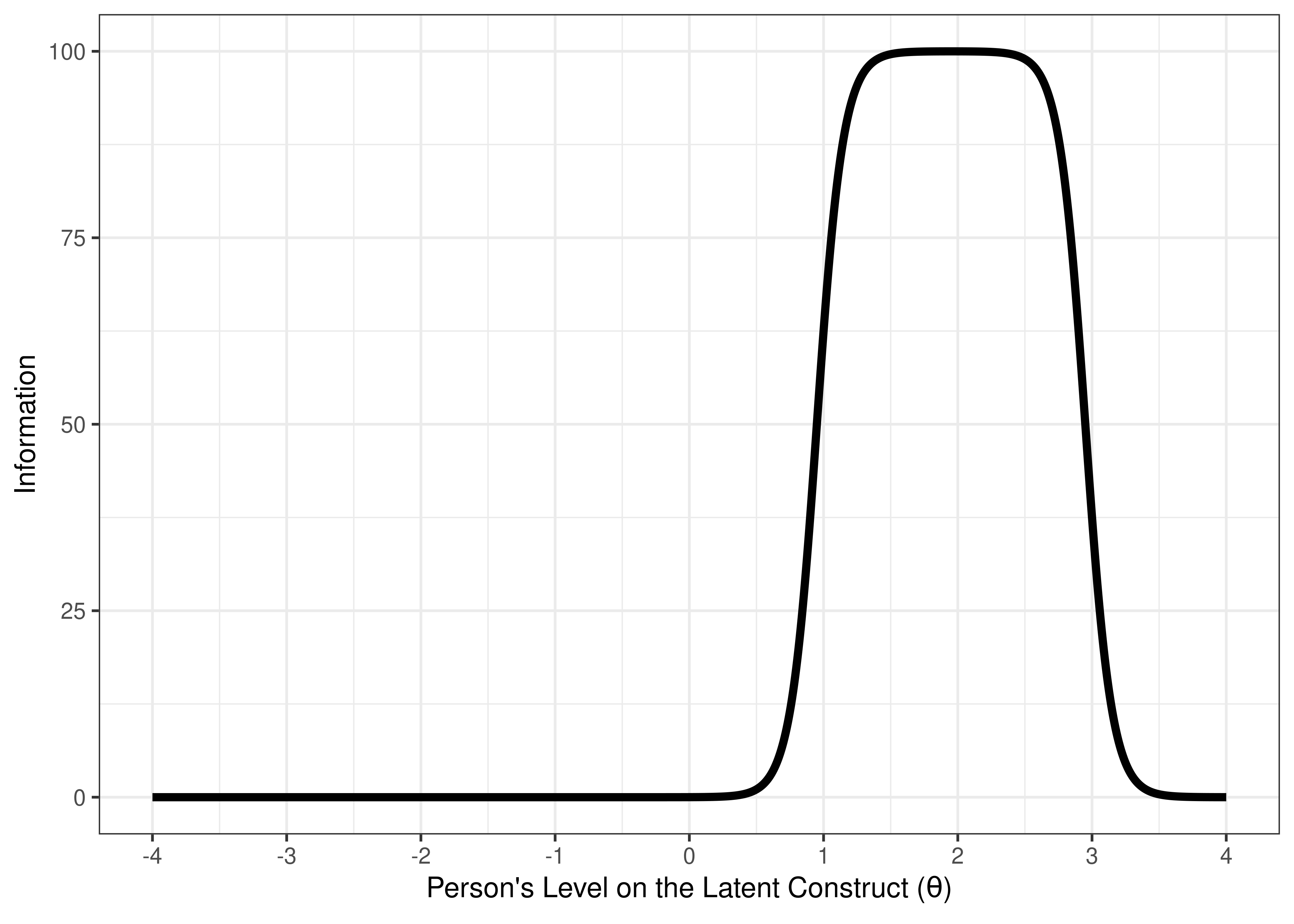

An example of a good measure for distinguishing clinical-range versus sub-clinical range is depicted in terms of ICCs in Figure 8.26 and in terms of test information in Figure 8.27. The measure is good for the intended purpose, in terms of having items that (a) span the range of interest (1–3 standard deviations above the mean of the latent construct), (b) have high discrimination and information, and (c) densely cover the range of interest without redundancy.

Figure 8.26: Visual Representation of a Good Measure (For Distinguishing Clinical-Range Versus Sub-clinical Range) Based on Item Characteristic Curves of Items From a Good Measure Estimated From Two-Parameter Logistic Model in Item Response Theory.

Figure 8.27: Visual Representation of a Good Measure (For Distinguishing Clinical-Range Versus Sub-clinical Range) Based on the Test Information Curve.

8.1.8 Assumptions of IRT

IRT has several assumptions:

- monotonicity

- unidimensionality

- item invariance

- local independence

8.1.8.1 Monotonicity

The monotonicity assumption holds that a person’s probability of endorsing a higher level on the item increases as a person’s level on the latent construct increases.

For instance, for each item assessing externalizing problems, as a child increases in their level of externalizing problems, they are expected to be rated with a higher level on that item.

Monotonicity can be evaluated in multiple ways.

For instance, monotonicity can be evaluated using visual inspection of empirical item characteristic curves.

Another way to evaluate monotonicity is with Mokken scale analysis, such as using the mokken package in R.

8.1.8.2 Unidimensionality

The unidimensionality assumption holds that the items have one predominant dimension, which reflects the underlying (latent) construct. The dimensionality of a set of items can be evaluated using factor analysis. Although items that are intended to assess a given latent latent construct are expected to be unidimensional, models have been developed that allow multiple latent dimensions, as shown in Section 8.6. These multidimensional IRT models allow borrowing information from a given latent factor in the estimation of other latent factor(s) to account for the covariation.

8.1.8.3 Item Invariance

The item invariance assumption holds that the items function similarly (i.e., have the same parameters) for all people and subgroups in the population. The extent to which items may violate the item invariance assumption can be evaluated empirically using tests of differential item functioning (DIF). Tests of measurement invariance are the equivalent of tests of differential item functioning for factor analysis/structural equation models. Test of differential item functioning and measurement invariance are described in the chapter on test bias.

8.1.8.4 Local Independence

The local independence assumptions holds that the items are uncorrelated when controlling for the latent dimension. That is, IRT models assume that the items’ errors (residuals) are uncorrelated with each other. Factor analysis and structural equation models can relax this assumption and allow items’ error terms to correlate with each other.

8.2 Getting Started

8.2.1 Load Libraries

Code

library("petersenlab") #to install: install.packages("remotes"); remotes::install_github("DevPsyLab/petersenlab")

library("mirt")

library("lavaan")

library("semTools")

library("semPlot")

library("lme4")

library("BifactorIndicesCalculator") #to install: install.packages("remotes"); remotes::install_github("ddueber/BifactorIndicesCalculator")

library("MOTE")

library("tidyverse")

library("here")

library("tinytex")8.2.2 Load Data

LSAT7 is a data set from the mirt package (Chalmers, 2020) that contains five items from the Law School Admissions Test.

SAT12 is a data set from the mirt package (Chalmers, 2020) that contains 32 items for a grade 12 science assessment test (SAT) measuring topics of chemistry, biology, and physics.

8.2.4 Descriptive Statistics

$overall

N mean_total.score sd_total.score ave.r sd.r alpha SEM.alpha

1000 3.707 1.199 0.143 0.052 0.453 0.886

$itemstats

N K mean sd total.r total.r_if_rm alpha_if_rm

Item.1 1000 2 0.828 0.378 0.530 0.246 0.396

Item.2 1000 2 0.658 0.475 0.600 0.247 0.394

Item.3 1000 2 0.772 0.420 0.611 0.313 0.345

Item.4 1000 2 0.606 0.489 0.592 0.223 0.415

Item.5 1000 2 0.843 0.364 0.461 0.175 0.438

$proportions

0 1

Item.1 0.172 0.828

Item.2 0.342 0.658

Item.3 0.228 0.772

Item.4 0.394 0.606

Item.5 0.157 0.843

$total.score_frequency

0 1 2 3 4 5

Freq 12 40 114 205 321 308

$total.score_means

0 1

Item.1 2.313953 3.996377

Item.2 2.710526 4.224924

Item.3 2.359649 4.104922

Item.4 2.827411 4.278878

Item.5 2.426752 3.945433

$total.score_sds

0 1

Item.1 1.162389 0.9841483

Item.2 1.058885 0.9038319

Item.3 1.087593 0.9043068

Item.4 1.103158 0.8661396

Item.5 1.177807 1.04158778.3 Comparison of Scoring Approaches

A measure that is a raw symptom count (i.e., a count of how many symptoms a person endorses) is low in precision and has a high standard error of measurement. Some diagnostic measures provide an ordinal response scale for each symptom. For example, the Structured Clinical Interview of Mental Disorders (SCID) provides a response scale from 0 to 2, where 0 = the symptom is absent, 1 = the symptom is sub-threshold, and 2 = the symptom is present. If your measure was a raw symptom sum, as opposed to a count of how many symptoms were present, the measure would be slightly more precise and have a somewhat smaller standard error of measurement.

A weighted symptom sum is the classical test theory analog of IRT. In classical test theory, proportion correct (or endorsed) would correspond to item difficulty and the item–total correlation (i.e., a point-biserial correlation) would correspond to item discrimination. If we were to compute a weighted sum of each item according to its strength of association with the construct (i.e., the item–total correlation), this measure would be somewhat more precise than the raw symptom sum, but it is not a latent variable method.

In IRT analysis, the weight for each item influences the estimate of a person’s level on the construct. IRT down-weights the poorly discriminating items and up-weights the strongly discriminating items. This leads to greater precision and a lower standard error of measurement than non-latent scoring approaches.

According to Embretson (1996), many perspectives have changed because of IRT. First, according to classical test theory, longer tests are more reliable than shorter tests, as described in Section 4.5.5.4 in the chapter on reliability. However, according to IRT, shorter tests (i.e., tests with fewer items) can be more reliable than longer tests. Item selection using IRT can lead to briefer assessments that have greater reliability than longer scales. For example, adaptive tests that tailor the difficulty of the items to the ability level of the participant.

Second, in classical test theory, a score’s meaning is tied to its location in a distribution (i.e., the norm-referenced standard). In IRT, however, the people and items are calibrated on a common scale. Based on a child’s IRT-estimated ability level (i.e., level on the construct), we can have a better sense of what the child knows and does not know, because it indicates the difficulty level at which they would tend to get items correct 50% of the time; the person would likely fail items with a higher difficulty compared to this level, whereas the person would likely pass items with a lower difficulty compared to this level. Consider Binet’s distribution of ability that arranges the items from easiest to most difficult. Based on the item difficulty and content of the items and the child’s performance, we can have a better indication that a child can perform items successfully in a particular range (e.g., count to 10) but might not be able to perform more difficult items (e.g., tie their shoes). From an intervention perspective, this would allow working in the “window of opportunity” or the zone of proximal development. Thus, IRT can provide more meaningful understanding of a person’s ability compared to traditional classical test theory interpretations such as the child being at the “63rd percentile” for a child of their age, which lacks conceptual meaning.

According to Cooper & Balsis (2009), our current diagnostic system relies heavily on how many symptoms a person endorses as an index of severity, but this assumes that all symptom endorsements have the same overall weight (severity). Using IRT, we can determine the relative severity of each item (symptom)—and it is clear that some symptoms indicate more severity than others. From this analysis, a respondent can endorse fewer, more severe items, and have overall more severe psychopathology than an individual who endorses more, less severe items. Basically, not all items are equally severe—know your items!

8.4 Rasch Model (1-Parameter Logistic)

A one-parameter logistic (1PL) item response theory (IRT) model is a model fit to dichotomous data, which estimates a different difficulty (\(b\)) parameter for each item.

Discrimination (\(a\)) is not estimated (i.e., it is fixed at the same value—one—across items).

Rasch models were fit using the mirt package (Chalmers, 2020).

8.4.2 Model Summary

F1 h2

Item.1 0.261

Item.2 0.261

Item.3 0.261

Item.4 0.261

Item.5 0.261

SE.F1

Item.1

Item.2

Item.3

Item.4

Item.5

SS loadings: 0

Proportion Var: 0

Factor correlations:

F1

F1 1$items

a b g u

Item.1 1 -1.868 0 1

Item.2 1 -0.791 0 1

Item.3 1 -1.461 0 1

Item.4 1 -0.521 0 1

Item.5 1 -1.993 0 1

$means

F1

0

$cov

F1

F1 1.0228.4.3 Factor Scores

One can obtain factor scores (i.e., theta; and their associated standard errors) for each participant using the fscores() function.

8.4.4 Plots

8.4.4.1 Test Curves

The test curves suggest that the measure is most reliable (i.e., provides the most information has the smallest standard error of measurement) at lower levels of the construct.

8.4.4.1.1 Test Characteristic Curve

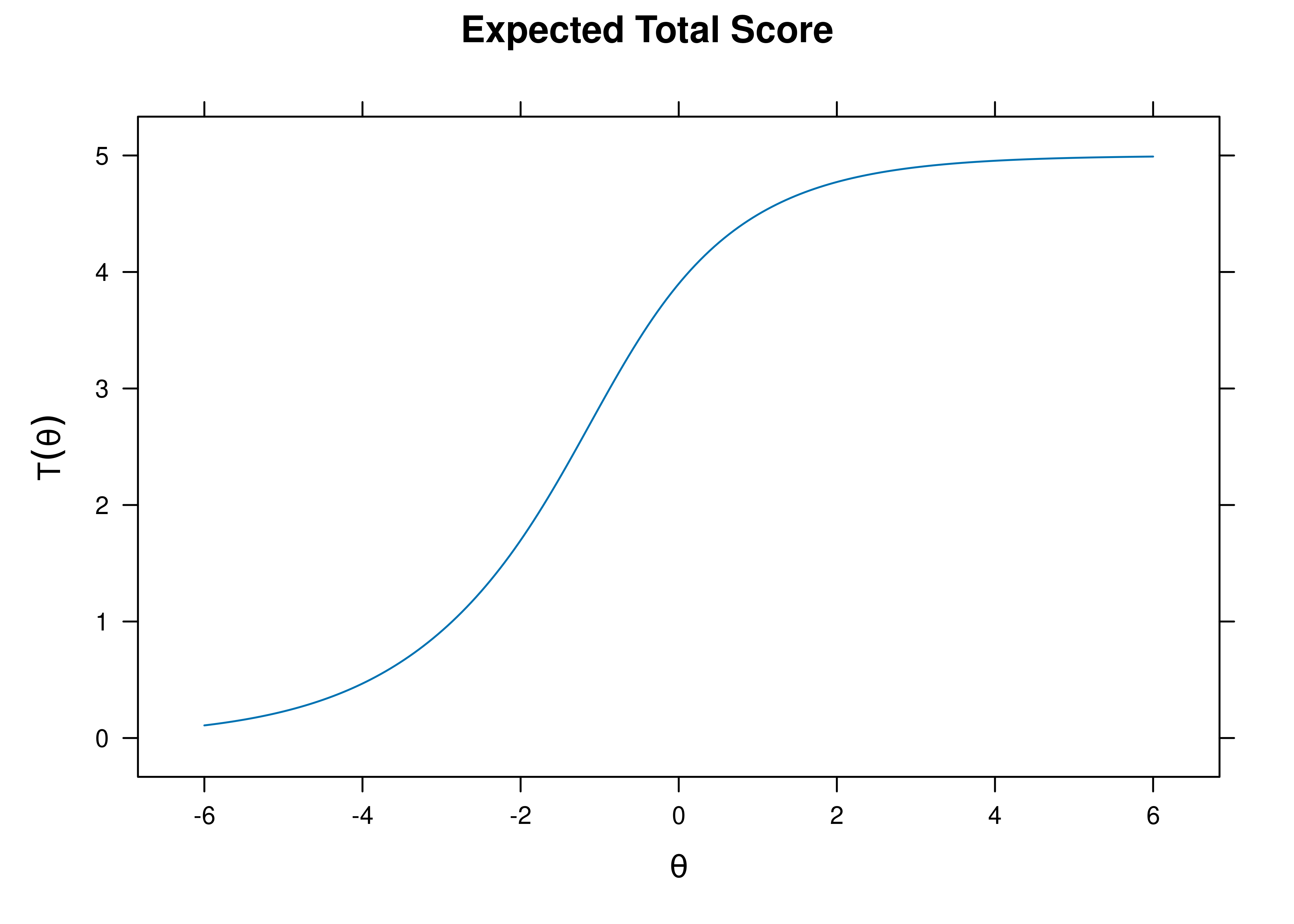

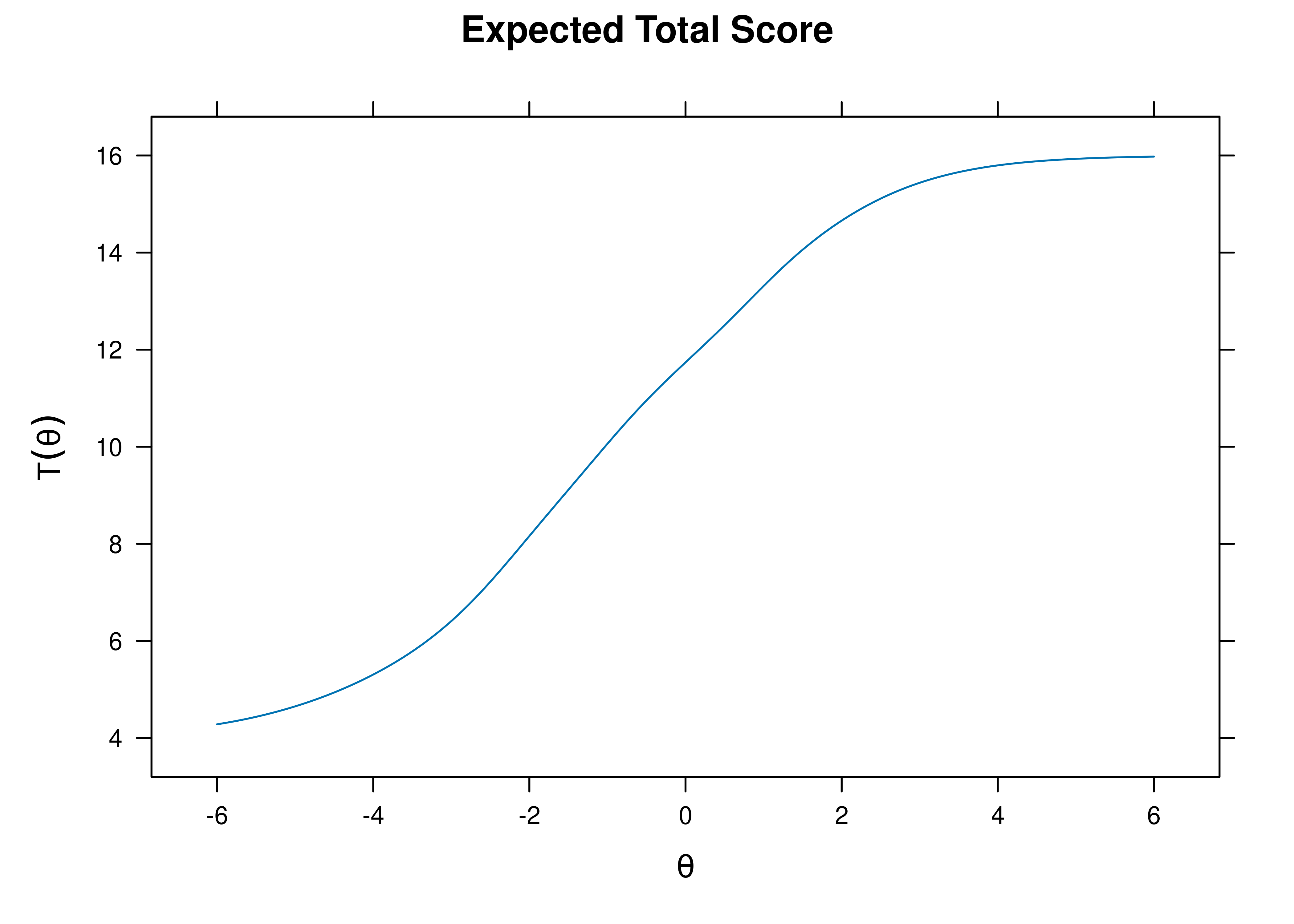

A test characteristic curve (TCC) plot of the expected total score as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.28.

Figure 8.28: Test Characteristic Curve From Rasch Item Response Theory Model.

8.4.4.1.2 Test Information Curve

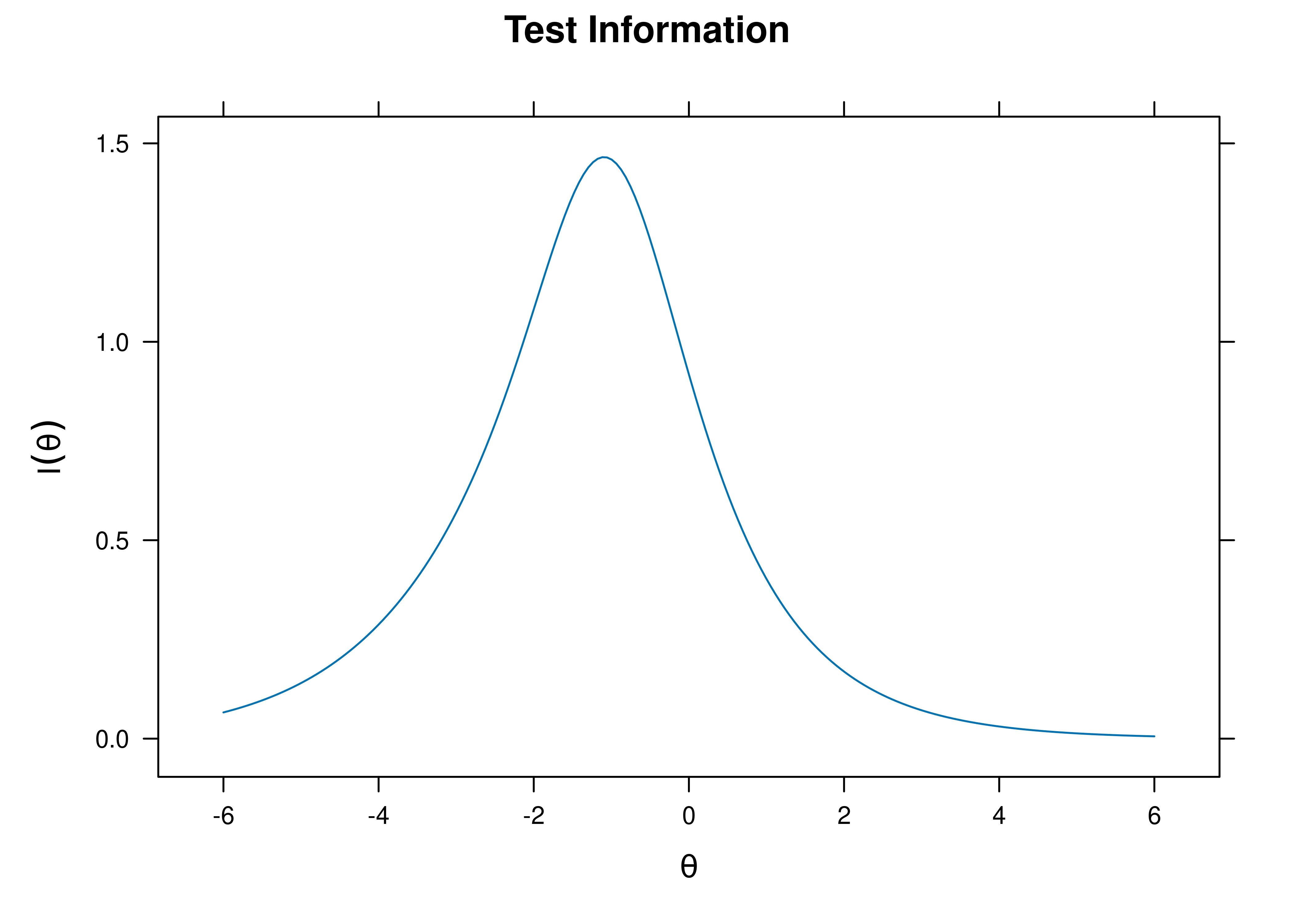

A plot of test information as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.29.

Figure 8.29: Test Information Curve From Rasch Item Response Theory Model.

8.4.4.1.3 Test Reliability

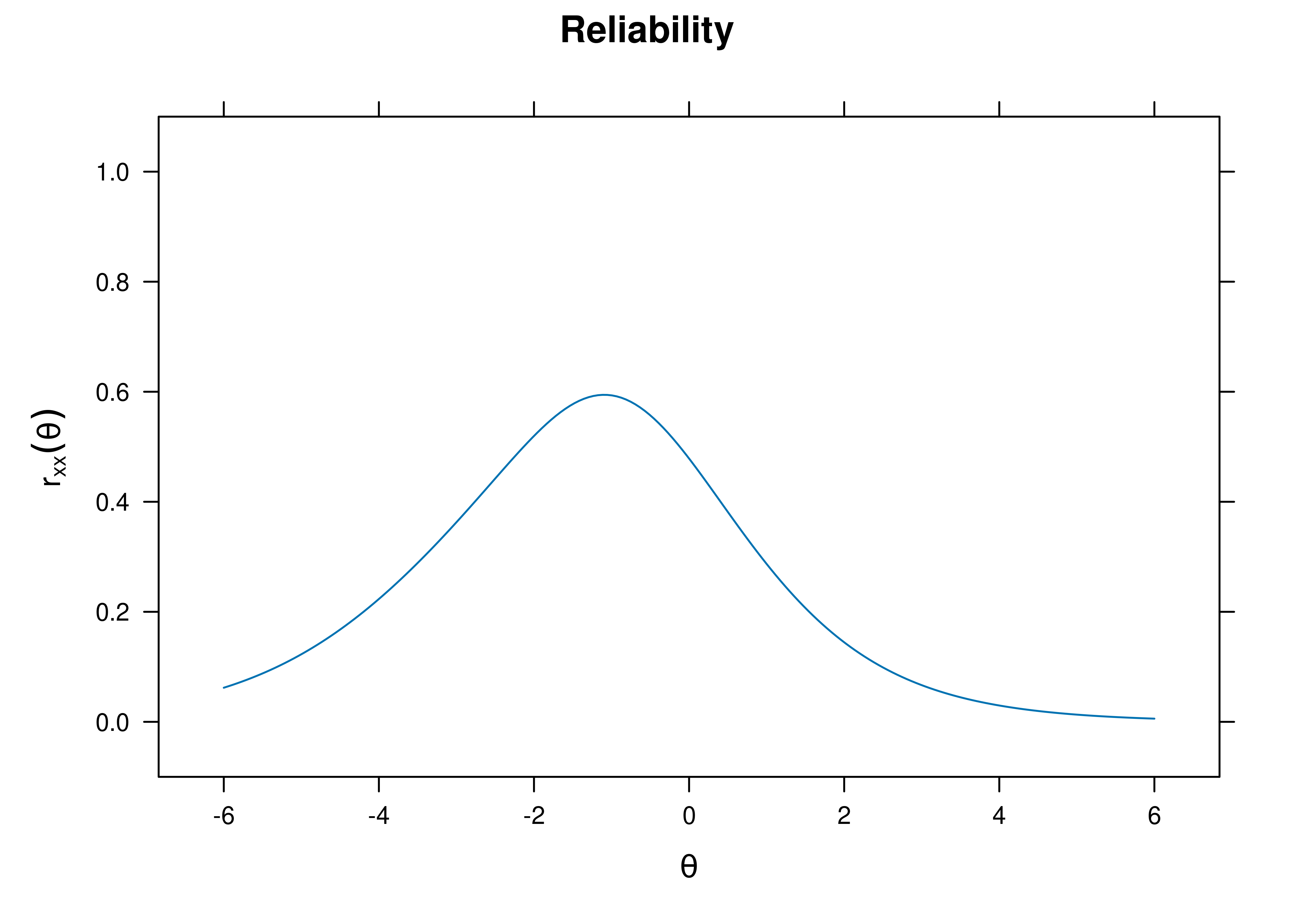

The estimate of marginal reliability is below:

[1] 0.4155251A plot of test reliability as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.30.

Figure 8.30: Test Reliability From Rasch Item Response Theory Model.

8.4.4.1.4 Test Standard Error of Measurement

A plot of test standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.31.

Figure 8.31: Test Standard Error of Measurement From Rasch Item Response Theory Model.

8.4.4.1.5 Test Information Curve and Test Standard Error of Measurement

A plot of test information and standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.32.

Figure 8.32: Test Information Curve and Standard Error of Measurement From Rasch Item Response Theory Model.

8.4.4.2 Item Curves

8.4.4.2.1 Item Characteristic Curves

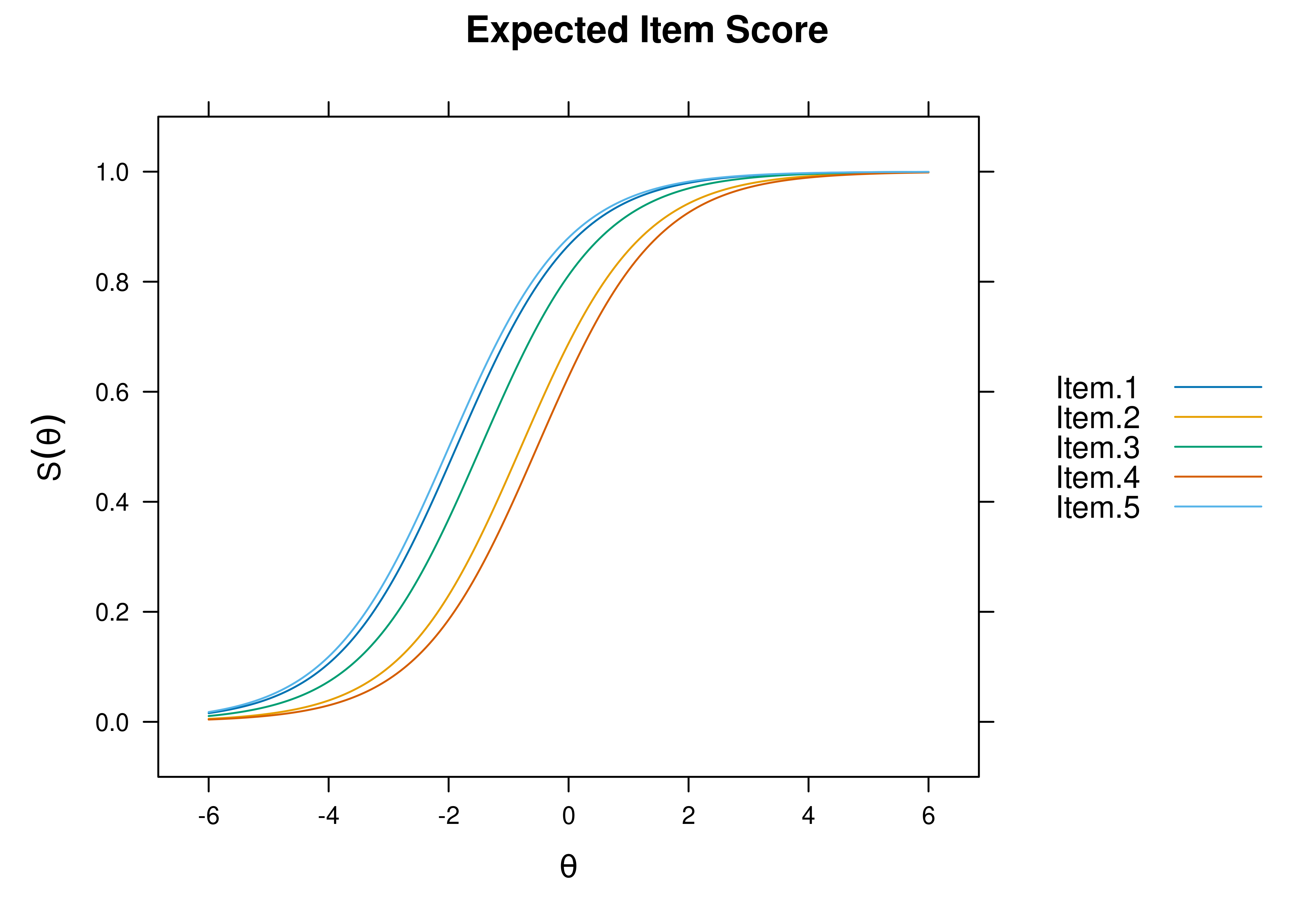

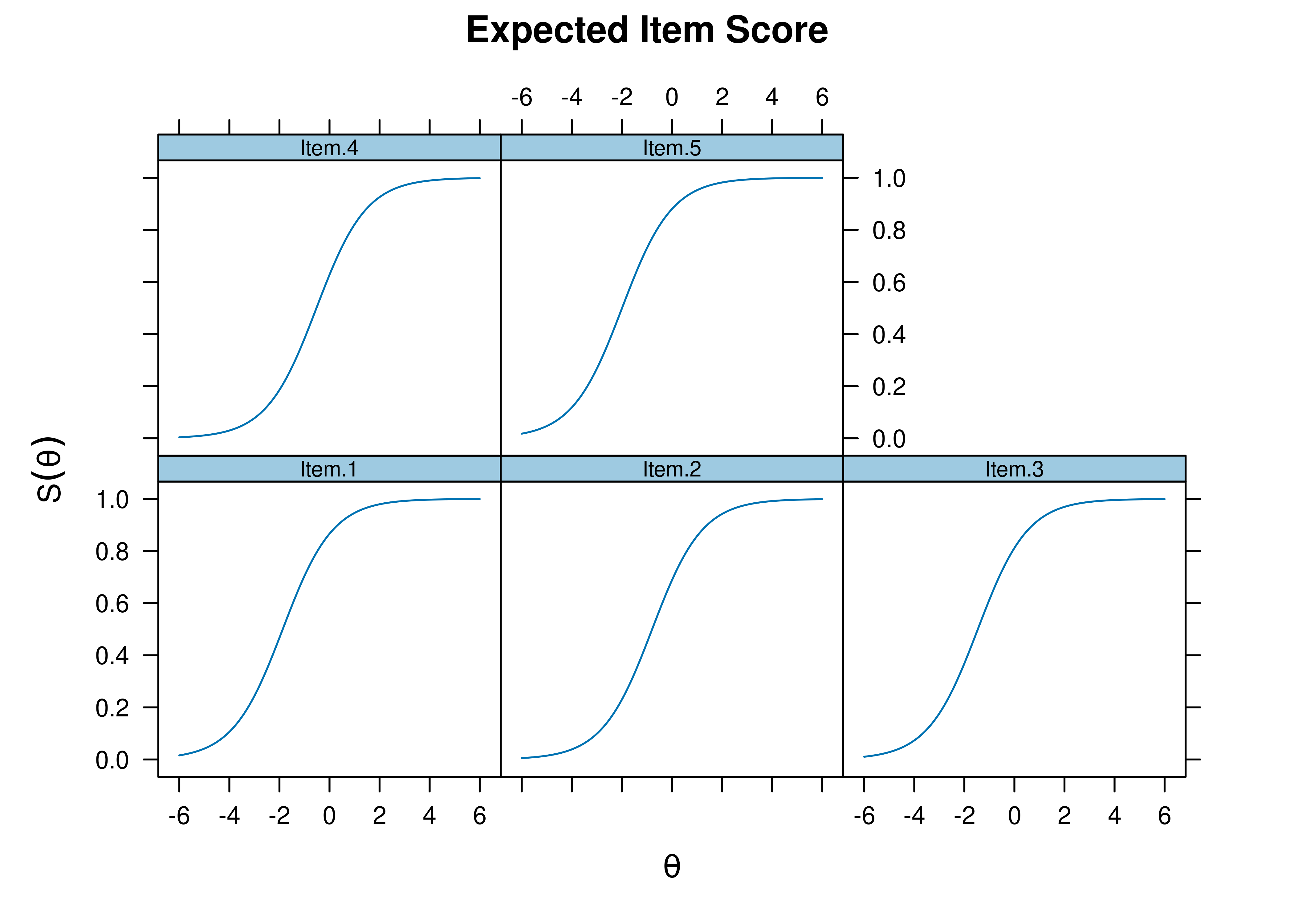

Item characteristic curve (ICC) plots of the probability of item endorsement (or getting the item correct) as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.33 and 8.34.

Figure 8.33: Item Characteristic Curves From Rasch Item Response Theory Model.

Figure 8.34: Item Characteristic Curves From Rasch Item Response Theory Model.

8.4.4.2.2 Item Information Curves

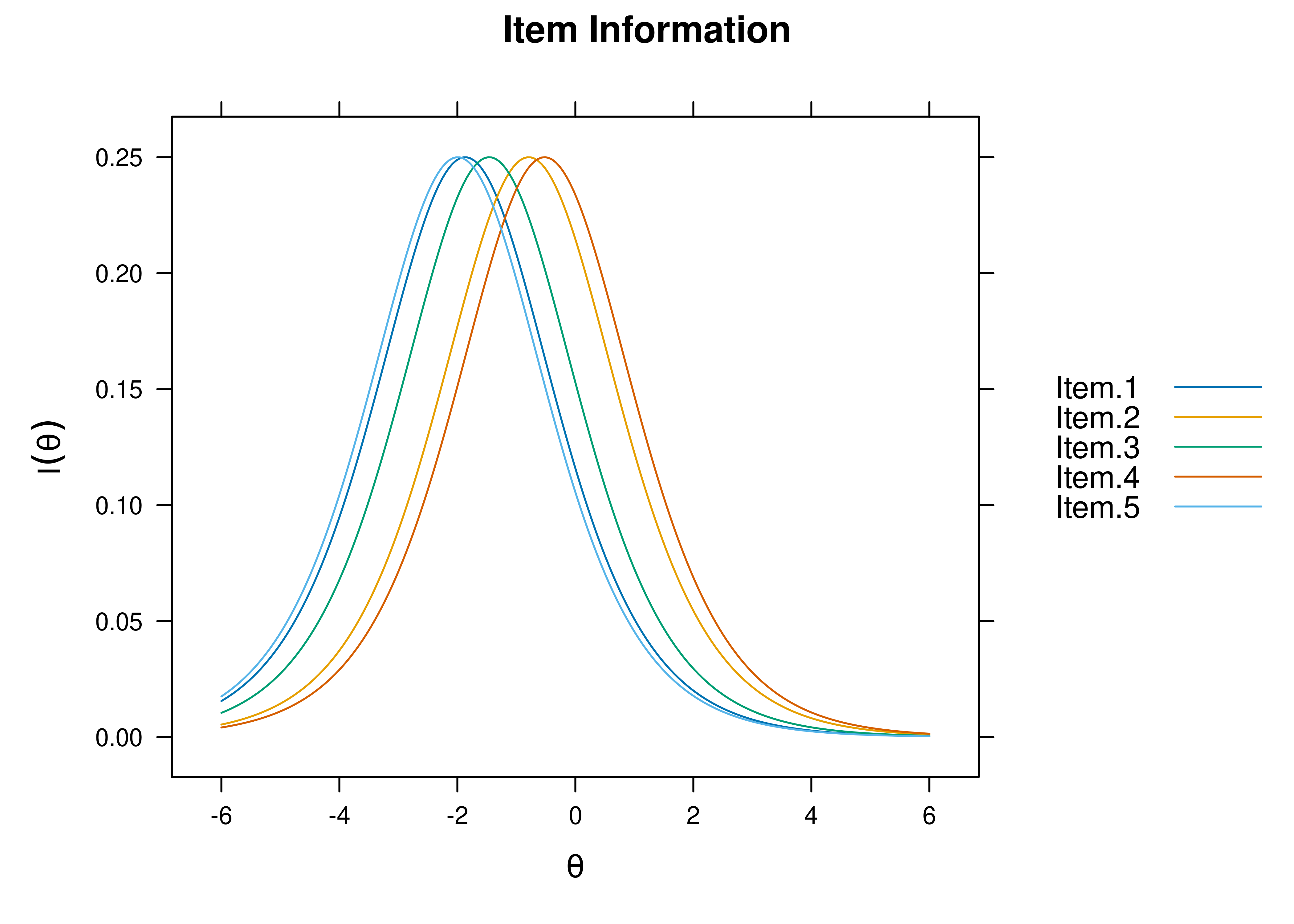

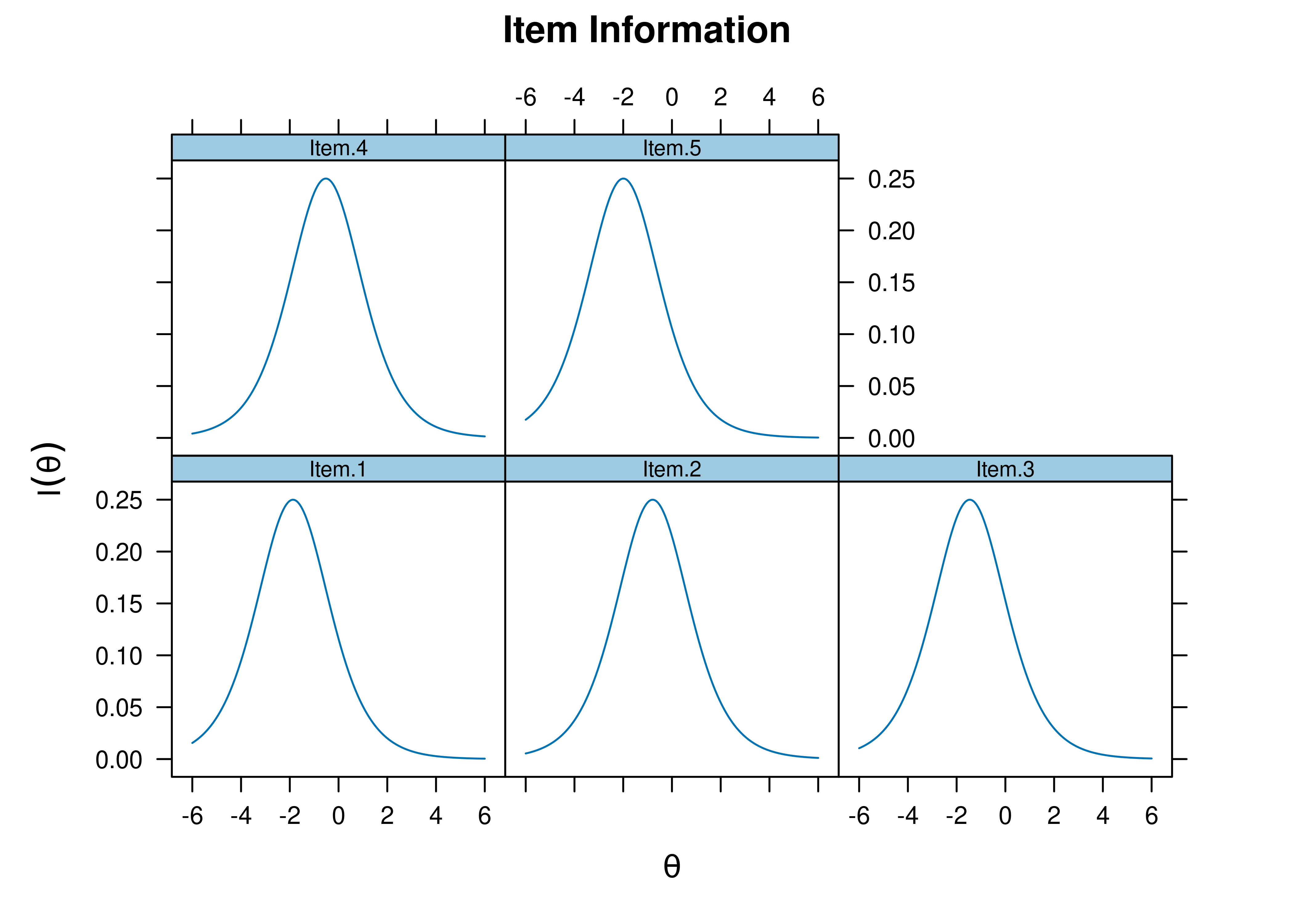

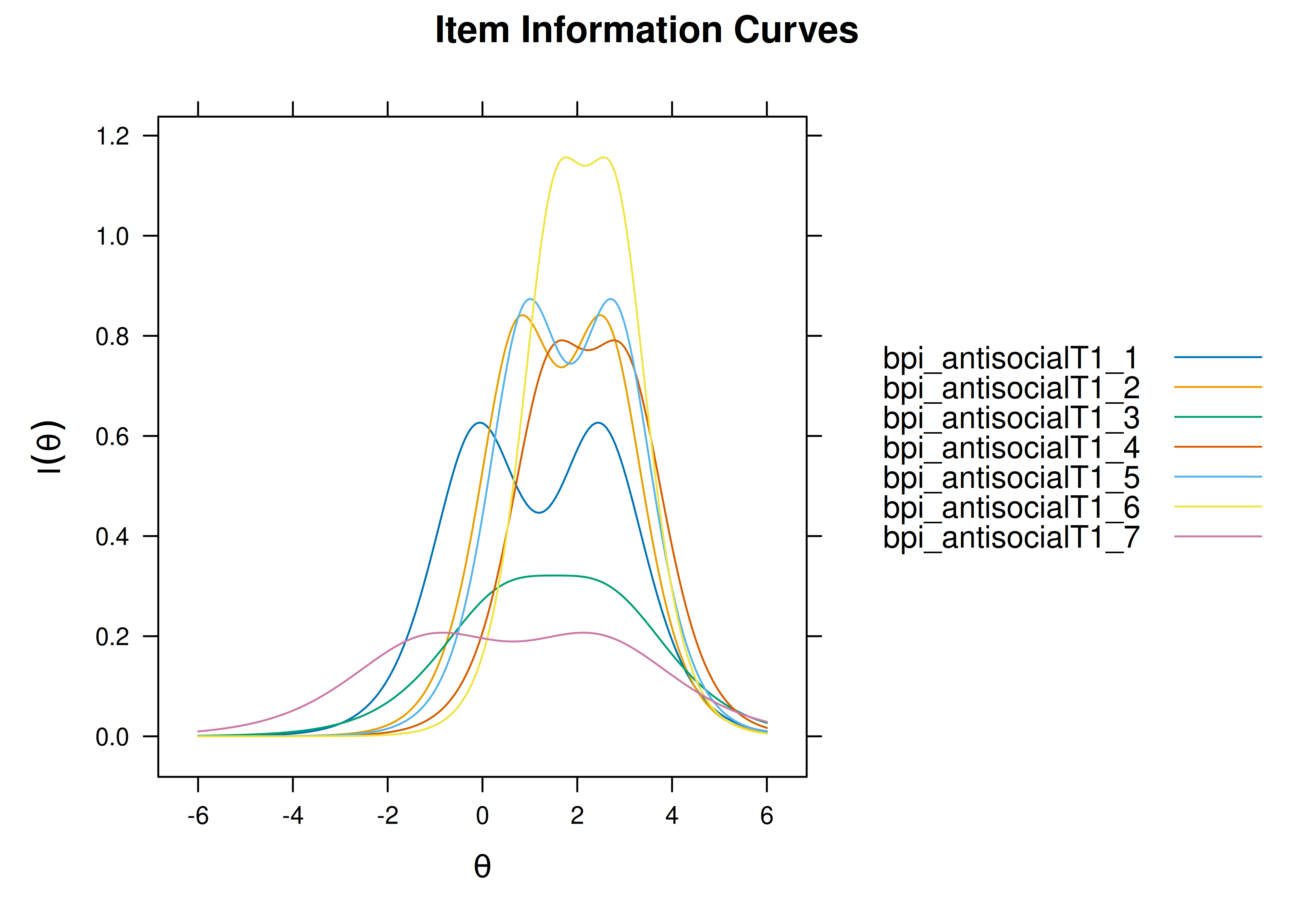

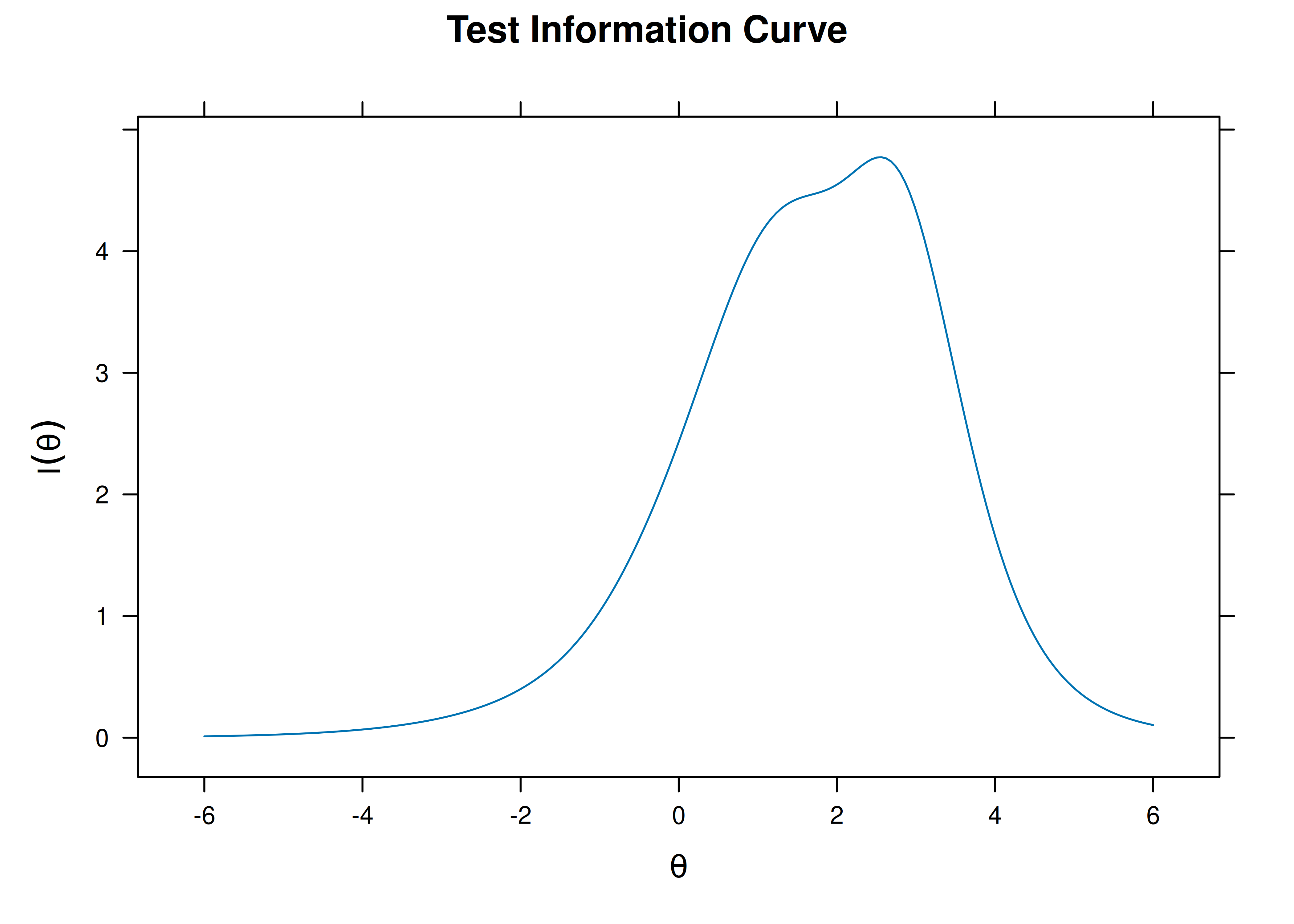

Plots of item information as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.35 and 8.36.

Figure 8.35: Item Information Curves from Rasch Item Response Theory Model.

Figure 8.36: Item Information Curves from Rasch Item Response Theory Model.

8.4.5 CFA

A one-parameter logistic model can also be fit in a CFA framework, sometimes called item factor analysis.

The item factor analysis models were fit in the lavaan package (Rosseel et al., 2022).

Code

onePLModel_cfa <- '

# Factor Loadings (i.e., discrimination parameters)

latent =~ loading*Item.1 + loading*Item.2 + loading*Item.3 +

loading*Item.4 + loading*Item.5

# Item Thresholds (i.e., difficulty parameters)

Item.1 | threshold1*t1

Item.2 | threshold2*t1

Item.3 | threshold3*t1

Item.4 | threshold4*t1

Item.5 | threshold5*t1

'

onePLModel_cfa_fit = sem(

model = onePLModel_cfa,

data = mydataIRT,

ordered = c("Item.1", "Item.2", "Item.3", "Item.4", "Item.5"),

mimic = "Mplus",

estimator = "WLSMV",

std.lv = TRUE,

parameterization = "theta")

summary(

onePLModel_cfa_fit,

fit.measures = TRUE,

rsquare = TRUE,

standardized = TRUE)lavaan 0.6-20 ended normally after 13 iterations

Estimator DWLS

Optimization method NLMINB

Number of model parameters 10

Number of equality constraints 4

Number of observations 1000

Model Test User Model:

Standard Scaled

Test Statistic 22.305 24.361

Degrees of freedom 9 9

P-value (Chi-square) 0.008 0.004

Scaling correction factor 0.926

Shift parameter 0.283

simple second-order correction (WLSMV)

Model Test Baseline Model:

Test statistic 244.385 228.667

Degrees of freedom 10 10

P-value 0.000 0.000

Scaling correction factor 1.072

User Model versus Baseline Model:

Comparative Fit Index (CFI) 0.943 0.930

Tucker-Lewis Index (TLI) 0.937 0.922

Robust Comparative Fit Index (CFI) 0.895

Robust Tucker-Lewis Index (TLI) 0.884

Root Mean Square Error of Approximation:

RMSEA 0.038 0.041

90 Percent confidence interval - lower 0.019 0.022

90 Percent confidence interval - upper 0.059 0.061

P-value H_0: RMSEA <= 0.050 0.808 0.738

P-value H_0: RMSEA >= 0.080 0.000 0.000

Robust RMSEA 0.080

90 Percent confidence interval - lower 0.038

90 Percent confidence interval - upper 0.122

P-value H_0: Robust RMSEA <= 0.050 0.106

P-value H_0: Robust RMSEA >= 0.080 0.539

Standardized Root Mean Square Residual:

SRMR 0.065 0.065

Parameter Estimates:

Parameterization Theta

Standard errors Robust.sem

Information Expected

Information saturated (h1) model Unstructured

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

latent =~

Item.1 (ldng) 0.599 0.038 15.831 0.000 0.599 0.514

Item.2 (ldng) 0.599 0.038 15.831 0.000 0.599 0.514

Item.3 (ldng) 0.599 0.038 15.831 0.000 0.599 0.514

Item.4 (ldng) 0.599 0.038 15.831 0.000 0.599 0.514

Item.5 (ldng) 0.599 0.038 15.831 0.000 0.599 0.514

Thresholds:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Itm.1|1 (thr1) -1.103 0.056 -19.666 0.000 -1.103 -0.946

Itm.2|1 (thr2) -0.474 0.048 -9.826 0.000 -0.474 -0.407

Itm.3|1 (thr3) -0.869 0.052 -16.706 0.000 -0.869 -0.745

Itm.4|1 (thr4) -0.313 0.047 -6.678 0.000 -0.313 -0.269

Itm.5|1 (thr5) -1.174 0.058 -20.092 0.000 -1.174 -1.007

Variances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

.Item.1 1.000 1.000 0.736

.Item.2 1.000 1.000 0.736

.Item.3 1.000 1.000 0.736

.Item.4 1.000 1.000 0.736

.Item.5 1.000 1.000 0.736

latent 1.000 1.000 1.000

Scales y*:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Item.1 0.858 0.858 1.000

Item.2 0.858 0.858 1.000

Item.3 0.858 0.858 1.000

Item.4 0.858 0.858 1.000

Item.5 0.858 0.858 1.000

R-Square:

Estimate

Item.1 0.264

Item.2 0.264

Item.3 0.264

Item.4 0.264

Item.5 0.264Code

chisq df pvalue baseline.chisq baseline.df

22.305 9.000 0.008 244.385 10.000

baseline.pvalue rmsea cfi tli srmr

0.000 0.038 0.943 0.937 0.065 $type

[1] "cor.bollen"

$cov

Item.1 Item.2 Item.3 Item.4 Item.5

Item.1 0.000

Item.2 -0.038 0.000

Item.3 0.026 0.168 0.000

Item.4 0.032 -0.061 0.012 0.000

Item.5 0.022 -0.129 0.001 -0.104 0.000

$mean

Item.1 Item.2 Item.3 Item.4 Item.5

0 0 0 0 0

$th

Item.1|t1 Item.2|t1 Item.3|t1 Item.4|t1 Item.5|t1

0 0 0 0 0 latent

0.467 latent

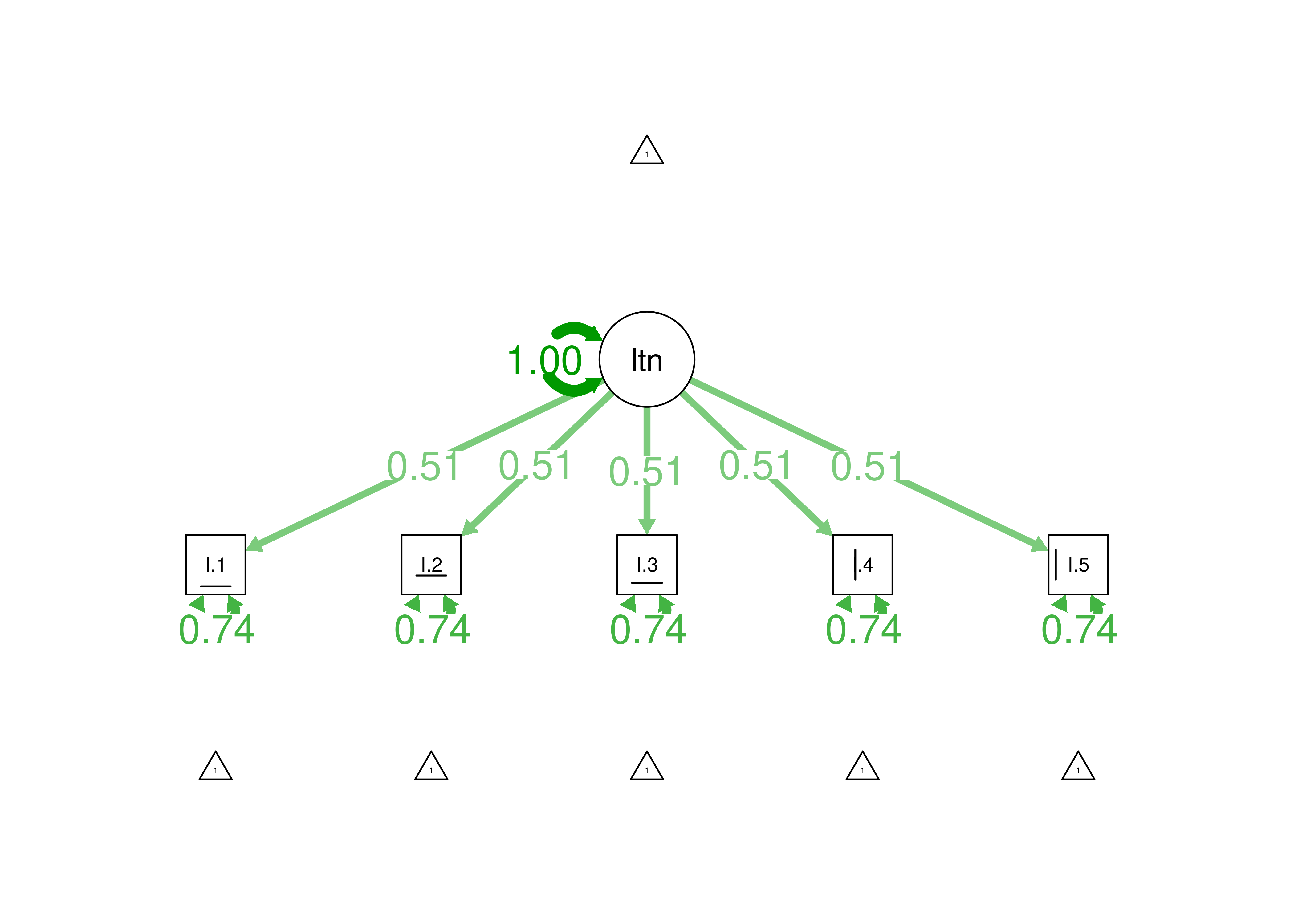

0.264 A path diagram of the one-parameter item factor analysis is in Figure 8.37.

Figure 8.37: Item Factor Analysis Diagram of One-Parameter Logistic Model.

8.4.6 Mixed Model

A Rasch model can also be fit in a mixed model framework.

The Rasch model below was fit using the lme4 package (Bates et al., 2022).

First, we convert the data from wide form to long form for the mixed model:

Code

Then, we can estimate the Rasch model using a logit or probit link:

Code

Generalized linear mixed model fit by maximum likelihood (Laplace

Approximation) [glmerMod]

Family: binomial ( logit )

Formula: response ~ -1 + item + (1 | ID)

Data: mydataIRT_long

AIC BIC logLik -2*log(L) df.resid

5354.5 5393.6 -2671.3 5342.5 4994

Scaled residuals:

Min 1Q Median 3Q Max

-2.7785 -0.6631 0.3599 0.5639 1.5080

Random effects:

Groups Name Variance Std.Dev.

ID (Intercept) 0.9057 0.9517

Number of obs: 5000, groups: ID, 1000

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

itemItem.1 1.85118 0.09903 18.693 < 2e-16 ***

itemItem.2 0.78581 0.08025 9.792 < 2e-16 ***

itemItem.3 1.45004 0.09015 16.085 < 2e-16 ***

itemItem.4 0.51687 0.07787 6.637 3.2e-11 ***

itemItem.5 1.97373 0.10221 19.310 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

itmI.1 itmI.2 itmI.3 itmI.4

itemItem.2 0.173

itemItem.3 0.192 0.178

itemItem.4 0.158 0.169 0.166

itemItem.5 0.192 0.170 0.191 0.155 Code

Generalized linear mixed model fit by maximum likelihood (Laplace

Approximation) [glmerMod]

Family: binomial ( probit )

Formula: response ~ -1 + item + (1 | ID)

Data: mydataIRT_long

AIC BIC logLik -2*log(L) df.resid

5362.0 5401.1 -2675.0 5350.0 4994

Scaled residuals:

Min 1Q Median 3Q Max

-2.7185 -0.6990 0.3660 0.5725 1.4276

Random effects:

Groups Name Variance Std.Dev.

ID (Intercept) 0.2992 0.547

Number of obs: 5000, groups: ID, 1000

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

itemItem.1 1.10493 0.05634 19.612 < 2e-16 ***

itemItem.2 0.47513 0.04801 9.896 < 2e-16 ***

itemItem.3 0.87437 0.05262 16.615 < 2e-16 ***

itemItem.4 0.31178 0.04689 6.649 2.95e-11 ***

itemItem.5 1.16956 0.05739 20.379 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

itmI.1 itmI.2 itmI.3 itmI.4

itemItem.2 0.155

itemItem.3 0.176 0.158

itemItem.4 0.142 0.149 0.147

itemItem.5 0.180 0.154 0.175 0.141 One can extract item difficulty and people’s factor scores (i.e., theta), as adapted from James Uanhoro (https://www.jamesuanhoro.com/post/2018/01/02/using-glmer-to-perform-rasch-analysis/; archived at: https://perma.cc/84WP-TQBG):

Code

8.5 Two-Parameter Logistic Model

A two-parameter logistic (2PL) IRT model is a model fit to dichotomous data, which estimates a different difficulty (\(b\)) and discrimination (\(a\)) parameter for each item.

2PL models were fit using the mirt package (Chalmers, 2020).

8.5.2 Model Summary

F1 h2

Item.1 0.502 0.252

Item.2 0.536 0.287

Item.3 0.708 0.501

Item.4 0.410 0.168

Item.5 0.397 0.157

SE.F1

Item.1 0.067

Item.2 0.060

Item.3 0.066

Item.4 0.060

Item.5 0.069

SS loadings: 1.366

Proportion Var: 0.273

Factor correlations:

F1

F1 1$items

a b g u

Item.1 0.988 -1.879 0 1

Item.2 1.081 -0.748 0 1

Item.3 1.706 -1.058 0 1

Item.4 0.765 -0.635 0 1

Item.5 0.736 -2.520 0 1

$means

F1

0

$cov

F1

F1 18.5.4 Plots

8.5.4.1 Test Curves

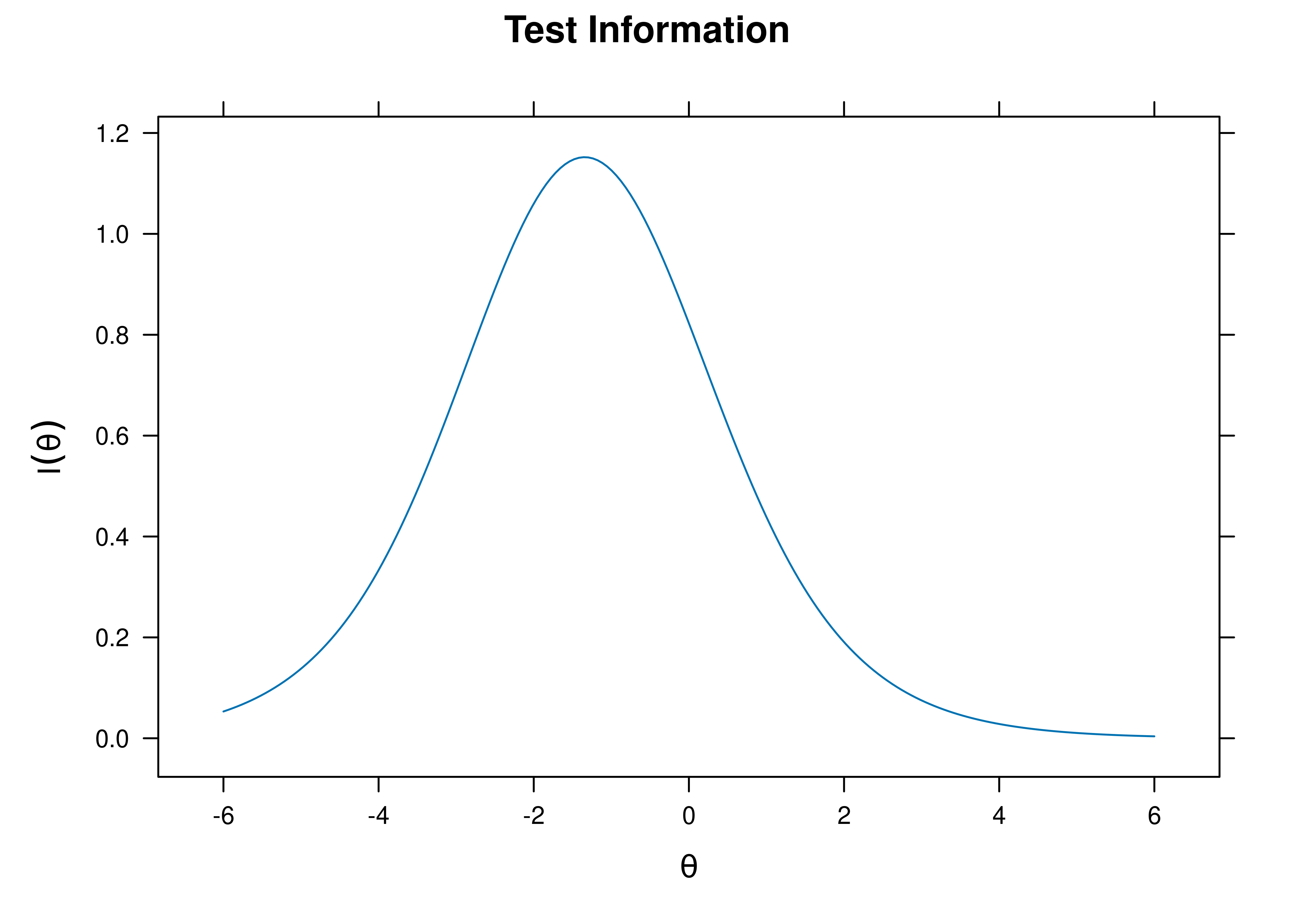

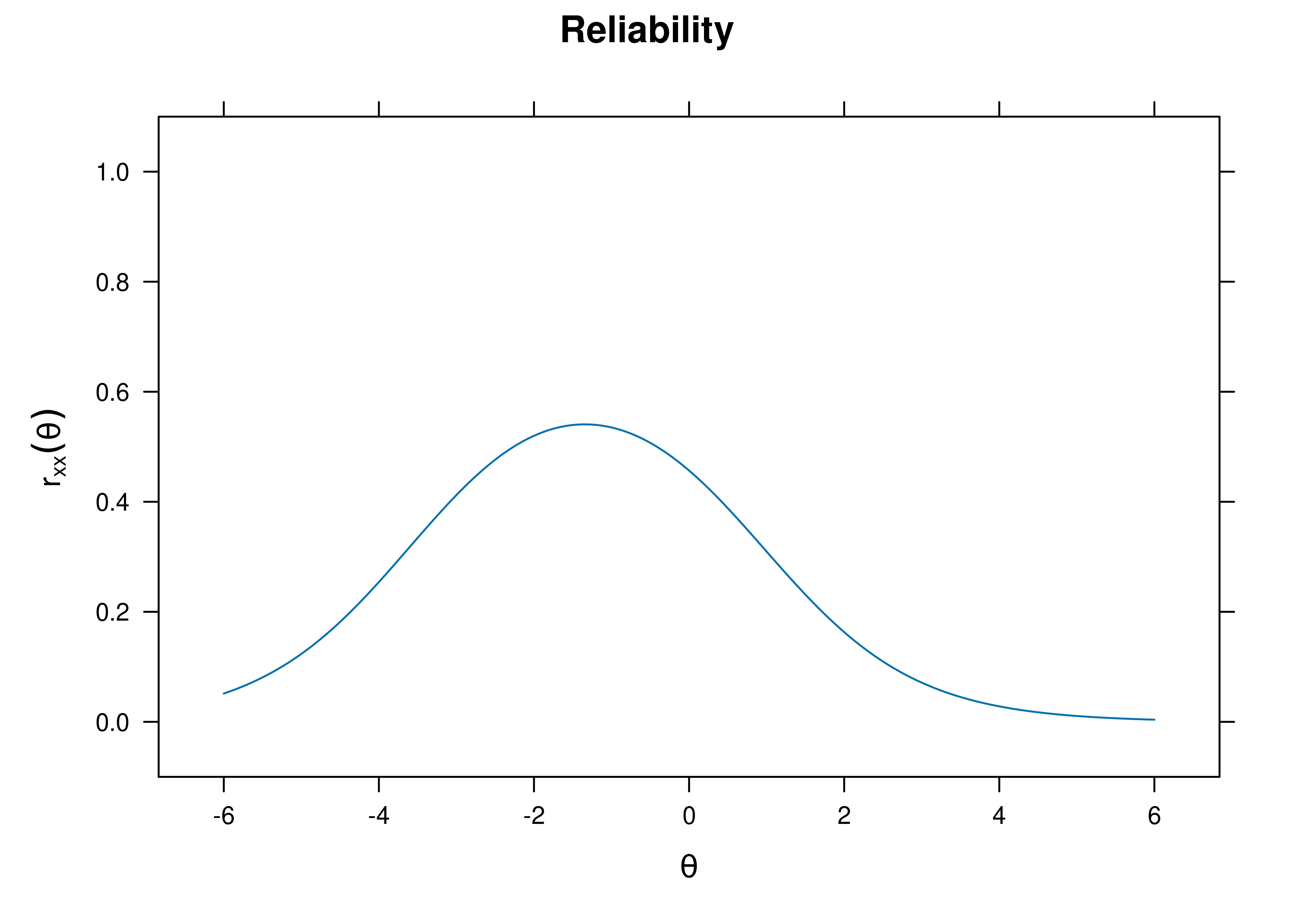

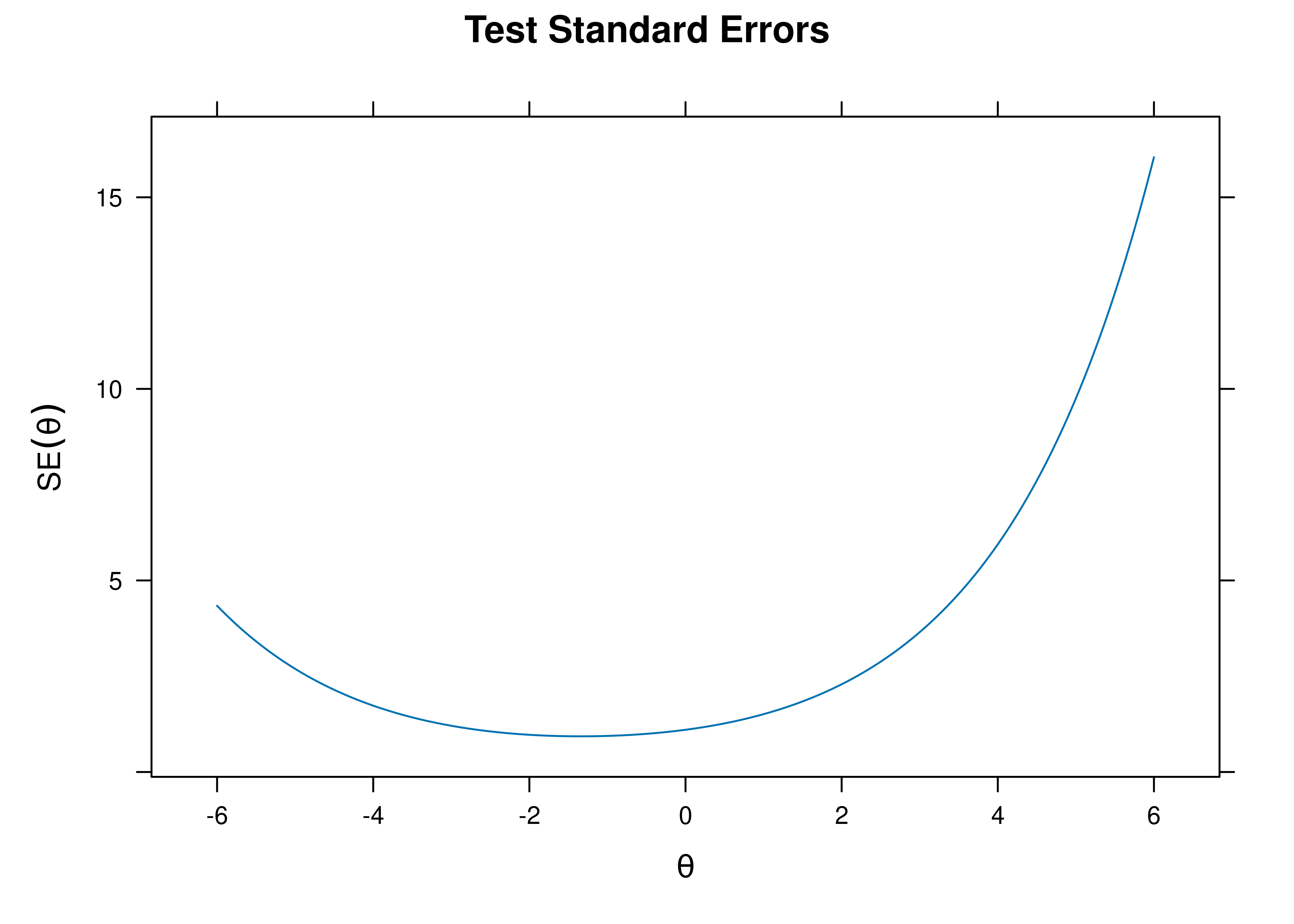

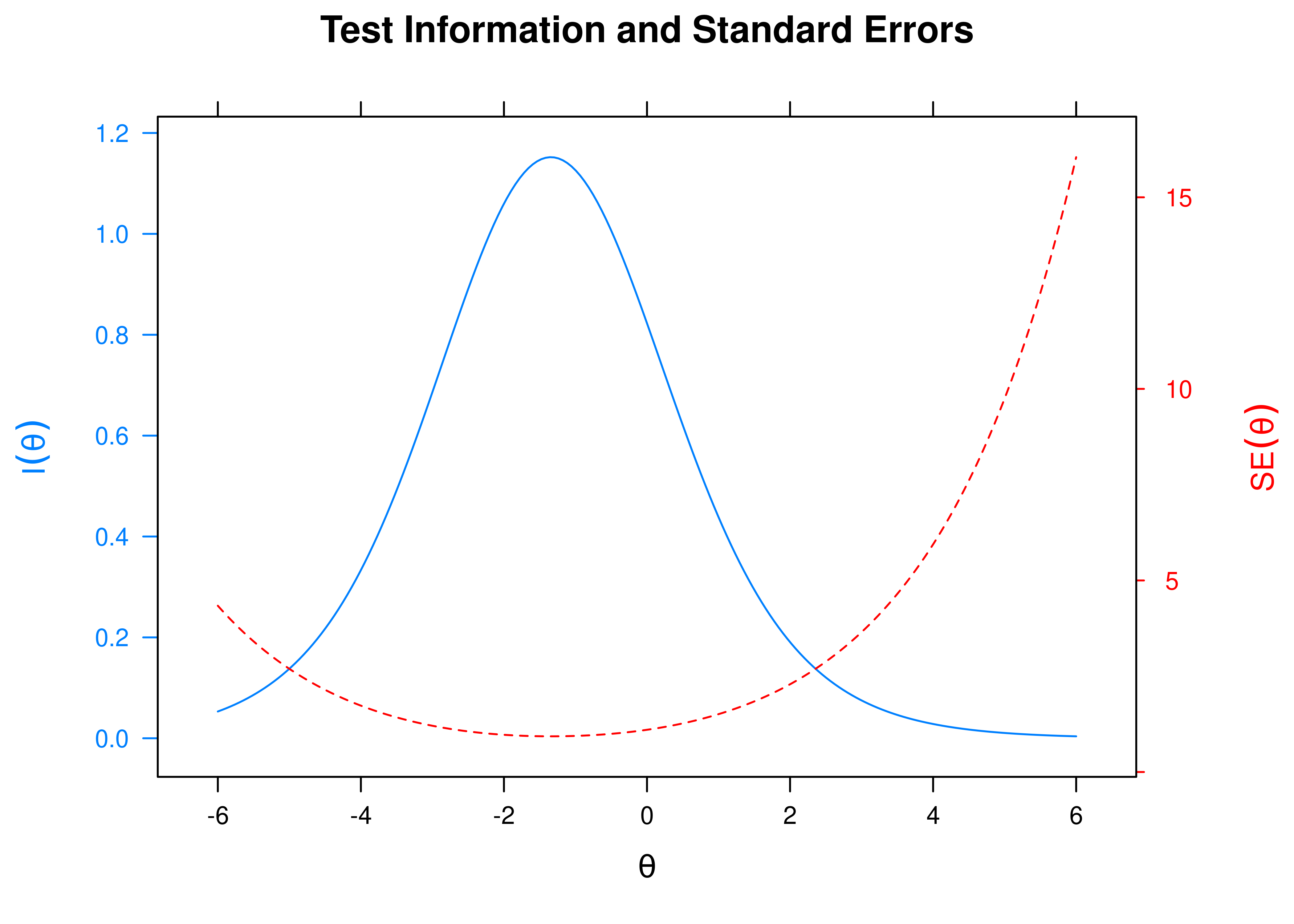

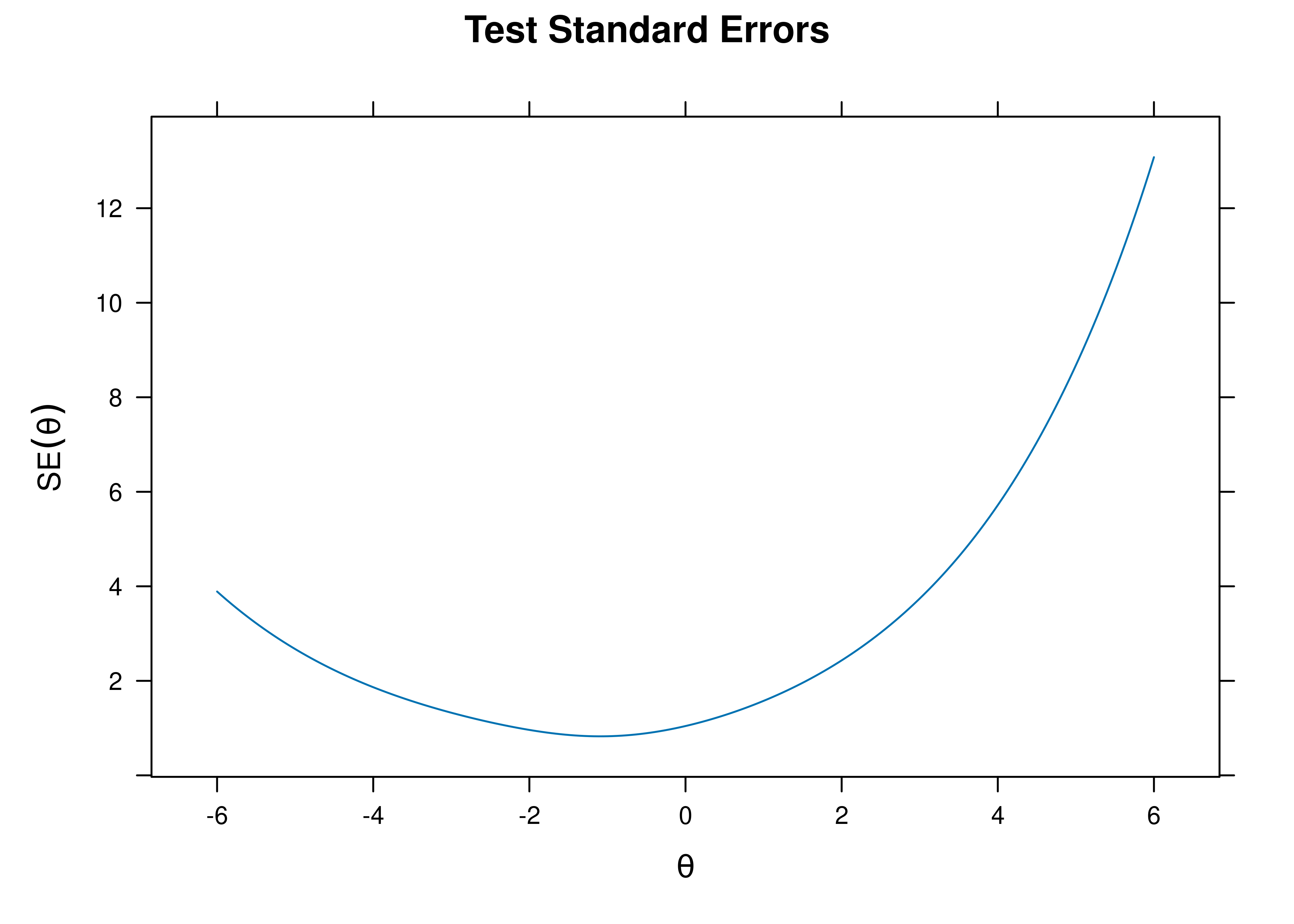

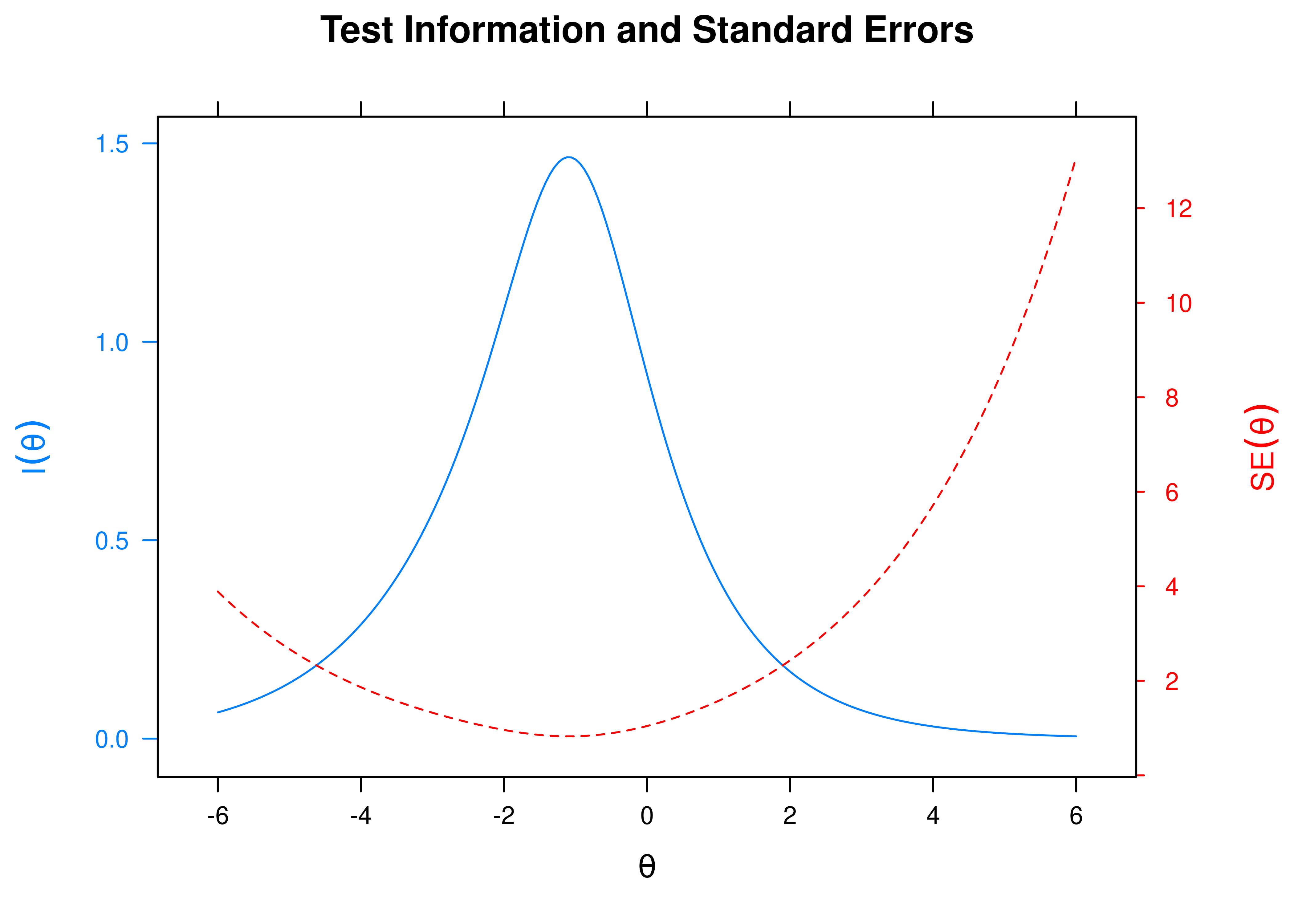

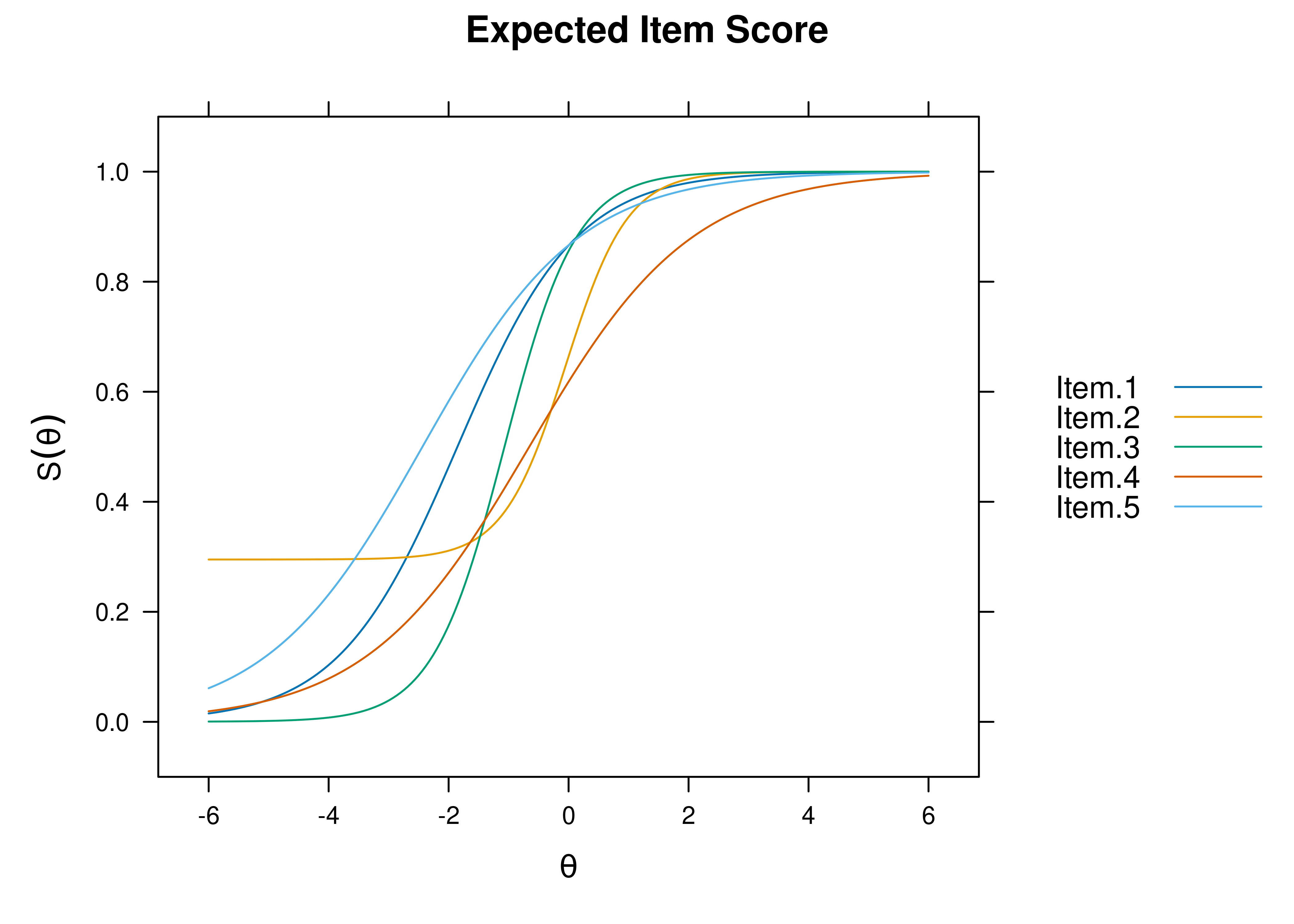

The test curves suggest that the measure is most reliable (i.e., provides the most information has the smallest standard error of measurement) at lower levels of the construct.

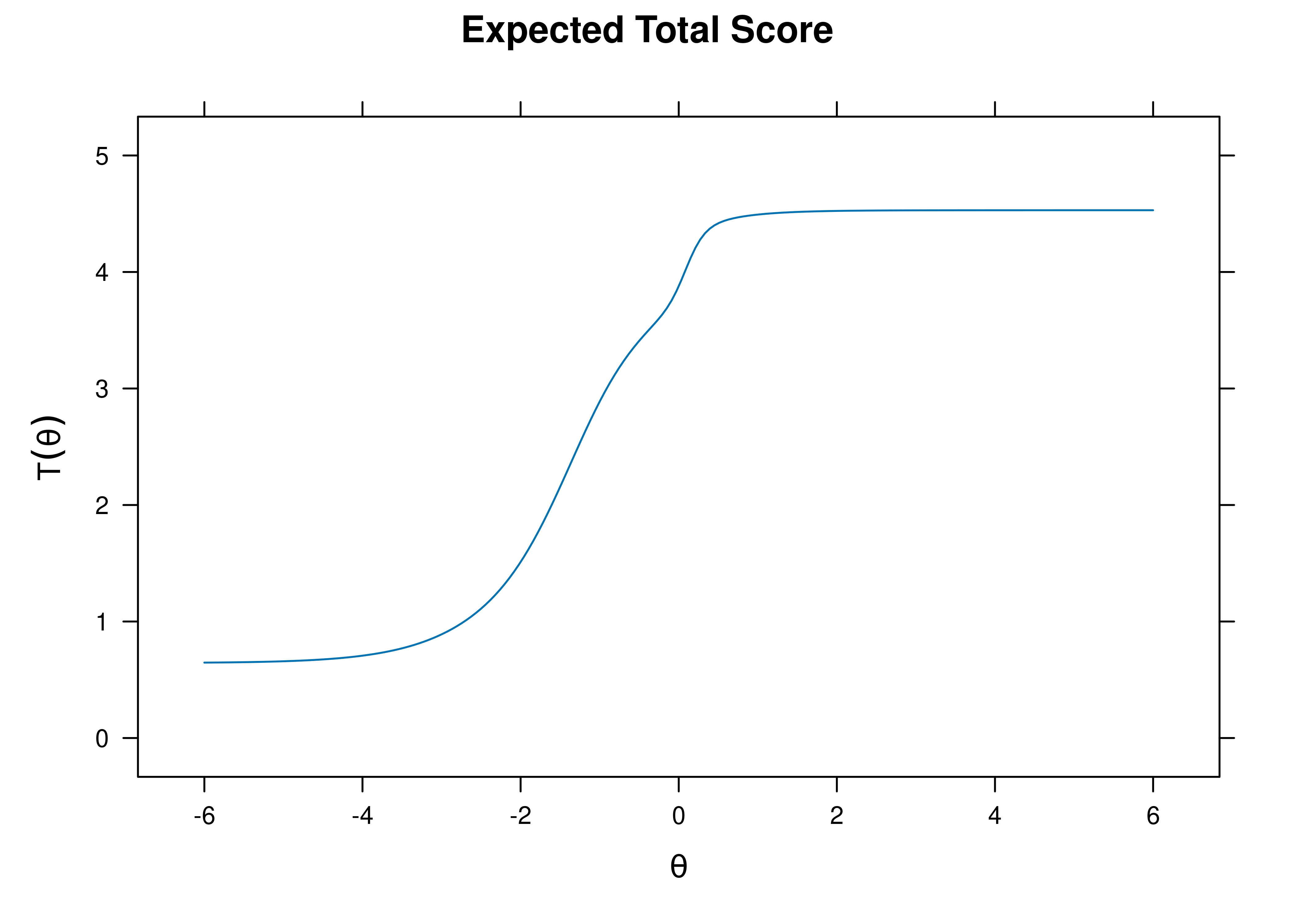

8.5.4.1.1 Test Characteristic Curve

A test characteristic curve (TCC) plot of the expected total score as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.38.

Figure 8.38: Test Characteristic Curve From Two-Parameter Logistic Item Response Theory Model.

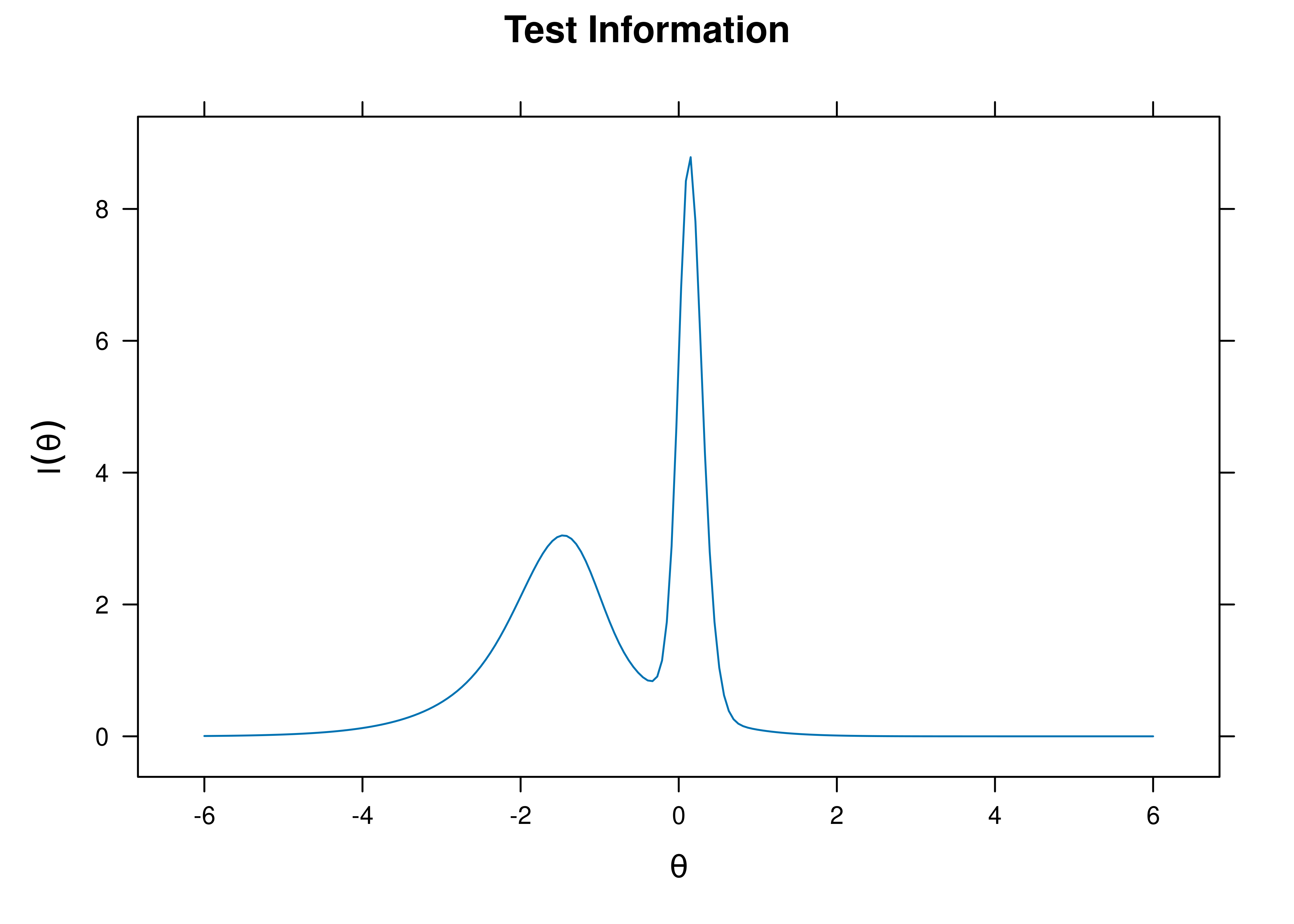

8.5.4.1.2 Test Information Curve

A plot of test information as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.39.

Figure 8.39: Test Information Curve From Two-Parameter Logistic Item Response Theory Model.

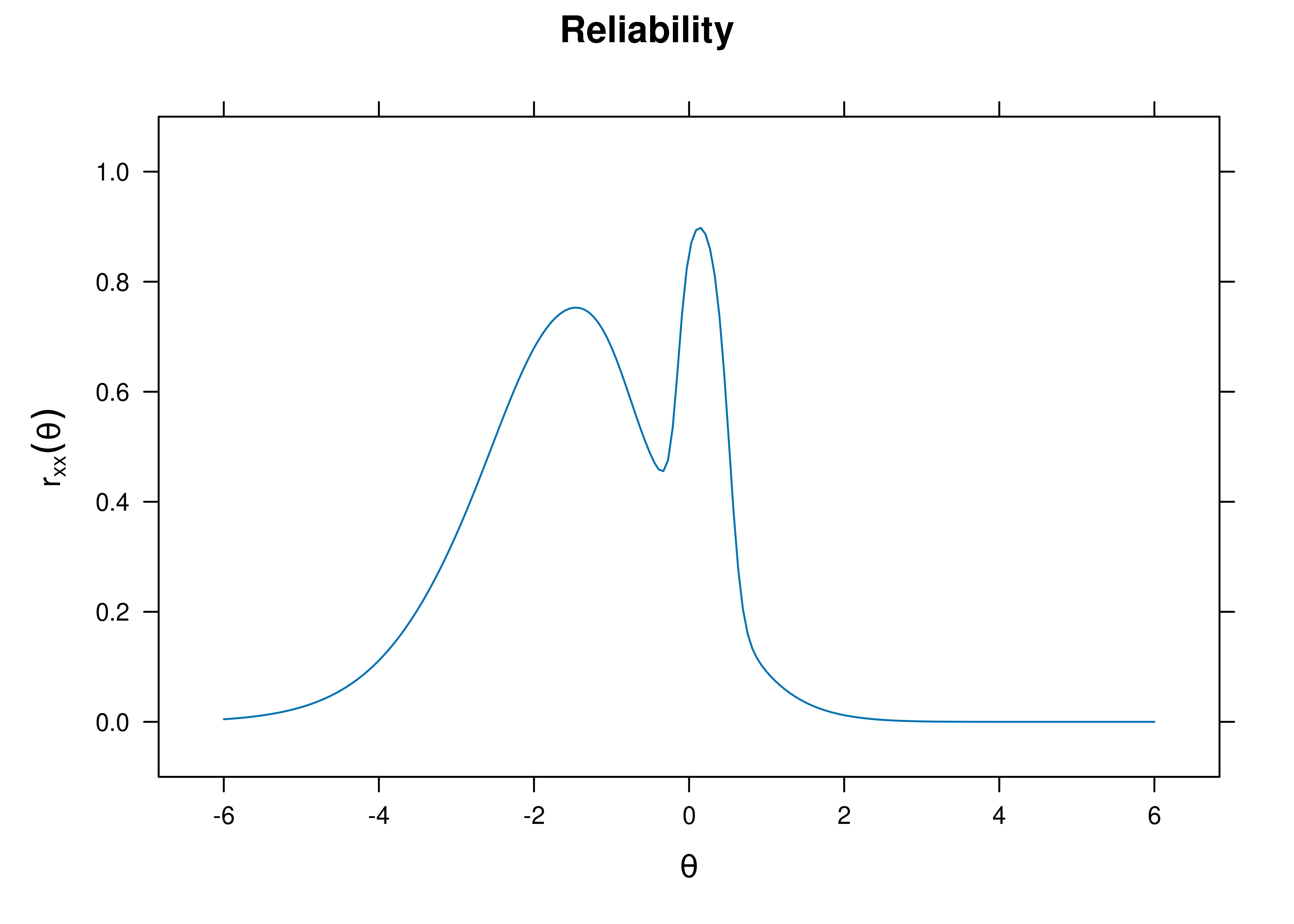

8.5.4.1.3 Test Reliability

The estimate of marginal reliability is below:

[1] 0.4417618A plot of test reliability as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.40.

Figure 8.40: Test Reliability From Two-Parameter Logistic Item Response Theory Model.

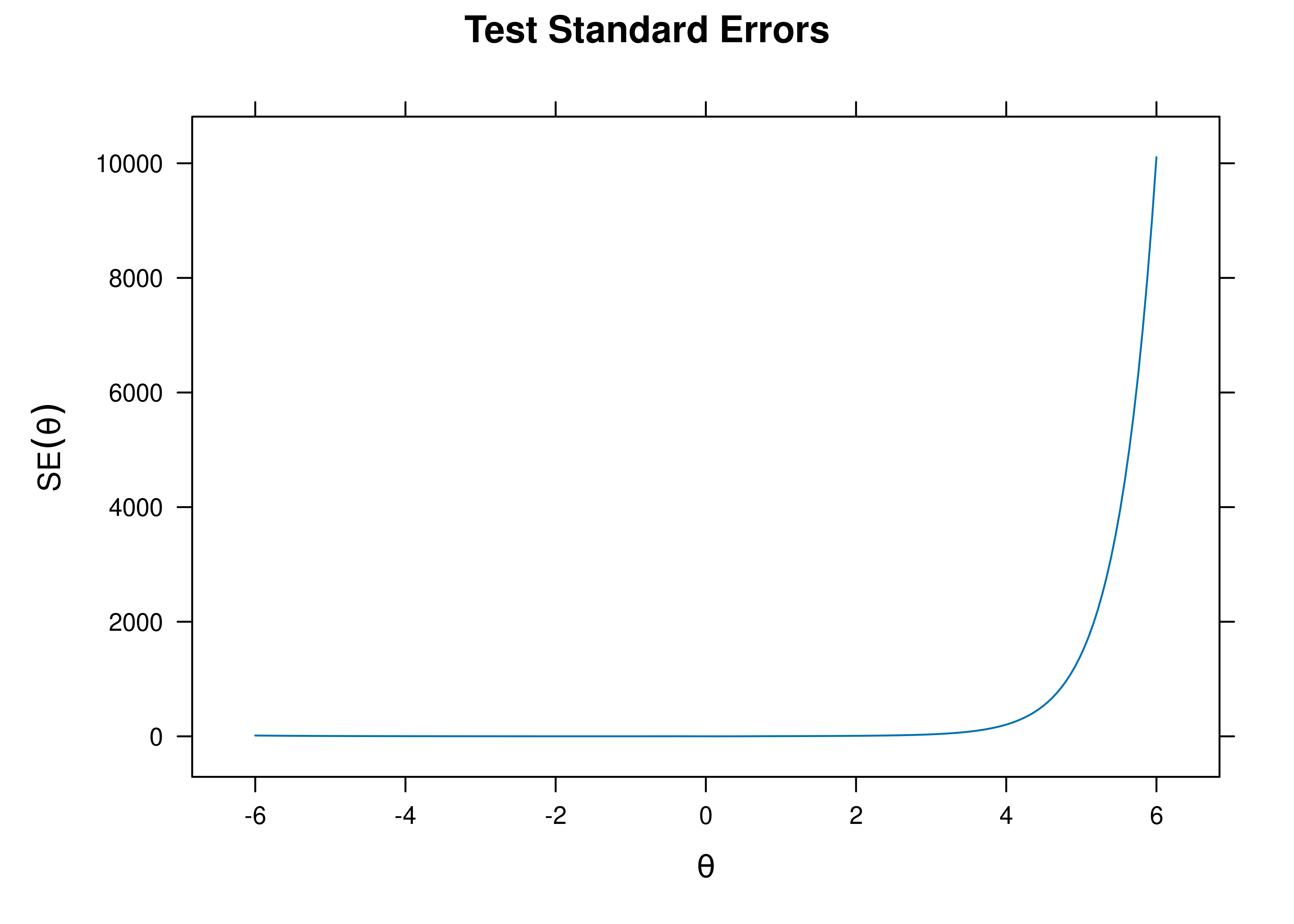

8.5.4.1.4 Test Standard Error of Measurement

A plot of test standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.41.

Figure 8.41: Test Standard Error of Measurement From Two-Parameter Logistic Item Response Theory Model.

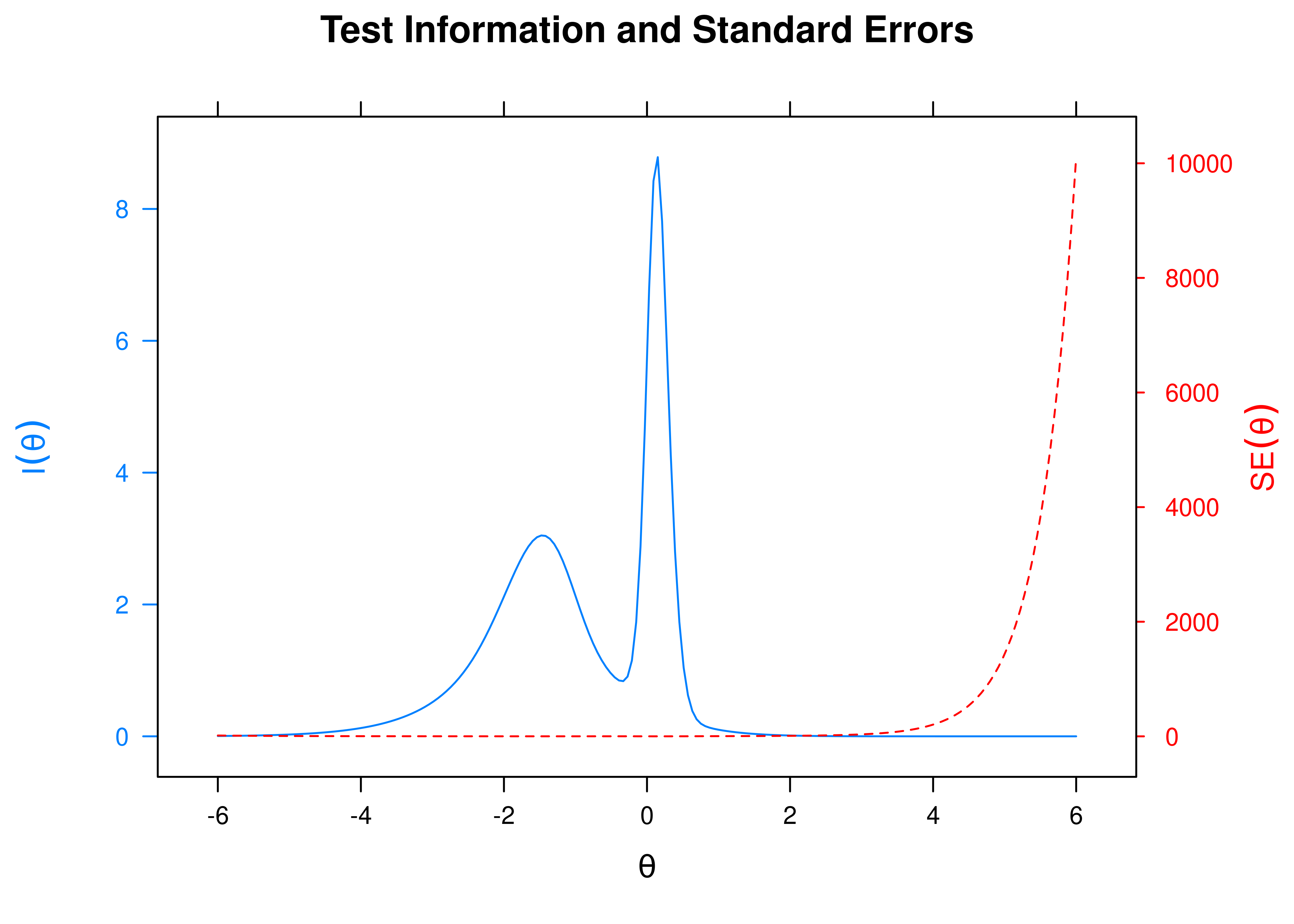

8.5.4.1.5 Test Information Curve and Standard Errors

A plot of test information and standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.42.

Figure 8.42: Test Information Curve and Standard Error of Measurement From Two-Parameter Logistic Item Response Theory Model.

8.5.4.2 Item Curves

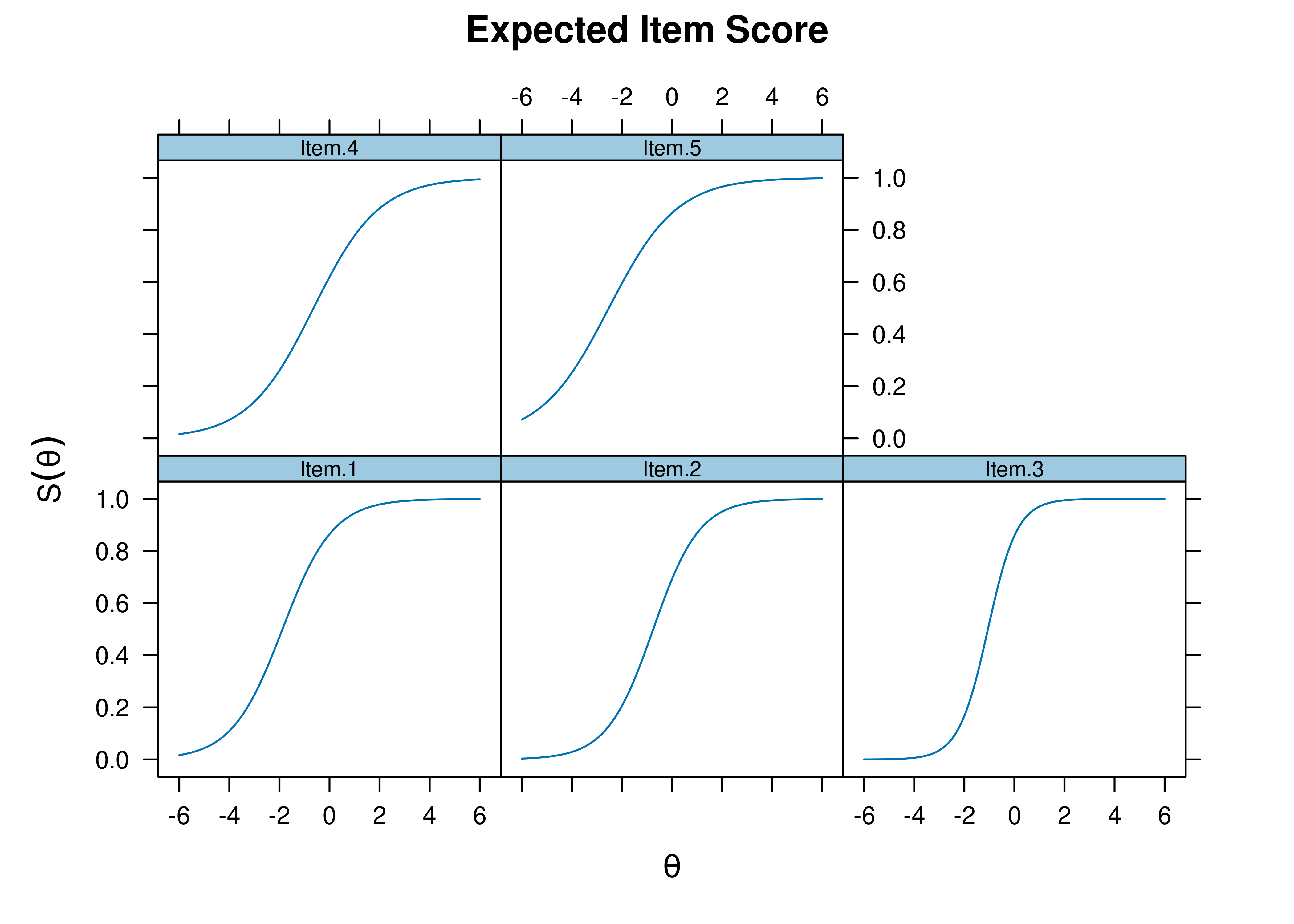

8.5.4.2.1 Item Characteristic Curves

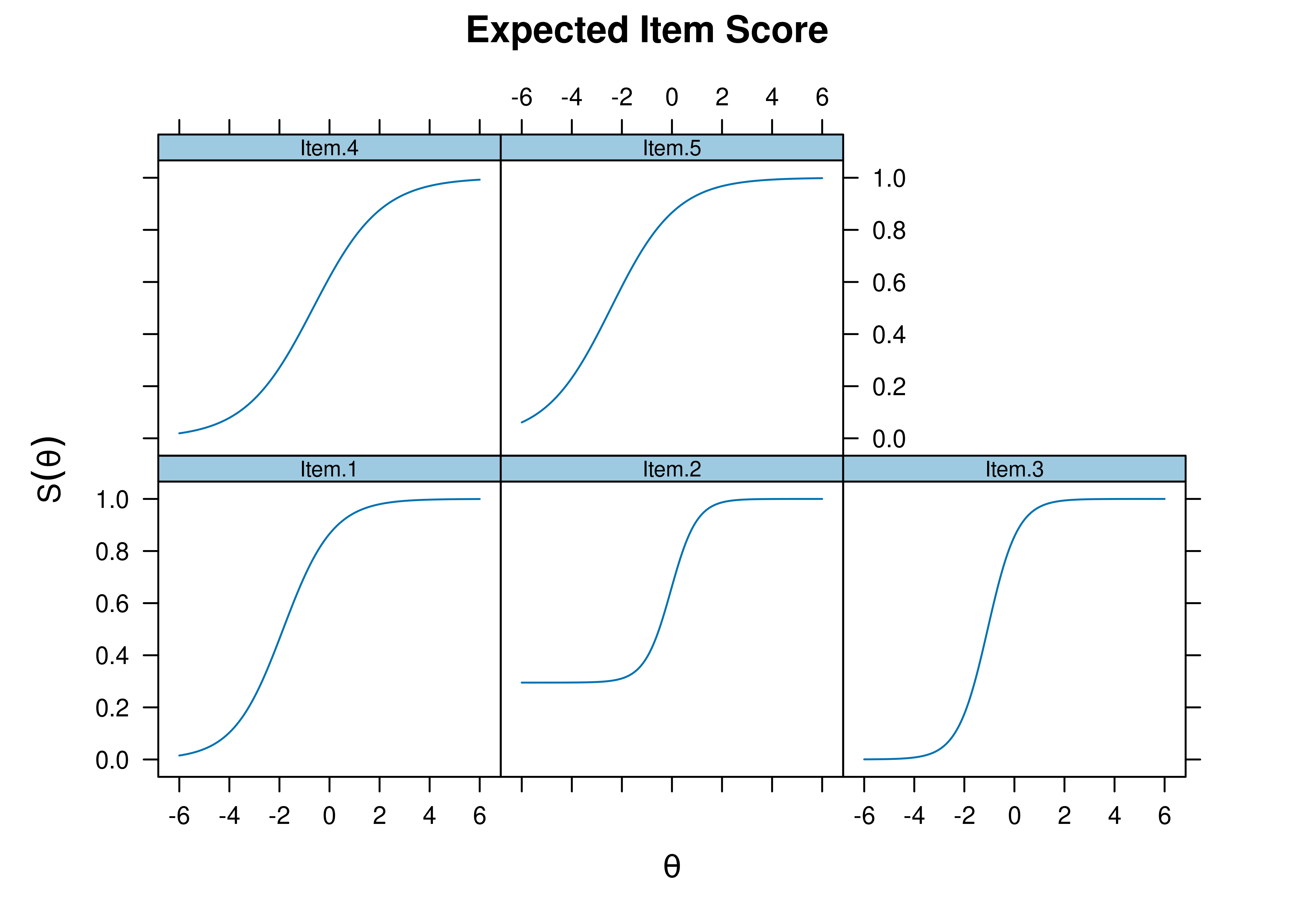

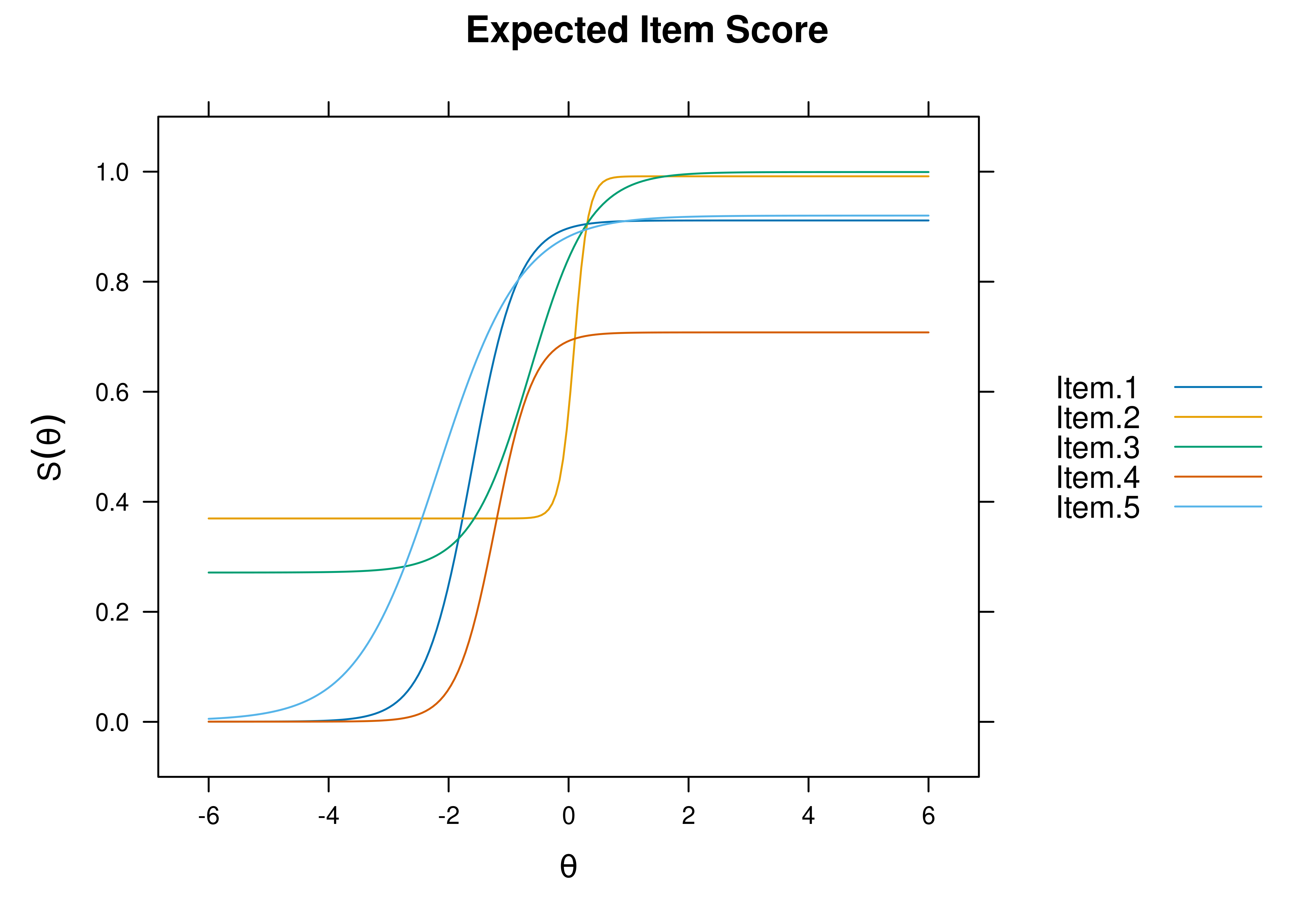

Item characteristic curve (ICC) plots of the probability of item endorsement (or getting the item correct) as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.43 and 8.44.

Figure 8.43: Item Characteristic Curves From Two-Parameter Logistic Item Response Theory Model.

Figure 8.44: Item Characteristic Curves From Two-Parameter Logistic Item Response Theory Model.

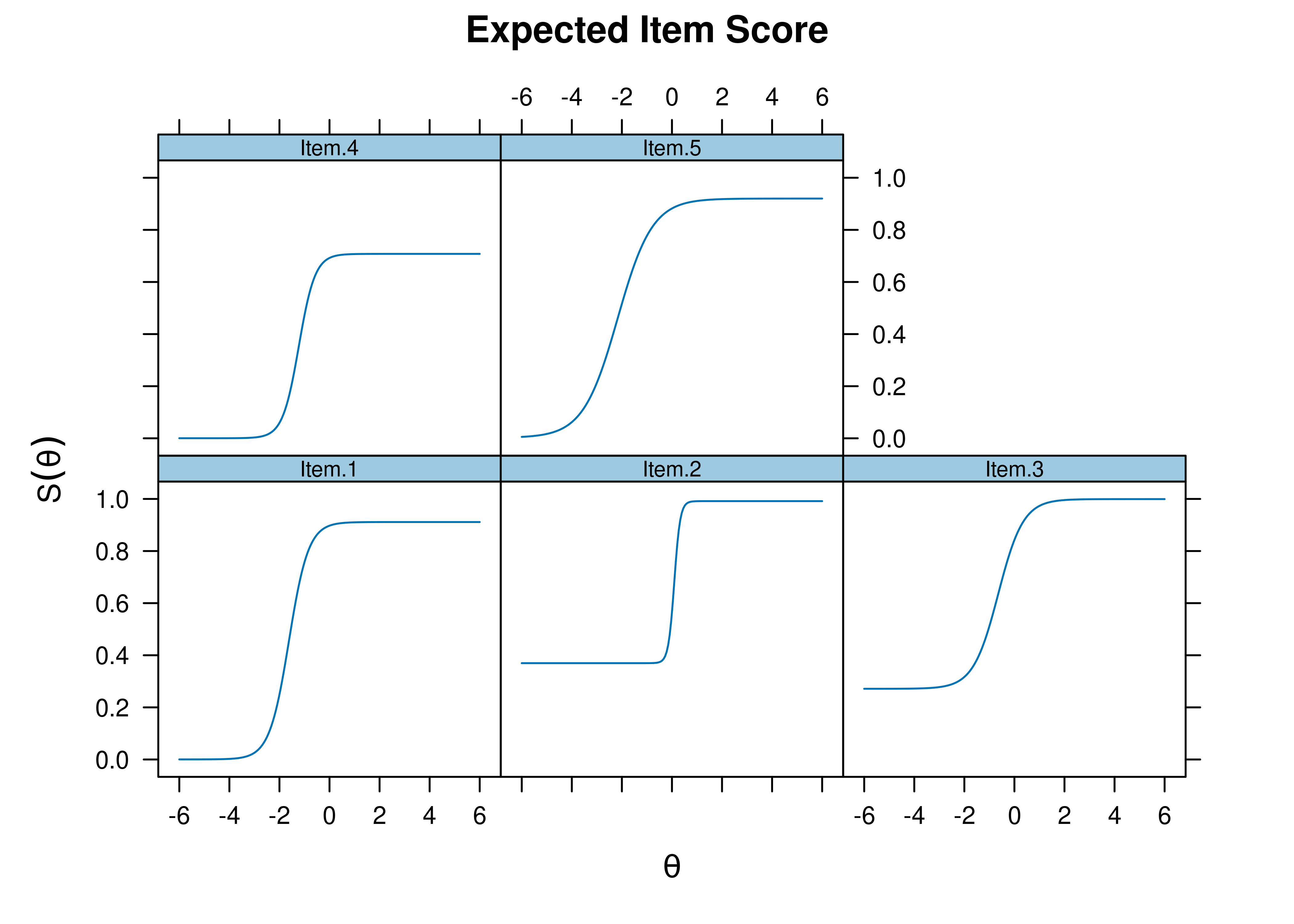

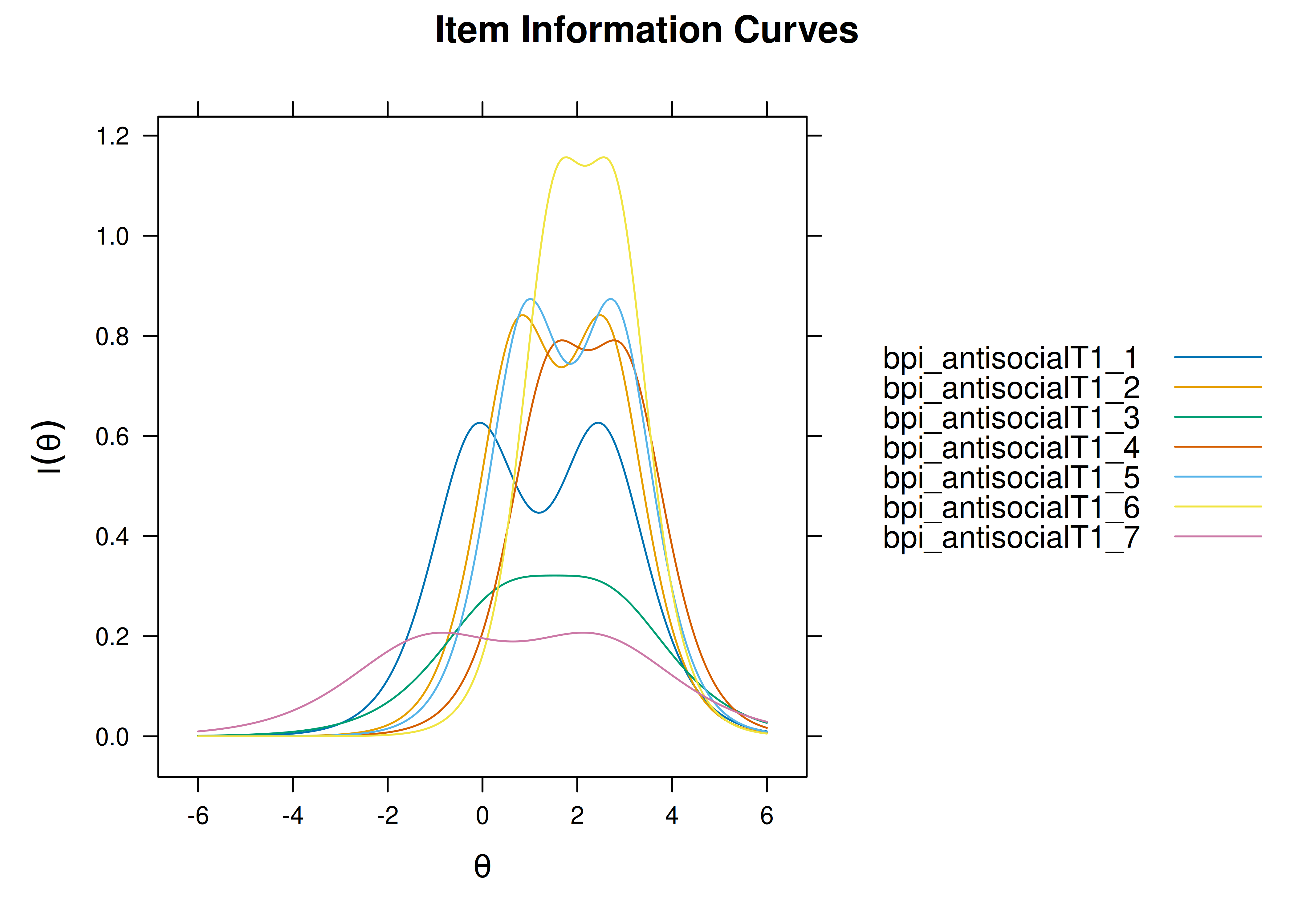

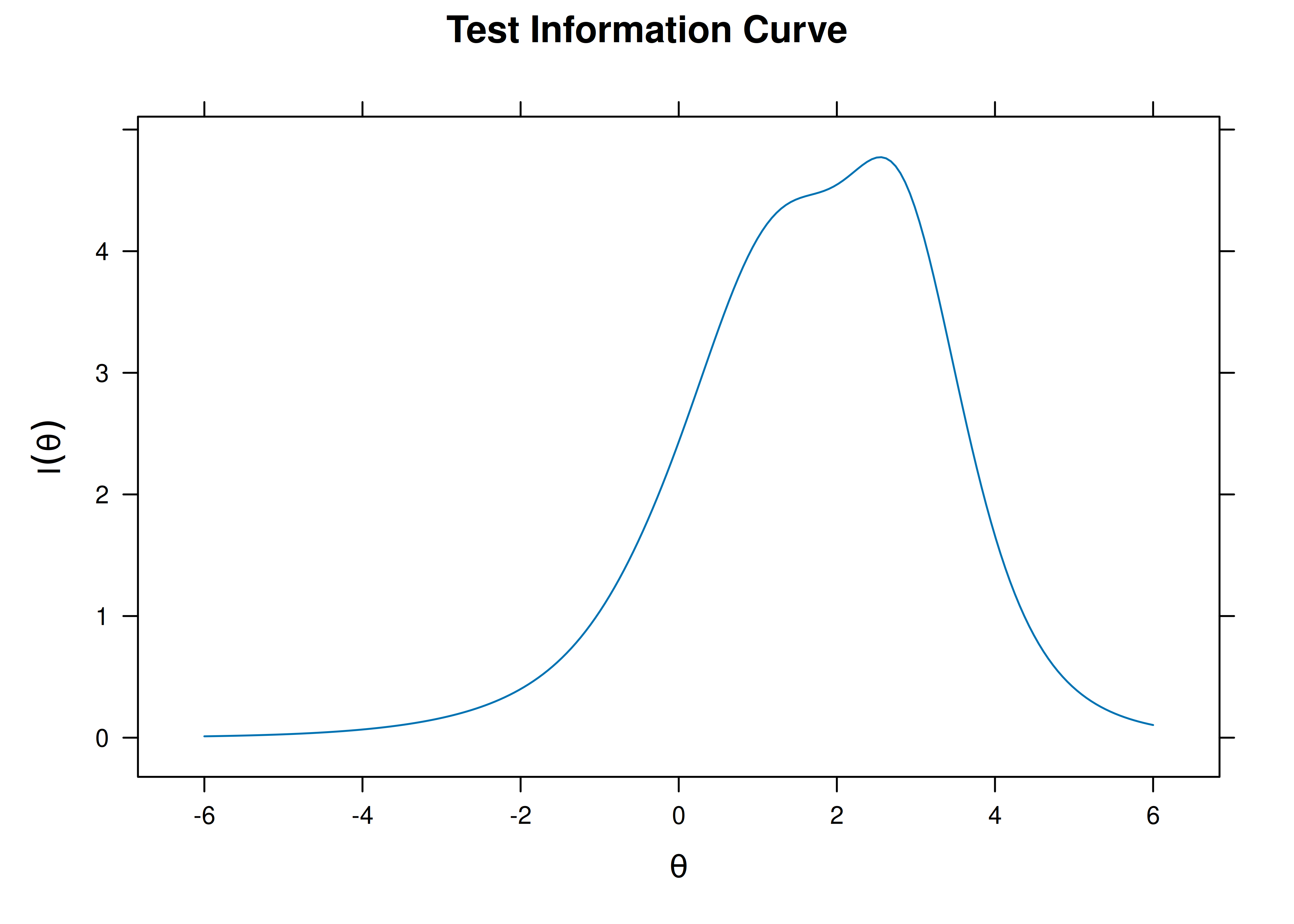

8.5.4.2.2 Item Information Curves

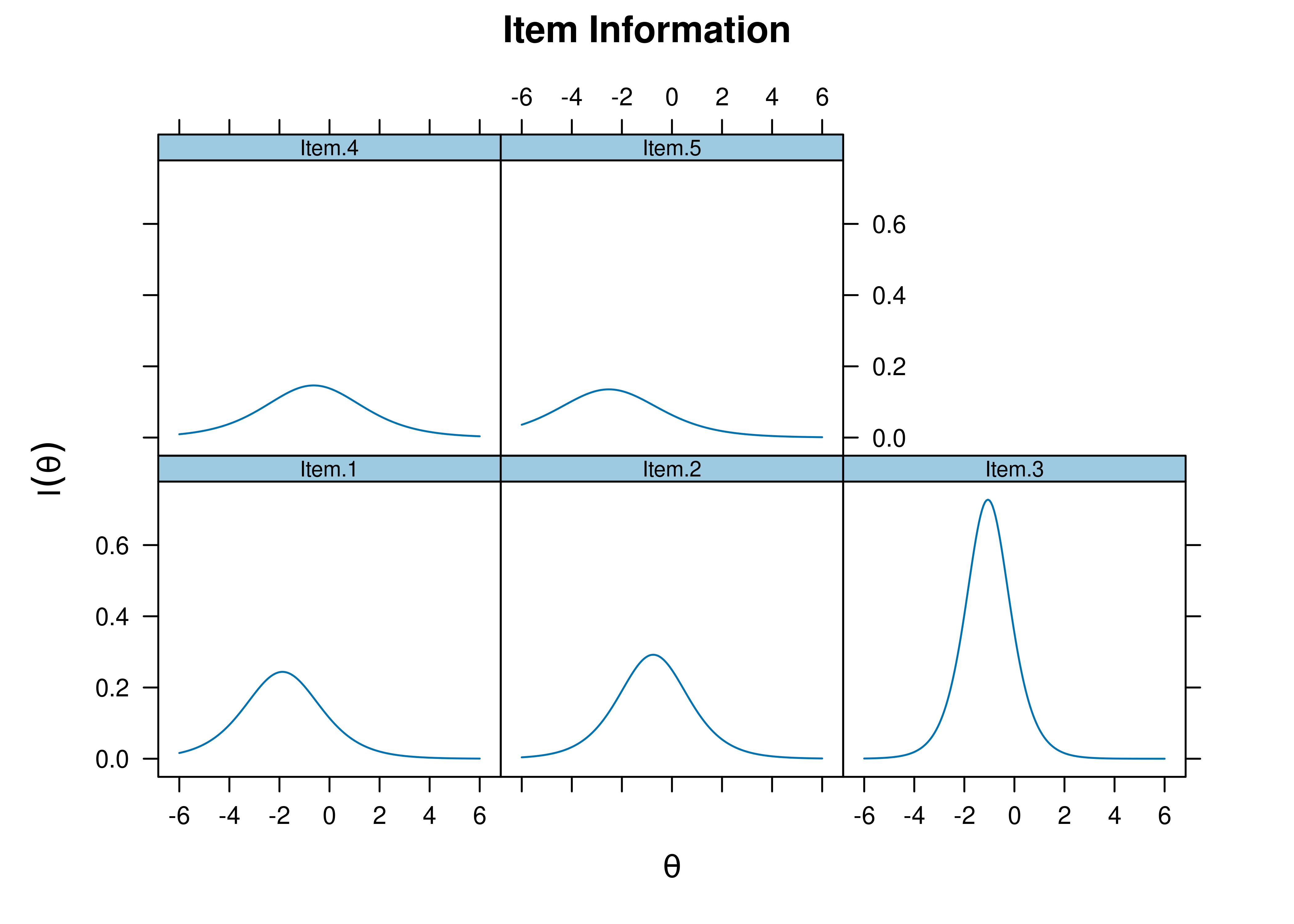

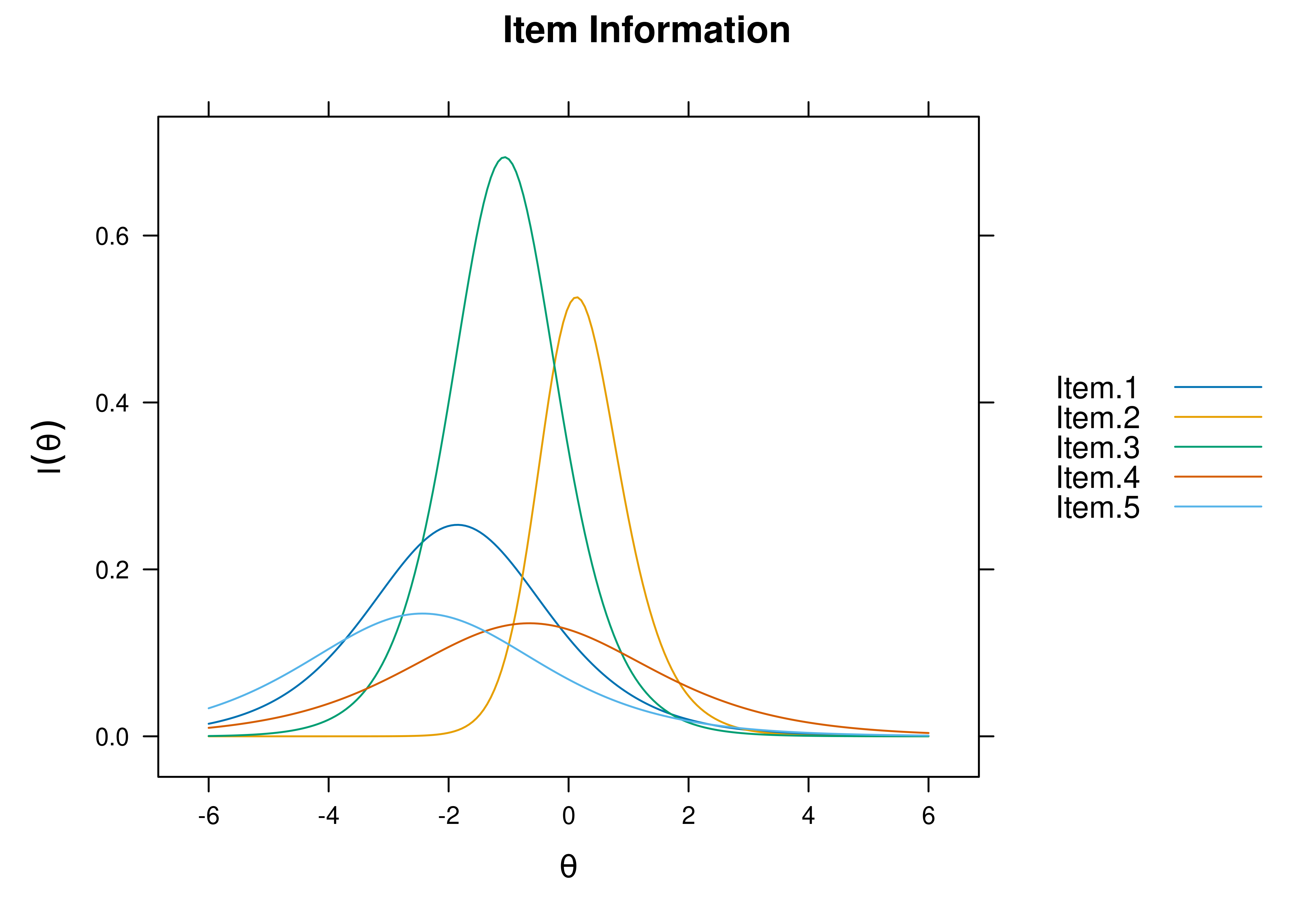

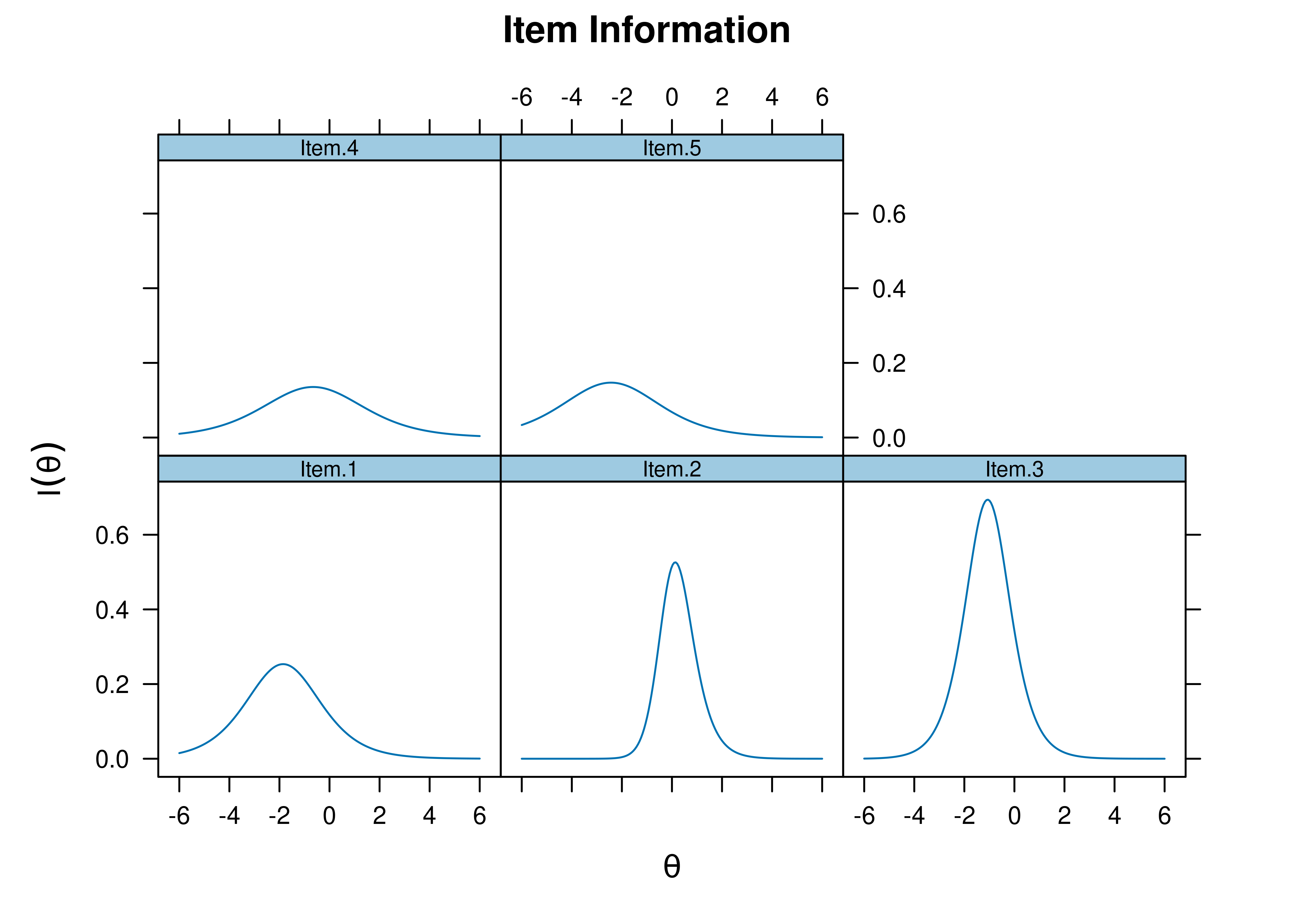

Plots of item information as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.45 and 8.46.

Figure 8.45: Item Information Curves From Two-Parameter Logistic Item Response Theory Model.

Figure 8.46: Item Information Curves From Two-Parameter Logistic Item Response Theory Model.

8.5.4.3 Convert Discrimination To Factor Loading

As described by Aiden Loe (archived at https://perma.cc/H3QN-JAWW), one can convert a discrimination parameter to a standardized factor loading using Equation (8.14):

\[\begin{equation} f = \frac{a}{\sqrt{1 + a^2}} \tag{8.14} \end{equation}\]

where \(a\) is equal to: \(\text{discrimination}/1.702\).

The petersenlab package (Petersen, 2025) contains the discriminationToFactorLoading() function that converts discrimination parameters to standardized factor loadings.

Code

Item.1 Item.2 Item.3 Item.4 Item.5

0.9879254 1.0808847 1.7058006 0.7651853 0.7357980 Item.1 Item.2 Item.3 Item.4 Item.5

0.5020091 0.5360964 0.7078950 0.4100462 0.3968194 8.5.5 CFA

A two-parameter logistic model can also be fit in a CFA framework, sometimes called item factor analysis.

The item factor analysis models were fit in the lavaan package (Rosseel et al., 2022).

Code

twoPLModel_cfa <- '

# Factor Loadings (i.e., discrimination parameters)

latent =~ loading1*Item.1 + loading2*Item.2 + loading3*Item.3 +

loading4*Item.4 + loading5*Item.5

# Item Thresholds (i.e., difficulty parameters)

Item.1 | threshold1*t1

Item.2 | threshold2*t1

Item.3 | threshold3*t1

Item.4 | threshold4*t1

Item.5 | threshold5*t1

'

twoPLModel_cfa_fit = sem(

model = twoPLModel_cfa,

data = mydataIRT,

ordered = c("Item.1", "Item.2", "Item.3", "Item.4","Item.5"),

mimic = "Mplus",

estimator = "WLSMV",

std.lv = TRUE,

parameterization = "theta")

summary(

twoPLModel_cfa_fit,

fit.measures = TRUE,

rsquare = TRUE,

standardized = TRUE)lavaan 0.6-20 ended normally after 28 iterations

Estimator DWLS

Optimization method NLMINB

Number of model parameters 10

Number of observations 1000

Model Test User Model:

Standard Scaled

Test Statistic 9.131 11.688

Degrees of freedom 5 5

P-value (Chi-square) 0.104 0.039

Scaling correction factor 0.784

Shift parameter 0.041

simple second-order correction (WLSMV)

Model Test Baseline Model:

Test statistic 244.385 228.667

Degrees of freedom 10 10

P-value 0.000 0.000

Scaling correction factor 1.072

User Model versus Baseline Model:

Comparative Fit Index (CFI) 0.982 0.969

Tucker-Lewis Index (TLI) 0.965 0.939

Robust Comparative Fit Index (CFI) 0.943

Robust Tucker-Lewis Index (TLI) 0.886

Root Mean Square Error of Approximation:

RMSEA 0.029 0.037

90 Percent confidence interval - lower 0.000 0.008

90 Percent confidence interval - upper 0.058 0.064

P-value H_0: RMSEA <= 0.050 0.871 0.757

P-value H_0: RMSEA >= 0.080 0.001 0.004

Robust RMSEA 0.079

90 Percent confidence interval - lower 0.015

90 Percent confidence interval - upper 0.139

P-value H_0: Robust RMSEA <= 0.050 0.176

P-value H_0: Robust RMSEA >= 0.080 0.547

Standardized Root Mean Square Residual:

SRMR 0.045 0.045

Parameter Estimates:

Parameterization Theta

Standard errors Robust.sem

Information Expected

Information saturated (h1) model Unstructured

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

latent =~

Item.1 (ldn1) 0.587 0.099 5.916 0.000 0.587 0.506

Item.2 (ldn2) 0.627 0.099 6.338 0.000 0.627 0.531

Item.3 (ldn3) 0.979 0.175 5.594 0.000 0.979 0.699

Item.4 (ldn4) 0.479 0.076 6.325 0.000 0.479 0.432

Item.5 (ldn5) 0.417 0.084 4.961 0.000 0.417 0.384

Thresholds:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Itm.1|1 (thr1) -1.097 0.070 -15.602 0.000 -1.097 -0.946

Itm.2|1 (thr2) -0.480 0.052 -9.200 0.000 -0.480 -0.407

Itm.3|1 (thr3) -1.043 0.108 -9.623 0.000 -1.043 -0.745

Itm.4|1 (thr4) -0.298 0.045 -6.556 0.000 -0.298 -0.269

Itm.5|1 (thr5) -1.091 0.060 -18.265 0.000 -1.091 -1.007

Variances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

.Item.1 1.000 1.000 0.743

.Item.2 1.000 1.000 0.718

.Item.3 1.000 1.000 0.511

.Item.4 1.000 1.000 0.813

.Item.5 1.000 1.000 0.852

latent 1.000 1.000 1.000

Scales y*:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Item.1 0.862 0.862 1.000

Item.2 0.847 0.847 1.000

Item.3 0.715 0.715 1.000

Item.4 0.902 0.902 1.000

Item.5 0.923 0.923 1.000

R-Square:

Estimate

Item.1 0.257

Item.2 0.282

Item.3 0.489

Item.4 0.187

Item.5 0.148Code

chisq df pvalue baseline.chisq baseline.df

9.131 5.000 0.104 244.385 10.000

baseline.pvalue rmsea cfi tli srmr

0.000 0.029 0.982 0.965 0.045 $type

[1] "cor.bollen"

$cov

Item.1 Item.2 Item.3 Item.4 Item.5

Item.1 0.000

Item.2 -0.043 0.000

Item.3 -0.064 0.060 0.000

Item.4 0.077 -0.026 -0.026 0.000

Item.5 0.091 -0.069 -0.004 -0.006 0.000

$mean

Item.1 Item.2 Item.3 Item.4 Item.5

0 0 0 0 0

$th

Item.1|t1 Item.2|t1 Item.3|t1 Item.4|t1 Item.5|t1

0 0 0 0 0 latent

0.468 latent

0.296

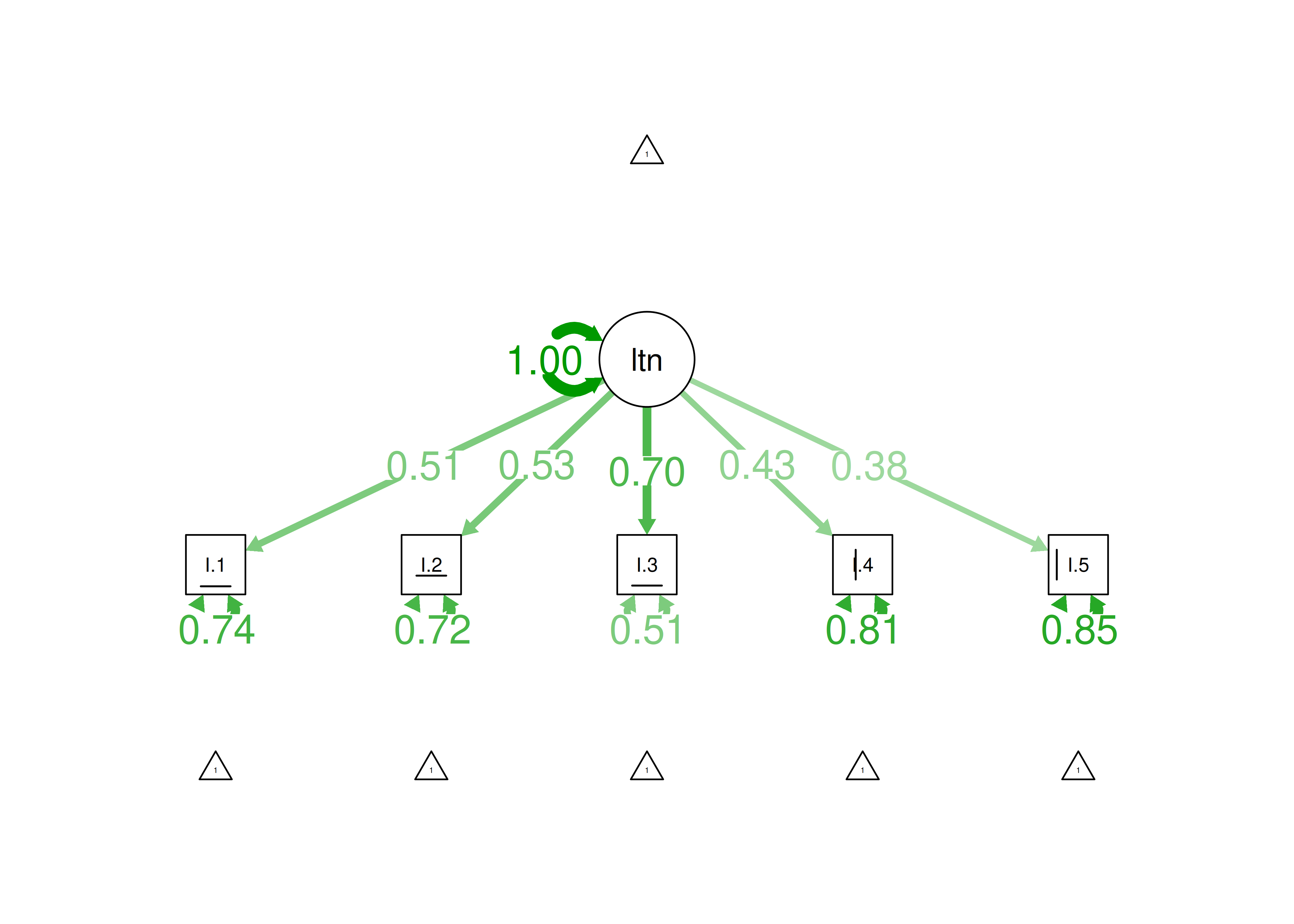

Figure 8.47: Item Factor Analysis Diagram of Two-Parameter Logistic Model.

8.6 Two-Parameter Multidimensional Logistic Model

A 2PL multidimensional IRT model is a model that allows multiple dimensions (latent factors) and is fit to dichotomous data, which estimates a different difficulty (\(b\)) and discrimination (\(a\)) parameter for each item.

Multidimensional IRT models were fit using the mirt package (Chalmers, 2020).

In this example, I estimate a 2PL multidimensional IRT model by estimating two factors.

8.6.2 Model Summary

Rotation: oblimin

Rotated factor loadings:

F1 F2 h2

Item.1 0.7944 -0.0111 0.623

Item.2 0.0804 0.4630 0.255

Item.3 -0.0129 0.8628 0.734

Item.4 0.2794 0.1925 0.165

Item.5 0.2929 0.1772 0.165

Rotated SS loadings: 0.802 1.027

Factor correlations:

F1 F2

F1 1.000

F2 0.463 1$items

a1 a2 d g u

Item.1 -2.007 0.870 2.648 0 1

Item.2 -0.849 -0.522 0.788 0 1

Item.3 -2.153 -1.836 2.483 0 1

Item.4 -0.756 -0.028 0.485 0 1

Item.5 -0.757 0.000 1.864 0 1

$means

F1 F2

0 0

$cov

F1 F2

F1 1 0

F2 0 18.6.4 Compare model fit

The modified model with two factors and the original one-factor model are considered “nested” models. The original model is nested within the modified model because the modified model includes all of the terms of the original model along with additional terms. Model fit of nested models can be compared with a chi-square difference test.

Using a chi-square difference test to compare two nested models, the two-factor model fits significantly better than the one-factor model.

8.6.5 Plots

8.6.5.1 Test Curves

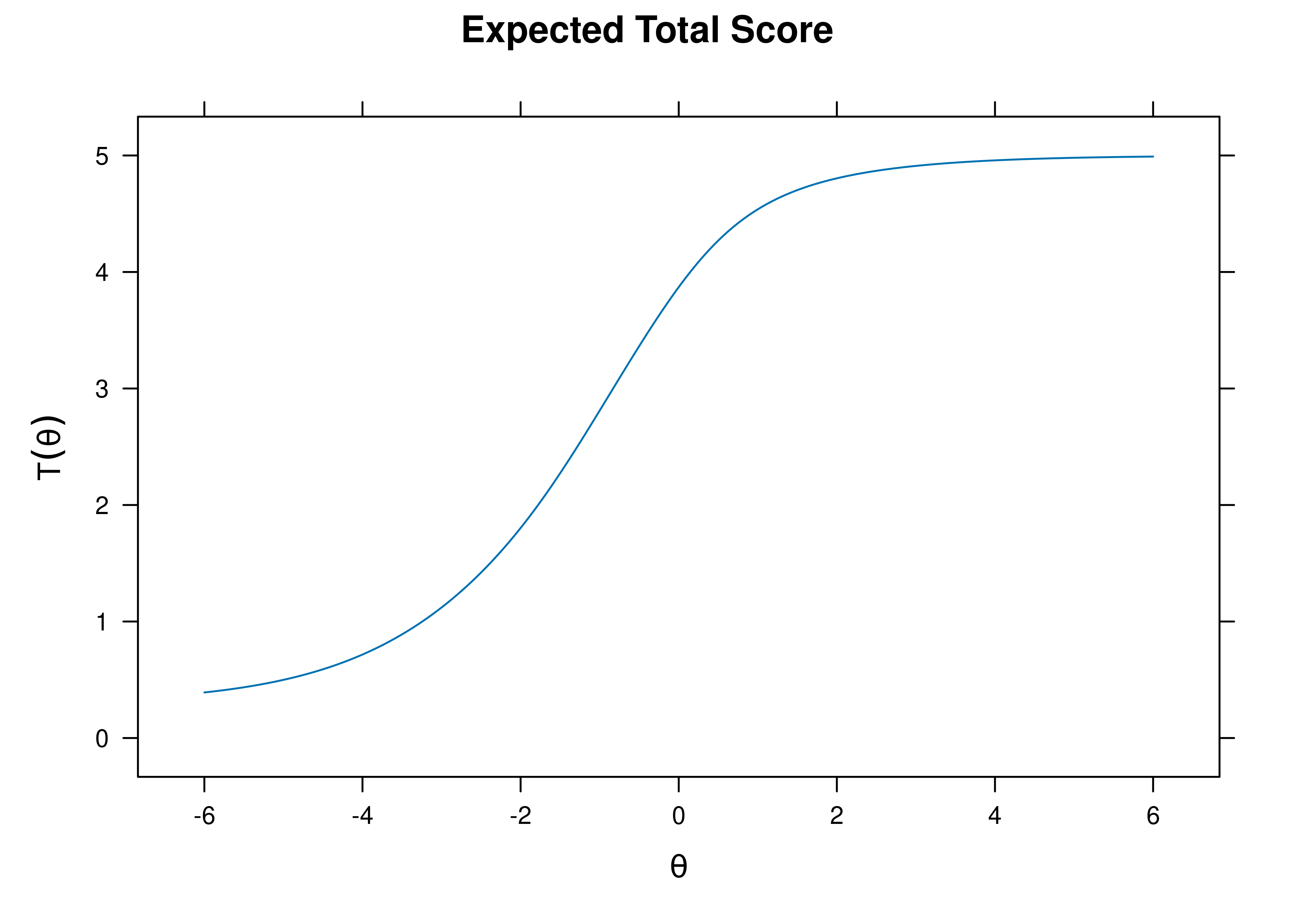

8.6.5.1.1 Test Characteristic Curve

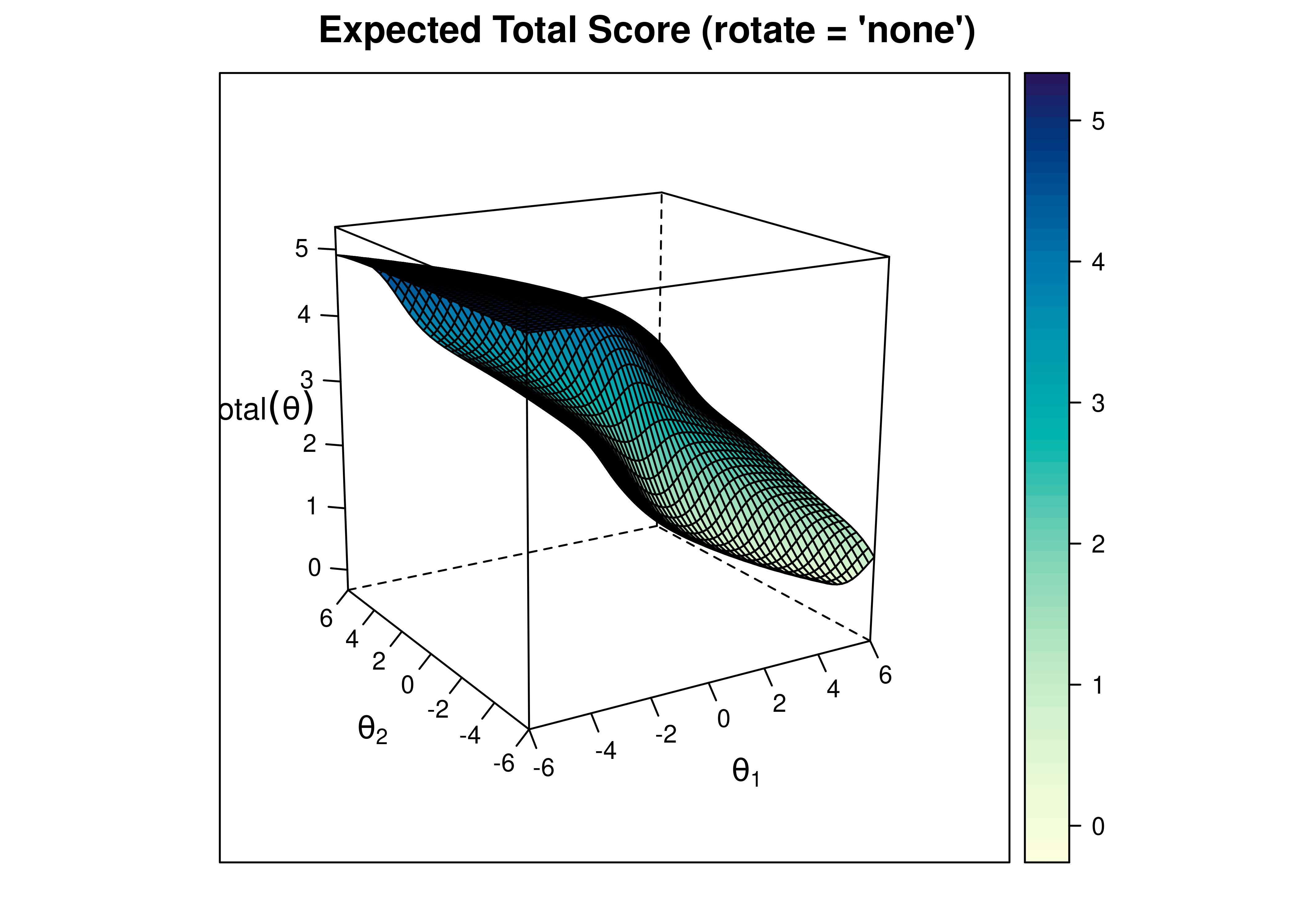

A test characteristic curve (TCC) plot of the expected total score as a function of a person’s level on each latent construct (theta; \(\theta\)) is in Figure 8.48.

Figure 8.48: Test Characteristic Curve From Two-Parameter Multidimensional Item Response Theory Model.

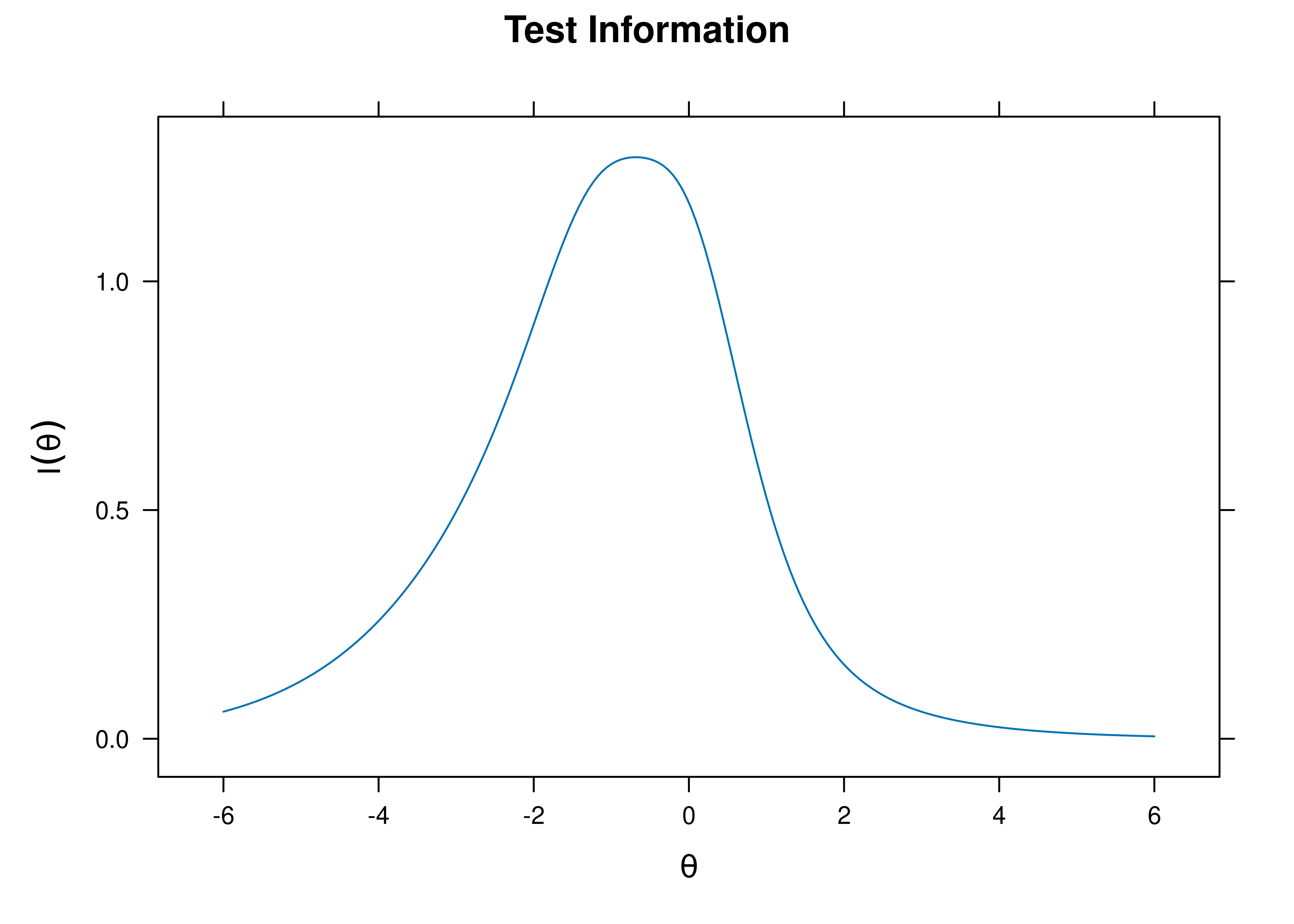

8.6.5.1.2 Test Information Curve

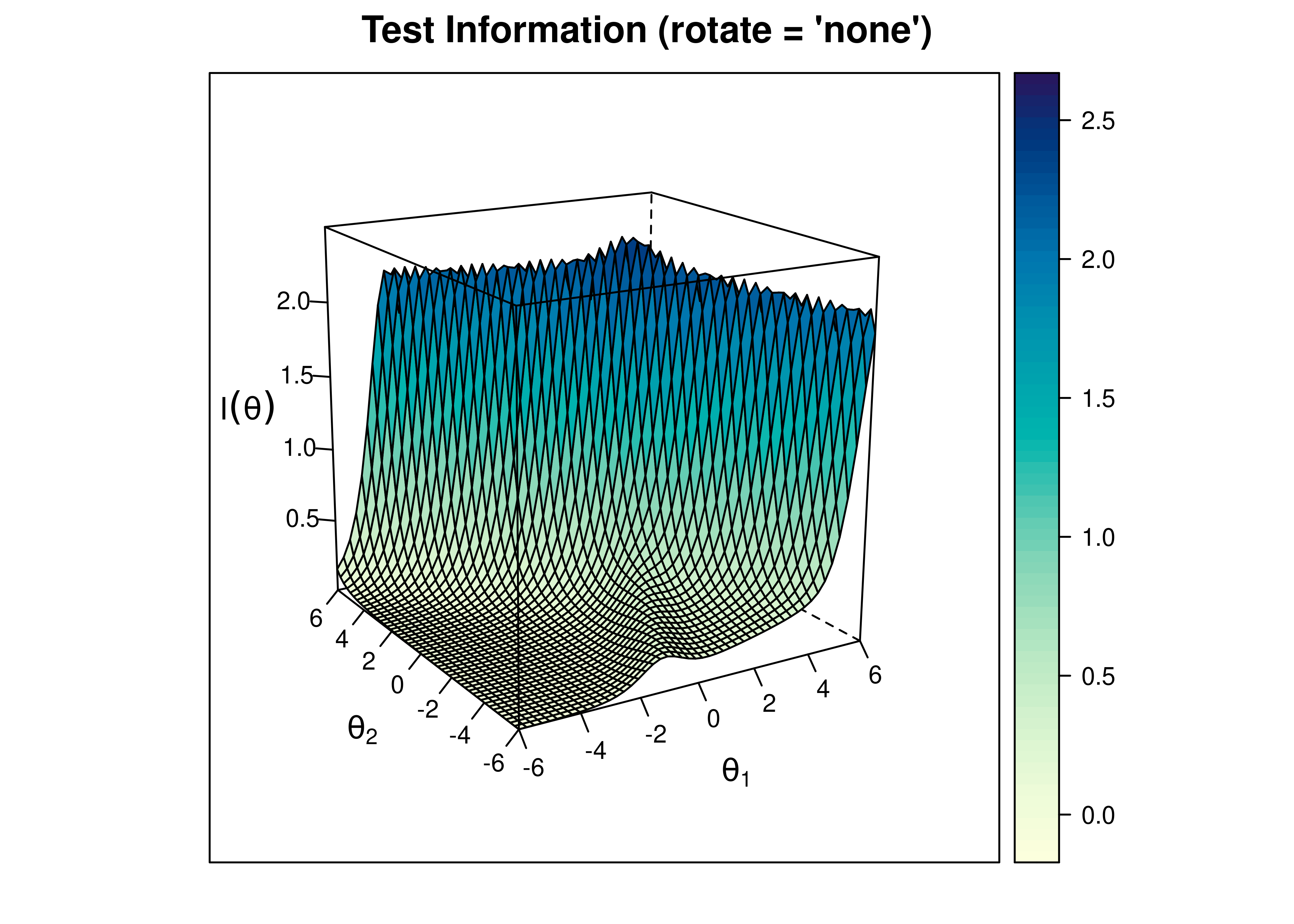

A plot of test information as a function of a person’s level on each latent construct (theta; \(\theta\)) is in Figure 8.49.

Figure 8.49: Test Information Curve From Two-Parameter Multidimensional Item Response Theory Model.

8.6.5.1.3 Test Standard Error of Measurement

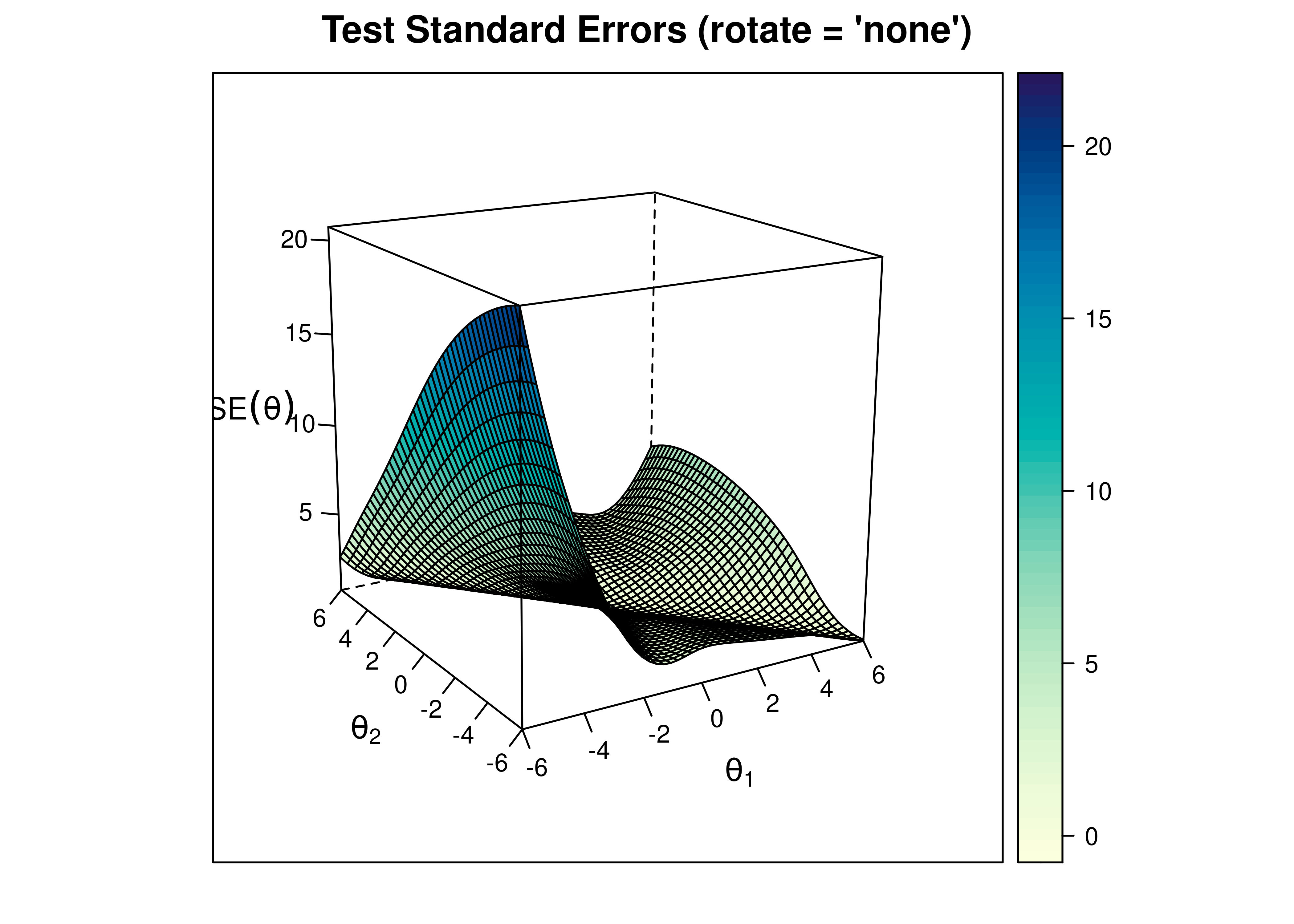

A plot of test standard error of measurement (SEM) as a function of a person’s level on each latent construct (theta; \(\theta\)) is in Figure 8.50.

Figure 8.50: Test Standard Error of Measurement From Two-Parameter Multidimensional Item Response Theory Model.

8.6.5.2 Item Curves

8.6.5.2.1 Item Characteristic Curves

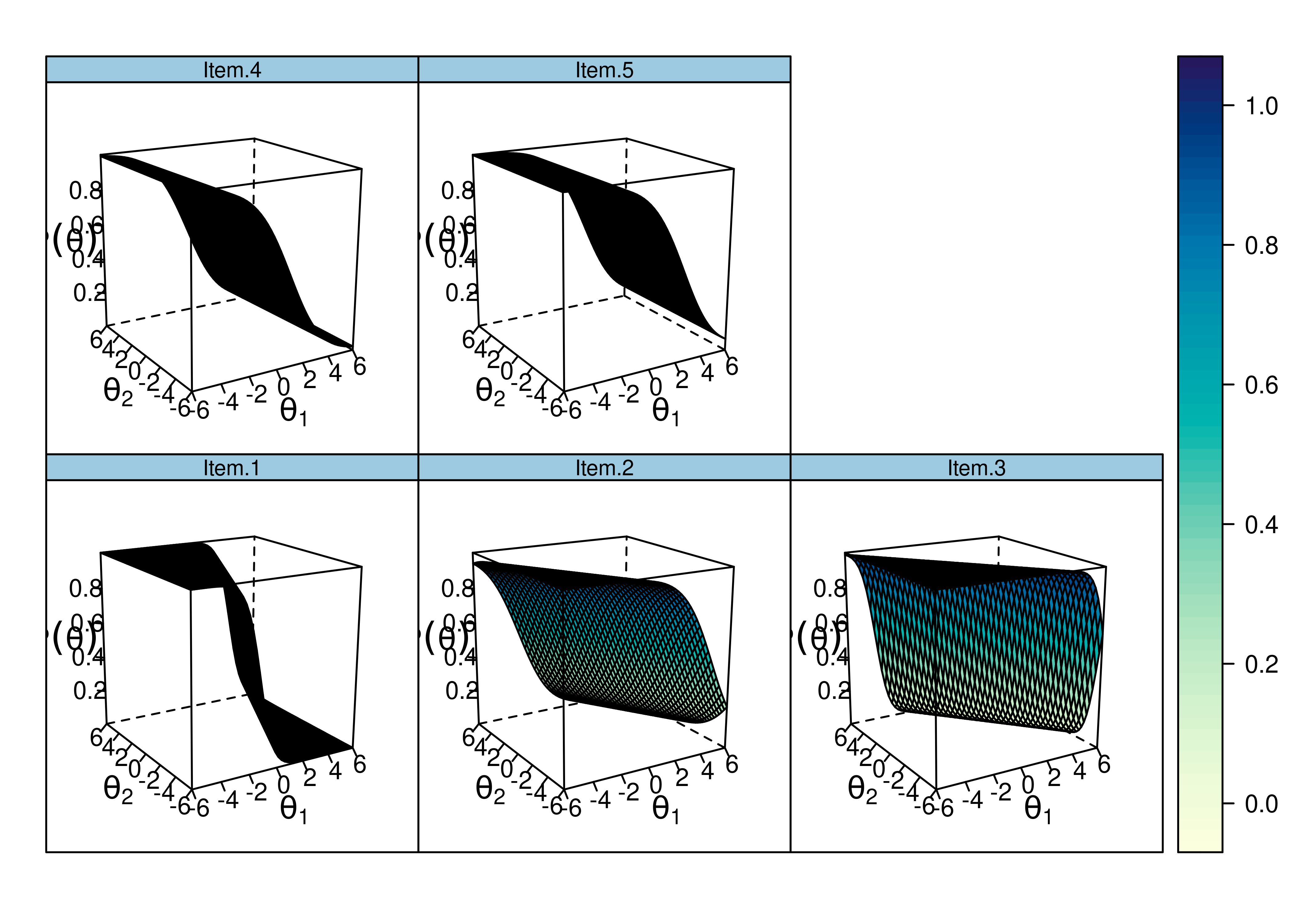

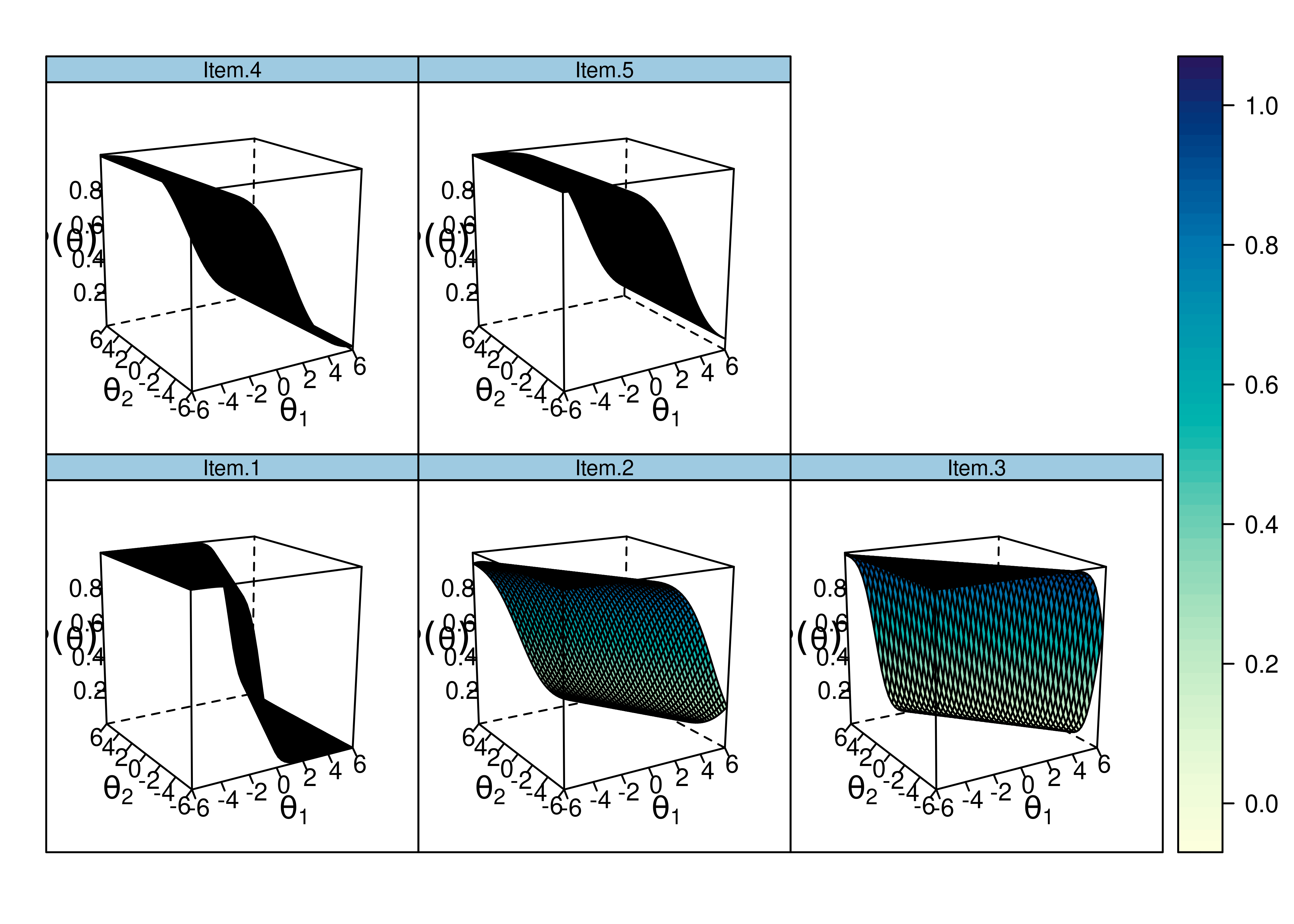

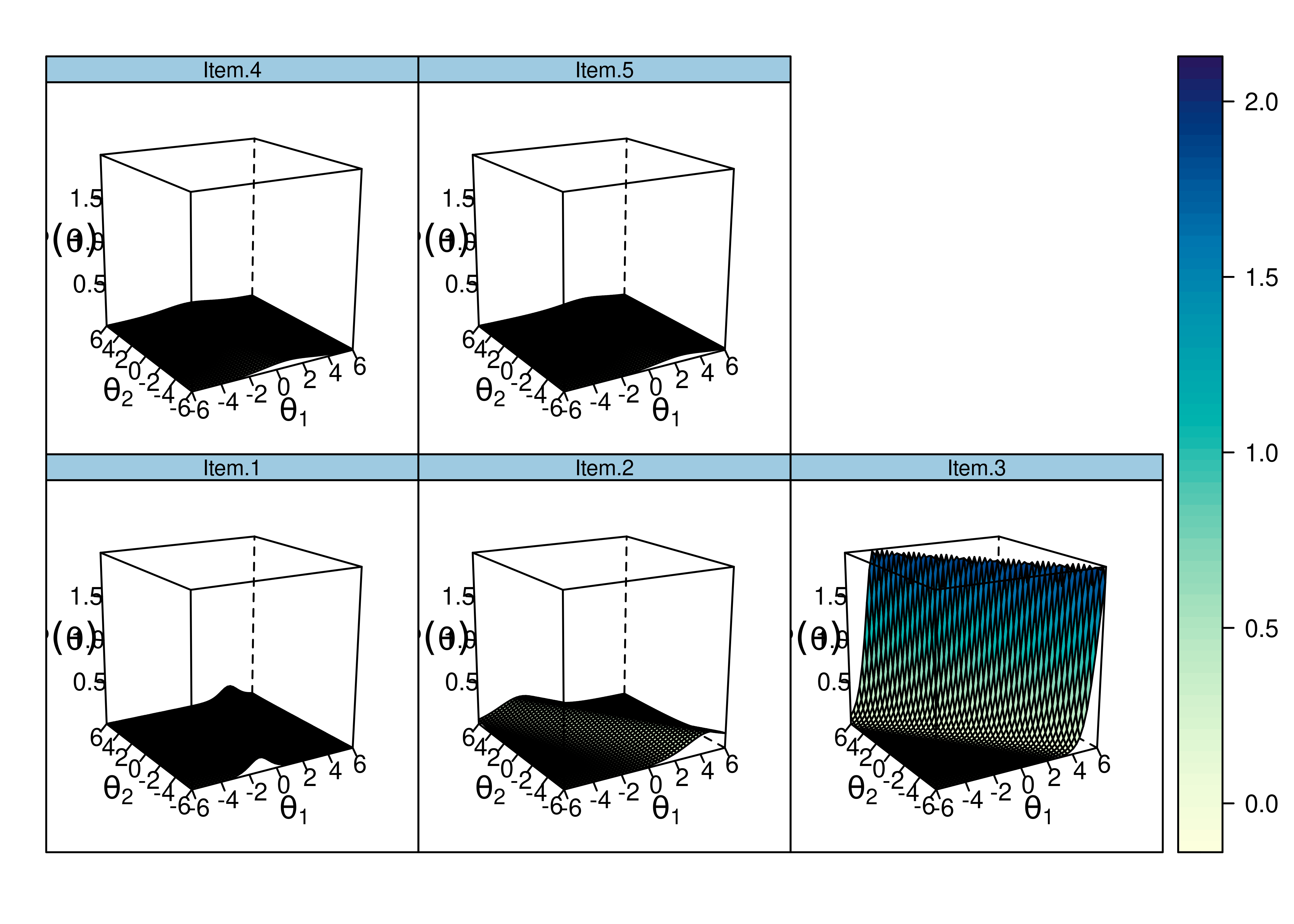

Item characteristic curve (ICC) plots of the probability of item endorsement (or getting the item correct) as a function of a person’s level on each latent construct (theta; \(\theta\)) are in Figures 8.51 and 8.52.

Figure 8.51: Item Characteristic Curves From Two-Parameter Multidimensional Item Response Theory Model.

Figure 8.52: Item Characteristic Curves From Two-Parameter Multidimensional Item Response Theory Model.

8.6.5.2.2 Item Information Curves

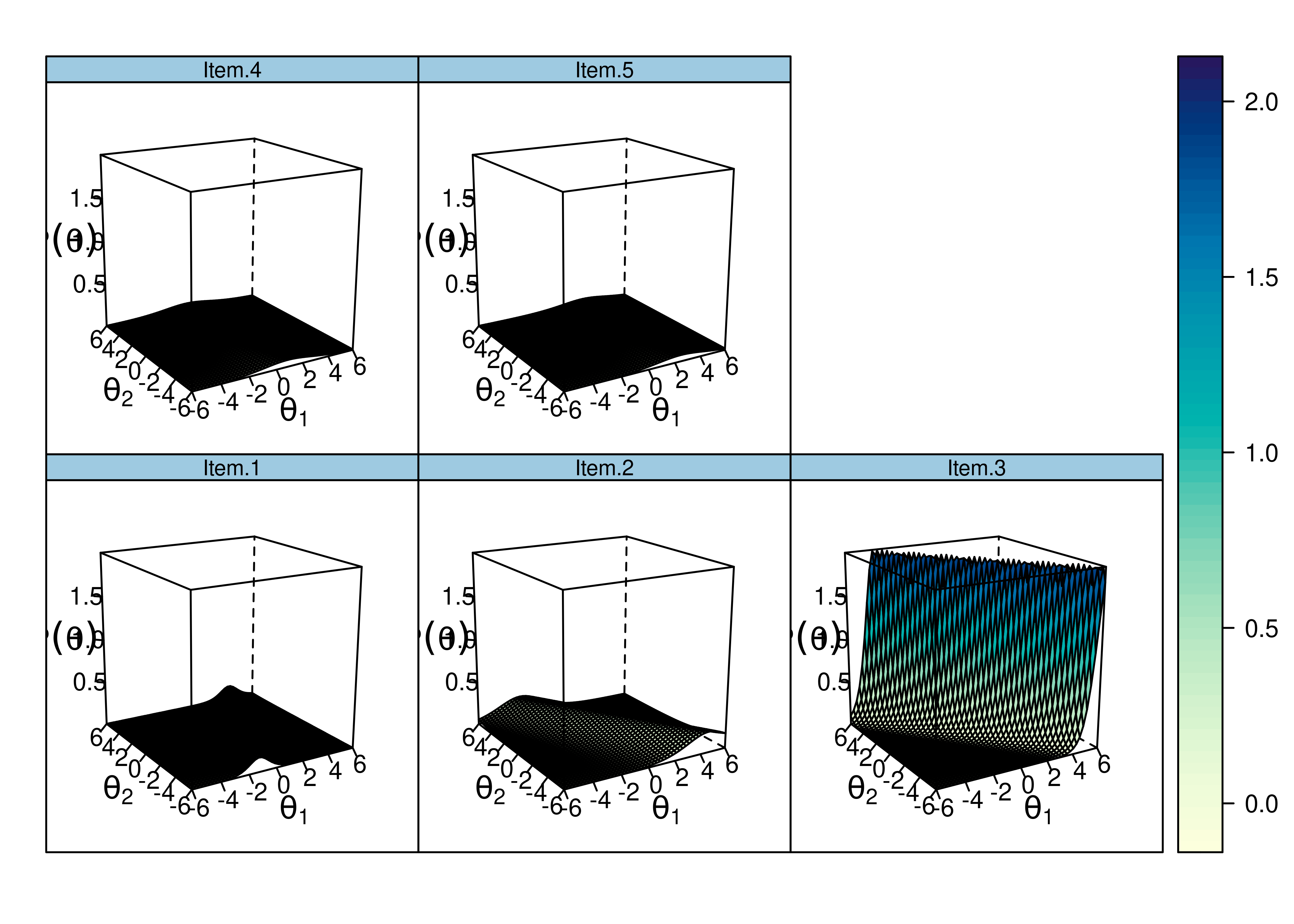

Plots of item information as a function of a person’s level on each latent construct (theta; \(\theta\)) are in Figures 8.53 and 8.54.

Figure 8.53: Item Information Curves From Two-Parameter Multidimensional Item Response Theory Model.

Figure 8.54: Item Information Curves From Two-Parameter Multidimensional Item Response Theory Model.

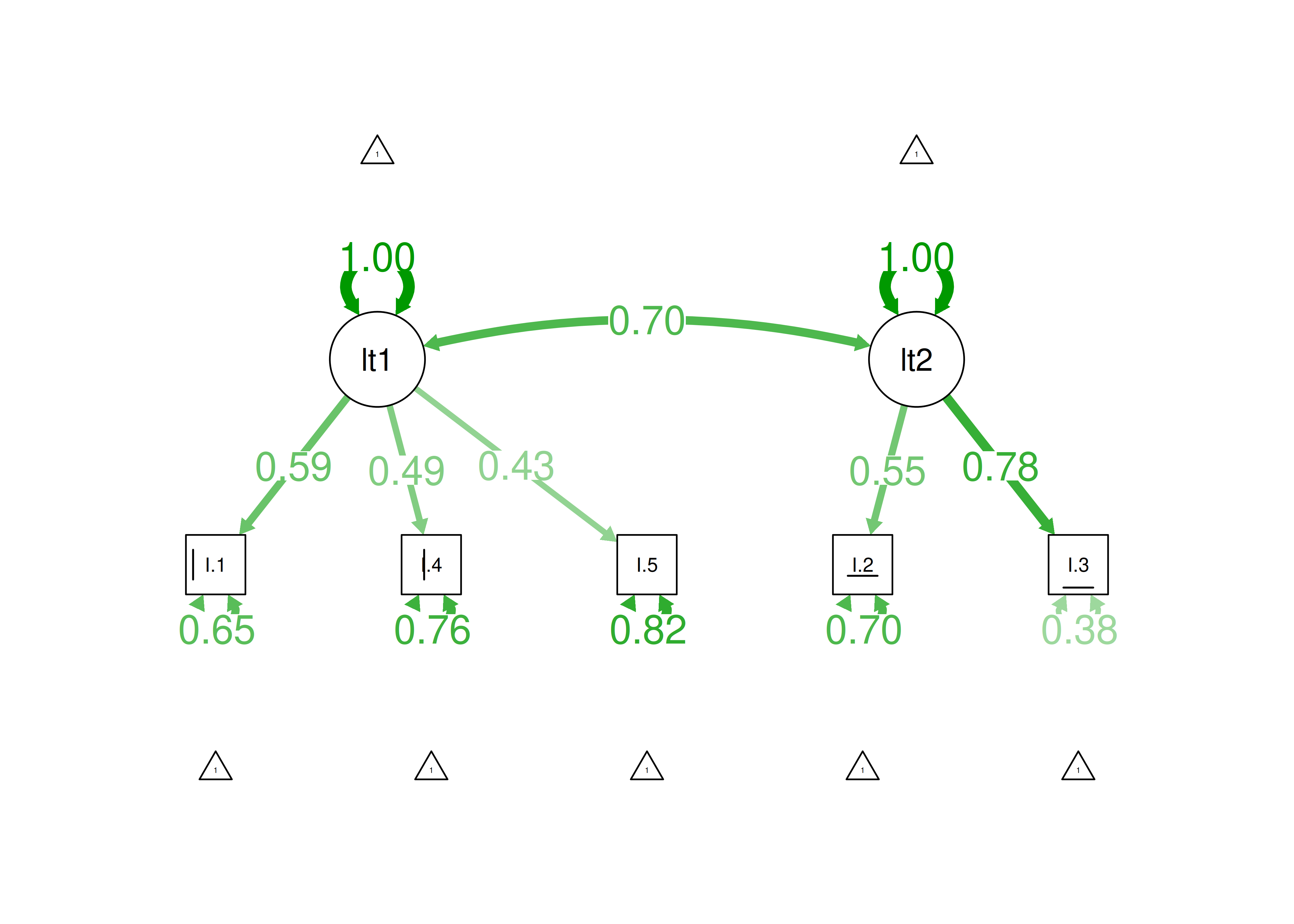

8.6.6 CFA

A two-parameter multidimensional model can also be fit in a CFA framework, sometimes called item factor analysis.

The item factor analysis models were fit in the lavaan package (Rosseel et al., 2022).

Code

twoPLModelMultidimensional_cfa <- '

# Factor Loadings (i.e., discrimination parameters)

latent1 =~ loading1*Item.1 + loading4*Item.4 + loading5*Item.5

latent2 =~ loading2*Item.2 + loading3*Item.3

# Item Thresholds (i.e., difficulty parameters)

Item.1 | threshold1*t1

Item.2 | threshold2*t1

Item.3 | threshold3*t1

Item.4 | threshold4*t1

Item.5 | threshold5*t1

'

twoPLModelMultidimensional_cfa_fit = sem(

model = twoPLModelMultidimensional_cfa,

data = mydataIRT,

ordered = c("Item.1", "Item.2", "Item.3", "Item.4","Item.5"),

mimic = "Mplus",

estimator = "WLSMV",

std.lv = TRUE,

parameterization = "theta")

summary(

twoPLModelMultidimensional_cfa_fit,

fit.measures = TRUE,

rsquare = TRUE,

standardized = TRUE)lavaan 0.6-20 ended normally after 41 iterations

Estimator DWLS

Optimization method NLMINB

Number of model parameters 11

Number of observations 1000

Model Test User Model:

Standard Scaled

Test Statistic 1.882 2.469

Degrees of freedom 4 4

P-value (Chi-square) 0.757 0.650

Scaling correction factor 0.775

Shift parameter 0.039

simple second-order correction (WLSMV)

Model Test Baseline Model:

Test statistic 244.385 228.667

Degrees of freedom 10 10

P-value 0.000 0.000

Scaling correction factor 1.072

User Model versus Baseline Model:

Comparative Fit Index (CFI) 1.000 1.000

Tucker-Lewis Index (TLI) 1.023 1.018

Robust Comparative Fit Index (CFI) 1.000

Robust Tucker-Lewis Index (TLI) 1.032

Root Mean Square Error of Approximation:

RMSEA 0.000 0.000

90 Percent confidence interval - lower 0.000 0.000

90 Percent confidence interval - upper 0.033 0.038

P-value H_0: RMSEA <= 0.050 0.994 0.989

P-value H_0: RMSEA >= 0.080 0.000 0.000

Robust RMSEA 0.000

90 Percent confidence interval - lower 0.000

90 Percent confidence interval - upper 0.088

P-value H_0: Robust RMSEA <= 0.050 0.798

P-value H_0: Robust RMSEA >= 0.080 0.072

Standardized Root Mean Square Residual:

SRMR 0.021 0.021

Parameter Estimates:

Parameterization Theta

Standard errors Robust.sem

Information Expected

Information saturated (h1) model Unstructured

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

latent1 =~

Item.1 (ldn1) 0.731 0.139 5.268 0.000 0.731 0.590

Item.4 (ldn4) 0.560 0.094 5.962 0.000 0.560 0.488

Item.5 (ldn5) 0.472 0.098 4.832 0.000 0.472 0.427

latent2 =~

Item.2 (ldn2) 0.660 0.114 5.774 0.000 0.660 0.551

Item.3 (ldn3) 1.265 0.358 3.529 0.000 1.265 0.784

Covariances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

latent1 ~~

latent2 0.696 0.090 7.718 0.000 0.696 0.696

Thresholds:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Itm.1|1 (thr1) -1.172 0.095 -12.280 0.000 -1.172 -0.946

Itm.2|1 (thr2) -0.488 0.055 -8.923 0.000 -0.488 -0.407

Itm.3|1 (thr3) -1.202 0.218 -5.502 0.000 -1.202 -0.745

Itm.4|1 (thr4) -0.308 0.048 -6.442 0.000 -0.308 -0.269

Itm.5|1 (thr5) -1.114 0.066 -16.862 0.000 -1.114 -1.007

Variances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

.Item.1 1.000 1.000 0.652

.Item.4 1.000 1.000 0.761

.Item.5 1.000 1.000 0.818

.Item.2 1.000 1.000 0.697

.Item.3 1.000 1.000 0.385

latent1 1.000 1.000 1.000

latent2 1.000 1.000 1.000

Scales y*:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

Item.1 0.807 0.807 1.000

Item.4 0.873 0.873 1.000

Item.5 0.904 0.904 1.000

Item.2 0.835 0.835 1.000

Item.3 0.620 0.620 1.000

R-Square:

Estimate

Item.1 0.348

Item.4 0.239

Item.5 0.182

Item.2 0.303

Item.3 0.615Code

chisq df pvalue baseline.chisq baseline.df

1.882 4.000 0.757 244.385 10.000

baseline.pvalue rmsea cfi tli srmr

0.000 0.000 1.000 1.023 0.021 $type

[1] "cor.bollen"

$cov

Item.1 Item.4 Item.5 Item.2 Item.3

Item.1 0.000

Item.4 0.008 0.000

Item.5 0.034 -0.048 0.000

Item.2 0.000 0.016 -0.028 0.000

Item.3 -0.031 0.009 0.032 0.000 0.000

$mean

Item.1 Item.4 Item.5 Item.2 Item.3

0 0 0 0 0

$th

Item.1|t1 Item.4|t1 Item.5|t1 Item.2|t1 Item.3|t1

0 0 0 0 0 latent1 latent2

0.321 0.426 latent1 latent2

0.263 0.504 Code

Figure 8.55: Item Factor Analysis Diagram of Two-Parameter Multidimensional Logistic Model.

8.7 Three-Parameter Logistic Model

A three-parameter logistic (3PL) IRT model is a model fit to dichotomous data, which estimates a different difficulty (\(b\)), discrimination (\(a\)), and guessing parameter for each item.

3PL models were fit using the mirt package (Chalmers, 2020).

8.7.2 Model Summary

F1 h2

Item.1 0.509 0.259

Item.2 0.750 0.562

Item.3 0.700 0.489

Item.4 0.397 0.158

Item.5 0.411 0.169

SE.F1

Item.1 0.068

Item.2 0.183

Item.3 0.068

Item.4 0.060

Item.5 0.069

SS loadings: 1.637

Proportion Var: 0.327

Factor correlations:

F1

F1 1$items

a b g u

Item.1 1.007 -1.853 0.000 1

Item.2 1.928 -0.049 0.295 1

Item.3 1.667 -1.068 0.000 1

Item.4 0.736 -0.655 0.000 1

Item.5 0.767 -2.436 0.000 1

$means

F1

0

$cov

F1

F1 18.7.4 Plots

8.7.4.1 Test Curves

The test curves suggest that the measure is most reliable (i.e., provides the most information has the smallest standard error of measurement) at lower levels of the construct.

8.7.4.1.1 Test Characteristic Curve

A test characteristic curve (TCC) plot of the expected total score as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.56.

Figure 8.56: Test Characteristic Curve From Three-Parameter Logistic Item Response Theory Model.

8.7.4.1.2 Test Information Curve

A plot of test information as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.57.

Figure 8.57: Test Information Curve From Three-Parameter Logistic Item Response Theory Model.

8.7.4.1.3 Test Reliability

The estimate of marginal reliability is below:

[1] 0.4681812A plot of test reliability as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.58.

Figure 8.58: Test Reliability From Three-Parameter Logistic Item Response Theory Model.

8.7.4.1.4 Test Standard Error of Measurement

A plot of test standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.59.

Figure 8.59: Test Standard Error of Measurement From Three-Parameter Logistic Item Response Theory Model.

8.7.4.1.5 Test Information Curve and Standard Errors

A plot of test information and standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.60.

Figure 8.60: Test Information Curve and Standard Error of Measurement From Three-Parameter Logistic Item Response Theory Model.

8.7.4.2 Item Curves

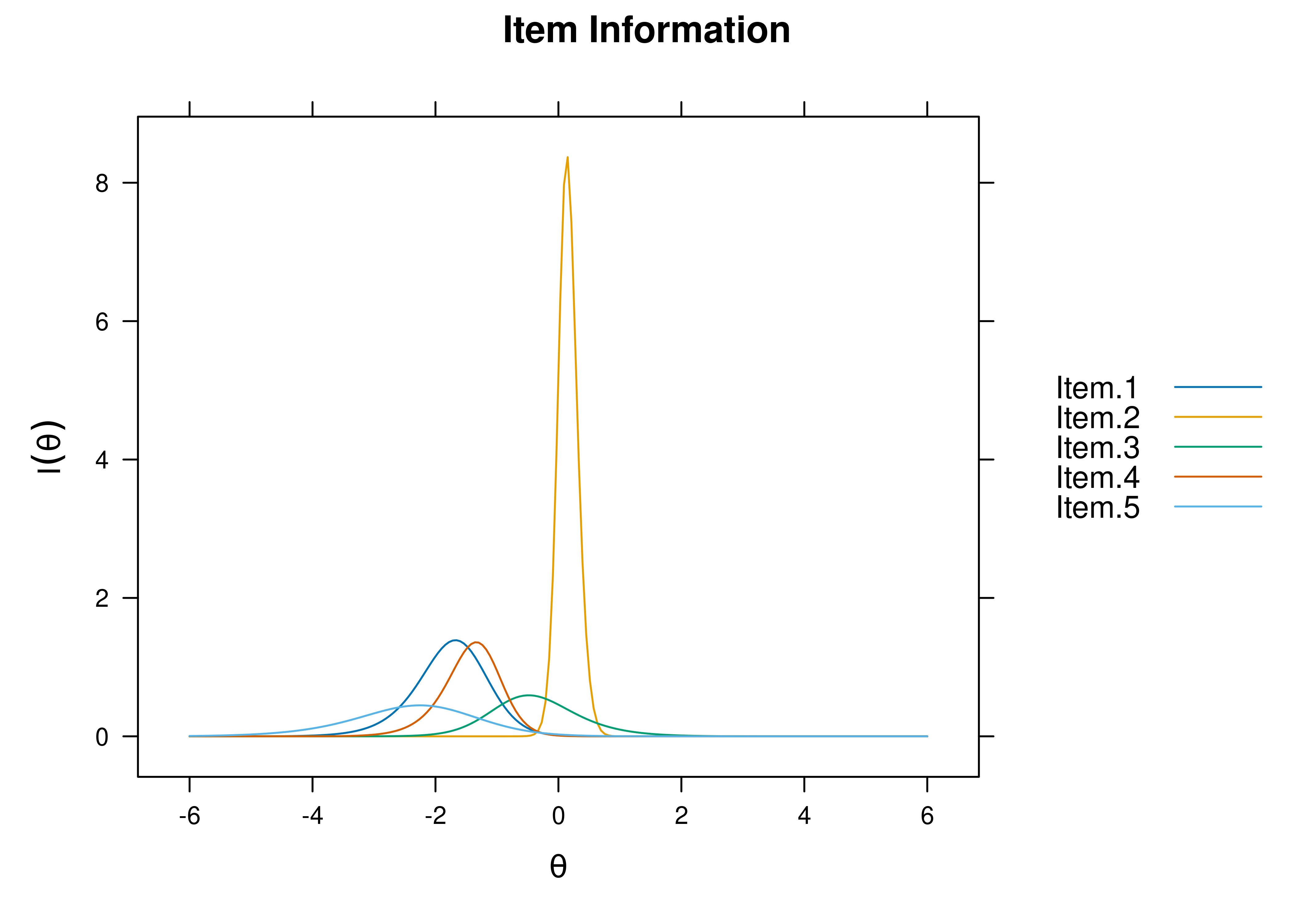

8.7.4.2.1 Item Characteristic Curves

Item characteristic curve (ICC) plots of the probability of item endorsement (or getting the item correct) as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.61 and 8.62.

Figure 8.61: Item Characteristic Curves From Three-Parameter Logistic Item Response Theory Model.

Figure 8.62: Item Characteristic Curves From Three-Parameter Logistic Item Response Theory Model.

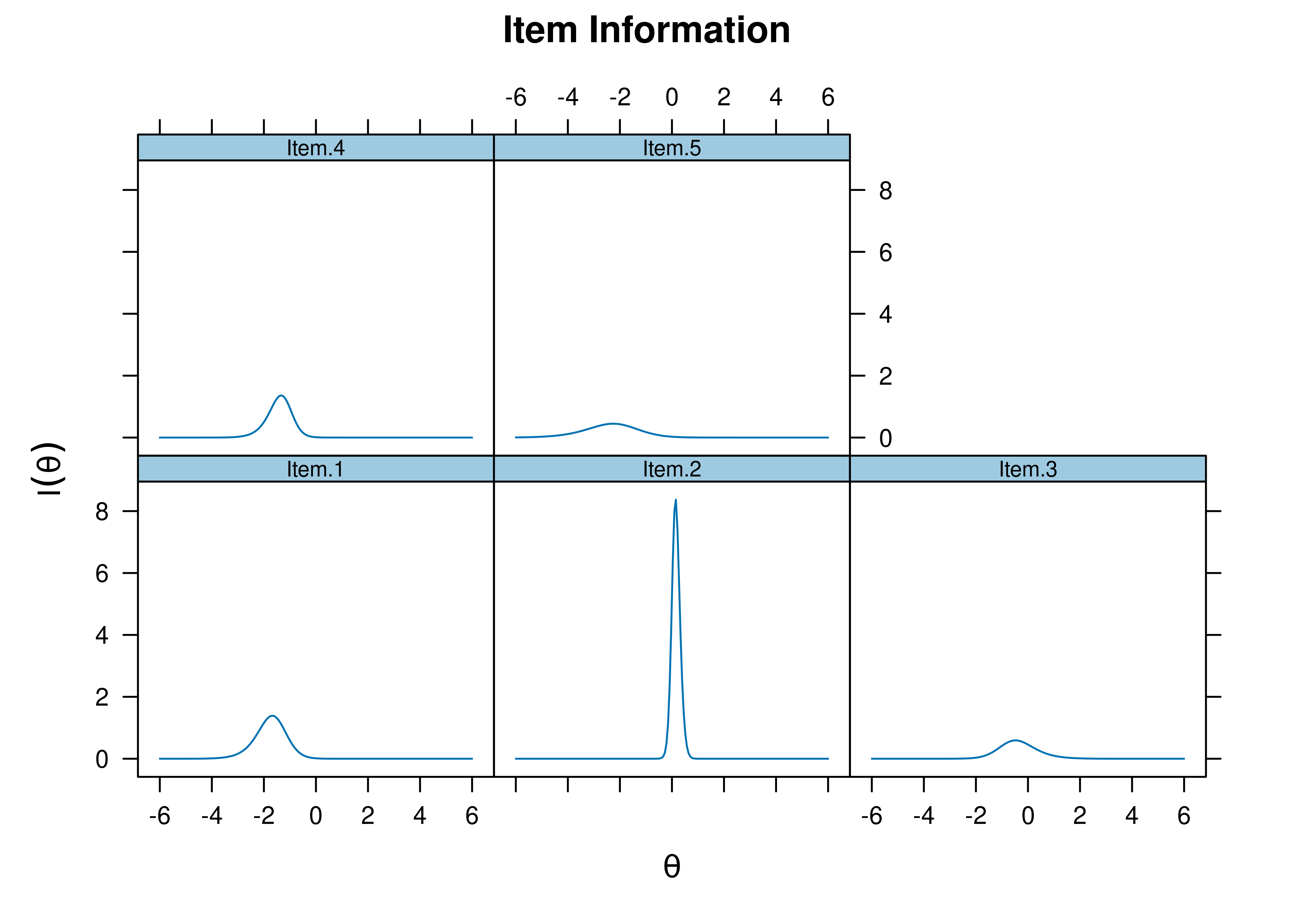

8.7.4.2.2 Item Information Curves

Plots of item information as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.63 and 8.64.

Figure 8.63: Item Information Curves From Three-Parameter Logistic Item Response Theory Model.

Figure 8.64: Item Information Curves From Three-Parameter Logistic Item Response Theory Model.

8.8 Four-Parameter Logistic Model

A four-parameter logistic (4PL) IRT model is a model fit to dichotomous data, which estimates a different difficulty (\(b\)), discrimination (\(a\)), guessing, and careless errors parameter for each item.

4PL models were fit using the mirt package (Chalmers, 2020).

8.8.2 Model Summary

F1 h2

Item.1 0.834 0.695

Item.2 0.980 0.961

Item.3 0.762 0.580

Item.4 0.876 0.768

Item.5 0.648 0.420

SE.F1

Item.1 0.129

Item.2 0.065

Item.3 0.179

Item.4 0.122

Item.5 0.193

SS loadings: 3.425

Proportion Var: 0.685

Factor correlations:

F1

F1 1$items

a b g u

Item.1 2.570 -1.619 0.000 0.911

Item.2 8.490 0.093 0.370 0.992

Item.3 2.002 -0.646 0.271 0.999

Item.4 3.094 -1.224 0.000 0.708

Item.5 1.450 -2.166 0.002 0.920

$means

F1

0

$cov

F1

F1 18.8.4 Plots

8.8.4.1 Test Curves

The test curves suggest that the measure is most reliable (i.e., provides the most information has the smallest standard error of measurement) at middle to lower levels of the construct.

8.8.4.1.1 Test Characteristic Curve

A test characteristic curve (TCC) plot of the expected total score as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.65.

Figure 8.65: Test Characteristic Curve From Four-Parameter Logistic Item Response Theory Model.

8.8.4.1.2 Test Information Curve

A plot of test information as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.66.

Figure 8.66: Test Information Curve From Four-Parameter Logistic Item Response Theory Model.

8.8.4.1.3 Test Reliability

The estimate of marginal reliability is below:

[1] 0.5060376A plot of test reliability as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.67.

Figure 8.67: Test Reliability From Four-Parameter Logistic Item Response Theory Model.

8.8.4.1.4 Test Standard Error of Measurement

A plot of test standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.68.

Figure 8.68: Test Standard Error of Measurement From Four-Parameter Logistic Item Response Theory Model.

8.8.4.1.5 Test Information Curve and Standard Errors

A plot of test information and standard error of measurement (SEM) as a function of a person’s level on the latent construct (theta; \(\theta\)) is in Figure 8.69.

Figure 8.69: Test Information Curve and Standard Error of Measurement From Four-Parameter Logistic Item Response Theory Model.

8.8.4.2 Item Curves

8.8.4.2.1 Item Characteristic Curves

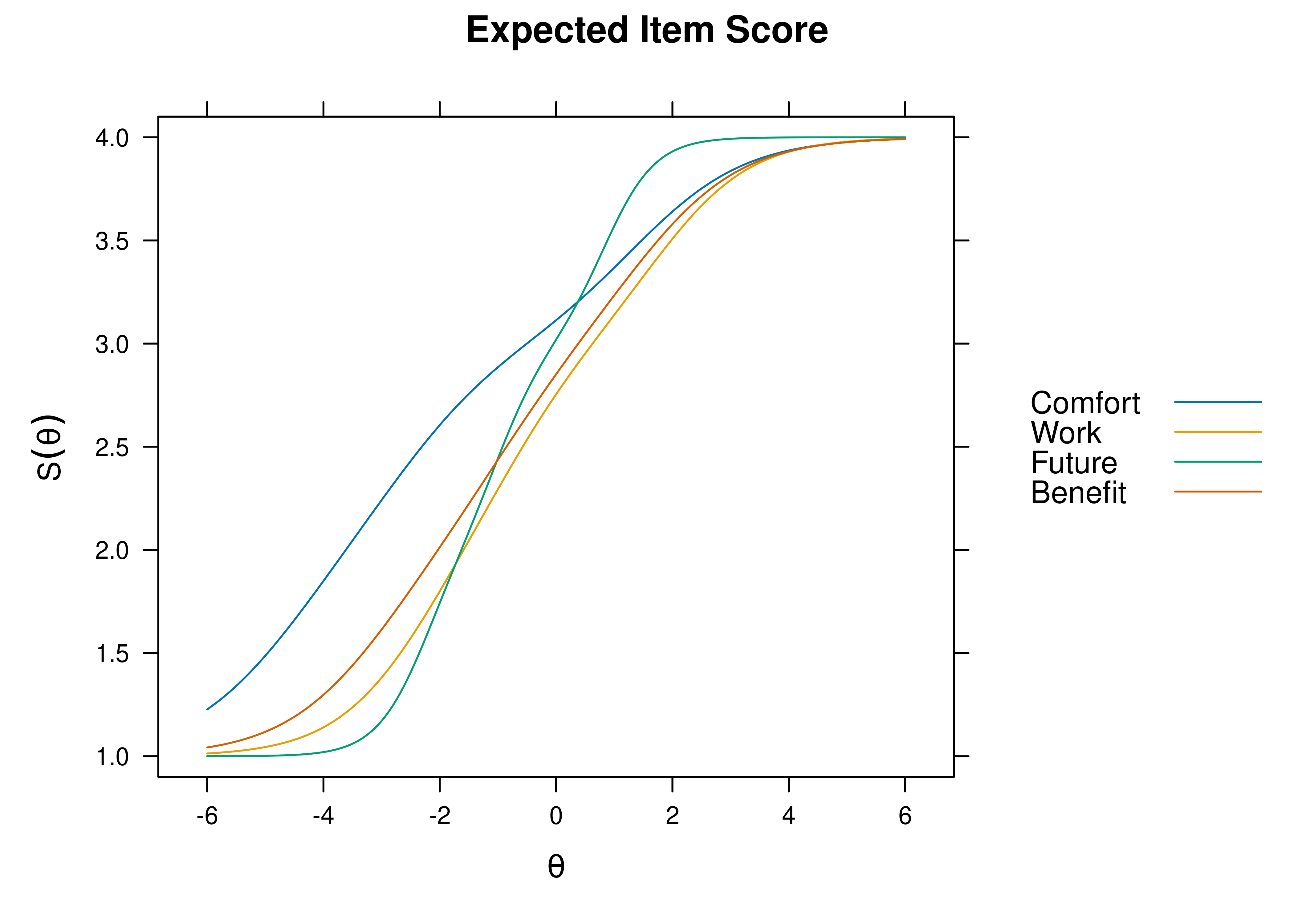

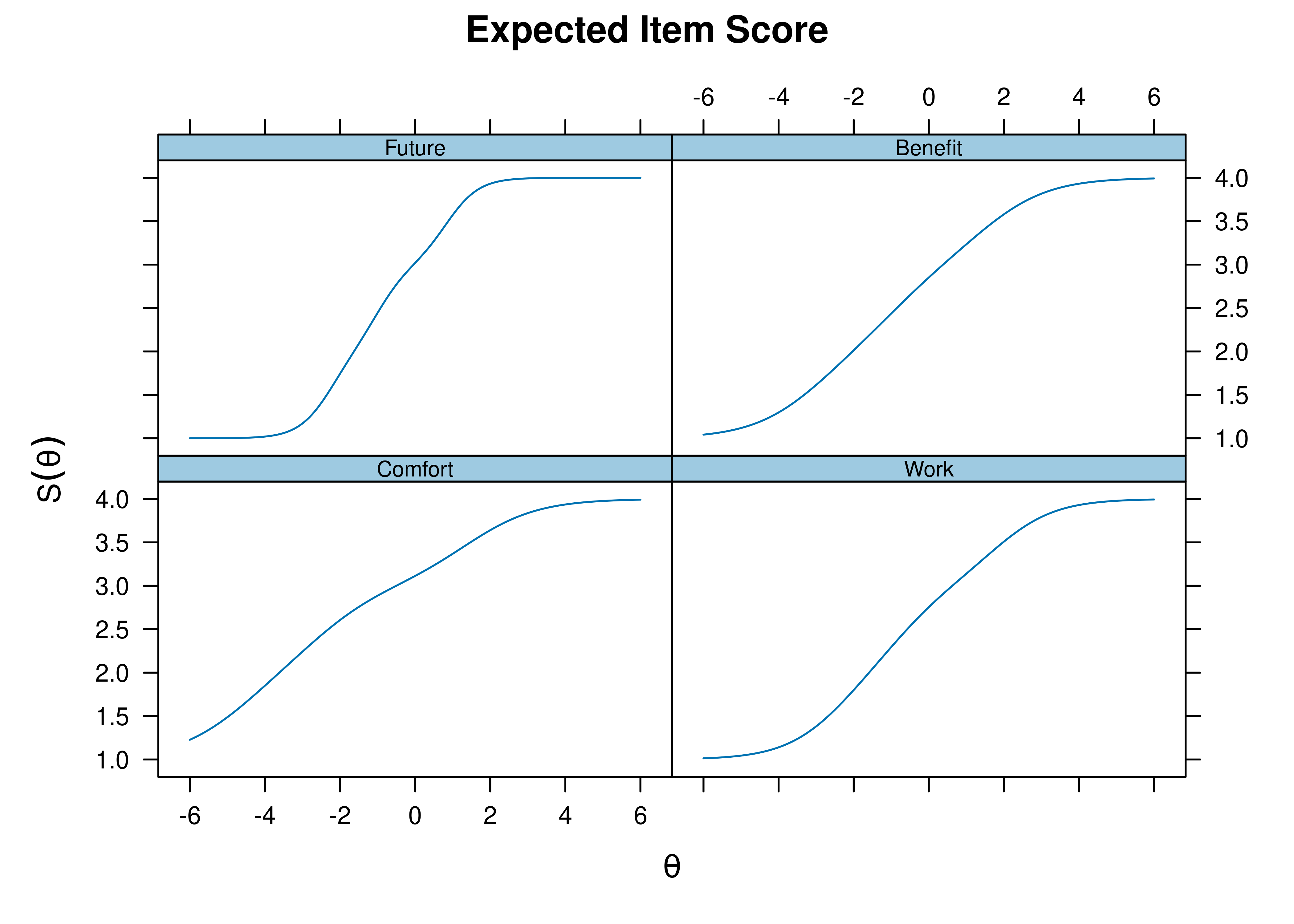

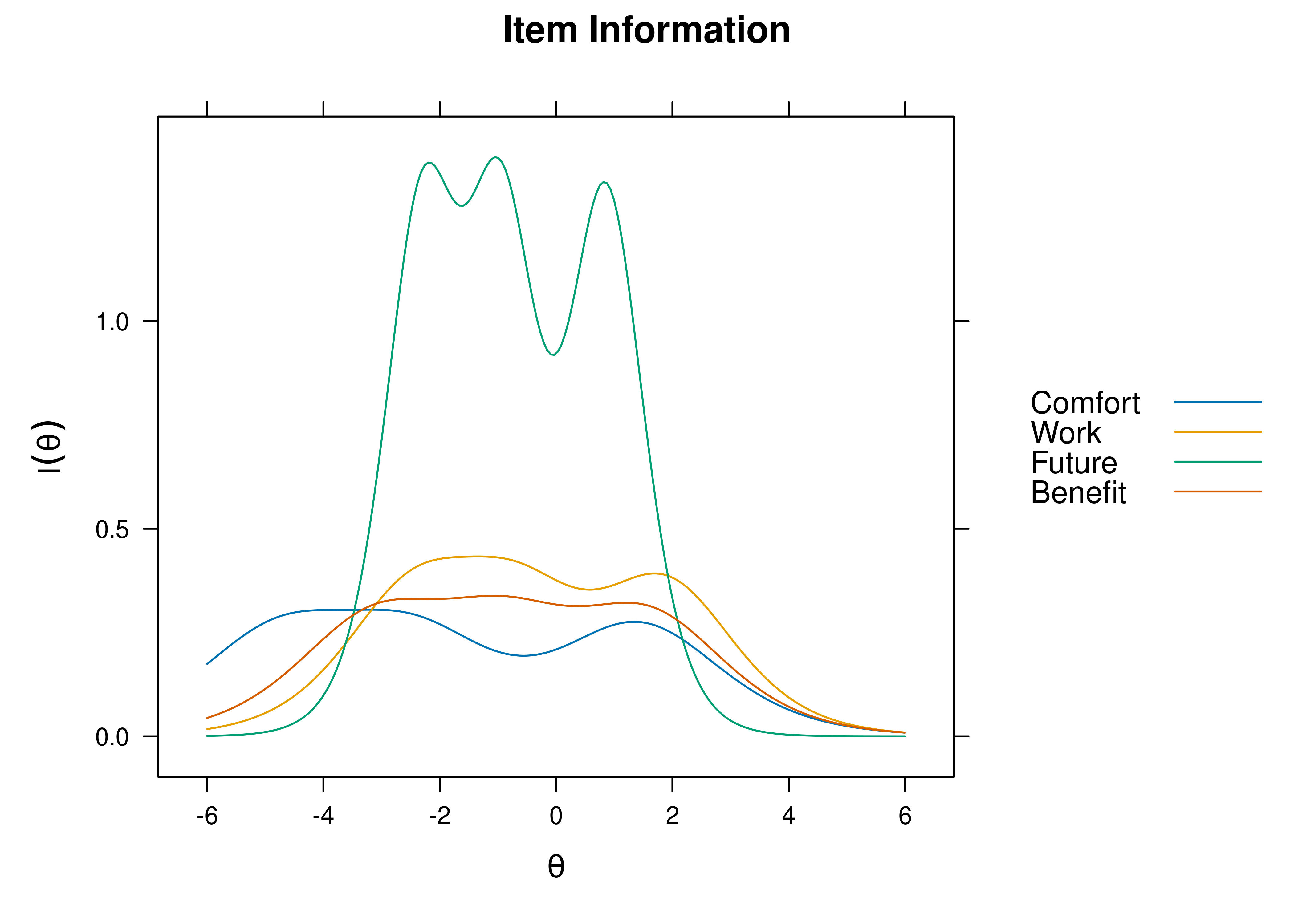

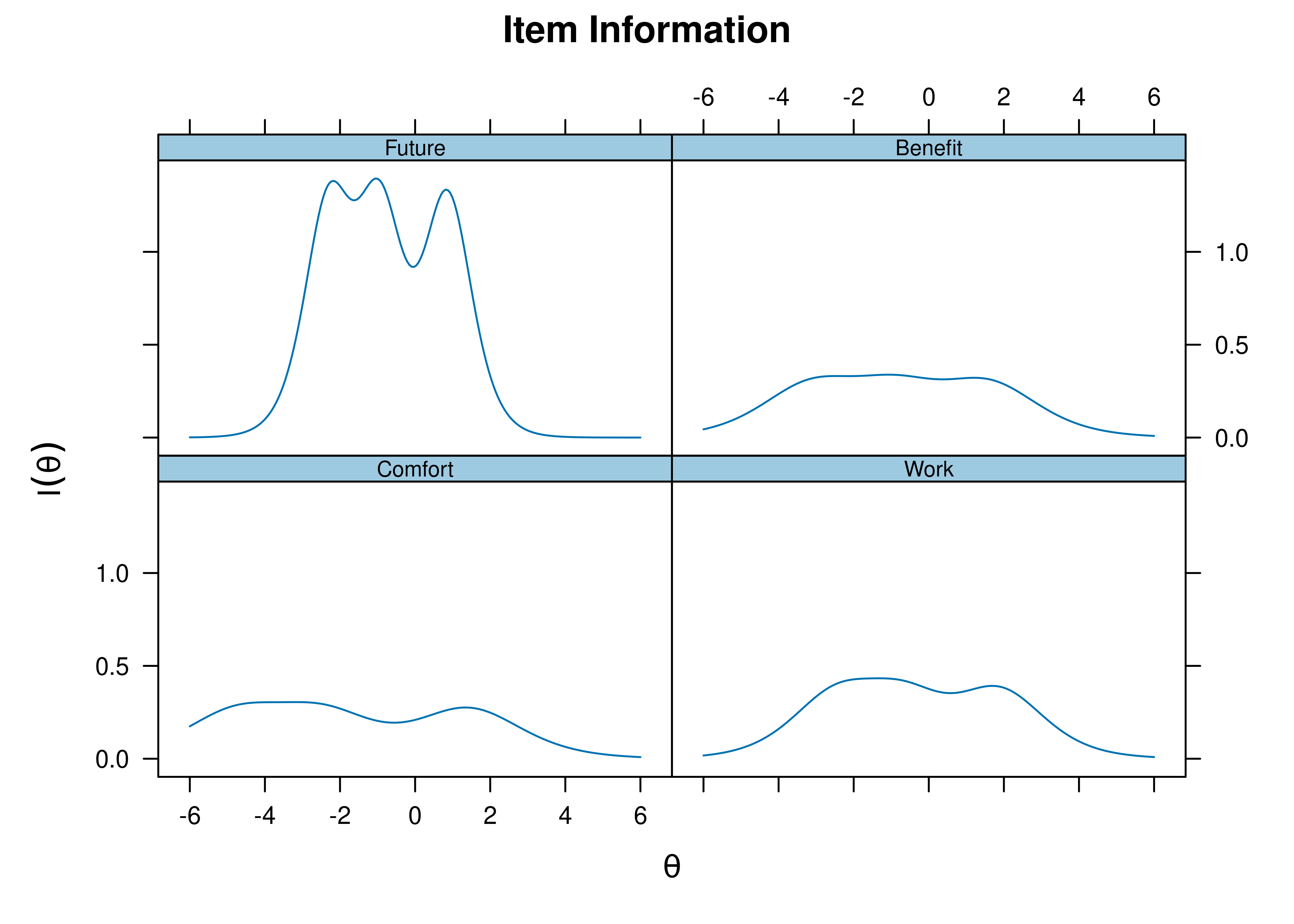

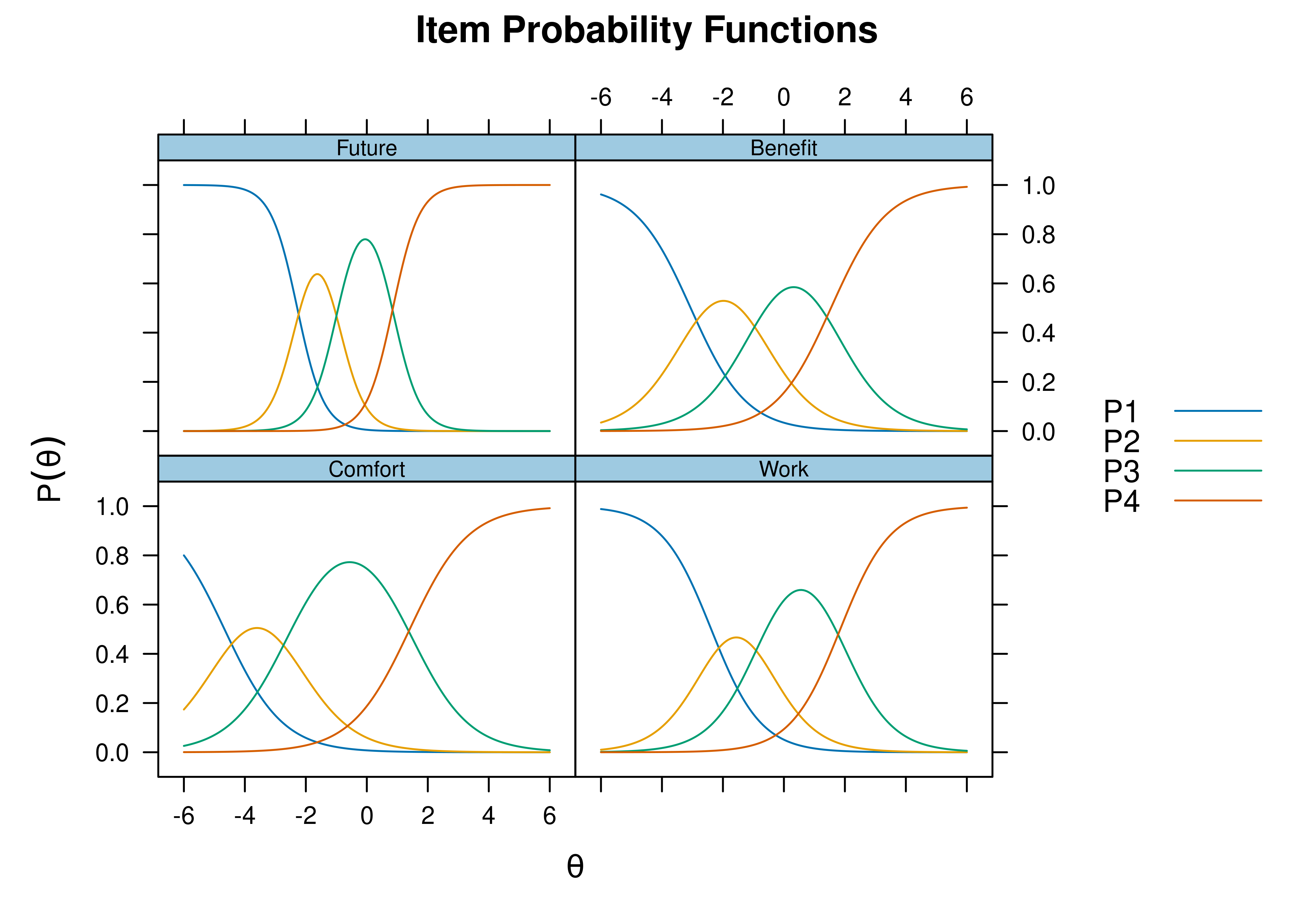

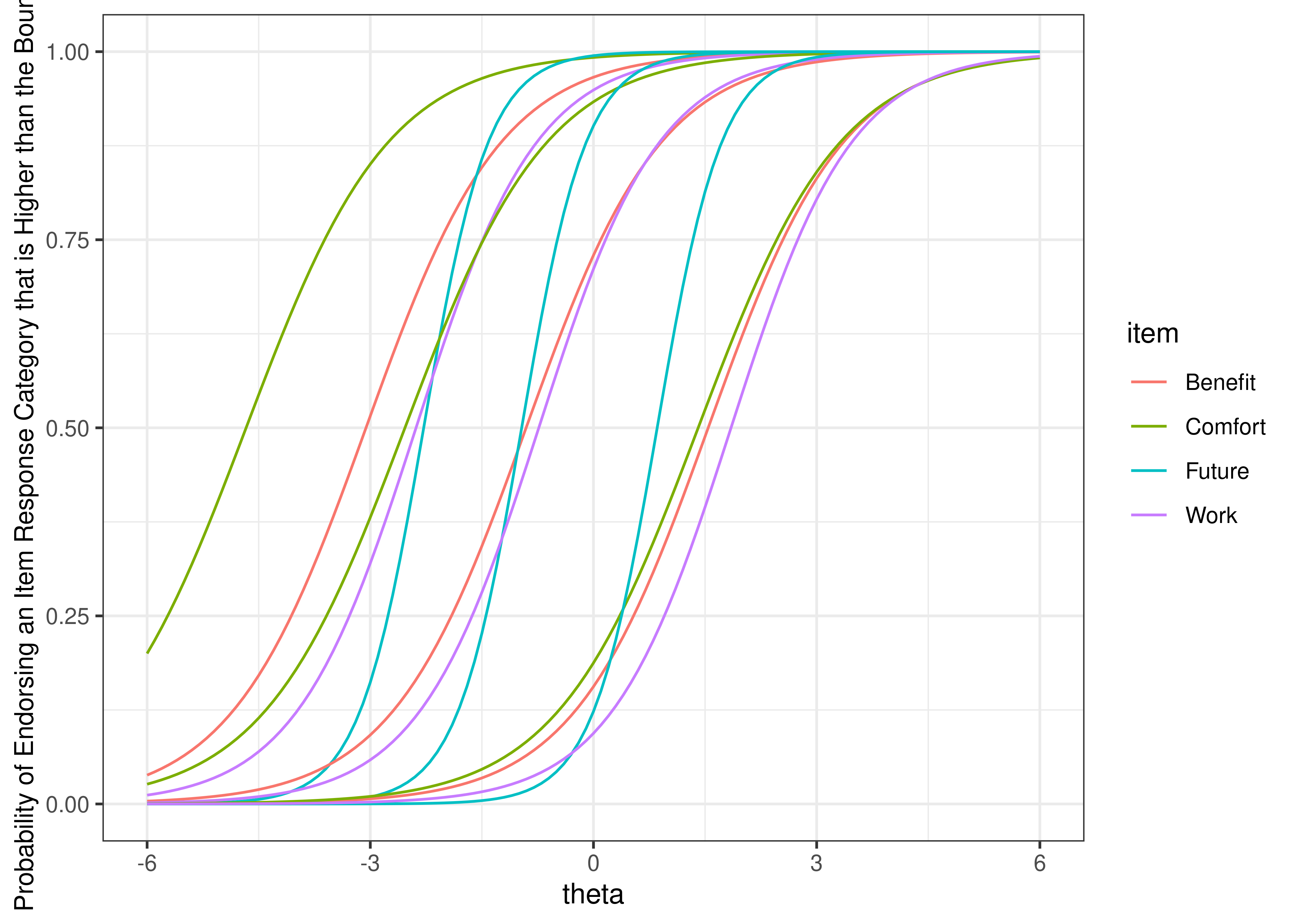

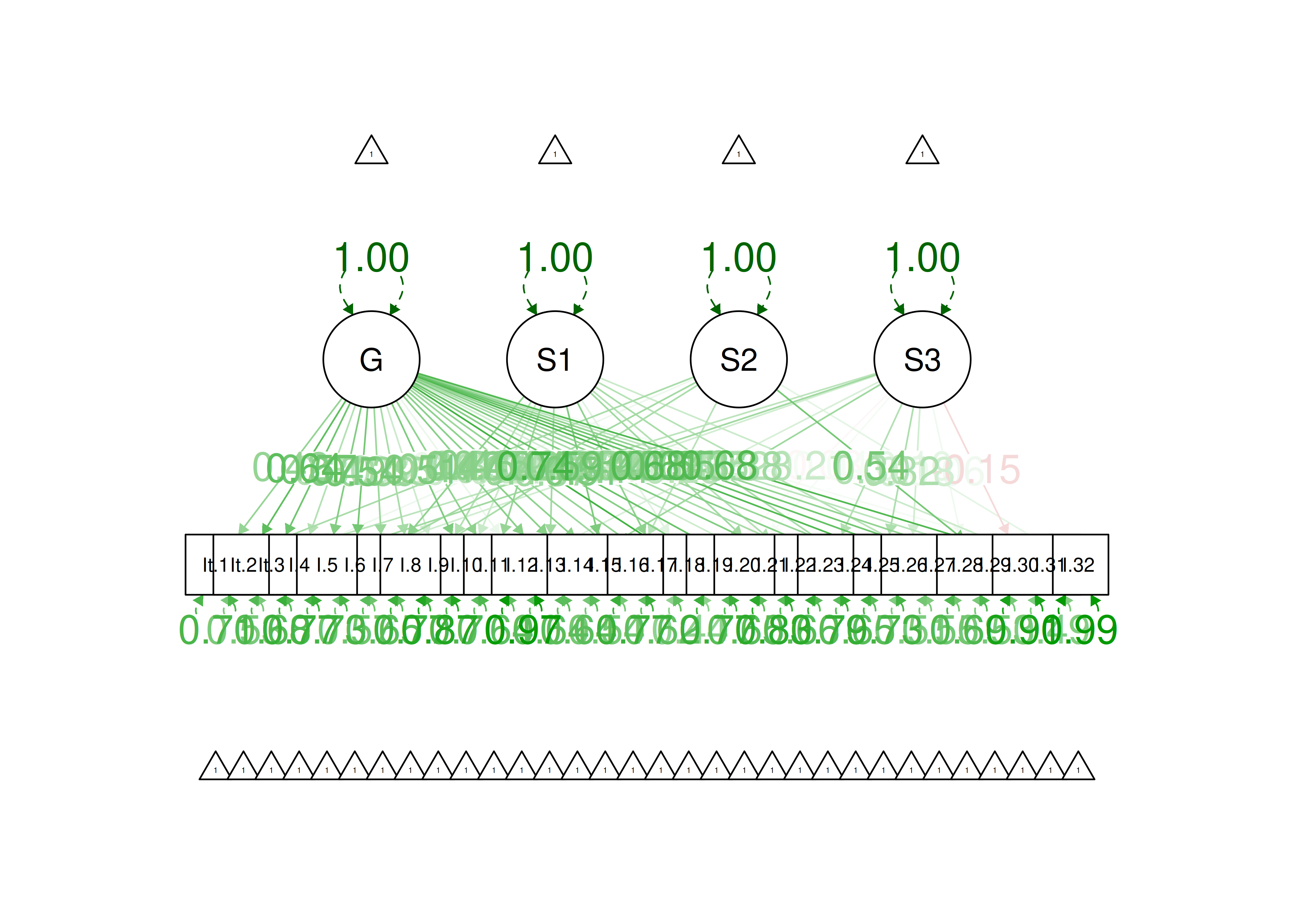

Item characteristic curve (ICC) plots of the probability of item endorsement (or getting the item correct) as a function of a person’s level on the latent construct (theta; \(\theta\)) are in Figures 8.70 and 8.71.