I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

Opening an issue or submitting a pull request on GitHub: https://github.com/isaactpetersen/Principles-Psychological-Assessment

Adding an annotation using hypothes.is.

To add an annotation, select some text and then click the

symbol on the pop-up menu.

To see the annotations of others, click the

symbol in the upper right-hand corner of the page.

Chapter 14 Factor Analysis and Principal Component Analysis

“It can scarcely be denied that the supreme goal of all theory is to make the irreducible basic elements as simple and as few as possible without having to surrender the adequate representation of a single datum of experience.”

— Albert Einstein (1934, p. 165); sometimes paraphrased as “Everything should be made as simple as possible, but not simpler.”

14.1 Overview of Factor Analysis

Factor analysis is a class of latent variable models that is designated to identify the structure of a measure or set of measures, and ideally, a construct or set of constructs. It aims to identify the optimal latent structure for a group of variables. Factor analysis encompasses two general types: confirmatory factor analysis and exploratory factor analysis. Exploratory factor analysis (EFA) is a latent variable modeling approach that is used when the researcher has no a priori hypotheses about how a set of variables is structured. EFA seeks to identify the empirically optimal-fitting model in ways that balance accuracy (i.e., variance accounted for) and parsimony (i.e., simplicity). Confirmatory factor analysis (CFA) is a latent variable modeling approach that is used when a researcher wants to evaluate how well a hypothesized model fits, and the model can be examined in comparison to alternative models. Using a CFA approach, the researcher can pit models representing two theoretical frameworks against each other to see which better accounts for the observed data.

Factor analysis is considered to be a “pure” data-driven method for identifying the structure of the data, but the “truth” that we get depends heavily on the decisions we make regarding the parameters of our factor analysis (Floyd & Widaman, 1995; Sarstedt et al., 2024). The goal of factor analysis is to identify simple, parsimonious factors that underlie the “junk” (i.e., scores filled with measurement error) that we observe.

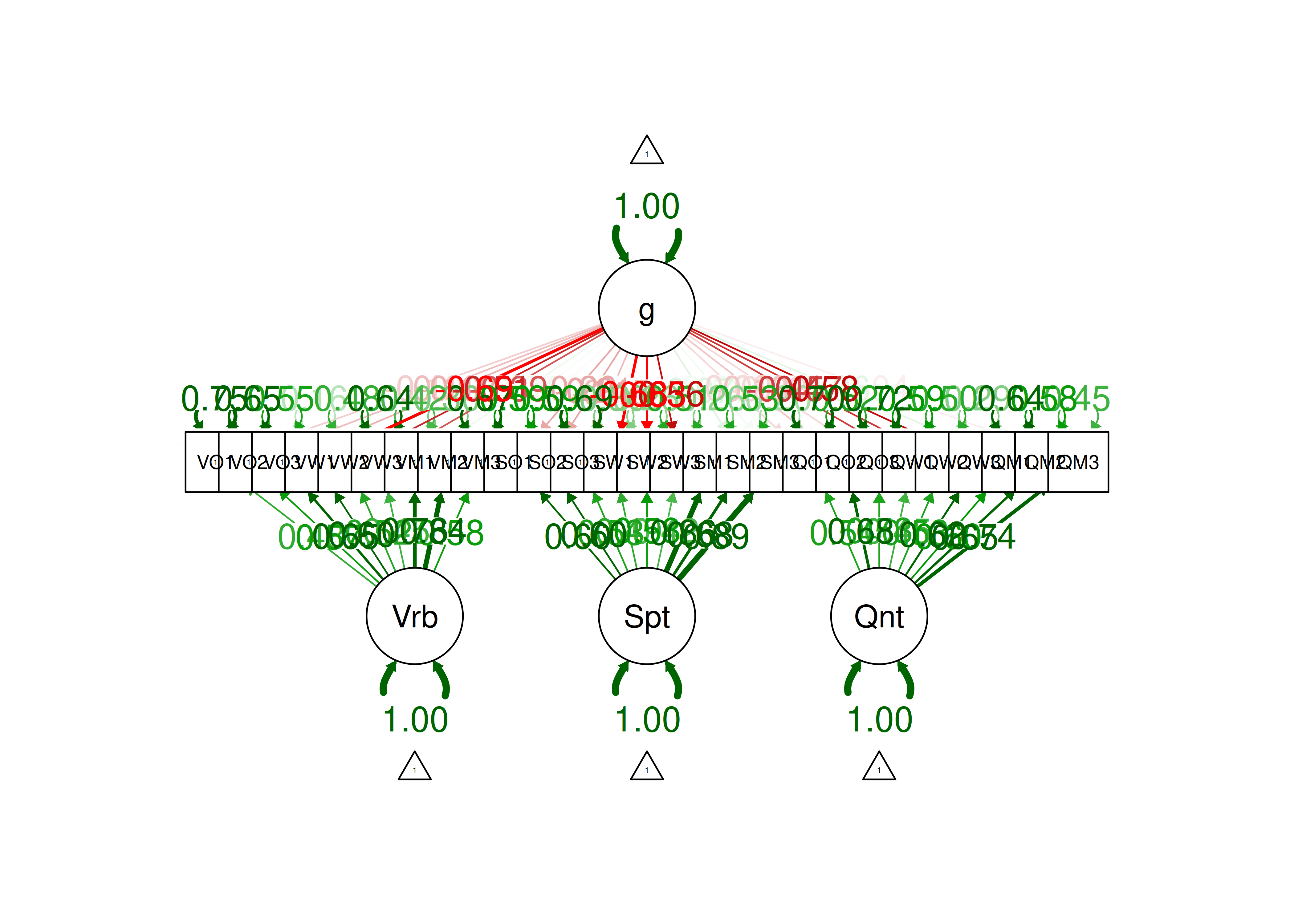

It used to take a long time to calculate a factor analysis because it was computed by hand. Now, it is fast to compute factor analysis with computers (e.g., oftentimes less than 30 ms). In the 1920s, Spearman developed factor analysis to understand the factor structure of intelligence. It was a long process—it took Spearman around one year to calculate the first factor analysis! Factor analysis takes a large dimension data set and simplifies it into a smaller set of factors that are thought to reflect underlying constructs. If you believe that nature is simple underneath, factor analysis gives nature a chance to display the simplicity that lives beneath the complexity on the surface. Spearman identified a single factor, g, that accounted for most of the covariation between the measures of intelligence.

Factor analysis involves observed (manifest) variables and unobserved (latent) factors. In a reflective model, it is assumed that the latent factor influences the manifest variables, and the latent factor therefore reflects the common (reliable) variance among the variables. A factor model potentially includes factor loadings, residuals (errors or disturbances), intercepts/means, covariances, and regression paths. A regression path indicates a hypothesis that one variable (or factor) influences another. The standardized regression coefficient represents the strength of association between the variables or factors. A factor loading is a regression path from a latent factor to an observed (manifest) variable. The standardized factor loading represents the strength of association between the variable and the latent factor. A residual is variance in a variable (or factor) that is unexplained by other variables or factors. An indicator’s intercept is the expected value of the variable when the factor(s) (onto which it loads) is equal to zero. Covariances are the associations between variables (or factors). A covariance path between two variables represents omitted shared cause(s) of the variables. For instance, if you depict a covariance path between two variables, it means that there is a shared cause of the two variables that is omitted from the model (for instance, if the common cause is not known or was not assessed).

In factor analysis, the relation between an indicator (\(\text{X}\)) and its underlying latent factor(s) (\(\text{F}\)) can be represented with a regression formula as in Equation (14.1):

\[\begin{equation} \text{X} = \lambda \cdot \text{F} + \text{Item Intercept} + \text{Error Term} \tag{14.1} \end{equation}\]

where:

- \(\text{X}\) is the observed value of the indicator

- \(\lambda\) is the factor loading, indicating the strength of the association between the indicator and the latent factor(s)

- \(\text{F}\) is the person’s value on the latent factor(s)

- \(\text{Item Intercept}\) represents the constant term that accounts for the expected value of the indicator when the latent factor(s) are zero

- \(\text{Error Term}\) is the residual, indicating the extent of variance in the indicator that is not explained by the latent factor(s)

When the latent factors are uncorrelated, the (standardized) error term for an indicator is calculated as 1 minus the sum of squared standardized factor loadings for a given item (including cross-loadings).

Another class of factor analysis models are higher-order (or hierarchical) factor models and bifactor models. Guidelines in using higher-order factor and bifactor models are discussed by Markon (2019).

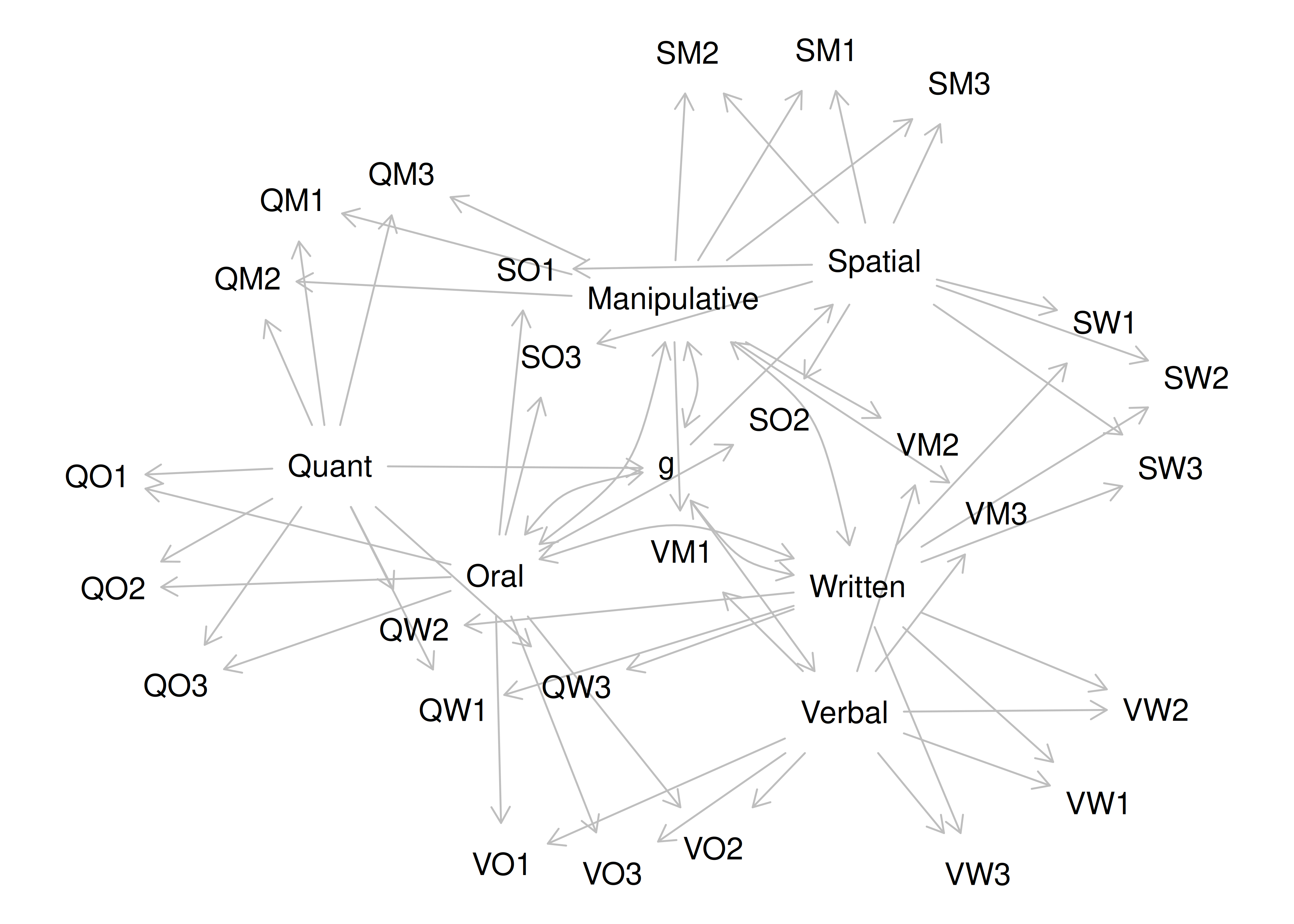

Factor analysis is a powerful technique to help identify the factor structure that underlies a measure or construct. As discussed in Section 14.1.4, however, there are many decisions to make in factor analysis, in addition to questions about which variables to use, how to scale the variables, etc. (Floyd & Widaman, 1995; Sarstedt et al., 2024). If the variables going into a factor analysis are not well assessed, factor analysis will not rescue the factor structure. In such situations, there is likely to be the problem of garbage in, garbage out. Factor analysis depends on the covariation among variables. Given the extensive method variance that measures have, factor analysis (and principal component analysis) tends to extract method factors. Method factors are factors that are related to the methods being assessed rather than the construct of interest. However, multitrait-multimethod approaches to factor analysis (such as in Section 14.4.2.13) help better partition the variance in variables that reflects method variance versus construct variance, to get more accurate estimates of constructs.

Floyd & Widaman (1995) provide an overview of factor analysis for the development of clinical assessments.

14.1.1 Example Factor Models from Correlation Matrices

Before pursuing factor analysis, it is helpful to evaluate the extent to which the variables are intercorrelated. If the variables are not correlated (or are correlated only weakly), there is no reason to believe that a common factor influences them, and thus factor analysis would not be useful. So, before conducting a factor analysis, it is important to examine the correlation matrix of the variables to determine whether the variables intercorrelate strongly enough to justify a factor analysis.

Below, I provide some example factor models from various correlation matrices. Analytical examples of factor analysis are presented in Section 14.4.

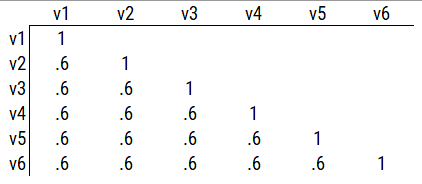

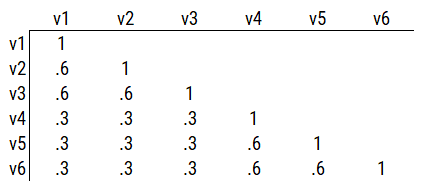

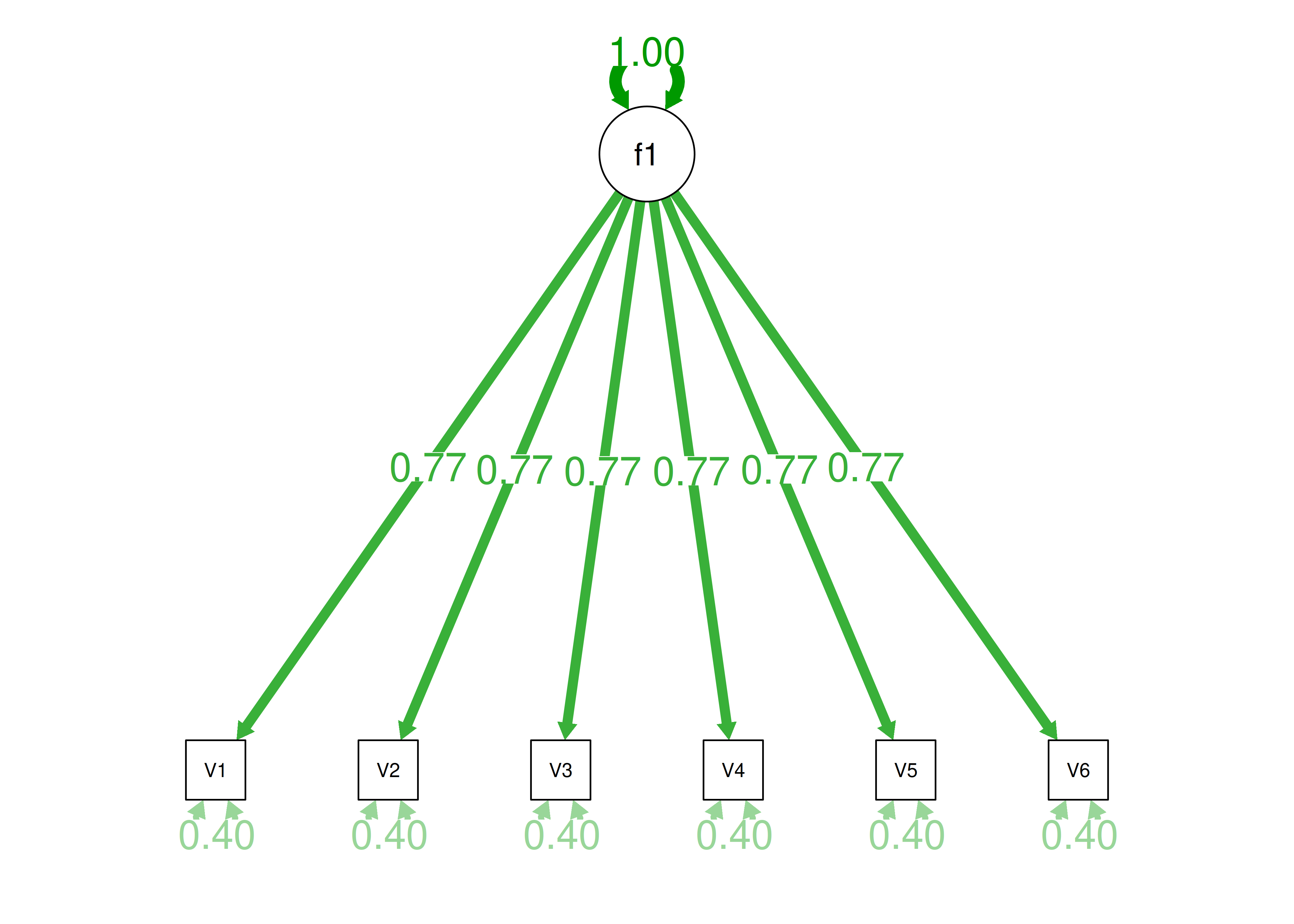

Consider the example correlation matrix in Figure 14.1. Because all of the correlations are the same (\(r = .60\)), we expect there is approximately one factor for this pattern of data.

Figure 14.1: Example Correlation Matrix 1.

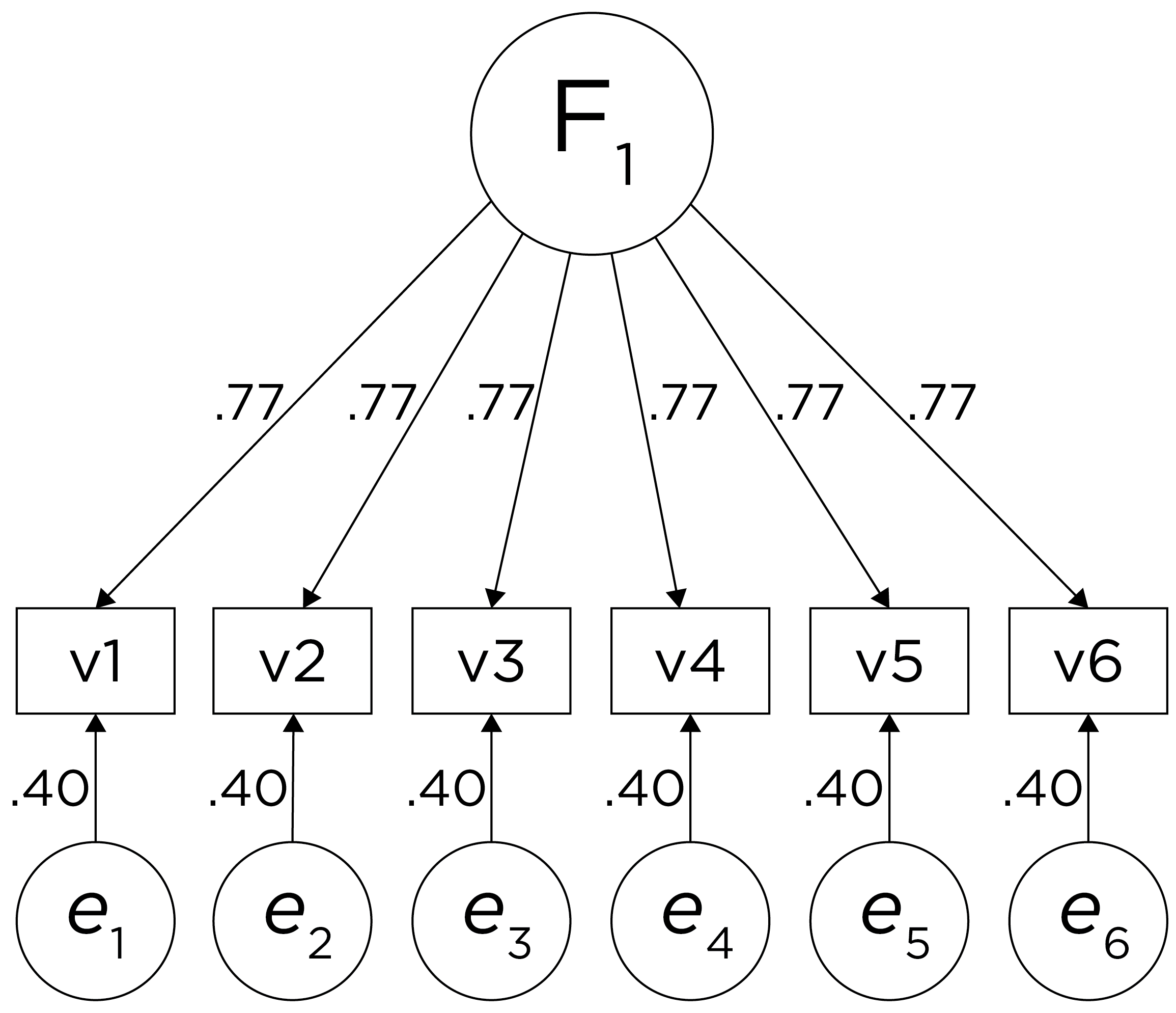

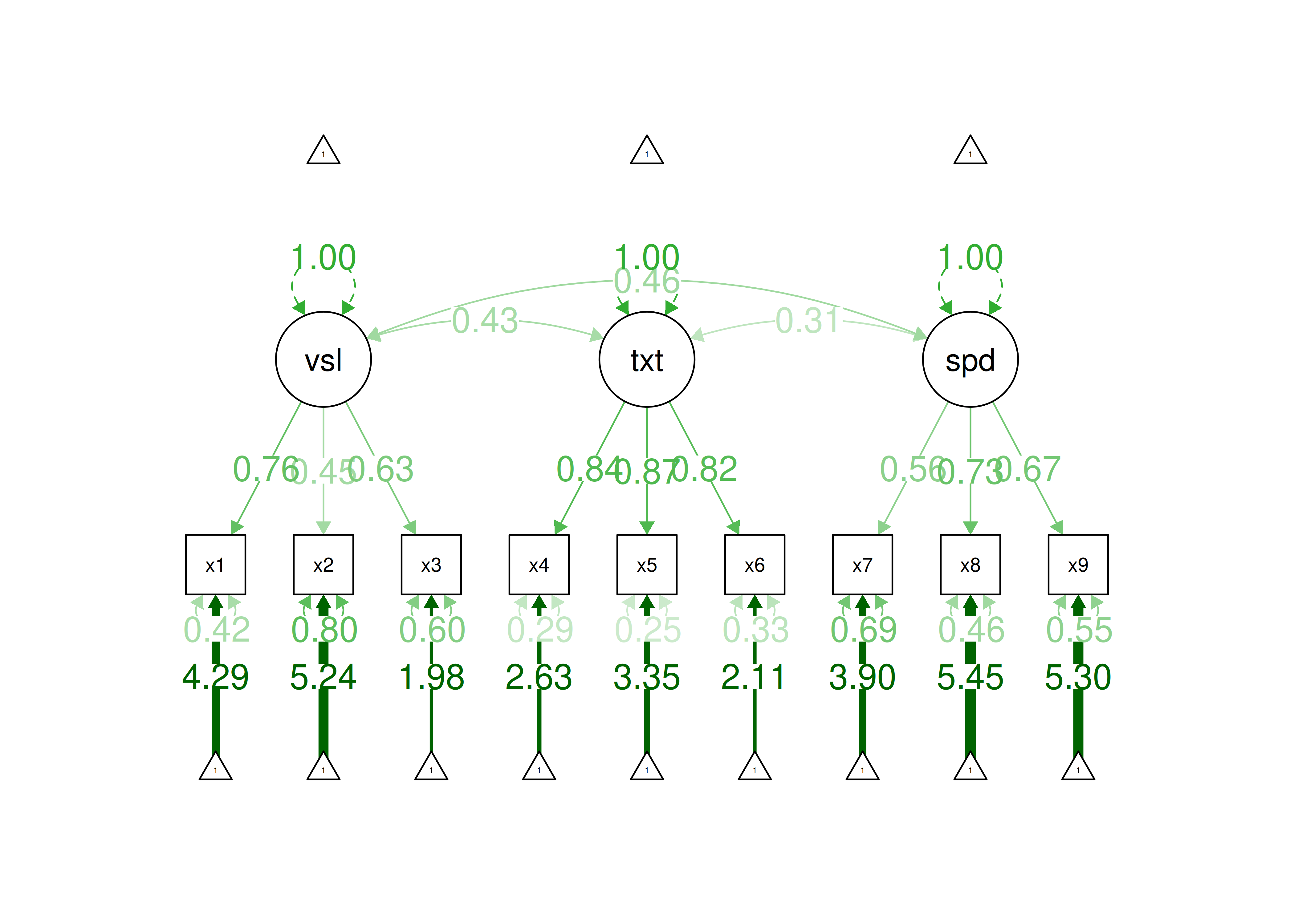

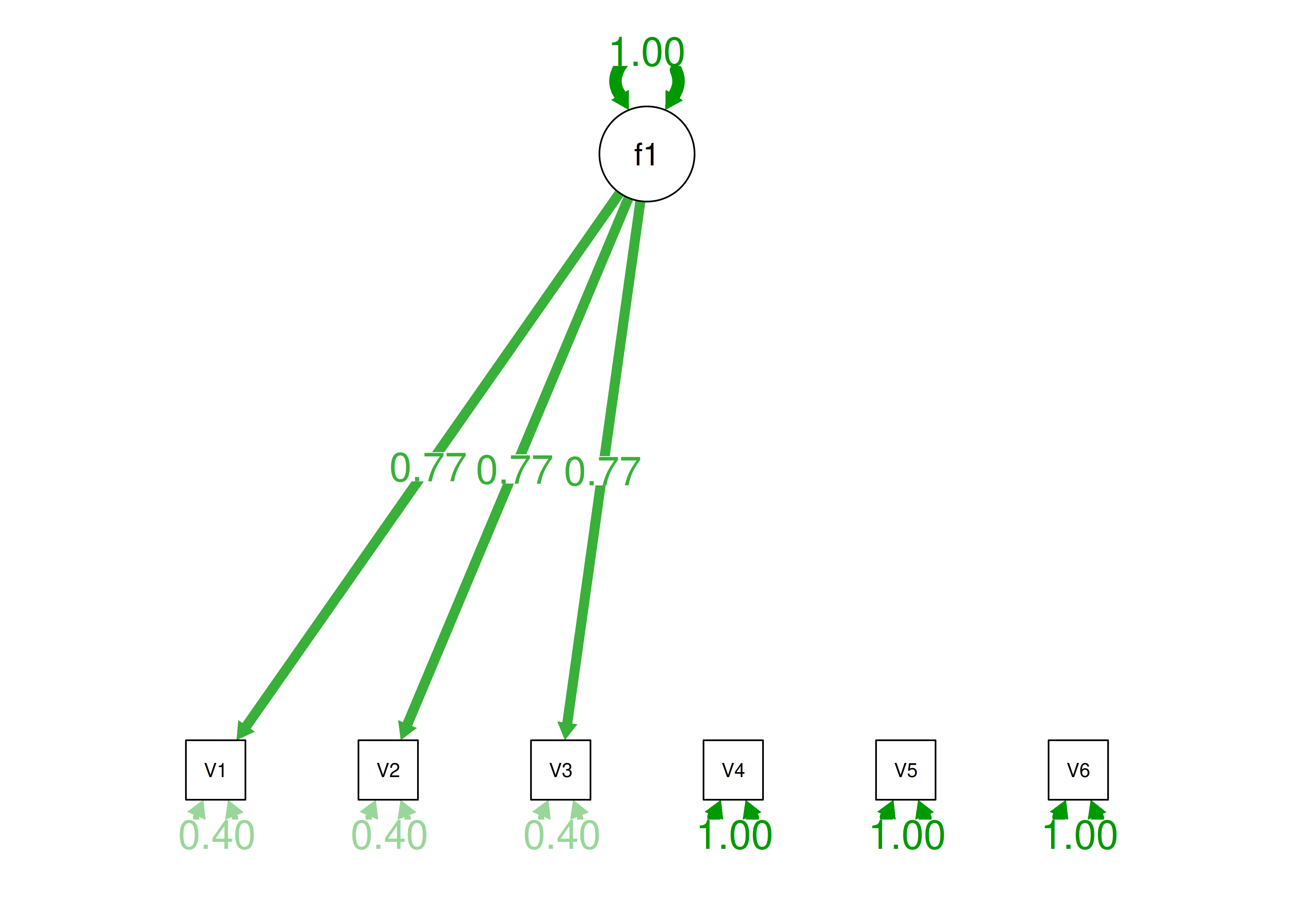

In a single-factor model fit to these data, the factor loadings are .77 and the residual error terms are .40, as depicted in Figure 14.2. The amount of common variance (\(R^2\)) that is accounted for by an indicator is estimated as the square of the standardized loading: \(.60 = .77 \times .77\). The amount of error for an indicator is estimated as: \(\text{error} = 1 - \text{common variance}\), so in this case, the amount of error is: \(.40 = 1 - .60\). The proportion of the total variance in indicators that is accounted for by the latent factor is the sum of the square of the standardized loadings divided by the number of indicators. That is, to calculate the proportion of the total variance in the variables that is accounted for by the latent factor, you would square the loadings, sum them up, and divide by the number of variables: \(\frac{.77^2 + .77^2 + .77^2 + .77^2 + .77^2 + .77^2}{6} = \frac{.60 + .60 + .60 + .60 + .60 + .60}{6} = .60\). Thus, the latent factor accounts for 60% of the variance in the indicators. In this model, the latent factor explains the covariance among the variables. If the answer is simple, a small and parsimonious model should be able to obtain the answer.

Figure 14.2: Example Confirmatory Factor Analysis Model: Unidimensional Model.

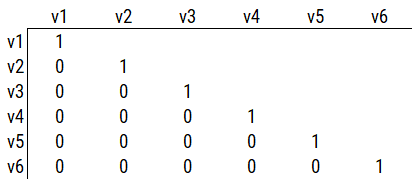

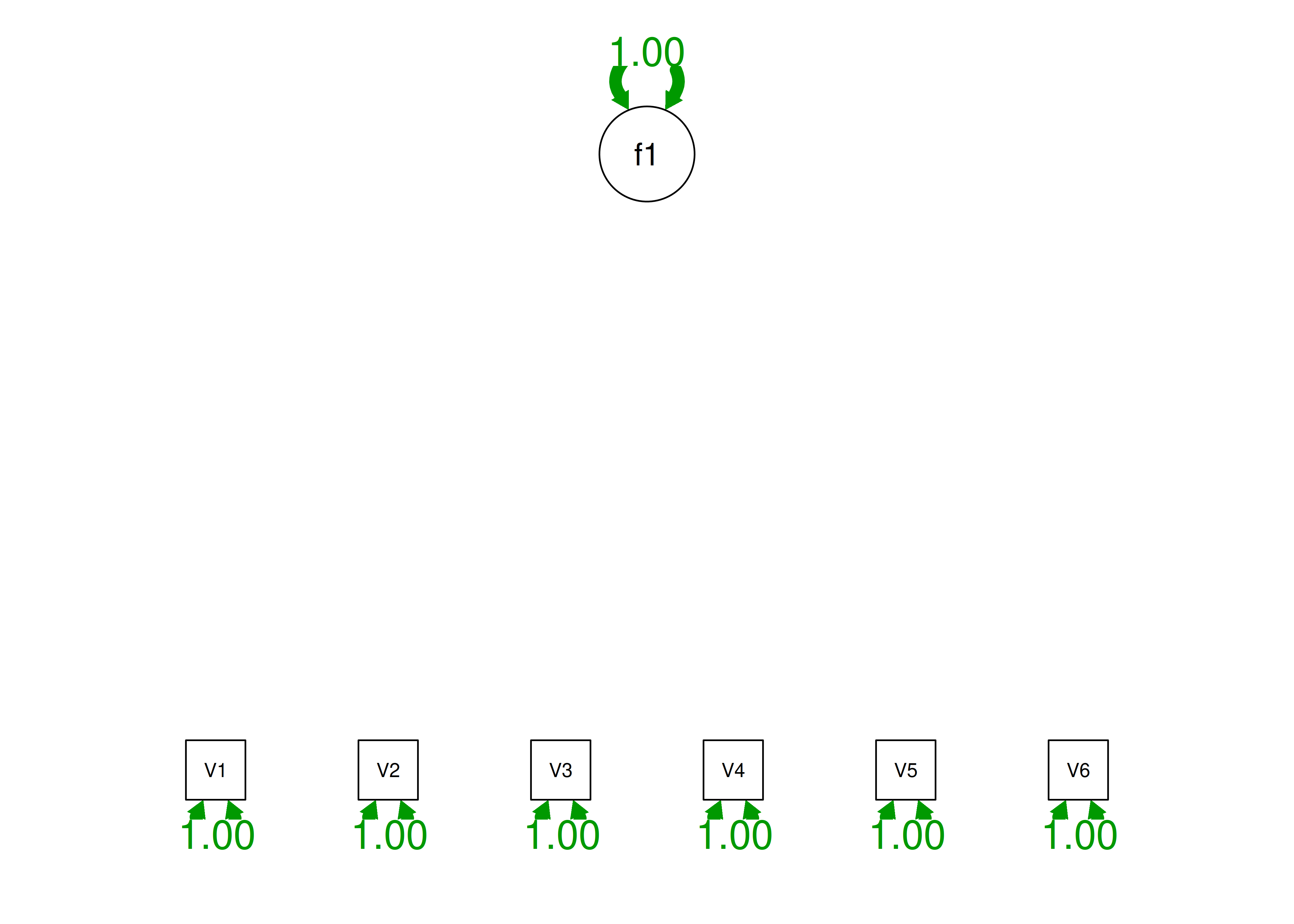

Consider a different correlation matrix in Figure 14.3. There is no common variance (correlations between the variables are zero), so there is no reason to believe there is a common factor that influences all of the variables. Variables that are not correlated cannot be related by a third variable, such as a common factor, so a common factor is not the right model.

Figure 14.3: Example Correlation Matrix 2.

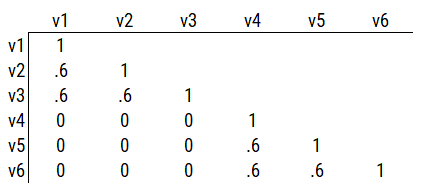

Consider another correlation matrix in Figure 14.4.

Figure 14.4: Example Correlation Matrix 3.

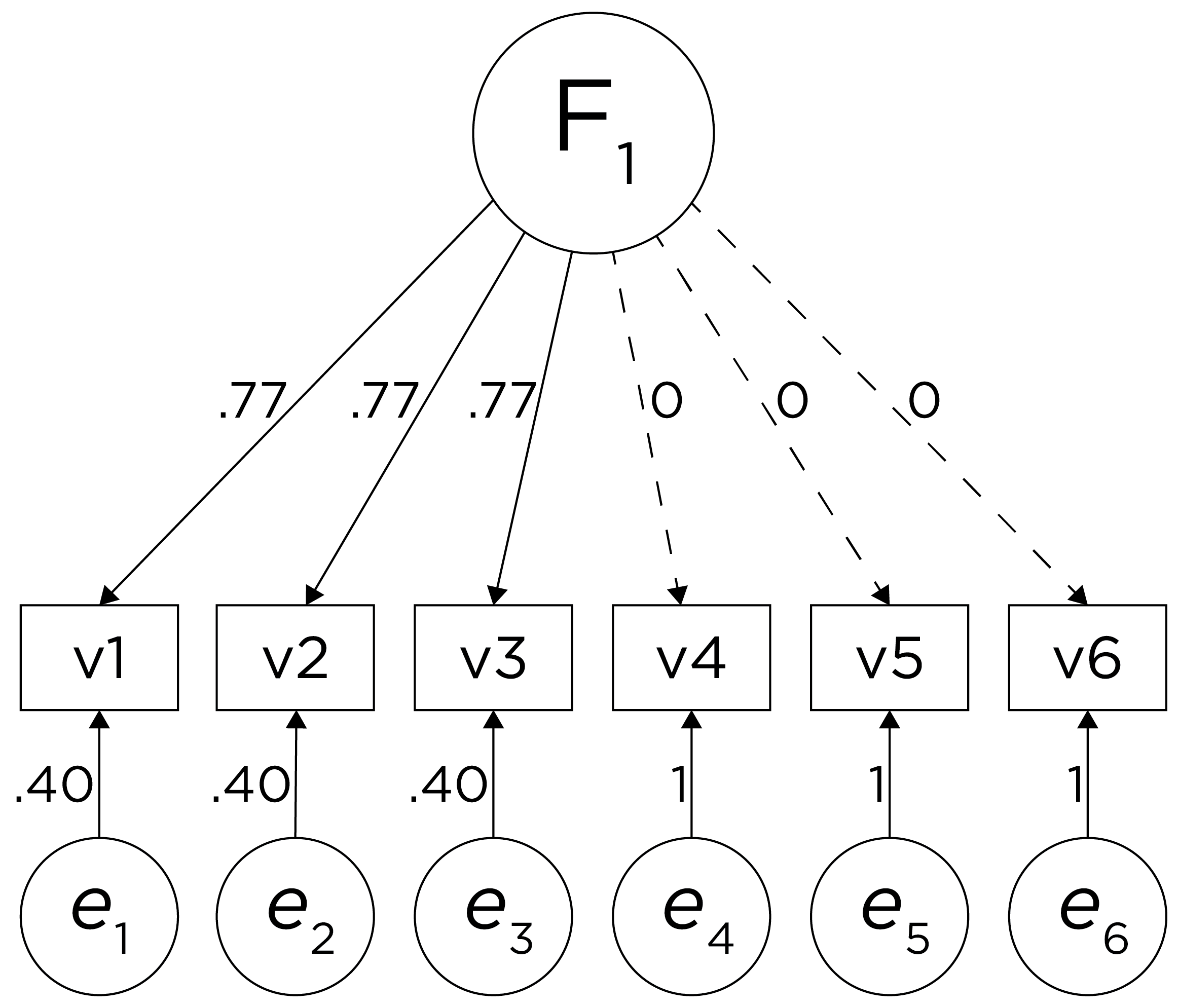

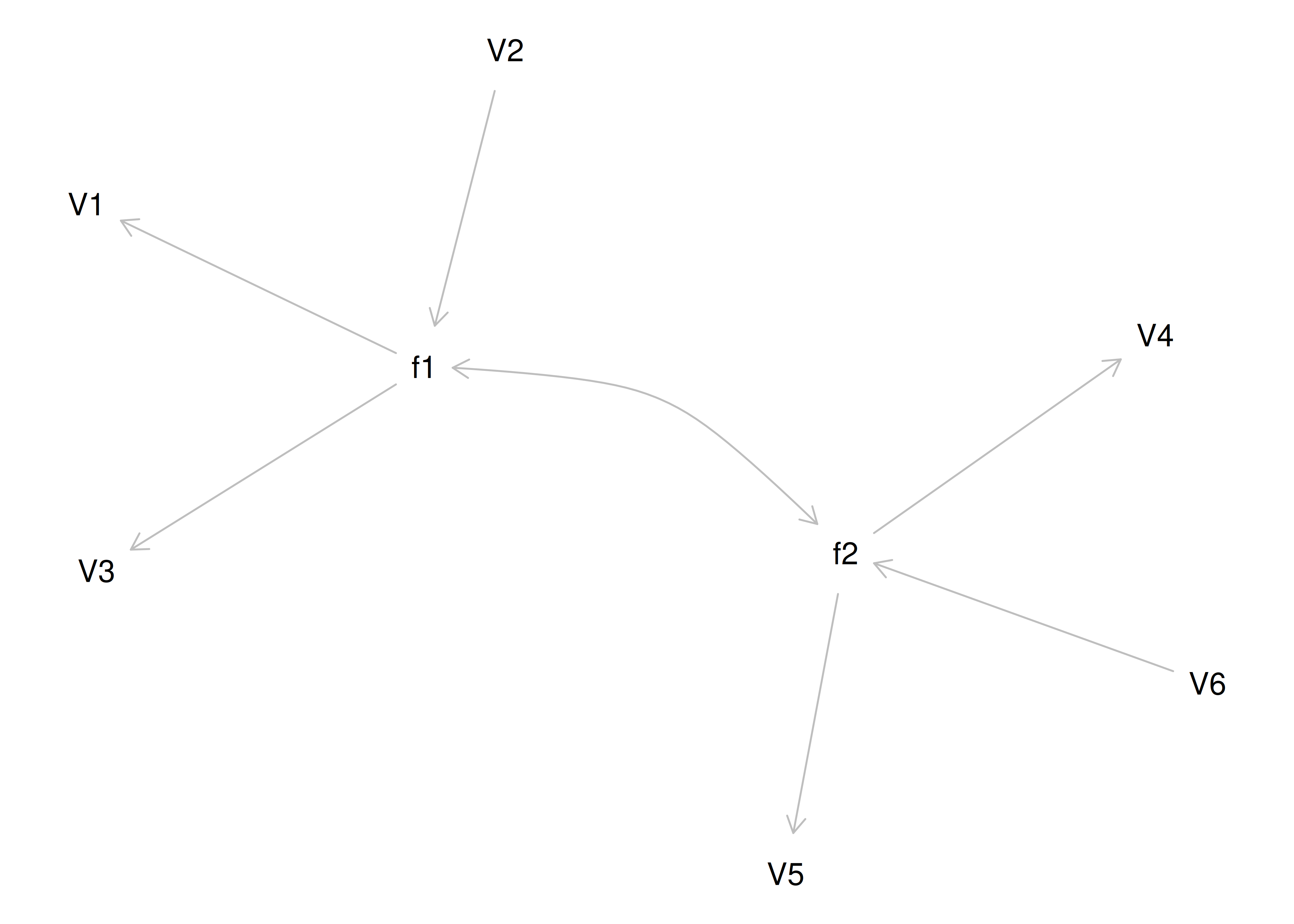

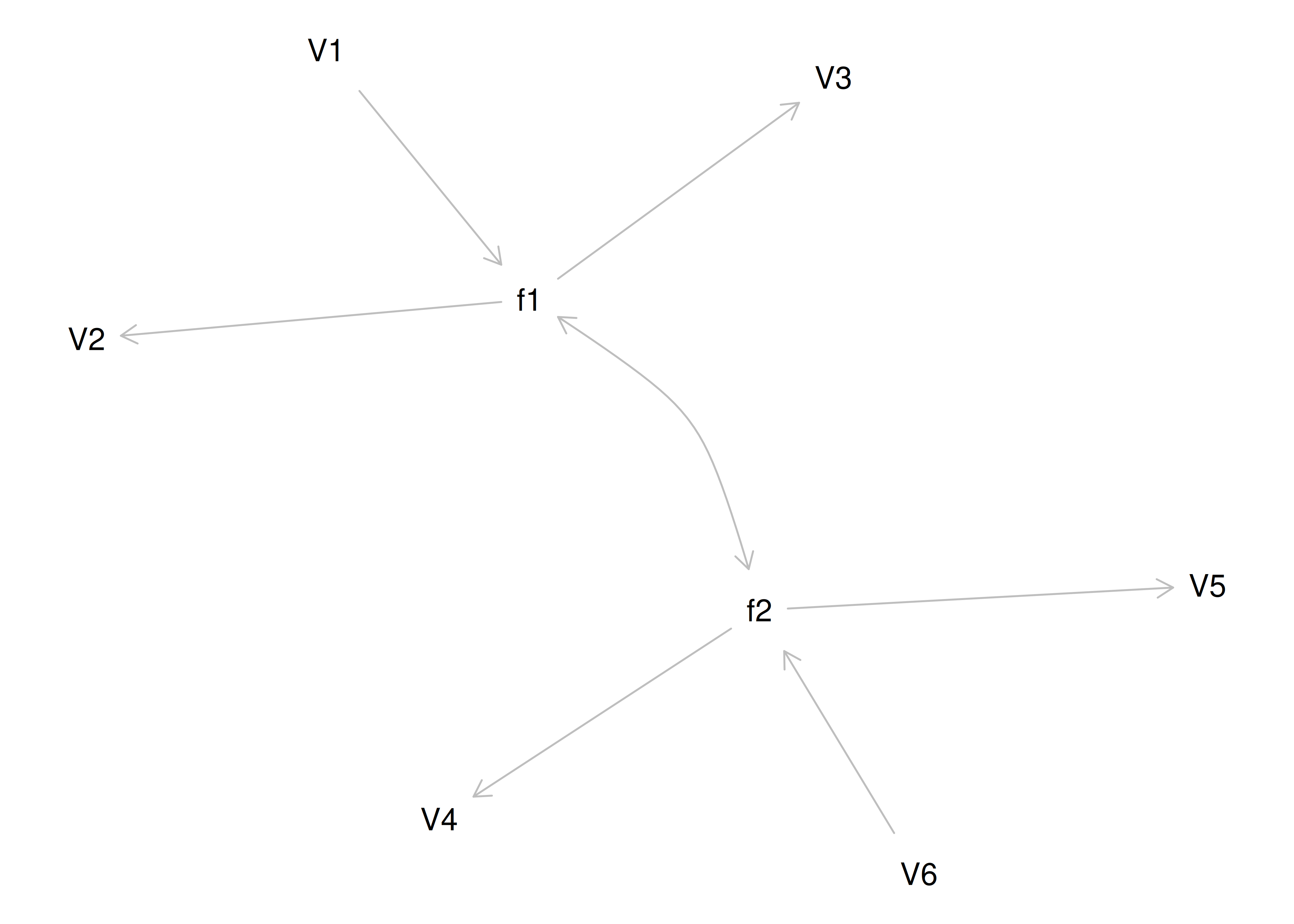

If you try to fit a single factor to this correlation matrix, it generates a factor model depicted in Figure 14.5. In this model, the first three variables have a factor loading of .77, but the remaining three variables have a factor loading of zero. This indicates that three remaining factors likely do not share a common factor with the first three variables.

Figure 14.5: Example Confirmatory Factor Analysis Model: Multidimensional Model.

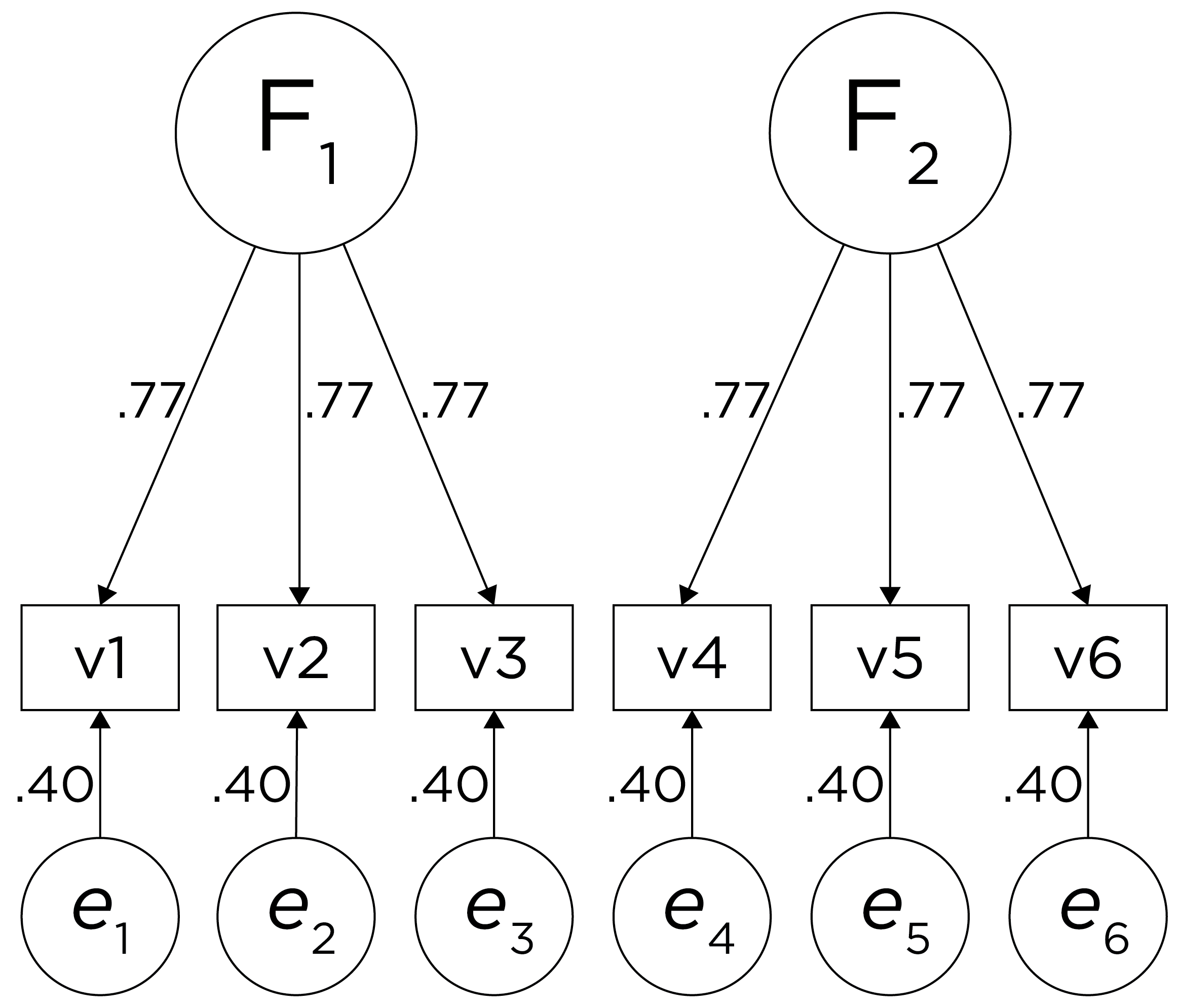

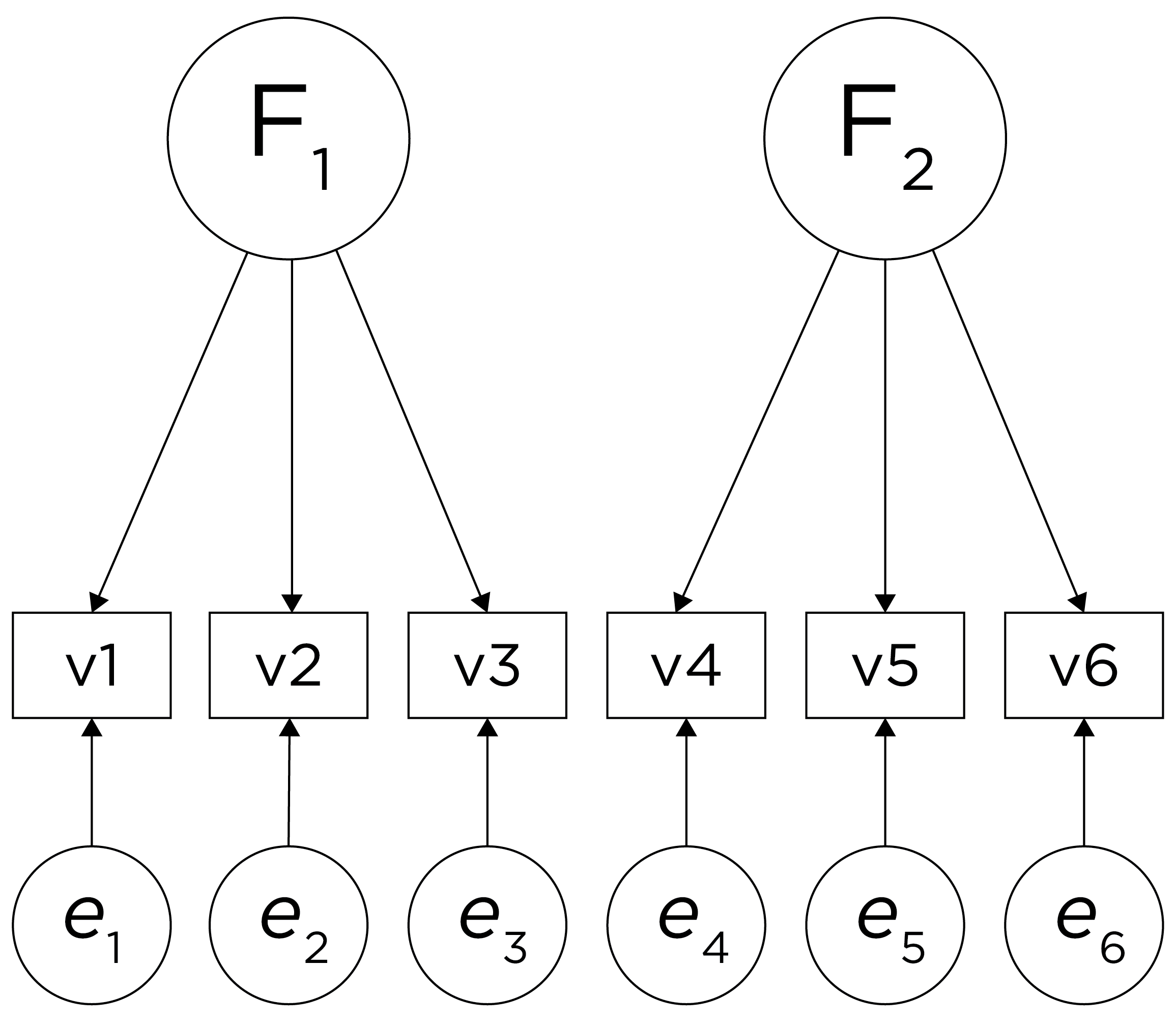

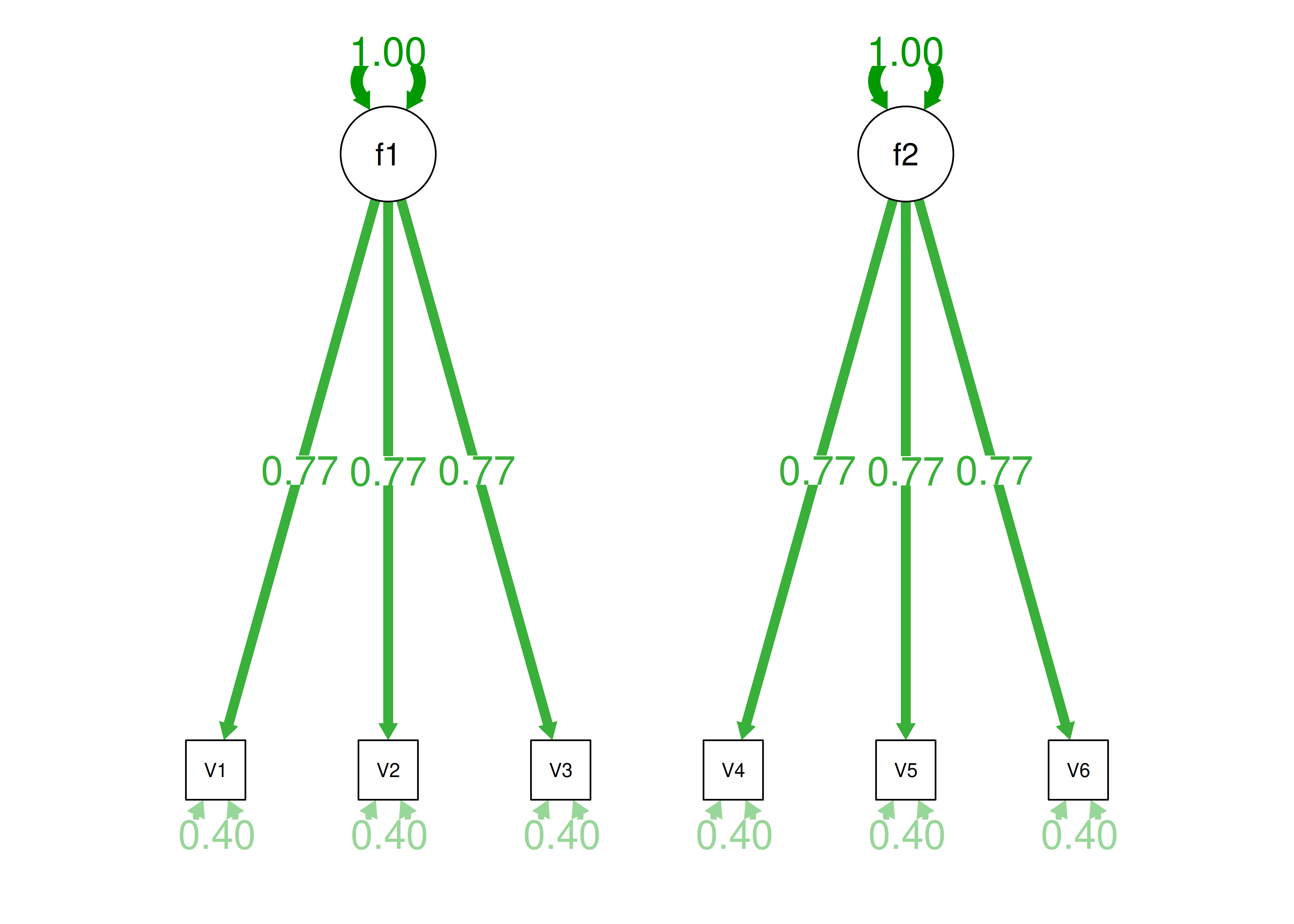

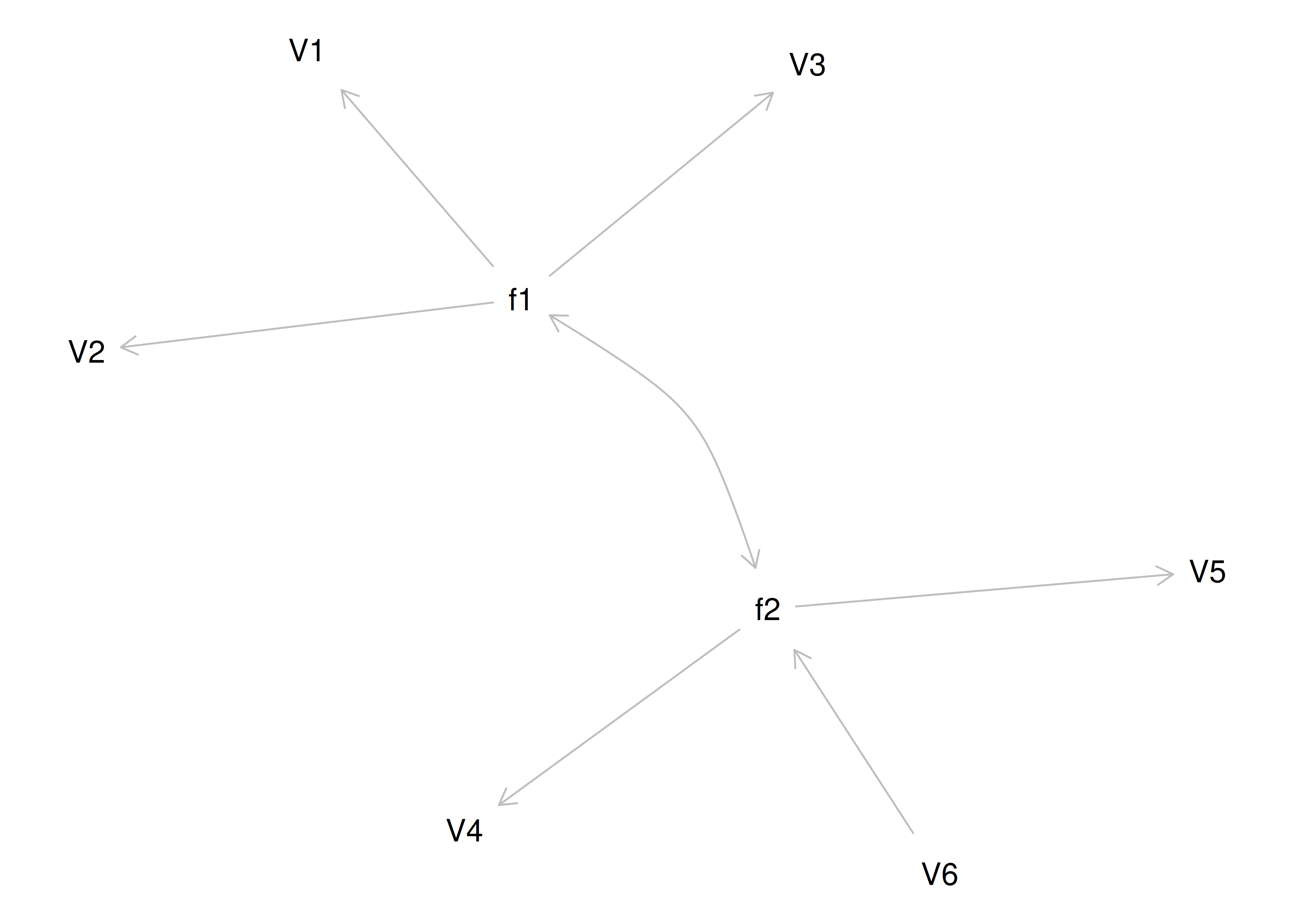

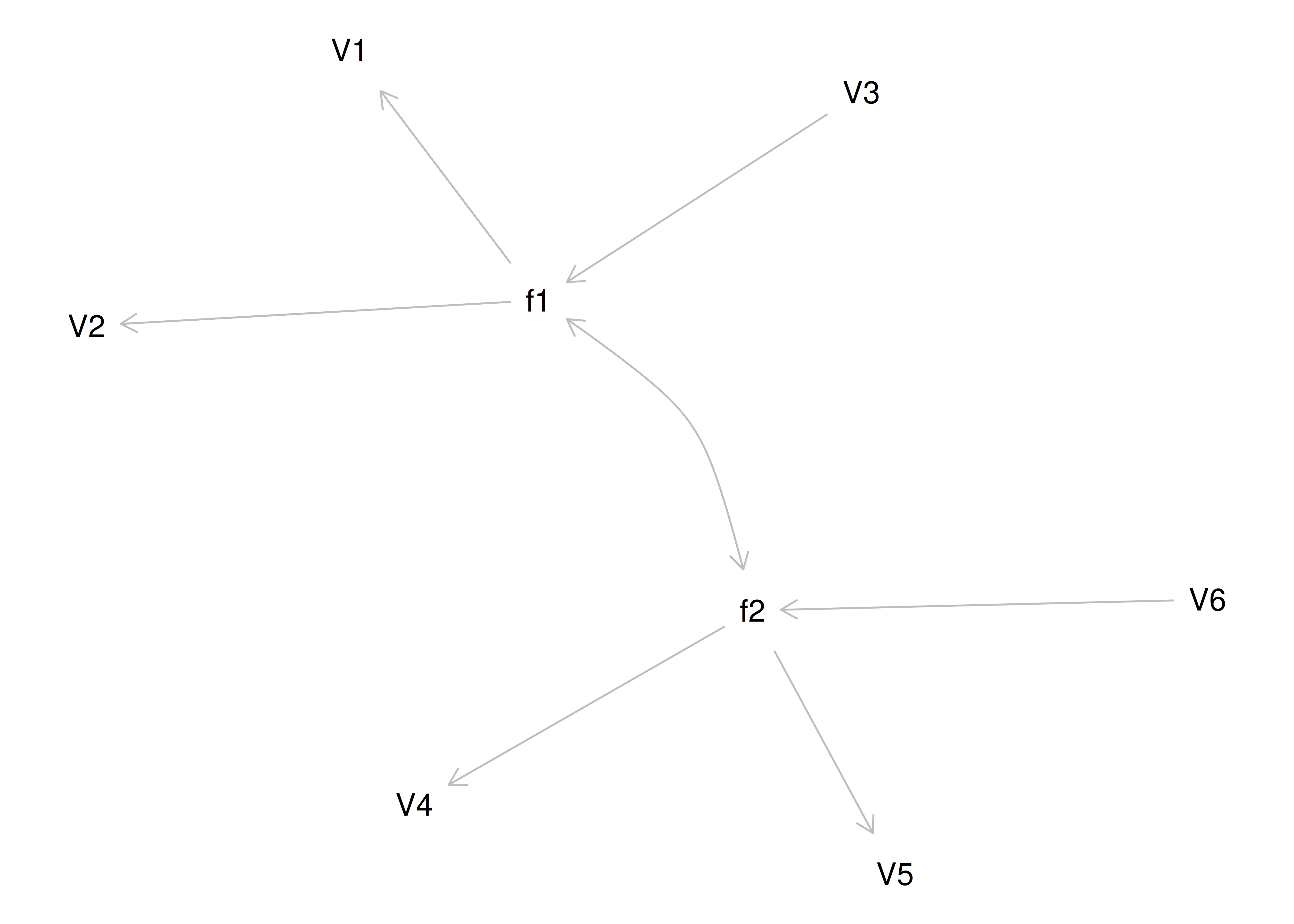

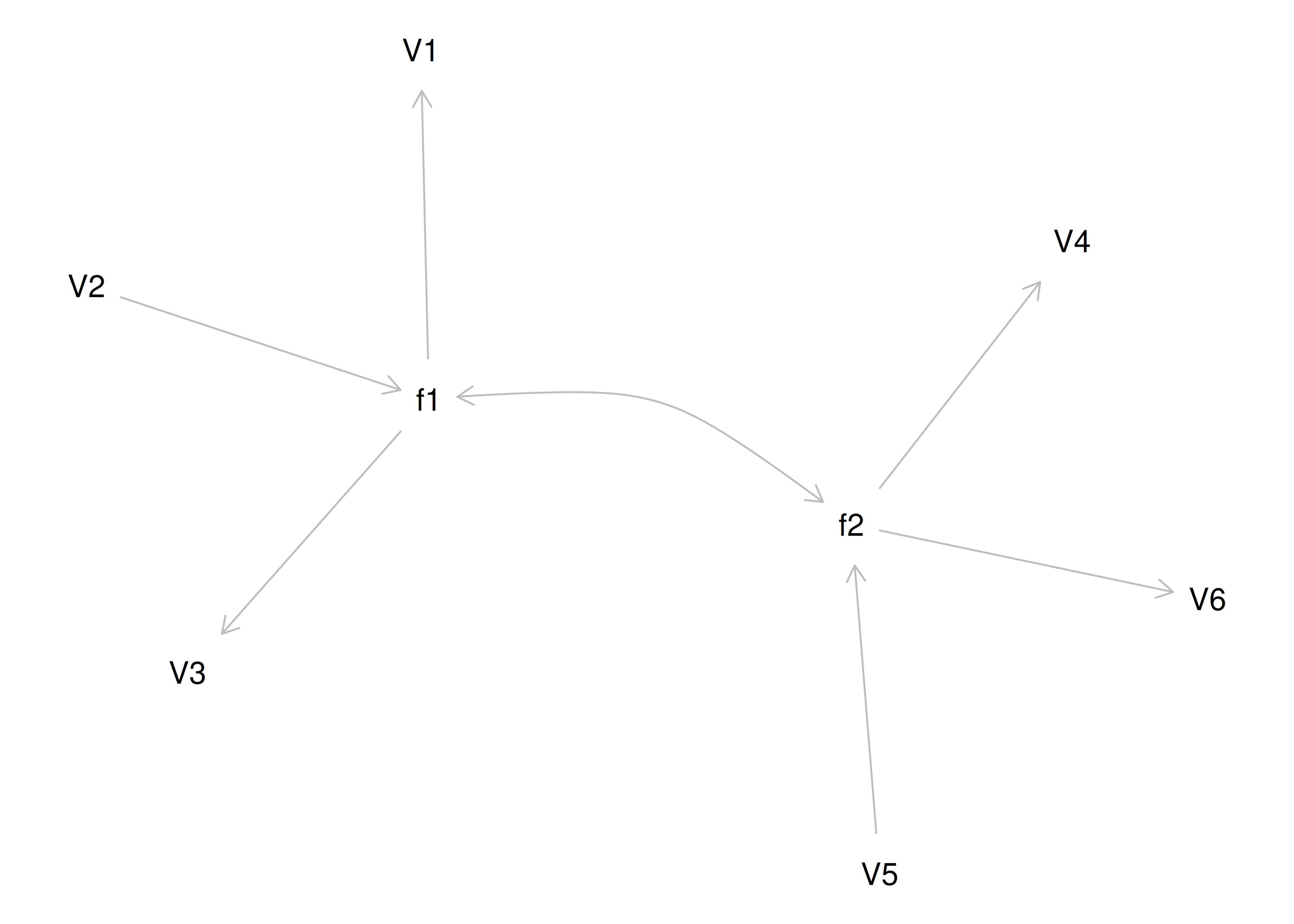

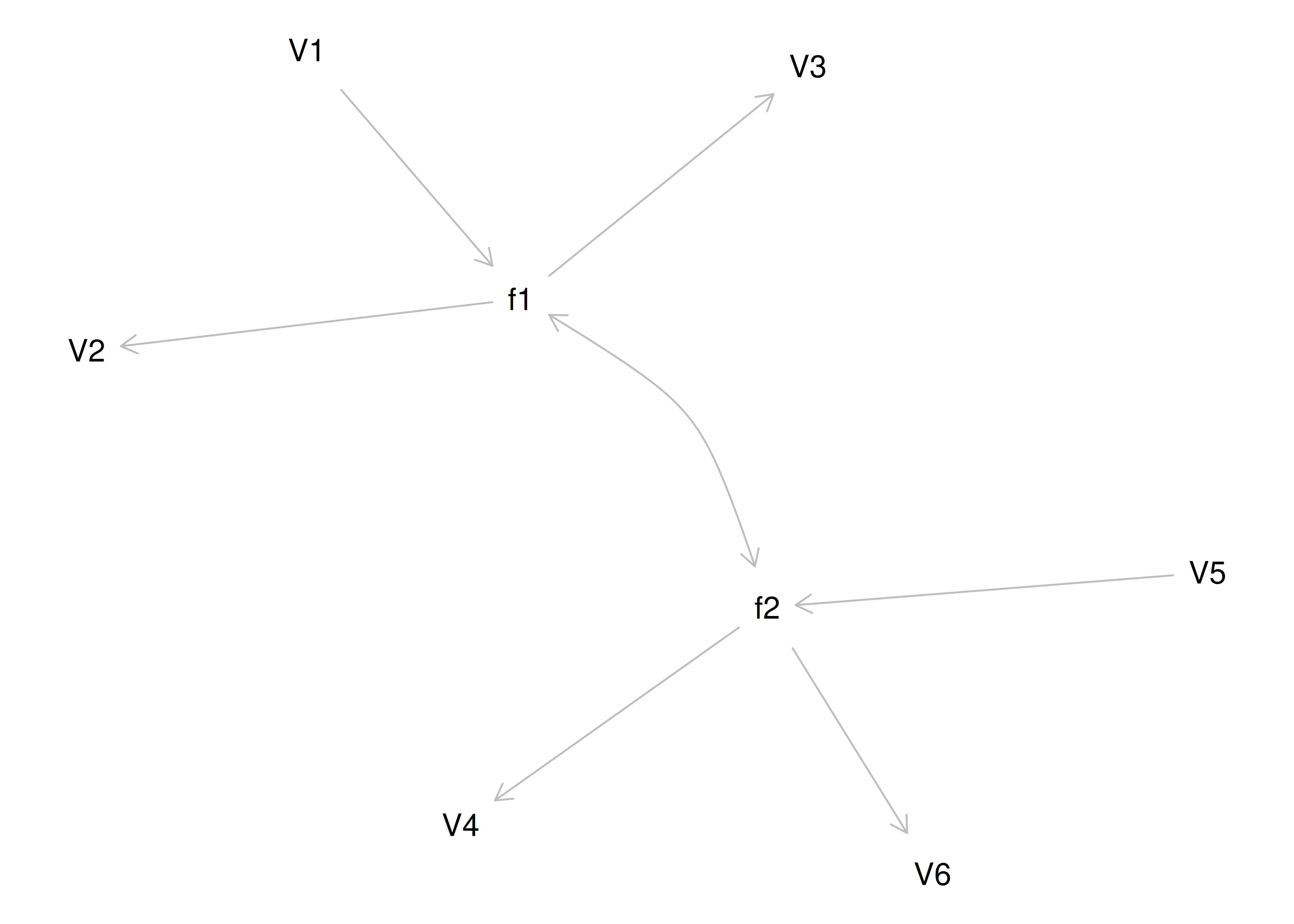

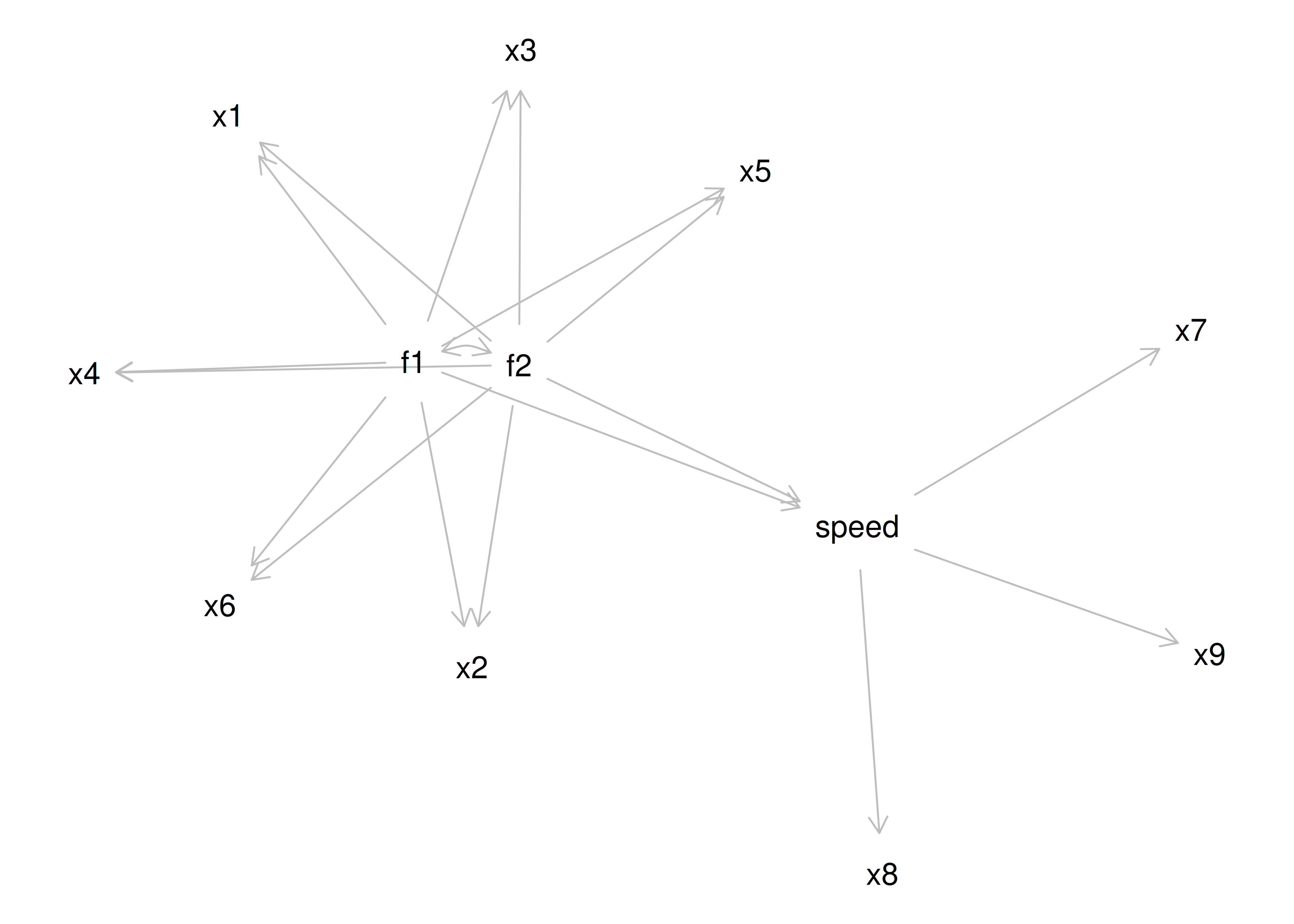

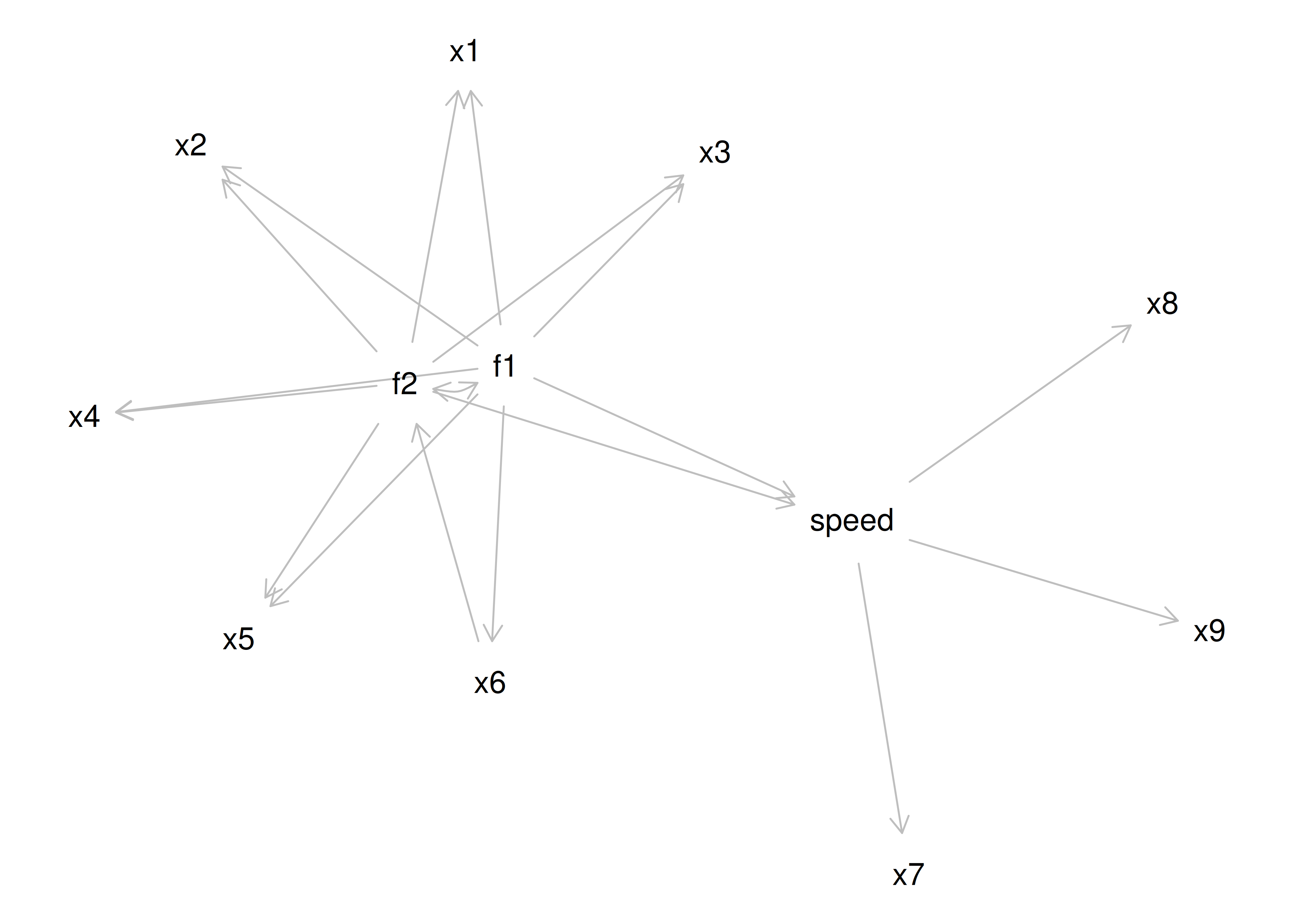

Therefore, a one-factor model is probably not correct; instead, the structure of the data is probably best represented by a two-factor model, as depicted in Figure 14.6. In the model, Factor 1 explains why measures 1, 2, and 3 are correlated, whereas Factor 2 explains why measures 4, 5, and 6 are correlated. A two-factor model thus explains why measures 1, 2, and 3 are not correlated with measures 4, 5, and 6. In this model, each latent factor accounts for 60% of the variance in the indicators that load onto it: \(\frac{.77^2 + .77^2 + .77^2}{3} = \frac{.60 + .60 + .60}{3} = .60\). Each latent factor accounts for 30% of the variance in all of the indicators: \(\frac{.77^2 + .77^2 + .77^2 + 0^2 + 0^2 + 0^2}{6} = \frac{.60 + .60 + .60 + 0 + 0 + 0}{6} = .30\).

Figure 14.6: Example Confirmatory Factor Analysis Model: Two-Factor Model With Uncorrelated Factors.

Consider another correlation matrix in Figure 14.7.

Figure 14.7: Example Correlation Matrix 4.

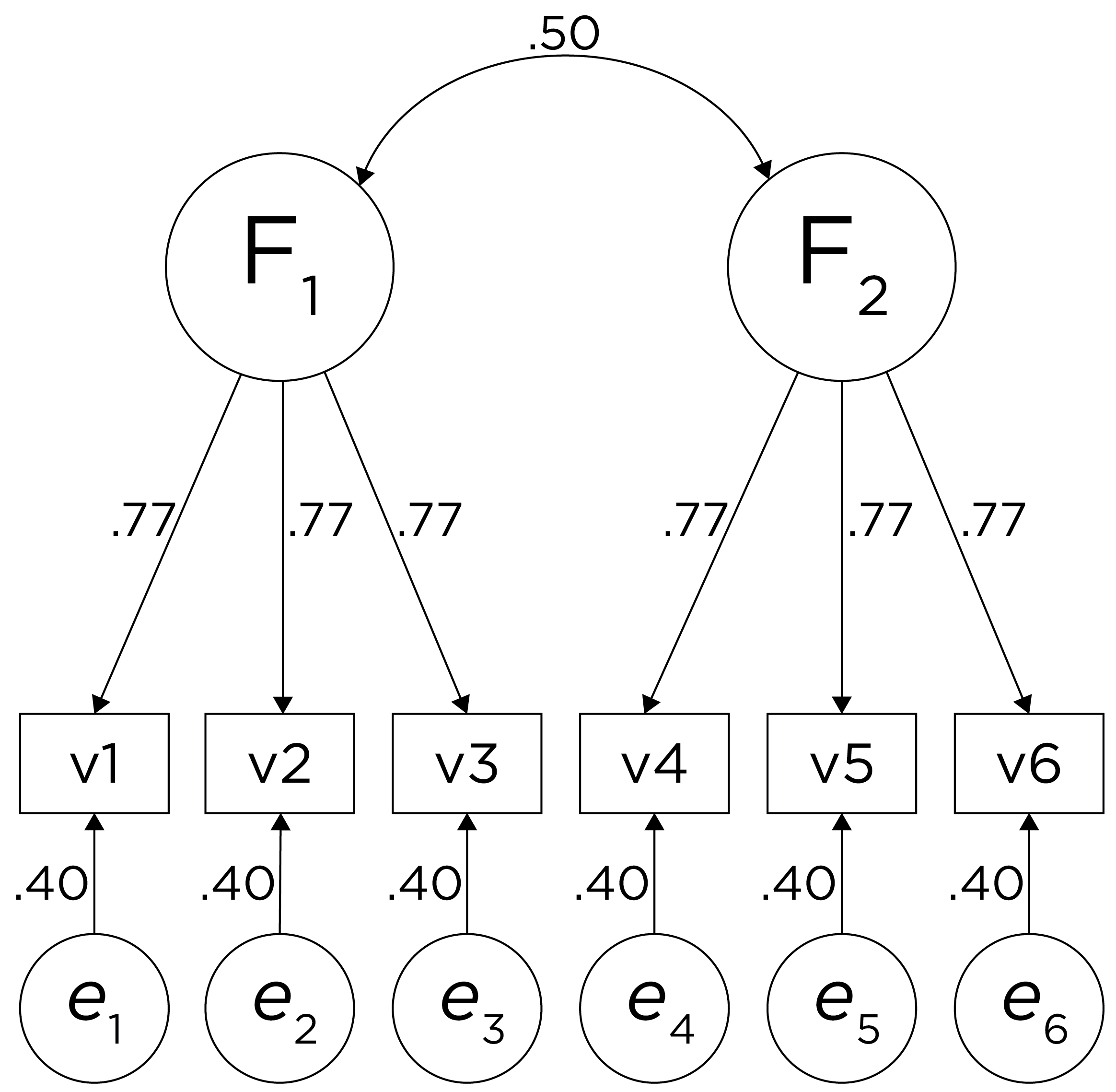

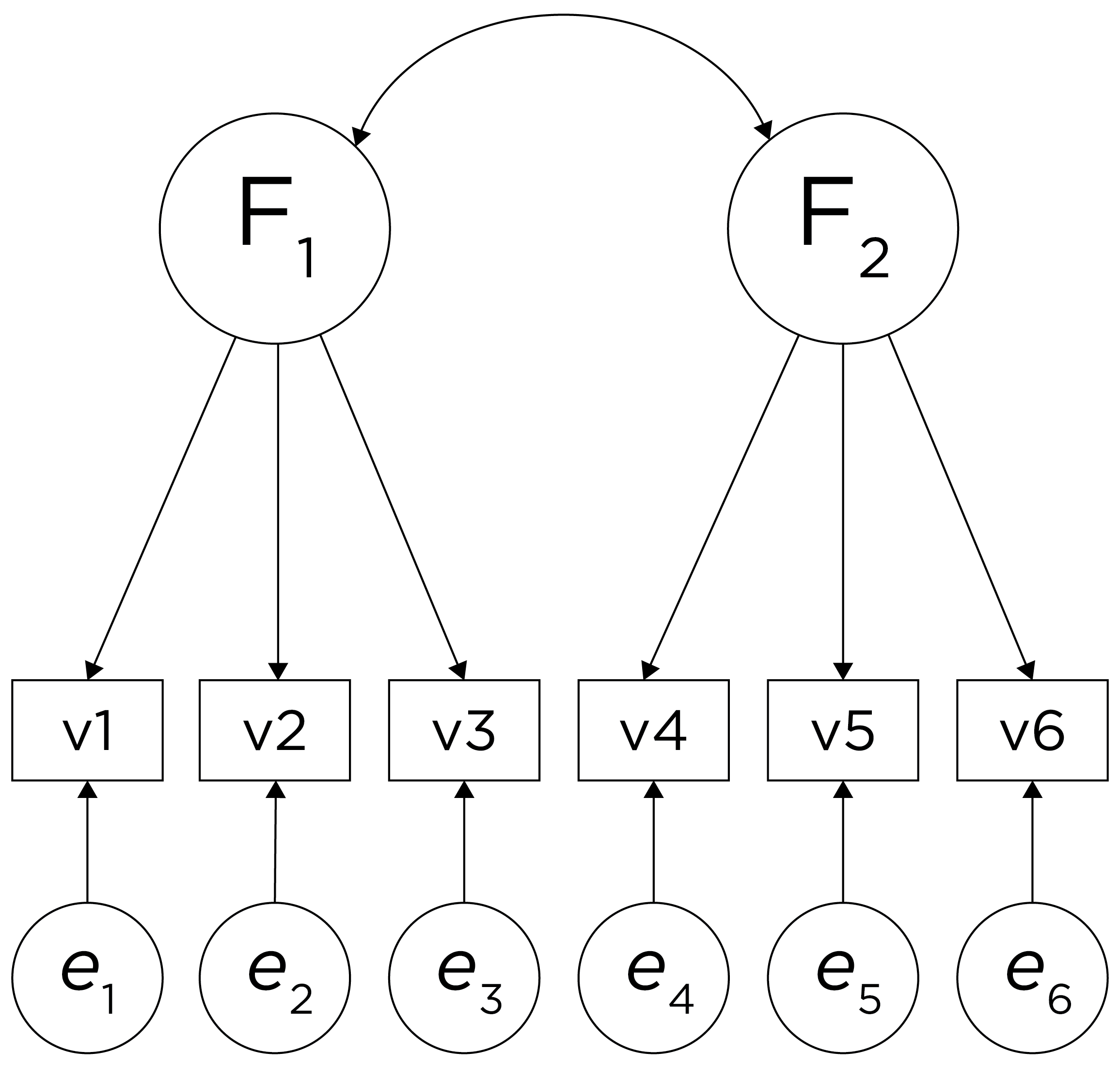

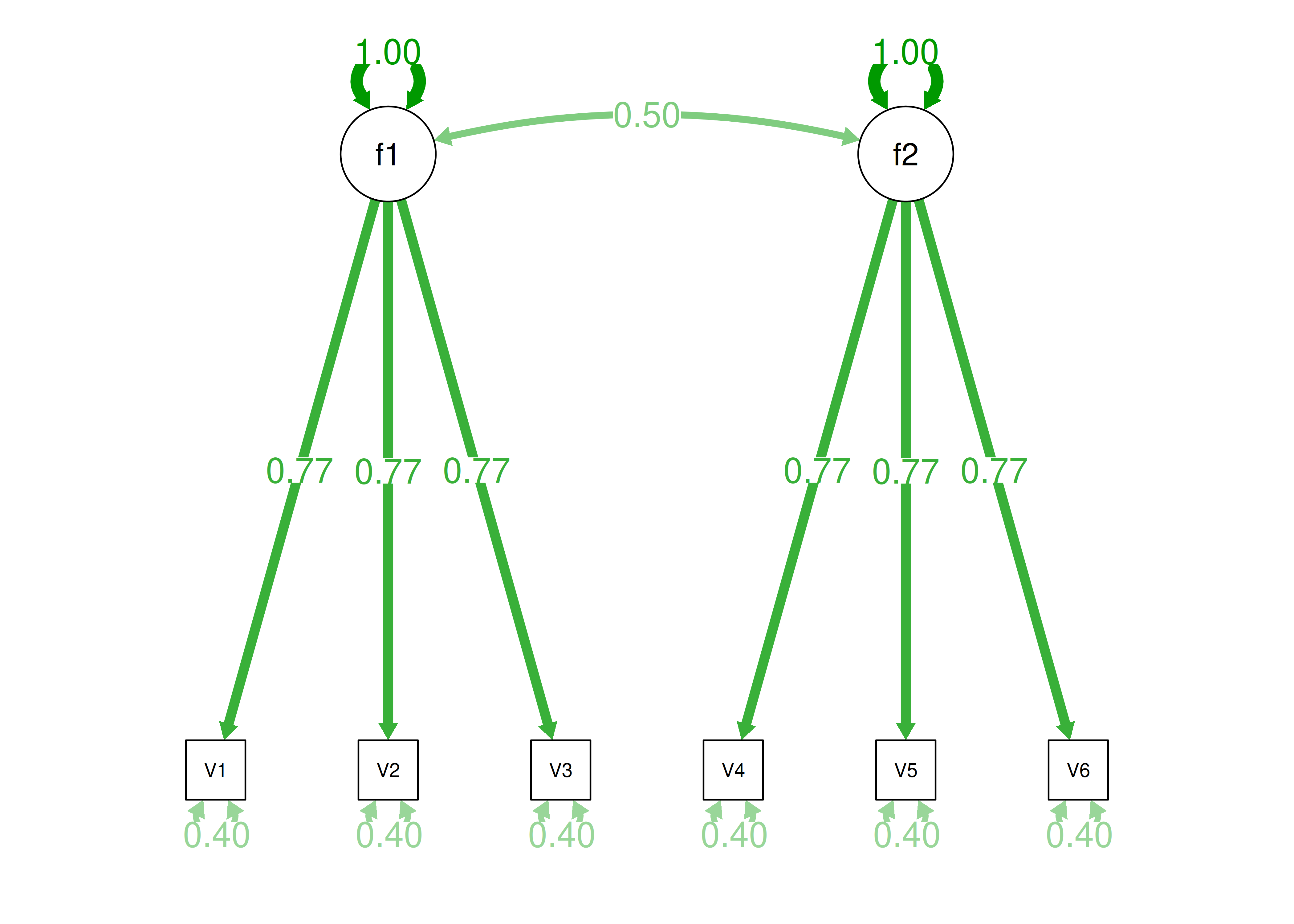

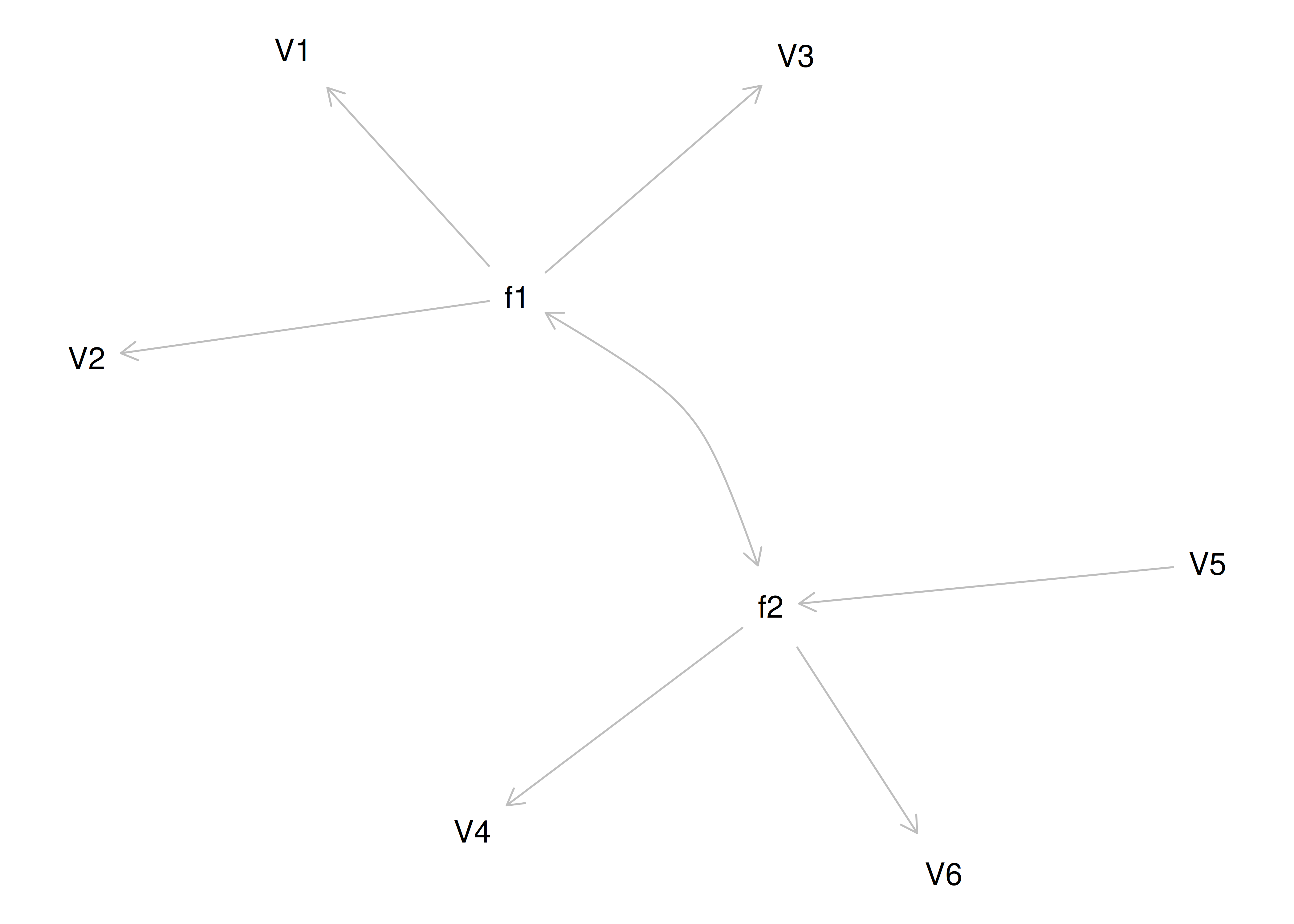

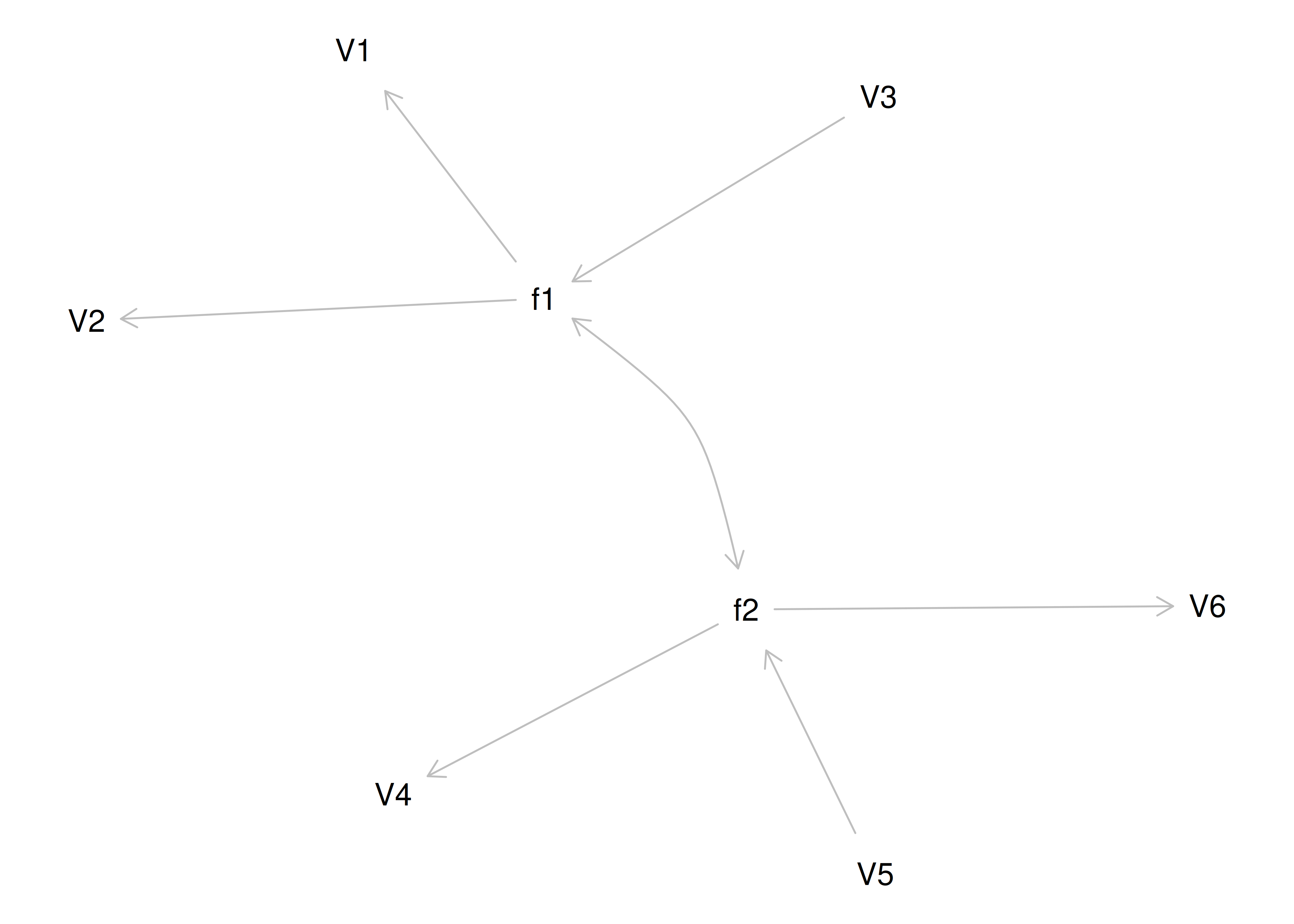

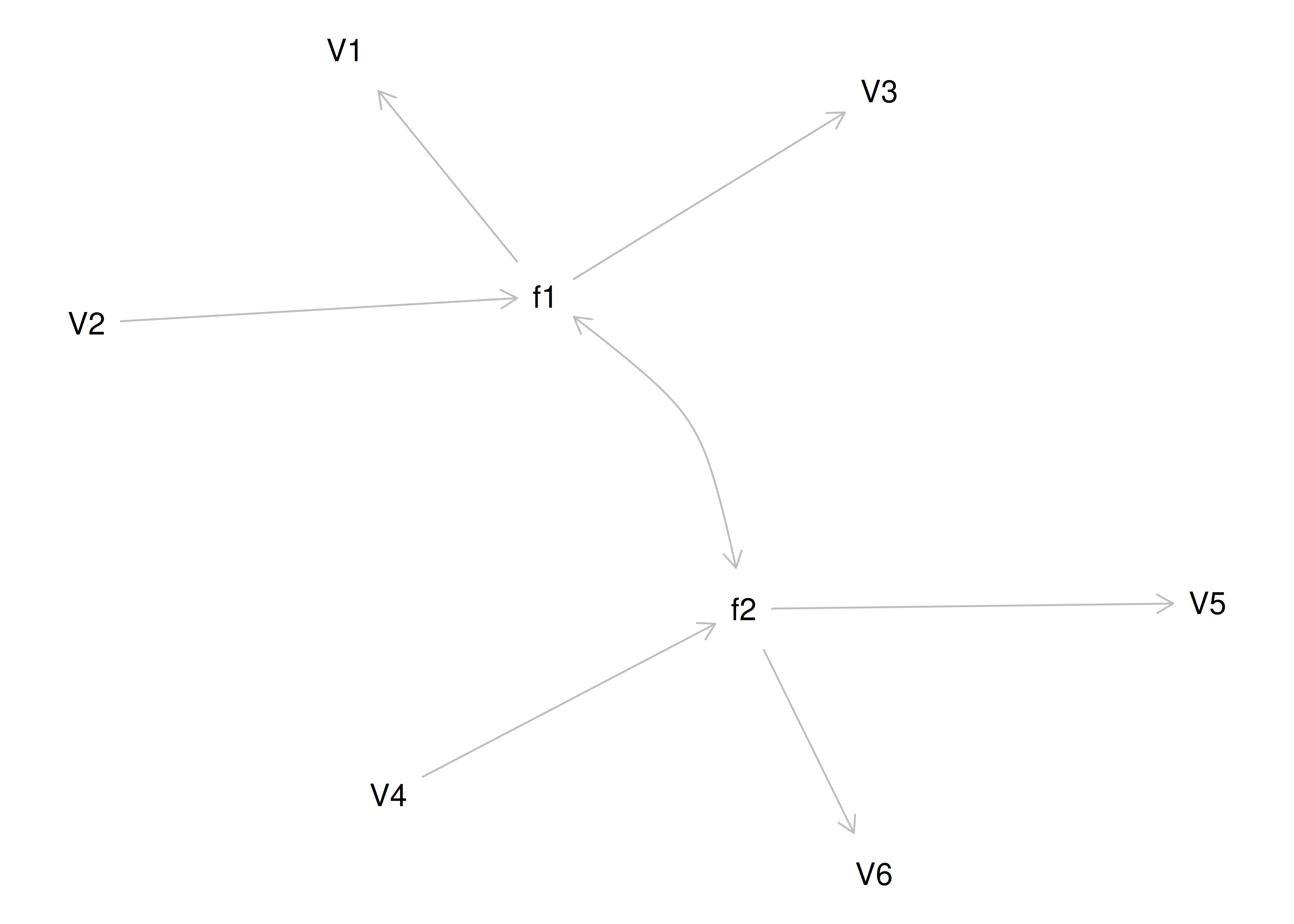

One way to model these data is depicted in Figure 14.8. In this model, the factor loadings are .77, the residual error terms are .40, and there is a covariance path of .50 for the association between Factor 1 and Factor 2. Going from the model to the correlation matrix is deterministic. If you know the model, you can calculate the correlation matrix. For instance, using path tracing rules (described in Section 4.1), the correlation of measures within a factor in this model is calculated as: \(0.60 = .77 \times .77\). Using path tracing rules, the correlation of measures across factors in this model is calculated as: \(.30 = .77 \times .50 \times .77\). In this model, each latent factor accounts for 60% of the variance in the indicators that load onto it: \(\frac{.77^2 + .77^2 + .77^2}{3} = \frac{.60 + .60 + .60}{3} = .60\). Each latent factor accounts for 37% of the variance in all of the indicators: \(\frac{.77^2 + .77^2 + .77^2 + (.50^2 \times .77^2) + (.50^2 \times .77^2) + (.50^2 \times .77^2)}{6} = \frac{.60 + .60 + .60 + .15 + .15 + .15}{6} = .37\).

Figure 14.8: Example Confirmatory Factor Analysis Model: Two-Factor Model With Correlated Factors.

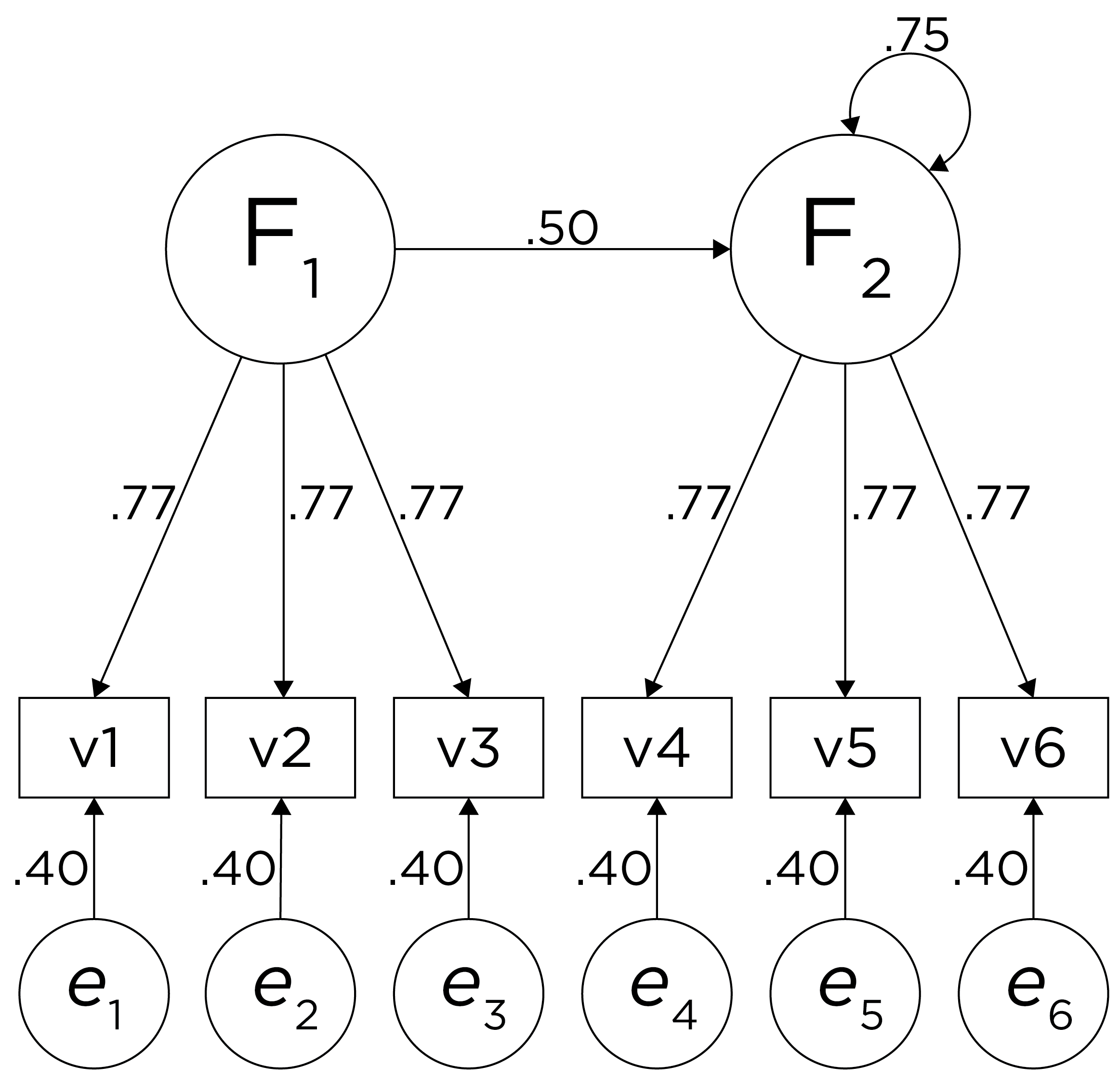

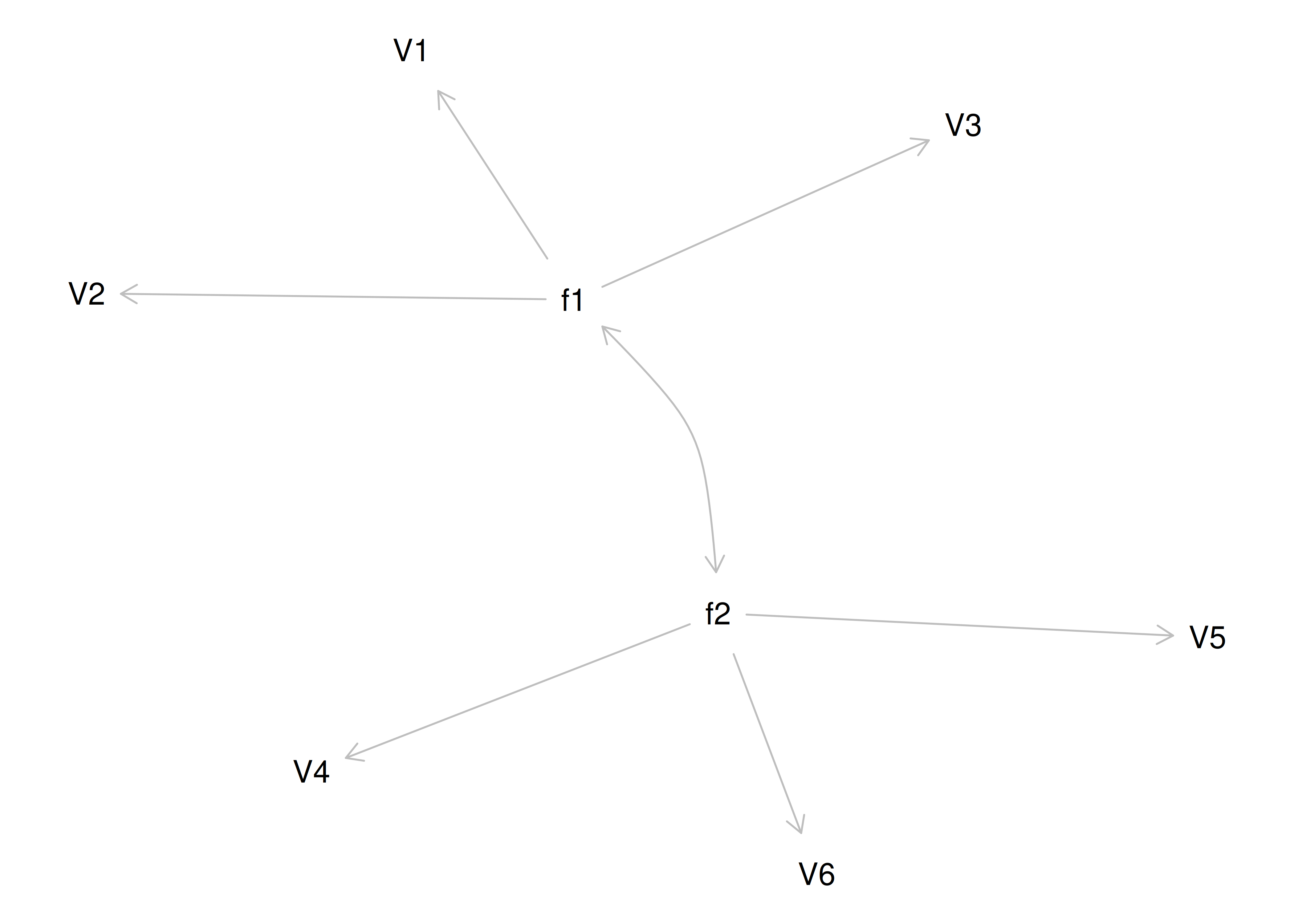

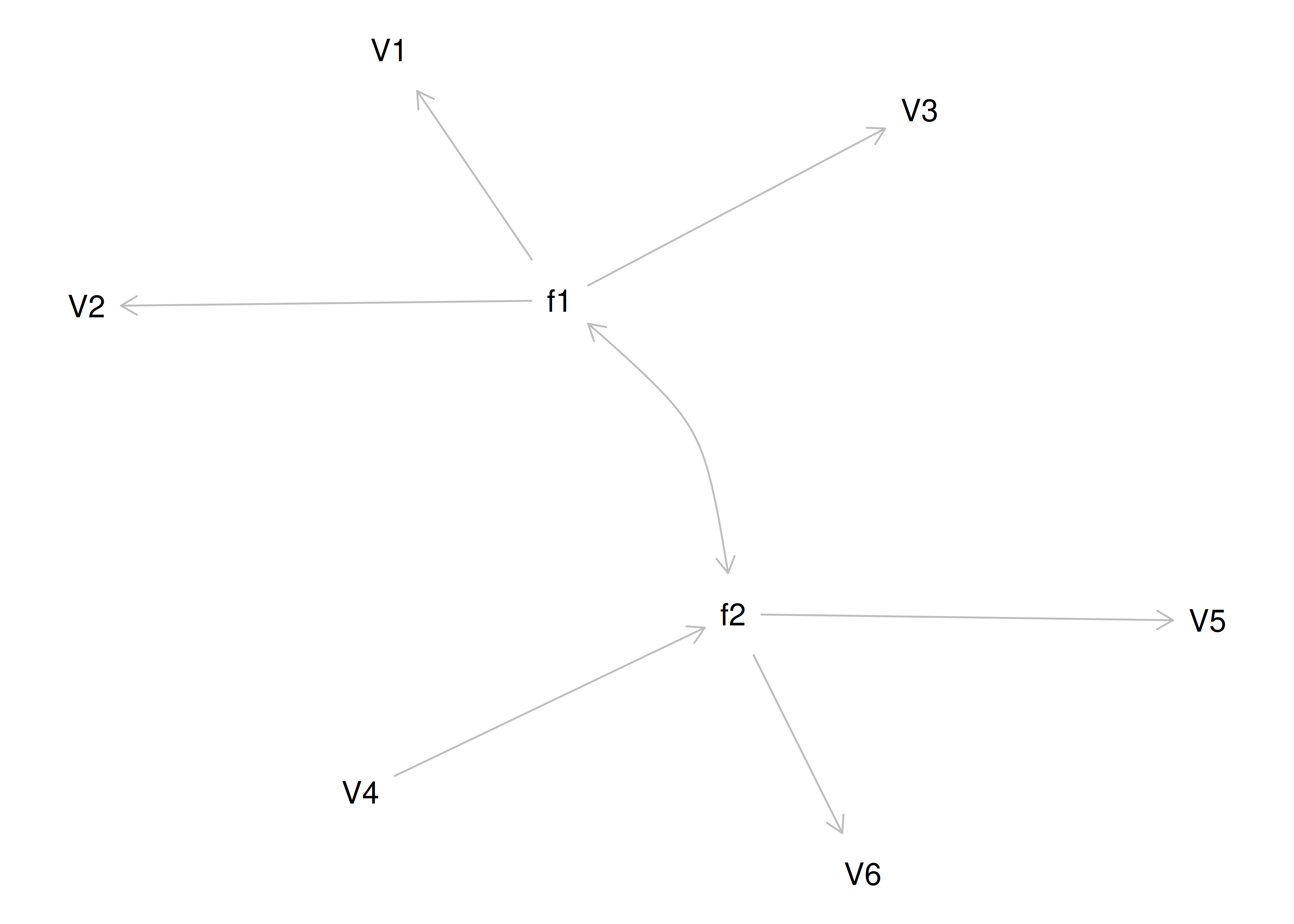

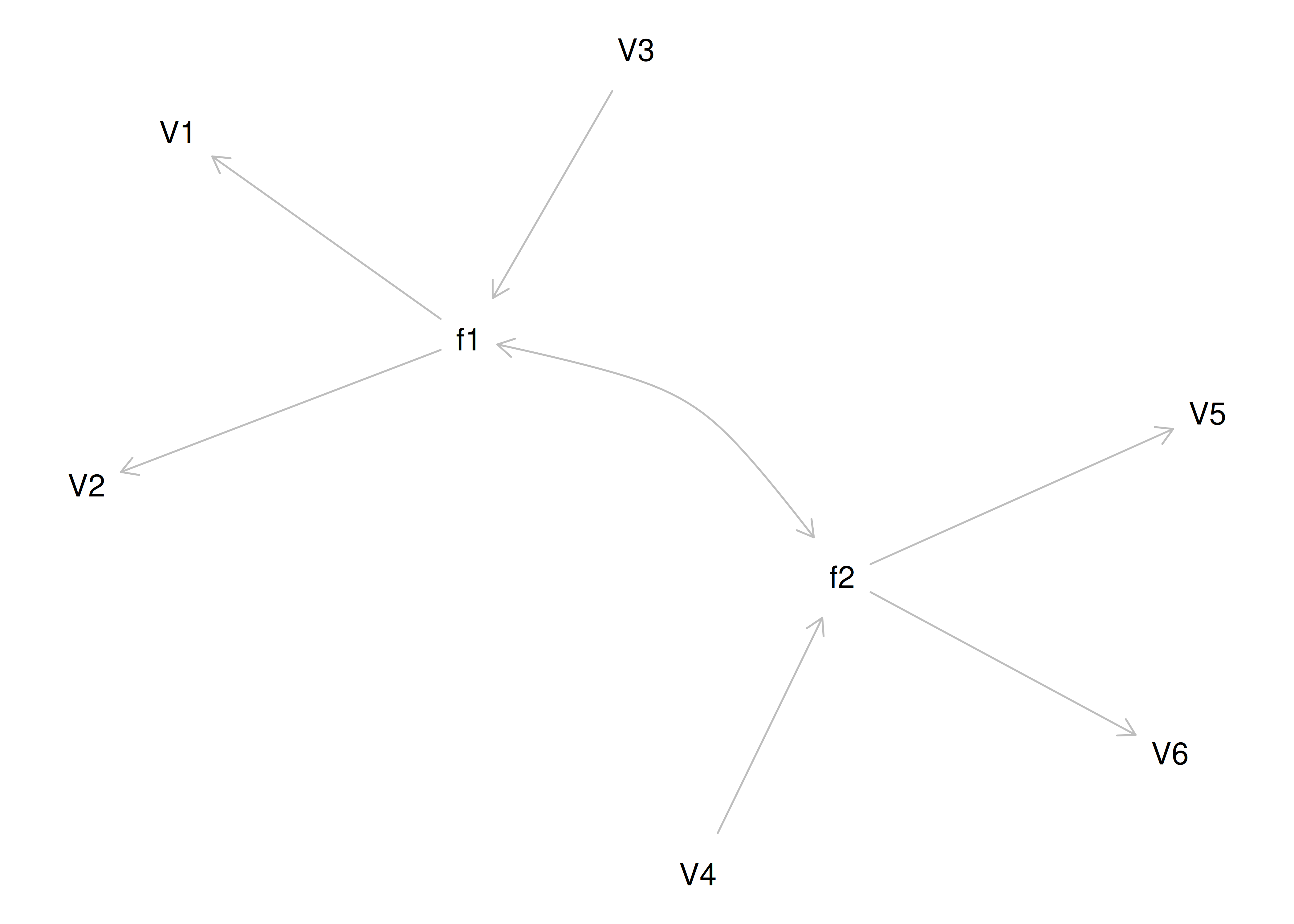

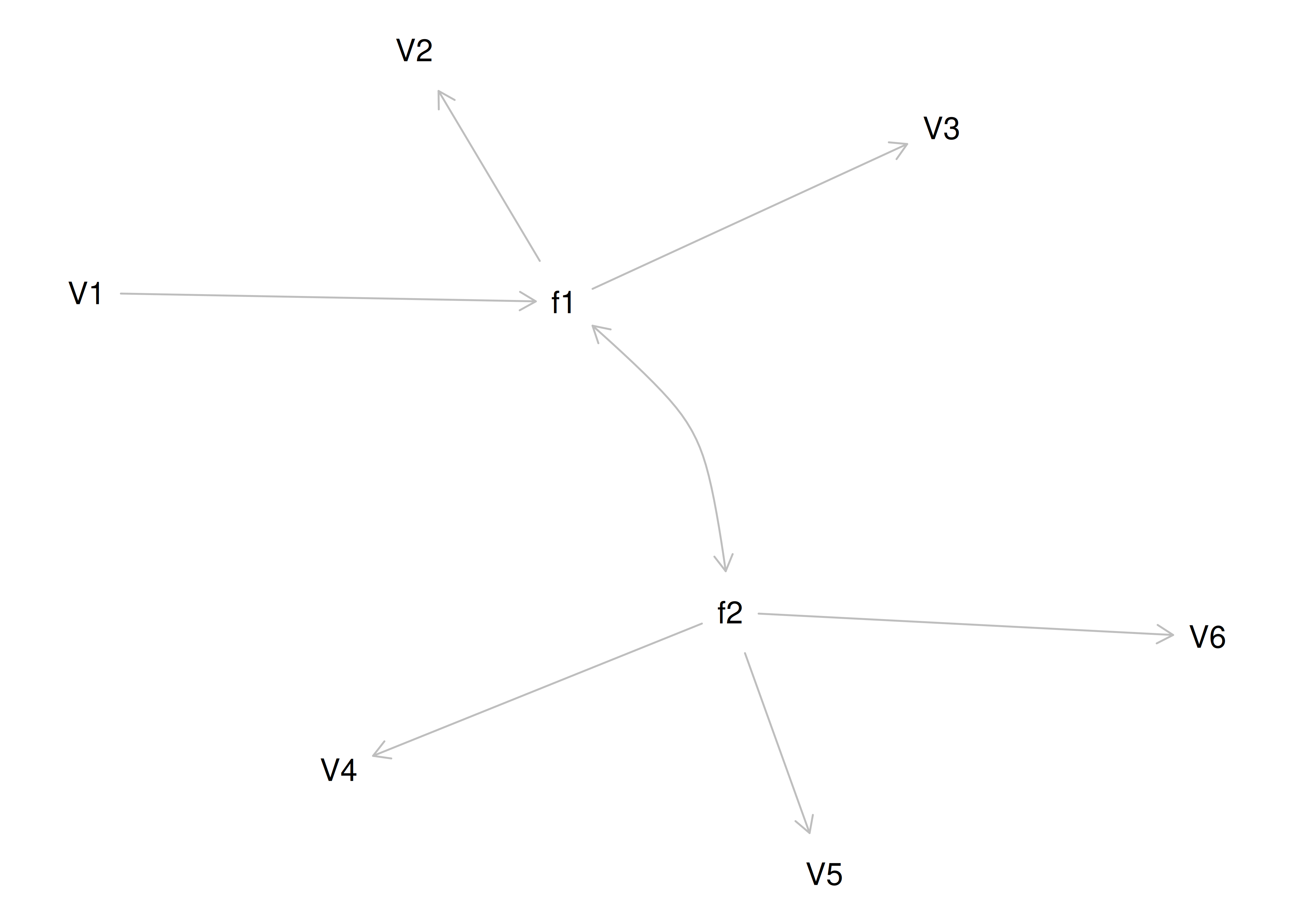

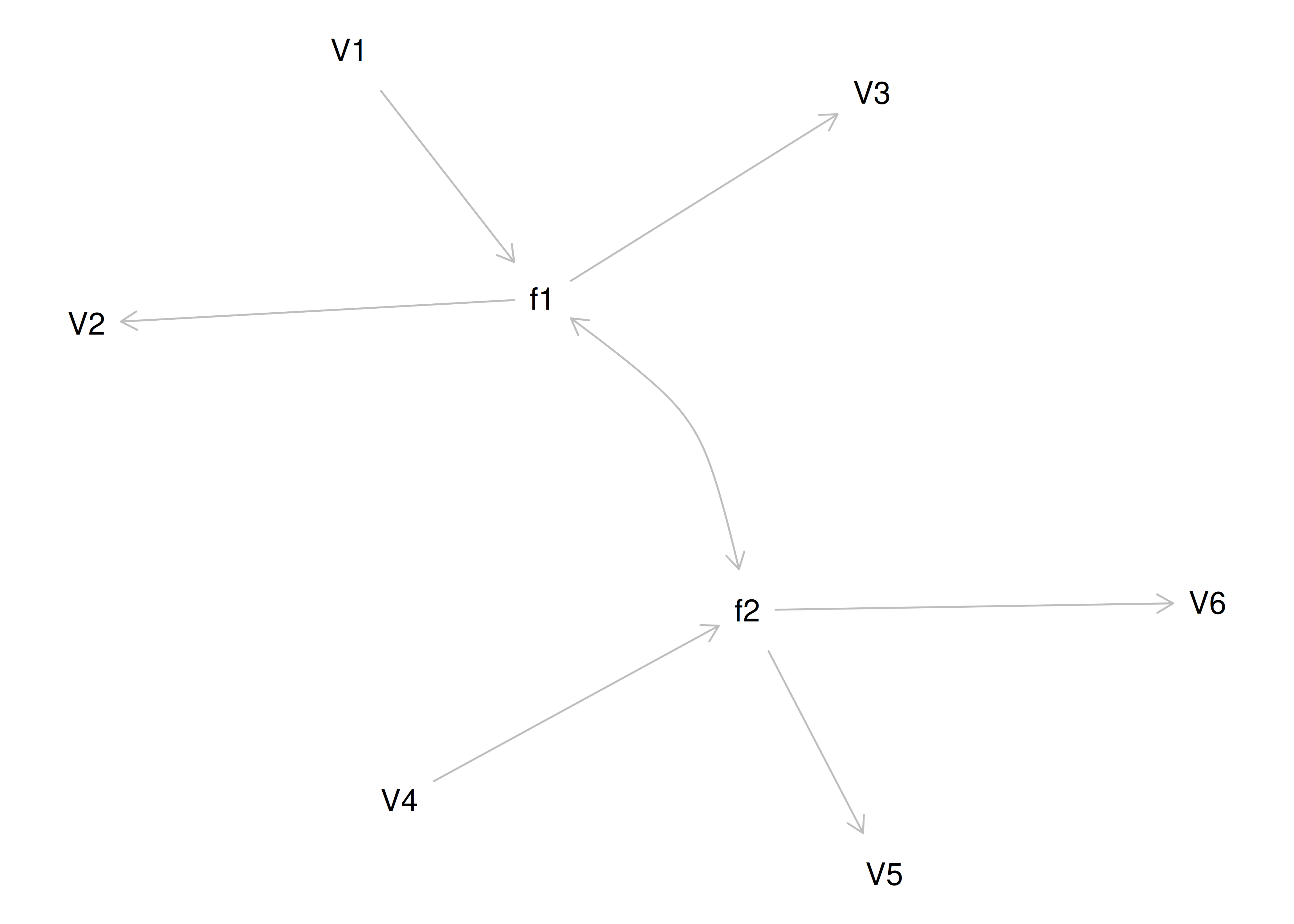

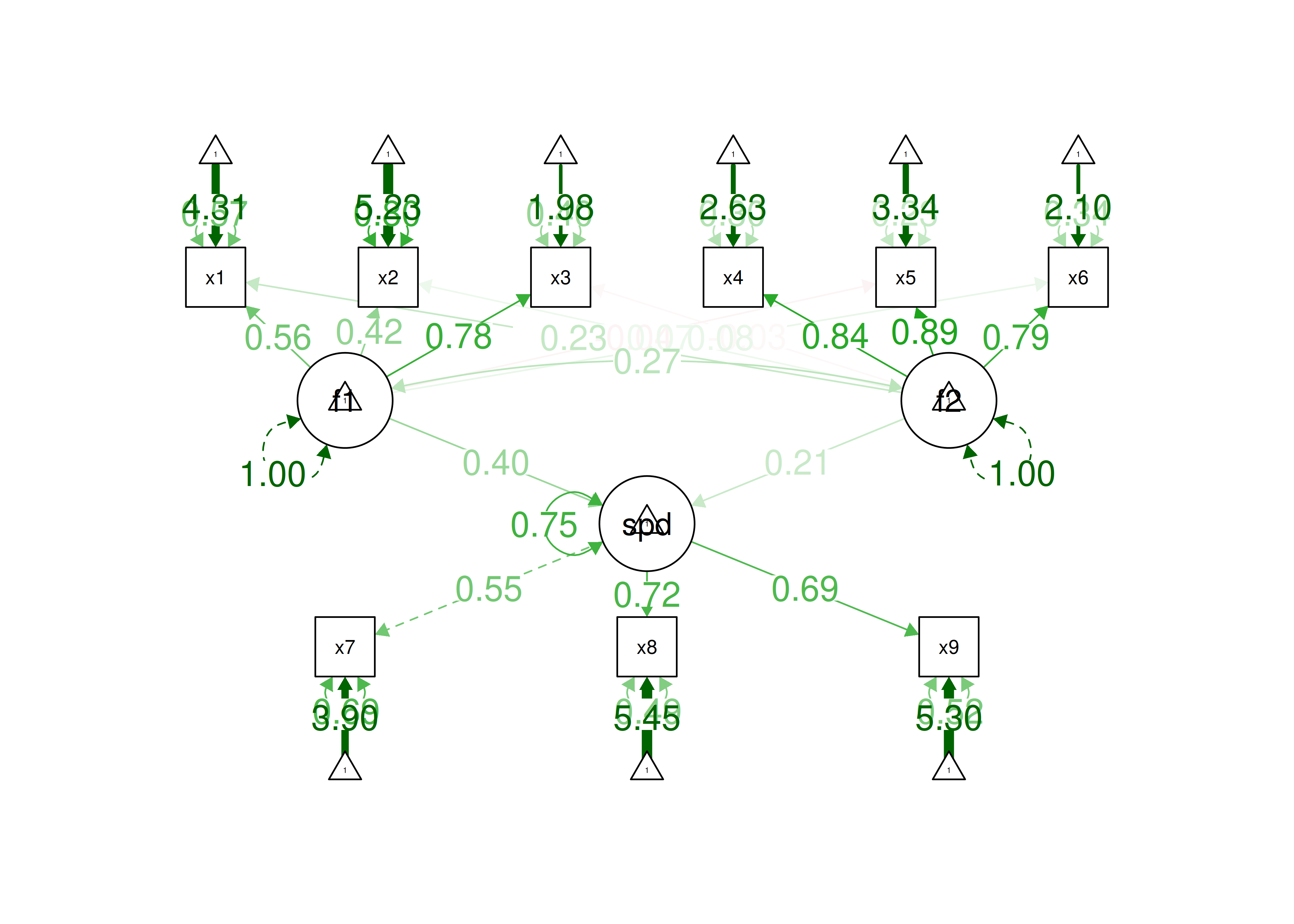

Although going from the model to the correlation matrix is deterministic, going from the correlation matrix to the model is not deterministic. If you know the correlation matrix, there may be many possible models. For instance, the model could also be the one depicted in Figure 14.9, with factor loadings of .77, residual error terms of .40, a regression path of .50, and a disturbance term of .75. The proportion of variance in Factor 2 that is explained by Factor 1 is calculated as: \(.25 = .50 \times .50\). The disturbance term is calculated as \(.75 = 1 - (.50 \times .50) = 1 - .25\). In this model, each latent factor accounts for 60% of the variance in the indicators that load onto it: \(\frac{.77^2 + .77^2 + .77^2}{3} = \frac{.60 + .60 + .60}{3} = .60\). Factor 1 accounts for 15% of the variance in the indicators that load onto Factor 2: \(\frac{(.50^2 \times .77^2) + (.50^2 \times .77^2) + (.50^2 \times .77^2)}{3} = \frac{.15 + .15 + .15}{3} = .15\). This model has the exact same fit as the previous model, but it has different implications. Unlike the previous model, in this model, there is a “causal” pathway from Factor 1 to Factor 2. However, the causal effect of Factor 1 does not account for all of the variance in Factor 2 because the correlation is only .50.

Figure 14.9: Example Confirmatory Factor Analysis Model: Two-Factor Model With Regression Path.

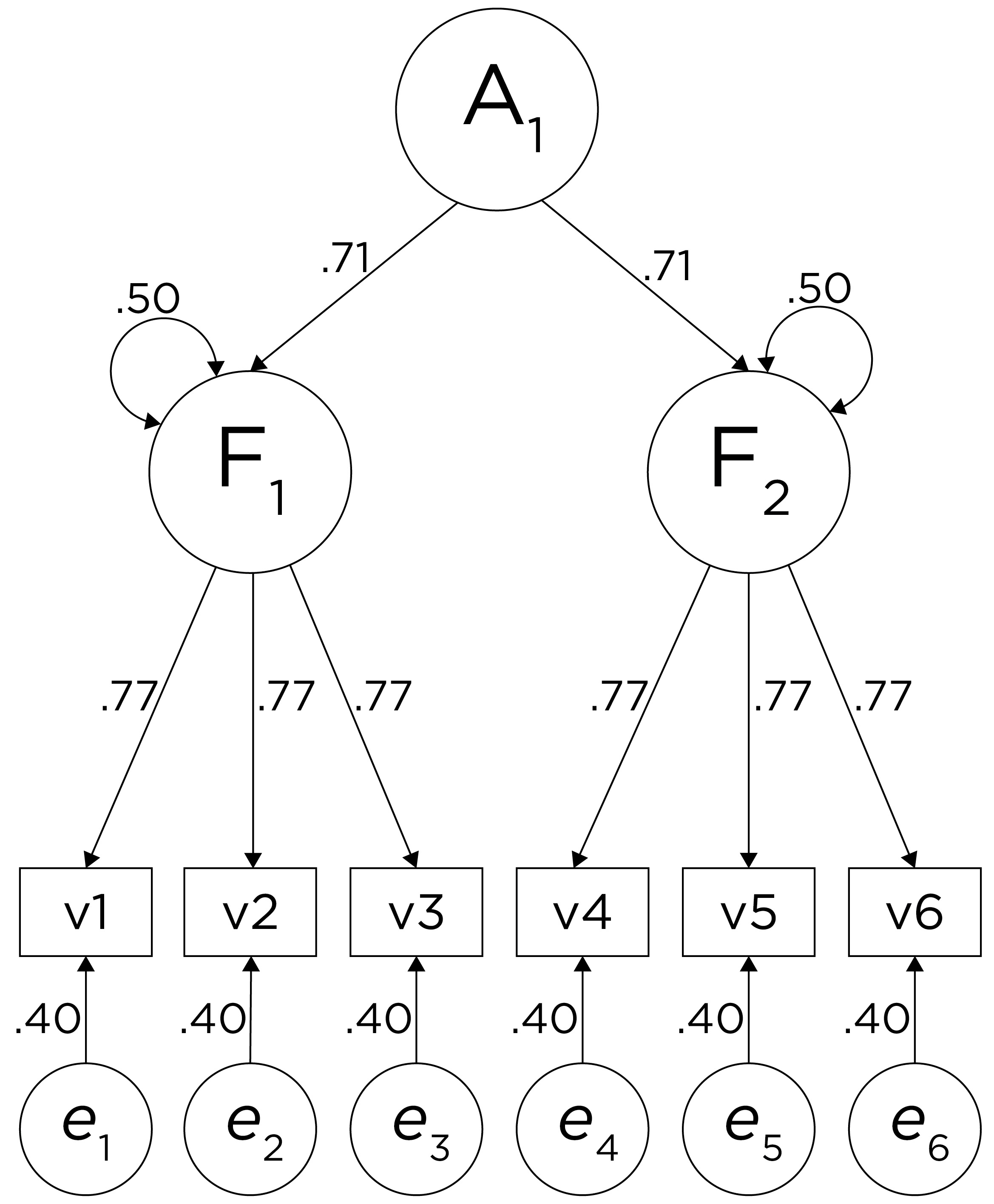

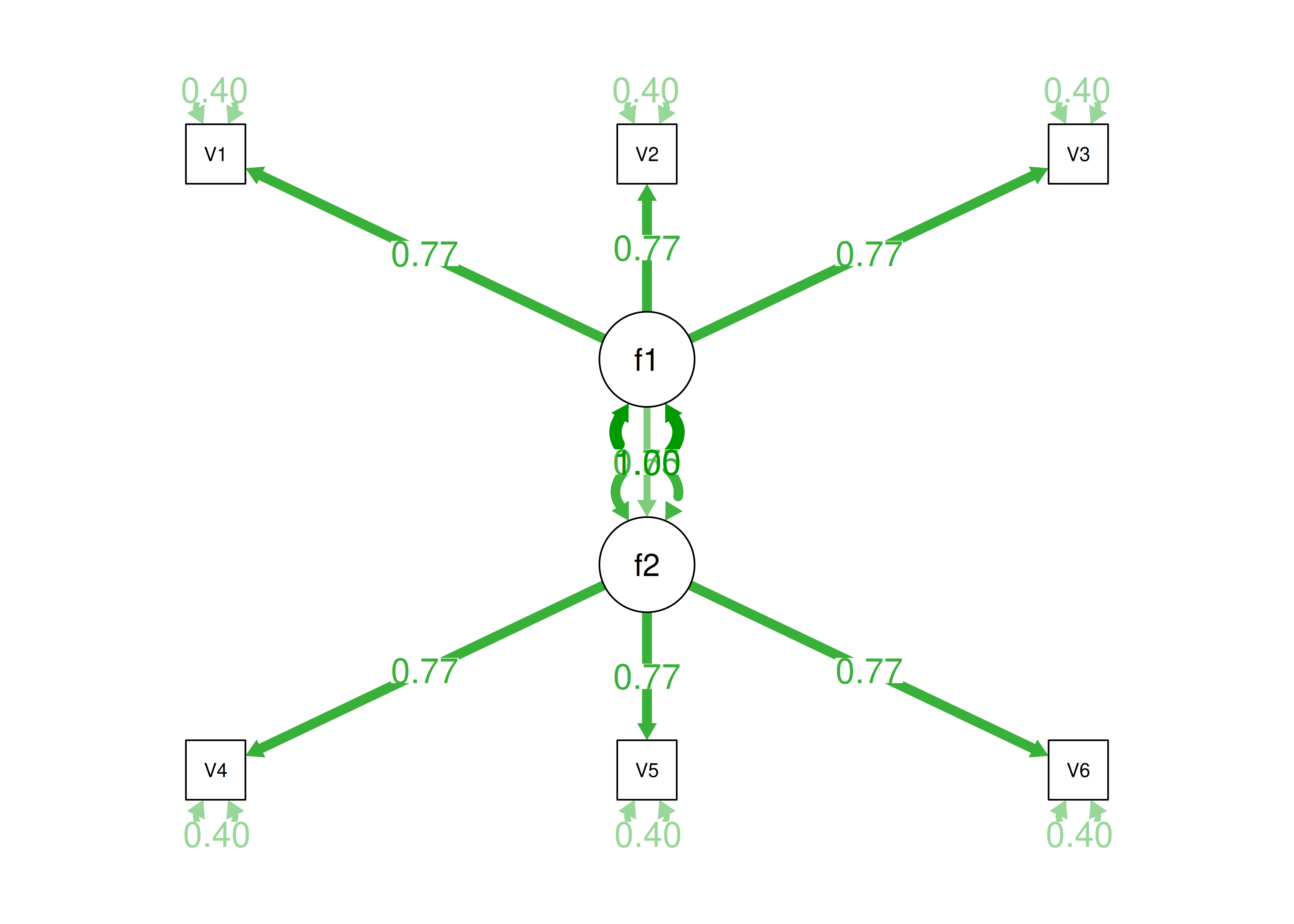

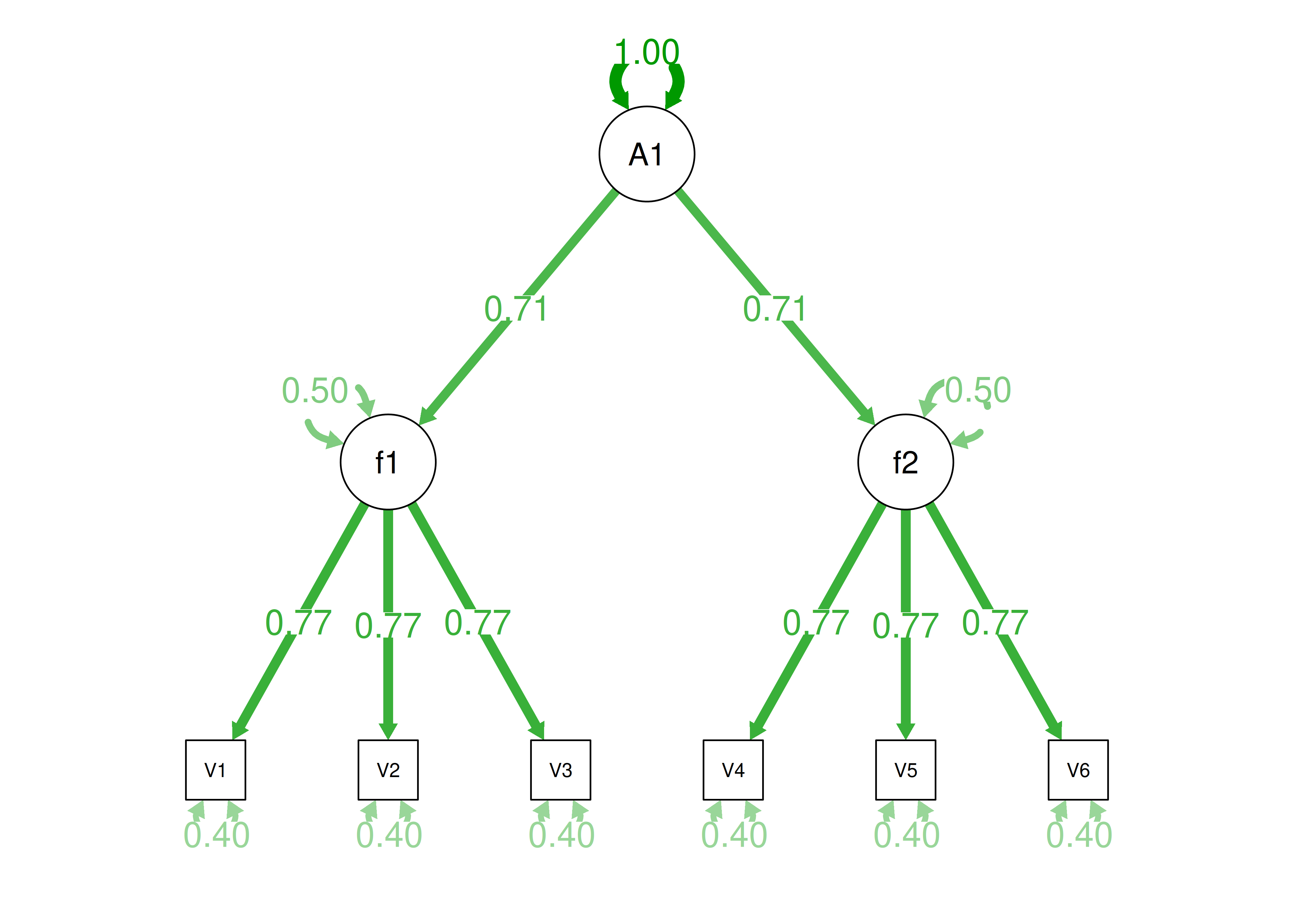

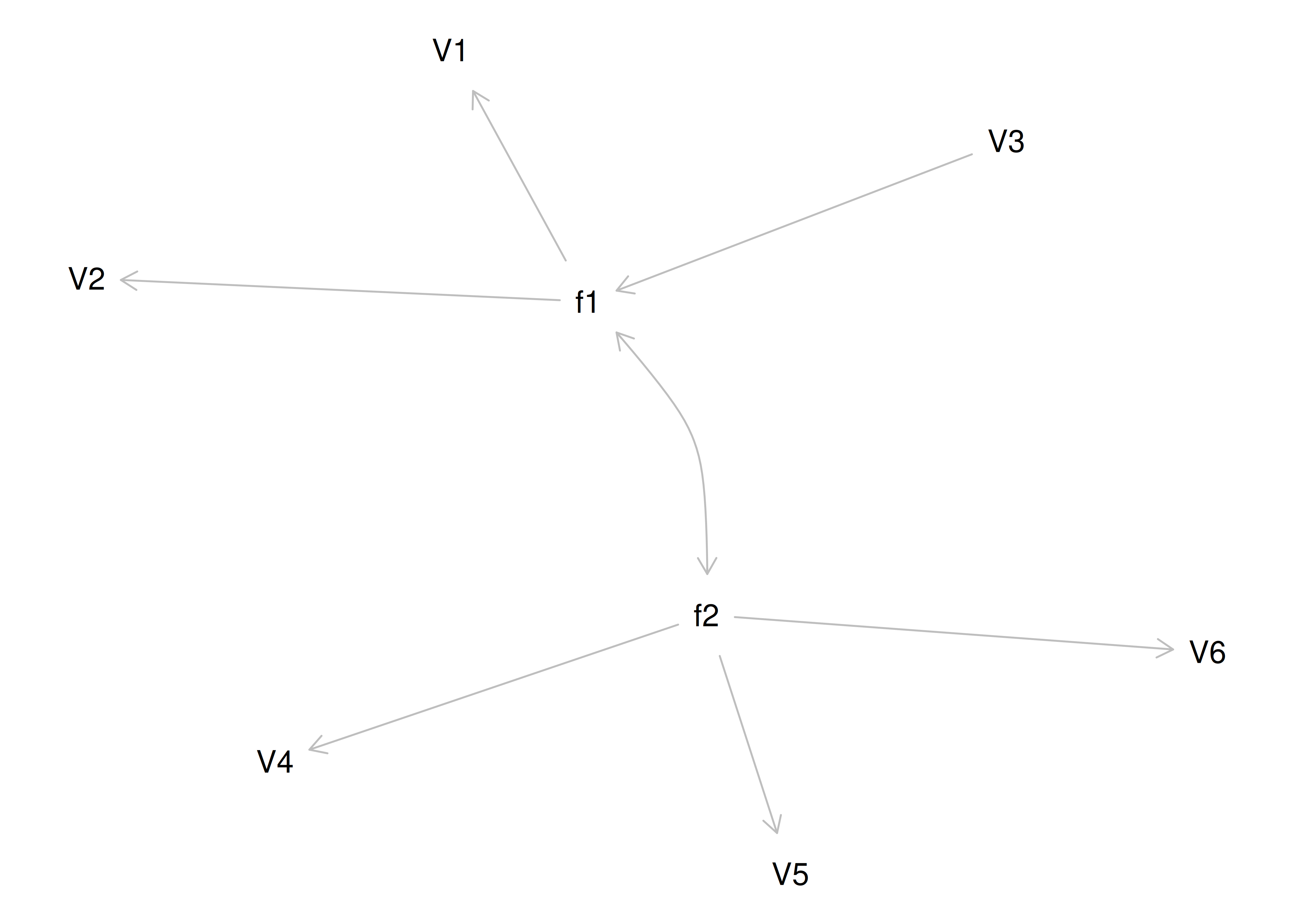

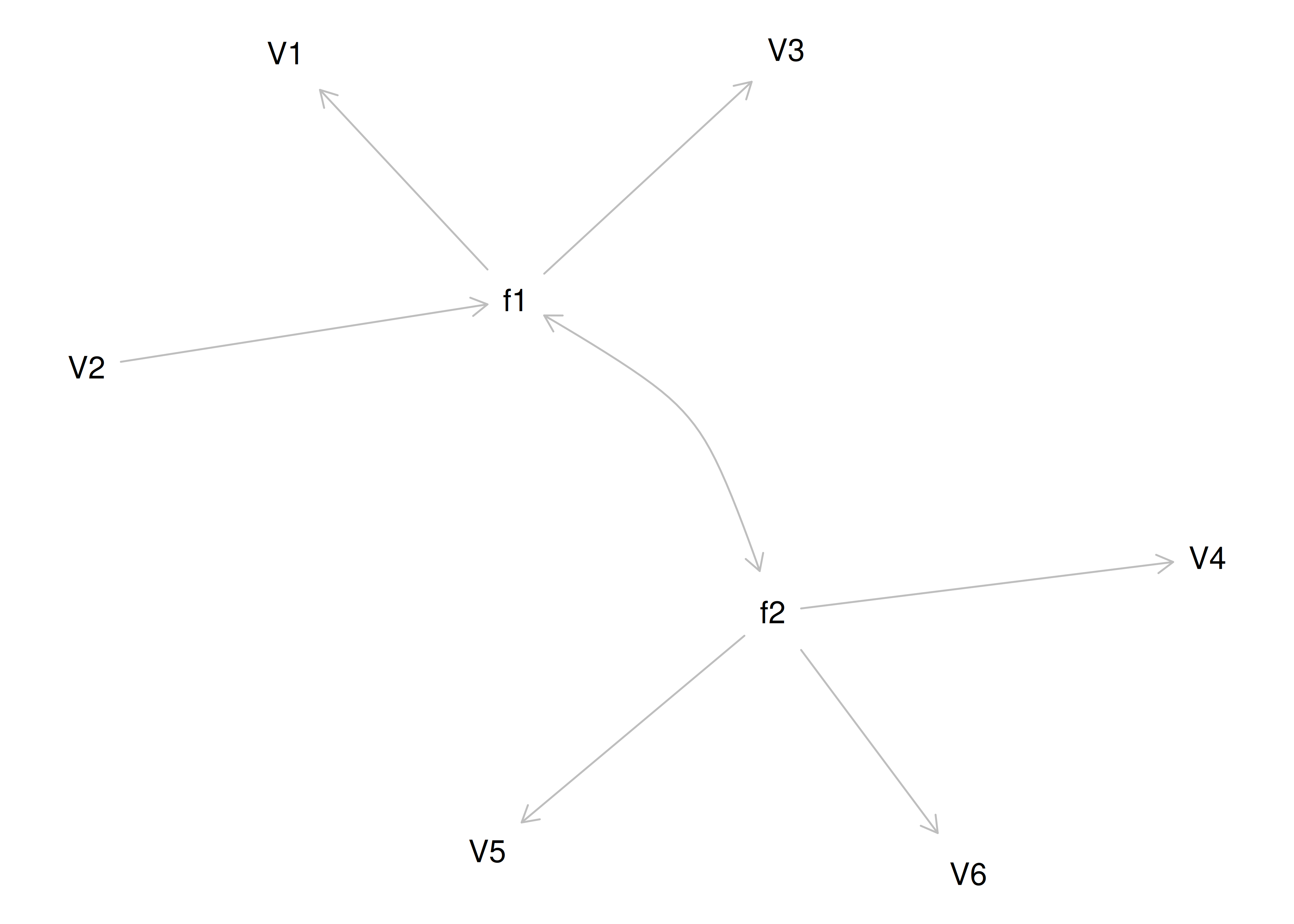

Alternatively, something else (e.g., another factor) could be explaining the data that we have not considered, as depicted in Figure 14.10. This is a higher-order factor model, in which there is a higher-order factor (\(A_1\)) that influences both lower-order factors, Factor 1 (\(F_1\)) and Factor 2 (\(F_2\)). The factor loadings from the lower order factors to the manifest variables are .77, the factor loading from the higher-order factor to the lower-order factors is .71, and the residual error terms are .40. This model has the exact same fit as the previous models. The proportion of variance in a lower-order factor (\(F_1\) or \(F_2\)) that is explained by the higher-order factor (\(A_1\)) is calculated as: \(.50 = .71 \times .71\). The disturbance term is calculated as \(.50 = 1 - (.71 \times .71) = 1 - .50\). Using path tracing rules, the correlation of measures across factors in this model is calculated as: \(.30 = .77 \times .71 \times .71 \times .77\). In this model, the higher-order factor (\(A_1\)) accounts for 30% of the variance in the indicators: \(\frac{(.77^2 \times .71^2) + (.77^2 \times .71^2) + (.77^2 \times .71^2) + (.77^2 \times .71^2) + (.77^2 \times .71^2) + (.77^2 \times .71^2)}{6} = \frac{.30 + .30 + .30 + .30 + .30 + .30}{6} = .30\).

Figure 14.10: Example Confirmatory Factor Analysis Model: Higher-Order Factor Model.

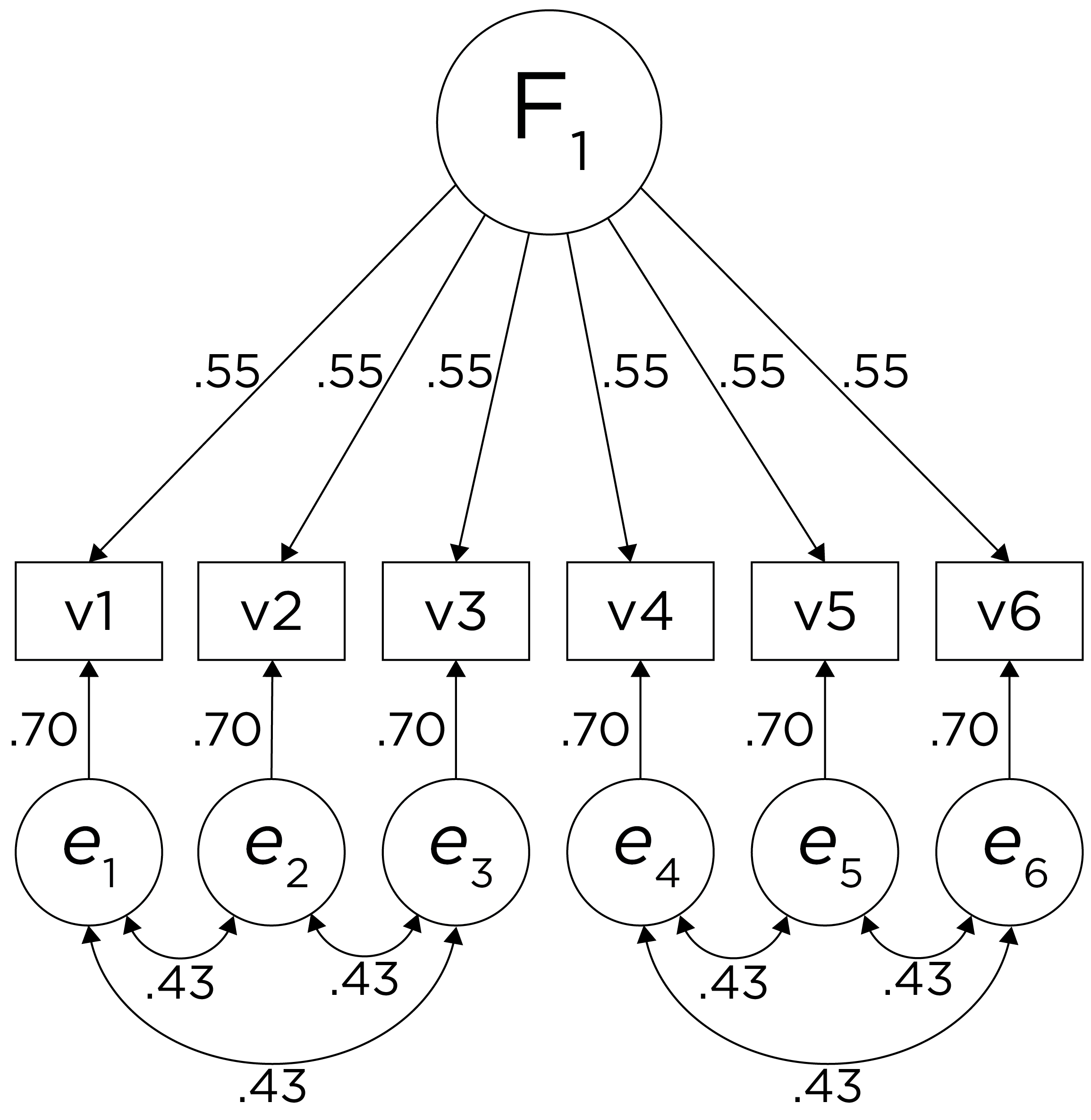

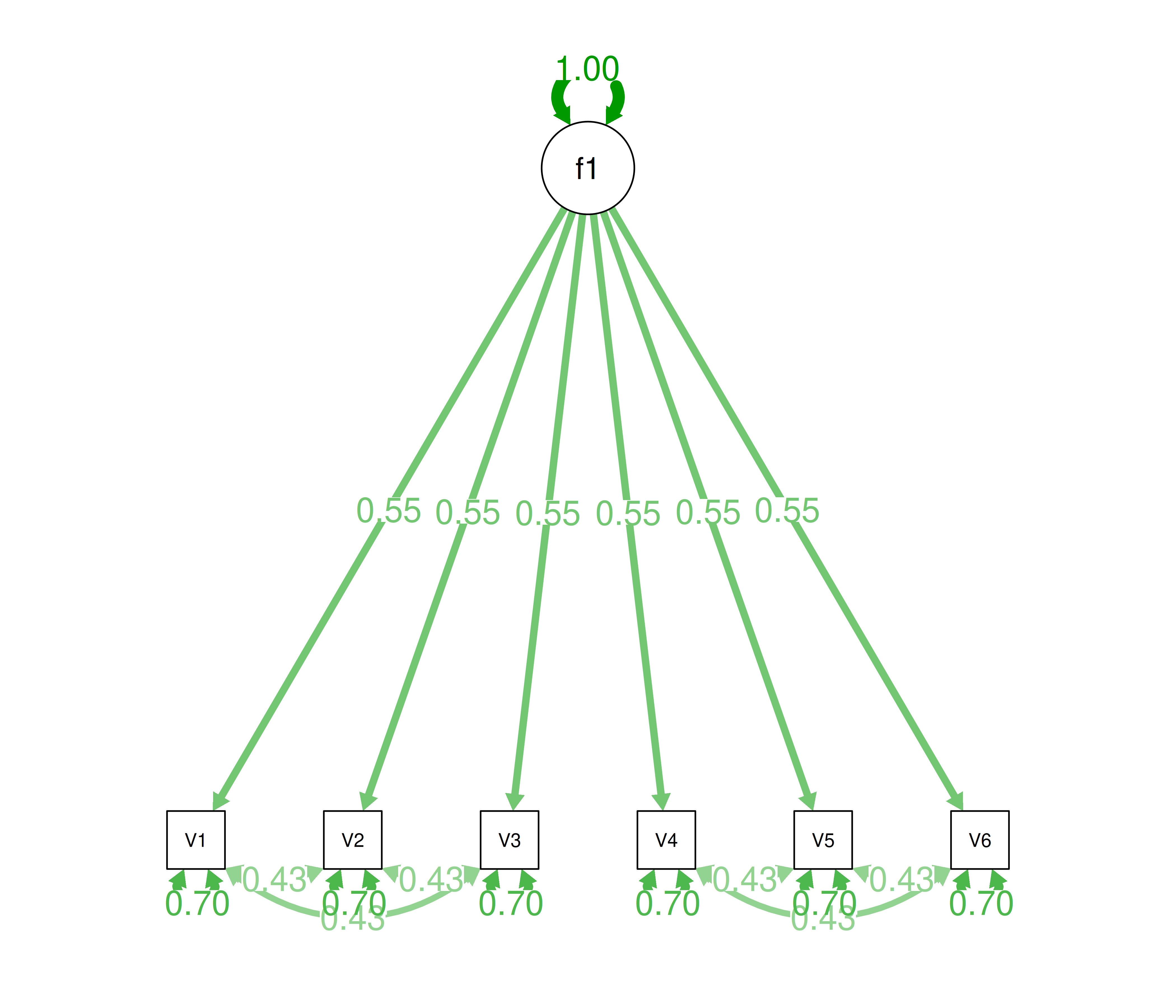

Alternatively, there could be a single factor that ties measures 1, 2, and 3 together and measures 4, 5, and 6 together, as depicted in Figure 14.11. In this model, the measures no longer have merely random error: measures 1, 2, and 3 have correlated residuals—that is, they share error variance (i.e., systematic error); likewise, measures 4, 5, and 6 have correlated residuals. This model has the exact same fit as the previous models. The amount of common variance (\(R^2\)) that is accounted for by an indicator is estimated as the square of the standardized loading: \(.30 = .55 \times .55\). The amount of error for an indicator is estimated as: \(\text{error} = 1 - \text{common variance}\), so in this case, the amount of error is: \(.70 = 1 - .30\). Using path tracing rules, the correlation of measures within a factor in this model is calculated as: \(.60 = (.55 \times .55) + (.70 \times .43 \times .70) + (.70 \times .43 \times .43 \times .70)\). The correlation of measures across factors in this model is calculated as: \(.30 = .55 \times .55\). In this model, the latent factor accounts for 30% of the variance in the indicators: \(\frac{.55^2 + .55^2 + .55^2 + .55^2 + .55^2 + .55^2}{6} = \frac{.30 + .30 + .30 + .30 + .30 + .30}{6} = .30\).

Figure 14.11: Example Confirmatory Factor Analysis Model: Unidimensional Model With Correlated Residuals.

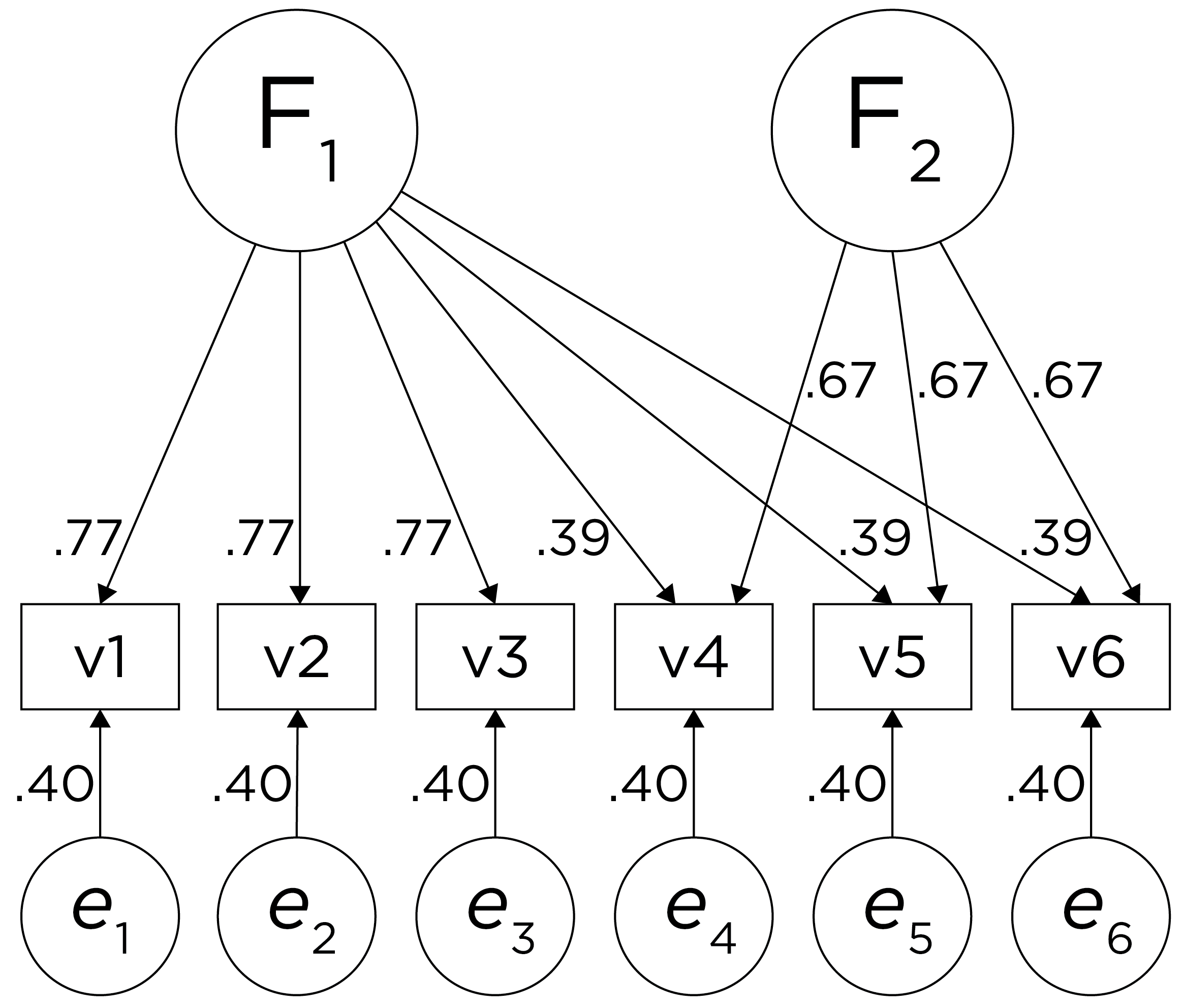

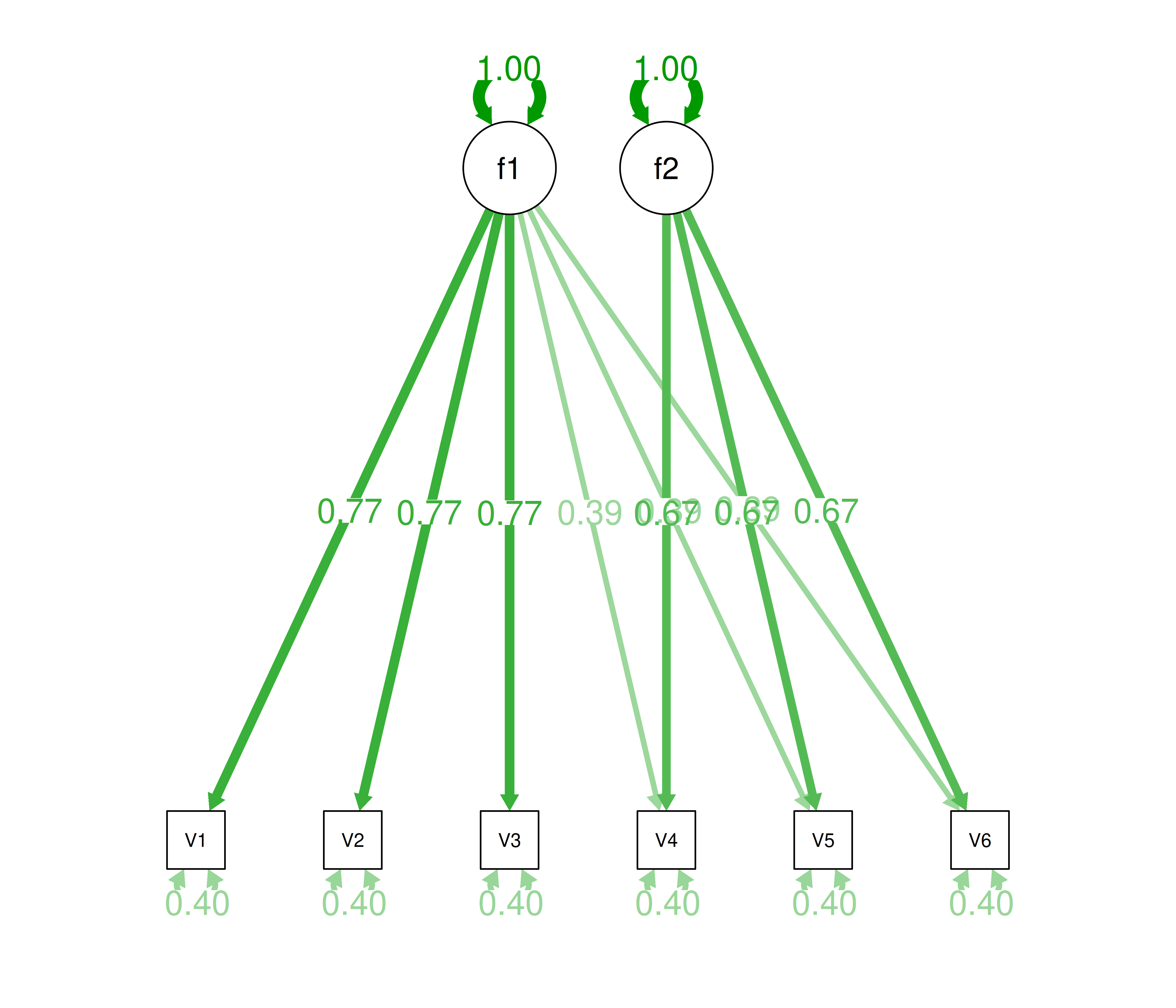

Alternatively, there could be a single factor that influences measures 1, 2, 3, 4, 5, and 6 in addition to a method bias factor (e.g., a particular measurement method, item stem, reverse-worded item, or another method bias) that influences measures 4, 5, and 6 equally, as depicted in Figure 14.12. In this model, measures 4, 5, and 6 have cross-loadings—that is, they load onto more than one latent factor. This model has the exact same fit as the previous models. The amount of common variance (\(R^2\)) that is accounted for by an indicator is estimated as the sum of the squared standardized loadings: \(.60 = .77 \times .77 = (.39 \times .39) + (.67 \times .67)\). The amount of error for an indicator is estimated as: \(\text{error} = 1 - \text{common variance}\), so in this case, the amount of error is: \(.40 = 1 - .60\). Using path tracing rules, the correlation of measures within a factor in this model is calculated as: \(.60 = (.77 \times .77) = (.39 \times .39) + (.67 \times .67)\). The correlation of measures across factors in this model is calculated as: \(.30 = .77 \times .39\). In this model, the first latent factor accounts for 37% of the variance in the indicators: \(\frac{.77^2 + .77^2 + .77^2 + .39^2 + .39^2 + .39^2}{6} = \frac{.59 + .59 + .59 + .15 + .15 + .15}{6} = .30\). The second latent factor accounts for 45% of the variance in its indicators: \(\frac{.67^2 + .67^2 + .67^2}{3} = \frac{.45 + .45 + .45}{3} = .45\).

Figure 14.12: Example Confirmatory Factor Analysis Model: Two-Factor Model With Cross-Loadings.

14.1.2 Indeterminacy

There could be many more models that have the same fit to the data. Thus, factor analysis has indeterminacy because all of these models can explain these same data equally well, with all having different theoretical meaning. Factor indeterminacy reflects uncertainty (Rigdon et al., 2019a). The goal of factor analysis is for the model to look at the data and induce the model. However, most data matrices in real life are very complicated—much more complicated than in these examples.

This is why we do not calculate our own factor analysis by hand; use a stats program! It is important to think about the possibility of other models to determine how confident you can be in your data model. For every fully specified factor model—i.e., where the relevant paths are all defined, there is one and only one predictive data matrix (correlation matrix). However, each data matrix can produce many different factor models. Equivalent models are different models with the same degrees of freedom that have the same fit to the data. Rules for generating equivalent models are provided by S. Lee & Hershberger (1990). There is no way to distinguish which of these factor models is correct from the data matrix alone. Any given data matrix can predict an infinite number of factor models that accurately represent the data structure (Raykov & Marcoulides, 2001)—so we make decisions that determine what type of factor solution our data will yield. In general, factor indeterminacy—and the uncertainty it creates—tends to be greater in models with fewer items and where those items have smaller factor loadings and larger residual variances (Rigdon et al., 2019a). Conversely, factor indeterminacy is lower when there are many items per latent factor, and when loadings and factor correlations are strong (Rigdon et al., 2019b). Further, factor indeterminacy is compounded when creating factor scores for use in separate analyses [rather than estimating the associations within the same model; Rigdon et al. (2019a)]. Thus, where possible, it is preferable to estimate associations between factors and other variables within the same model.

Many models could explain your data, and there are many more models that do not explain the data. For equally good-fitting models, decide based on interpretability. If you have strong theory, decide based on theory and things outside of factor analysis!

14.1.3 Practical Considerations

There are important considerations for doing factor analysis in real life with complex data. Traditionally, researchers had to consider what kind of data they have, and they often assumed interval-level data even though data in psychology are often not interval data. In the past, factor analysis was not good with categorical and dichotomous (e.g., True/False) data because the variance then is largely determined by the mean. So, we need something more complicated for dichotomous data. More solutions are available now for factor analysis with ordinal and dichotomous data, but it is generally best to have at least four ordered categories to perform factor analysis.

The necessary sample size depends on the complexity of the true factor structure. If there is a strong single factor for 30 items, then \(N = 50\) is plenty. But if there are five factors and some correlated errors, then the sample size will need to be closer to ~5,000. Factor analysis can recover the truth when the world is simple. However, nature is often not simple, and it may end in the distortion of nature instead of nature itself.

Recommendations for factor analysis are described by Sellbom & Tellegen (2019).

14.1.4 Decisions to Make in Factor Analysis

There are many decisions to make in factor analysis (Floyd & Widaman, 1995; Sarstedt et al., 2024). These decisions can have important impacts on the resulting solution. Decisions include things such as:

- What variables to include in the model and how to scale them

- Method of factor extraction: factor analysis or PCA

- If factor analysis, the kind of factor analysis: EFA or CFA

- How many factors to retain

- If EFA or PCA, whether and how to rotate factors (factor rotation)

- Model selection and interpretation

14.1.4.1 1. Variables to Include and their Scaling

The first decision when conducting a factor analysis is which variables to include and the scaling of those variables. What factors (or components) you extract can differ widely depending on what variables you include in the analysis. For example, if you include many variables from the same source (e.g., self-report), it is possible that you will extract a factor that represents the common variance among the variables from that source (i.e., the self-reported variables). This would be considered a method factor, which works against the goal of estimating latent factors that represent the constructs of interest (as opposed to the measurement methods used to estimate those constructs).

An additional consideration is the scaling of the variables. Before performing a PCA, it is generally important to ensure that the variables included in the PCA are on the same scale. PCA seeks to identify components that explain variance in the data, so if the variables are not on the same scale, some variables may contribute considerably more variance than others. A common way of ensuring that variables are on the same scale is to standardize them using, for example, z-scores that have a mean of zero and standard deviation of one. By contrast, factor analysis can better accommodate variables that are on different scales.

14.1.4.2 2. Method of Factor Extraction

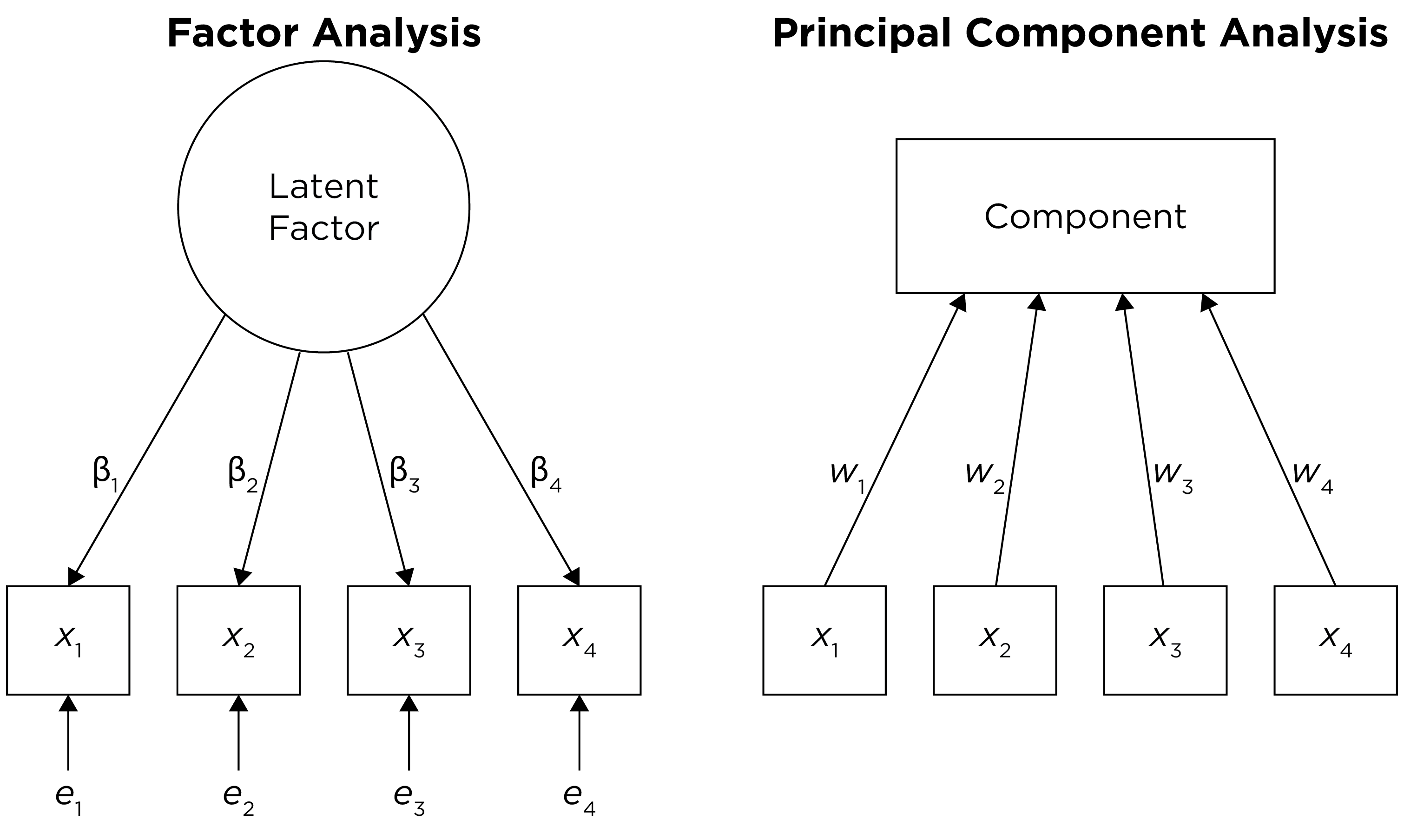

The second decision is to select the method of factor extraction. This is the algorithm that is going to try to identify factors. There are two main families of factor or component extraction: analytic or principal components. The principal components approach is called principal component analysis (PCA). PCA is not really a form factor analysis; rather, it is useful for data reduction (Lilienfeld et al., 2015). The analytic family includes factor analysis approaches such as principal axis factoring and maximum likelihood factor analysis. The distinction between factor analysis and PCA is depicted in Figure 14.13.

Figure 14.13: Distinction Between Factor Analysis and Principal Component Analysis.

14.1.4.2.1 Principal Component Analysis

Principal component analysis (PCA) is used if you want to reduce your data matrix. PCA composites represent the variances of an observed measure in as economical a fashion as possible, with no latent underlying variables. The goal of PCA is to identify a smaller number of components that explain as much variance in a set of variables as possible. It is an atheoretical way to decompose a matrix. PCA involves decomposition of a data matrix into a set of eigenvectors, which are transformations of the old variables.

The eigenvectors attempt to simplify the data in the matrix. PCA takes the data matrix and identifies the weighted sum of all variables that does the best job at explaining variance: these are the principal components, also called eigenvectors. Principal components reflect optimally weighted sums. In this way, PCA is a formative model (by contrast, factor analysis applies a reflective model).

PCA decomposes the data matrix into any number of components—as many as the number of variables, which will always account for all variance. After the model is fit, you can look at the results and discard the components which likely reflect error variance. Judgments about which factors to retain are based on empirical criteria in conjunction with theory to select a parsimonious number of components that account for the majority of variance.

The eigenvalue reflects the amount of variance explained by the component (eigenvector). When using a varimax (orthogonal) rotation, an eigenvalue for a component is calculated as the sum of squared standardized component loadings on that component. When using oblique rotation, however, the items explain more variance than is attributable to their factor loadings because the factors are correlated.

PCA pulls the first principal component out (i.e., the eigenvector that explains the most variance) and makes a new data matrix: i.e., new correlation matrix. Then the PCA pulls out the component that explains the next most variance—i.e., the eigenvector with the next largest eigenvalue, and it does this for all components, equal to the same number of variables. For instance, if there are six variables, it will iteratively extract an additional component up to six components. You can extract as many eigenvectors as there are variables. If you extract all six components, the data matrix left over will be the same as the correlation matrix in Figure 14.3. That is, the remaining variables (as part of the leftover data matrix) will be entirely uncorrelated with each other, because six components explain 100% of the variance from six variables. In other words, you can explain (6) variables with (6) new things!

However, it does no good if you have to use all (6) components because there is no data reduction from the original number of variables. When the goal is data reduction (as in PCA), the hope is that the first few components will explain most of the variance, so we can explain the variability in the data with fewer components than there are variables.

The sum of all eigenvalues is equal to the number of variables in the analysis. PCA does not have the same assumptions as factor analysis, which assumes that measures are partly from common variance and error. But if you estimate (6) eigenvectors and only keep (2), the model is a two-component model and whatever left becomes error. Therefore, PCA does not have the same assumptions as factor analysis, but it often ends up in the same place.

Most people who want to conduct a factor analysis use PCA, but PCA is not really factor analysis (Lilienfeld et al., 2015). PCA is what SPSS can do quickly. But computers are so fast now—just do a real factor analysis! Factor analysis better handles error than PCA—factor analysis assumes that what is in the variable is the combination of common construct variance and error. By contrast, PCA assumes that the measures have no measurement error.

14.1.4.2.2 Factor Analysis

Factor analysis is an analytic approach to factor extraction. Factor analysis is a special case of structural equation modeling (SEM). Factor analysis is an analytic technique that is interested in the factor structure of a measure or set of measures. Factor analysis is a theoretical approach that considers that there are latent theoretical constructs that influence the scores on particular variables. It assumes that part of the explanation for each variable is shared between variables (i.e., common variance), and that part of it is unique variance. The unique variance is considered error. In reality, part of the unique variance is random error and part of it is specific variance—systematic variance unique to the item, which may reflect either a unique facet of the construct or systematic error such as method bias that is specific to the item. The portion of common variance in an item (i.e., the portion of variance that is shared with other variables) is called communality, which is the proportion of the item’s variance that is explained by the latent factors (i.e., the \(R^2\) of an item). In factor analysis and PCA, communality of an item is estimated as the sum of squared standardized loadings for an item across all retained factors/components. The average variance extracted (AVE) is a factor-level index that represents the average communality across all items that load on a given factor. For an individual item, the unique variance (error + specific variance) is: \(1 - \text{communality}\). The common variance is thought to be a purer estimate of the latent construct than the total variance of the item, which includes error variance. However, the common variance includes all variance that is shared across all variables, including construct variance (i.e., true score variance) and systematic error such as method bias.

There are several types of factor analysis, including principal axis factoring and maximum likelihood factor analysis. Factor analysis can be used to test measurement/factorial invariance and for multitrait-multimethod designs. One example of a MTMM model in factor analysis is the correlated traits correlated methods model (Tackett, Lang, et al., 2019).

There are several differences between (real) factor analysis versus PCA. Factor analysis has greater sophistication than PCA, but greater sophistication often results in greater assumptions. Factor analysis does not always work; the data may not always fit to a factor analysis model; therefore, use PCA as a second/last option. PCA can decompose any data matrix; it always works. PCA is okay if you are not interested in the factor structure. PCA uses all variance of variables and assumes variables have no error, so it does not account for measurement error. PCA is good if you just want to form a linear composite and if the causal structure is formative (rather than reflective). However, if you are interested in the factor structure, use factor analysis, which estimates a latent variable that accounts for the common variance and discards error variance. Factor analysis is useful for the identification of latent constructs—i.e., underlying dimensions or factors that explain (cause) scores on items.

14.1.4.3 3. EFA or CFA

A third decision is the kind of factor analysis to use: exploratory factor analysis (EFA) or confirmatory factor analysis (CFA).

14.1.4.3.1 Exploratory Factor Analysis (EFA)

Exploratory factor analysis (EFA) is used if you have no a priori hypotheses about the factor structure of the model, but you would like to understand the latent variables represented by your items.

EFA is partly induced from the data. You feed in the data and let the program build the factor model. You can set some parameters going in, including how to extract or rotate the factors. The factors are extracted from the data without specifying the number and pattern of loadings between the items and the latent factors (Bollen, 2002). All cross-loadings are freely estimated.

14.1.4.3.2 Confirmatory Factor Analysis (CFA)

Confirmatory factor analysis (CFA) is used to (dis)confirm a priori hypotheses about the factor structure of the model. CFA is a test of the hypothesis. In CFA, you specify the model and ask how well this model represents the data. The researcher specifies the number, meaning, associations, and pattern of free parameters in the factor loading matrix (Bollen, 2002). A key advantage of CFA is the ability to directly compare alternative models (i.e., factor structures), which is valuable for theory testing (Strauss & Smith, 2009). For instance, you could use CFA to test whether the variance in several measures’ scores is best explained with one factor or two factors. In CFA, cross-loadings are not estimated unless the researcher specifies them.

14.1.4.3.3 Exploratory Structural Equation Modeling (ESEM)

In real life, there is not a clear distinction between EFA and CFA. In CFA, researchers often set only half of the constraints, and let the data fill in the rest. Moreover, our initial hypothesized CFA model is often not a good fit to the data—especially for models with many items (Floyd & Widaman, 1995)—and requires modification for adequate fit, such as cross loadings and correlated residuals. Such modifications are often made based on empirical criteria (e.g., modification indices) rather than our initial hypothesis. In EFA, researchers often set constraints and assumptions. Moreover, the initial items for the EFA were selected by the researcher, who often has expectations about the number and types of factors that will be extracted. Thus, the line between EFA and CFA is often blurred. There is an exploratory–confirmatory continuum, and the important thing is to report which aspects of your modeling approach were exploratory and which were confirmatory.

EFA and CFA can be considered special cases of exploratory structural equation modeling (ESEM), which combines features of EFA, CFA, and SEM (Marsh et al., 2014). ESEM can include any combination of exploratory (i.e., EFA) and confirmatory (CFA) factors. ESEM, unlike traditional CFA models, typically estimates all cross-loadings—at least for the exploratory factors. If a CFA model without cross-loadings and correlated residuals fits as well as an ESEM model with all cross-loadings, the CFA model should be retained for its simplicity. However, ESEM models often fit better than CFA models because requiring no cross-loadings is an unrealistic expectation of items from many psychological instruments (Marsh et al., 2014). The correlations between factors tend to be positively biased when fitting CFA models without cross-loadings, which leads to challenges in using CFA to establish discriminant validity (Marsh et al., 2014). Thus, compared to CFA, ESEM has the potential to more accurately estimate factor correlations and establish discriminant validity (Marsh et al., 2014). Moreover, ESEM can be useful in a multitrait-multimethod framework. We provide examples of ESEM in Section 14.4.3.

14.1.4.4 4. How Many Factors to Retain

A goal of factor analysis and PCA is simplification or parsimony, while still explaining as much variance as possible. The hope is that you can have fewer factors that explain the associations between the variables than the number of observed variables. The fewer the number of factors retained, the greater the parsimony, but the greater the amount of information that is discarded (i.e., the less the variance accounted for in the variables). The more factors we retain, the less the amount of information that is discarded (i.e., more variance is accounted for in the variables), but the less the parsimony. But how do you decide on the number of factors (in factor analysis) or components (in PCA)?

There are a number of criteria that one can use to help determine how many factors/components to keep:

- Kaiser-Guttman criterion (Kaiser, 1960): in PCA, components with eigenvalues greater than 1

- or, for factor analysis, factors with eigenvalues greater than zero

- Cattell’s scree test (Cattell, 1966): the “elbow” (inflection point) in a scree plot minus one; sometimes operationalized with optimal coordinates (OC) or the acceleration factor (AF)

- Parallel analysis: factors that explain more variance than randomly simulated data

- Very simple structure (VSS) criterion: larger is better

- Velicer’s minimum average partial (MAP) test: smaller is better

- Akaike information criterion (AIC): smaller is better

- Bayesian information criterion (BIC): smaller is better

- Sample size-adjusted BIC (SABIC): smaller is better

- Root mean square error of approximation (RMSEA): smaller is better

- Chi-square difference test: smaller is better; a significant test indicates that the more complex model is significantly better fitting than the less complex model

- Standardized root mean square residual (SRMR): smaller is better

- Comparative Fit Index (CFI): larger is better

- Tucker Lewis Index (TLI): larger is better

There is not necessarily a “correct” criterion to use in determining how many factors to keep, so it is generally recommended that researchers use multiple criteria in combination with theory and interpretability.

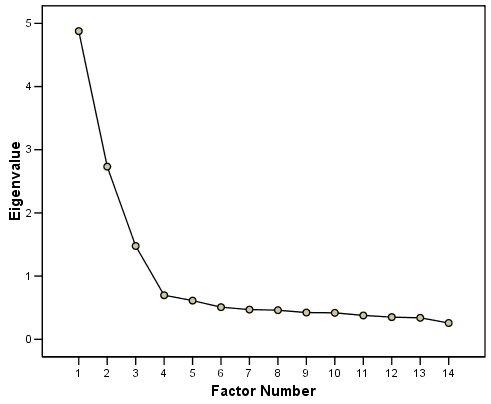

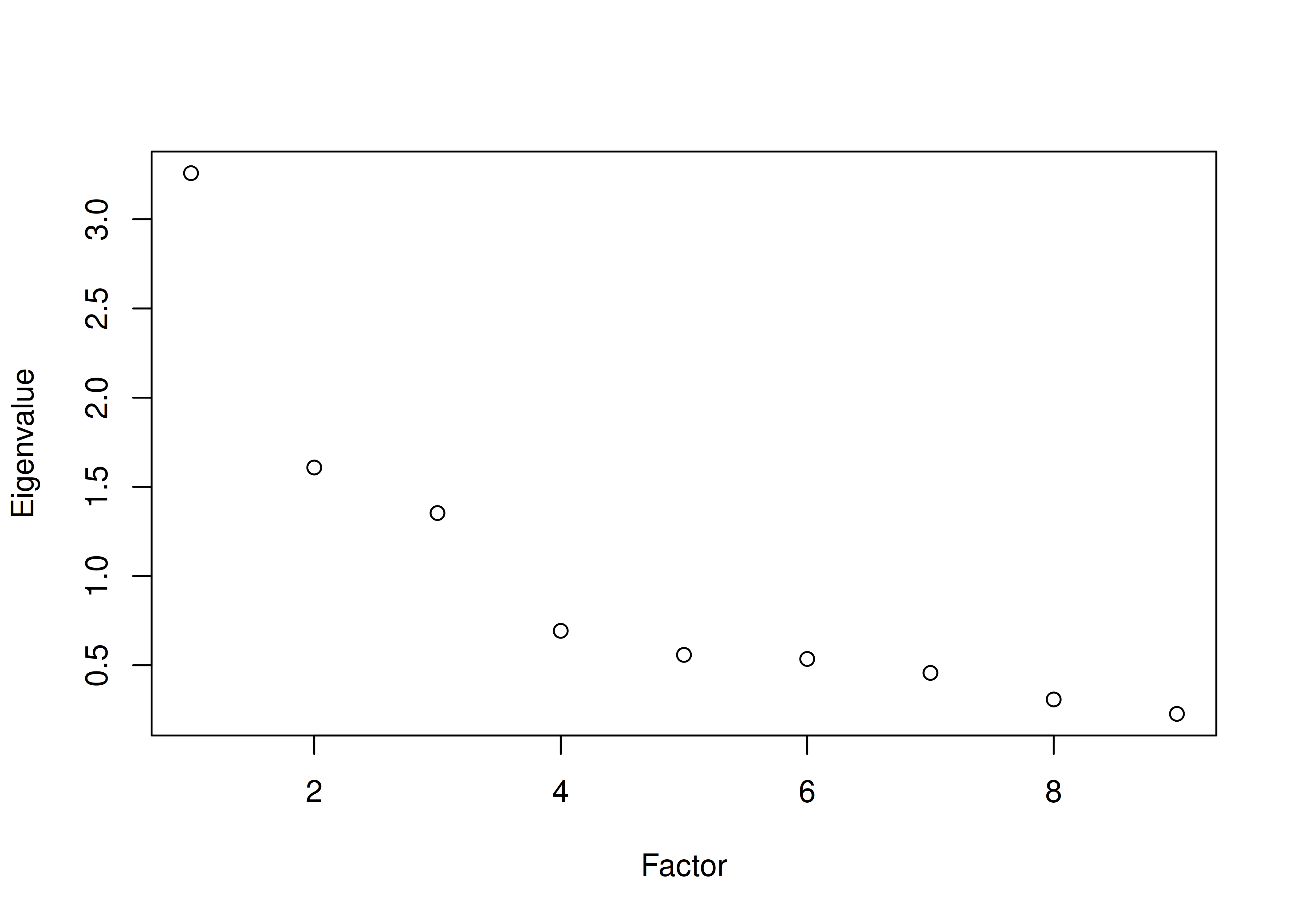

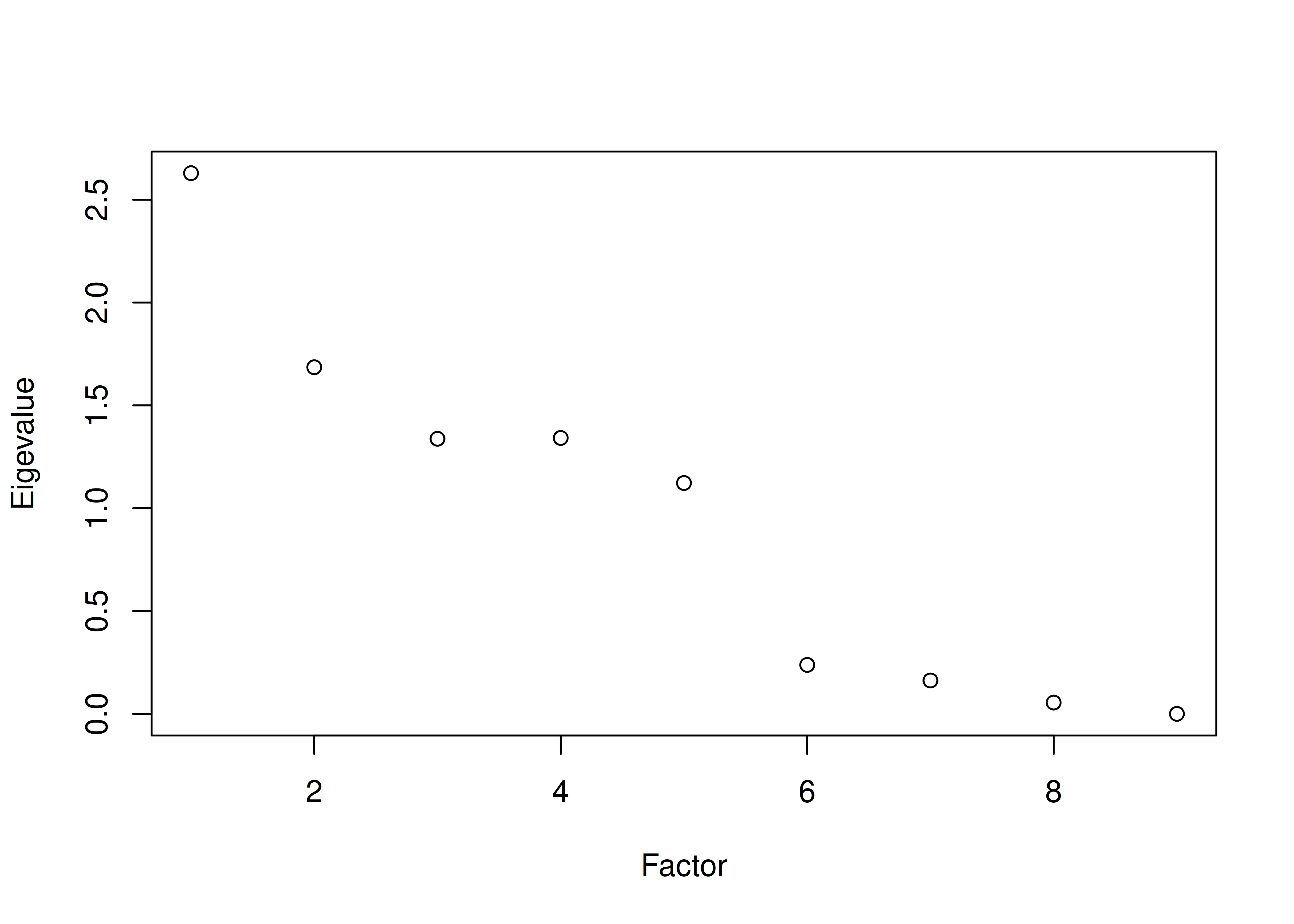

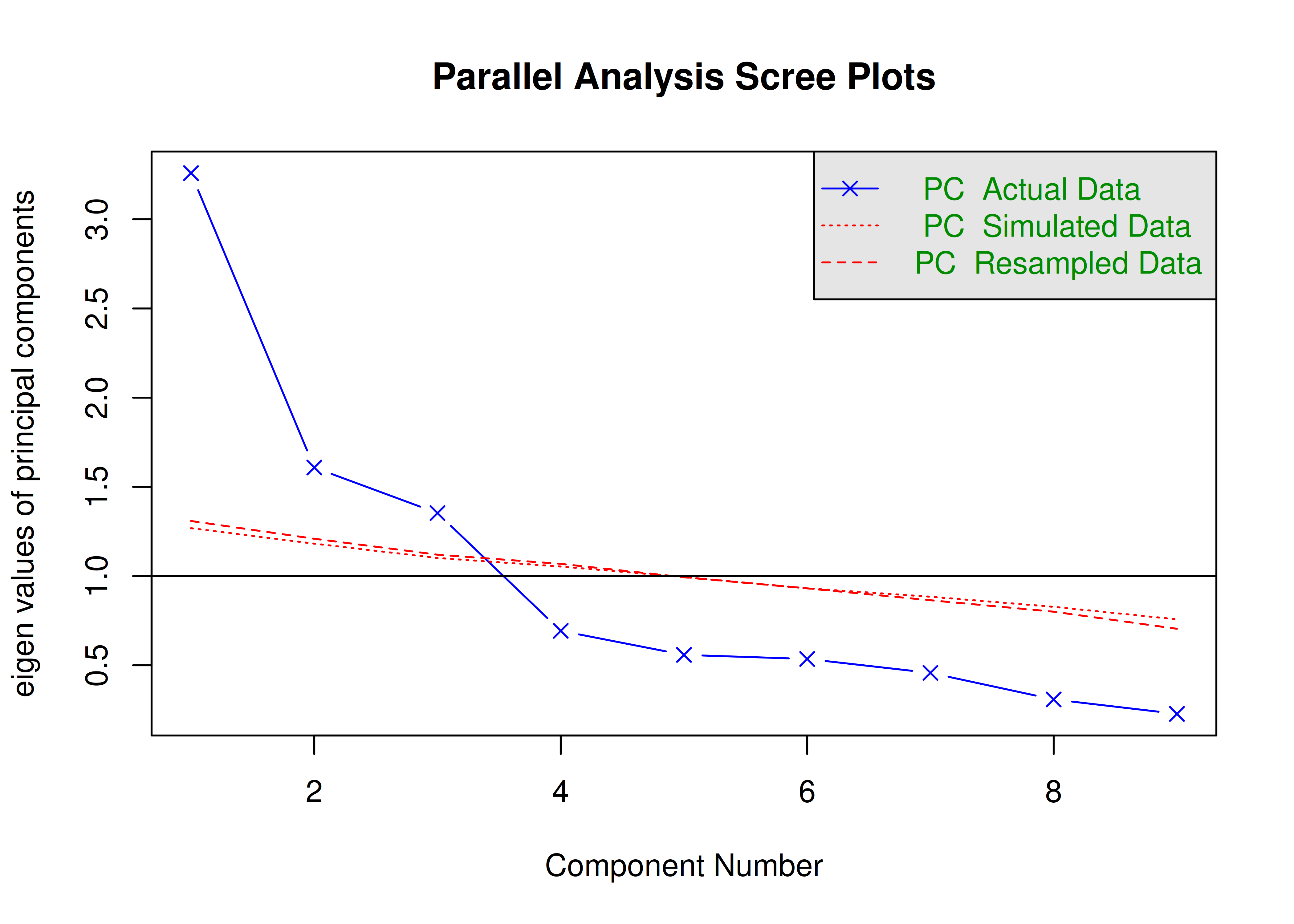

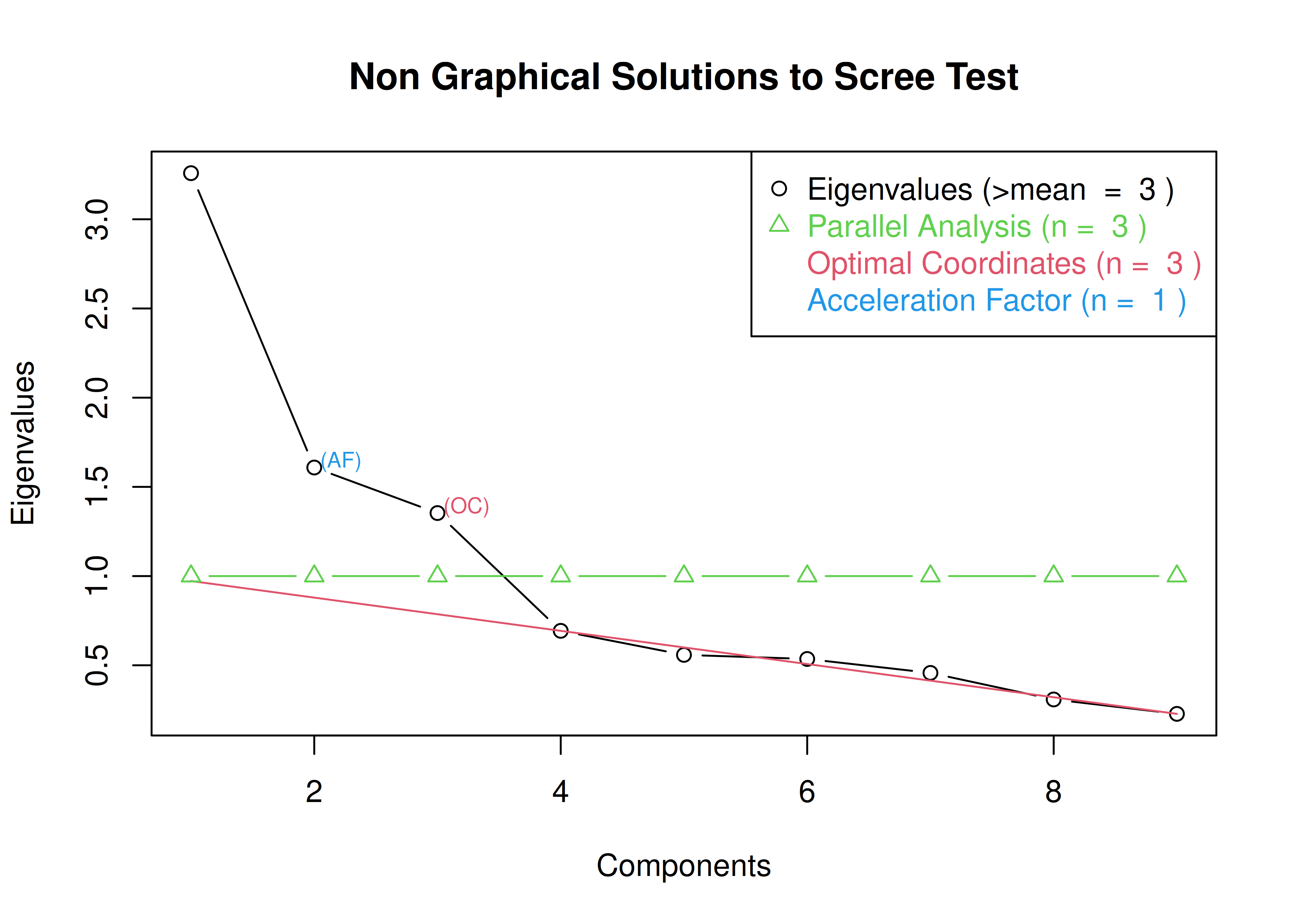

A scree plot from a factor analysis or PCA provides lots of information. A scree plot has the factor number on the x-axis and the eigenvalue on the y-axis. The eigenvalue is the variance accounted for by a factor; when using a varimax (orthogonal) rotation, an eigenvalue (or factor variance) is calculated as the sum of squared standardized factor (or component) loadings on that factor. An example of a scree plot is in Figure 14.14.

Figure 14.14: Example of a Scree Plot.

The total variance is equal to the number of variables you have, so one eigenvalue is approximately one variable’s worth of variance. In a factor analysis and PCA, the first factor (or component) accounts for the most variance, the second factor accounts for the second-most variance, and so on. The more factors you add, the less variance is explained by the additional factor.

One criterion for how many factors to keep is the Kaiser-Guttman criterion. According to the Kaiser-Guttman criterion (Kaiser, 1960), you should keep any factors whose eigenvalue is greater than 1. That is, for the sake of simplicity, parsimony, and data reduction, you should take any factors that explain more than a single variable would explain. According to the Kaiser-Guttman criterion, we would keep three factors from Figure 14.14 that have eigenvalues greater than 1. The default in SPSS is to retain factors with eigenvalues greater than 1. However, keeping factors whose eigenvalue is greater than 1 is not the most correct rule. If you let SPSS do this, you may get many factors with eigenvalues around 1 (e.g., factors with an eigenvalue ~ 1.0001) that are not adding so much that it is worth the added complexity. The Kaiser-Guttman criterion usually results in keeping too many factors. Factors with small eigenvalues around 1 could reflect error shared across variables. For instance, factors with small eigenvalues could reflect method variance (i.e., method factor), such as a self-report factor that turns up as a factor in factor analysis, but that may be useless to you as a conceptual factor of a construct of interest.

Another criterion is Cattell’s scree test (Cattell, 1966), which involves selecting the number of factors from looking at the scree plot. “Scree” refers to the rubble of stones at the bottom of a mountain. According to Cattell’s scree test, you should keep the factors before the last steep drop in eigenvalues—i.e., the factors before the rubble, where the slope approaches zero. The beginning of the scree (or rubble), where the slope approaches zero, is called the “elbow” of a scree plot. Using Cattell’s scree test, you retain the number of factors that explain the most variance prior to the explained variance drop-off, because, ultimately, you want to include only as many factors in which you gain substantially more by the inclusion of these factors. That is, you would keep the number of factors at the elbow of the scree plot minus one. If the last steep drop occurs from Factor 4 to Factor 5 and the elbow is at Factor 5, we would keep four factors. In Figure 14.14, the last steep drop in eigenvalues occurs from Factor 3 to Factor 4; the elbow of the scree plot occurs at Factor 4. We would keep the number of factors at the elbow minus one. Thus, using Cattell’s scree test, we would keep three factors based on Figure 14.14.

There are more sophisticated ways of using a scree plot, but they usually end up at a similar decision. Examples of more sophisticated tests include parallel analysis and very simple structure (VSS) plots. In a parallel analysis, you examine where the eigenvalues from observed data and random data converge, so you do not retain a factor that explains less variance than would be expected by random chance. A parallel analysis can be helpful when you have many variables and one factor accounts for the majority of the variance such that the elbow is at Factor 2 (which would result in keeping one factor), but you have theoretical reasons to select more than one factor. An example in which parallel analysis may be helpful is with neurophysiological data. For instance, parallel analysis can be helpful when conducting temporo-spatial PCA of event-related potential (ERP) data in which you want to separate multiple time windows and multiple spatial locations despite a predominant signal during a given time window and spatial location (Dien, 2012).

In general, my recommendation is to use Cattell’s scree test, and then test the factor solutions with plus or minus one factor. You should never accept PCA components with eigenvalues less than one (or factors with eigenvalues less than zero), because they are likely to be largely composed of error. If you are using maximum likelihood factor analysis, you can compare the fit of various models with model fit criteria to see which model fits best for its parsimony. A model will always fit better when you add additional parameters or factors, so you examine if there is significant improvement in model fit when adding the additional factor—that is, we keep adding complexity until additional complexity does not buy us much. Always try a factor solution that is one less and one more than suggested by Cattell’s scree test to buffer your final solution because the purpose of factor analysis is to explain things and to have interpretability. Even if all rules or indicators suggest to keep X number of factors, maybe \(\pm\) one factor helps clarify things. Even though factor analysis is empirical, theory and interpretatability should also inform decisions.

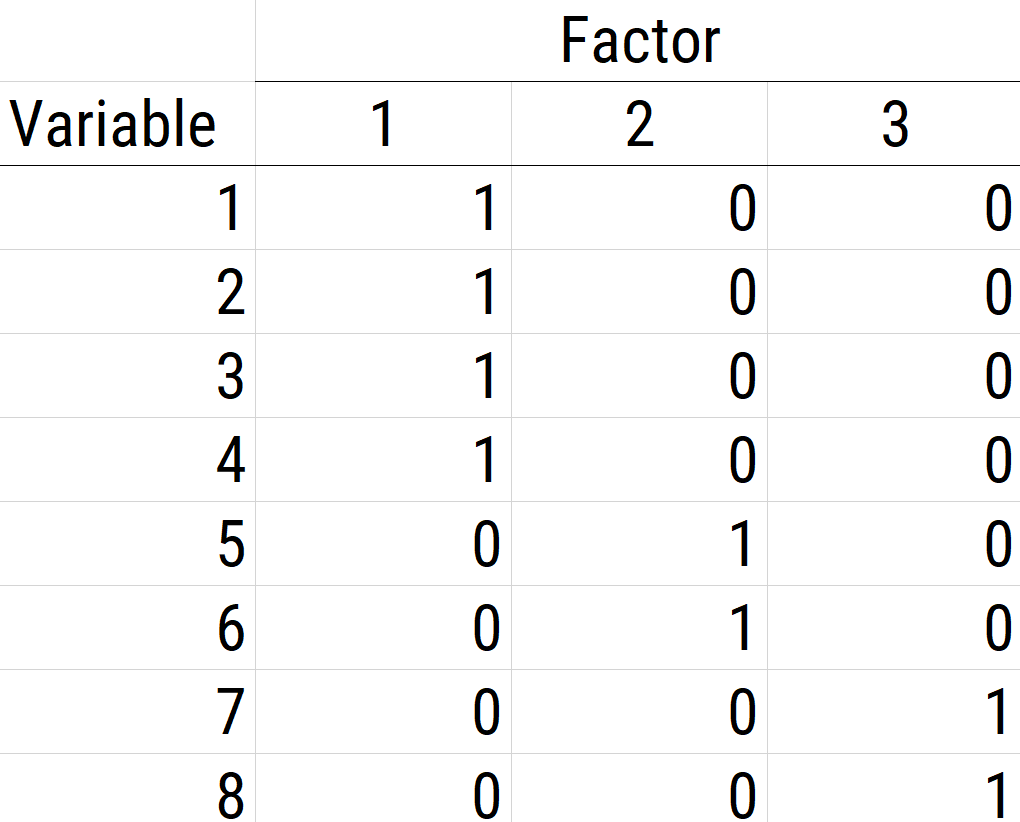

14.1.4.5 5. Factor Rotation

The next step if using EFA or PCA is, possibly, to rotate the factors to make them more interpretable and simple, which is the whole goal. To interpret the results of a factor analysis, we examine the factor matrix. The columns refer to the different factors; the rows refer to the different observed variables. The cells in the table are the factor loadings—they are basically the correlation between the variable and the factor. Our goal is to achieve a model with simple structure because it is easily interpretable. Simple structure means that every variable loads perfectly on one and only one factor, as operationalized by a matrix of factor loadings with values of one and zero and nothing else. An example of a factor matrix that follows simple structure is depicted in Figure 14.15.

Figure 14.15: Example of a Factor Matrix That Follows Simple Structure.

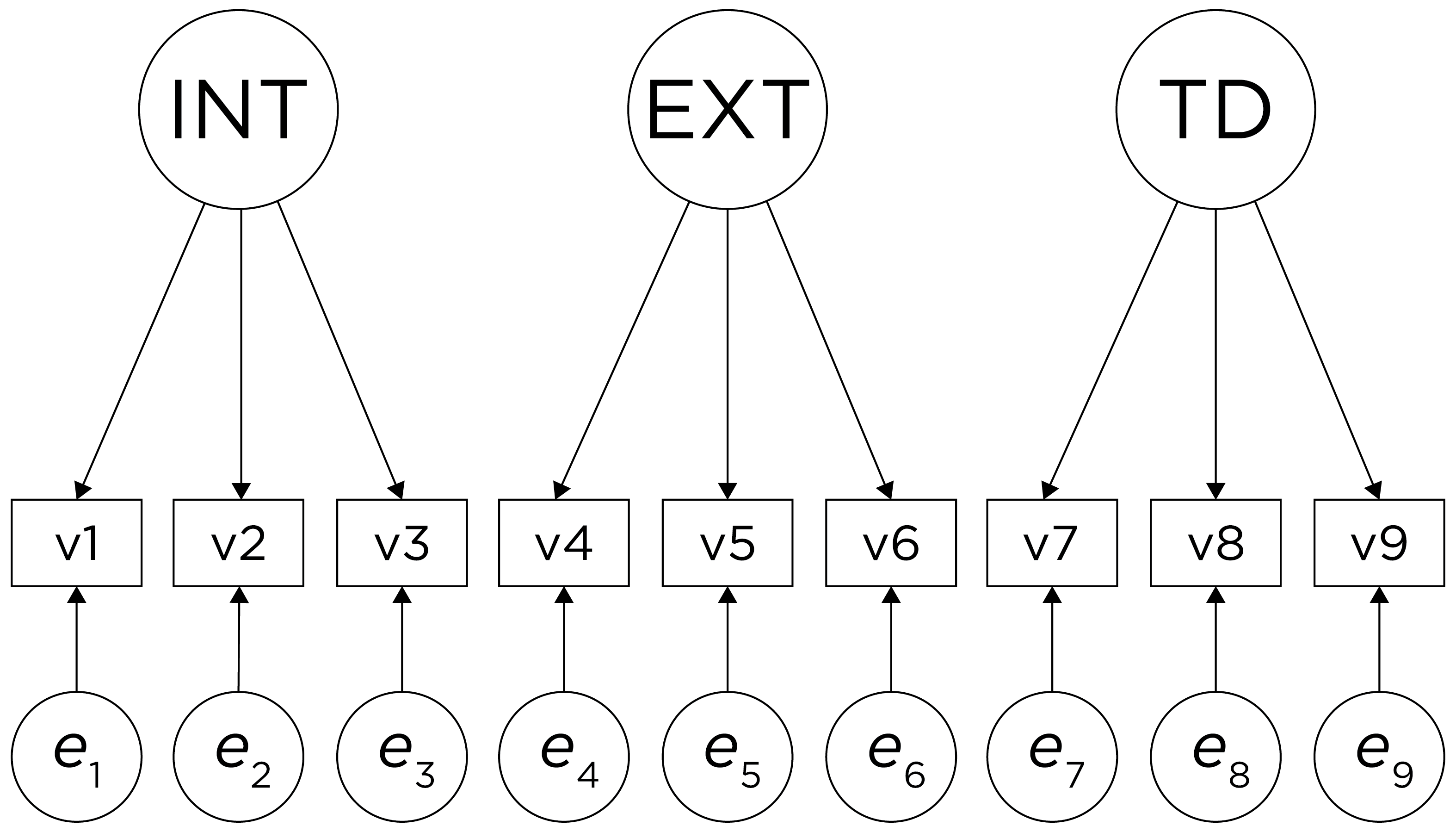

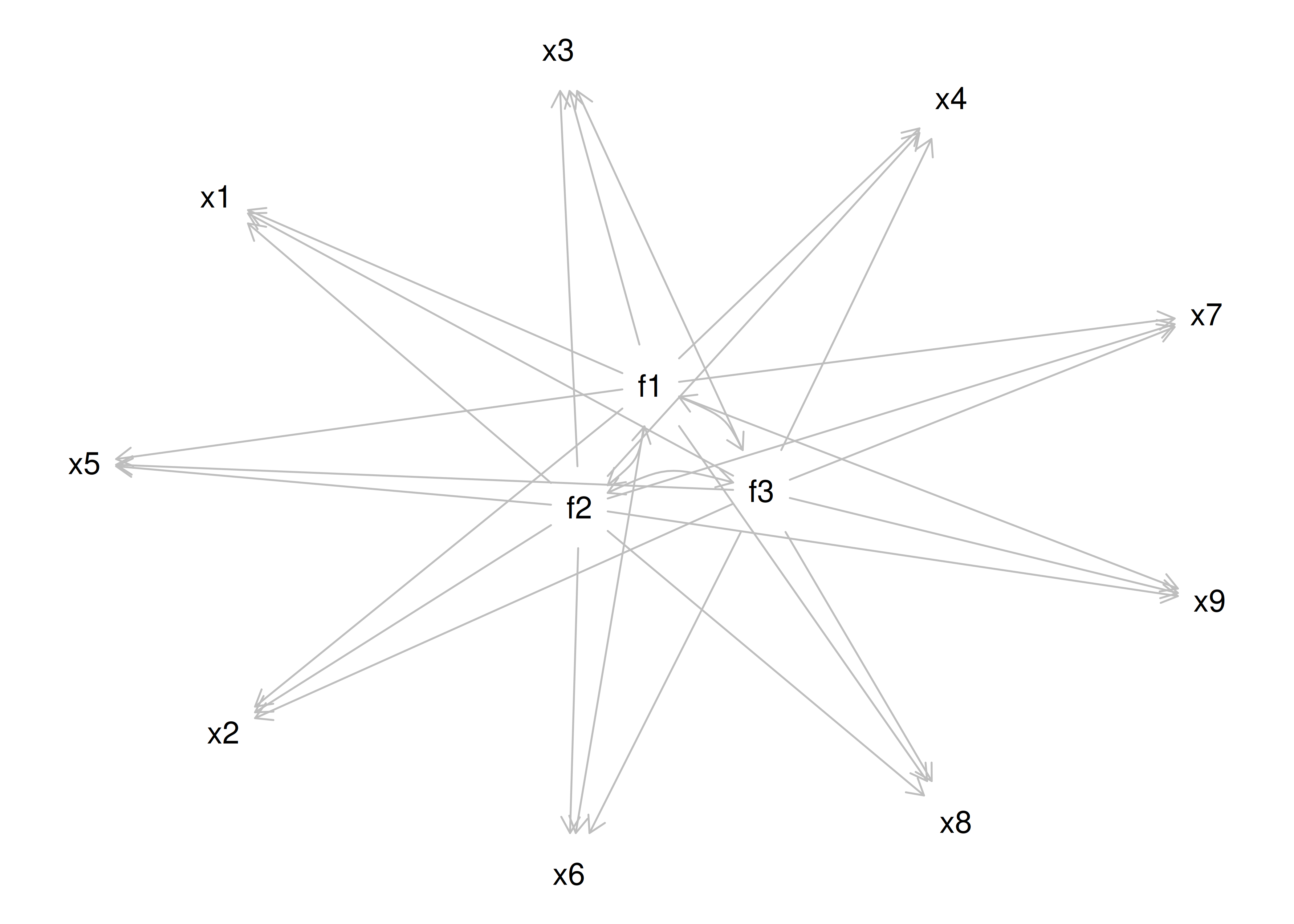

An example of a measurement model that follows simple structure is depicted in Figure 14.16. Each variable loads onto one and only one factor, which makes it easy to interpret the meaning of each factor, because a given factor represents the common variance among the items that load onto it.

Figure 14.16: Example of a Measurement Model That Follows Simple Structure. ‘INT’ = internalizing problems; ‘EXT’ = externalizing problems; ‘TD’ = thought-disordered problems.

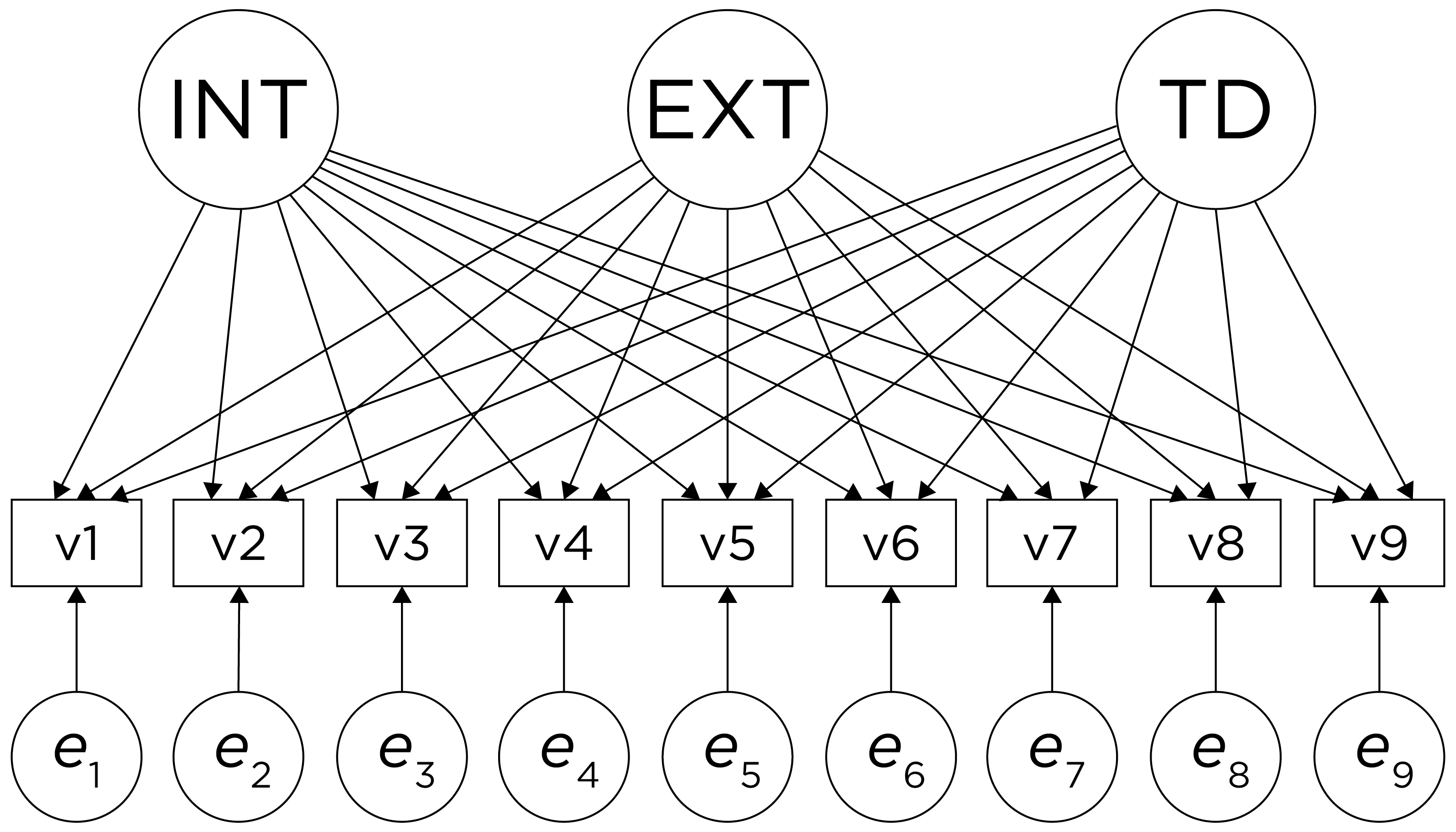

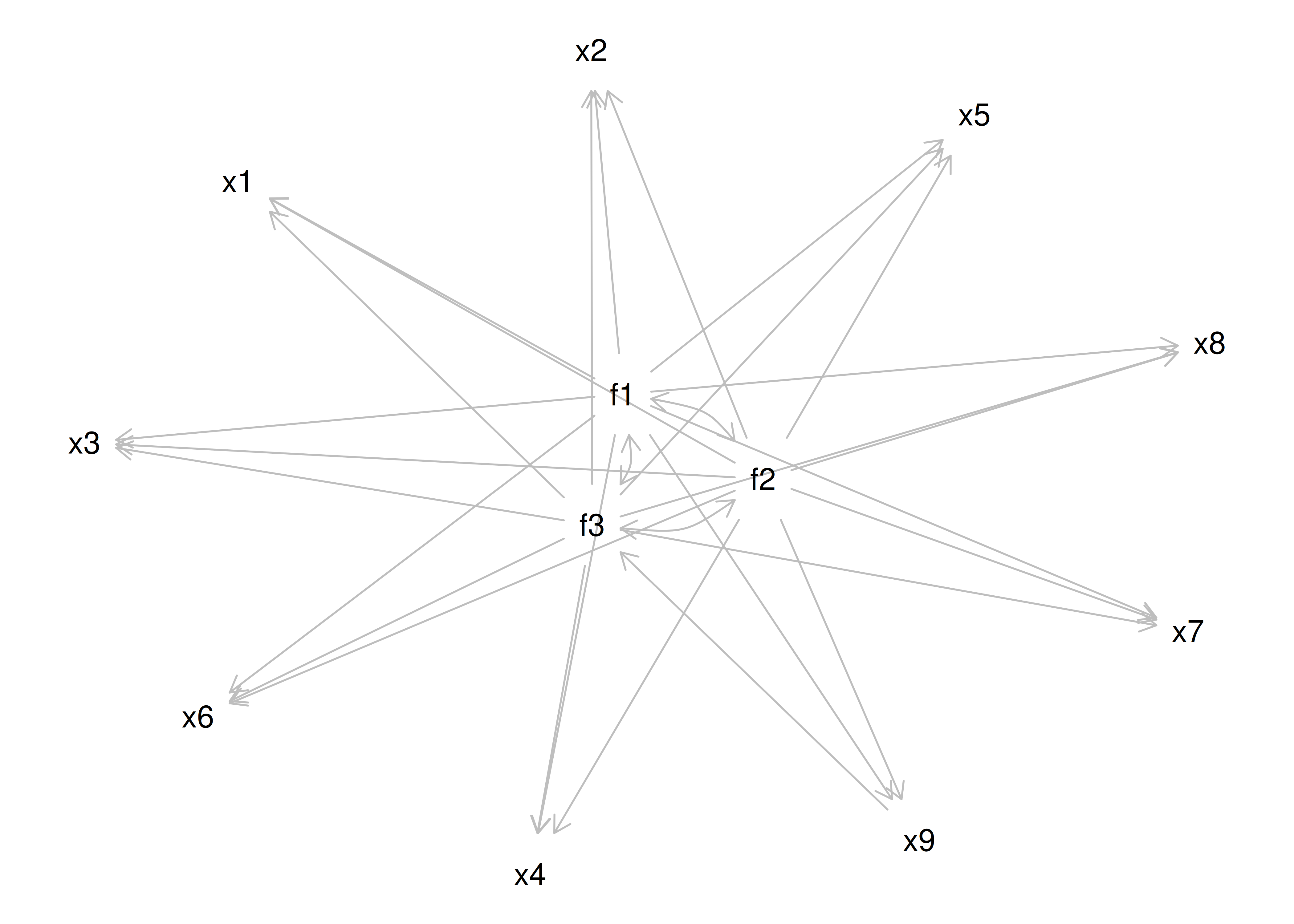

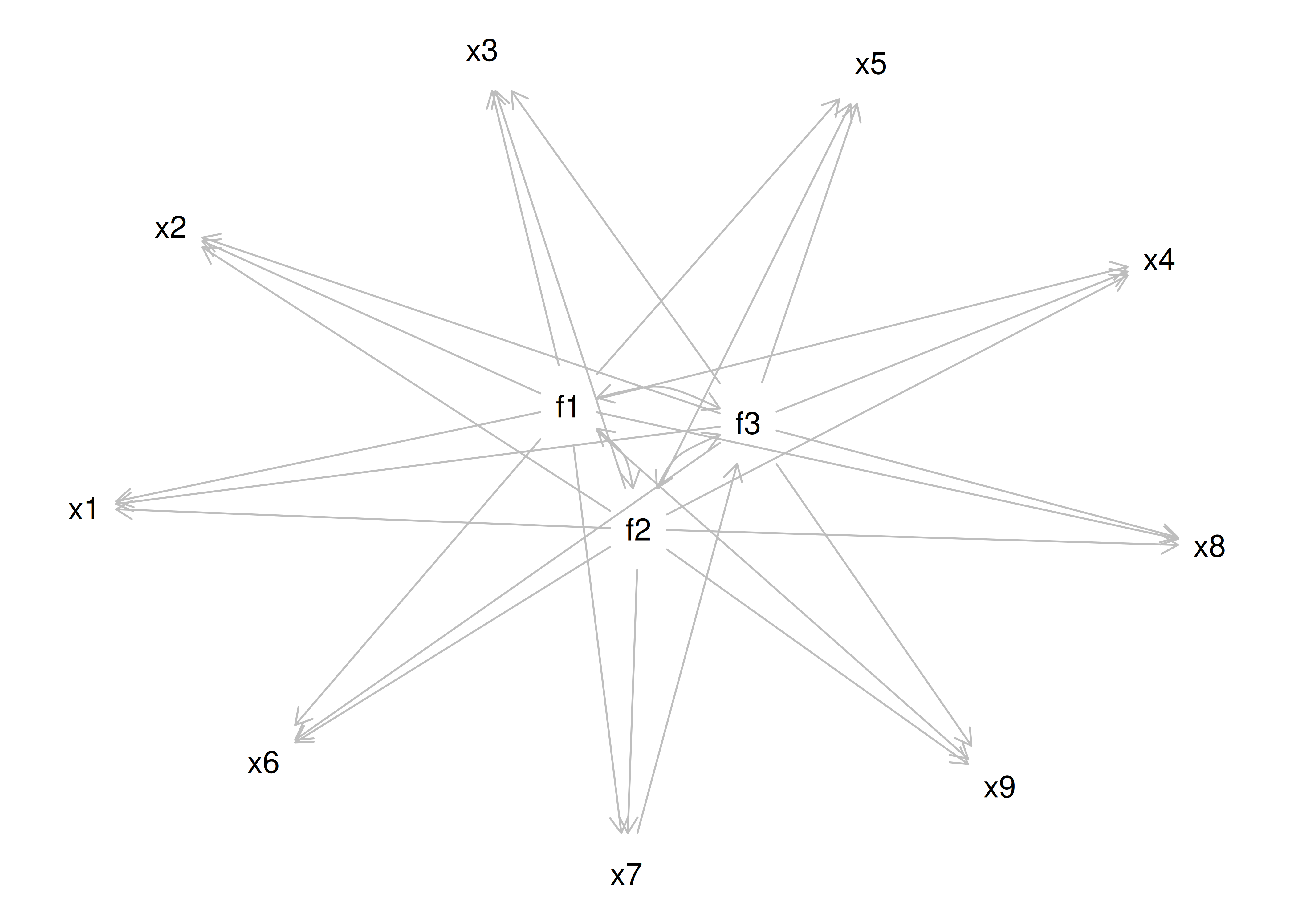

However, pure simple structure only occurs in simulations, not in real-life data. In reality, our measurement model in an unrotated factor analysis model might look like the model in Figure 14.17. In this example, the measurement model does not show simple structure because the items have cross-loadings—that is, the items load onto more than one factor. The cross-loadings make it difficult to interpret the factors, because all of the items load onto all of the factors, so the factors are not very distinct from each other, which makes it difficult to interpret what the factors mean.

Figure 14.17: Example of a Measurement Model That Does Not Follow Simple Structure. ‘INT’ = internalizing problems; ‘EXT’ = externalizing problems; ‘TD’ = thought-disordered problems.

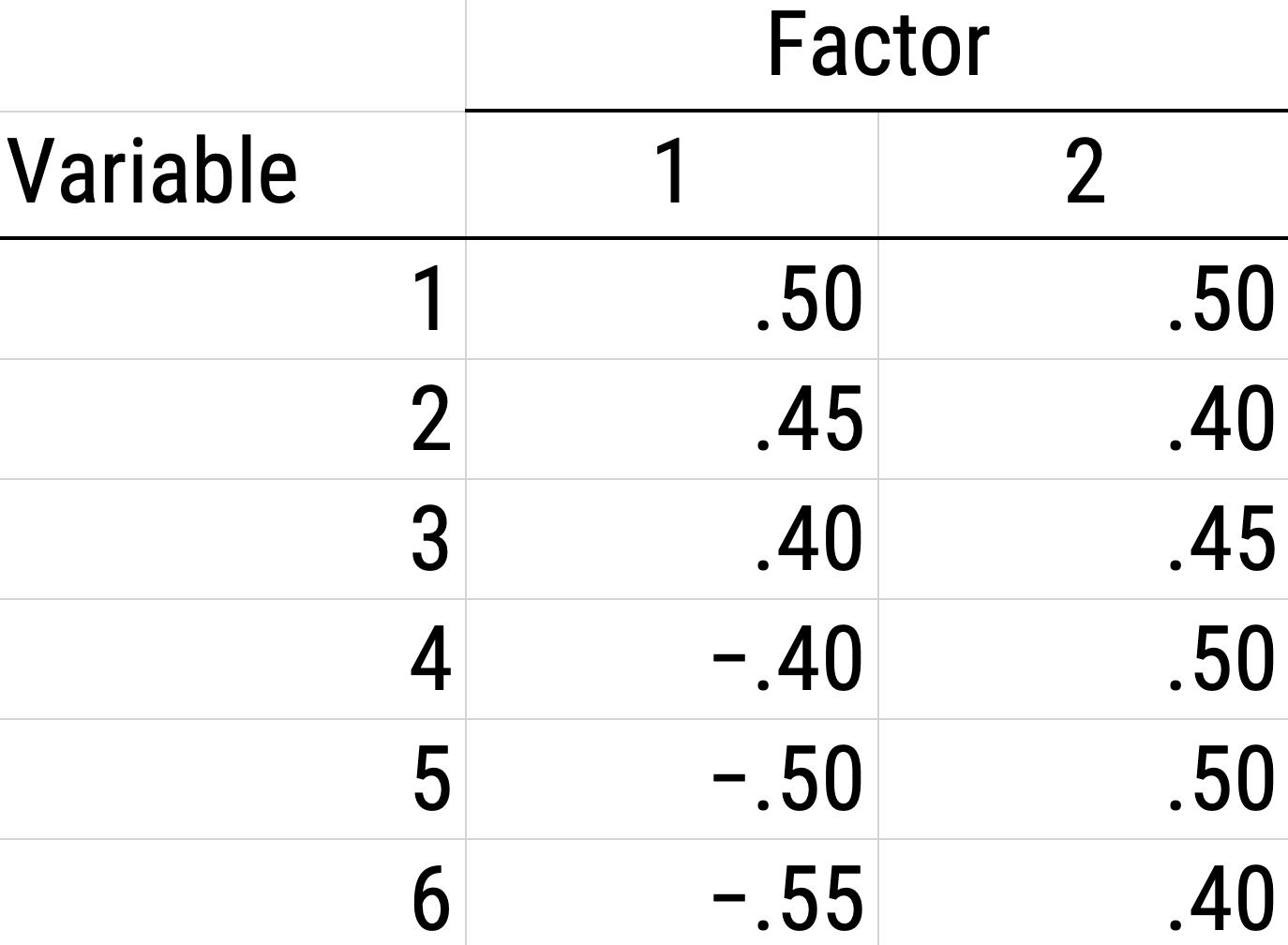

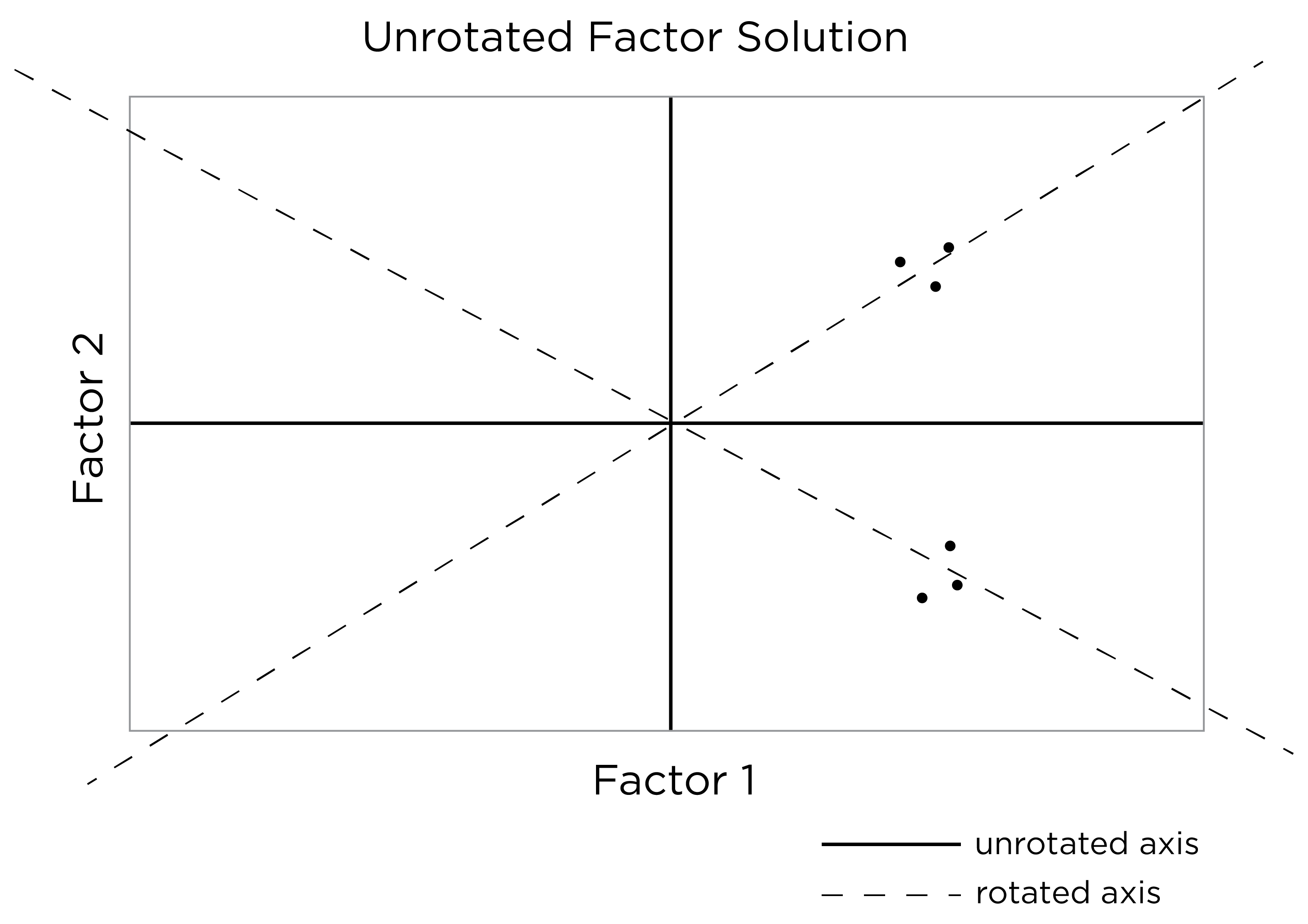

As a result of the challenges of interpretability caused by cross-loadings, factor rotations are often performed. An example of an unrotated factor matrix is in Figure 14.18.

Figure 14.18: Example of a Factor Matrix.

In the example factor matrix in Figure 14.18, the factor analysis is not very helpful—it tells us very little because it did not distinguish between the two factors. The variables have similar loadings on Factor 1 and Factor 2. An example of a unrotated factor solution is in Figure 14.19. In the figure, all of the variables are in the midst of the quadrants—they are not on the factors’ axes. Thus, the factors are not very informative.

Figure 14.19: Example of an Unrotated Factor Solution.

As a result, to improve the interpretability of the factor analysis, we can do what is called rotation. Rotation leverages the idea that there are infinite solutions to the factor analysis model that fit equally well. Rotation involves changing the orientation of the factors by changing the axes so that variables end up with very high (close to one or negative one) or very low (close to zero) loadings, so that it is clear which factors include which variables (and which factors each variable most strongly loads onto). That is, rotation rescales the factors and tries to identify the ideal solution (factor) for each variable. Rotation occurs by changing the variables’ loadings while keeping the structure of correlations among the variables intact (Field et al., 2012). Moreover, rotation does not change the variance explained by factors. Rotation searches for simple structure and keeps searching until it finds a minimum (i.e., the closest as possible to simple structure). After rotation, if the rotation was successful for imposing simple structure, each factor will have loadings close to one (or negative one) for some variables and close to zero for other variables. The goal of factor rotation is to achieve simple structure, to help make it easier to interpret the meaning of the factors.

To perform factor rotation, orthogonal rotations are often used. Orthogonal rotations make the rotated factors uncorrelated. An example of a commonly used orthogonal rotation is varimax rotation. Varimax rotation maximizes the sum of the variance of the squared loadings (i.e., so that items have either a very high or very low loading on a factor) and yields axes with a 90-degree angle.

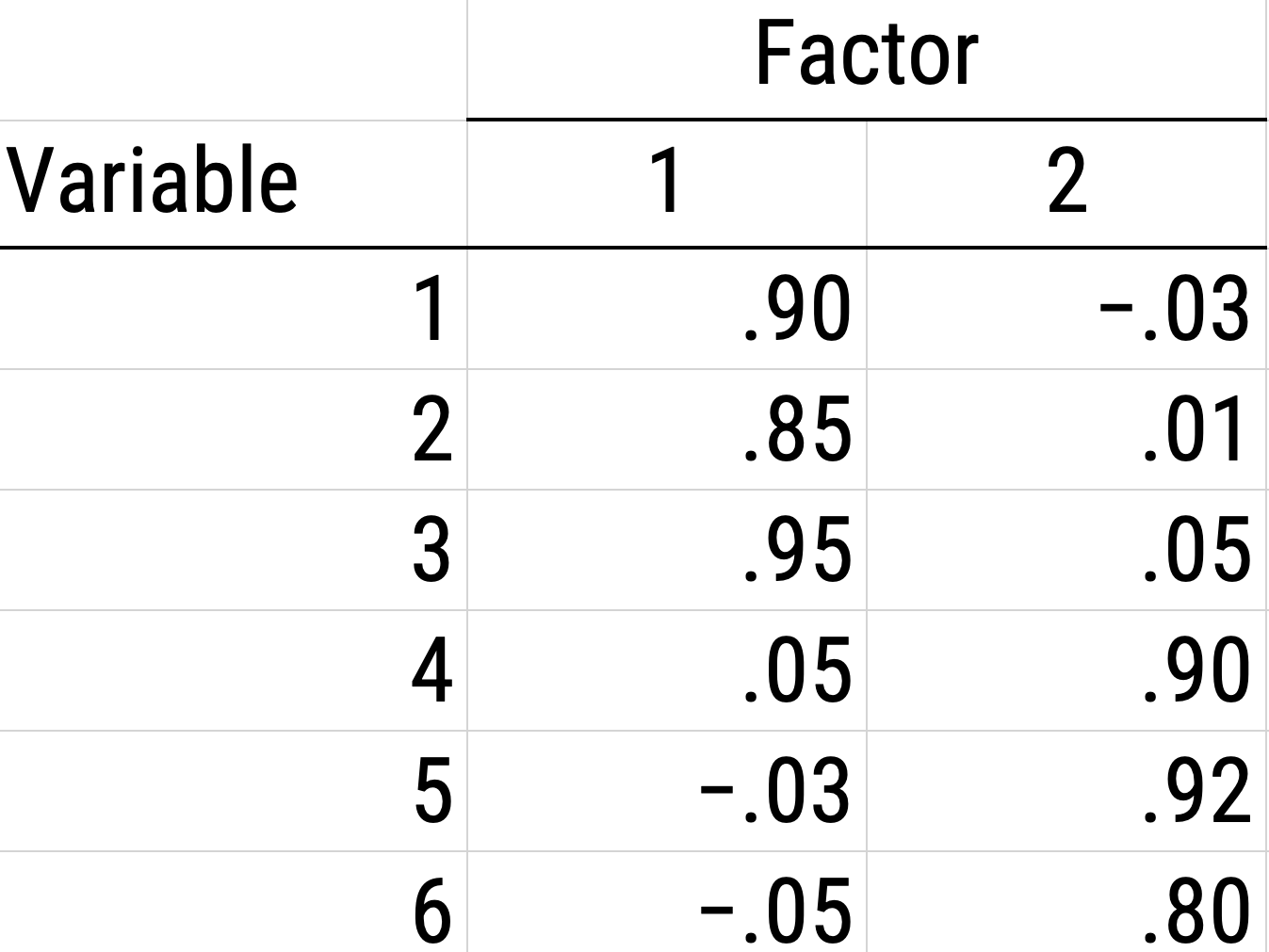

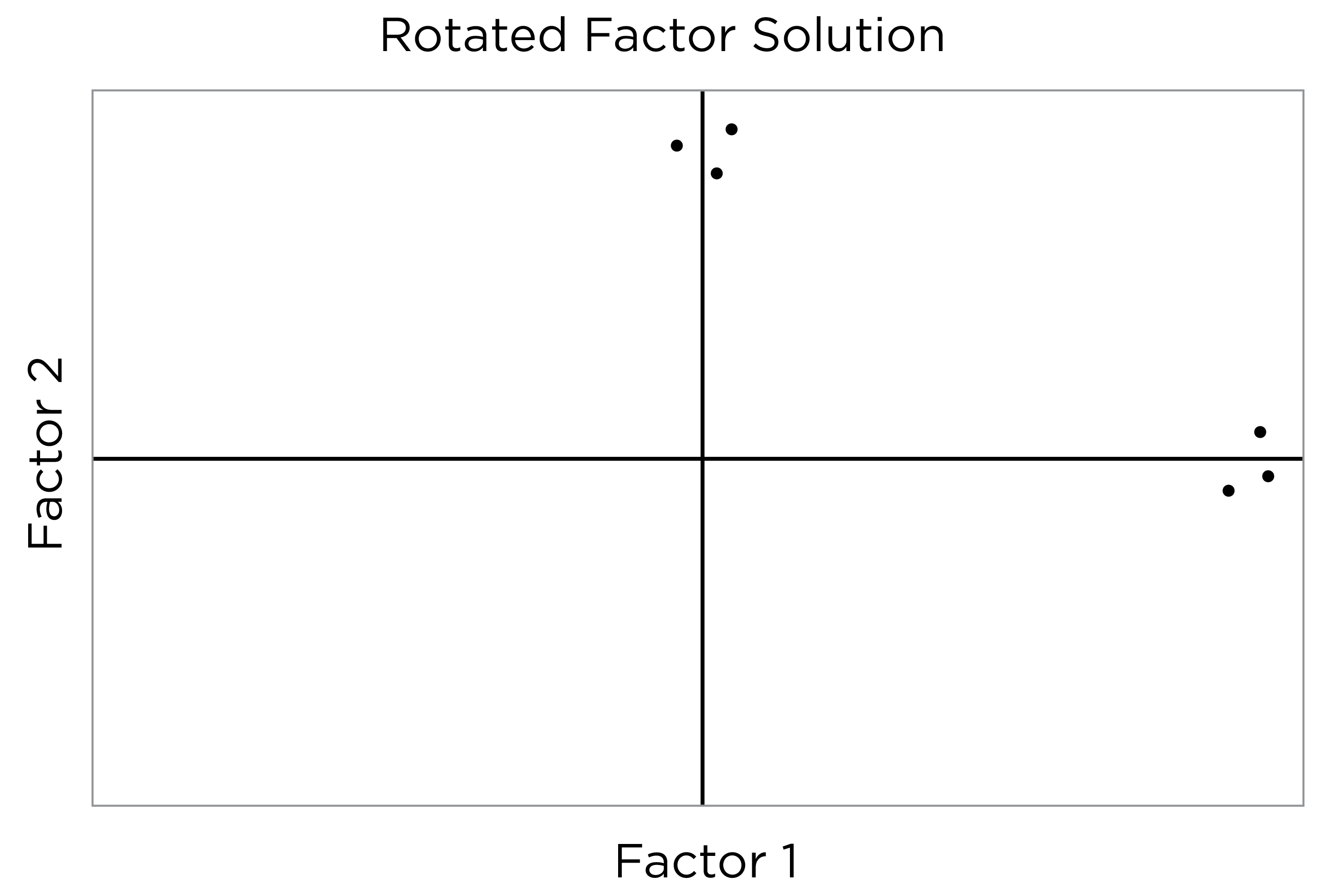

An example of a factor matrix following an orthogonal rotation is depicted in Figure 14.20. An example of a factor solution following an orthogonal rotation is depicted in Figure 14.21.

Figure 14.20: Example of a Rotated Factor Matrix.

Figure 14.21: Example of a Rotated Factor Solution.

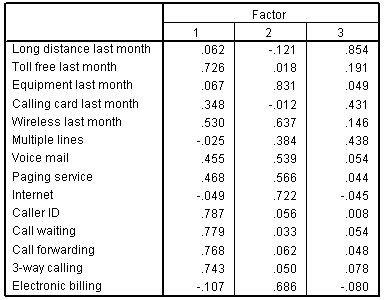

An example of a factor matrix from SPSS following an orthogonal rotation is depicted in Figure 14.22.

Figure 14.22: Example of a Rotated Factor Matrix From SPSS.

An example of a factor structure from an orthogonal rotation is in Figure 14.23.

Figure 14.23: Example of a Factor Structure From an Orthogonal Rotation.

Sometimes, however, the two factors and their constituent variables may be correlated. Examples of two correlated factors may be depression and anxiety. When the two factors are correlated in reality, if we make them uncorrelated, this would result in an inaccurate model. Oblique rotation allows for factors to be correlated and yields axes with an angle of less than 90 degrees. However, if the factors have low correlation (e.g., .2 or less), you can likely continue with orthogonal rotation. Nevertheless, just because an oblique rotation allows for correlated factors does not mean that the factors will be correlated, so oblique rotation provides greater flexibility than orthogonal rotation. An example of a factor structure from an oblique rotation is in Figure 14.24. Results from an oblique rotation are more complicated than orthogonal rotation—they provide lots of output and are more complicated to interpret. In addition, oblique rotation might not yield a smooth answer if you have a relatively small sample size.

Figure 14.24: Example of a Factor Structure From an Oblique Rotation.

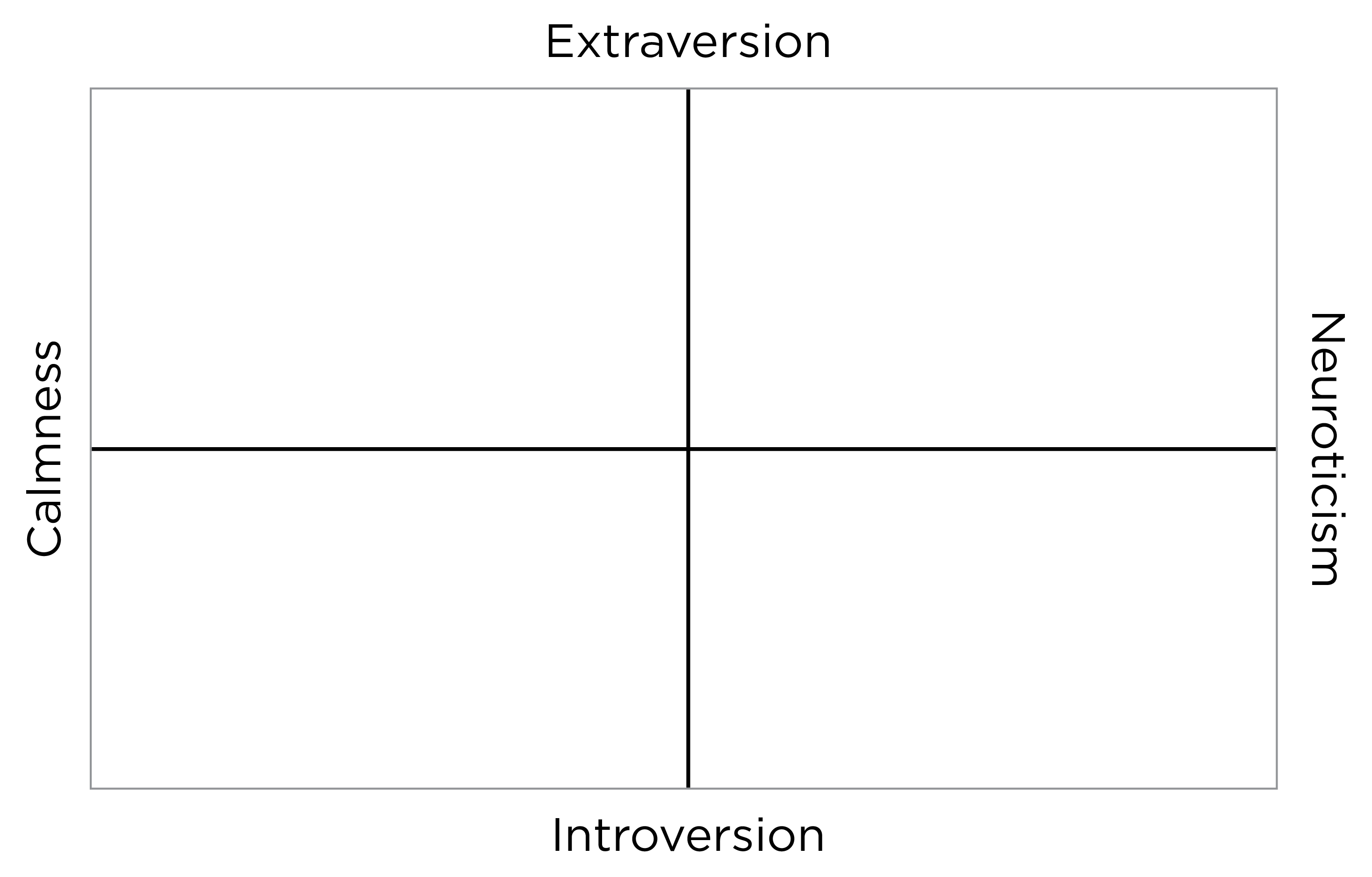

As an example of rotation based on interpretability, consider the Five-Factor Model of Personality (the Big Five), which goes by the acronym, OCEAN: Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. Although the five factors of personality are somewhat correlated, we can use rotation to ensure they are maximally independent. Upon rotation, extraversion and neuroticism are essentially uncorrelated, as depicted in Figure 14.25. The other pole of extraversion is intraversion and the other pole of neuroticism might be emotional stability or calmness.

Figure 14.25: Example of a Factor Rotation of Neuroticism and Extraversion.

Simple structure is achieved when each variable loads highly onto as few factors as possible (i.e., each item has only one significant or primary loading). Oftentimes this is not the case, so we choose our rotation method in order to decide if the factors can be correlated (an oblique rotation) or if the factors will be uncorrelated (an orthogonal rotation). If the factors are not correlated with each other, use an orthogonal rotation. The correlation between an item and a factor is a factor loading, which is simply a way to ask how much a variable is correlated with the underlying factor. However, its interpretation is more complicated if there are correlated factors!

An orthogonal rotation (e.g., varimax) can help with simplicity of interpretation because it seeks to yield simple structure without cross-loadings. Cross-loadings are instances where a variable loads onto multiple factors. My recommendation would always be to use an orthogonal rotation if you have reason to believe that finding simple structure in your data is possible; otherwise, the factors are extremely difficult to interpret—what exactly does a cross-loading even mean? However, you should always try an oblique rotation, too, to see how strongly the factors are correlated. If the factors are not correlated, an oblique rotation will resolve to yield similar results as an orthogonal rotation (and thus may be preferred over othogonal rotation given its flexibility). Examples of oblique rotations include oblimin and promax.

14.1.4.6 6. Model Selection and Interpretation

The next step of factor analysis is selecting and interpreting the model. One data matrix can lead to many different (correct) models—you must choose one based on the factor structure and theory. Use theory to interpret the model and label the factors. When interpreting others’ findings, do not rely just on the factor labels—look at the actual items to determine what they assess. What they are called matters much less than what the actual items are! This calls to mind the jingle-jangle fallacy. The jingle fallacy is the erroneous assumption that two different things are the same because they have the same label; the jangle fallacy is the erroneous assumption that two identical or near-identical things are different because they have different labels. Thus, it is important to evaluate the measures and what they actually assess, and not just to rely on labels and what they intend to assess.

14.1.5 The Downfall of Factor Analysis

The downfall of factor analysis is cross-validation. Cross-validating a factor structure would mean getting the same factor structure with a new sample. We want factor structures to show good replicability across samples. However, cross-validation often falls apart. The way to attempt to replicate a factor structure in an independent sample is to use CFA to set everything up and test the hypothesized factor structure in the independent sample.

14.1.6 What to Do with Factors

What can you do with factors once you have them? In SEM, factors have meaning. You can use them as predictors, mediators, moderators, or outcomes. And, using latent factors in SEM helps disattenuate associations for measurement error, as described in Section 5.6.3. People often want to use factors outside of SEM, but there is confusion here: When researchers find that three variables load onto Factor A, the researchers often combine those three using a sum or average—but this is not accurate. If you just add or average them, this ignores the factor loadings and the error. A mean or sum score is a measurement model that assumes that all items have the same factor loading (i.e., a loading of 1) and no error (residual of 0), which is unrealistic. Another solution is to form a linear composite by adding and weighting the variables by the factor loadings, which retains the differences in correlations (i.e., a weighted sum), but this still ignores the estimated error, so it still may not be generalizable and meaningful. At the same time, weighted sums may be less generalizable than unit-weighted composites where each variable is given equal weight (Garb & Wood, 2019; Wainer, 1976) because some variability in factor loadings likely reflects sampling error.

A comparison of various measurement models is in Table 14.1.

| Measurement Model | Items’ Factor Loadings | Items’ Residuals/Error |

|---|---|---|

| Mean/Sum Score | All are the same (fixed to 1) | Assumes items are measured without error (fixed to 0) |

| PCA or Weighted Mean/Sum Score | Allowed to differ | Assumes items are measured without error (fixed to 0) |

| Latent Variable Modeling (CFA, EFA, SEM, IRT) | Allowed to differ | Estimates error for each item |

14.1.7 Missing Data Handling

The PCA default in SPSS is listwise deletion of missing data: if a participant is missing data on any variable, the subject gets excluded from the analysis, so you might end up with too few participants.

Instead, use a correlation matrix with pairwise deletion for PCA with missing data.

Maximum likelihood factor analysis can make use of all available data points for a participant, even if they are missing some data points.

Mplus, which is often used for SEM and factor analysis, will notify you if you are removing many participants in CFA/EFA.

The lavaan package (Rosseel et al., 2022) in R also notifies you if you are removing participants in CFA/SEM models.

14.1.8 Troubleshooting Improper Solutions

A PCA will always converge; however, a factor analysis model may not converge. If a factor analysis model does not converge, you will need to modify the model to get it to converge. Model identification is described here.

In addition, a factor analysis model may converge but be improper. For example, if the factor analysis model has a negative residual variance or correlation above 1, the model is improper. A negative residual variance is also called a Heywood case. Problematic parameters such as a negative residual variance or correlation above 1 can lead to problems with other parameters in the model. It is thus preferable to avoid or address improper solutions. A negative residual variance or correlation above 1 could be caused by a variety of things such as a misspecified model, too many factors, too few indicators per factor, or too few participants (relative to the complexity of the model). Another issue that could arise is a non-positive definite matrix. A non-positive definite matrix could also be caused by a variety of things such as multicollinearity or singularity, variables with zero variance, too many variables relative to the sample size, or too many factors.

Here are various ways you may troubleshoot improper solutions in factor analysis:

- Modify the model to be a represent a truer representation of the data-generating process

- Simplify the model

- Estimate fewer paths/parameters

- Reduce the number of factors estimated/extracted

- Reduce the number of variables included

- Increase the sample size

- Increase the number of indicators per factor

- Examine the data for outliers

- Drop the offending item that has a negative residual variance (if theoretically justifiable because the item may be poorly performing)

- Use different starting values

- Test whether the negative residual variance is significantly different from zero; if not, can consider fixing it to zero (though this is not an ideal solution)

- Examine the data for possible outliers

- Use regularization with constraints so the residual variances do not become negative

14.2 Getting Started

14.2.1 Load Libraries

Code

library("petersenlab") #to install: install.packages("remotes"); remotes::install_github("DevPsyLab/petersenlab")

library("lavaan")

library("psych")

library("corrplot")

library("nFactors")

library("semPlot")

library("lavaan")

library("semTools")

library("dagitty")

library("BifactorIndicesCalculator") #to install: install.packages("remotes"); remotes::install_github("ddueber/BifactorIndicesCalculator")

library("kableExtra")

library("MOTE")

library("tidyverse")

library("here")

library("tinytex")

library("knitr")

library("rmarkdown")14.2.3 Prepare Data

14.2.3.1 Add Missing Data

Adding missing data to dataframes helps make examples more realistic to real-life data and helps you get in the habit of programming to account for missing data. For reproducibility, I set the seed below. Using the same seed will yield the same answer every time. There is nothing special about this particular seed.

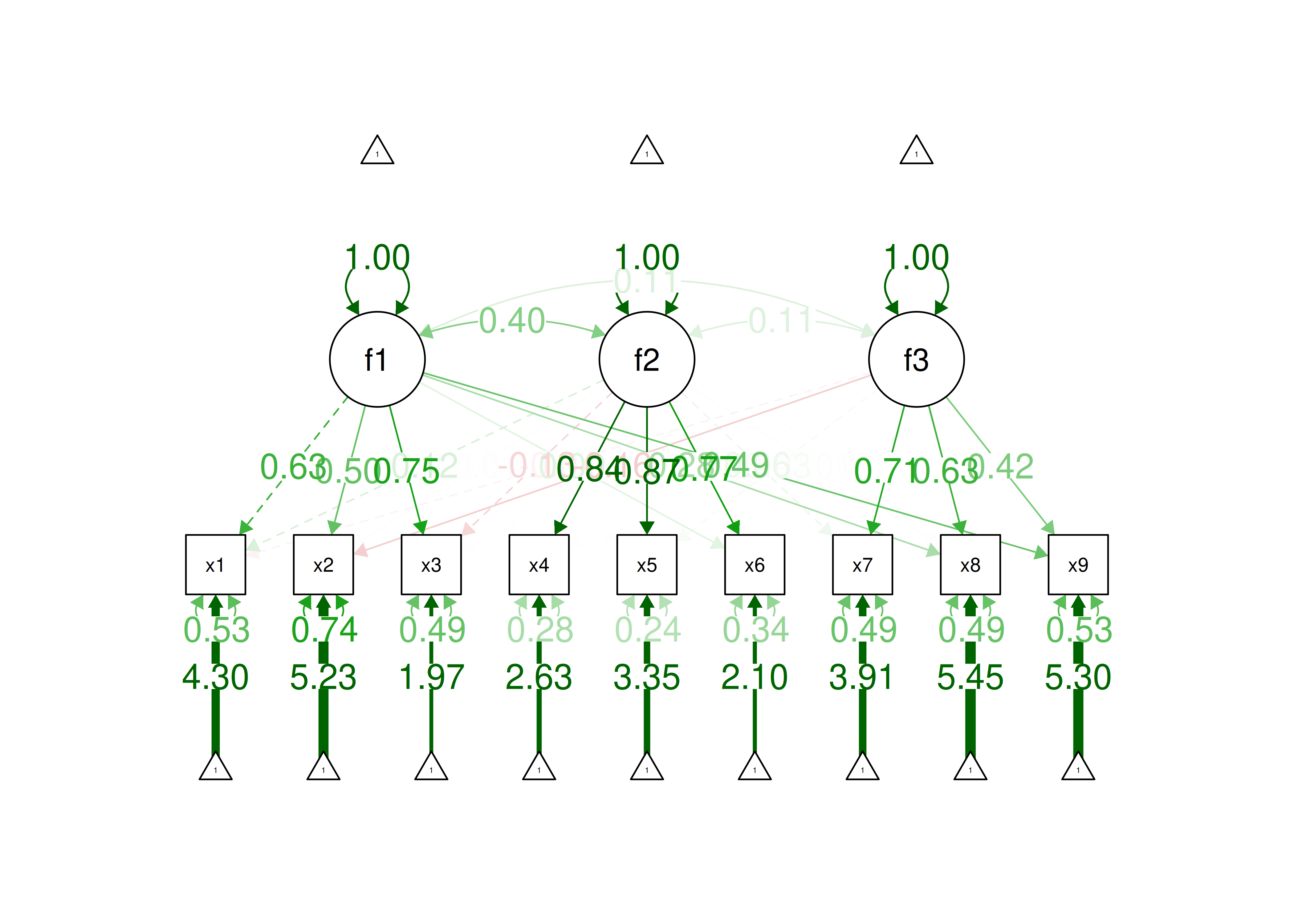

HolzingerSwineford1939 is a data set from the lavaan package (Rosseel et al., 2022) that contains mental ability test scores (x1–x9) for seventh- and eighth-grade children.

Code

set.seed(52242)

varNames <- names(HolzingerSwineford1939)

dimensionsDf <- dim(HolzingerSwineford1939)

unlistedDf <- unlist(HolzingerSwineford1939)

unlistedDf[sample(

1:length(unlistedDf),

size = .15 * length(unlistedDf))] <- NA

HolzingerSwineford1939 <- as.data.frame(matrix(

unlistedDf,

ncol = dimensionsDf[2]))

names(HolzingerSwineford1939) <- varNames

vars <- c("x1","x2","x3","x4","x5","x6","x7","x8","x9")14.3 Descriptive Statistics and Correlations

Before conducting a factor analysis, it is important examine descriptive statistics and correlations among variables.

14.3.1 Descriptive Statistics

Descriptive statistics are presented in Table ??.

Code

summaryTable <- HolzingerSwineford1939 %>%

dplyr::select(all_of(vars)) %>%

summarise(across(

everything(),

.fns = list(

n = ~ length(na.omit(.)),

missingness = ~ mean(is.na(.)) * 100,

M = ~ mean(., na.rm = TRUE),

SD = ~ sd(., na.rm = TRUE),

min = ~ min(., na.rm = TRUE),

max = ~ max(., na.rm = TRUE),

skewness = ~ psych::skew(., na.rm = TRUE),

kurtosis = ~ kurtosi(., na.rm = TRUE)),

.names = "{.col}.{.fn}")) %>%

pivot_longer(

cols = everything(),

names_to = c("variable","index"),

names_sep = "\\.") %>%

pivot_wider(

names_from = index,

values_from = value)

summaryTableTransposed <- summaryTable[-1] %>%

t() %>%

as.data.frame() %>%

setNames(summaryTable$variable) %>%

round(., digits = 2)

summaryTableTransposed14.3.2 Correlations

x1 x2 x3 x4 x5 x6 x7

x1 1.00000000 0.31671519 0.4592132 0.4009470 0.3060026 0.3204367 0.04336147

x2 0.31671519 1.00000000 0.3373084 0.1474393 0.1925946 0.1686010 -0.08744963

x3 0.45921315 0.33730843 1.0000000 0.1770624 0.1504923 0.1930589 0.08771510

x4 0.40094704 0.14743932 0.1770624 1.0000000 0.7314651 0.6796334 0.14825470

x5 0.30600259 0.19259455 0.1504923 0.7314651 1.0000000 0.6931306 0.10592715

x6 0.32043667 0.16860100 0.1930589 0.6796334 0.6931306 1.0000000 0.09881660

x7 0.04336147 -0.08744963 0.0877151 0.1482547 0.1059272 0.0988166 1.00000000

x8 0.25775028 0.09357234 0.2012953 0.1297963 0.2134497 0.1856636 0.48743045

x9 0.34289304 0.20083617 0.3959389 0.1917355 0.2605835 0.1805793 0.34622036

x8 x9

x1 0.25775028 0.3428930

x2 0.09357234 0.2008362

x3 0.20129535 0.3959389

x4 0.12979634 0.1917355

x5 0.21344970 0.2605835

x6 0.18566357 0.1805793

x7 0.48743045 0.3462204

x8 1.00000000 0.4637719

x9 0.46377193 1.0000000Correlation matrices of various types using the cor.table() function from the petersenlab package (Petersen, 2025) are in Tables 14.2, 14.3, and 14.4.

Code

| x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | |

|---|---|---|---|---|---|---|---|---|---|

| 1. x1.r | 1.00 | .32*** | .46*** | .40*** | .31*** | .32*** | .04 | .26*** | .34*** |

| 2. sig | NA | .00 | .00 | .00 | .00 | .00 | .52 | .00 | .00 |

| 3. n | 251 | 221 | 211 | 208 | 215 | 205 | 221 | 219 | 215 |

| 4. x2.r | .32*** | 1.00 | .34*** | .15* | .19*** | .17* | -.09 | .09 | .20*** |

| 5. sig | .00 | NA | .00 | .03 | .00 | .01 | .18 | .16 | .00 |

| 6. n | 221 | 265 | 225 | 227 | 228 | 216 | 234 | 227 | 229 |

| 7. x3.r | .46*** | .34*** | 1.00 | .18* | .15* | .19* | .09 | .20*** | .40*** |

| 8. sig | .00 | .00 | NA | .01 | .03 | .01 | .19 | .00 | .00 |

| 9. n | 211 | 225 | 255 | 211 | 215 | 206 | 227 | 219 | 220 |

| 10. x4.r | .40*** | .15* | .18* | 1.00 | .73*** | .68*** | .15* | .13† | .19*** |

| 11. sig | .00 | .03 | .01 | NA | .00 | .00 | .03 | .06 | .00 |

| 12. n | 208 | 227 | 211 | 252 | 216 | 209 | 224 | 217 | 215 |

| 13. x5.r | .31*** | .19*** | .15* | .73*** | 1.00 | .69*** | .11 | .21*** | .26*** |

| 14. sig | .00 | .00 | .03 | .00 | NA | .00 | .11 | .00 | .00 |

| 15. n | 215 | 228 | 215 | 216 | 259 | 217 | 226 | 222 | 219 |

| 16. x6.r | .32*** | .17* | .19* | .68*** | .69*** | 1.00 | .10 | .19* | .18* |

| 17. sig | .00 | .01 | .01 | .00 | .00 | NA | .15 | .01 | .01 |

| 18. n | 205 | 216 | 206 | 209 | 217 | 248 | 218 | 214 | 209 |

| 19. x7.r | .04 | -.09 | .09 | .15* | .11 | .10 | 1.00 | .49*** | .35*** |

| 20. sig | .52 | .18 | .19 | .03 | .11 | .15 | NA | .00 | .00 |

| 21. n | 221 | 234 | 227 | 224 | 226 | 218 | 266 | 231 | 226 |

| 22. x8.r | .26*** | .09 | .20*** | .13† | .21*** | .19* | .49*** | 1.00 | .46*** |

| 23. sig | .00 | .16 | .00 | .06 | .00 | .01 | .00 | NA | .00 |

| 24. n | 219 | 227 | 219 | 217 | 222 | 214 | 231 | 259 | 222 |

| 25. x9.r | .34*** | .20*** | .40*** | .19*** | .26*** | .18* | .35*** | .46*** | 1.00 |

| 26. sig | .00 | .00 | .00 | .00 | .00 | .01 | .00 | .00 | NA |

| 27. n | 215 | 229 | 220 | 215 | 219 | 209 | 226 | 222 | 257 |

| x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | |

|---|---|---|---|---|---|---|---|---|---|

| 1. x1 | 1.00 | ||||||||

| 2. x2 | .32*** | 1.00 | |||||||

| 3. x3 | .46*** | .34*** | 1.00 | ||||||

| 4. x4 | .40*** | .15* | .18* | 1.00 | |||||

| 5. x5 | .31*** | .19*** | .15* | .73*** | 1.00 | ||||

| 6. x6 | .32*** | .17* | .19* | .68*** | .69*** | 1.00 | |||

| 7. x7 | .04 | -.09 | .09 | .15* | .11 | .10 | 1.00 | ||

| 8. x8 | .26*** | .09 | .20*** | .13† | .21*** | .19* | .49*** | 1.00 | |

| 9. x9 | .34*** | .20*** | .40*** | .19*** | .26*** | .18* | .35*** | .46*** | 1.00 |

| x1 | x2 | x3 | x4 | x5 | x6 | x7 | x8 | x9 | |

|---|---|---|---|---|---|---|---|---|---|

| 1. x1 | 1.00 | ||||||||

| 2. x2 | .32 | 1.00 | |||||||

| 3. x3 | .46 | .34 | 1.00 | ||||||

| 4. x4 | .40 | .15 | .18 | 1.00 | |||||

| 5. x5 | .31 | .19 | .15 | .73 | 1.00 | ||||

| 6. x6 | .32 | .17 | .19 | .68 | .69 | 1.00 | |||

| 7. x7 | .04 | -.09 | .09 | .15 | .11 | .10 | 1.00 | ||

| 8. x8 | .26 | .09 | .20 | .13 | .21 | .19 | .49 | 1.00 | |

| 9. x9 | .34 | .20 | .40 | .19 | .26 | .18 | .35 | .46 | 1.00 |

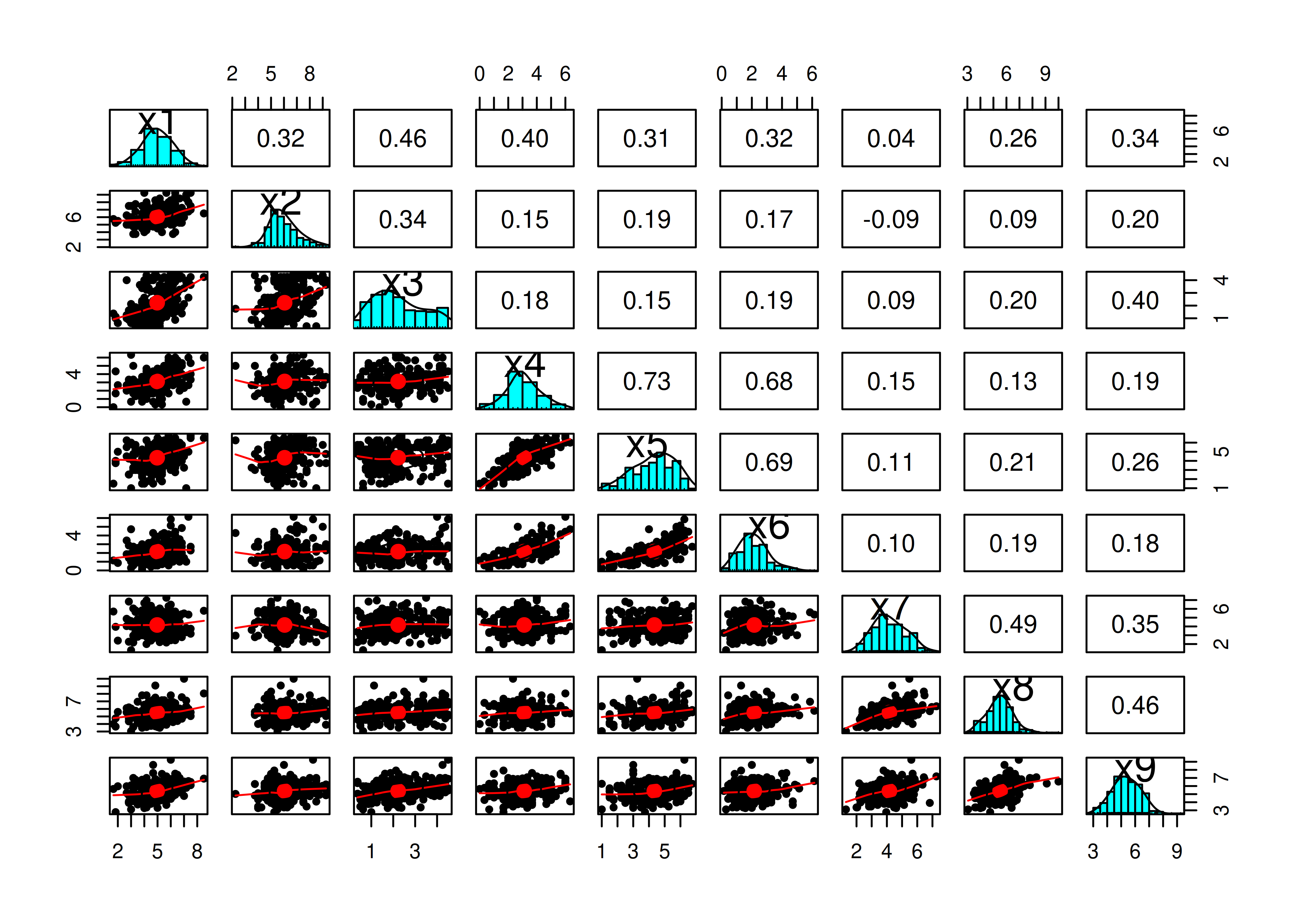

Pairs panel plots were generated using the psych package (Revelle, 2022).

Correlation plots were generated using the corrplot package (Wei & Simko, 2021).

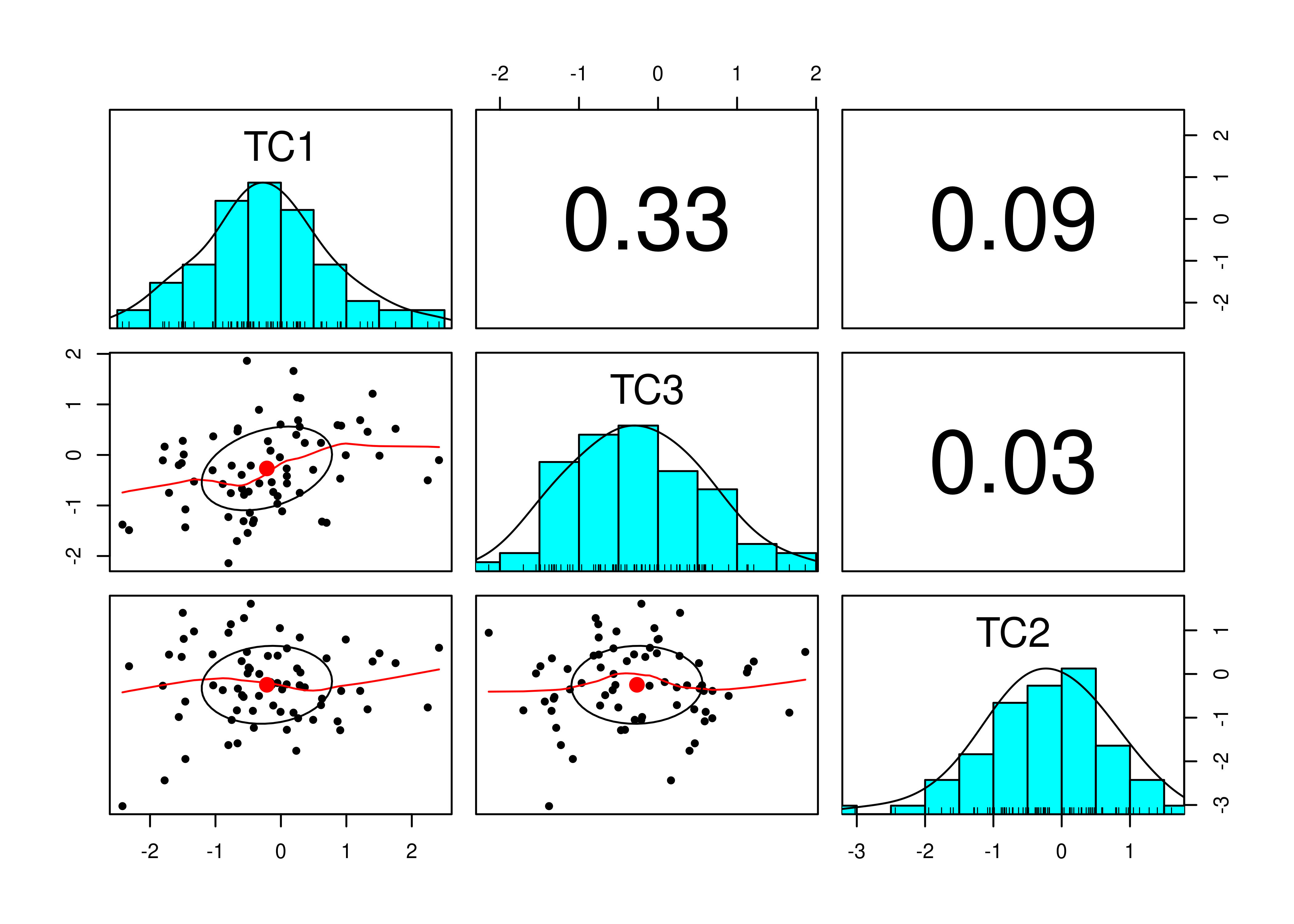

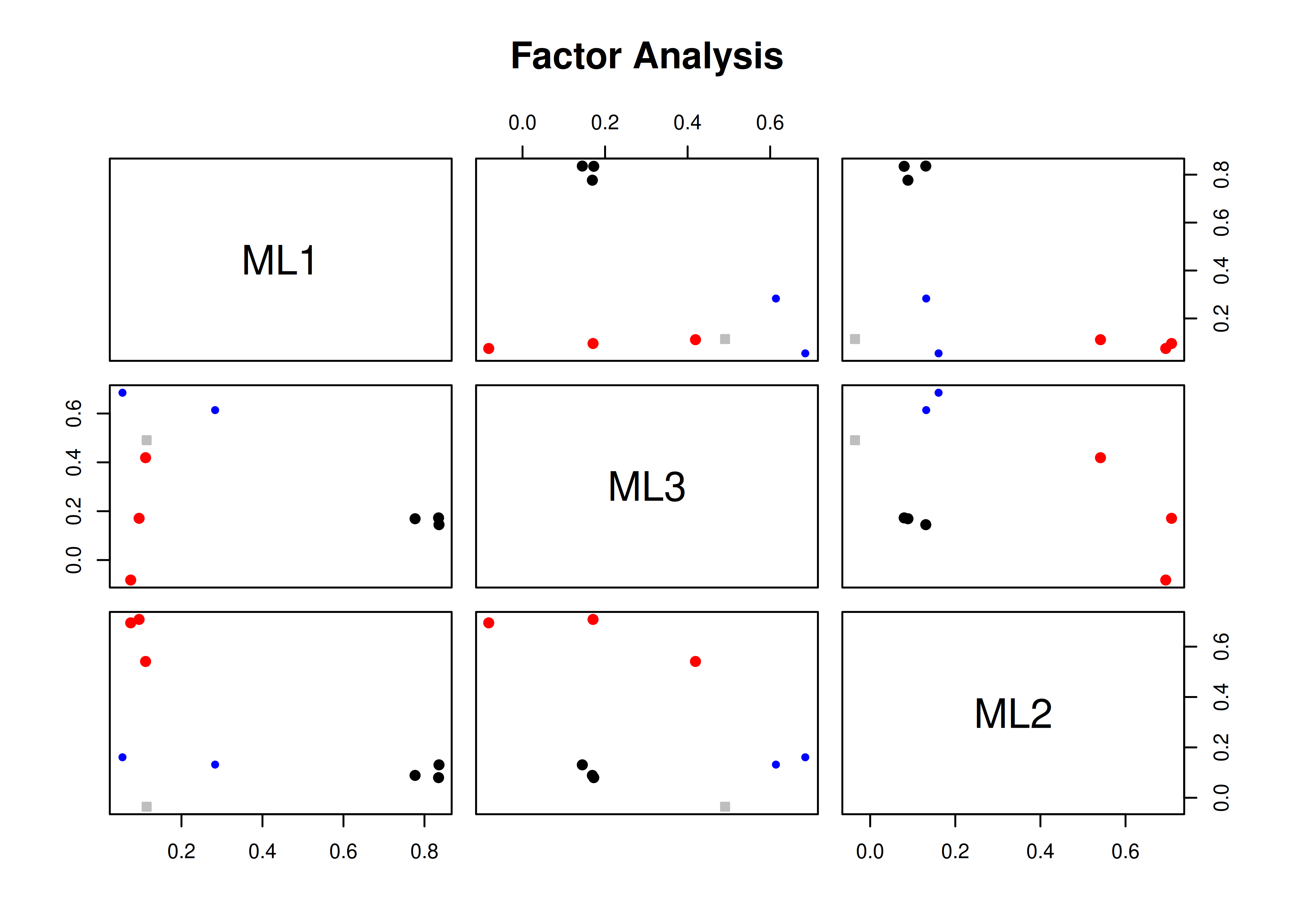

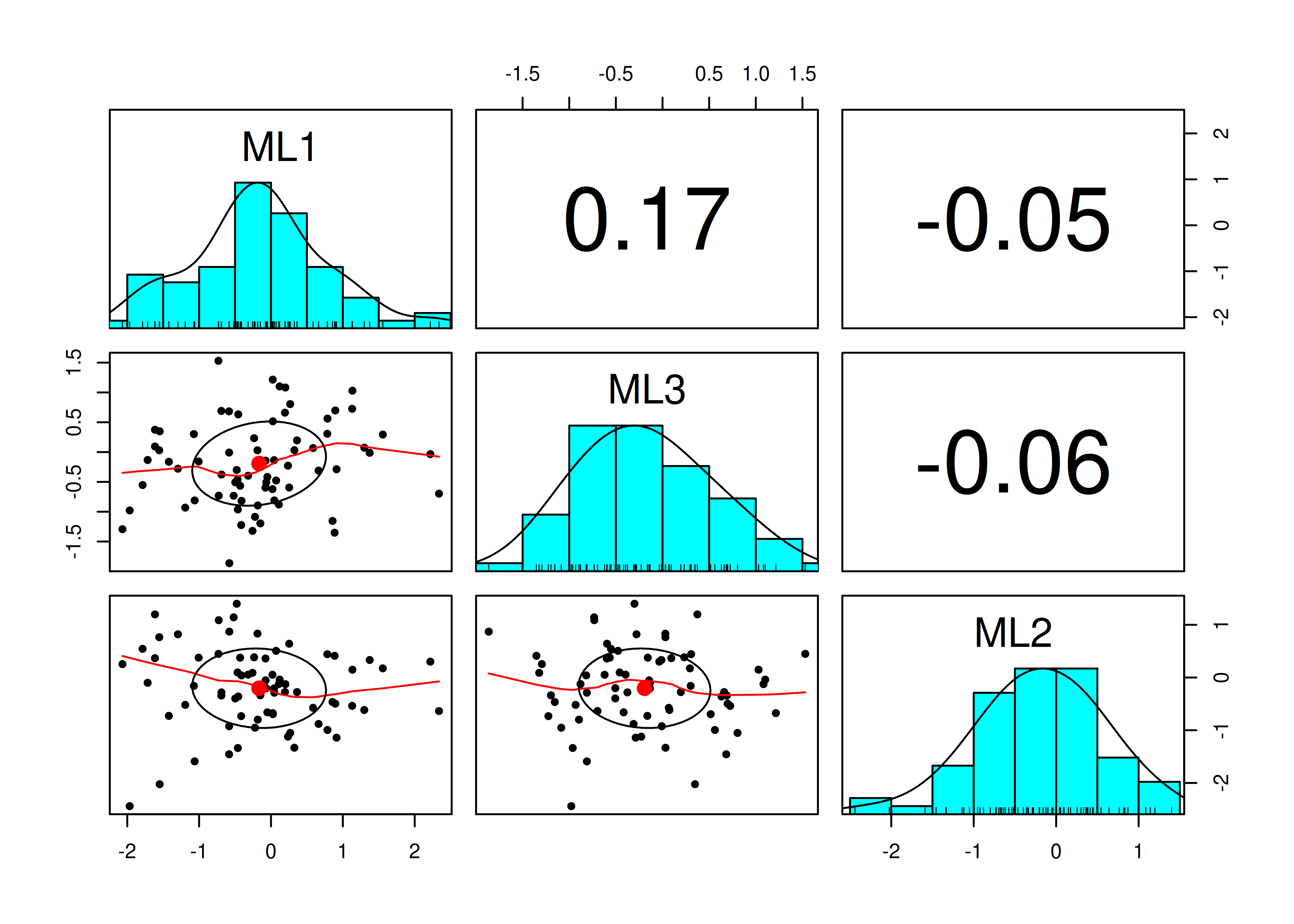

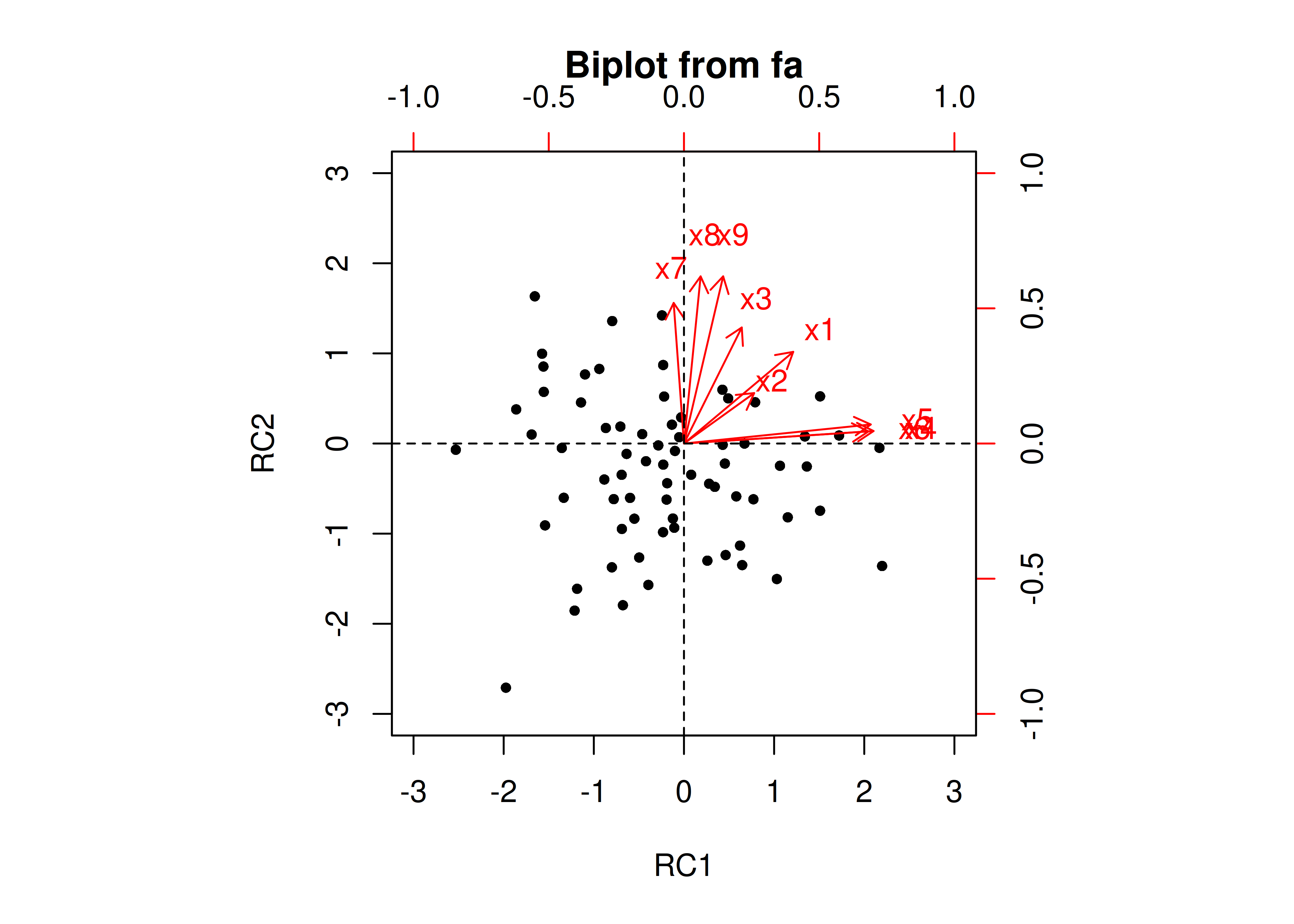

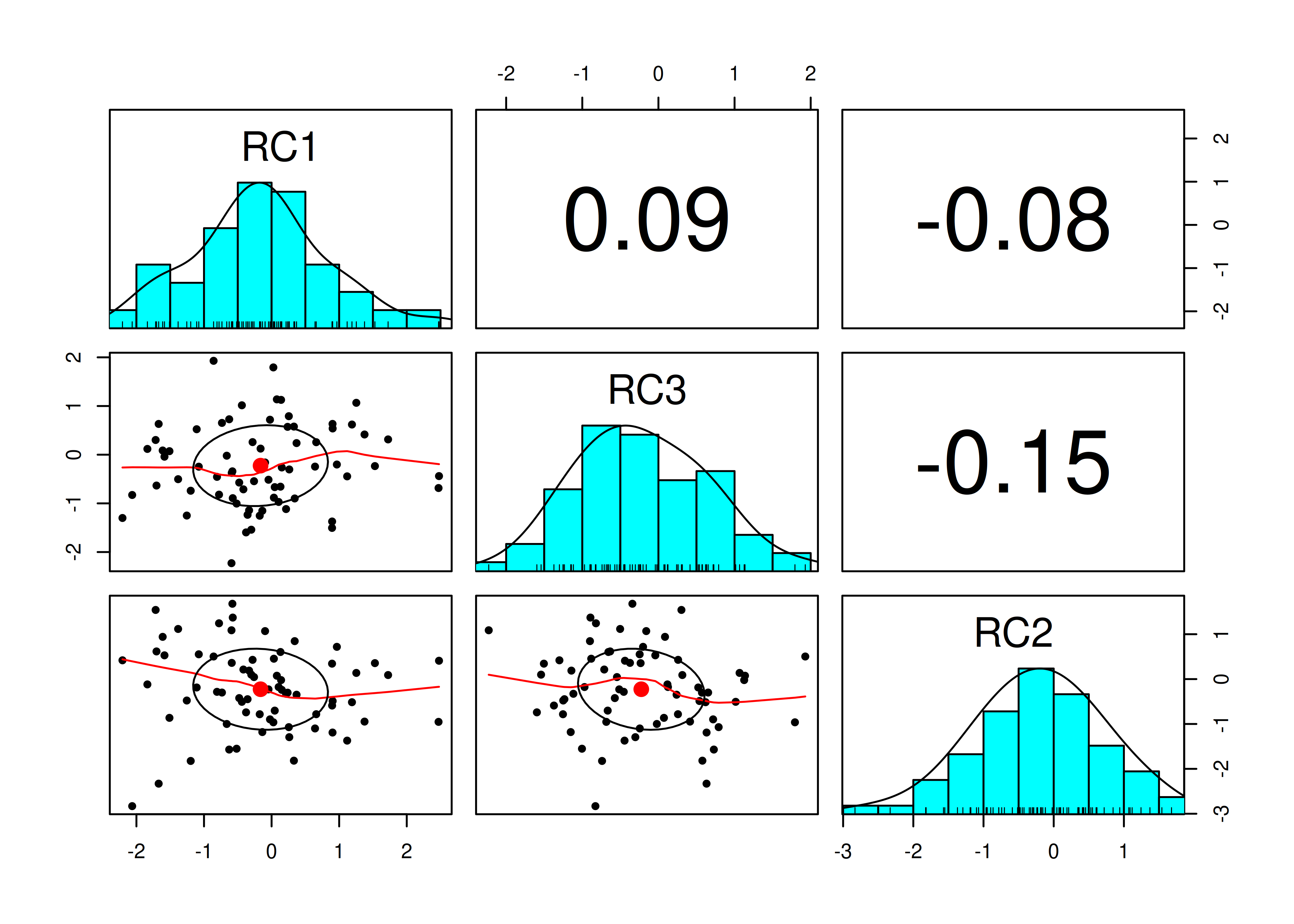

A pairs panel plot is in Figure 14.26.

Figure 14.26: Pairs Panel Plot.

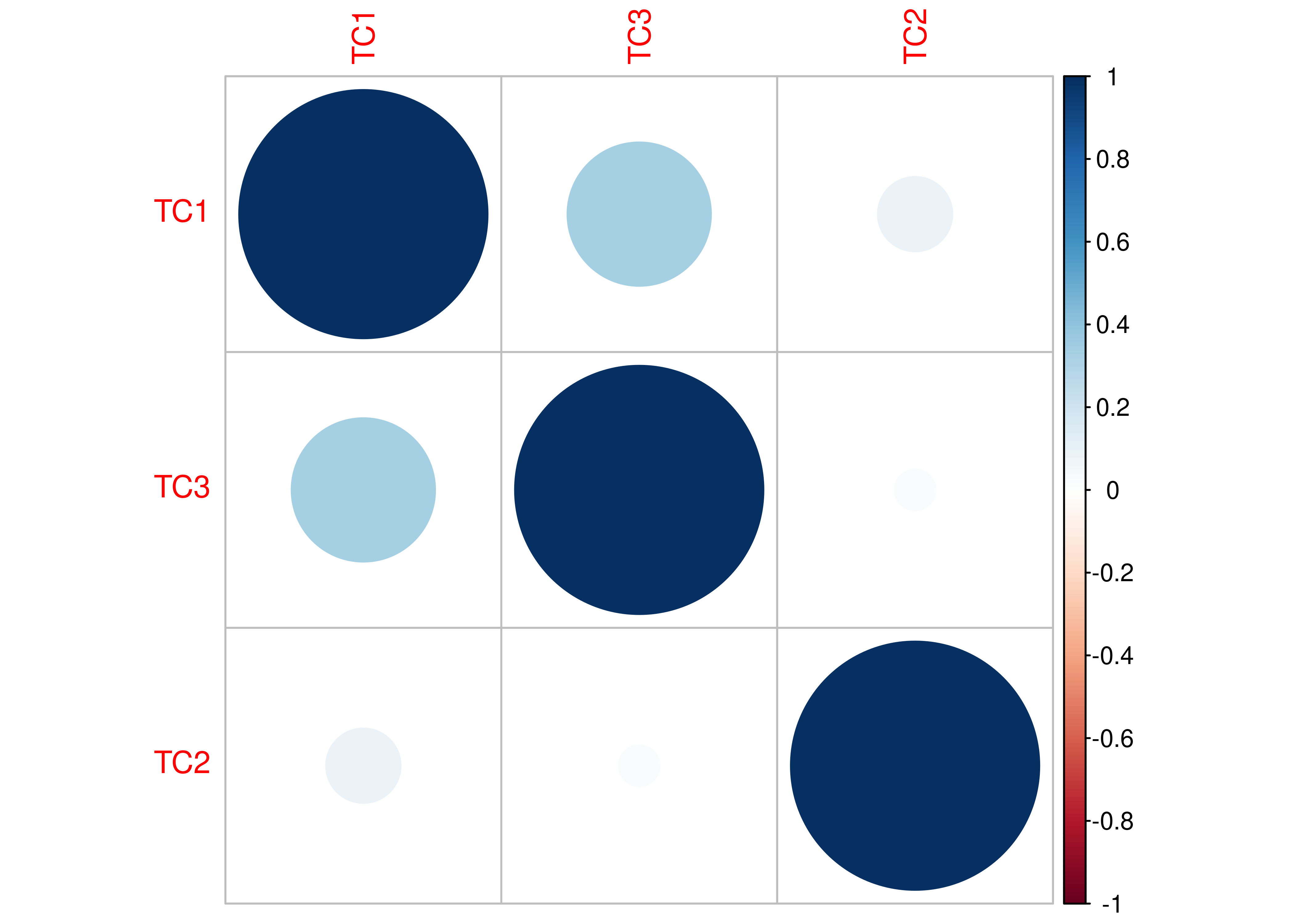

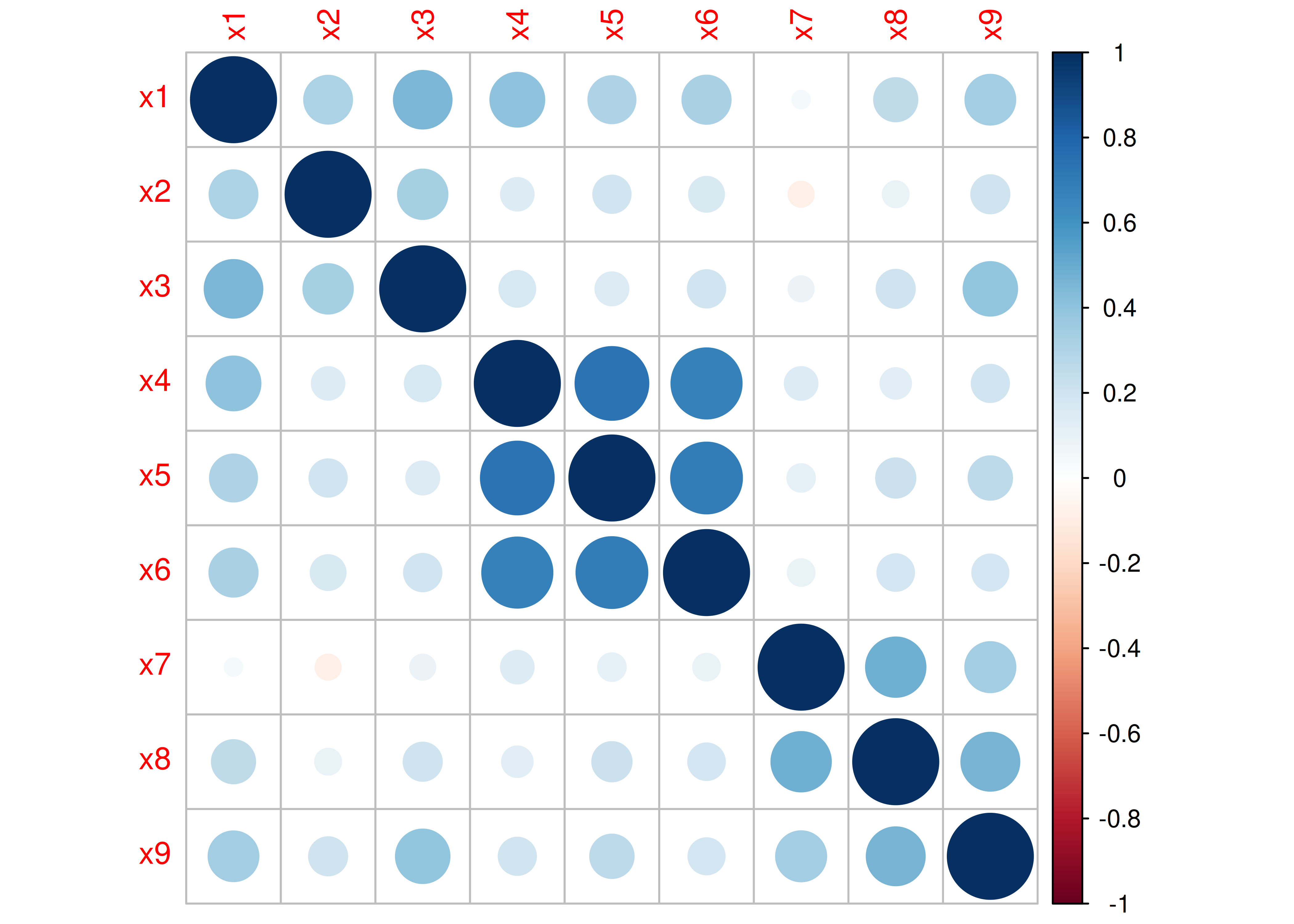

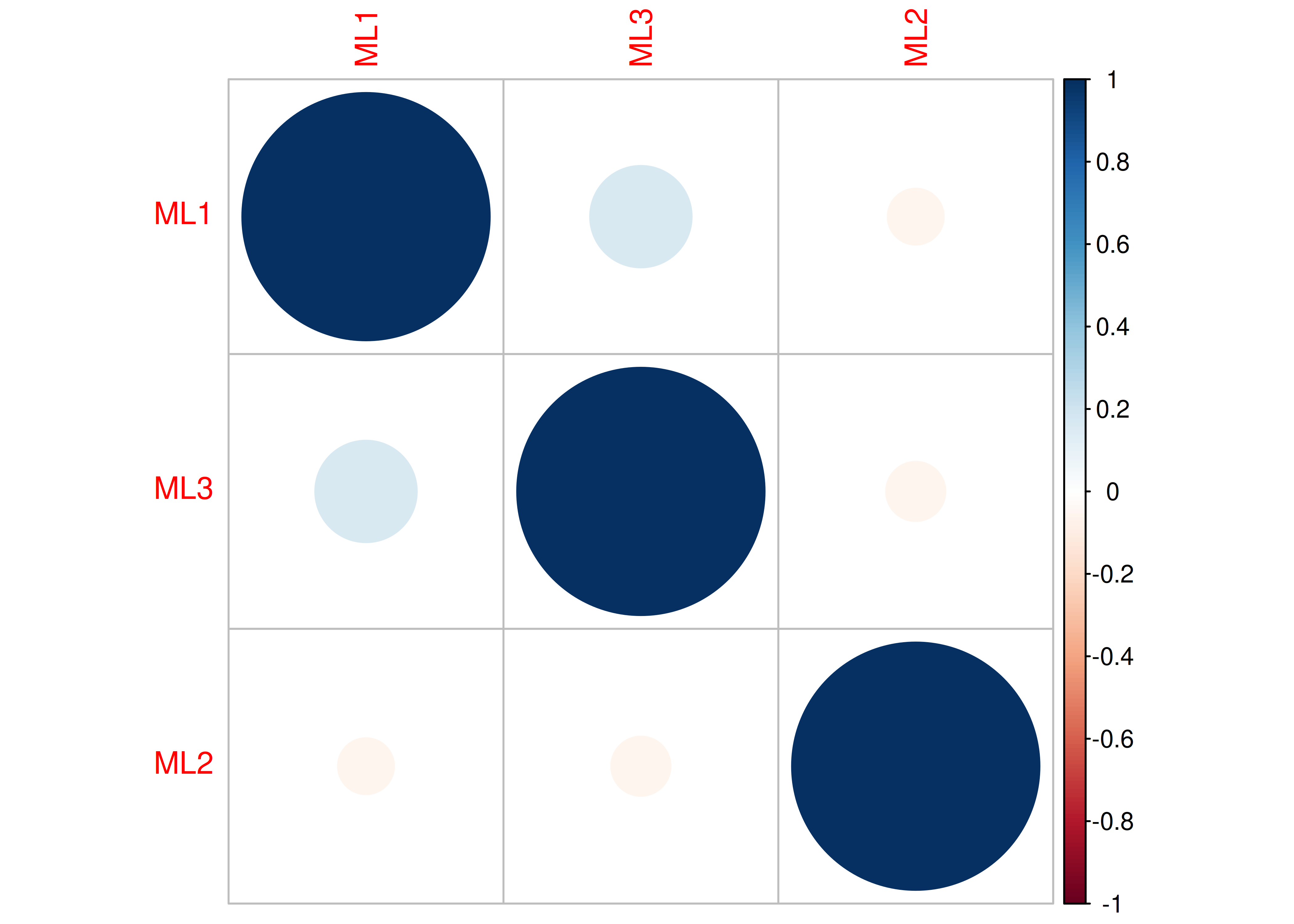

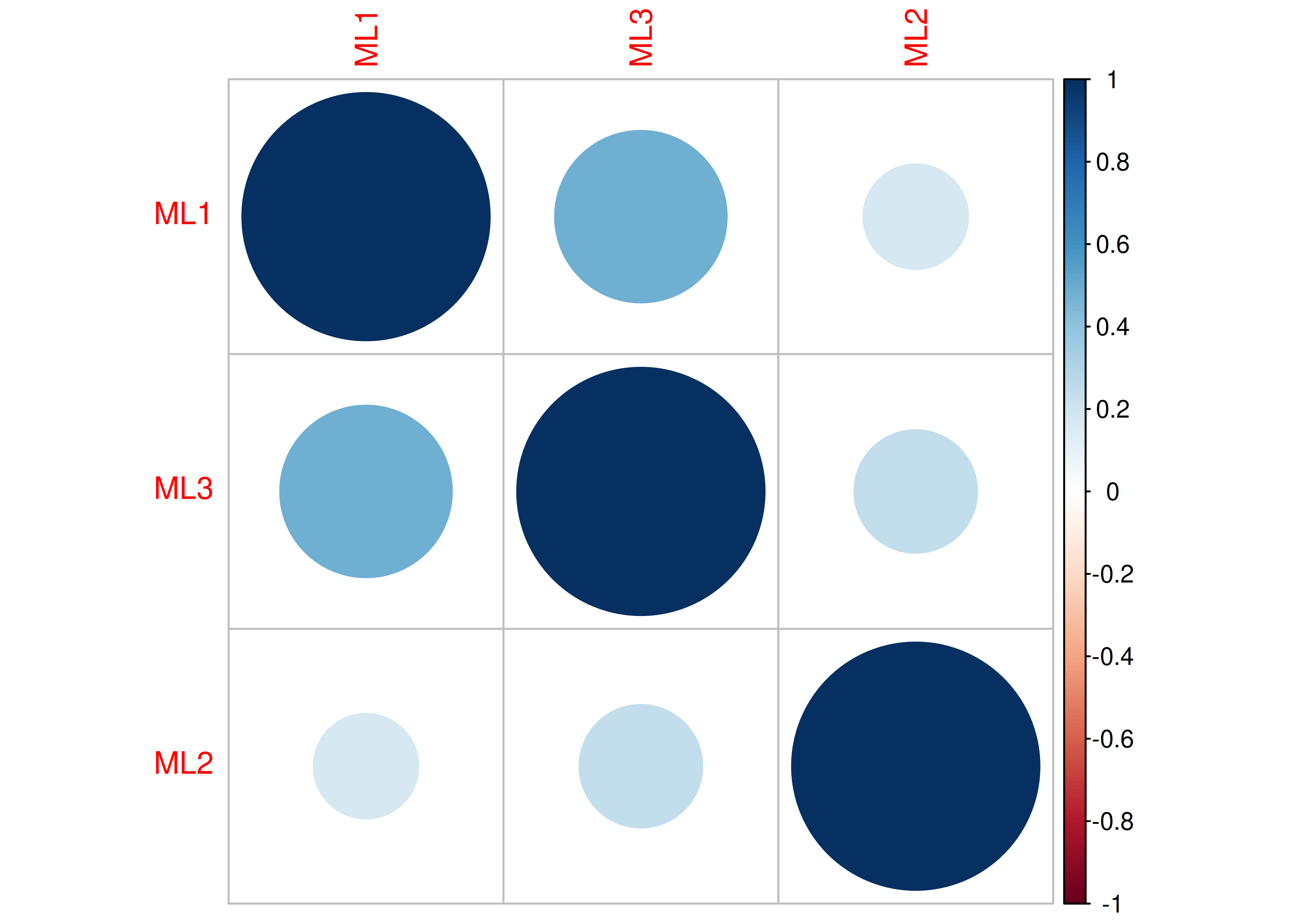

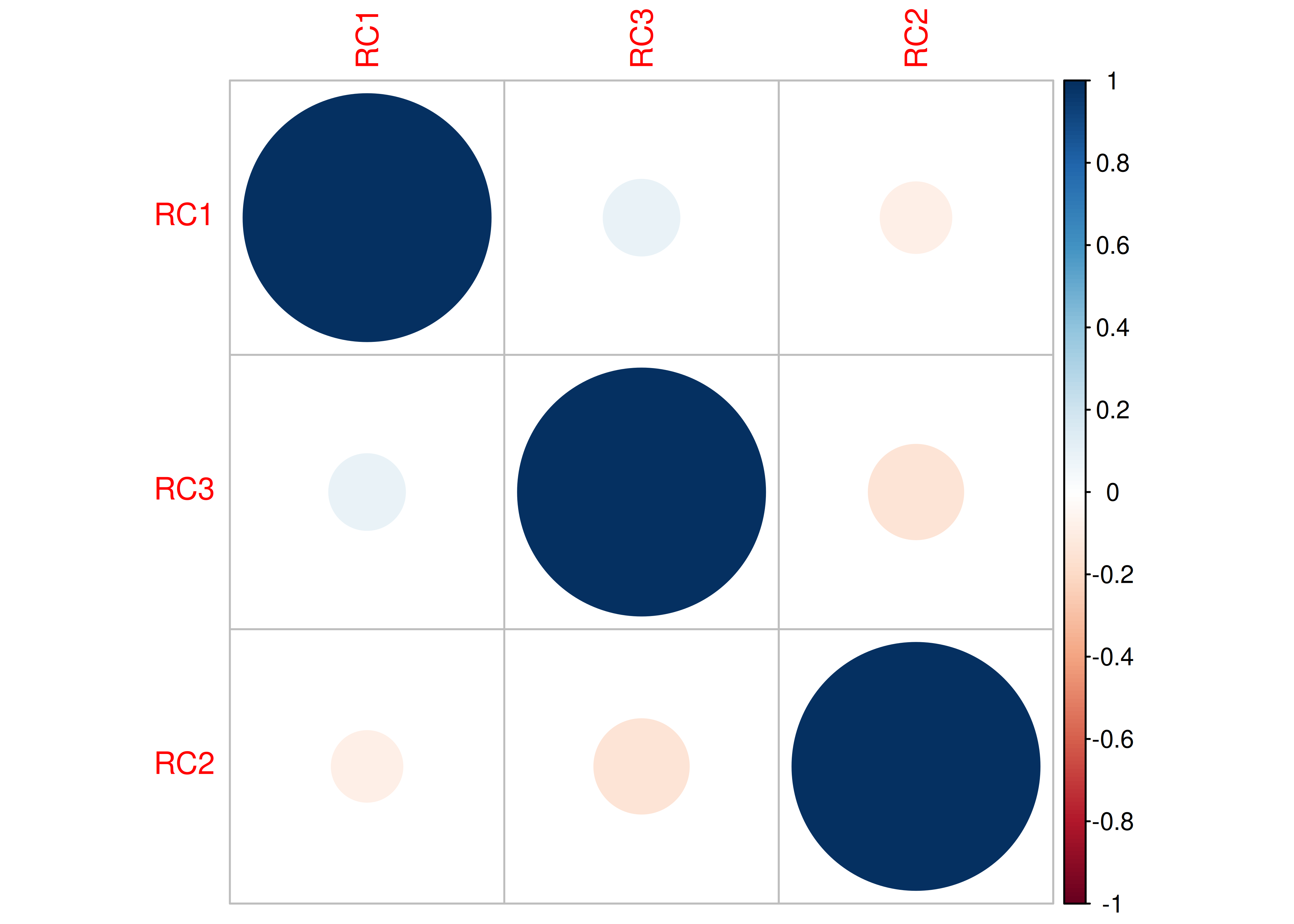

A correlation plot is in Figure 14.27.

Figure 14.27: Correlation Plot.

14.3.3 Kaiser-Meyer-Oklin (KMO) Test

The Kaiser-Meyer-Oklin (KMO) measure of sampling adequacy (Kaiser, 1970; Kaiser & Rice, 1974) evaluates the extent to which a set of variables is suitable for factor analysis. It indicates the proportion of variance in your variables that might be caused by underlying factors, where a higher proportion indicates the data are potentially more suitable for factor analysis. The following criteria are used for evaluating KMO (Kaiser, 1974):

- Above 0.90 - Marvelous

- 0.80 to 0.90 - Meritorious

- 0.7 to 0.80 - Average

- 0.60 to 0.70 - Mediocre

- 0.50 to 0.60 - Terrible

- Below 0.50 - Unacceptable

Kaiser-Meyer-Olkin factor adequacy

Call: psych::KMO(r = HolzingerSwineford1939[, vars])

Overall MSA = 0.74

MSA for each item =

x1 x2 x3 x4 x5 x6 x7 x8 x9

0.77 0.78 0.74 0.72 0.74 0.82 0.58 0.68 0.78 14.3.4 Determinant of a Correlation Matrix

The determinant of a correlation matrix can be used to evaluate the potential for multicollinearity, which can be problematic in factor analysis. For purposes of pursuing factor analysis, the determinant of the correlation matrix should be greater than 0.00001 (Field et al., 2012).

Code

[1] 0.0471407214.3.5 Bartlett’s Test of Sphericity

Bartlett’s test of sphericity evaluates whether the correlations between the variables are significantly different from zero. A significant Bartlett’s test indicates that the correlations between the variables significantly differ from zero and that factor analysis may be able to be used.

$chisq

[1] 290.6978

$p.value

[1] 1.378106e-41

$df

[1] 3614.4 Factor Analysis

14.4.1 Exploratory Factor Analysis (EFA)

I introduced exploratory factor analysis (EFA) models in Section 14.1.4.3.1.

14.4.1.1 Determine number of factors

Determine the number of factors to retain using the Scree plot and Very Simple Structure plot.

14.4.1.1.1 Scree Plot

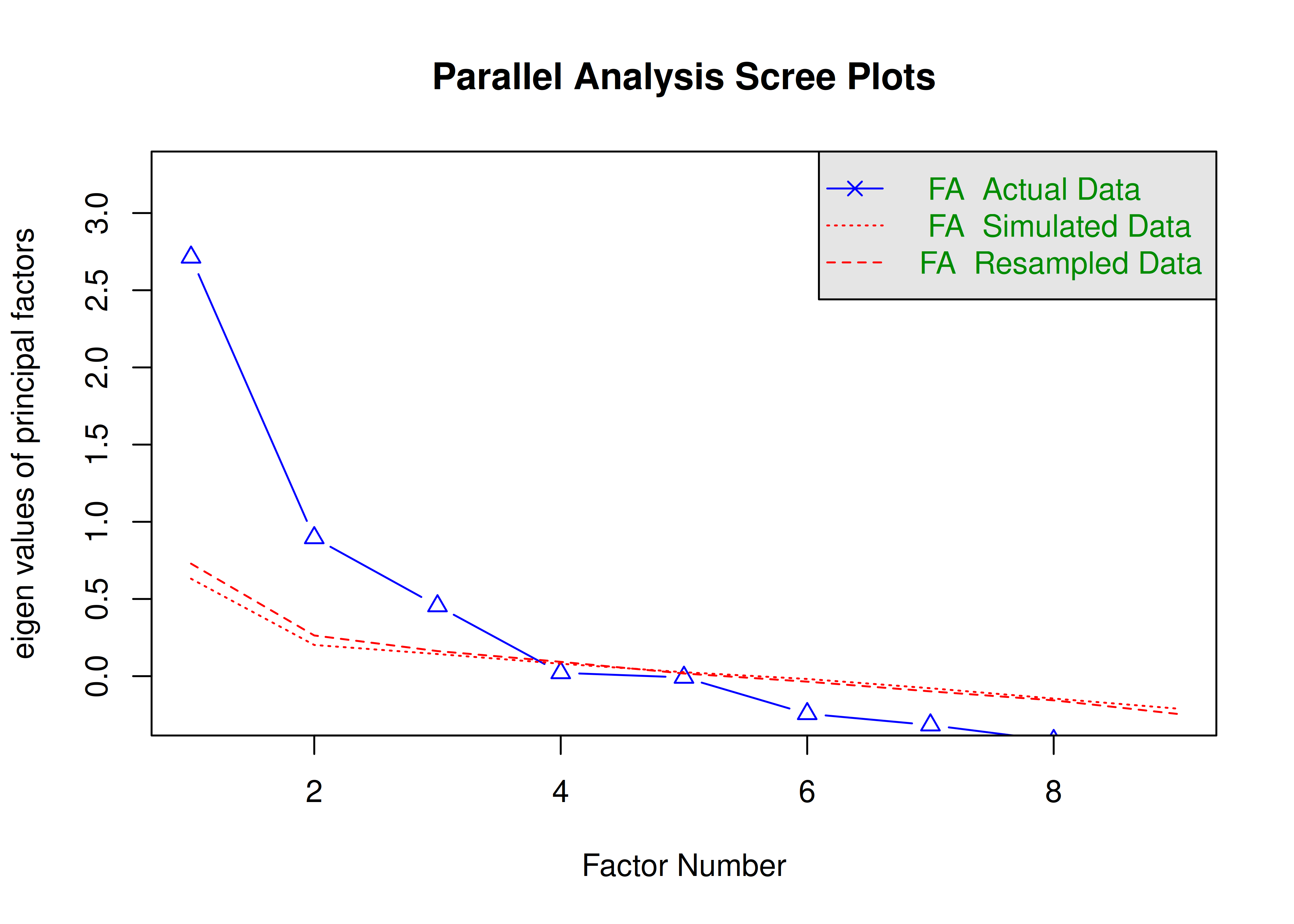

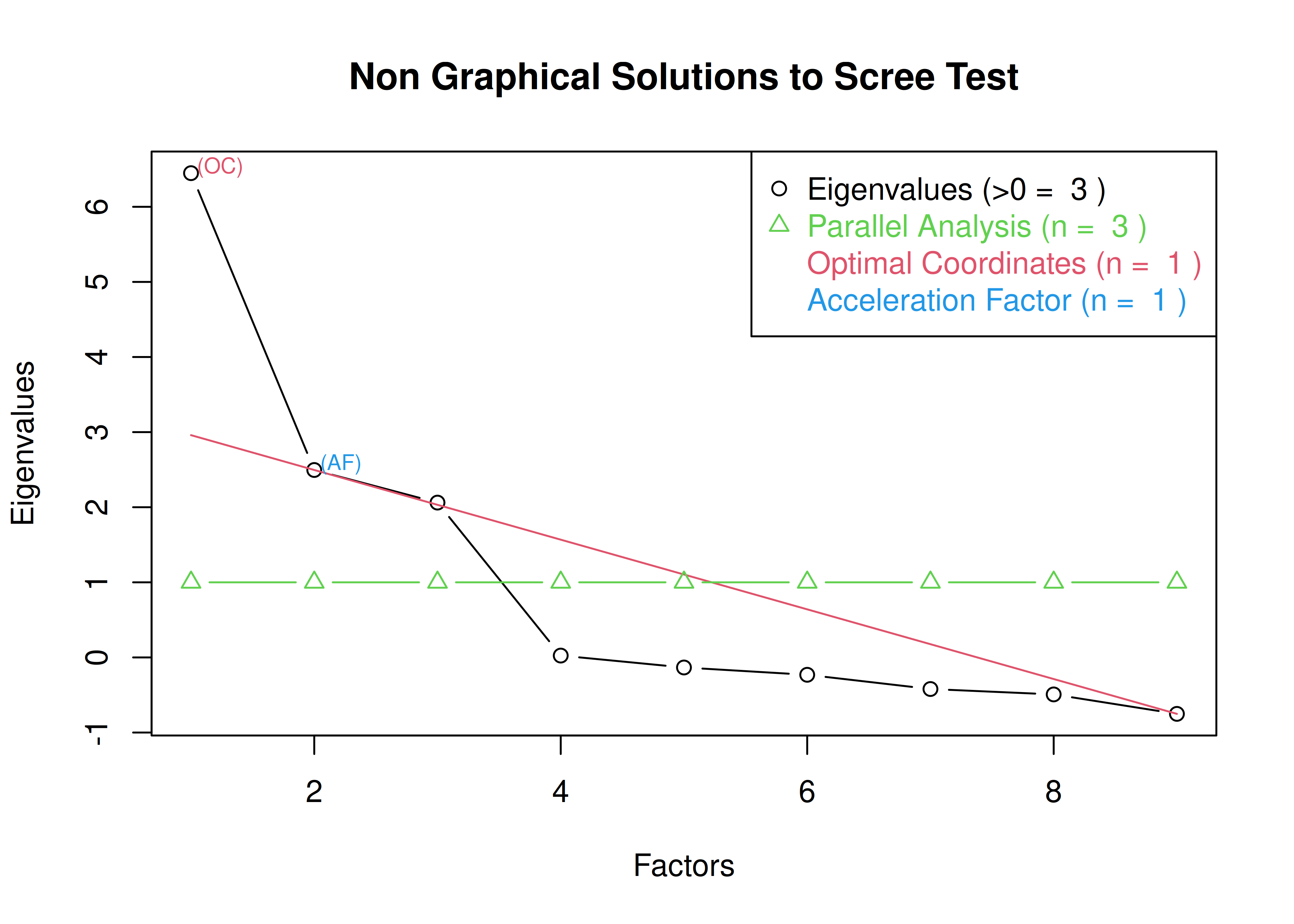

Scree plots were generated using the psych (Revelle, 2022) and nFactors (Raiche & Magis, 2020) packages.

The optimal coordinates and the acceleration factor attempt to operationalize the Cattell scree test: i.e., the “elbow” of the scree plot (Ruscio & Roche, 2012).

The optimal coordinators factor is quantified using a series of linear equations to determine whether observed eigenvalues exceed the predicted values.

The acceleration factor is quantified using the acceleration of the curve, that is, the second derivative.

The Kaiser-Guttman rule states to keep principal components whose eigenvalues are greater than 1.

However, for exploratory factor analysis (as opposed to PCA), the criterion is to keep the factors whose eigenvalues are greater than zero (i.e., not the factors whose eigenvalues are greater than 1) (Dinno, 2014).

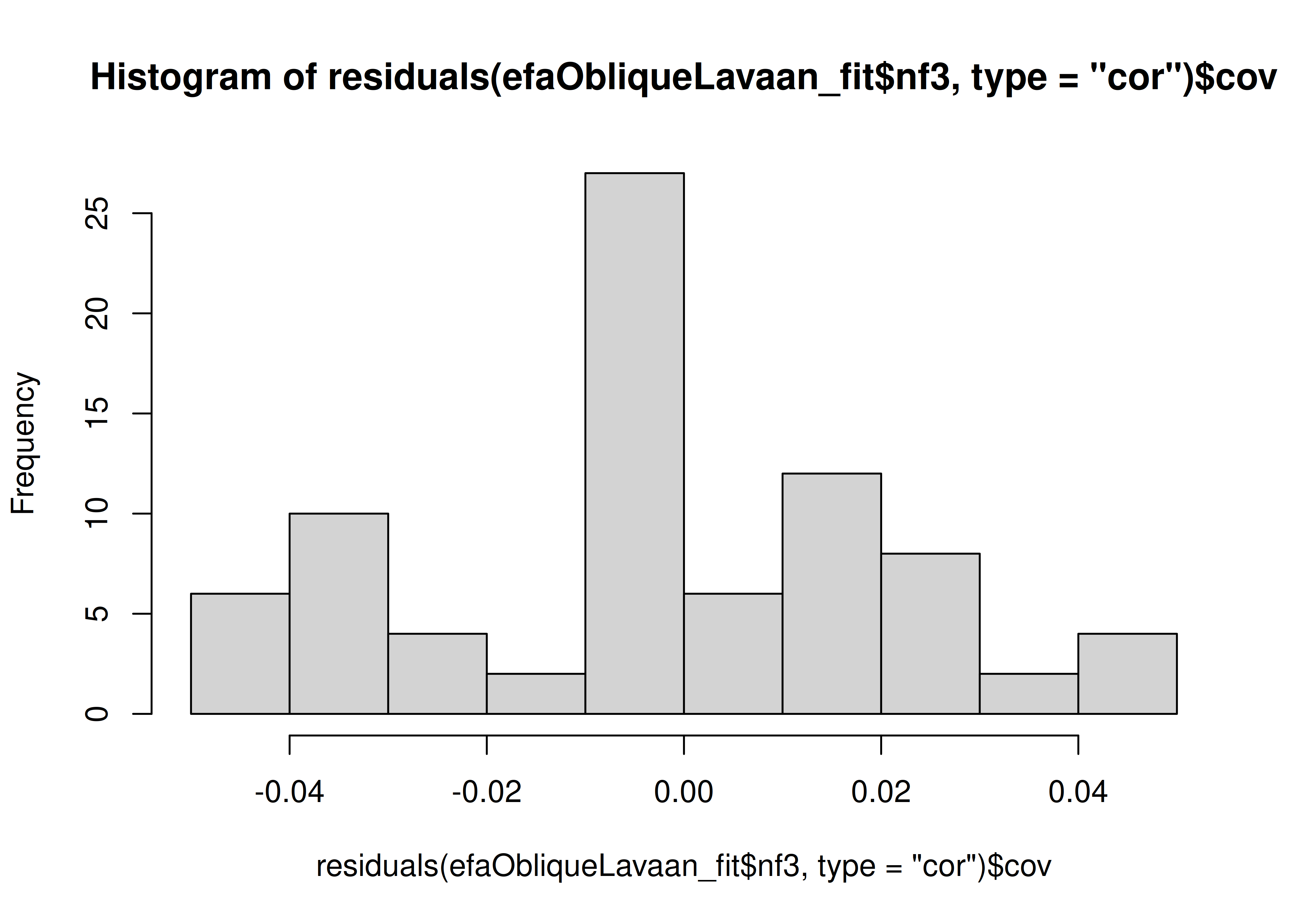

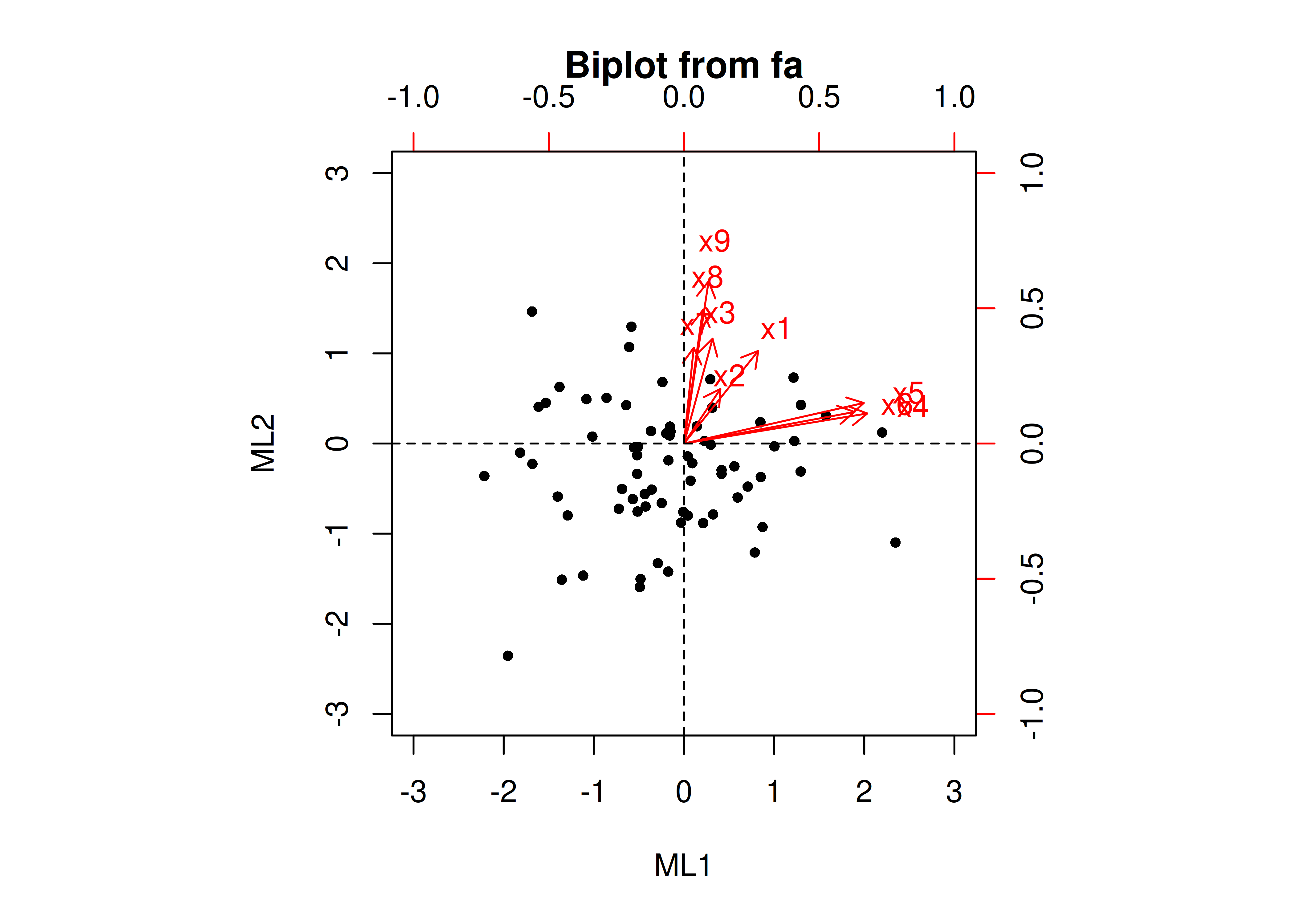

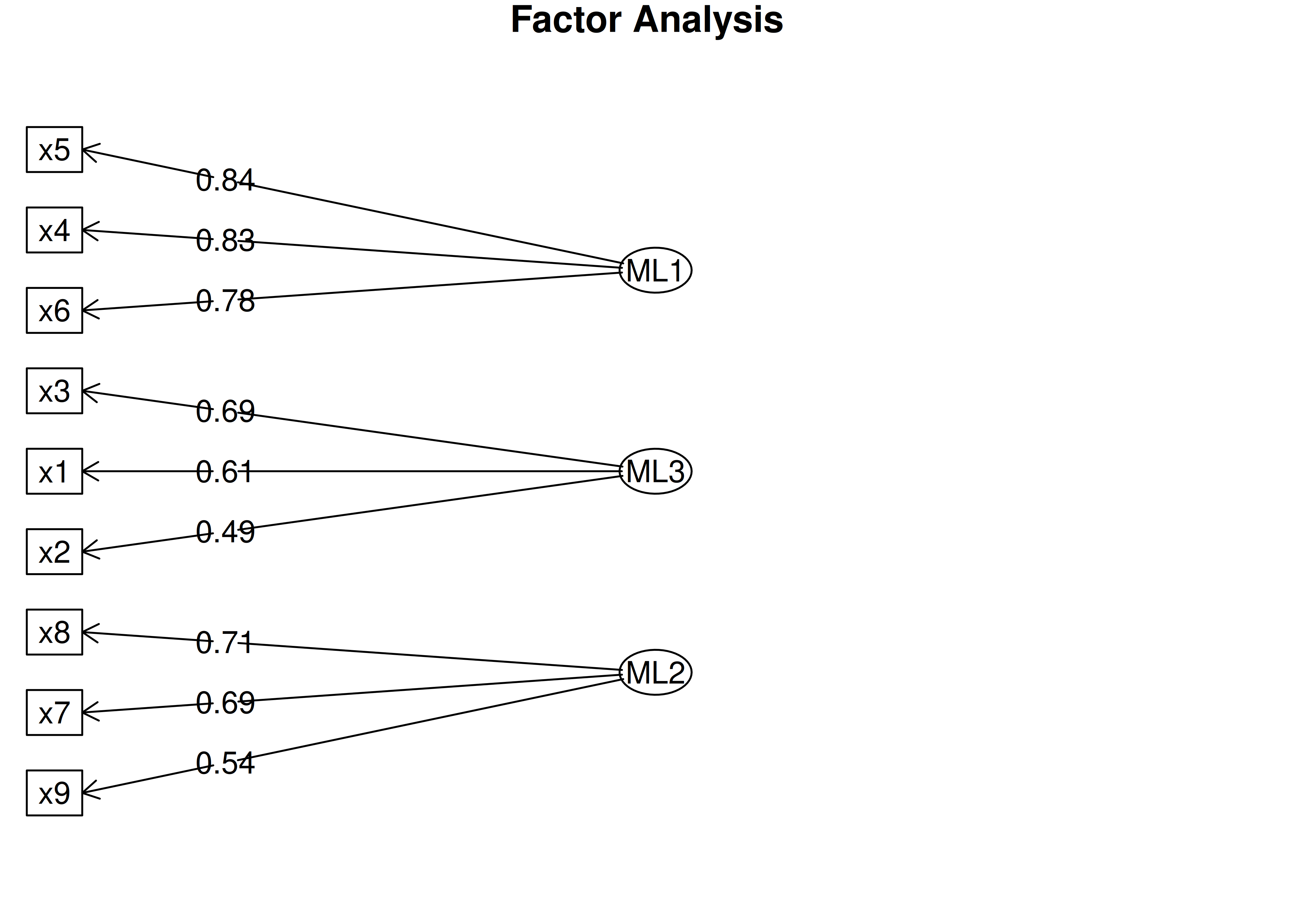

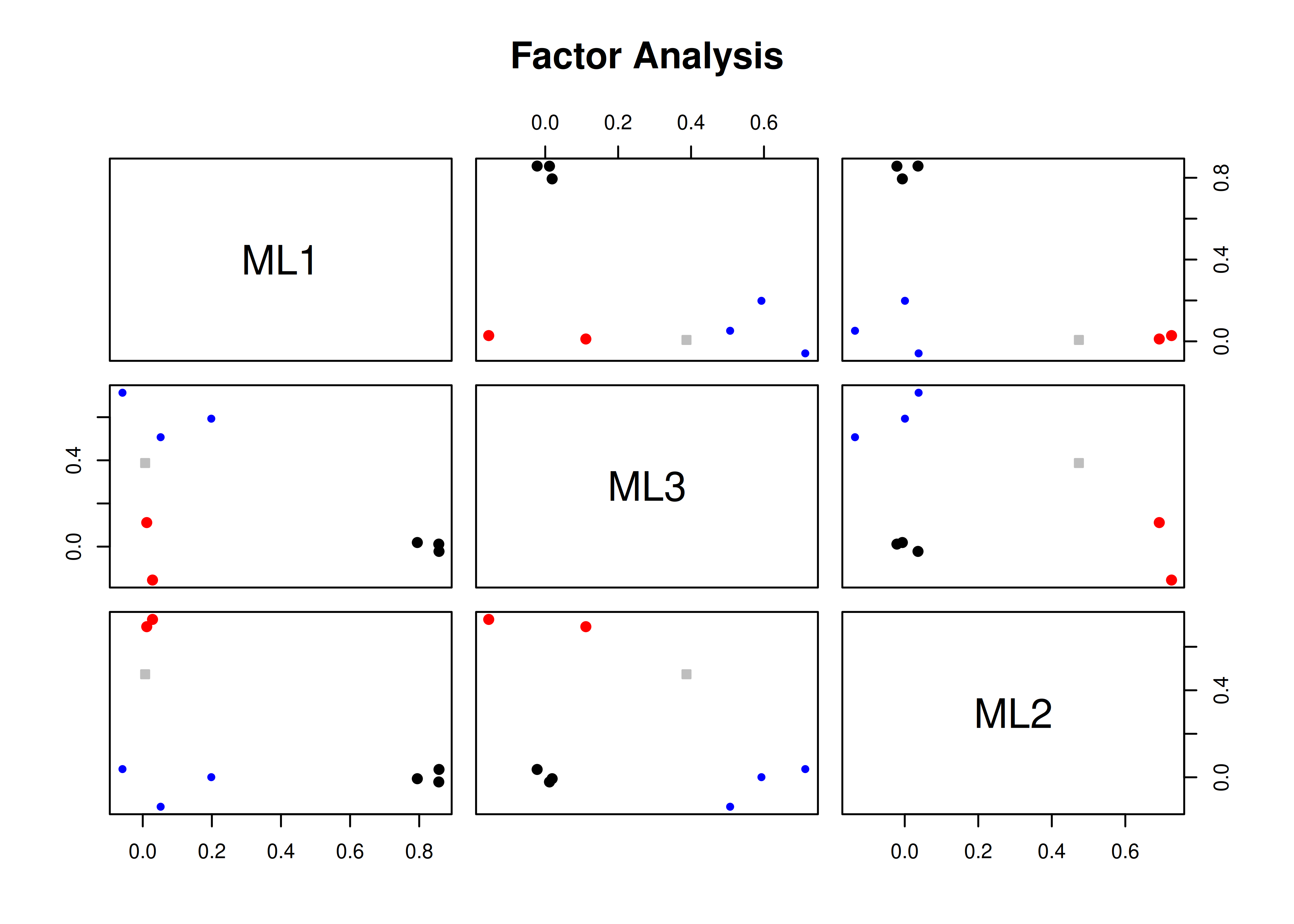

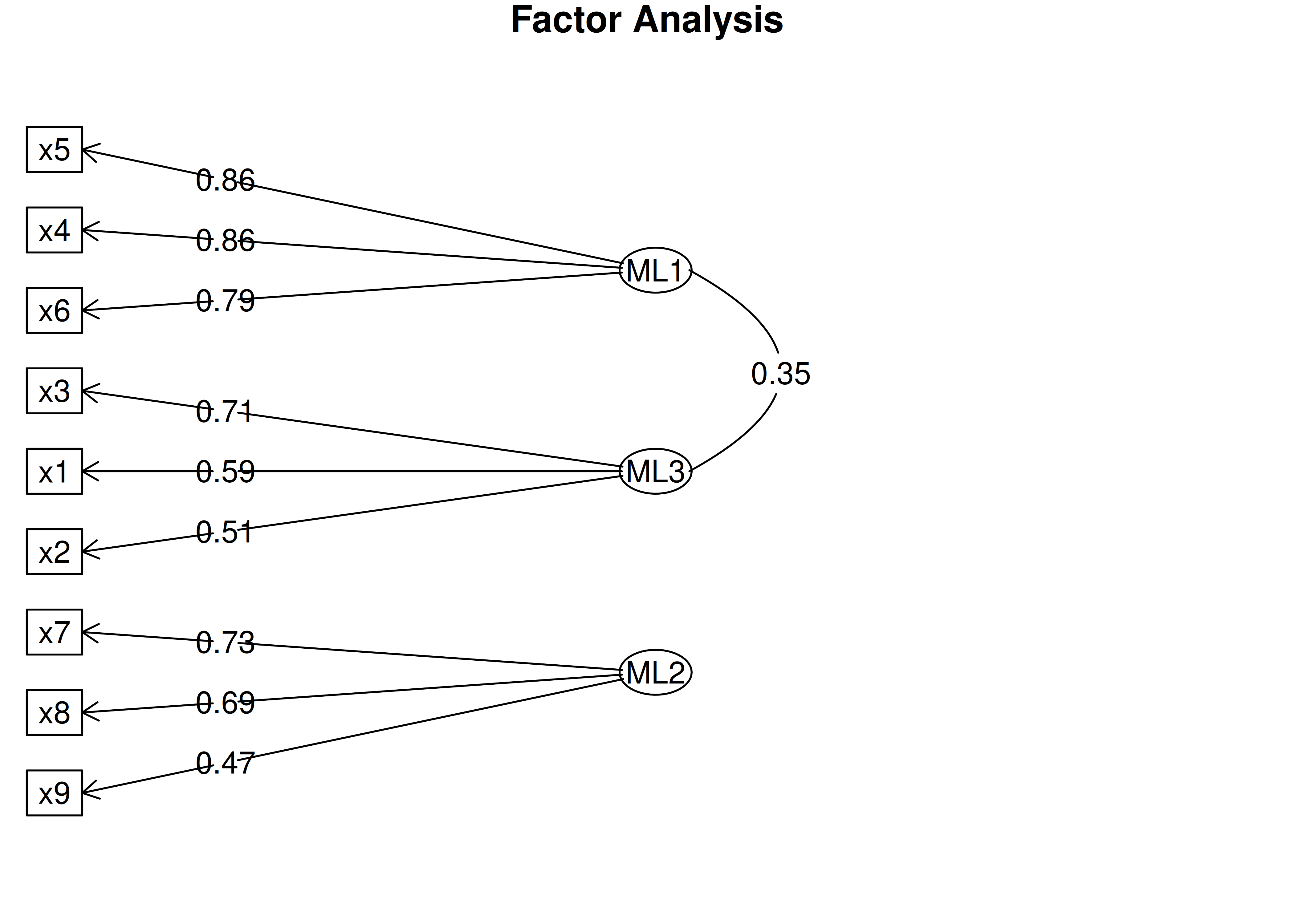

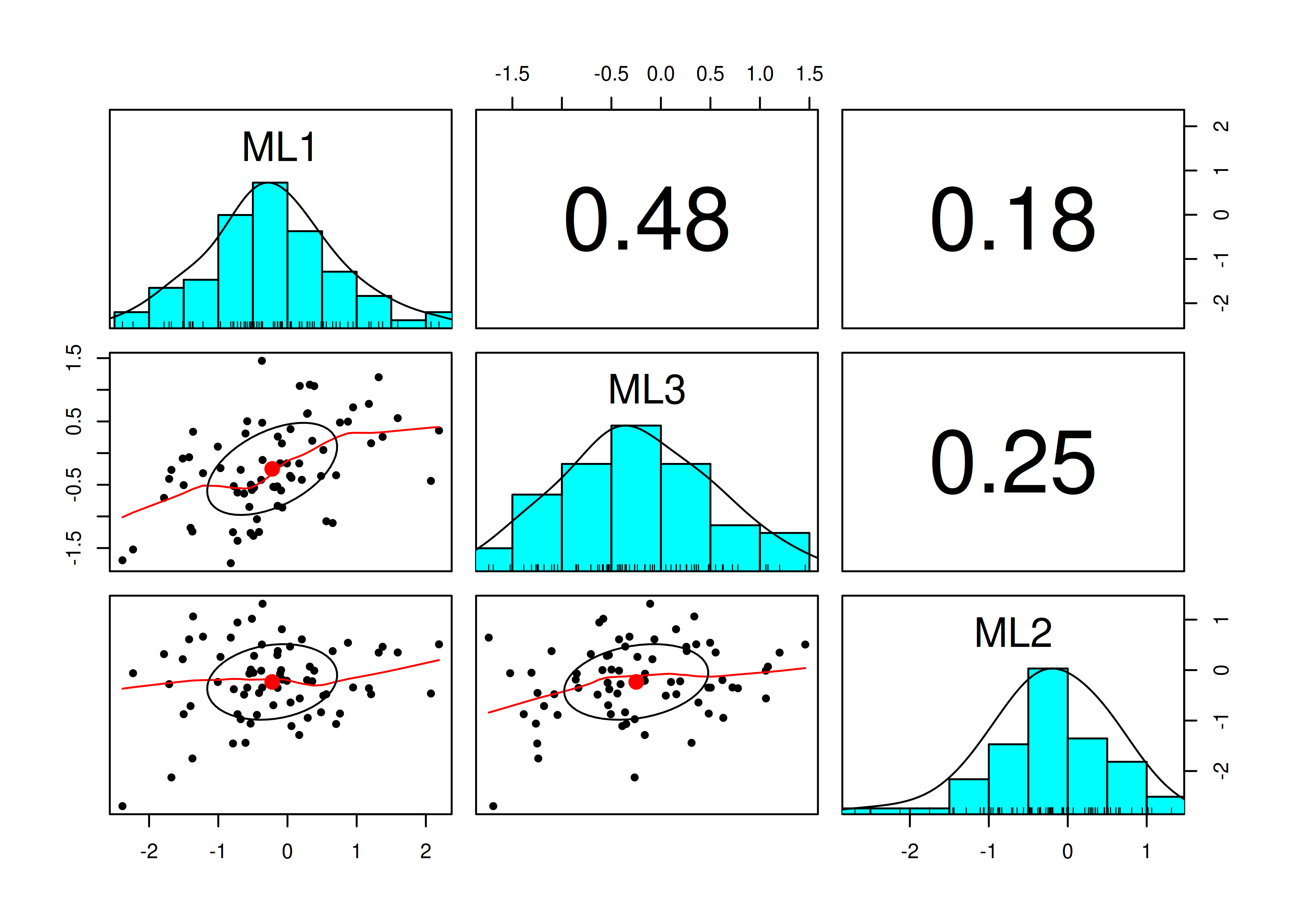

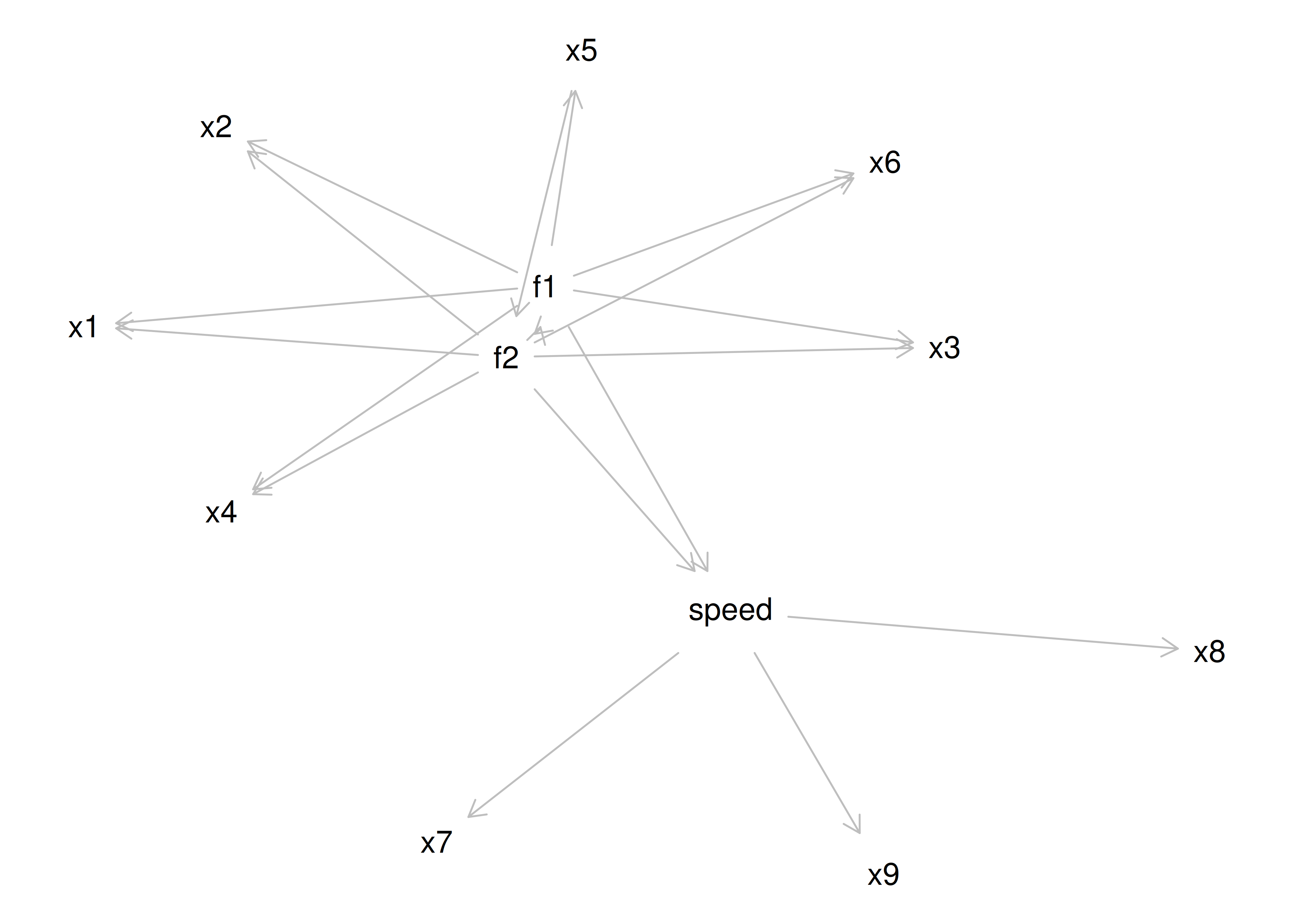

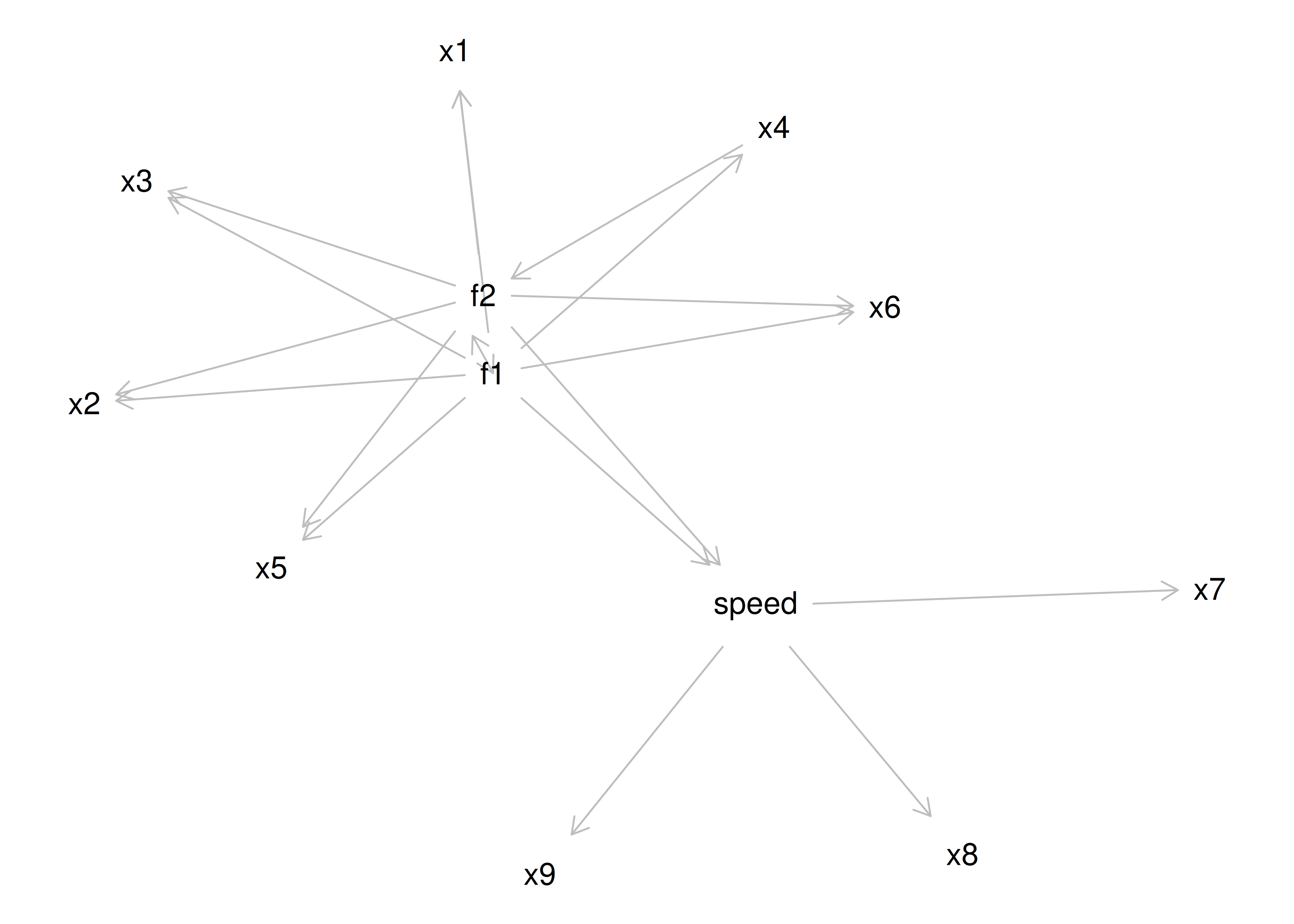

The number of factors to keep would depend on which criteria one uses. Based on the rule to keep factors whose eigenvalues are greater than zero and based on the parallel test, we would keep three factors. However, based on the Cattell scree test (as operationalized by the optimal coordinates and acceleration factor), we would keep one factor. Therefore, interpretability of the factors would be important for deciding between whether to keep one, two, or three factors.

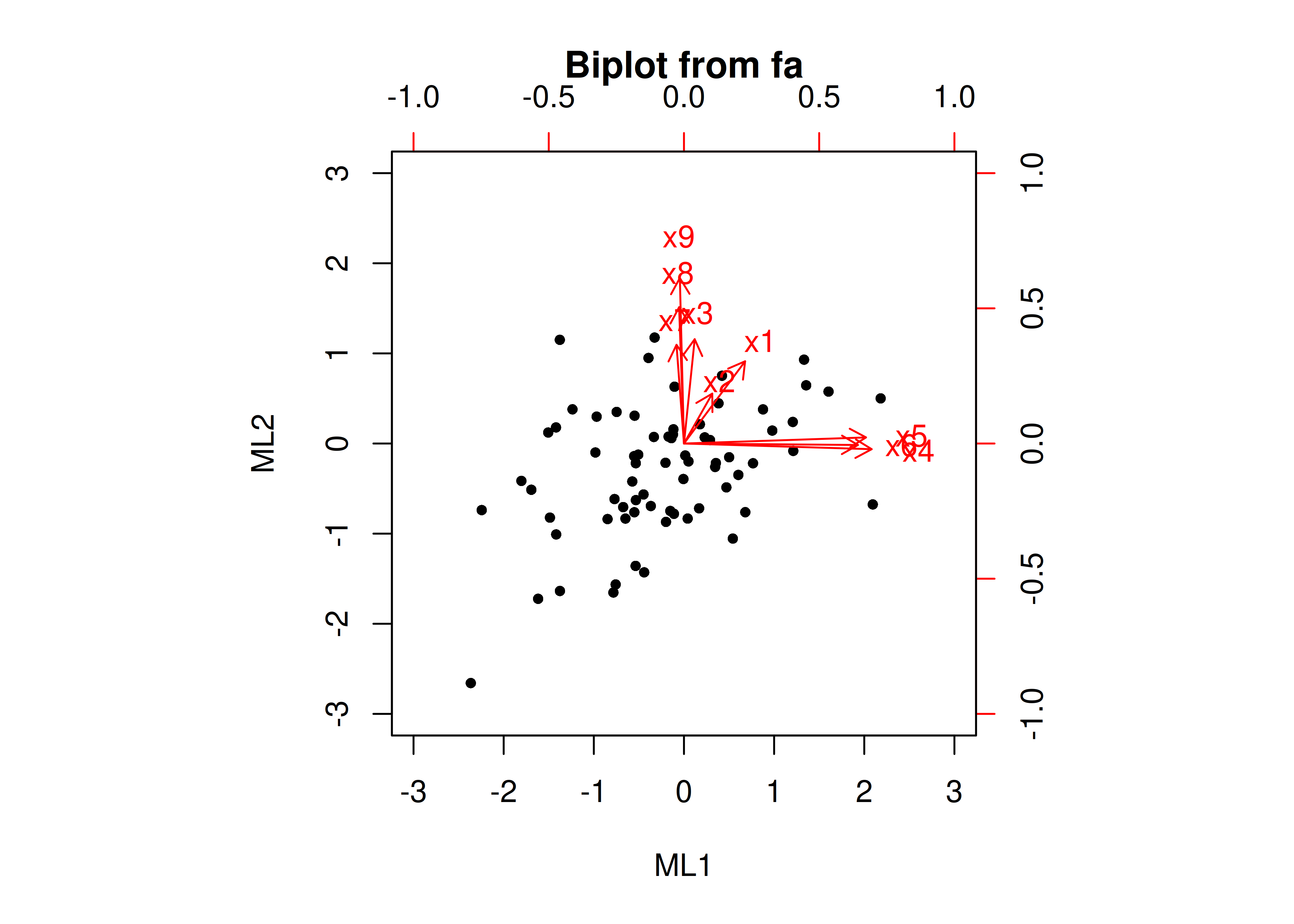

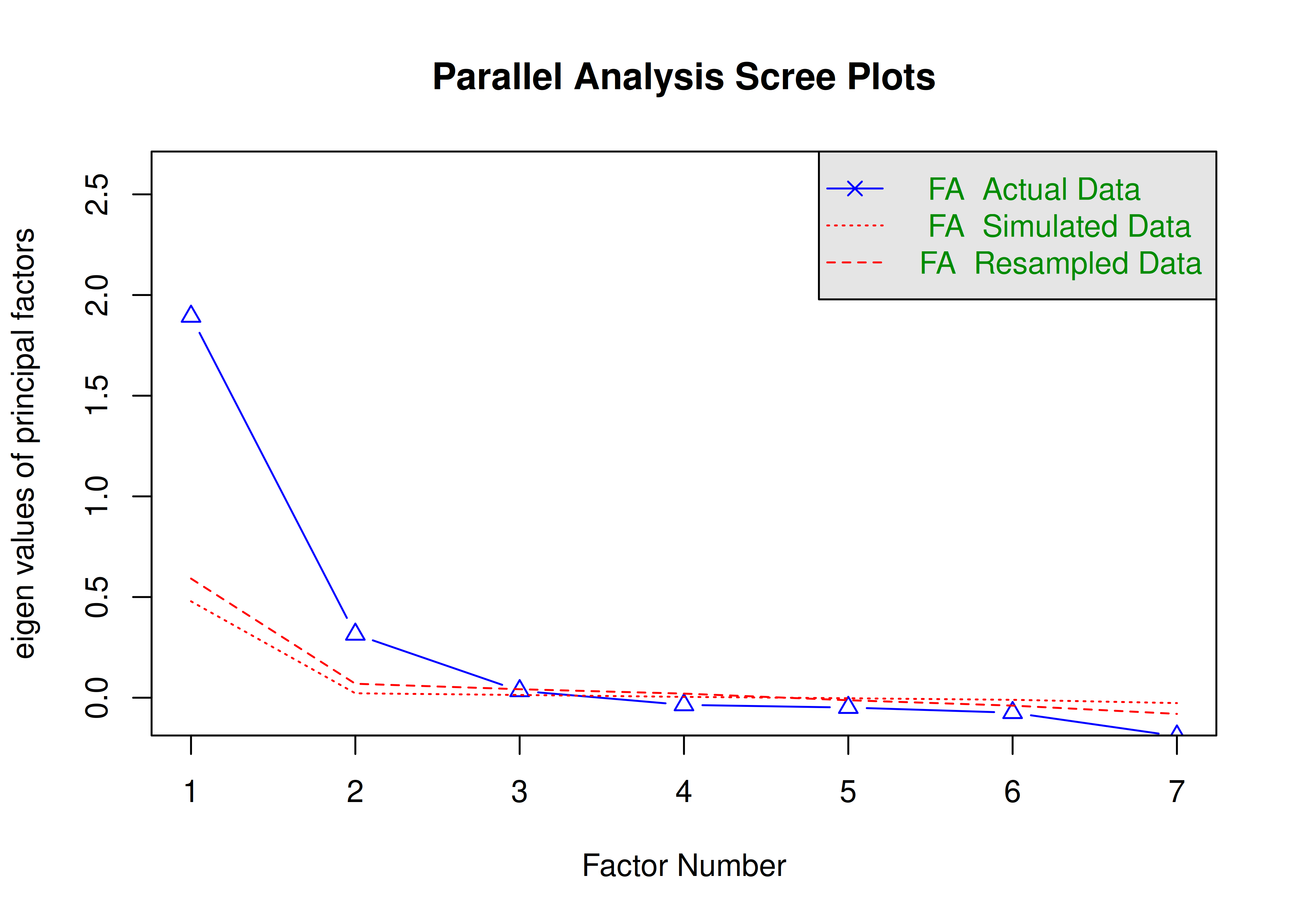

A scree plot from a parallel analysis is in Figure 14.28.

Figure 14.28: Scree Plot from Parallel Analysis in Exploratory Factor Analysis.

Parallel analysis suggests that the number of factors = 3 and the number of components = NA A scree plot from EFA is in Figure 14.29.

Code

Figure 14.29: Scree Plot in Exploratory Factor Analysis.

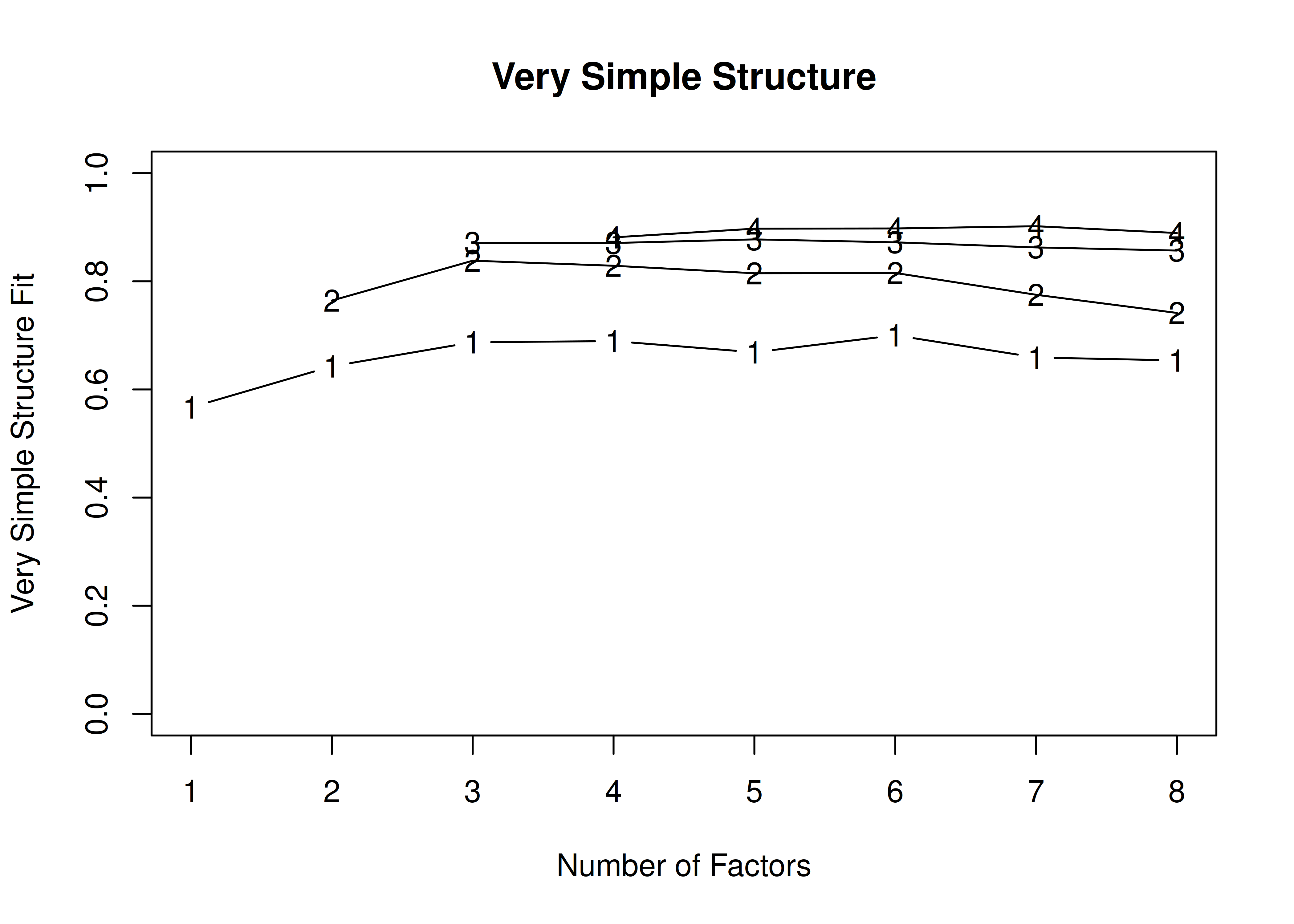

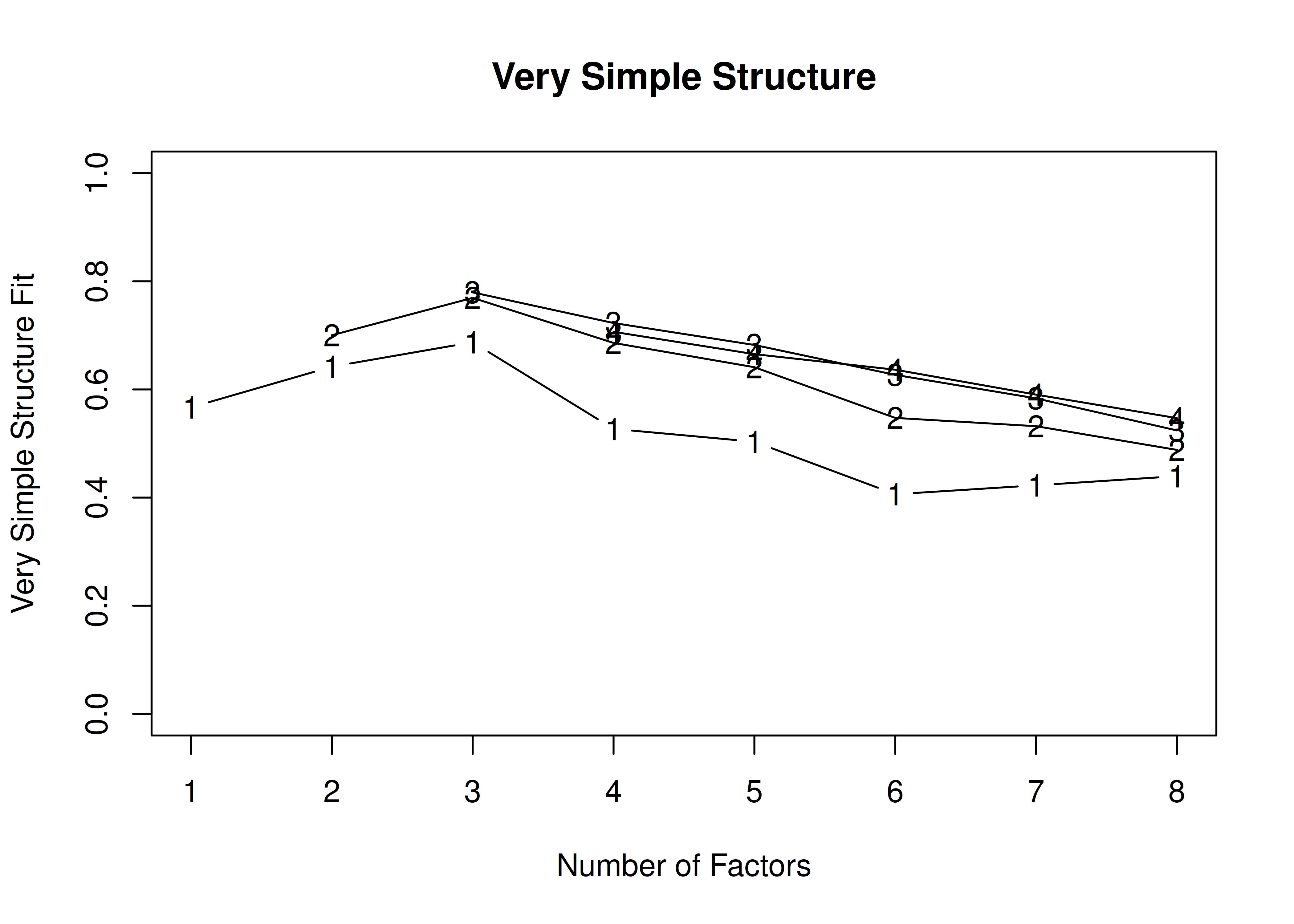

14.4.1.1.2 Very Simple Structure (VSS) Plot

The very simple structure (VSS) is another criterion that can be used to determine the optimal number of factors or components to retain.

Using the VSS criterion, the optimal number of factors to retain is the number of factors that maximizes the VSS criterion (Revelle & Rocklin, 1979).

The VSS criterion is evaluated with models in which factor loadings for a given item that are less than the maximum factor loading for that item are suppressed to zero, thus forcing simple structure (i.e., no cross-loadings).

The goal is finding a factor structure with interpretability so that factors are clearly distinguishable.

Thus, we want to identify the number of factors with the highest VSS criterion (i.e., the highest line).

Very simple structure (VSS) plots were generated using the psych package (Revelle, 2022).

The output also provides additional criteria by which to determine the optimal number of factors, each for which lower values are better, including the Velicer minimum average partial (MAP) test, the Bayesian information criterion (BIC), the sample size-adjusted BIC (SABIC), and the root mean square error of approximation (RMSEA).

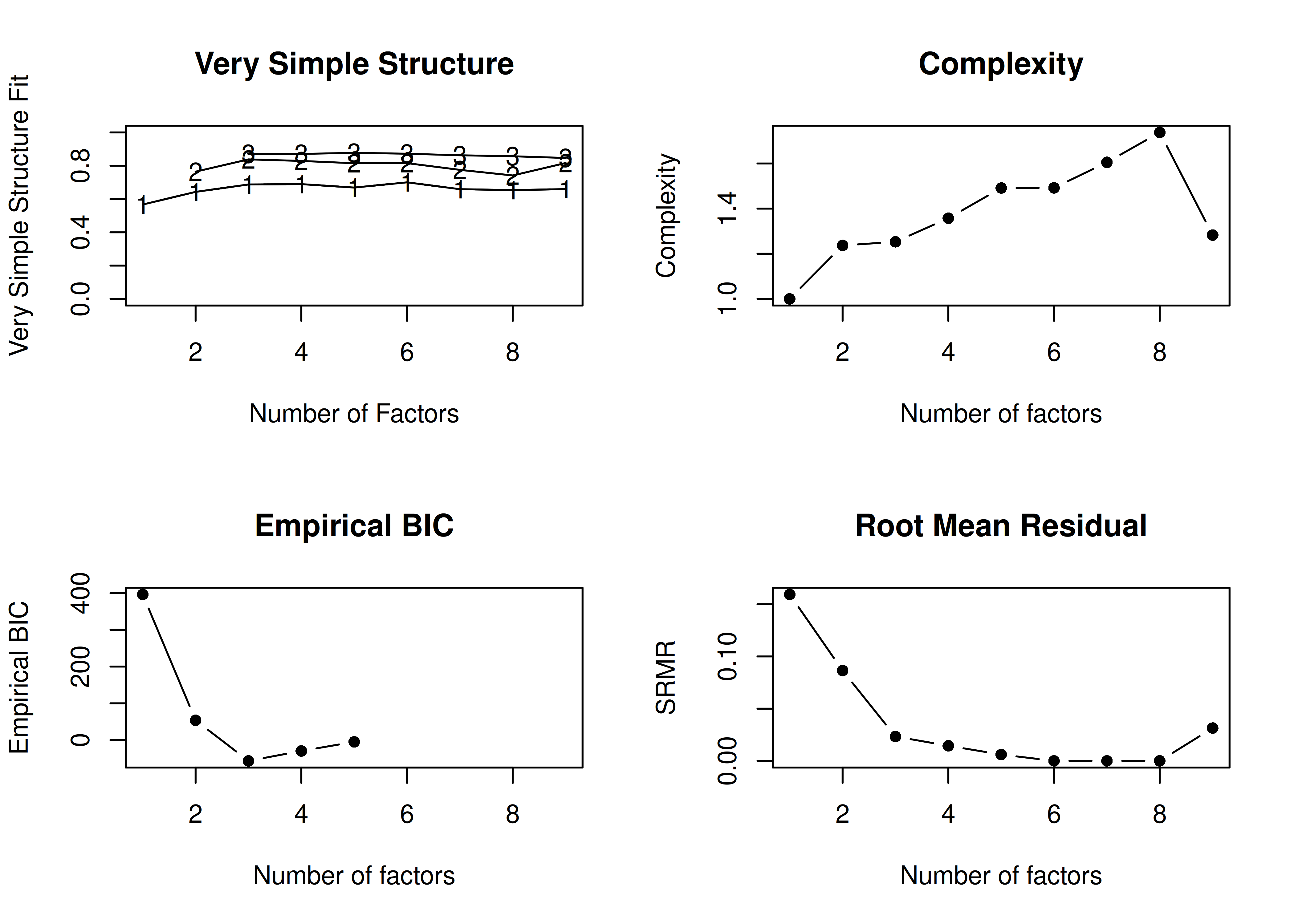

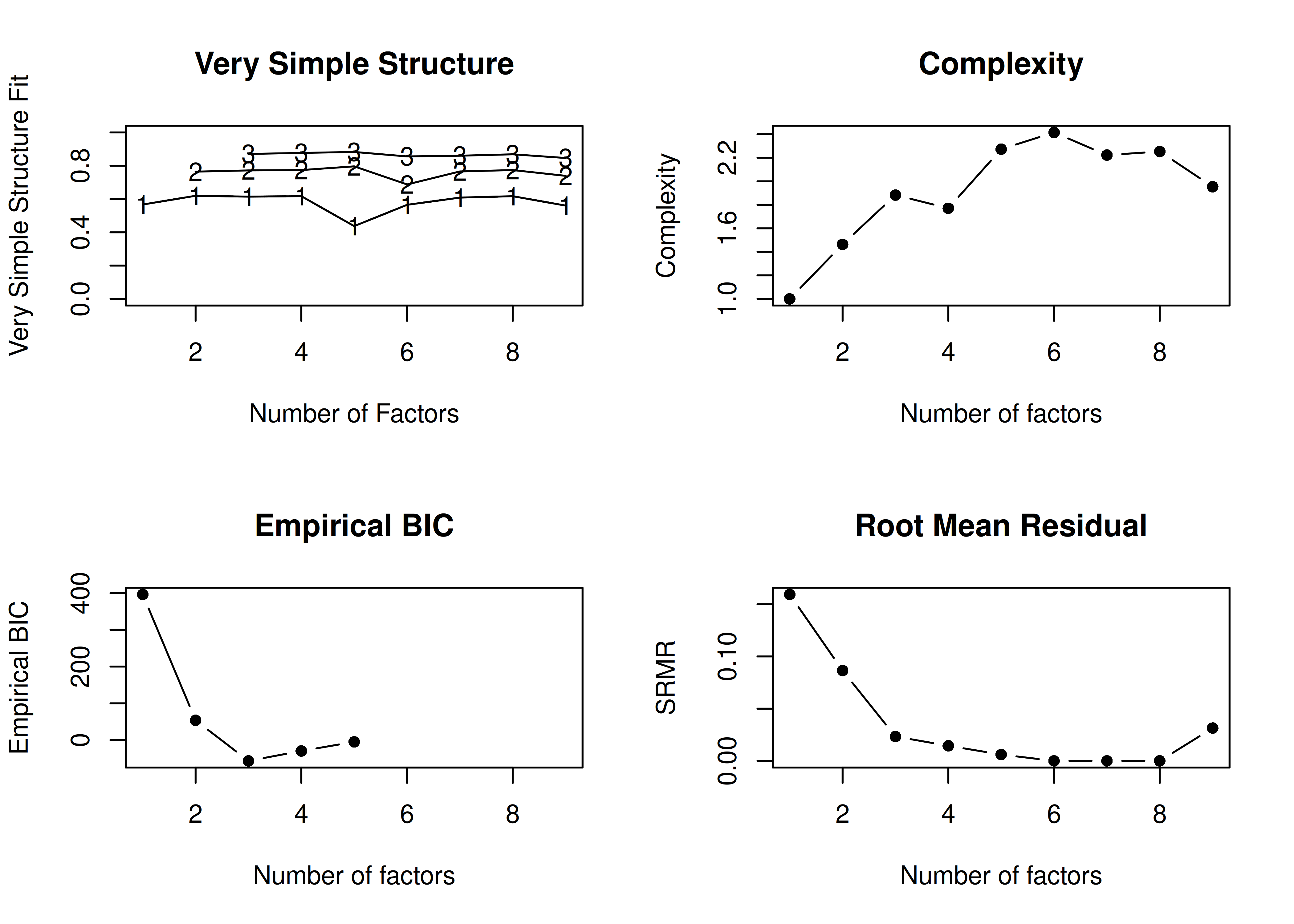

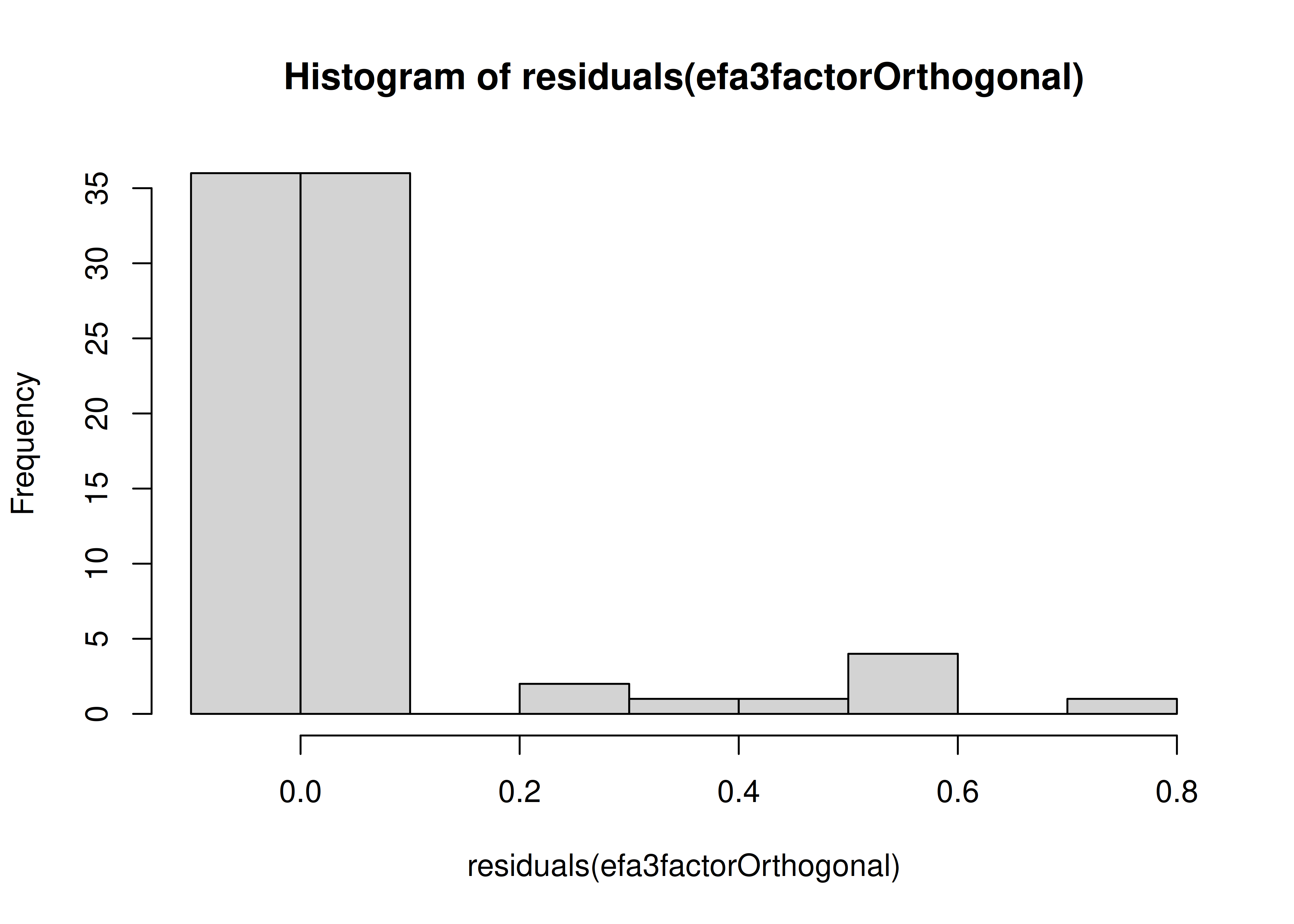

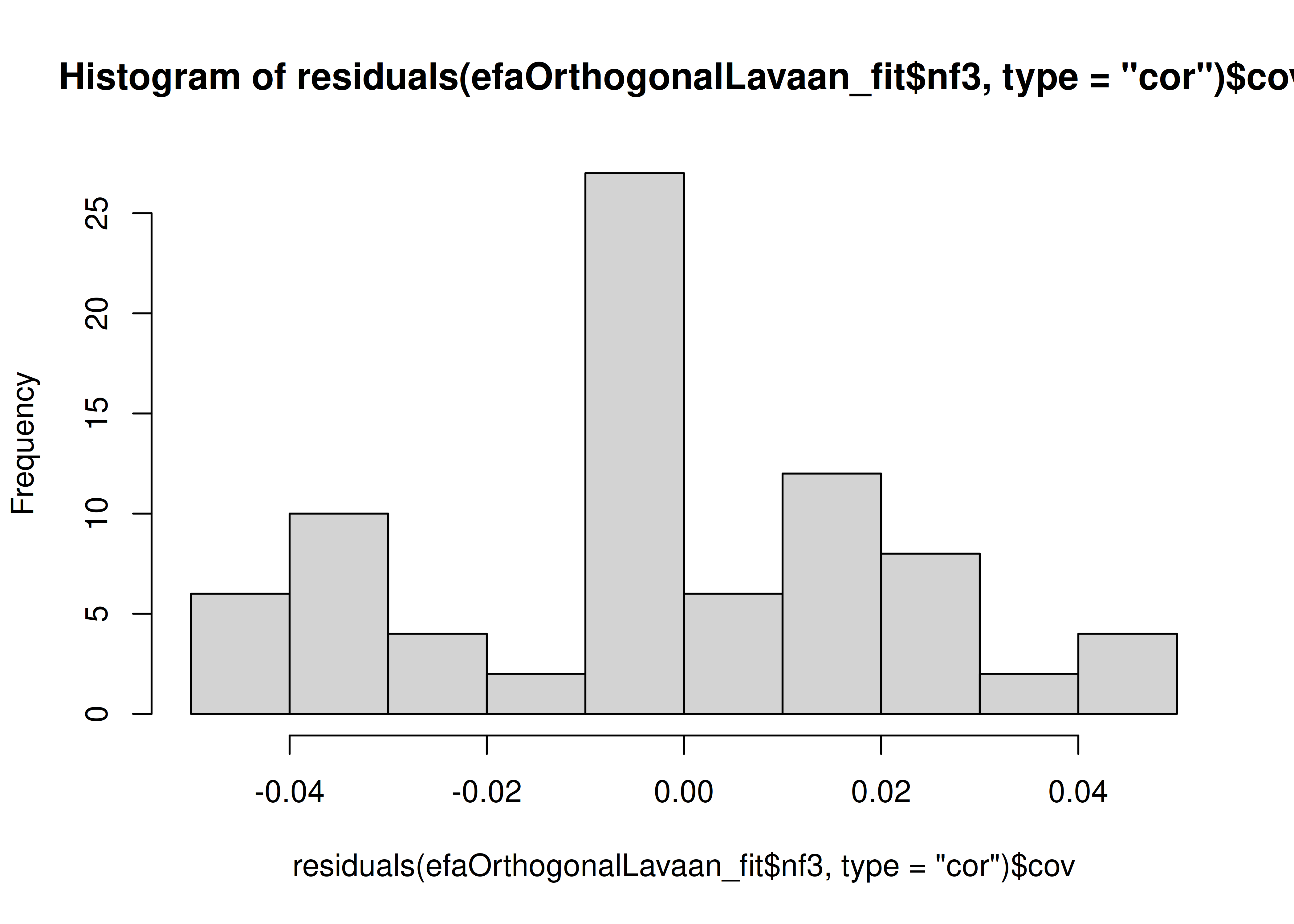

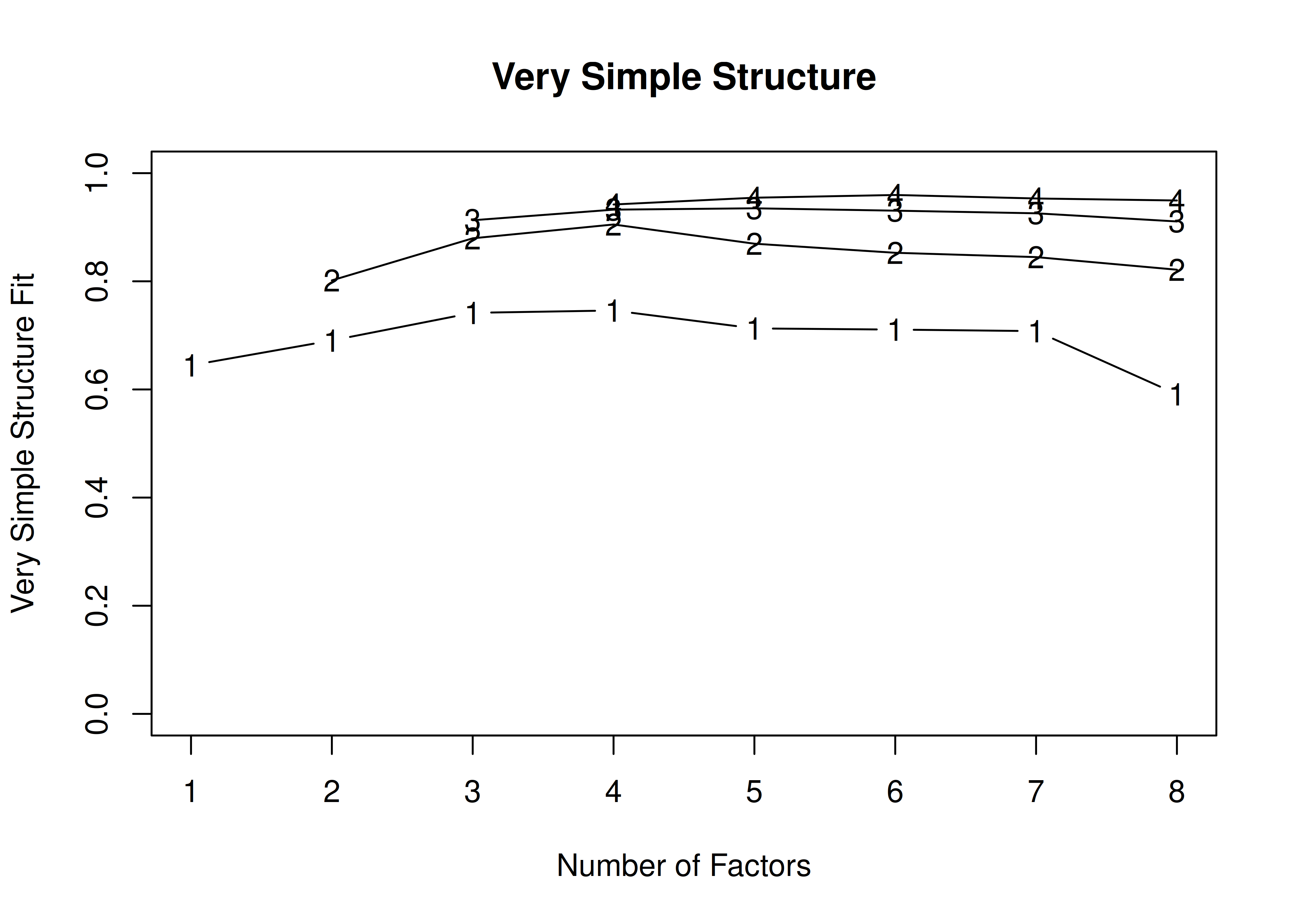

14.4.1.1.2.1 Orthogonal (Varimax) rotation

In the example with orthogonal rotation below, the VSS criterion is highest with 3 or 4 factors. A three-factor solution is supported by the lowest BIC, whereas a four-factor solution is supported by the lowest SABIC.

A VSS plot is in Figure 14.30.

Figure 14.30: Very Simple Structure Plot With Orthogonal Rotation in Exploratory Factor Analysis.

Very Simple Structure

Call: vss(x = HolzingerSwineford1939[, vars], rotate = "varimax", fm = "ml")

VSS complexity 1 achieves a maximimum of 0.7 with 4 factors

VSS complexity 2 achieves a maximimum of 0.84 with 3 factors

The Velicer MAP achieves a minimum of 0.07 with 2 factors

BIC achieves a minimum of -32.61 with 3 factors

Sample Size adjusted BIC achieves a minimum of -5.65 with 4 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.57 0.00 0.078 27 3.2e+02 2.3e-52 7.1 0.57 0.191 168.4 254.05 1.0

2 0.64 0.76 0.067 19 1.4e+02 1.2e-20 3.9 0.76 0.146 32.5 92.75 1.2

3 0.69 0.84 0.071 12 3.6e+01 3.4e-04 2.1 0.87 0.081 -32.6 5.45 1.3

4 0.69 0.83 0.125 6 9.6e+00 1.4e-01 2.0 0.88 0.044 -24.7 -5.65 1.4

5 0.67 0.81 0.199 1 1.8e+00 1.8e-01 1.6 0.90 0.050 -3.9 -0.77 1.5

6 0.70 0.82 0.403 -3 2.6e-08 NA 1.6 0.91 NA NA NA 1.5

7 0.66 0.78 0.447 -6 0.0e+00 NA 1.4 0.91 NA NA NA 1.6

8 0.65 0.74 1.000 -8 0.0e+00 NA 1.3 0.92 NA NA NA 1.7

eChisq SRMR eCRMS eBIC

1 5.5e+02 1.6e-01 0.184 396.3

2 1.6e+02 8.7e-02 0.119 53.8

3 1.2e+01 2.3e-02 0.040 -56.7

4 4.5e+00 1.4e-02 0.035 -29.7

5 8.0e-01 6.1e-03 0.036 -4.9

6 1.3e-08 7.7e-07 NA NA

7 2.1e-13 3.1e-09 NA NA

8 2.1e-16 1.0e-10 NA NAMultiple VSS-related fit indices are in Figure 14.31.

Figure 14.31: Very Simple Structure-Related Indices With Orthogonal Rotation in Exploratory Factor Analysis.

Number of factors

Call: vss(x = x, n = n, rotate = rotate, diagonal = diagonal, fm = fm,

n.obs = n.obs, plot = FALSE, title = title, use = use, cor = cor)

VSS complexity 1 achieves a maximimum of Although the vss.max shows 6 factors, it is probably more reasonable to think about 4 factors

VSS complexity 2 achieves a maximimum of 0.84 with 3 factors

The Velicer MAP achieves a minimum of 0.07 with 2 factors

Empirical BIC achieves a minimum of -56.69 with 3 factors

Sample Size adjusted BIC achieves a minimum of -5.65 with 4 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.57 0.00 0.078 27 3.2e+02 2.3e-52 7.1 0.57 0.191 168.4 254.05 1.0

2 0.64 0.76 0.067 19 1.4e+02 1.2e-20 3.9 0.76 0.146 32.5 92.75 1.2

3 0.69 0.84 0.071 12 3.6e+01 3.4e-04 2.1 0.87 0.081 -32.6 5.45 1.3

4 0.69 0.83 0.125 6 9.6e+00 1.4e-01 2.0 0.88 0.044 -24.7 -5.65 1.4

5 0.67 0.81 0.199 1 1.8e+00 1.8e-01 1.6 0.90 0.050 -3.9 -0.77 1.5

6 0.70 0.82 0.403 -3 2.6e-08 NA 1.6 0.91 NA NA NA 1.5

7 0.66 0.78 0.447 -6 0.0e+00 NA 1.4 0.91 NA NA NA 1.6

8 0.65 0.74 1.000 -8 0.0e+00 NA 1.3 0.92 NA NA NA 1.7

9 0.66 0.82 NA -9 4.4e+01 NA 2.5 0.85 NA NA NA 1.3

eChisq SRMR eCRMS eBIC

1 5.5e+02 1.6e-01 0.184 396.3

2 1.6e+02 8.7e-02 0.119 53.8

3 1.2e+01 2.3e-02 0.040 -56.7

4 4.5e+00 1.4e-02 0.035 -29.7

5 8.0e-01 6.1e-03 0.036 -4.9

6 1.3e-08 7.7e-07 NA NA

7 2.1e-13 3.1e-09 NA NA

8 2.1e-16 1.0e-10 NA NA

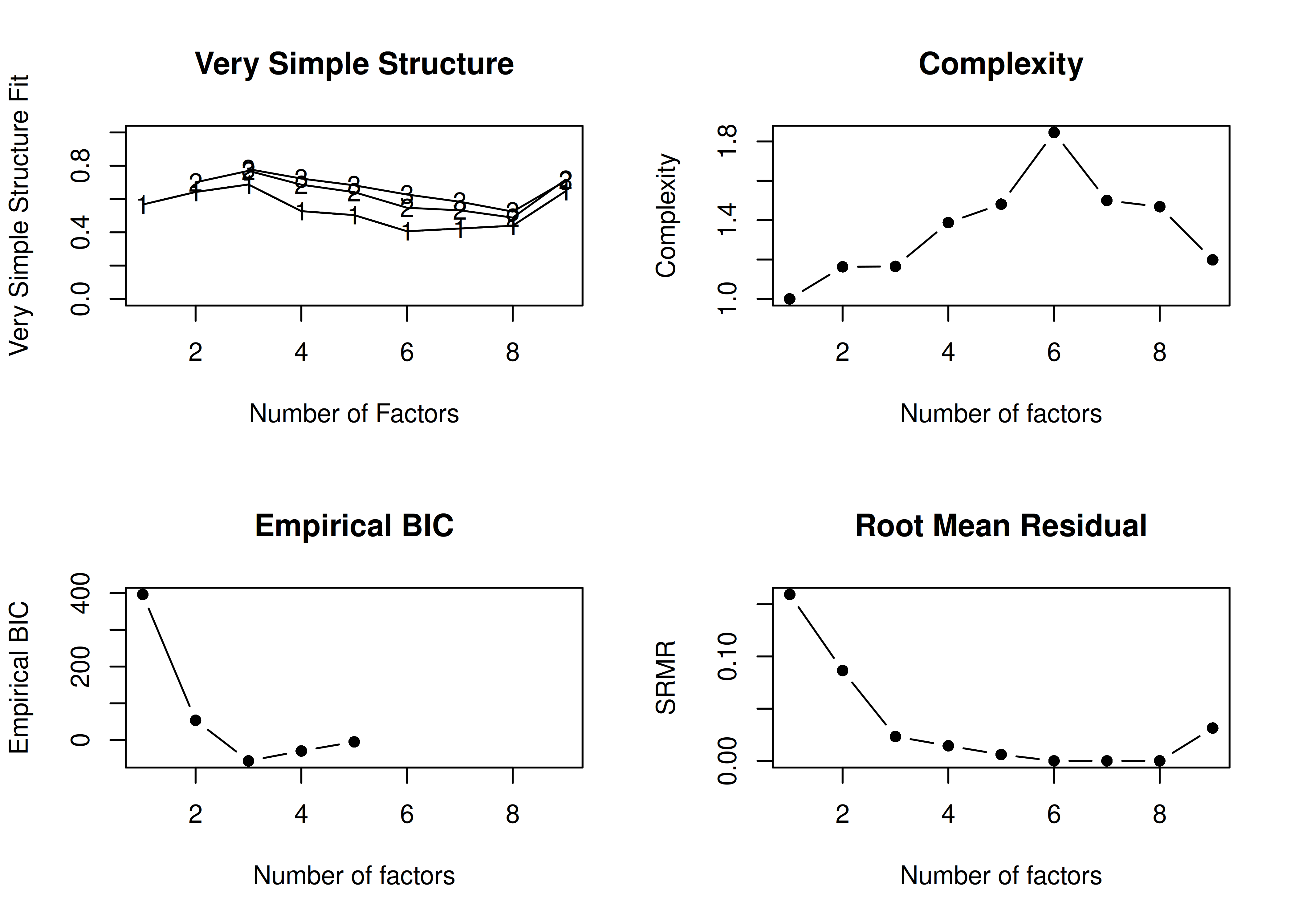

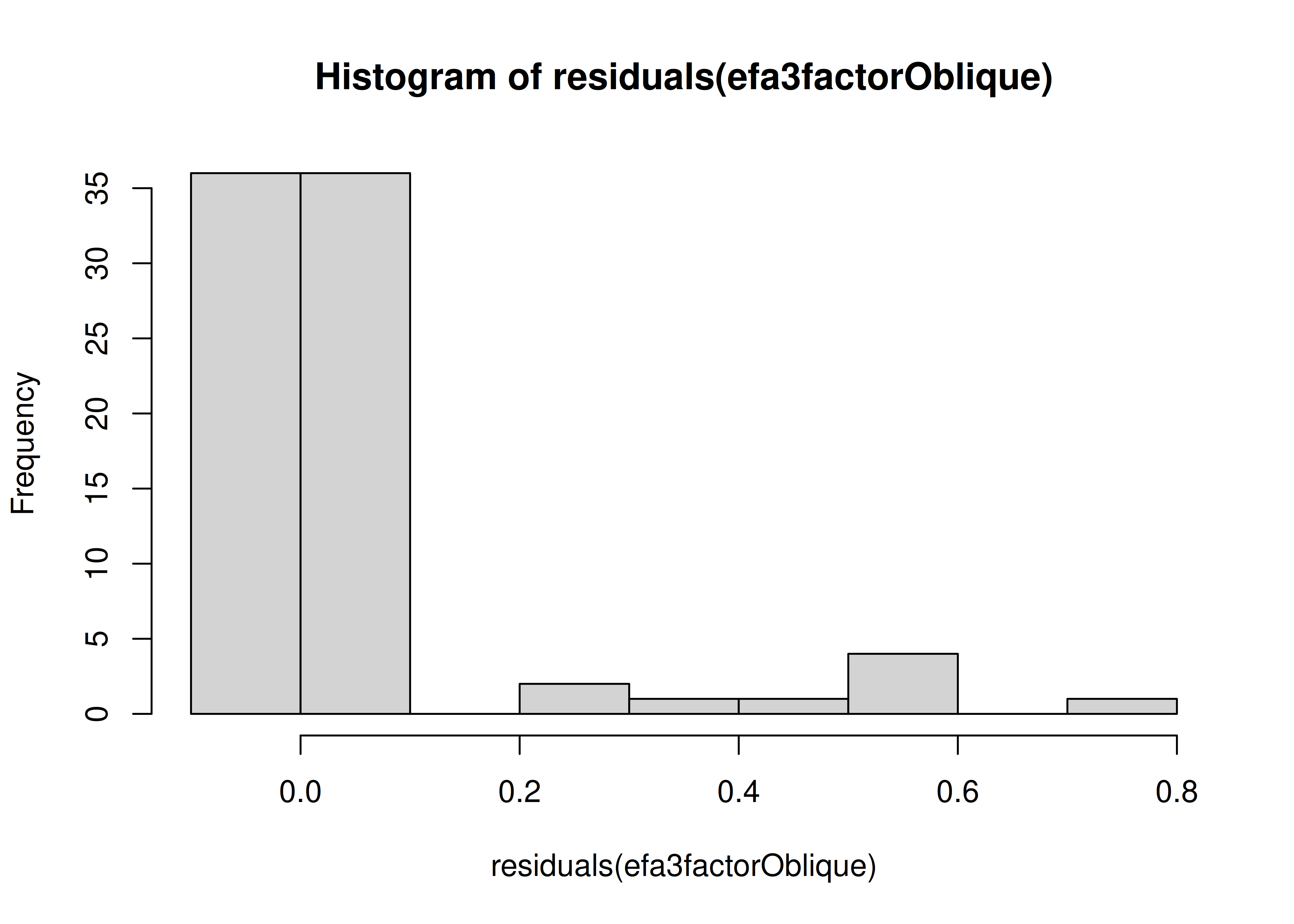

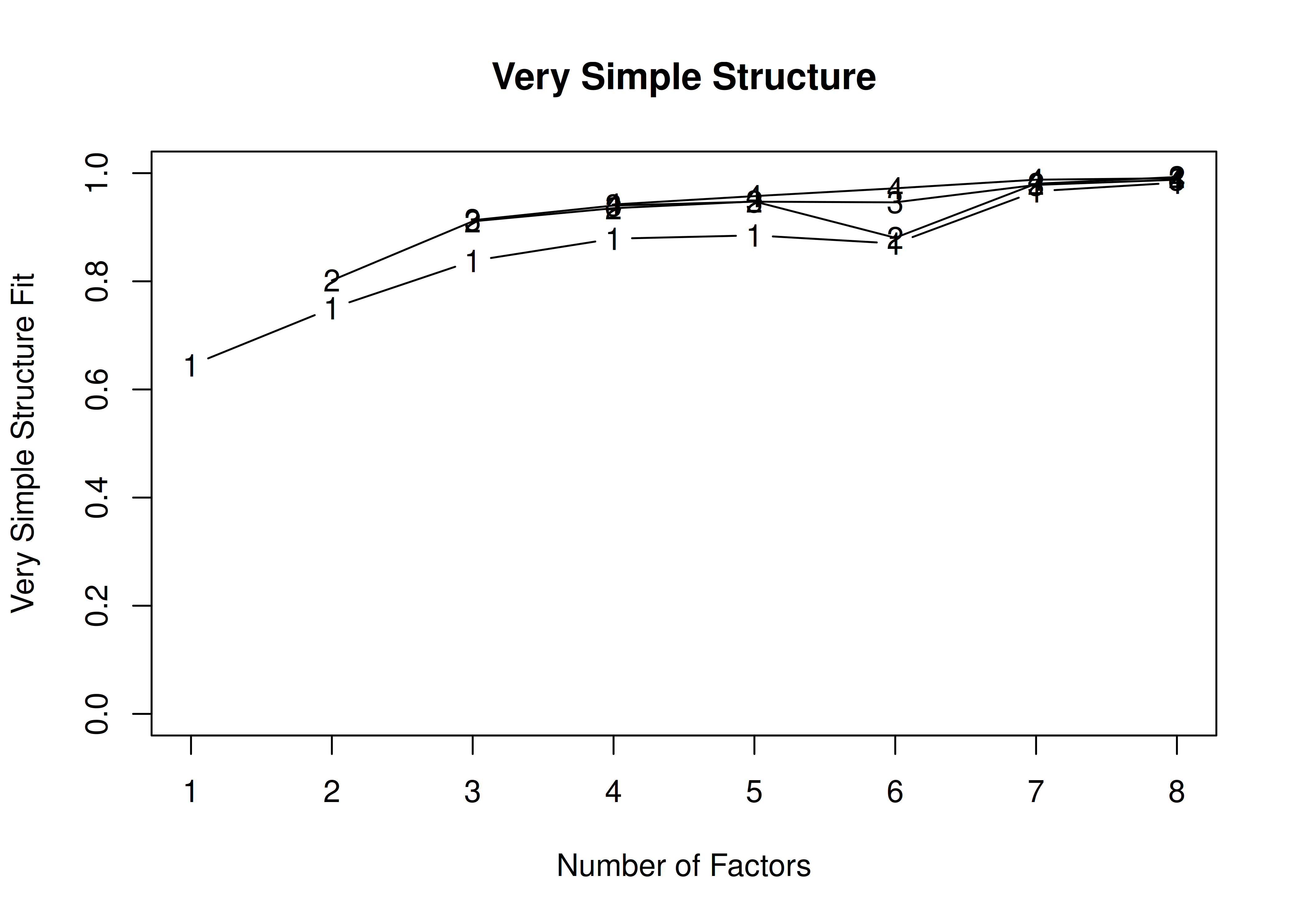

9 2.1e+01 3.1e-02 NA NA14.4.1.1.2.2 Oblique (Oblimin) rotation

In the example with oblique rotation below, the VSS criterion is highest with 3 factors. A three-factor solution is supported by the lowest BIC.

A VSS plot is in Figure 14.32.

Figure 14.32: Very Simple Structure Plot With Oblique Rotation in Exploratory Factor Analysis.

Very Simple Structure

Call: vss(x = HolzingerSwineford1939[, vars], rotate = "oblimin", fm = "ml")

VSS complexity 1 achieves a maximimum of 0.69 with 3 factors

VSS complexity 2 achieves a maximimum of 0.77 with 3 factors

The Velicer MAP achieves a minimum of 0.07 with 2 factors

BIC achieves a minimum of -32.61 with 3 factors

Sample Size adjusted BIC achieves a minimum of -5.65 with 4 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.57 0.00 0.078 27 3.2e+02 2.3e-52 7.1 0.57 0.191 168.4 254.05 1.0

2 0.64 0.70 0.067 19 1.4e+02 1.2e-20 4.9 0.70 0.146 32.5 92.75 1.2

3 0.69 0.77 0.071 12 3.6e+01 3.4e-04 3.6 0.78 0.081 -32.6 5.45 1.2

4 0.53 0.69 0.125 6 9.6e+00 1.4e-01 4.8 0.71 0.044 -24.7 -5.65 1.4

5 0.50 0.64 0.199 1 1.8e+00 1.8e-01 5.3 0.68 0.050 -3.9 -0.77 1.5

6 0.41 0.55 0.403 -3 2.6e-08 NA 6.1 0.63 NA NA NA 1.8

7 0.42 0.53 0.447 -6 0.0e+00 NA 6.8 0.59 NA NA NA 1.5

8 0.44 0.49 1.000 -8 0.0e+00 NA 7.5 0.55 NA NA NA 1.5

eChisq SRMR eCRMS eBIC

1 5.5e+02 1.6e-01 0.184 396.3

2 1.6e+02 8.7e-02 0.119 53.8

3 1.2e+01 2.3e-02 0.040 -56.7

4 4.5e+00 1.4e-02 0.035 -29.7

5 8.0e-01 6.1e-03 0.036 -4.9

6 1.3e-08 7.7e-07 NA NA

7 2.1e-13 3.1e-09 NA NA

8 2.1e-16 1.0e-10 NA NAMultiple VSS-related fit indices are in Figure 14.33.

Figure 14.33: Very Simple Structure-Related Indices With Oblique Rotation in Exploratory Factor Analysis.

Number of factors

Call: vss(x = x, n = n, rotate = rotate, diagonal = diagonal, fm = fm,

n.obs = n.obs, plot = FALSE, title = title, use = use, cor = cor)

VSS complexity 1 achieves a maximimum of 0.69 with 3 factors

VSS complexity 2 achieves a maximimum of 0.77 with 3 factors

The Velicer MAP achieves a minimum of 0.07 with 2 factors

Empirical BIC achieves a minimum of -56.69 with 3 factors

Sample Size adjusted BIC achieves a minimum of -5.65 with 4 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.57 0.00 0.078 27 3.2e+02 2.3e-52 7.1 0.57 0.191 168.4 254.05 1.0

2 0.64 0.70 0.067 19 1.4e+02 1.2e-20 4.9 0.70 0.146 32.5 92.75 1.2

3 0.69 0.77 0.071 12 3.6e+01 3.4e-04 3.6 0.78 0.081 -32.6 5.45 1.2

4 0.53 0.69 0.125 6 9.6e+00 1.4e-01 4.8 0.71 0.044 -24.7 -5.65 1.4

5 0.50 0.64 0.199 1 1.8e+00 1.8e-01 5.3 0.68 0.050 -3.9 -0.77 1.5

6 0.41 0.55 0.403 -3 2.6e-08 NA 6.1 0.63 NA NA NA 1.8

7 0.42 0.53 0.447 -6 0.0e+00 NA 6.8 0.59 NA NA NA 1.5

8 0.44 0.49 1.000 -8 0.0e+00 NA 7.5 0.55 NA NA NA 1.5

9 0.65 0.72 NA -9 4.4e+01 NA 4.5 0.73 NA NA NA 1.2

eChisq SRMR eCRMS eBIC

1 5.5e+02 1.6e-01 0.184 396.3

2 1.6e+02 8.7e-02 0.119 53.8

3 1.2e+01 2.3e-02 0.040 -56.7

4 4.5e+00 1.4e-02 0.035 -29.7

5 8.0e-01 6.1e-03 0.036 -4.9

6 1.3e-08 7.7e-07 NA NA

7 2.1e-13 3.1e-09 NA NA

8 2.1e-16 1.0e-10 NA NA

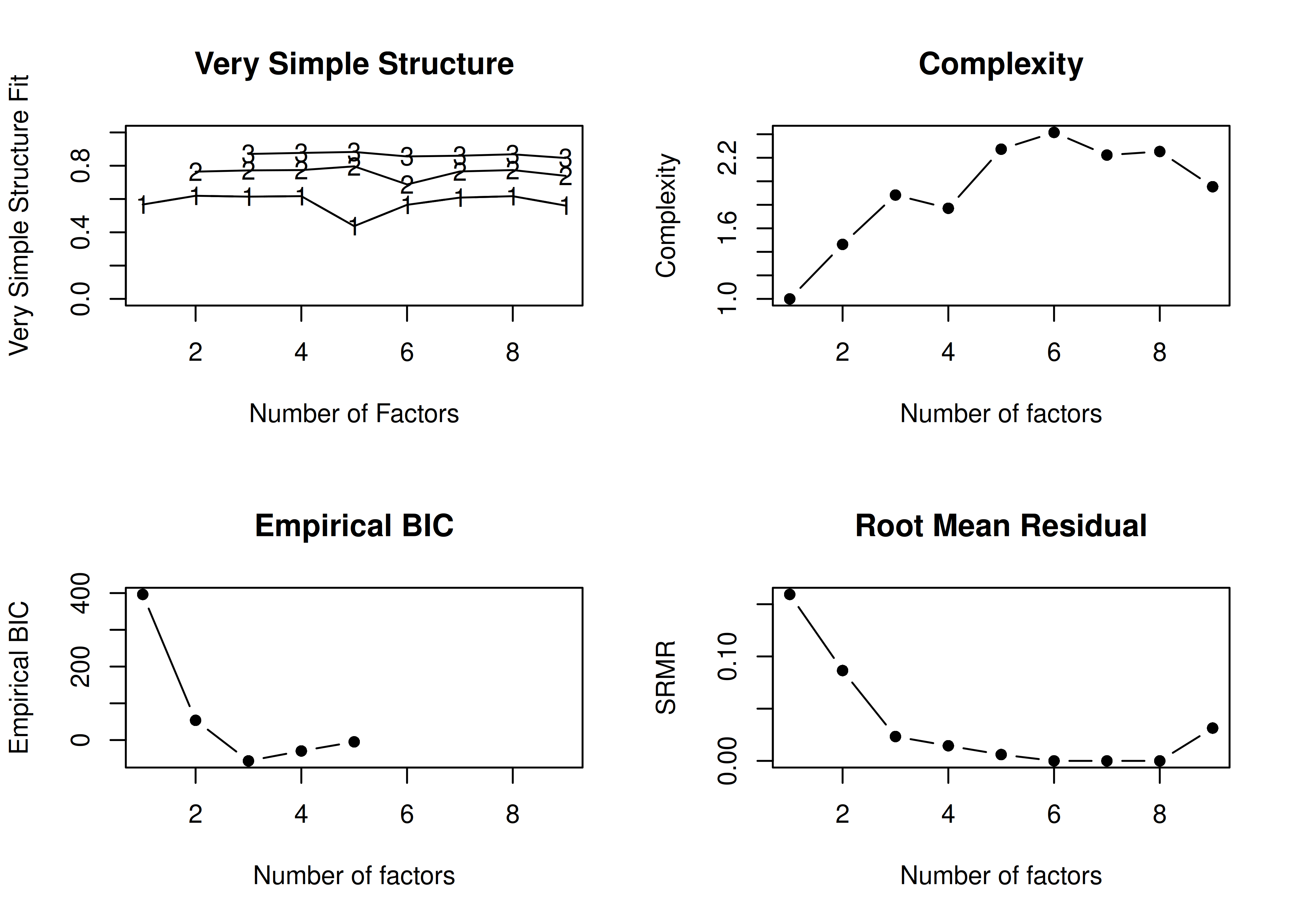

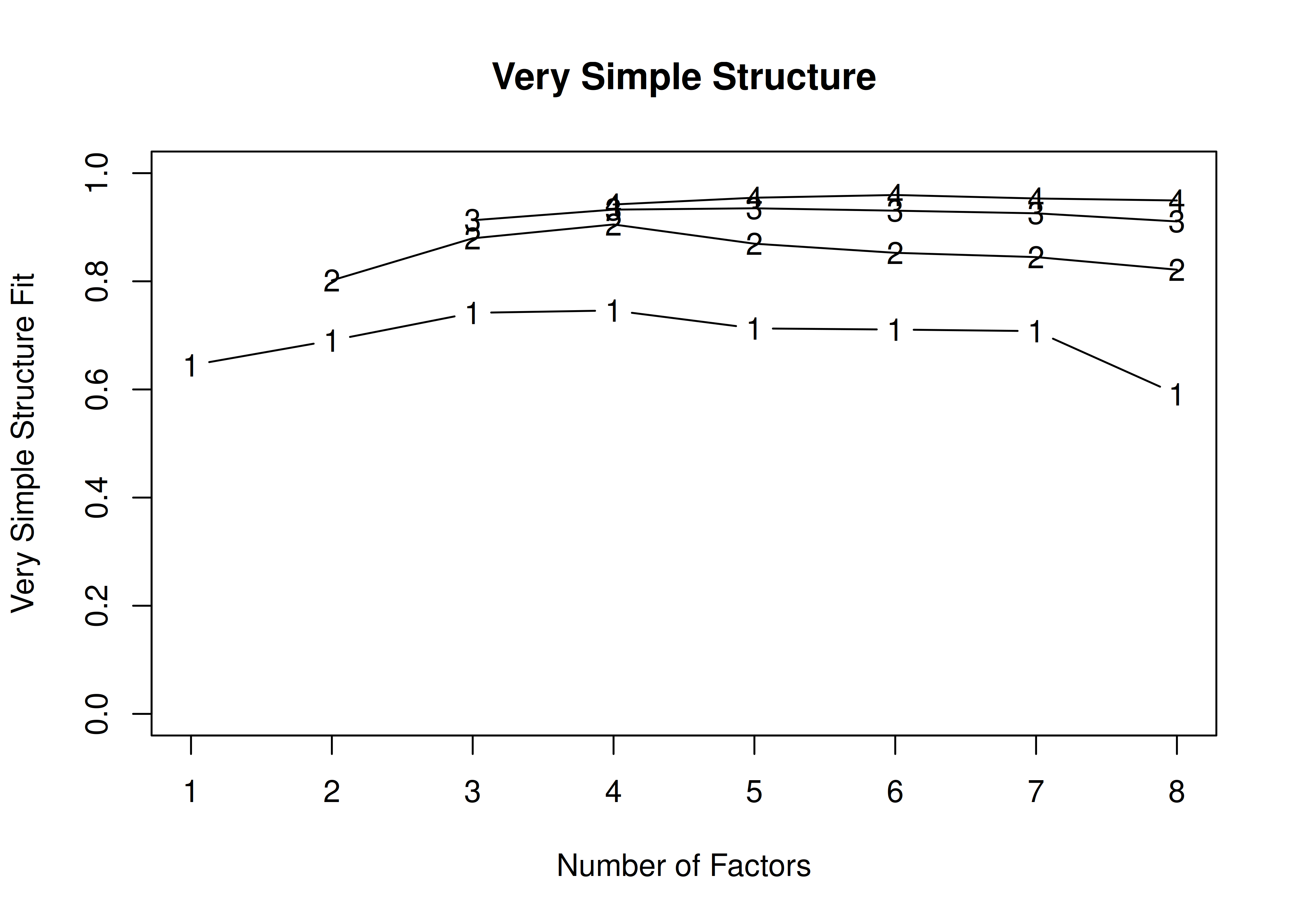

9 2.1e+01 3.1e-02 NA NA14.4.1.1.2.3 No rotation

In the example with no rotation below, the VSS criterion is highest with 3 or 4 factors. A three-factor solution is supported by the lowest BIC, whereas a four-factor solution is supported by the lowest SABIC.

A VSS plot is in Figure 14.34.

Figure 14.34: Very Simple Structure Plot With no Rotation in Exploratory Factor Analysis.

Number of factors

Call: vss(x = x, n = n, rotate = rotate, diagonal = diagonal, fm = fm,

n.obs = n.obs, plot = FALSE, title = title, use = use, cor = cor)

VSS complexity 1 achieves a maximimum of 0.62 with 2 factors

VSS complexity 2 achieves a maximimum of 0.8 with 5 factors

The Velicer MAP achieves a minimum of 0.07 with 2 factors

Empirical BIC achieves a minimum of -56.69 with 3 factors

Sample Size adjusted BIC achieves a minimum of -5.65 with 4 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.57 0.00 0.078 27 3.2e+02 2.3e-52 7.1 0.57 0.191 168.4 254.05 1.0

2 0.62 0.76 0.067 19 1.4e+02 1.2e-20 3.9 0.76 0.146 32.5 92.75 1.5

3 0.61 0.77 0.071 12 3.6e+01 3.4e-04 2.1 0.87 0.081 -32.6 5.45 1.9

4 0.62 0.77 0.125 6 9.6e+00 1.4e-01 2.0 0.88 0.044 -24.7 -5.65 1.8

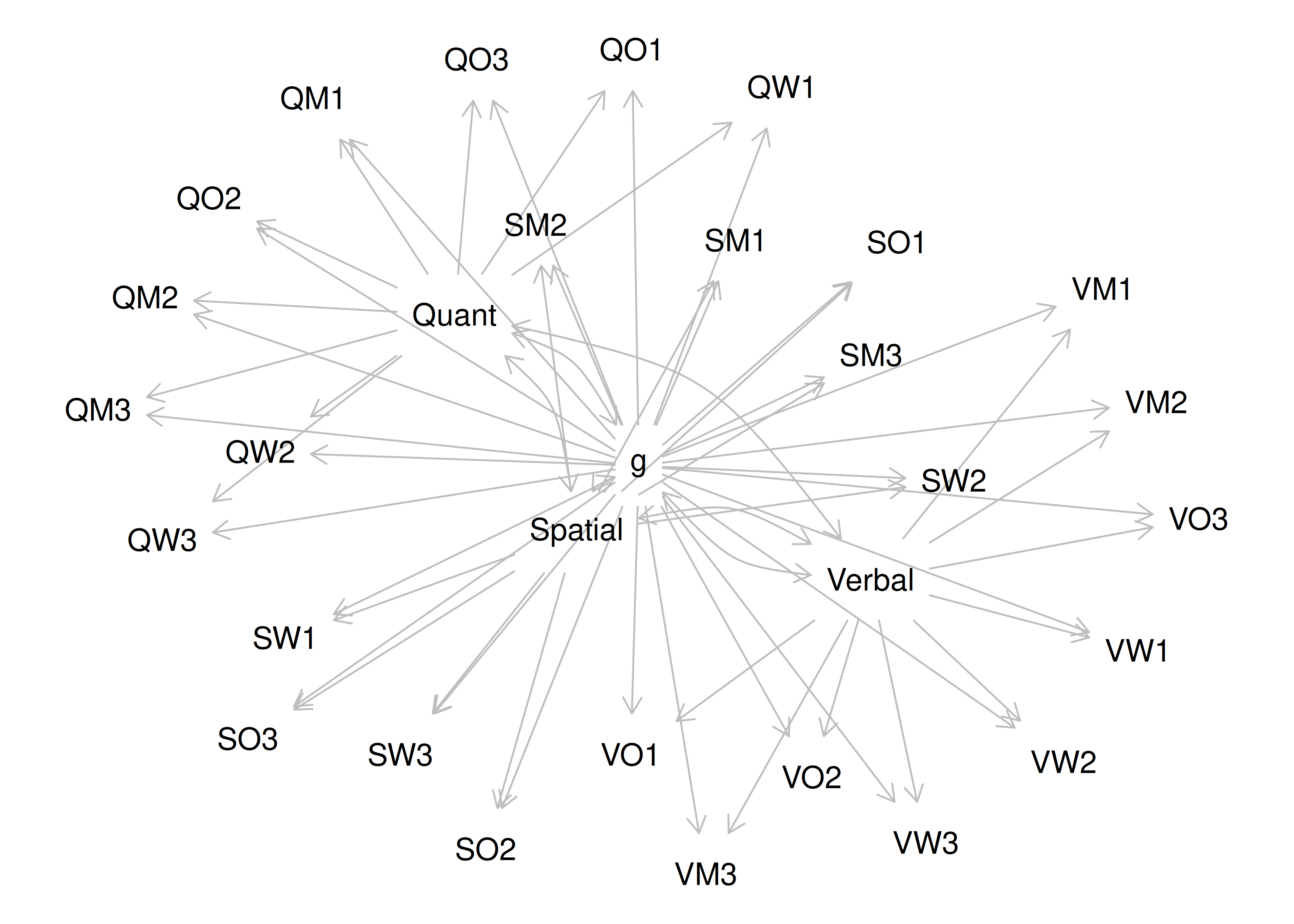

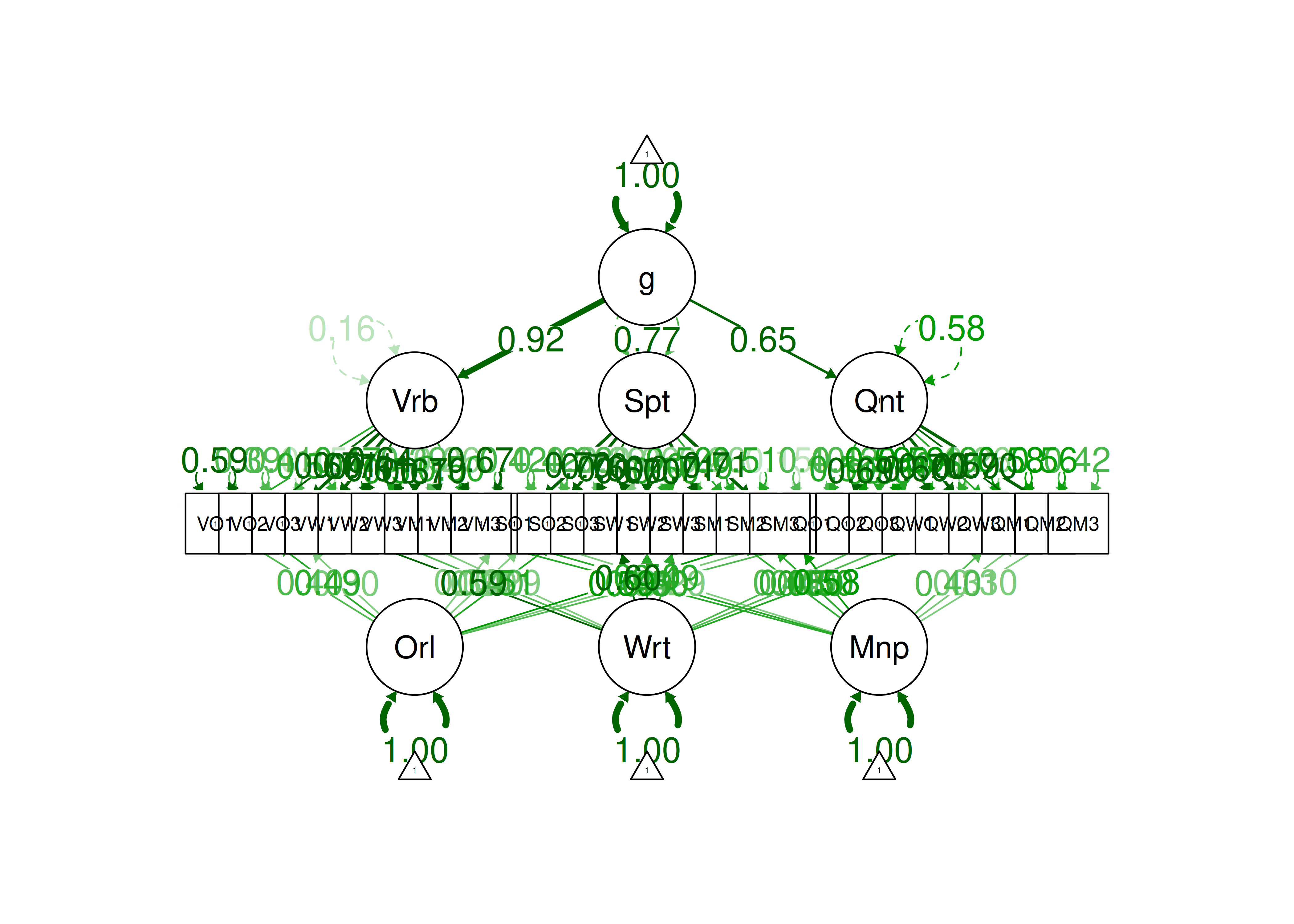

5 0.44 0.80 0.199 1 1.8e+00 1.8e-01 1.6 0.90 0.050 -3.9 -0.77 2.3