I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

You can leave a comment at the bottom of the page/chapter, or open an issue or submit a pull request on GitHub: https://github.com/isaactpetersen/Fantasy-Football-Analytics-Textbook

Alternatively, you can leave an annotation using hypothes.is.


14 Heuristics and Cognitive Biases in Prediction

This chapter provides an overview of many heuristics, cognitive biases, and fallacies that people engage in when making judgments and predictions in fantasy football.

14.1 Getting Started

14.1.1 Load Packages

14.2 Overview

When considering judgment and prediction, it is important to consider psychological concepts, including heuristics and cognitive biases. In the modern world of big data, research and society need people who know how to make sense of the information around us. Given humans’ cognitive biases, it is valuable to leverage approaches that are more objective than relying on our “gut” and intuition. Statistical approaches can be a more objective way to identify systematic patterns.

Statistical analysis, and science more generally, is a process for the pursuit of knowledge. An epistemology is an approach to knowledge. Science is perhaps the best approach (epistemology) that society has to approximate truth. Unlike other approaches to knowledge, science relies on empirical evidence and does not give undue weight to anecdotal evidence, intuition, tradition, or authority.

Per Petersen (2025), here are the characteristics of science that distinguish it from pseudoscience:

- Risky hypotheses are posed that are falsifiable. The hypotheses can be shown to be wrong.

- Findings can be replicated independently by different research groups and different methods. Evidence converges across studies and methods.

- Potential alternative explanations for findings are specified and examined empirically (with data).

- Steps are taken to guard against the undue influence of personal beliefs and biases.

- The strength of claims reflects the strength of evidence. Findings and the ability to make judgments or predictions are not overstated. For instance, it is important to present the degree of uncertainty from assessments with error bars or confidence intervals.

- Scientifically supported measurement strategies are used based on their psychometrics, including reliability and validity.

By contrast, according to Lilienfeld et al. (2015), some of the frequent features of pseudoscience include:

- An overuse of ad hoc hypotheses designed to immunize claims from falsification

- Absence of self-correction

- Evasion of peer review

- Emphasis on confirmation rather than refutation

- Reversed burden of proof

- Absence of connectivity

- Overreliance on testimonial and anecdotal evidence

- Use of obscurantist language

- Absence of boundary conditions

- The mantra of holism

Nevertheless, statistical analysis is not purely objective and is not a panacea. Science is a human enterprise: it is performed by humans, each of whom has their own biases. For instance, cognitive biases can influence how people interpret statistics. As a result, the findings from any given study may be incorrect. Thus, it would be imprudent to make decisions based solely on the results of one study. That is why we wait for findings to be independently replicated by different groups of researchers using different methods.

If a research team publishes flashy, new, and exciting findings, other researchers have an incentive to disprove them. Thus, we have more confidence in findings that stand up to scrutiny from independent groups of researchers. We also draw upon meta-analyses (studies of many studies) to summarize the results across many studies, rather than relying on the findings of any single study, which may not replicate. In this way, we can identify which findings are robust and most likely true versus which findings fail to replicate. Thus, despite its flaws like any other human enterprise, science is a self-correcting process in which the long arc bends toward truth.

In our everyday lives, humans are presented with overwhelming amounts of information. Because human minds cannot process every piece of information equally, we tend to take mental shortcuts, called heuristics. These mental shortcuts can be helpful. They reduce our mental load and can help us make quick judgments to stay alive or make complex decisions in the face of uncertainty. For instance, snap judgments can help us rapidly differentiate between a friend and a foe, for survival. However, these mental shortcuts can also lead us astray, causing us to make systematic errors in our judgments and predictions. For instance, heuristics can lead us to perceive patterns in randomness. Cognitive biases are systematic errors in thinking, and they can result from heuristics. Fallacies are forms of flawed reasoning invoked in an argument. Fallacies are characteristics of an argument, whereas cognitive biases are characteristics of the reasoning agent. Thus, fallacies can result from heuristics and cognitive biases.

One might wonder why fallacies and cognitive biases exist, given that errors in thinking and reasoning would seem to be disadvantageous. However, fallacies and cognitive biases reflect the tradeoffs between speed and accuracy in thinking and, in some cases, may also serve to help people feel better about themselves.

Below, we provide examples of heuristics, cognitive biases, and fallacies. These are not exhaustive lists; they are examples of key heuristics, cognitive biases, and fallacies that are relevant to fantasy football.

14.3 Examples of Heuristics

As described above, heuristics are mental shortcuts that people use to handle the overwhelming amount of information to process. Important heuristics used in judgment and prediction in the face of uncertainty include (Critcher & Rosenzweig, 2014; Kahneman, 2011; Tversky & Kahneman, 1974):

- availability heuristic

- representativeness heuristic

- anchoring and adjustment heuristic

- affect heuristic

- performance heuristic

- “What You See Is All There Is” (WYSIATI) heuristic

Use of heuristics has been observed among fantasy sports managers (B. Smith et al., 2006).

14.3.1 Availability Heuristic

The availability heuristic refers to the tendency for a person to make judgments or predictions about the frequency or probability of something based on how readily instances can be brought to mind. For instance, when making fantasy predictions about a player, more recent big performance games (or games that receive more news coverage) may more easily come to mind compared to lower-scoring games and games that occurred longer ago. Thus, a manager may be more inclined to pick players to start who had more recent, stronger performances rather than players who have higher long-term averages.
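To make the contrast concrete, here is a minimal Python sketch with hypothetical weekly scores for two players (the players and numbers are invented for illustration). Judging by the most recent game, which is the most readily "available" information, favors Player A, whereas the season-long average favors Player B.

```python
from statistics import mean

# Hypothetical weekly fantasy points (most recent week last); illustrative only
player_a = [8, 7, 9, 6, 8, 7, 25]        # one big recent game
player_b = [14, 12, 15, 13, 11, 14, 10]  # consistently solid, quiet last week

def recent_mean(points, n=1):
    """Mean of the last n games: roughly what comes to mind most easily."""
    return mean(points[-n:])

for name, pts in [("Player A", player_a), ("Player B", player_b)]:
    print(f"{name}: last game = {recent_mean(pts)}, season mean = {mean(pts):.1f}")
```

Varying `n` shows how quickly the "recent" view converges toward the long-term average as more games are considered.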

14.3.2 Representativeness Heuristic

The representativeness heuristic refers to the tendency for a person’s judgments or predictions about individuals to be based on how similar the individual is to (i.e., how closely the individual resembles) the person’s existing mental prototypes. In this way, the representativeness heuristic involves stereotyping, which is not a statistically optimal approach to prediction (Kahneman, 2011). Stereotypes are statements about groups or categories that are at least tentatively accepted as facts about every member of that group or category (Kahneman, 2011). That is, a (perhaps common) characteristic of a group is attributed to each member of that group. In addition, the representativeness heuristic does not adequately consider the quality of the evidence (Kahneman, 2011). It is thus important to question the predictive accuracy of the evidence for the particular prediction question.

Consider the following example of the representativeness heuristic. When coming out of college, Tight End Kyle Pitts drew comparisons to LeBron James, being called the “LeBron James” of Tight Ends [Nivison (2021); archived at https://perma.cc/JQB5-XPVL]. The idea that his athletic profile resembled the prototype of LeBron James may have led fantasy managers to draft him too highly in his first seasons.

Figure 14.1 is a video from Get Up ESPN (2021) (archived at https://perma.cc/JW8E-KV2C) of Kyle Pitts drawing comparisons to the LeBron James of Tight Ends:

The representativeness heuristic has been observed in gambling markets for predicting team wins in the National Football League (NFL) (Woodland & Woodland, 2015) and in decision making in fantasy soccer (Kotrba, 2020).

14.3.3 Anchoring and Adjustment Heuristic

The anchoring and adjustment heuristic refers to the tendency for a person’s judgments or predictions to be made with a reference point (an anchor) as a starting point from which they adjust their estimates upward or downward. The anchor acts as a suggestion to the person, even when they are not paying attention to it, producing a priming effect that evokes information compatible with the suggestion (i.e., a suggestive influence). We are more suggestible than we would like to think. The anchor is often inaccurate and given too much weight in the person’s calculation, and too little adjustment is made away from the anchor. As an example, insufficient adjustment from an anchor may lead someone to drive too fast after exiting a highway (Kahneman, 2011). The anchoring effect occurs even when the anchor (i.e., the suggestion) is a random number that is completely uninformative of the correct answer (Kahneman, 2011)!

Applied to fantasy football, consider a manager who is trying to predict how many fantasy points a top Running Back will score. The player scored 300 fantasy points last season, but the team added a stronger backup Running Back and replaced its Offensive Coordinator with one who runs a more pass-heavy offense. The manager may use 300 fantasy points (the player’s performance last season) as an anchor and adjust downward by 15 points to account for the offseason changes. However, this downward adjustment may be insufficient to account not only for the offseason changes but also for potential regression effects. Regression effects are discussed further in Section 14.5.2. As another example, the first number or players named in a negotiation can serve as an anchor.
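One way to see why a 15-point adjustment may be too small is to compare it with a simple regression-toward-the-mean estimate, in which last season's total is shrunk toward the average of comparable players in proportion to the year-to-year correlation. The sketch below is illustrative only: the group mean of 180 points and the correlation of 0.6 are hypothetical values, not estimates from real data, and the formula assumes equal variance across seasons. It also ignores the offseason changes, which would push the estimate down further.

```python
def anchored_estimate(anchor, adjustment):
    """Start at the anchor and adjust (often insufficiently)."""
    return anchor + adjustment

def regressed_estimate(last_season, group_mean, r):
    """Shrink last season's total toward the group mean by the year-to-year
    correlation r (assumes equal variance in both seasons)."""
    return group_mean + r * (last_season - group_mean)

last_season = 300                                   # from the example in the text
anchor_guess = anchored_estimate(last_season, -15)  # 285

# Hypothetical values, NOT estimates from real data:
group_mean = 180  # assumed mean for comparable Running Backs
r = 0.6           # assumed year-to-year correlation in fantasy points

baseline = regressed_estimate(last_season, group_mean, r)
print(f"anchored: {anchor_guess}, regression-based baseline: {baseline:.0f}")
```

With these assumed inputs, the regression-based baseline sits well below the anchored estimate before any offseason changes are even considered.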

To counter the effect of the anchoring heuristic, Kahneman (2011) suggests deliberately thinking the opposite of the anchor. And if a person you are negotiating with makes an outrageous initial offer, instead of making an equally outrageous counter-offer, Kahneman (2011) suggests storming out or threatening to leave, and indicating that you are unwilling to negotiate with that number on the table. This technique could be helpful during trade negotiations for players in fantasy football. Other techniques include focusing not on the anchor but on the minimal offer that the opponent would accept, or on the costs to the opponent of failing to reach an agreement (Kahneman, 2011).

14.3.4 Affect Heuristic

The affect heuristic refers to the tendency for a person to make judgments or predictions based on their emotions and feelings—such as whether they like or dislike something—with little deliberation or reasoning. That is, the affect heuristic involves letting one’s likes and dislikes determine one’s beliefs about the world (Kahneman, 2011). For instance, if a manager’s favorite team is the Dallas Cowboys, they may hold a distaste for players on teams that are rivals of the Cowboys, such as the Philadelphia Eagles. The affect heuristic may lead them to believe that Eagles players are overrated and to avoid drafting Eagles players even though some Eagles players may perform well.

14.3.5 Performance Heuristic

The performance heuristic refers to the tendency for people to predict their improvement in a task based on their previous performance (Critcher & Rosenzweig, 2014). Although past performance tends to be correlated with future performance, performance tends to regress toward the mean, as described in Section 14.5.2. Thus, people who perform strongly in their first few games may in many cases perform worse in subsequent games. Moreover, initial performance does not necessarily indicate the extent of one’s future improvement. For instance, if a manager has a successful first season, the performance heuristic may lead them to overestimate how much they will improve in skill or performance in the future.

14.3.6 What You See Is All There Is (WYSIATI) Heuristic

The “What You See Is All There Is” (WYSIATI) heuristic refers to the tendency for a person to make a decision or judgment based solely on the information that is immediately available to them (i.e., “right in front of them”), without considering the possibility that there may be additional important information that they do not have (Enke, 2020). The WYSIATI heuristic involves jumping to conclusions on the basis of limited information (Kahneman, 2011). For instance, a manager might start a player based on the fact that the player scored lots of points in their most recent game. However, the manager may not consider other important factors that they did not know about, such as the fact that the player filled in for an injured player, and will get fewer touches in the upcoming game now that the original starter is back to full health.

14.4 Examples of Cognitive Biases

As described above, cognitive biases are systematic errors in thinking. They lead us to be less accurate in our judgments and predictions. Inaccuracy is not always bad for the individual: several cognitive biases may help people feel better about themselves (R. M. Miller, 2013), including confirmation bias, overconfidence bias, optimism bias, and self-serving bias; others may help prevent feelings of regret (e.g., omission bias) or spare people from having to admit failure (e.g., commitment bias). In fantasy football, however, cognitive biases and inaccurate judgments can lead to worse performance. Cognitive biases are often due to the use of heuristics.

Miller (2013) describes cognitive biases in fantasy sports. Examples of cognitive biases relevant to fantasy football that result from one or more heuristics include:

- overconfidence bias

- optimism bias

- confirmation bias

- in-group bias

- hindsight bias

- outcome bias

- self-serving bias

- omission bias

- loss aversion bias

- risk aversion bias

- primacy effect bias

- recency effect bias

- framing effect bias

- endowment effect bias

- bandwagon effect bias

- Dunning–Kruger effect bias

In general, when faced with a situation in which bias is likely, Kahneman (2011) advises slowing down, being thoughtful and deliberate, questioning your intuitions, and imposing orderly procedures, such as checklists, reference-class forecasting, and a “premortem”. A premortem involves imagining, before you make the decision, that the decision has turned out terribly, and then considering what most likely went wrong. Reference-class forecasting involves examining a reference class of similar projects to identify the most likely range of outcomes for the project.
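Reference-class forecasting can be sketched in a few lines: instead of reasoning from one player's story, look at what happened to a group of similar players and report a typical value and a plausible range. The reference class below (next-season points for Running Backs who scored 280 or more points the prior season) and its values are hypothetical, chosen only to illustrate the procedure.

```python
from statistics import median, quantiles

# Hypothetical reference class: next-season fantasy points for Running Backs
# who scored 280+ points the prior season (invented numbers for illustration)
reference_class = [310, 195, 240, 275, 160, 230, 290, 205, 250, 185, 265, 220]

deciles = quantiles(reference_class, n=10)  # 9 cut points
low, high = deciles[0], deciles[-1]         # roughly the 10th and 90th percentiles

print(f"median = {median(reference_class)}, "
      f"~80% range = {low:.0f} to {high:.0f}")
```

The resulting range, rather than a single point estimate, is the forecast; case-specific information (e.g., offseason changes) is then used to adjust from that baseline.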

14.4.1 Overconfidence Bias

In general, people tend to be overconfident in their judgments and predictions. Overconfidence bias is the tendency for a person to have greater confidence in their abilities (including judgments and predictions) than is objectively warranted. There are three general ways that overconfidence has been identified (Moore & Healy, 2008):

- overestimation of one’s actual performance

- overplacement of one’s performance relative to others

- overprecision in one’s beliefs/judgments/predictions

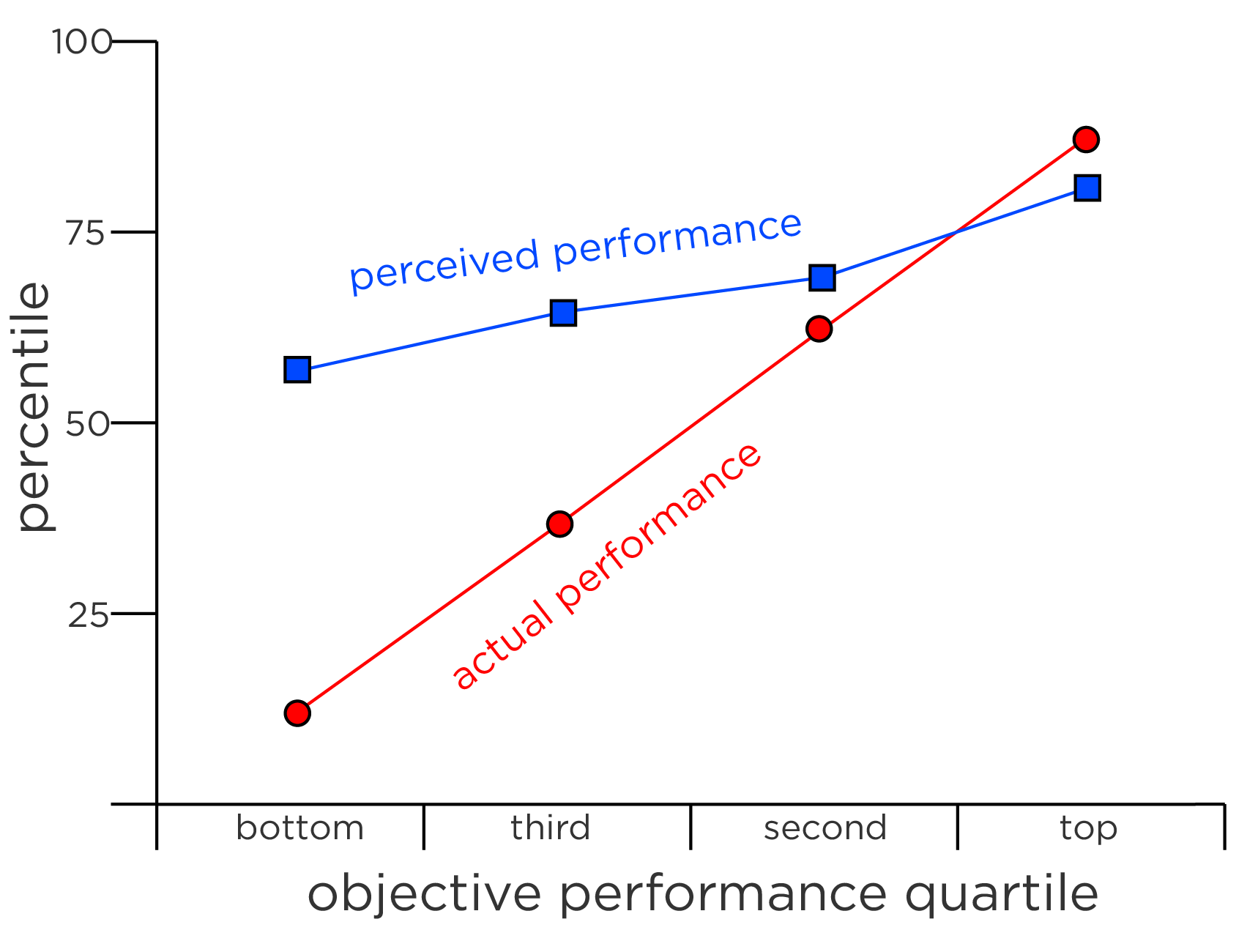

Overestimation involves believing that one will perform better than one actually performs. It thus reflects a greater mean confidence than overall accuracy (Kahneman & Tversky, 1996). In general, people tend to show excessive confidence in their beliefs; for instance, when people express 90% confidence about their belief, they may be correct only about 75% of the time (Kahneman & Tversky, 1996). As an example of overconfidence in football, early picks in the NFL draft tend to be considerably overvalued because teams believe their predictions will be more accurate than they actually are (Massey & Thaler, 2013). The probability that a player is better than the next player chosen in the draft at the same position is ~52%, or basically a coin flip (Massey & Thaler, 2013). As noted by Massey & Thaler (2013) (pp. 1493–1494), “The task of picking players…is an extremely difficult one…decision makers often know less than they think they know…The problem is not that future performance is difficult to predict, but that decision makers do not appreciate how difficult it is.” This suggests that a) the presence of high stakes for the prediction does not eliminate overconfidence, and that b) teams should “trade down” to acquire more, later picks if they possess very early picks (Massey & Thaler, 2013). Overestimation can be identified with a calibration plot of the predicted performance versus actual performance, where the person’s predicted performance is systematically higher (in at least some cases) than their actual performance. Overestimation corresponds to the “overprediction” form of miscalibration.
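A calibration plot shows overestimation visually; a simple numeric summary is the average of predicted minus actual performance, where a positive value means predictions were systematically too high. The sketch below uses hypothetical weekly predictions a manager made for their own team's score, paired with the scores that actually occurred.

```python
from statistics import mean

# Hypothetical predicted vs. actual weekly team scores (illustrative numbers)
predicted = [130, 125, 140, 135, 120, 145, 128, 138]
actual = [112, 130, 118, 121, 109, 127, 115, 124]

errors = [p - a for p, a in zip(predicted, actual)]
mean_bias = mean(errors)  # > 0 means predictions were systematically too high
share_over = sum(e > 0 for e in errors) / len(errors)

print(f"mean bias = {mean_bias:+.1f} points; "
      f"over-predicted in {share_over:.0%} of weeks")
```

A single mean bias captures only the "overprediction" form of miscalibration; plotting predicted against actual values can reveal whether the bias is concentrated in some ranges.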

Overplacement involves believing that one is better than others or will perform better than others, even when one is not. For instance, it is a common finding that more than half of people believe they are “above average” (i.e., above the median), even though it is statistically impossible for more than half of people to be above the median. This calls to mind the fictitious Lake Wobegon in the radio show A Prairie Home Companion, “where all the women are strong, all the men are good-looking, and all the children are above average.” As an example, 90% of drivers believe they are better than average (Kahneman, 2011).

Overprecision involves expressing excessive certainty regarding the accuracy of one’s beliefs/judgments/predictions. For instance, if it rains only 30% of the time on the days a meteorologist says there is an 80% chance of rain, the meteorologist’s predictions are overprecise. Likewise, if it rains 30% of the time on the days the forecast says there is a 10% chance of rain, the predictions are also overprecise, because the forecaster is expressing stronger confidence than is warranted that it will not rain. Overprecision can be identified with a calibration plot of the predicted probabilities versus the actual probabilities. Overprecision corresponds to the “overextremity” form of miscalibration.

With respect to fantasy football, projections of players’ fantasy points tend to be overprecise, as described in Section 17.12. However, predictions about how well oneself will do in a fantasy football league may involve overestimation and overplacement.

Overestimation and overprecision are studied in various ways. Typically, people are asked about (a) whether an event will occur or (b) the likelihood that the event will occur, across many events. For the former (approach “a”), people may be asked to make a dichotomous judgment or prediction, by responding to the question: e.g., “Will it rain tomorrow? [YES/NO]”. They will then rate their confidence (as a percentage) in their answer (0–100%). They would make each of these two ratings for each of many events. Then, we can evaluate, for a given respondent, the degree to which the probabilistic estimate of an event reflects the true underlying probability of the event. For instance, for a given respondent (and for respondents in general), for the events when the respondent says they are 80% confident an event (e.g., rain) will occur, does the event actually occur around 80% of the time? For the latter (approach “b”), people may indicate the likelihood that the event will occur, by responding to the question: “How likely is it that it will rain tomorrow? (0–100%)”. Then, we can evaluate, for instance, for the events when the respondent says that an event (e.g., rain) is 80% likely to occur, does the event actually occur around 80% of the time?
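The evaluation described for approach "b" can be sketched as follows: group forecasts by the stated probability, then compute how often the event actually occurred within each group. The records below are hypothetical and mirror the rain example above (stated 80% but observed 40%, stated 10% but observed 40%, which is the overextremity pattern). With real data, stated probabilities would usually be binned (e.g., to the nearest 10%) before grouping; this sketch assumes they are already exact bin values.

```python
from collections import defaultdict

# Hypothetical records: (stated probability, did the event occur?)
forecasts = [
    (0.8, True), (0.8, False), (0.8, True), (0.8, False), (0.8, False),
    (0.5, True), (0.5, False), (0.5, True), (0.5, False),
    (0.1, False), (0.1, True), (0.1, False), (0.1, False), (0.1, True),
]

def calibration_table(records):
    """Group forecasts by stated probability and return the observed
    frequency of the event within each group."""
    groups = defaultdict(list)
    for stated, occurred in records:
        groups[stated].append(occurred)
    return {stated: sum(hits) / len(hits) for stated, hits in sorted(groups.items())}

for stated, observed in calibration_table(forecasts).items():
    print(f"said {stated:.0%} -> happened {observed:.0%}")
```

Plotting observed against stated probabilities gives the calibration plot; a well-calibrated forecaster's points fall near the diagonal.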

The confidence that people tend to have in their predictions depends less on the quantity and quality of the evidence for their predictions and more on the quality of the narrative they can tell about the (little) information they have immediate access to (Kahneman, 2011). For instance, people tend not to consider other relevant, important factors that they do not have immediate access to, consistent with the WYSIATI heuristic. Instead, people tend to focus on the coherence and plausibility of the story, which differs from its probability (Kahneman, 2011). That is, one’s confidence is not necessarily related to the likelihood that the judgment is correct; it often reflects the “illusion of validity” (Kahneman, 2011). The illusion of validity is the unwarranted confidence that arises from a perceived good fit between the predicted outcome and the input information (Tversky & Kahneman, 1974). As people gain more knowledge in a domain, they also tend to become unrealistically overconfident in their ability to make accurate judgments and predictions (Kahneman, 2011). In general, people tend to focus on what they know and to neglect what they do not know, leading to overconfidence in their beliefs (Kahneman, 2011). Moreover, overconfidence tends to be exacerbated by information: the more information people have, the more overconfident they tend to be (Massey & Thaler, 2013). Thus, lots of scouting evidence may lead teams and fantasy managers to be overconfident in the draft.

A fantasy manager may be even more likely to exhibit overconfidence if they previously performed well or won their league, outcomes in which luck and random chance play an important role. Indeed, nearly half (~45%) of the variability in fantasy football performance is estimated to be due to luck [and around 55% to skill; Getty et al. (2018)]. A manager who won their league in the prior season may believe they will perform better than they actually will (overestimation), will perform better than average (overplacement), and may hold excessive confidence regarding the accuracy of their predictions about which players will perform well or poorly (overprecision). These various types of overconfidence may lead them to draft high-risk players based on gut feeling, neglecting statistical analysis and expert consensus.

People tend to focus on the role of skill and to neglect the role of luck when explaining the past and predicting the future, giving people an illusion of control (Kahneman, 2011). Players’ performance in fantasy football, and human behavior more generally, is complex and multiply determined (i.e., influenced by many factors). Despite the bluster of so-called experts who pretend to know more than they can know, no one can consistently and accurately predict how all players will perform. Remain humble in your predictions; do not be more confident than is warranted. If you approach the task of prediction with humility, you may be more flexible and more willing to consider other players whom you can draft for good value.

14.4.2 Optimism Bias

Optimism bias is the tendency for people to overestimate their likelihood of experiencing positive events and to underestimate their likelihood of experiencing negative events. For instance, a manager might overestimate the likelihood that their team will win the championship and underestimate the likelihood that they will lose a player to injury. In terms of its key consequences for decisions, optimism bias may be considered the most important cognitive bias (Kahneman, 2011). In general, people show an optimism bias. However, some people (“optimists”) are even more optimistic than others (Kahneman, 2011). The optimism bias, in particular a delusional optimism, can lead to overconfidence (Kahneman, 2011). Although optimism is associated with many advantages in terms of well-being, it can also lead to excessive risk-taking and to costly persistence (Kahneman, 2011).

14.4.3 Confirmation Bias

Confirmation bias is the tendency for people to search for, interpret, and remember information that confirms their beliefs, as opposed to information that might disconfirm their beliefs. The search for confirming evidence is called a “positive test strategy”; it runs counter to the advice of philosophers of science, who advise testing hypotheses by trying to refute them [i.e., by searching for disconfirming evidence; Kahneman (2011)]. As a result of confirmation bias, people are unlikely to change their minds about something they have a pre-existing belief about, because they tend to look only for information that supports their pre-existing beliefs. For instance, if you believe that a particular player is a sleeper and a strong breakout candidate, you may be more likely to pay attention to evidence that the player will break out and less likely to pay attention to evidence that the player may struggle. That is, people tend to look for confirmation that the players they want to draft (or start) are preferred by the experts (R. M. Miller, 2013). Confirmation bias may lead to “going with your gut” (i.e., making a decision based on your intuition).

As a budding empiricist, you should actively seek out information that challenges or disconfirms your beliefs—particularly from trustworthy, reputable sources—and work to incorporate the information into your beliefs. Do your best to go into observation, data analysis, and data interpretation with an open mind.

14.4.4 In-Group Bias

In-group bias is the tendency for people to prefer others who are members of their same (in-)group. For instance, a manager whose favorite team is the Dallas Cowboys might try to draft mostly Cowboys players, regardless of their historical performance.

14.4.5 Hindsight Bias

“Hindsight is 20/20.” – Idiom

Hindsight bias is the tendency to perceive that past events were more predictable than they were (i.e., the “I-knew-it-all-along” effect). Everything seems to make sense in hindsight (Kahneman, 2011). People tend to remember the successes of their predictions and forget the failures of their predictions. For instance, if a third-string Quarterback has a breakout game, a fantasy manager may claim that they “knew it all along” that the player was going to break out, despite not having picked up the player. That same manager may forget the many other predictions they had that did not come true.

14.4.6 Outcome Bias

Outcome bias is the tendency to evaluate the quality of a decision based on its eventual outcome, rather than on the quality of the decision given the information that was known at the time. For instance, consider a manager who starts a lower-ranked player over a higher-ranked player because the lower-ranked player has a more favorable matchup. Now, suppose the lower-ranked player scores fewer points than the higher-ranked player because the lower-ranked player got injured. If the manager concludes that their decision-making process for whom to start was flawed, and thus stops paying attention to matchups in subsequent decisions, this would reflect outcome bias. Likewise, if the manager makes a poor-quality decision but gets lucky, and attributes their success to a sound decision-making process, this would also reflect outcome bias. In general, people tend to blame decision makers for good decisions that turned out badly, and to give them too little credit for good decisions that turned out well [because the decisions appear obvious, but only after the fact; Kahneman (2011)]. Hindsight bias and outcome bias tend to lead to risk aversion (Kahneman, 2011).

Focus on the decision-making process when evaluating the quality of decisions, rather than the outcome (Tanney, 2021). Yes, also evaluate the outcomes of decisions, but do not give yourself too much credit for lucky decisions or too much blame for unlucky decisions (like injuries). Luck is going to happen. If you are making optimal decisions that maximize the expected value of returns (e.g., projected fantasy points), you are following a strong process. That said, one thing you can count on is that players get injured and have bye weeks. So, it is to your benefit to draft and compose your team to have some bench depth so you can handle injuries and bye weeks when they inevitably occur.
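A decision process that maximizes expected value can be sketched by treating each player's weekly outcome as a probability distribution and comparing probability-weighted averages. The two outcome distributions below are hypothetical (invented points and probabilities), loosely modeled on the matchup example above; the "good-matchup" player has the higher expected value even though he carries a 10% chance of scoring zero (e.g., an early injury). If that zero happens, the decision was still sound given what was known.

```python
# Hypothetical outcome distributions for two players this week:
# (fantasy points, probability). Numbers are invented for illustration.
player_high_rank = [(4, 0.3), (12, 0.5), (22, 0.2)]
player_good_matchup = [(0, 0.1), (13, 0.6), (20, 0.3)]

def expected_points(outcomes):
    """Probability-weighted average of the possible point totals."""
    if abs(sum(p for _, p in outcomes) - 1) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(points * p for points, p in outcomes)

ev_a = expected_points(player_high_rank)     # 1.2 + 6.0 + 4.4 = 11.6
ev_b = expected_points(player_good_matchup)  # 0 + 7.8 + 6.0 = 13.8
start = "good-matchup player" if ev_b > ev_a else "higher-ranked player"

print(f"expected points: {ev_a:.1f} vs {ev_b:.1f} -> start the {start}")
```

In practice, projected fantasy points play the role of `expected_points` here; the point is that the decision is judged by the expected value at decision time, not by the single outcome that occurred.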

14.4.7 Self-Serving Bias

Self-serving bias is the tendency to perceive oneself in an overly positive manner. Doing so may function to bolster people’s self-esteem. One way that self-serving bias commonly manifests is the tendency for people to attribute successes to internal factors (such as one’s character, ability, effort, or actions) but to attribute failures to external factors beyond one’s control (such as others, unfairness, or bad luck). That is, self-serving bias involves claiming more responsibility for one’s success than for one’s failure (R. M. Miller, 2013). For instance, a manager who wins the league may attribute their success to their fantasy football skill and smart decisions (rather than luck). However, if they get last place, they may attribute their failure not to themselves but instead to factors outside of their control, such as bad luck, player injuries, and poor performance by players due to circumstances out of their control (e.g., bad weather conditions, coaching decisions).

Although the self-serving bias may help us feel better about ourselves, it can lead us to overestimate our abilities when things go well and to fail to learn from mistakes when things go poorly. Remember that nearly half of the variability in fantasy football performance is estimated to be due to luck, and the other half likely due to skill (Getty et al., 2018). Thus, successes in fantasy football likely involve a good amount of luck; conversely, failures in fantasy football can also reflect mistakes and poor decision making that arise from lower skill.

14.4.8 Omission Bias

Omission bias is the tendency to prefer inaction over action, or to prefer the default option over changes from the default option (Kahneman, 2011). For instance, consider a manager who is on the fence about whether to drop an underperforming player and pick up someone else to start. If the manager does not drop the player and the player scores few points, the manager is likely to be less critical and regretful of their decision than if they had picked up another player who then scored the same (low) number of points. Thus, a person’s decisions may be influenced by the anticipation or fear of regret, leading them to keep the status quo, avoid losses, and minimize expected regret (such as choosing the same order each time at a restaurant to avoid regret; Kahneman, 2011). However, people may anticipate more regret than they actually experience, because people tend to underestimate the protective impact of the psychological defenses they will deploy against regret (Kahneman, 2011). In general, Kahneman (2011) recommends not putting too much weight on potential future regret when making decisions: “even if you have some [regret], it will hurt less than you now think.” (p. 352).

Consider another example adapted from Miller (2013): two players scored the same number of points in a given week. A Wide Receiver scored 1.6 points from 16 receiving yards on 1 target. A Running Back scored 1.6 points from 56 receiving yards and two lost fumbles. The manager may assign more blame to the player who performed a harmful action (losing two fumbles) than to the player who received only one target (harmful inaction).
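The arithmetic behind the two identical totals can be checked with a small scoring function. It assumes standard (non-PPR) scoring of 0.1 points per receiving yard and -2 points per fumble lost, which is consistent with the numbers in the example (1.6 points from 16 yards implies no points per reception); league settings vary, so the constants are assumptions to adjust.

```python
# Assumed standard (non-PPR) scoring; adjust to your league's settings
POINTS_PER_RECEIVING_YARD = 0.1
POINTS_PER_FUMBLE_LOST = -2

def fantasy_points(receiving_yards, fumbles_lost=0):
    """Fantasy points from receiving yards and fumbles lost only."""
    return (receiving_yards * POINTS_PER_RECEIVING_YARD
            + fumbles_lost * POINTS_PER_FUMBLE_LOST)

wide_receiver = fantasy_points(receiving_yards=16)                 # 1.6
running_back = fantasy_points(receiving_yards=56, fumbles_lost=2)  # 5.6 - 4 = 1.6

print(round(wide_receiver, 1), round(running_back, 1))
```

The Running Back's 56 yards reflect far more usage than the Wide Receiver's single target, which is why usage is often more informative about future scoring than the point total alone.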

Pay attention to players’ usage and opportunities, not just how many points a player scored and whether they had more fumbles or interceptions than other players. The more times a player touches the ball, the more points they are likely to score. And do not let the anticipation of regret prevent you from making important decisions.

14.4.9 Loss Aversion Bias

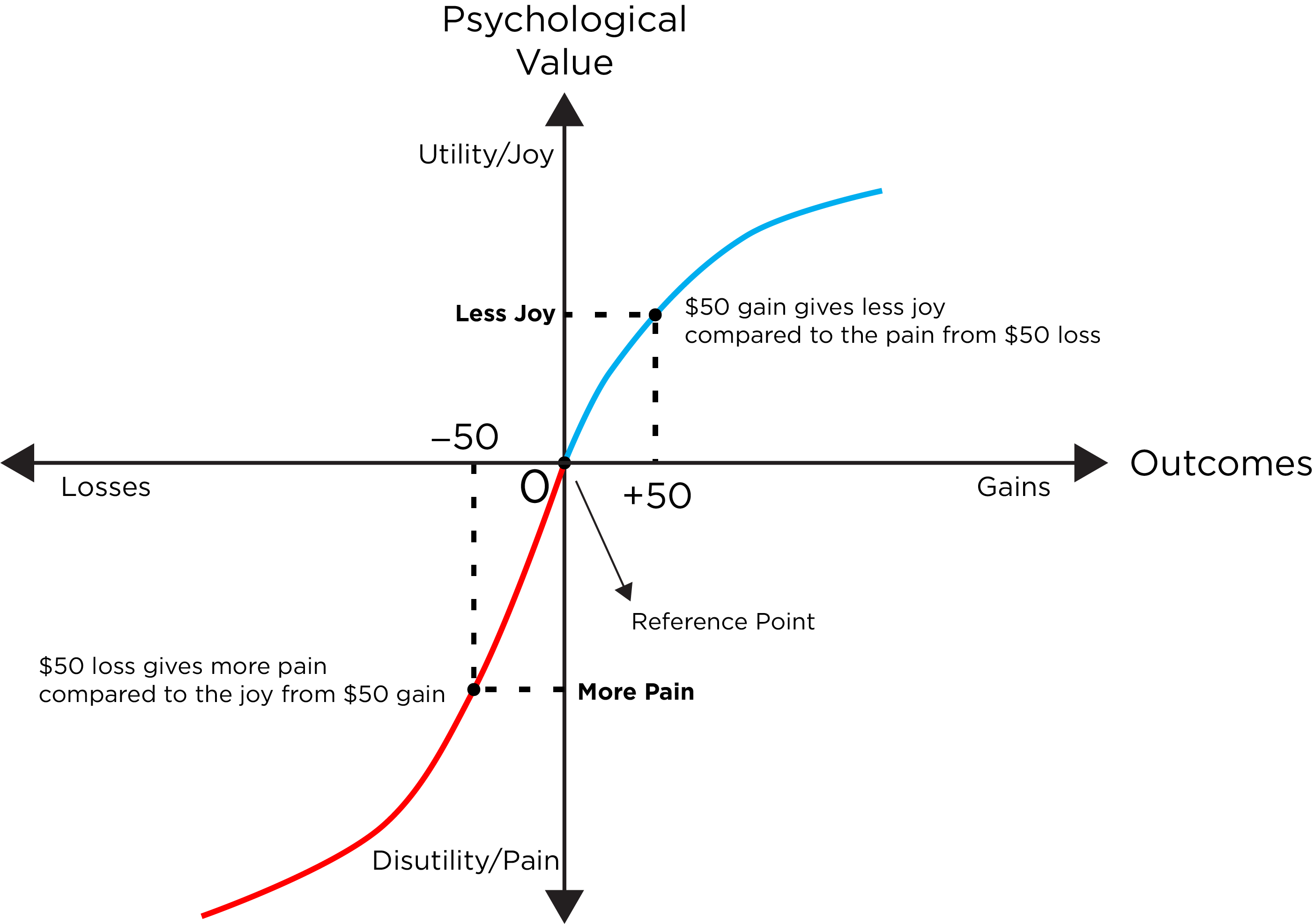

Loss aversion bias is the tendency to prefer avoiding losses over acquiring equivalent gains. That is, loss aversion (similar to punishment sensitivity, as opposed to reward sensitivity) is the tendency to weigh losses (or punishments) more heavily than gains (or rewards). Thus, the target state matters less than the change (i.e., gains and/or losses) from one's reference point [e.g., their current roster; Kahneman (2011)]. Loss aversion is a key aspect of prospect theory and suggests that individuals tend to be biased in favor of their reference situation and toward making smaller rather than larger changes (Kahneman, 2011). Loss aversion explains why people tend to favor the status quo: the negative consequences of a change often loom larger than its positive consequences (Kahneman, 2011). Aversion to losses and potential threats may be related to humans' survival instinct (Kahneman, 2011). With par as a reference point, loss aversion may explain why professional golfers tend to putt more accurately for par than for birdie, even for putts of equivalent distance (Kahneman, 2011).

Principles of prospect theory and loss aversion bias are depicted in Figure 14.2.

The loss aversion ratio indicates the extent to which a person weighs losses more heavily than gains; for many people it is around 2 (typically between 1.5 and 2.5; Kahneman, 2011). That is, people tend to give as much as twice the weight to losses as to gains. The late baseball manager Sparky Anderson once said, "Losing hurts twice as bad as winning feels good." Loss aversion is different from risk aversion. Loss aversion is exemplified when teams play conservatively so as "not to lose" instead of "to win." In fantasy football, loss aversion may lead managers to keep starting (or holding onto) highly drafted players who are underperforming for too long, rather than starting a more promising player, out of fear of losing the value of their initial investment. Loss aversion can also influence trade negotiations.
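To make the loss aversion ratio concrete, the sketch below uses a simplified, linear value function in which losses are multiplied by a loss aversion ratio of 2, the typical value noted above. Prospect theory's value function is also curved, so this linear version is a deliberate simplification, and the function name `subjectiveValue()` is hypothetical.

```r
# Simplified (linear) value function: gains count at face value,
# losses are multiplied by the loss aversion ratio
subjectiveValue <- function(change, lossAversionRatio = 2) {
  ifelse(change >= 0, change, lossAversionRatio * change)
}

# A mixed gamble (e.g., a trade): 50% chance to gain 10 points of value, 50% chance to lose 10
outcomes <- c(10, -10)
probabilities <- c(0.5, 0.5)

expectedValue <- sum(probabilities * outcomes)                            # objective value
expectedSubjectiveValue <- sum(probabilities * subjectiveValue(outcomes)) # felt value

# Gain needed to offset a possible loss of 10 in a 50/50 gamble
breakEvenGain <- -subjectiveValue(-10)

c(expectedValue = expectedValue,
  expectedSubjectiveValue = expectedSubjectiveValue,
  breakEvenGain = breakEvenGain)
```

Even though the gamble is objectively fair (expected value of 0), its felt value is negative, so under these assumptions a manager would decline it unless the potential gain were about twice the potential loss.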

An example of loss aversion bias in basketball coaching is removing a star player from a game so that the player remains available for the end of the game (Moskowitz & Wertheim, 2011). As described in Section 27.3.1, an example of loss aversion bias in football is punting on fourth down when, in many cases, it would be advantageous to go for it (Moskowitz & Wertheim, 2011).

14.4.10 Risk Aversion Bias

Risk aversion bias is the tendency to prefer outcomes with low uncertainty (i.e., risk), even if they offer lower potential rewards compared to outcomes with greater uncertainty but potentially greater rewards. Risk aversion leads people to select safer options but may lead them to miss out on higher-gain opportunities. For instance, risk aversion may lead a fantasy manager to start players who are more steady (i.e., show greater game-to-game consistency) over players who are more volatile (i.e., show greater game-to-game variability) but have higher upside potential.
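A rough way to see this trade-off is to compare two hypothetical players with the same average weekly score but different game-to-game variability. The sketch below assumes, purely for illustration, that weekly fantasy points are normally distributed; real weekly scores are skewed and bounded, so treat the resulting probabilities as approximate.

```r
# Hypothetical players with the same mean but different variability
players <- data.frame(
  player = c("Steady", "Volatile"),
  meanPoints = c(12, 12),
  sdPoints = c(3, 8)
)

# Probability of a big week (20+ points) and of a bust week (fewer than 5 points),
# assuming normally distributed weekly scores
players$probBoom <- pnorm(20, mean = players$meanPoints, sd = players$sdPoints, lower.tail = FALSE)
players$probBust <- pnorm(5, mean = players$meanPoints, sd = players$sdPoints)

players
```

The volatile player has both more upside and more downside. A risk-averse manager may prefer the steady player to avoid bust weeks, whereas a manager who needs a big week may reasonably prefer the volatile player's upside.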

In mixed gambles, in which it is possible for a person to experience either a gain or a loss, loss aversion tends to lead to risk-averse choices (Kahneman, 2011). By contrast, when all of a person’s options are poor, people tend to engage in risk seeking, as has been observed in entrepreneurs and in generals (Kahneman, 2011). In fantasy football, risk seeking may be more likely when a manager has a team full of underperforming players and a weak record.

14.4.11 Primacy Effect Bias

Primacy effect bias is the tendency to recall the first information that one encounters better than information presented later on. For instance, primacy effect bias might lead a manager to draft a player based on the first information they encountered about the player.

14.4.12 Recency Effect Bias

Recency effect bias is the tendency to weigh recent events more heavily than earlier ones. For instance, a manager might observe that a Running Back on the waiver wire performed well in the last two games. Recency effect bias may lead the manager to pick up the player, overvaluing their recent performance. In doing so, the manager may not have adequately weighed the player's performance over the full season, or the fact that the player received more carries in the past two games only because the starting Running Back was injured, and the starter is now returning to the lineup.

Recency effect bias may seem at odds with primacy effect bias; however, the two can coexist. People tend to remember the earliest information they encounter in addition to the most recent information, and they tend to forget the information encountered in between.

14.4.13 Framing Effect Bias

Framing effect bias is the tendency to make decisions based on how information is framed (e.g., with positive or negative connotations), rather than on the information itself. For instance, consider weekly columns written by two fantasy football expert commentators. Expert A may write that a Running Back has scored at least 15 fantasy points in 70% of games this season (i.e., positive framing). Expert B may write that the same Running Back has scored fewer than 15 fantasy points in 30% of games this season (i.e., negative framing). Both statements convey the same statistical information; however, a manager might be more inclined to start the player after reading Expert A's statement than after reading Expert B's because of how the information was framed (i.e., presented). Because people tend to make decisions that avoid losses, consistent with loss aversion bias, the negative framing might make a manager less likely to start the player.

Pay attention to how information is framed, knowing that the framing itself could lead you astray. Thus, try to focus on the information itself rather than the framing when making decisions.

14.4.14 Endowment Effect Bias

Endowment effect bias is the tendency to overvalue something merely because one owns it; owning it increases its value to the owner [especially for goods that are not regularly traded; Kahneman (2011)]. That is, a manager tends to value the same player more when the player is on their own team than when the player is on an opponent's team. The endowment effect is related to loss aversion bias and prospect theory: when a person owns a good (i.e., their reference point), they focus on the loss of the good if they were to sell or trade it away rather than on the gains from the sale or trade. When trading, the endowment effect bias might lead a manager to demand more to trade away a player than they would pay to acquire the same player (R. M. Miller, 2013). For instance, a manager might overvalue a player they drafted in the first round, refusing to trade him even if they could get a better-performing player in return.

A player's trade value is not fixed. It makes sense to adjust a player's trade value based on many factors, such as your team's needs, the needs of your trade partner's team, and the player's remaining strength of schedule. However, you want to avoid adjusting a player's value merely because he is on your team. Consult a variety of trade analyzers to evaluate players' worth more objectively.

14.4.15 Bandwagon Effect Bias

The bandwagon effect bias is the tendency to do or believe things because other people do. It involves social conformity. For instance, suppose a rookie Wide Receiver has a breakout game and is picked up in many fantasy leagues. A given manager might pick up the player simply because many other managers are doing so, without evaluating whether the player's success is sustainable.

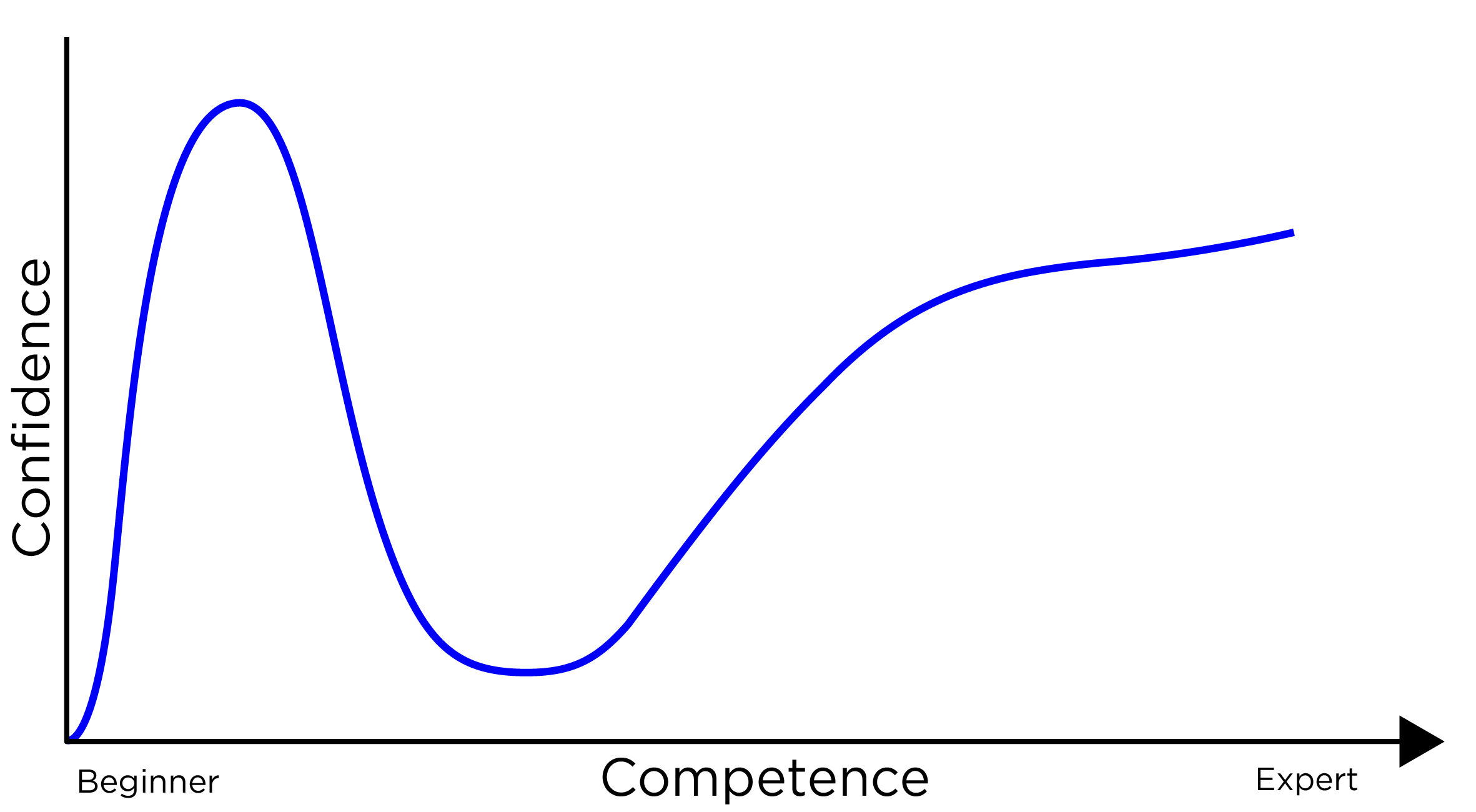

14.4.16 Dunning–Kruger Effect Bias

“The more you know, the more you know you don’t know.” – Anonymous

The Dunning–Kruger effect bias is the tendency for people with low ability/competency in a task to overestimate their ability. The Dunning–Kruger effect is depicted in Figures 14.3 and 14.4. For instance, consider a new fantasy manager who experiences some initial wins (often called “beginner’s luck”). They may attribute their successes to their skill rather than to luck. Their overconfidence may lead them to believe they can win the league without much preparation.

14.5 Examples of Fallacies

As described above, fallacies are mistaken beliefs and flawed reasoning. Fallacies are often due to the use of heuristics and to cognitive biases. Examples of fallacies include:

- base rate fallacy (aka base rate neglect)

- regression fallacy

- hot hand fallacy

- sunk cost fallacy

- gambler’s fallacy

- anecdotal fallacy

- narrative fallacy

- conditional probability fallacy

- ecological fallacy

14.5.1 Base Rate Fallacy

The base rate fallacy (aka base rate neglect) is the tendency to ignore information about the general probability of an event in favor of specific information about the event. The base rate is a marginal probability, which is the general probability of an event irrespective of other things. Thus, a "low base-rate" event is an event with a low probability of occurring (i.e., an unlikely event). Focusing on the specific information about the event (and thus underweighting the base rate) involves the representativeness heuristic (Kahneman, 2011): making judgments based on how similar the event is to a mental prototype, regardless of how frequent the event actually is. As noted by Kahneman (2011), "people who have information about an individual case rarely feel the need to know the statistics of the class to which the case belongs" (p. 249). In general, people tend to a) overestimate the likelihood of low base-rate events and b) overweight low base-rate events in their decisions (Kahneman, 2011). This is especially true if the unlikely event attracts attention, because of the confirmatory bias of memory; that is, when thinking about the event, you try to make it true in your mind (Kahneman, 2011).

For example, the base rate of work-related injury is the general probability of experiencing a work-related injury, irrespective of other factors (e.g., the type of job, the person's age, the person's sex). Among the working population in the U.S., the lifetime prevalence of work-related injuries (i.e., the percent of people who will experience a work-related injury at some point in their lives) is ~35% [Free et al. (2020); archived at https://perma.cc/A2L6-WPEH]. Thus, the base rate of work-related injuries in the U.S. is ~35%. The probability of work-related injuries is higher for some occupations (e.g., construction) and for some groups than for others (e.g., men; 55–64-year-olds; people who are Black or Multiracial; people who are self-employed; and people with less than a high school education). Nevertheless, if we ignore all of these interacting factors, the general probability of work-related injuries is ~35%. If we predicted that someone would be highly likely (> 90%) to experience a work-related injury because they are male and self-employed, we would be ignoring the relatively low base rate of work-related injury. Indeed, even men (36.7%) and self-employed individuals (41.2%) have less than a 50% chance of experiencing a work-related injury.

The framing of an event can also alter its availability in mind, judgments of its probability, and its weighting in decisions (Kahneman, 2011). A more vivid description of an outcome can lead people to be more influenced by its ease of recall (i.e., availability) than by its objective probability (Kahneman, 2011). In addition, concrete representations tend to be weighted more heavily than abstract representations (Kahneman, 2011). Abstract representations are framed in terms of "how likely" the event is to occur, in terms of chance, risk, probability, or likelihood. Concrete representations are framed in terms of "how many" people will be affected by the event. An example of an abstract representation is that there is a 0.001% chance of dying from a particular disease. When framed in this way, the risk appears small. Now, consider the same information framed as a concrete representation: 1 in 100,000 people will die from this disease. Due to a phenomenon called denominator neglect, low base-rate events are more heavily weighted when they are framed using a concrete representation than when they are framed using an abstract representation (Kahneman, 2011).
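One way to guard against denominator neglect is to translate risks into the same representation before comparing them. The hypothetical helper `probabilityToOneInN()` below converts a probability into a "1 in N" frequency, confirming that a 0.001% chance is the same as 1 in 100,000.

```r
# Hypothetical helper: express a probability as "1 in N"
probabilityToOneInN <- function(probability) {
  paste("1 in", format(round(1 / probability), big.mark = ",", scientific = FALSE))
}

percentChance <- 0.001             # 0.001% chance (abstract representation)
probability <- percentChance / 100 # convert the percentage to a proportion

probabilityToOneInN(probability)
```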

Some low base-rate events tend to be underweighted when making decisions, such as whether to make anticipatory preparations for a future earthquake, housing bubble, or financial crisis (Kahneman, 2011). One major cause of underweighting is that a person has never experienced the rare event (i.e., the earthquake or financial crisis) (Kahneman, 2011). However, for low base-rate events that people have experienced, the availability and representativeness heuristics tend to lead people to overestimate the likelihood that the unlikely event occurs. In general, many factors contribute to the overweighting of unlikely events, including obsessive concerns about the event, vivid images of the event, concrete representations of the consequences of the event (e.g., "a disease kills 1 person out of every 1,000"), and explicit reminders of the event (Kahneman, 2011).

As applied to fantasy football, suppose you read about a potential sleeper Wide Receiver who had a stellar performance in a preseason game. If you select this player early in the draft based on this information, you would be ignoring the base rate: most players who have a strong preseason performance do not perform as well in the regular season (i.e., base rate neglect). Performance in the preseason is not strongly predictive of performance in the regular season [Schalter (2022); archived at https://perma.cc/FSG2-6AXE].
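Bayes' theorem shows why the base rate matters even when the specific information is informative. The numbers below are purely hypothetical assumptions, not estimates from Schalter (2022) or any other source: suppose 10% of sleeper Wide Receivers break out, 60% of eventual breakout players have a standout preseason game, and 30% of non-breakout players also have one.

```r
# Hypothetical inputs (assumptions for illustration only)
baseRateBreakout <- 0.10         # P(breakout): the base rate
pStandoutGivenBreakout <- 0.60   # P(standout preseason | breakout)
pStandoutGivenNoBreakout <- 0.30 # P(standout preseason | no breakout)

# Overall probability of a standout preseason game
pStandout <- pStandoutGivenBreakout * baseRateBreakout +
  pStandoutGivenNoBreakout * (1 - baseRateBreakout)

# Bayes' theorem: P(breakout | standout preseason)
pBreakoutGivenStandout <- pStandoutGivenBreakout * baseRateBreakout / pStandout

round(pBreakoutGivenStandout, 3)
```

Under these assumptions, a standout preseason game raises the probability of a breakout from 10% to only about 18%, so most such players would still not break out.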

More information on base rates and how to counteract the base rate fallacy is provided in Chapter 16.

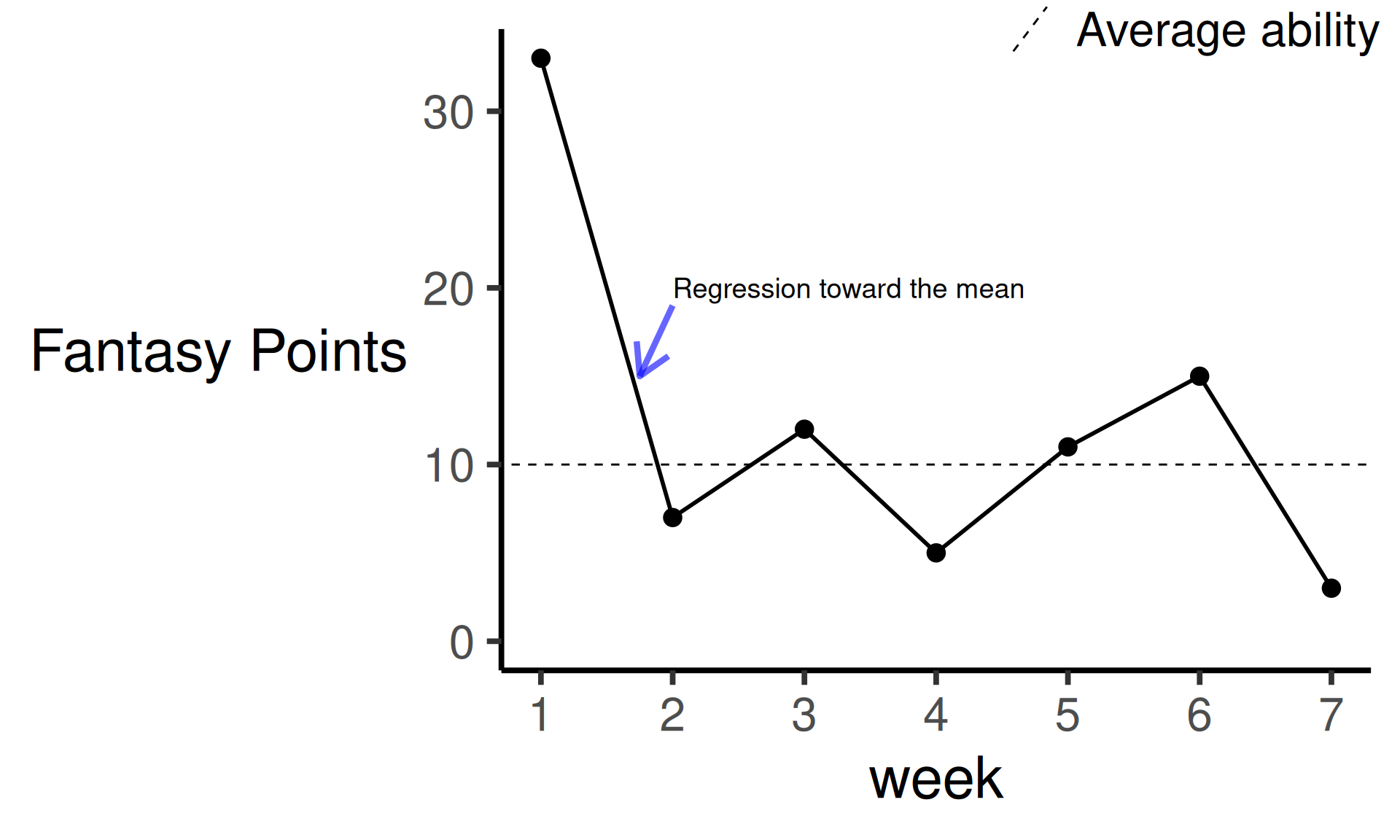

14.5.2 Regression Fallacy

The regression fallacy is the failure to account for the fact that things tend to naturally fluctuate around their mean and that, after an extreme fluctuation, subsequent scores tend to regress (or revert) toward the mean. Regression to the mean is depicted in Figure 14.5. Regression to the mean occurs because performance, both success and failure, is influenced by talent and by luck (Kahneman, 2011). And, unlike talent (or one's level on the construct of interest), luck is fickle. Regression to the mean can explain why people with depression tend to improve over time without doing anything, and why highly intelligent women tend to marry men who are less intelligent than they are (Kahneman, 2011). Despite people's tendency to ascribe such changes to causal phenomena, regression-related changes do not need a causal explanation; they are a mathematically inevitable consequence of the fact that an outcome is influenced, to some degree, both by one's level on the construct and by error (Kahneman, 2011).


```r
library(ggplot2)

# Illustrative weekly fantasy points; the dashed line marks an assumed average ability of 10 points
mydata <- data.frame(
  week = 1:7,
  fantasyPoints = c(33, 7, 12, 5, 11, 15, 3)
)

ggplot(
  data = mydata,
  mapping = aes(
    x = week,
    y = fantasyPoints
  )
) +
  geom_point(
    size = 4
  ) +
  geom_line(
    linewidth = 1
  ) +
  # Horizontal reference line; mapping linetype inside aes() creates a legend entry
  geom_abline(
    aes(
      intercept = 10,
      slope = 0,
      linetype = "Average ability"
    )
  ) +
  scale_linetype_manual(values = "dashed") +
  coord_cartesian(
    ylim = c(0, NA)) +
  scale_x_continuous(
    breaks = 1:7
  ) +
  labs(
    y = "Fantasy Points",
    #title = "Regression to the Mean",
    linetype = NULL
  ) +
  # Arrow and label highlighting the drop after the extreme Week 1 score
  annotate(
    "segment",
    x = 2,
    xend = 1.75,
    y = 19,
    yend = 15,
    color = "blue",
    linewidth = 1.5,
    alpha = 0.6,
    arrow = arrow()) +
  annotate(
    "text",
    x = 2,
    y = 20,
    label = "Regression toward the mean",
    hjust = 0, # left-justify
    size = 5) +
  theme_classic(base_size = 30) +
  theme(
    axis.title.y = element_text(angle = 0, vjust = 0.5),
    legend.position = "inside",
    legend.position.inside = c(0.8, 1))
```

An example of the regression fallacy is the so-called Sports Illustrated (or Madden) cover jinx. The cover jinx is the urban legend that players who appear on the cover of Sports Illustrated (the magazine) or Madden (the video game) will subsequently perform poorly. However, this phenomenon can be explained more simply by regression to the mean [Kahneman (2011); G. Smith (2016); archived at https://perma.cc/CZM9-TVFN]. When a player has a superb season, they likely benefited to some degree from good luck, and it is unlikely that they will repeat such a stellar season the following year. Instead, based on random fluctuation alone, they are likely to regress toward their long-term mean.
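The logic behind the cover jinx can be sketched with a small simulation, assuming (for illustration only) that each player's seasonal score equals a stable talent level plus independent luck. Selecting the top 10% of players in Season 1, as a magazine cover might, and then looking at their Season 2 scores shows the group falling back toward the overall average without any jinx.

```r
set.seed(52242)

numPlayers <- 10000

# Assumed model: observed score = stable talent + independent luck
talent <- rnorm(numPlayers, mean = 10, sd = 3)
season1 <- talent + rnorm(numPlayers, mean = 0, sd = 5)
season2 <- talent + rnorm(numPlayers, mean = 0, sd = 5)

# "Cover athletes": the top 10% of players in Season 1
coverAthletes <- season1 >= quantile(season1, probs = 0.90)

c(
  overallMean = mean(season1),
  coverSeason1 = mean(season1[coverAthletes]),
  coverSeason2 = mean(season2[coverAthletes])
)
```

The cover athletes remain above average in Season 2 because they do tend to have more talent, but much of their Season 1 advantage reflected luck, which does not carry over.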

Applied to fantasy football, suppose a Quarterback had a 5-touchdown game in Week 1. You need a strong Quarterback, so you drop a solid player to pick him up. However, the Quarterback may have benefited from playing against a weak defense in the first game of the season. Future matchups may prove more difficult, and the player is unlikely to sustain such a strong performance throughout the season (i.e., he is likely to regress toward his mean).

14.5.3 Hot Hand Fallacy

The "hot hand" is the idea that a player who experiences a successful outcome will have a greater chance of success in subsequent attempts. For instance, in basketball, coaches, players, and commentators widely claim that players who have the hot hand (i.e., who are "on fire") are more likely to make shots because they made previous shots and are gaining confidence. The hot hand is a supposed example of momentum. However, there is not strong evidence of momentum in sports at either the player or the team level; rather, there may be evidence of reversals, the opposite of momentum (Moskowitz & Wertheim, 2011). Streaks certainly exist, but momentum implies that a person's performance (good or poor) in a given instance will influence, and thus predict, their future performance. In general, streaks appear to be largely random variation (i.e., luck/chance) around a player's mean performance level.
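Streaks arise readily even when every shot is independent. The sketch below simulates a hypothetical 50% shooter taking 20 shots in each of 10,000 games (all three numbers are arbitrary assumptions). Under pure chance, the shooter makes at least 4 shots in a row in roughly half of the games, yet the pooled make rate on shots that follow a make stays near 50%, which is what "no momentum" looks like.

```r
set.seed(52242)

numGames <- 10000      # hypothetical number of simulated games
numShots <- 20         # hypothetical shots per game
makeProbability <- 0.5 # every shot is independent, with the same 50% chance

longestStreak <- numeric(numGames)
makesAfterMake <- 0
attemptsAfterMake <- 0

for (game in seq_len(numGames)) {
  shots <- rbinom(numShots, size = 1, prob = makeProbability) # 1 = make, 0 = miss

  # Longest run of consecutive makes in this game (0 if there are no makes)
  runs <- rle(shots)
  longestStreak[game] <- max(c(0, runs$lengths[runs$values == 1]))

  # Tally shots that immediately follow a make (pooled across all games)
  previousMade <- shots[-numShots] == 1
  makesAfterMake <- makesAfterMake + sum(shots[-1][previousMade])
  attemptsAfterMake <- attemptsAfterMake + sum(previousMade)
}

# Share of games with a streak of at least 4 straight makes
mean(longestStreak >= 4)

# Pooled make rate on shots that follow a make
makesAfterMake / attemptsAfterMake
```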

Evidence on the hot hand is mixed. Considerable evidence has historically suggested that there is no such thing as a "hot hand," even though many players and fans believe in it (Avugos et al., 2013; Bar-Eli et al., 2006; Gilovich et al., 1985; Moskowitz & Wertheim, 2011; Wetzels et al., 2016). Even though streaks occur, making a shot may not increase the likelihood of making the next shot; that is, the likelihood of making a field goal seems to be independent of past success (or failure) on recent attempts. Defenses may adjust to guard a "hot" player more closely; however, the findings suggesting no hot hand tend to hold even when there is no defense (Gilovich et al., 1985). Some recent research has suggested that there may be a small hot hand effect in some contexts (Bocskocsky et al., 2014; Miller & Sanjurjo, 2014; Miller & Sanjurjo, 2024). Even so, if any such effect exists, it may be limited to a small subset of players, and its effect size appears to be small (Pelechrinis & Winston, 2022). Indeed, the slight potential increase in shooting ability tends to be offset by "hot" players' tendency to take more difficult shots after making a previous shot, so the net hot hand effect appears to be "vanishingly small" (Partnow, 2021).

In football, when coaches decide how to distribute the ball among multiple Running Backs, it is not uncommon to hear that a coach wants to give the ball to the Running Back with the "hot hand." In fantasy football, suppose a player just had a multiple-touchdown game. Due to the hot hand fallacy, a manager might continue to start the player because they believe the player is "on fire" and will keep scoring at what is likely an unsustainable rate.

It is important to consider whether such a string of strong performances consists of outliers and whether the player may, in future games, regress to the mean. When doing so, it is valuable to consider whether the player's health, skill, or situation has appreciably changed (compared to the player's earlier, weaker performances). Is the player finally fully healthy? Has the player appreciably improved in some skill that will benefit them in future games? Has the player's long-term situation improved, such as moving up the depth chart or receiving more carries/targets in ways that are not tied to a specific opponent or game script? Or, alternatively, do the improvements appear to be driven by transient, game-specific factors, such as the health of a teammate, the opponents they played, or the game script that ensued? If the player's long-term outlook has appreciably changed due to changes in the fundamentals of their value, such as their health, skill, or situation, it is less likely that such performance improvements will regress over the long run.

14.5.4 Sunk Cost Fallacy

A sunk cost is a cost (e.g., in money, time, or effort) that has already been incurred and cannot be recovered. For instance, if a person orders an expensive meal at a restaurant, the cost of the meal is a sunk cost. The sunk cost fallacy is the tendency to continue an endeavor because of a sunk cost. For instance, after ordering the expensive meal, a person may overeat so that they feel they got their money's worth. The sunk cost fallacy is an example of commitment bias, an escalation of commitment in which a person continues to invest additional time, effort, or resources in a failing project. People tend to refuse to cut their losses when doing so would mean admitting failure (Kahneman, 2011). It is important to be willing to identify and terminate failing projects to cut one's losses.

Applied to fantasy football, suppose you invest a large share of your salary cap or a high draft pick in a promising player, but he repeatedly underperforms. If you continue to start the player to justify your large investment, instead of benching him in favor of a higher-performing player, you are committing the sunk cost fallacy. It is important to own up to our mistakes: the sooner you recognize a mistake, own it (for instance, by benching or dropping the underperforming player), and move on, the sooner you will be able to replace him with a better-performing player. At the same time, you probably do not want to give too much weight to a player's performance in any single week, because random chance may lead a player to greatly under- or overperform in a given week, and their future performance is likely to regress (upward or downward) toward their mean.

14.5.5 Gambler’s Fallacy

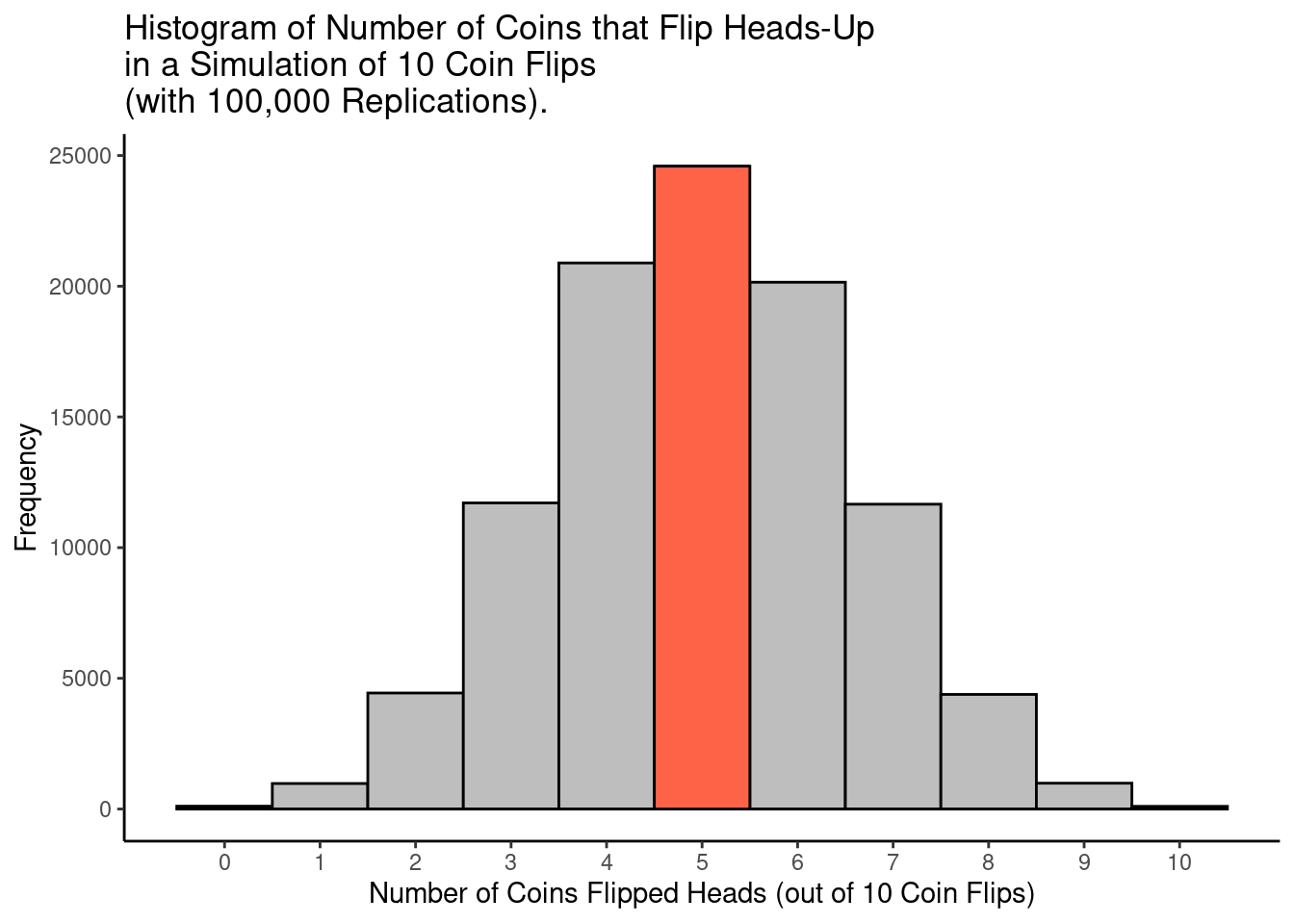

The gambler's fallacy arises from an erroneous belief in the law of small numbers. The law of large numbers is a mathematical theorem stating that the average of a sufficiently large number of independent observations converges to the true (expected) value. For instance, if you flip a fair coin 1 million times, it is likely to land heads-up ~50% of the time. The law of small numbers (aka hasty generalization), by contrast, is the erroneous belief that small samples are representative of the populations from which they were drawn. In general, large samples yield more precise results than small samples, whereas extreme results (in either direction, e.g., many more or many fewer than 50% of coins landing heads-up) are more likely to be obtained from small samples (Kahneman, 2011). For instance, if you flip a coin 10 times, belief in the law of small numbers would lead one to expect the coin to land heads-up exactly 5 times out of 10. In reality, however, the chance that exactly 5 of 10 coin flips land heads-up is less than 1 in 4 (24.6%), as calculated below and as depicted in Figure 14.6:


[1] 0.2460938

The stats::dbinom() function in R provides the density of a binomial distribution. A binomial distribution describes the probability of a particular number of successes (e.g., coins landing heads-up) given a certain number of independent trials.
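The value above can be reproduced with stats::dbinom() and checked against the binomial formula, choose(n, k) * p^k * (1 - p)^(n - k), where choose(10, 5) = 252 is the number of ways to get 5 heads in 10 flips. This is a minimal sketch of that check.

```r
# Probability of exactly 5 heads in 10 flips of a fair coin
probExactlyFive <- stats::dbinom(x = 5, size = 10, prob = 0.5)

# The same value from the binomial formula: choose(n, k) * p^k * (1 - p)^(n - k)
probFromFormula <- choose(10, 5) * 0.5^5 * (1 - 0.5)^(10 - 5)

probExactlyFive # [1] 0.2460938
probFromFormula # [1] 0.2460938
```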


```r
library(dplyr)
library(ggplot2)

set.seed(52242)

smallNumFlips <- 10
largeNumFlips <- 1000000
numSimulations <- 100000

# Number of heads in each replication of 10 flips and of 1 million flips
numHeadsSmallNumFlips <- rbinom(
  n = numSimulations,
  size = smallNumFlips,
  prob = .5
)

numHeadsLargeNumFlips <- rbinom(
  n = numSimulations,
  size = largeNumFlips,
  prob = .5
)

simulationOfFlippingCoins <- data.frame(
  numHeadsSmallNumFlips = numHeadsSmallNumFlips,
  numHeadsLargeNumFlips = numHeadsLargeNumFlips
)

# Proportion of heads, a flag for exactly 5 heads, and flags for
# extreme results (<= 20% or >= 80% heads)
simulationOfFlippingCoins <- simulationOfFlippingCoins %>%
  mutate(
    proportionHeadsSmallNumFlips = numHeadsSmallNumFlips / smallNumFlips,
    proportionHeadsLargeNumFlips = numHeadsLargeNumFlips / largeNumFlips,
    highlight = ifelse(numHeadsSmallNumFlips == 5, "yes", "no"),
    extremeSmallNumFlips = proportionHeadsSmallNumFlips <= 0.2 | proportionHeadsSmallNumFlips >= 0.8,
    extremeLargeNumFlips = proportionHeadsLargeNumFlips <= 0.2 | proportionHeadsLargeNumFlips >= 0.8
  )

ggplot2::ggplot(
  data = simulationOfFlippingCoins,
  mapping = aes(
    x = numHeadsSmallNumFlips,
    fill = highlight)
) +
  geom_histogram(
    color = "#000000",
    bins = 11
  ) +
  scale_x_continuous(
    breaks = 0:10
  ) +
  scale_fill_manual(
    values = c(
      "yes" = "tomato",
      "no" = "gray")
  ) +
  labs(
    x = "Number of Coins Flipped Heads (out of 10 Coin Flips)",
    y = "Frequency",
    title = "Histogram of Number of Coins that Flip Heads-Up\nin a Simulation of 10 Coin Flips\n(with 100,000 Replications)."
  ) +
  theme_classic() +
  theme(legend.position = "none")
```

Although 5 is the modal number of heads out of 10 flips (i.e., it occurs more often than any other single count), it occurs less often than all other counts combined. The probability of getting any number of heads other than 5 is 1 − 0.246 ≈ 0.754 (about 75%).

Moreover, among the simulations with only 10 coin flips, nearly 11% of sets had extreme results (≤ 20% heads or ≥ 80% heads).

By contrast, among the simulations with 1 million coin flips, none of the sets had extreme results.
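The simulated results can be compared against exact binomial probabilities computed with stats::dbinom() and stats::pbinom(). Because a fair coin's binomial distribution is symmetric, the probability of 800,000 or more heads in 1 million flips equals the probability of 200,000 or fewer, so the sketch doubles the lower tail.

```r
# Probability of any number of heads other than 5 in 10 flips
probNotFive <- 1 - stats::dbinom(x = 5, size = 10, prob = 0.5)

# Probability of an extreme result (<= 20% or >= 80% heads) in 10 flips
probExtremeSmall <- sum(stats::dbinom(x = c(0:2, 8:10), size = 10, prob = 0.5))

# Probability of an extreme result in 1 million flips (<= 200,000 or >= 800,000 heads)
probExtremeLarge <- 2 * stats::pbinom(q = 200000, size = 1000000, prob = 0.5)

c(probNotFive = probNotFive,
  probExtremeSmall = probExtremeSmall,
  probExtremeLarge = probExtremeLarge)
```

The exact probability of an extreme result with 10 flips is 112/1024 (about 10.9%), consistent with the simulation, whereas with 1 million flips it is so small that it is effectively zero.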

The gambler's fallacy is the erroneous belief that future probabilities are influenced by past events, even when the events are independent. For example, a gambler may pay close attention to a particular slot machine. If the slot machine has not paid out in a while, the gambler may believe that it is about to pay out soon and may start putting coins into it. Or, a gambler on a losing streak may (wrongly) believe that they "are due" and keep gambling as a result.
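Independence means that a streak carries no information about the next outcome. As a quick check, the sketch below simulates 1 million fair coin flips and looks only at the flips that immediately follow three tails in a row; the proportion of heads on those flips stays near 50%, so the coin is never "due."

```r
set.seed(52242)

# 1 million independent fair coin flips (1 = heads, 0 = tails)
flips <- rbinom(1000000, size = 1, prob = 0.5)
n <- length(flips)

# Positions of flips that immediately follow three tails in a row
afterThreeTails <- which(
  flips[1:(n - 3)] == 0 &
    flips[2:(n - 2)] == 0 &
    flips[3:(n - 1)] == 0
) + 3

# Proportion of heads on those flips
mean(flips[afterThreeTails])
```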

Applied to fantasy football, consider that a Quarterback has had several lousy games in a row. The gambler’s fallacy might lead a manager to start the player under the belief that the player “is due” for a big game, expecting a strong performance from the player merely because the player has not had a good game in a while.

14.5.6 Anecdotal Fallacy

The anecdotal fallacy is the error of drawing inferences based on personal experience or singular examples rather than on stronger forms of evidence. Although people tend to find stories and anecdotes compelling, they are among the weakest forms of evidence, as described in Section 8.6.3. Suppose that a fantasy football commentator writes an article about how they won their league by drafting a Quarterback and a Tight End with their first two picks, respectively. The anecdotal fallacy might lead a manager who reads the article to believe that they are more likely to win their league if they follow a similar strategy, even though stronger evidence suggests otherwise. It is important to be skeptical of anecdotes and personal experience.

14.5.7 Narrative Fallacy

The narrative fallacy is the error of creating a story with cause-and-effect explanations from random events. According to Kahneman (2011), people find stories more compelling if they a) are simple (as opposed to complex), b) are concrete (as opposed to abstract), c) attribute more to talent, stupidity, and intentions (compared to luck), and d) focus on a few salient events that occurred (rather than the many events that failed to occur). For instance, suppose a player has a challenging start to the season but then has higher-performing games in the middle of the season. A fantasy football manager may construct a "causal narrative" that the player overcame obstacles and is now trending toward strong performance for the rest of the season, even though the stronger performance could simply reflect natural, random fluctuation.

14.5.8 Conditional Probability Fallacy

We describe the conditional probability fallacy in Section 16.3.2 after introducing conditional probability in Section 16.3.1.3.

14.5.9 Ecological Fallacy

The ecological fallacy is the error of drawing inferences about an individual from group-level data. For instance, suppose a manager is interested in how age is associated with fantasy performance. They examine the correlation between age and fantasy points and find that age is positively correlated with fantasy points among Quarterbacks. Committing the ecological fallacy, the manager would then infer from this finding that an individual Quarterback's performance is likely to increase as he ages. We describe the ecological fallacy more in Section 12.2.1.
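A hypothetical simulation shows how a positive group-level correlation can coexist with the opposite pattern within each individual. It assumes, purely for illustration, that more skilled Quarterbacks are observed at older ages (e.g., because they keep their jobs longer), while every Quarterback's own scoring declines slightly with age. All parameter values are made up.

```r
set.seed(52242)

numQBs <- 50
ageOffsets <- -2:2 # each QB is observed for 5 seasons around his own typical age

# Hypothetical latent skill for each Quarterback
qbSkill <- rnorm(numQBs)

qbData <- data.frame(
  qb = rep(seq_len(numQBs), each = length(ageOffsets)),
  ageOffset = rep(ageOffsets, times = numQBs)
)
qbData$skill <- qbSkill[qbData$qb]

# Assumption: more skilled QBs are observed at older ages
qbData$age <- 28 + 3 * qbData$skill + qbData$ageOffset

# Assumption: each QB's own points decline by 0.5 per year of age
qbData$fantasyPoints <- 15 + 5 * qbData$skill - 0.5 * qbData$ageOffset +
  rnorm(nrow(qbData), mean = 0, sd = 1)

# Group-level (pooled) association: expected to be positive under these assumptions
pooledCorrelation <- cor(qbData$age, qbData$fantasyPoints)

# Within-QB association (one intercept per QB): expected to be negative
withinModel <- lm(fantasyPoints ~ age + factor(qb), data = qbData)
withinSlope <- coef(withinModel)[["age"]]

c(pooledCorrelation = pooledCorrelation, withinSlope = withinSlope)
```

Under these assumptions, older Quarterbacks score more points on average, yet each individual Quarterback scores fewer points as he ages, so the group-level correlation says little about any one player's trajectory.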

14.6 Conclusion

In conclusion, there are many heuristics, cognitive biases, and fallacies that people engage in when making judgments and predictions. It is prudent to be aware of these common biases and to work to counteract them. For instance, look for information that challenges or disconfirms your beliefs, and work to incorporate this information into your beliefs. Do your best to approach observation, data analysis, and data interpretation with an open mind; you never know what important information you might discover. When considering whether a player's performance may regress to the mean, pay attention to the fundamentals of the player's value, such as their health, skill, or situation. If strong performances appear to be driven by transient, game-specific factors, such as the health of a teammate, the opponents they played, or the game script that ensued, future performances may be more likely to regress to the mean. In general, people tend to be overconfident in their predictions, and there is considerable luck in fantasy football. Approach the task of prediction with humility; no one is consistently able to accurately predict how well players will perform.

14.7 Session Info

R version 4.5.2 (2025-10-31)

Platform: x86_64-pc-linux-gnu

Running under: Ubuntu 24.04.3 LTS

Matrix products: default

BLAS: /usr/lib/x86_64-linux-gnu/openblas-pthread/libblas.so.3

LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.26.so; LAPACK version 3.12.0

locale:

[1] LC_CTYPE=C.UTF-8 LC_NUMERIC=C LC_TIME=C.UTF-8

[4] LC_COLLATE=C.UTF-8 LC_MONETARY=C.UTF-8 LC_MESSAGES=C.UTF-8

[7] LC_PAPER=C.UTF-8 LC_NAME=C LC_ADDRESS=C

[10] LC_TELEPHONE=C LC_MEASUREMENT=C.UTF-8 LC_IDENTIFICATION=C

time zone: UTC

tzcode source: system (glibc)

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] lubridate_1.9.5 forcats_1.0.1 stringr_1.6.0 dplyr_1.2.0

[5] purrr_1.2.1 readr_2.1.6 tidyr_1.3.2 tibble_3.3.1

[9] ggplot2_4.0.2 tidyverse_2.0.0

loaded via a namespace (and not attached):

[1] gtable_0.3.6 jsonlite_2.0.0 compiler_4.5.2 tidyselect_1.2.1

[5] scales_1.4.0 yaml_2.3.12 fastmap_1.2.0 R6_2.6.1

[9] labeling_0.4.3 generics_0.1.4 knitr_1.51 htmlwidgets_1.6.4

[13] pillar_1.11.1 RColorBrewer_1.1-3 tzdb_0.5.0 rlang_1.1.7

[17] stringi_1.8.7 xfun_0.56 S7_0.2.1 otel_0.2.0

[21] timechange_0.4.0 cli_3.6.5 withr_3.0.2 magrittr_2.0.4

[25] digest_0.6.39 grid_4.5.2 hms_1.1.4 lifecycle_1.0.5

[29] vctrs_0.7.1 evaluate_1.0.5 glue_1.8.0 farver_2.1.2

[33] rmarkdown_2.30 tools_4.5.2 pkgconfig_2.0.3 htmltools_0.5.9