I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

You can leave a comment at the bottom of the page/chapter, or open an issue or submit a pull request on GitHub: https://github.com/isaactpetersen/Fantasy-Football-Analytics-Textbook


22 Factor Analysis

“All models are wrong, but some are useful.”

— George Box (1979, p. 202)

This chapter provides an overview of factor analysis, which encompasses a range of latent variable modeling approaches, including exploratory and confirmatory factor analysis.

22.1 Getting Started

22.1.1 Load Packages

22.1.2 Load Data

We created the player_stats_weekly.RData and player_stats_seasonal.RData objects in Section 4.4.3.

22.1.3 Prepare Data

22.1.3.1 Season-Averages


player_stats_seasonal_avgPerGame <- player_stats_seasonal %>%

mutate(

completionsPerGame = completions / games,

attemptsPerGame = attempts / games,

passing_yardsPerGame = passing_yards / games,

passing_tdsPerGame = passing_tds / games,

passing_interceptionsPerGame = passing_interceptions / games,

sacks_sufferedPerGame = sacks_suffered / games,

sack_yards_lostPerGame = sack_yards_lost / games,

sack_fumblesPerGame = sack_fumbles / games,

sack_fumbles_lostPerGame = sack_fumbles_lost / games,

passing_air_yardsPerGame = passing_air_yards / games,

passing_yards_after_catchPerGame = passing_yards_after_catch / games,

passing_first_downsPerGame = passing_first_downs / games,

#passing_epaPerGame = passing_epa / games,

#passing_cpoePerGame = passing_cpoe / games,

passing_2pt_conversionsPerGame = passing_2pt_conversions / games,

#pacrPerGame = pacr / games

pass_40_ydsPerGame = pass_40_yds / games,

pass_incPerGame = pass_inc / games,

#pass_comp_pctPerGame = pass_comp_pct / games,

fumblesPerGame = fumbles / games

)

nfl_nextGenStats_seasonal <- nfl_nextGenStats_weekly %>%

filter(season_type == "REG") %>%

select(-any_of(c("week","season_type","player_display_name","player_position","team_abbr","player_first_name","player_last_name","player_jersey_number","player_short_name"))) %>%

group_by(player_gsis_id, season) %>%

summarise(

across(everything(),

~ mean(.x, na.rm = TRUE)),

.groups = "drop")

22.1.3.2 Merge Data

22.1.3.3 Specify Variables


faVars <- c(

"completionsPerGame","attemptsPerGame","passing_yardsPerGame","passing_tdsPerGame",

"passing_air_yardsPerGame","passing_yards_after_catchPerGame","passing_first_downsPerGame",

"avg_completed_air_yards","avg_intended_air_yards","aggressiveness","max_completed_air_distance",

"avg_air_distance","max_air_distance","avg_air_yards_to_sticks","passing_cpoe","pass_comp_pct",

"passer_rating","completion_percentage_above_expectation"

)

22.1.3.4 Subset Data

22.1.3.5 Standardize Variables

Standardizing variables is not strictly necessary in factor analysis; however, standardization can be helpful to prevent some variables from having considerably larger variances than others.
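
As a minimal sketch of what standardization does, the example below uses a small made-up data frame (`toy` is hypothetical; its column names are borrowed from `faVars` purely for illustration) and base R's `scale()` function.

```r
# Toy data with made-up values (not taken from the player data)
toy <- data.frame(
  passing_yardsPerGame = c(250, 180, 310, 220, 275),
  passing_tdsPerGame   = c(1.8, 0.9, 2.4, 1.2, 2.0)
)

# Before standardizing, the variances differ by orders of magnitude
sapply(toy, var)

# scale() centers each column at 0 and rescales it to a standard deviation of 1
toy_z <- as.data.frame(scale(toy))

round(sapply(toy_z, mean), 10)  # means are 0 (up to rounding)
sapply(toy_z, sd)               # standard deviations are 1
```

After standardizing, every variable contributes one unit of variance, so no single variable dominates merely because of its measurement scale.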

22.2 Overview of Factor Analysis

Factor analysis involves the estimation of latent variables. Latent variables are ways of studying and operationalizing theoretical constructs that cannot be directly observed or quantified. Factor analysis is a class of latent variable models that is designed to identify the structure of a measure or set of measures, and ideally, a construct or set of constructs. It aims to identify the optimal latent structure for a group of variables. The goal of factor analysis is to identify simple, parsimonious factors that underlie the “junk” (i.e., scores filled with measurement error) that we observe. That is, similar to principal component analysis, factor analysis can help us perform data reduction; i.e., it can help us reduce a larger set of variables into a smaller set of factors.

Factor analysis encompasses two general types: confirmatory factor analysis and exploratory factor analysis. Exploratory factor analysis (EFA) is a latent variable modeling approach that is used when the researcher has no a priori hypotheses about how a set of variables is structured. EFA seeks to identify the empirically optimal-fitting model in ways that balance accuracy (i.e., variance accounted for) and parsimony (i.e., simplicity). Confirmatory factor analysis (CFA) is a latent variable modeling approach that is used when a researcher wants to evaluate how well a hypothesized model fits, and the model can be examined in comparison to alternative models. Using a CFA approach, the researcher can pit models representing two theoretical frameworks against each other to see which better accounts for the observed data.

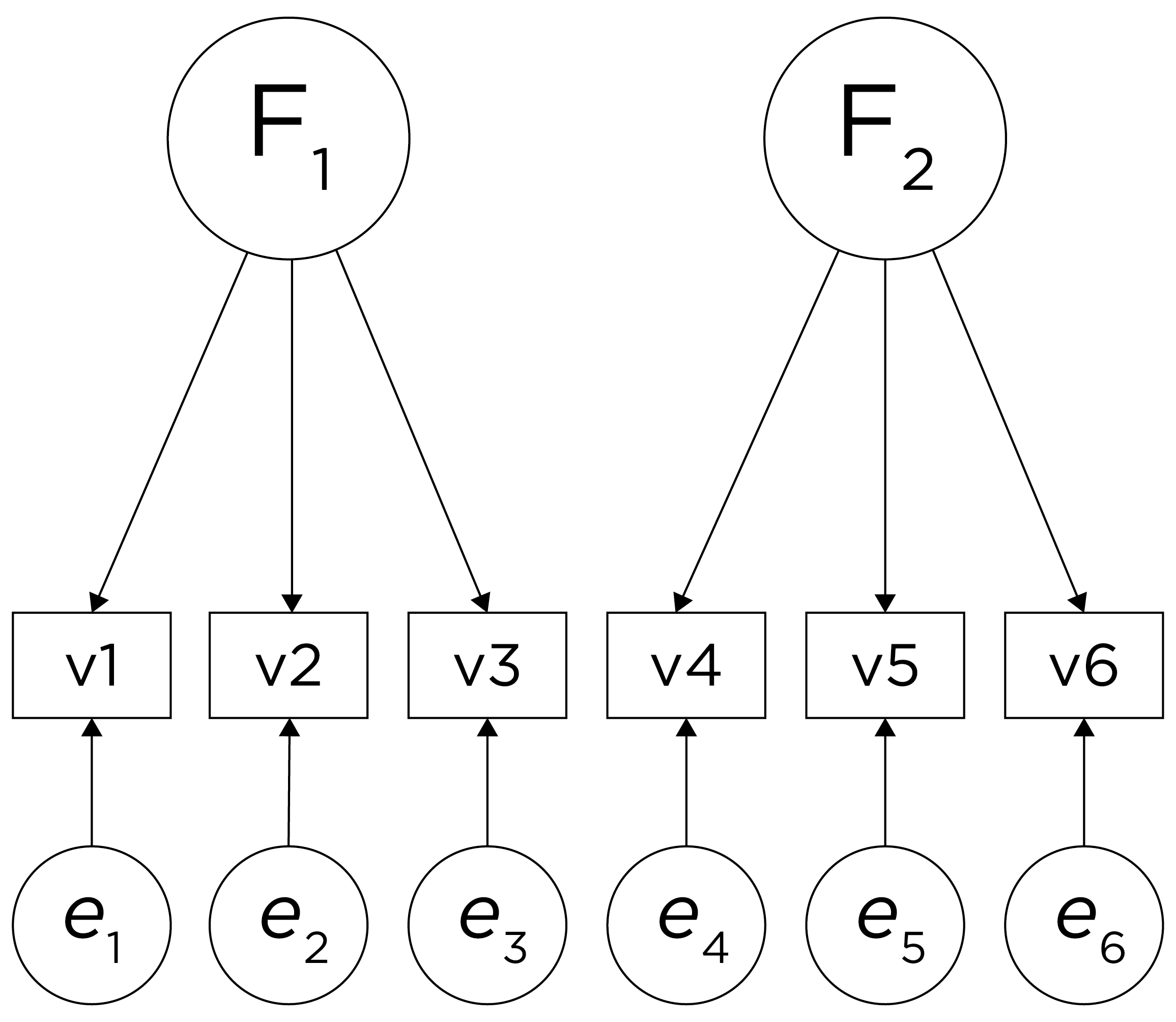

Factor analysis involves observed (manifest) variables and unobserved (latent) factors. Factor analysis assumes that the latent factor influences the manifest variables, and the latent factor therefore reflects the common variance among the variables. A factor model potentially includes factor loadings, residuals (errors or disturbances), intercepts/means, covariances, and regression paths. When depicting a factor analysis model, rectangles represent variables we observe (i.e., manifest variables), and circles represent latent (i.e., unobserved) variables. A regression path indicates a hypothesis that one variable (or factor) influences another, and it is depicted using a single-headed arrow. The standardized regression coefficient (i.e., beta or \(\beta\)) represents the strength of association between the variables or factors. A factor loading is a regression path from a latent factor to an observed (manifest) variable. The standardized factor loading represents the strength of association between the variable and the latent factor, where conceptually, it is intended to reflect the magnitude that the latent factor influences the observed variable. A residual is variance in a variable (or factor) that is unexplained by other variables or factors. A variable’s intercept is the expected value of the variable when the factor(s) (onto which it loads) is equal to zero. A covariance is the unstandardized index of the strength of association between two variables (or factors), and it is depicted with a double-headed arrow.

Because a covariance is unstandardized, its scale depends on the scales of the variables. The covariance between two variables is the average product of their deviations from their respective means, as in Equation 10.1. The covariance of a variable with itself is equivalent to its variance, as in Equation 10.2. By contrast, a correlation is a standardized index of the strength of association between two variables. Because a correlation is standardized (bounded between −1 and 1), its scale does not depend on the scales of the variables. A covariance path between two variables represents omitted shared cause(s) of the variables. For instance, if you depict a covariance path between two variables, it means that there is a shared cause of the two variables that is omitted from the model (for instance, if the common cause is not known or was not assessed).
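
The scale dependence of a covariance, and the scale invariance of a correlation, can be illustrated with a short sketch. The data below are simulated, and the variable names (`yards`, `tds`) are hypothetical stand-ins rather than columns from the player data.

```r
set.seed(123)
yards <- rnorm(200, mean = 230, sd = 40)        # simulated passing yards
tds   <- 0.008 * yards + rnorm(200, sd = 0.4)   # simulated touchdowns

# The covariance of a variable with itself is its variance
all.equal(cov(yards, yards), var(yards))

# Changing the units (yards to feet) multiplies the covariance by 3 ...
cov(yards, tds)
cov(yards * 3, tds)

# ... but leaves the correlation unchanged
cor(yards, tds)
cor(yards * 3, tds)
```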

In factor analysis, the relation between an indicator (\(\text{X}\)) and its underlying latent factor(s) (\(\text{F}\)) can be represented with a regression formula as in Equation 22.1:

\[ X = \lambda \cdot \text{F} + \text{Item Intercept} + \text{Error Term} \tag{22.1}\]

where:

- \(\text{X}\) is the observed value of the indicator

- \(\lambda\) is the factor loading, indicating the strength of the association between the indicator and the latent factor(s)

- \(\text{F}\) is the person’s value on the latent factor(s)

- \(\text{Item Intercept}\) represents the constant term that accounts for the expected value of the indicator when the latent factor(s) are zero

- \(\text{Error Term}\) is the residual, indicating the extent of variance in the indicator that is not explained by the latent factor(s)

When the latent factors are uncorrelated, the (standardized) residual variance for an indicator is calculated as 1 minus the sum of squared standardized factor loadings for that indicator (including cross-loadings). A cross-loading is when a variable loads onto more than one latent factor.
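
As a worked example of this calculation, consider a hypothetical indicator with standardized loadings of .70 and .30 on two uncorrelated factors (the loadings are made up for illustration). The sketch also simulates data from Equation 22.1, assuming an item intercept of zero and standardized factors, to check that the arithmetic behaves as described.

```r
# Hypothetical standardized loadings of one indicator on two uncorrelated factors
lambda <- c(F1 = 0.70, F2 = 0.30)   # the 0.30 is a cross-loading

communality <- sum(lambda^2)        # variance explained by the factors: 0.58
residual    <- 1 - communality      # standardized residual variance: 0.42
c(communality = communality, residual = residual)

# Simulation check of Equation 22.1 (intercept = 0, standardized factors)
set.seed(42)
n  <- 100000
F1 <- rnorm(n)
F2 <- rnorm(n)                      # generated independently of F1
X  <- lambda[["F1"]] * F1 + lambda[["F2"]] * F2 + rnorm(n, sd = sqrt(residual))

var(X)       # close to 1, because the indicator is standardized
cor(X, F1)   # close to the loading of 0.70
```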

Factor analysis is a powerful technique to help identify the factor structure that underlies a measure or construct. However, given the extensive method variance that influences scores on a measure, factor analysis (and principal component analysis) tends to extract method factors. Method factors are factors that reflect the methods used to assess the construct rather than the construct of interest. To better estimate construct factors, it is sometimes necessary to estimate both construct and method factors.

Before pursuing factor analysis, it is helpful to evaluate the extent to which the variables are intercorrelated. If the variables are not correlated (or are correlated only weakly), there is no reason to believe that a common factor influences them, and thus factor analysis would not be useful. So, before conducting a factor analysis, it is important to examine the correlation matrix of the variables to determine whether the variables intercorrelate strongly enough to justify a factor analysis.
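
A minimal sketch of this check is below. It uses simulated data (the variables `v1` to `v3` and `noise` are hypothetical) in which three variables share a common factor and one does not, and it summarizes each variable's average absolute correlation with the others.

```r
set.seed(1)
n <- 300
f <- rnorm(n)                          # one simulated common factor
dat <- data.frame(
  v1    = 0.8 * f + rnorm(n, sd = 0.6),
  v2    = 0.7 * f + rnorm(n, sd = 0.7),
  v3    = 0.6 * f + rnorm(n, sd = 0.8),
  noise = rnorm(n)                     # unrelated to the common factor
)

# Correlation matrix of the candidate variables
R <- cor(dat, use = "pairwise.complete.obs")
round(R, 2)

# Average absolute correlation of each variable with the other variables
off_diag <- R
diag(off_diag) <- NA
round(apply(abs(off_diag), 1, mean, na.rm = TRUE), 2)
```

A variable such as `noise`, which correlates only weakly with everything else, is a poor candidate for inclusion because no common factor is likely to account for it.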

22.3 Factor Analysis and Structural Equation Modeling

Factor analysis forms the measurement model component of a structural equation model (SEM). The measurement model is what we settle on as the estimation of each construct before we add the structural component to estimate the relations among latent variables. Basically, in a structural equation model, we add the structural component onto the measurement model. For instance, our measurement model (i.e., based on factor analysis) might be to estimate, from a set of items, three latent factors: usage, aggressiveness, and performance. Our structural model then may examine what processes (e.g., sports drink consumption or sleep) influence these latent factors, how the latent factors influence each other, and what the latent factors influence (e.g., fantasy points). SEM is confirmatory factor analysis with regression paths that specify hypothesized causal relations between the latent variables (the structural component of the model). Exploratory structural equation modeling (ESEM) is a form of SEM that allows for a combination of exploratory factor analysis and confirmatory factor analysis to estimate latent variables and the relations between them.

22.4 Path Diagrams

A key tool when designing a factor analysis or structural equation model is a conceptual depiction of the hypothesized causal processes. A path diagram depicts the hypothesized causal processes that link two or more variables. Path diagrams are an example of a causal diagram and are similar to directed acyclic graphs discussed in Section 13.6. Karch (2025a) provides a tool to create lavaan R syntax from a path analytic diagram: https://lavaangui.org.

In a path analysis diagram, rectangles represent variables we observe, and circles represent latent (i.e., unobserved) variables. Single-headed arrows indicate regression paths, where conceptually, one variable is thought to influence another variable. Double-headed arrows indicate covariance paths, where conceptually, two variables are associated for some unknown reason (i.e., an omitted shared cause).

22.5 Decisions in Factor Analysis

There are five primary decisions to make in factor analysis:

- what variables to include in the model and how to scale them

- method of factor extraction: whether to use exploratory or confirmatory factor analysis

- if using exploratory factor analysis, whether and how to rotate factors

- how many factors to retain (and what variables load onto which factors)

- how to interpret and use the factors

The answer you get can differ substantially depending on the decisions you make. Below, we provide guidance for each of these decisions.

22.5.1 1. Variables to Include and their Scaling

The first decision when conducting a factor analysis is which variables to include and the scaling of those variables. What factors you extract can differ widely depending on what variables you include in the analysis. For example, if you include many variables from the same source (e.g., self-report), it is possible that you will extract a factor that represents the common variance among the variables from that source (i.e., the self-reported variables). This would be considered a method factor, which works against the goal of estimating latent factors that represent the constructs of interest (as opposed to the measurement methods used to estimate those constructs).

An additional consideration is the scaling of the variables: whether to use raw variables, to standardize the variables, or to divide some variables by a constant to make the variables’ variances more similar. Before performing a principal component analysis (PCA), it is generally important to ensure that the variables included in the PCA are on the same scale. PCA seeks to identify components that explain variance in the data, so if the variables are not on the same scale, some variables may contribute considerably more variance than others. A common way of ensuring that variables are on the same scale is to standardize them using, for example, z scores that have a mean of zero and standard deviation of one. By contrast, factor analysis can better accommodate variables that are on different scales.
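
The effect of scaling on PCA can be sketched with base R's `prcomp()`. The three variables below are simulated independently of one another (the names `yards`, `tds`, and `ints` are hypothetical), so any dominant component in the unscaled analysis reflects measurement scale alone.

```r
set.seed(2)
n <- 200
dat <- data.frame(
  yards = rnorm(n, mean = 230, sd = 40),   # large variance
  tds   = rnorm(n, mean = 1.5, sd = 0.5),  # small variance
  ints  = rnorm(n, mean = 0.8, sd = 0.3)   # small variance
)

pca_raw <- prcomp(dat, scale. = FALSE)  # PCA of the covariance matrix
pca_std <- prcomp(dat, scale. = TRUE)   # PCA of the correlation matrix

# Proportion of variance attributed to each component
round(pca_raw$sdev^2 / sum(pca_raw$sdev^2), 3)
round(pca_std$sdev^2 / sum(pca_std$sdev^2), 3)

# On the raw scale, the first component is almost entirely `yards`
round(abs(pca_raw$rotation[, 1]), 3)
```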

22.5.2 2. Method of Factor Extraction

22.5.2.1 Exploratory Factor Analysis

Exploratory factor analysis (EFA) is used if you have no a priori hypotheses about the factor structure of the model, but you would like to understand the latent variables represented by your items.

EFA is partly induced from the data. You feed in the data and let the program build the factor model. You can set some parameters going in, including how to extract or rotate the factors. The factors are extracted from the data without specifying the number and pattern of loadings between the items and the latent factors (Bollen, 2002). All cross-loadings are freely estimated.
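
As a minimal sketch of an EFA, the example below uses base R's `factanal()` (maximum likelihood factor analysis) on simulated data rather than the player data. The variable names and the two-factor generating structure are assumptions made for illustration. Notice that only the number of factors is supplied; the pattern of loadings is left for the procedure to estimate.

```r
set.seed(3)
n  <- 500
f1 <- rnorm(n)   # first simulated latent factor
f2 <- rnorm(n)   # second simulated latent factor
dat <- data.frame(
  a1 = 0.8 * f1 + rnorm(n, sd = 0.6),
  a2 = 0.7 * f1 + rnorm(n, sd = 0.7),
  a3 = 0.6 * f1 + rnorm(n, sd = 0.8),
  b1 = 0.8 * f2 + rnorm(n, sd = 0.6),
  b2 = 0.7 * f2 + rnorm(n, sd = 0.7),
  b3 = 0.6 * f2 + rnorm(n, sd = 0.8)
)

# Every variable is allowed to load on every factor
efa <- factanal(dat, factors = 2, rotation = "varimax")

# cutoff = 0 prints the small cross-loadings that are hidden by default
print(efa$loadings, cutoff = 0)

# Residual (unique) variance of each variable
round(efa$uniquenesses, 2)
```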

22.5.2.2 Confirmatory Factor Analysis

Confirmatory factor analysis (CFA) is used to (dis)confirm a priori hypotheses about the factor structure of the model. CFA is a test of the hypothesis. In CFA, you specify the model and ask how well this model represents the data. The researcher specifies the number, meaning, associations, and pattern of free parameters in the factor loading matrix (Bollen, 2002). A key advantage of CFA is the ability to directly compare alternative models (i.e., factor structures), which is valuable for theory testing (Strauss & Smith, 2009). For instance, you could use CFA to test whether the variance in several measures’ scores is best explained with one factor or two factors. In CFA, cross-loadings are not estimated unless the researcher specifies them.
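
The following sketch shows how two competing factor structures might be compared with CFA. It assumes that the `lavaan` package is installed and uses the `HolzingerSwineford1939` example data that ship with `lavaan` (not the player data), so the factor names are those of that example rather than football constructs.

```r
library(lavaan)  # assumed to be installed

# Hypothesized three-factor model: each indicator loads on one factor only
model_3f <- '
  visual  =~ x1 + x2 + x3
  textual =~ x4 + x5 + x6
  speed   =~ x7 + x8 + x9
'

# Competing one-factor model
model_1f <- '
  g =~ x1 + x2 + x3 + x4 + x5 + x6 + x7 + x8 + x9
'

fit_3f <- cfa(model_3f, data = HolzingerSwineford1939)
fit_1f <- cfa(model_1f, data = HolzingerSwineford1939)

# Selected fit indices for each model
indices <- c("chisq", "df", "cfi", "rmsea", "aic", "bic")
fitMeasures(fit_3f, indices)
fitMeasures(fit_1f, indices)

# Chi-square difference test between the nested models
anova(fit_1f, fit_3f)
```

No cross-loadings appear in either model because none were specified; adding one would require writing the indicator on the right-hand side of a second factor.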

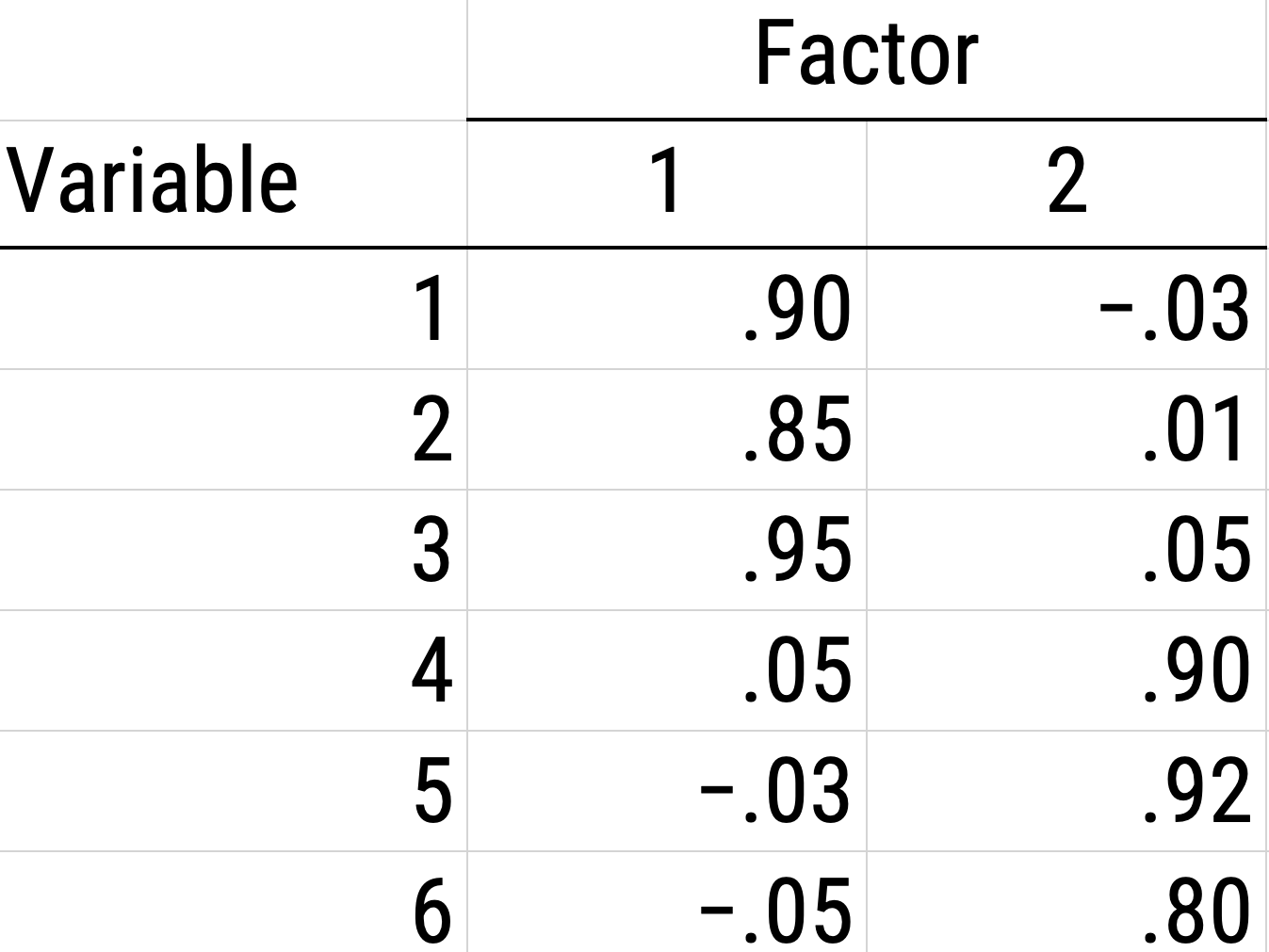

22.5.3 3. Factor Rotation

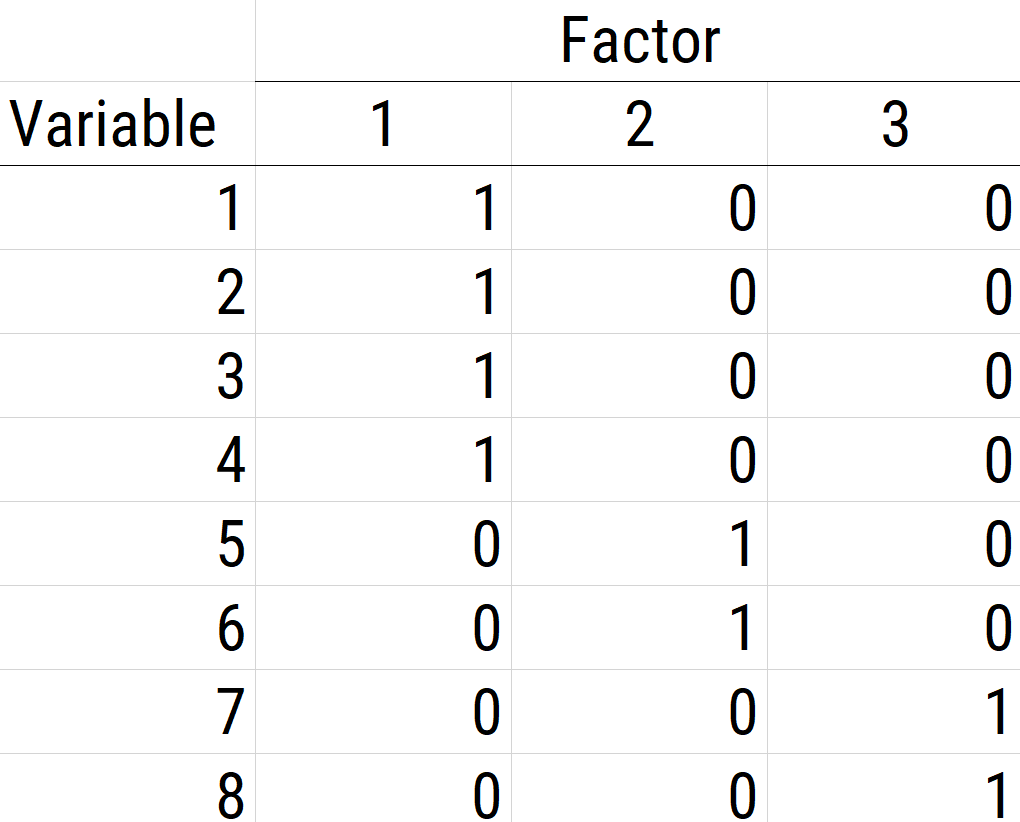

When using EFA or principal component analysis, an important (though optional) step is to rotate the factors to make them simpler and more interpretable. To interpret the results of a factor analysis, we examine the factor matrix. The columns refer to the different factors; the rows refer to the different observed variables. The cells in the table are the factor loadings; each loading is essentially the correlation between the variable and the factor. Our goal is to achieve a model with simple structure because it is easily interpretable. Simple structure means that every variable loads perfectly on one and only one factor, as operationalized by a matrix of factor loadings with values of one and zero and nothing else. An example of a factor matrix that follows simple structure is depicted in Figure 22.1.

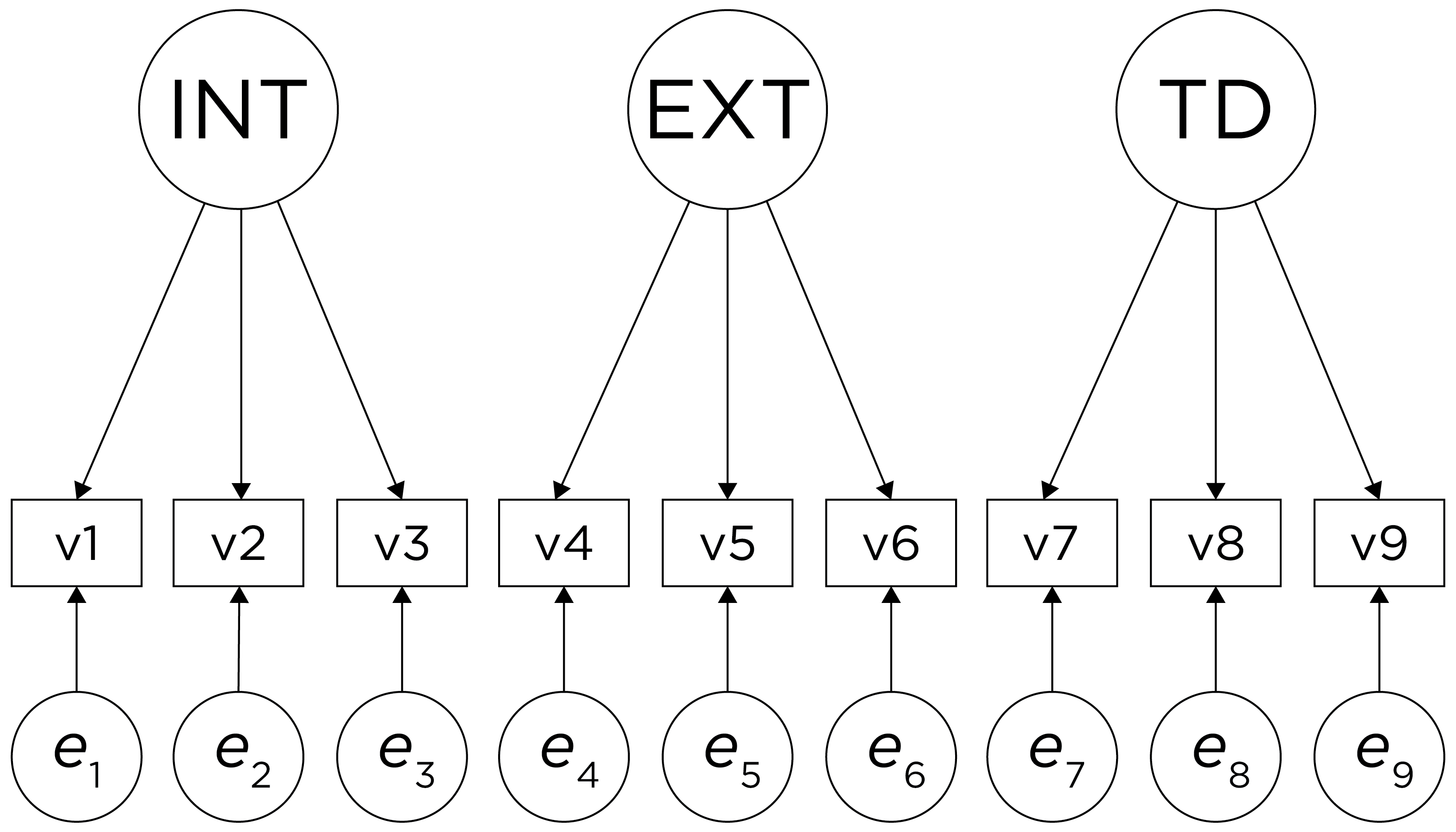

An example of a factor analysis model that follows simple structure is depicted in Figure 22.2. Each variable loads onto one and only one factor, which makes it easy to interpret the meaning of each factor, because a given factor represents the common variance among the items that load onto it.

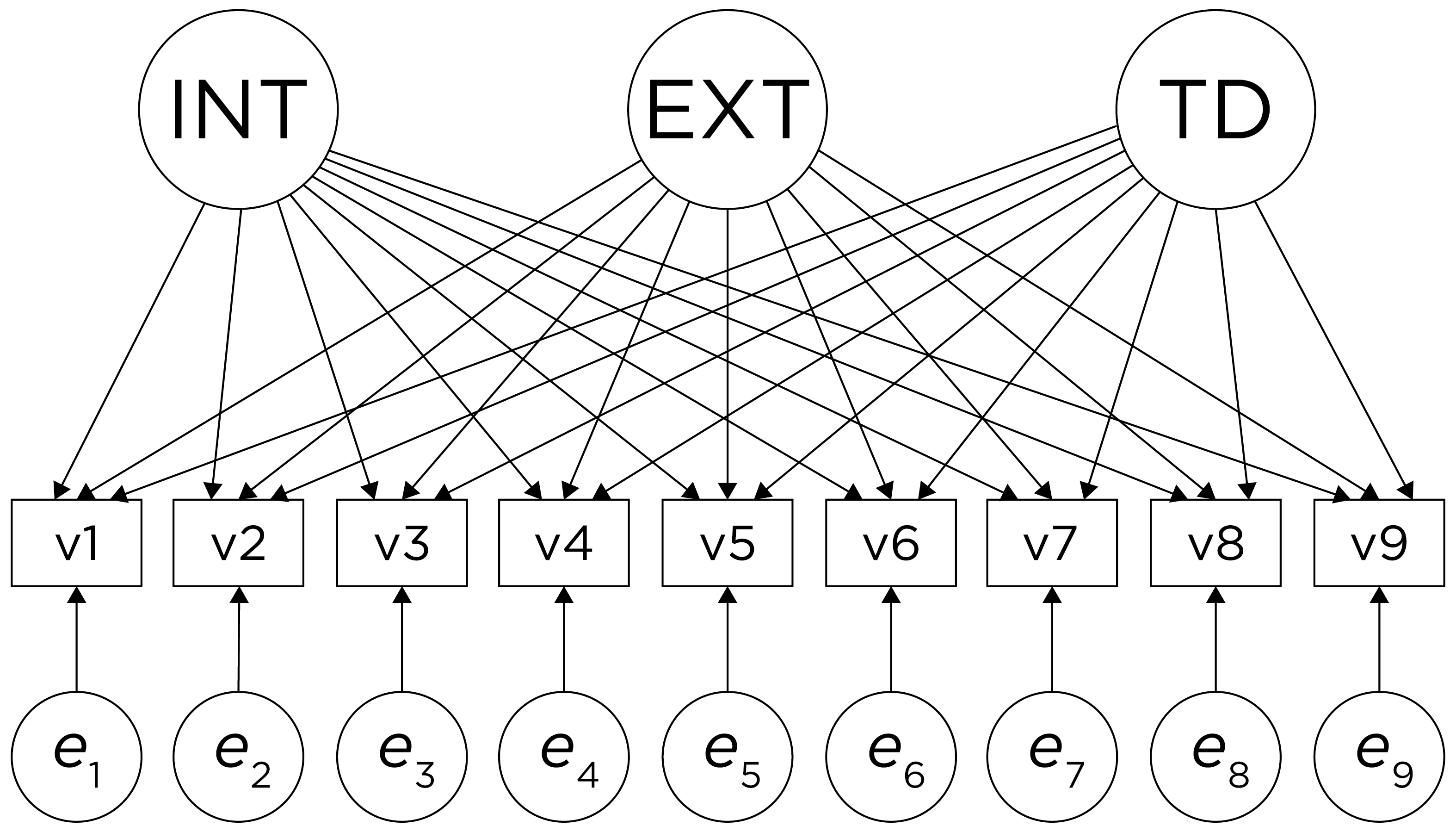

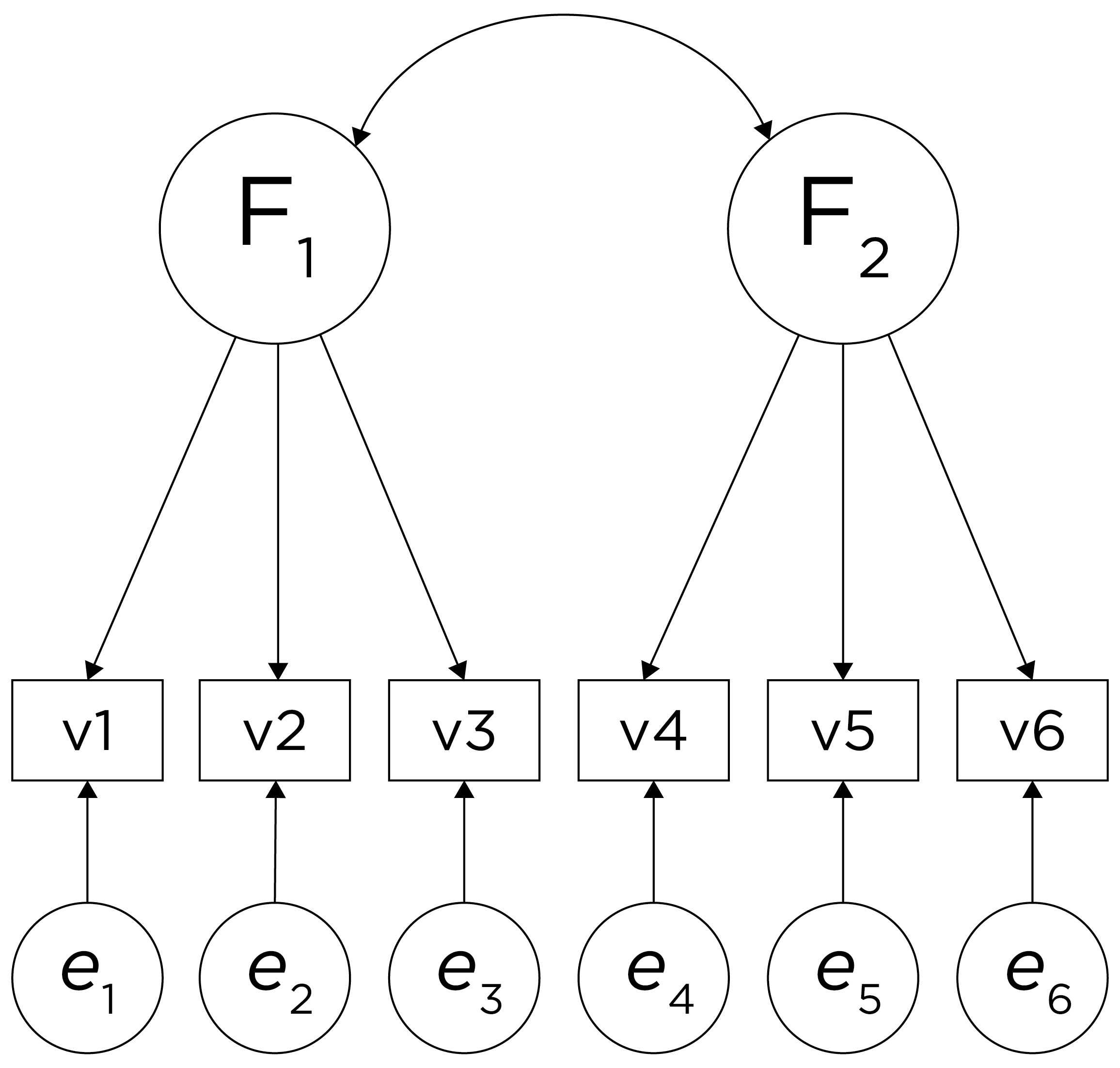

However, pure simple structure only occurs in simulations, not in real-life data. In reality, our unrotated factor analysis model might look like the model in Figure 22.3. In this example, the factor analysis model does not show simple structure because the items have cross-loadings—that is, the items load onto more than one factor. The cross-loadings make it difficult to interpret the factors, because all of the items load onto all of the factors, so the factors are not very distinct from each other, which makes it difficult to interpret what the factors mean.

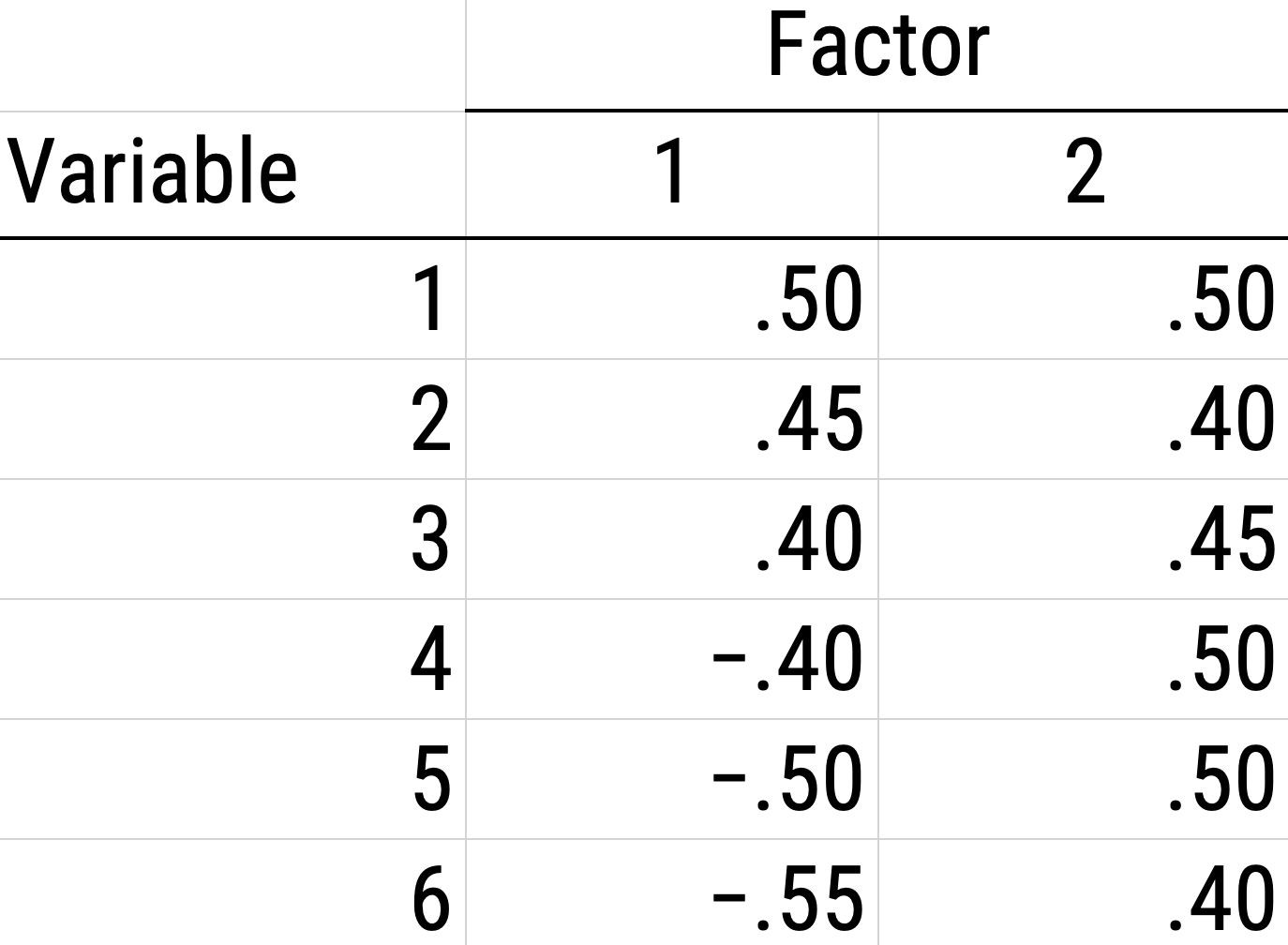

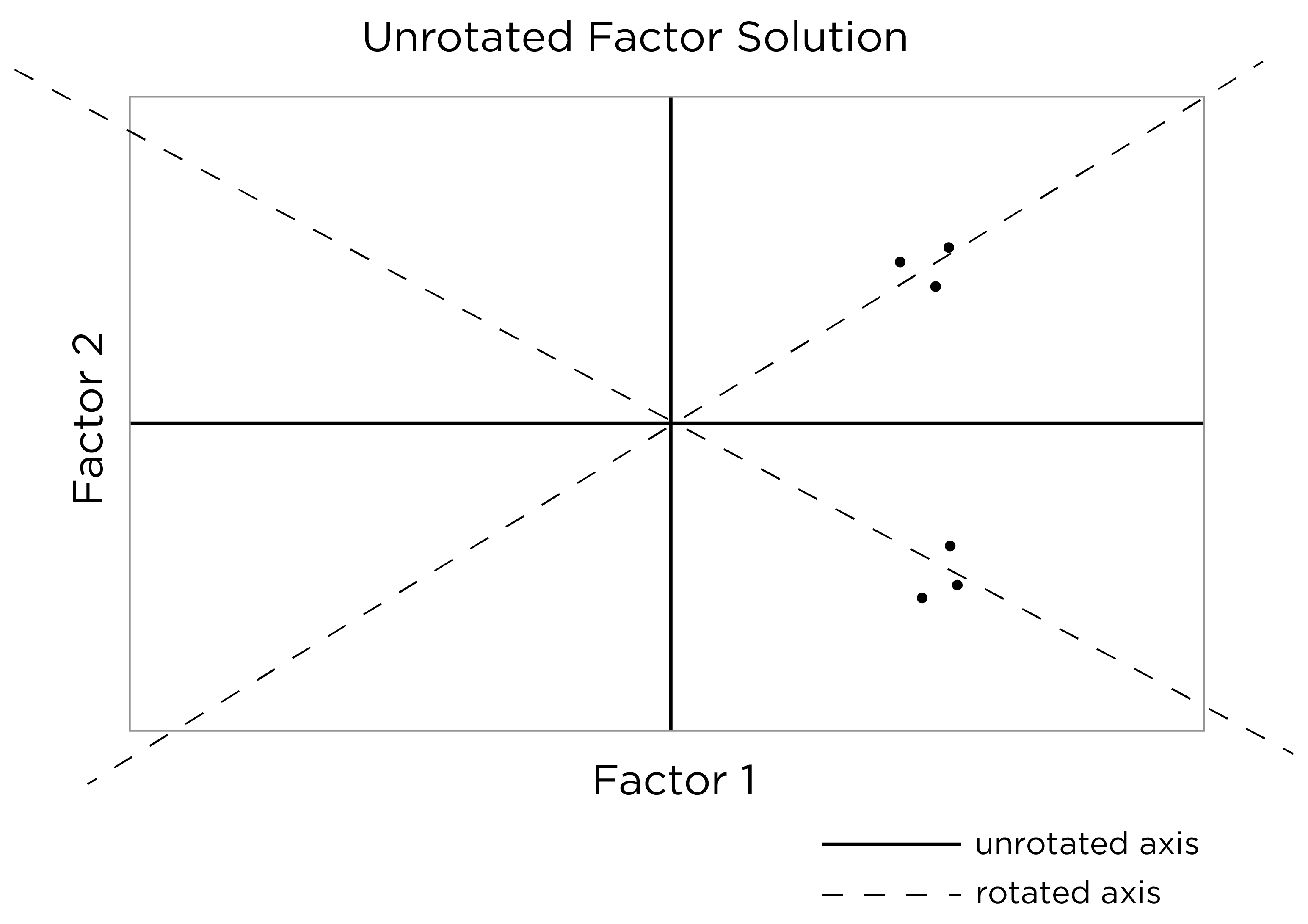

As a result of the challenges of interpretability caused by cross-loadings, factor rotations are often performed. An example of an unrotated factor matrix is in Figure 22.4.

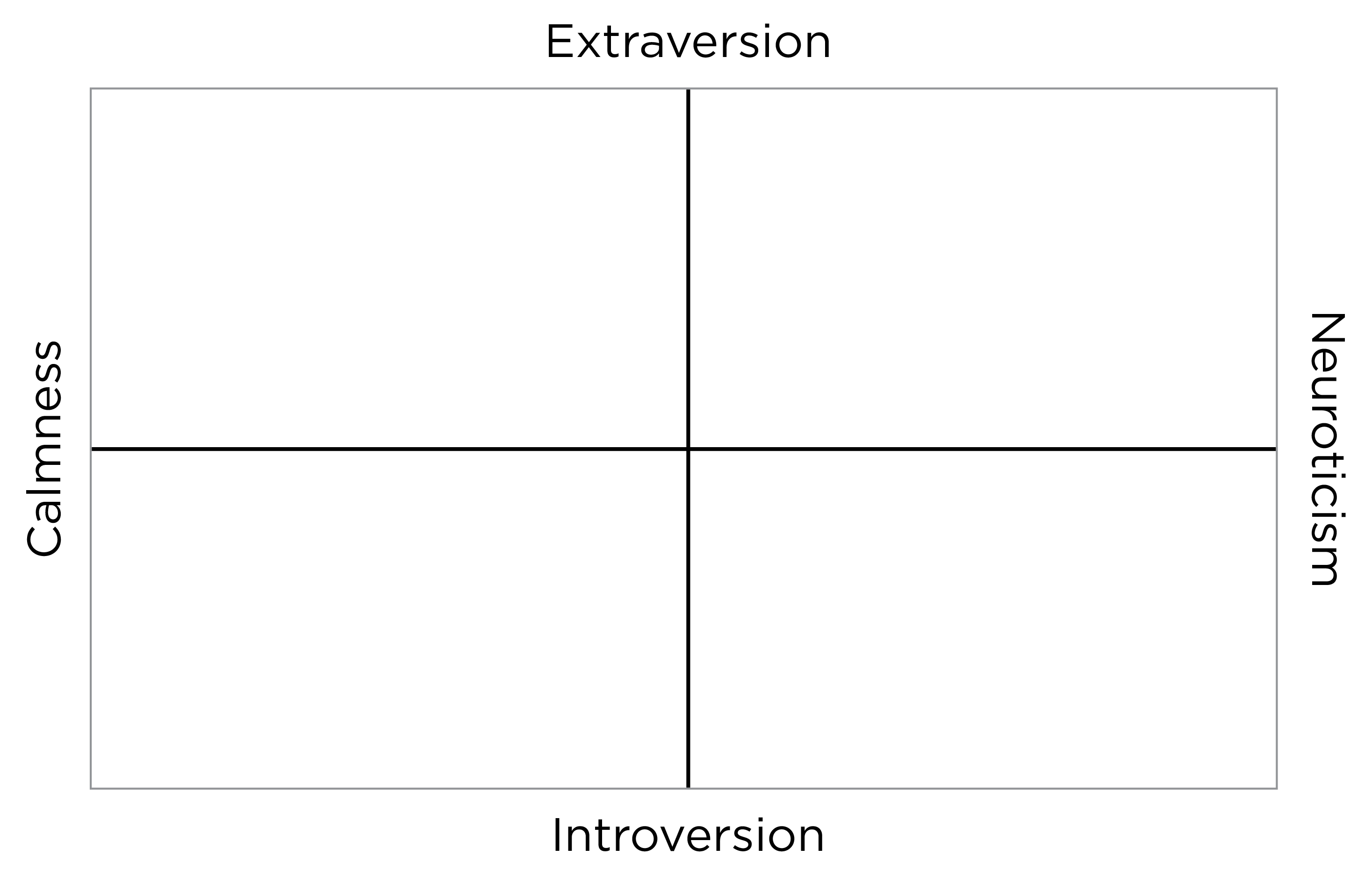

In the example factor matrix in Figure 22.4, the factor analysis is not very helpful; it tells us very little because it did not distinguish between the two factors. The variables have similar loadings on Factor 1 and Factor 2. An example of an unrotated factor solution is in Figure 22.5. In the figure, all of the variables are in the midst of the quadrants; they are not on the factors’ axes. Thus, the factors are not very informative.

As a result, to improve the interpretability of the factor analysis, we can do what is called rotation. Rotation leverages the idea that there are infinite solutions to the factor analysis model that fit equally well. Rotation involves changing the orientation of the factors by changing the axes so that variables end up with very high (close to one or negative one) or very low (close to zero) loadings, so that it is clear which factors include which variables (and which factors each variable most strongly loads onto). That is, rotation reorients the factors and tries to identify the ideal solution (factor) for each variable. Rotation occurs by changing the variables’ loadings while keeping the structure of correlations among the variables intact (Field et al., 2012). Moreover, rotation does not change the total variance explained by the factors; it only redistributes that variance across the factors. Rotation searches for simple structure and keeps searching until it finds a minimum (i.e., as close as possible to simple structure). After rotation, if the rotation was successful for imposing simple structure, each factor will have loadings close to one (or negative one) for some variables and close to zero for other variables. The goal of factor rotation is to achieve simple structure, to help make it easier to interpret the meaning of the factors.

To perform factor rotation, orthogonal rotations are often used. Orthogonal rotations make the rotated factors uncorrelated. An example of a commonly used orthogonal rotation is varimax rotation. Varimax rotation maximizes the sum of the variance of the squared loadings (i.e., so that items have either a very high or very low loading on a factor) and yields axes with a 90-degree angle.
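
A minimal sketch of an orthogonal rotation is below. It uses base R's `factanal()` and `varimax()` with the built-in `ability.cov` covariance matrix (six ability tests), which stands in for the player data purely for illustration.

```r
# Unrotated two-factor solution
fa_none <- factanal(factors = 2, covmat = ability.cov, rotation = "none")
L_unrot <- loadings(fa_none)

# Apply a varimax rotation to the unrotated loadings
vm    <- varimax(L_unrot)
L_rot <- vm$loadings

round(unclass(L_unrot), 2)   # loadings before rotation
round(unclass(L_rot), 2)     # loadings after rotation

# The rotation matrix is orthogonal, so the axes stay at a 90-degree angle
round(crossprod(vm$rotmat), 10)

# Each variable's communality (row sum of squared loadings) is unchanged
all.equal(rowSums(unclass(L_unrot)^2), rowSums(unclass(L_rot)^2))
```

The loadings change, but the variance that the factors jointly explain in each variable does not, which is why a rotated solution fits exactly as well as the unrotated one.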

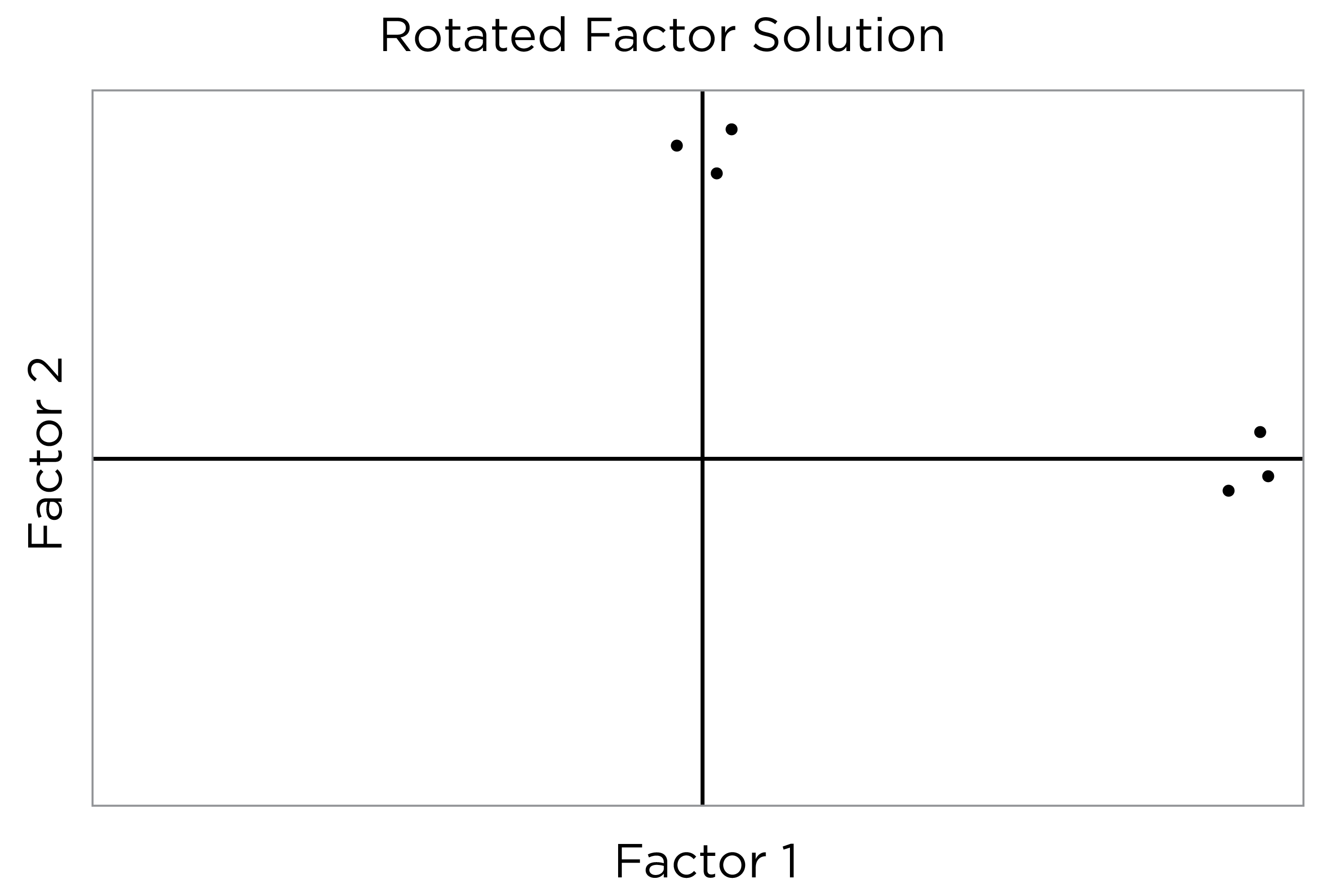

An example of a factor matrix following an orthogonal rotation is depicted in Figure 22.6. An example of a factor solution following an orthogonal rotation is depicted in Figure 22.7.

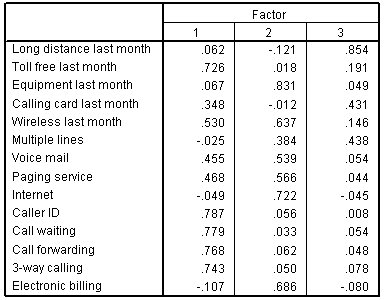

An example of a factor matrix from SPSS following an orthogonal rotation is depicted in Figure 22.8.

An example of a factor structure from an orthogonal rotation is in Figure 22.9.

Sometimes, however, the two factors and their constituent variables may be correlated. Examples of two correlated factors may be depression and anxiety. When the two factors are correlated in reality, if we make them uncorrelated, this would result in an inaccurate model. Oblique rotation allows for factors to be correlated and yields axes with an angle of less than 90 degrees. However, if the factors have low correlation (e.g., .2 or less), you can likely continue with orthogonal rotation. Nevertheless, just because an oblique rotation allows for correlated factors does not mean that the factors will be correlated, so oblique rotation provides greater flexibility than orthogonal rotation. An example of a factor structure from an oblique rotation is in Figure 22.10. Results from an oblique rotation are more complicated than orthogonal rotation—they provide lots of output and are more complicated to interpret. In addition, oblique rotation might not yield a smooth answer if you have a relatively small sample size.

As an example of rotation based on interpretability, consider the Five-Factor Model of Personality (the Big Five), which goes by the acronym OCEAN: Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. Although the five factors of personality are somewhat correlated, we can use rotation to ensure they are maximally independent. Upon rotation, extraversion and neuroticism are essentially uncorrelated, as depicted in Figure 22.11. The other pole of extraversion is introversion and the other pole of neuroticism might be emotional stability or calmness.

Simple structure is achieved when each variable loads highly onto as few factors as possible (i.e., each item has only one significant or primary loading). Oftentimes this is not the case, so we choose our rotation method in order to decide if the factors can be correlated (an oblique rotation) or if the factors will be uncorrelated (an orthogonal rotation). If the factors are not correlated with each other, use an orthogonal rotation. The correlation between an item and a factor is a factor loading, which is simply a way to ask how much a variable is correlated with the underlying factor. However, its interpretation is more complicated if there are correlated factors!

An orthogonal rotation (e.g., varimax) can help with simplicity of interpretation because it seeks to yield simple structure without cross-loadings. Cross-loadings are instances where a variable loads onto multiple factors. My recommendation would always be to use an orthogonal rotation if you have reason to believe that finding simple structure in your data is possible; otherwise, the factors are extremely difficult to interpret—what exactly does a cross-loading even mean? However, you should always try an oblique rotation, too, to see how strongly the factors are correlated. Examples of oblique rotations include oblimin and promax.
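
To see how strongly the factors are correlated under an oblique rotation, one option is sketched below using base R's `promax()`. As an assumption for illustration, it again uses the built-in `ability.cov` covariance matrix rather than the player data. The factor correlation matrix is recovered from the rotation matrix that `promax()` returns.

```r
# Unrotated two-factor solution
fa_none <- factanal(factors = 2, covmat = ability.cov, rotation = "none")

# Oblique (promax) rotation of the unrotated loadings
pm <- promax(loadings(fa_none))
round(unclass(pm$loadings), 2)

# Correlation matrix of the rotated factors, implied by the rotation matrix
phi <- solve(crossprod(pm$rotmat))
round(phi, 2)

# Rough rule of thumb from the text: small correlations suggest that an
# orthogonal rotation may be adequate
abs(phi[1, 2]) <= 0.2
```

If the off-diagonal value is well above .2, forcing the factors to be uncorrelated would likely misrepresent the data.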

22.5.4 4. Determining the Number of Factors to Retain

A goal of factor analysis and principal component analysis is simplification or parsimony, while still explaining as much variance as possible. The hope is that you can have fewer factors that explain the associations between the variables than the number of observed variables. It does not make sense to replace 18 variables with 18 latent factors because that would not result in any simplification. The fewer the number of factors retained, the greater the parsimony, but the greater the amount of information that is discarded (i.e., the less the variance accounted for in the variables). The more factors we retain, the less the amount of information that is discarded (i.e., more variance is accounted for in the variables), but the less the parsimony. But how do you decide on the number of factors?

There are a number of criteria that one can use to help determine how many factors/components to keep:

- Kaiser-Guttman criterion (Kaiser, 1960): factors with eigenvalues greater than zero

- or, for principal component analysis, components with eigenvalues greater than 1

- Cattell’s scree test (Cattell, 1966): the “elbow” (inflection point) in a scree plot minus one; sometimes operationalized with optimal coordinates (OC) or the acceleration factor (AF)

- Parallel analysis: factors that explain more variance than randomly simulated data

- Very simple structure (VSS) criterion: larger is better

- Velicer’s minimum average partial (MAP) test: smaller is better

- Akaike information criterion (AIC): smaller is better

- Bayesian information criterion (BIC): smaller is better

- Sample size-adjusted BIC (SABIC): smaller is better

- Root mean square error of approximation (RMSEA): smaller is better

- Chi-square difference test: smaller is better; a significant test indicates that the more complex model is significantly better fitting than the less complex model

- Standardized root mean square residual (SRMR): smaller is better

- Comparative Fit Index (CFI): larger is better

- Tucker Lewis Index (TLI): larger is better

There is not necessarily a “correct” criterion to use in determining how many factors to keep, so it is generally recommended that researchers use multiple criteria in combination with theory and interpretability.

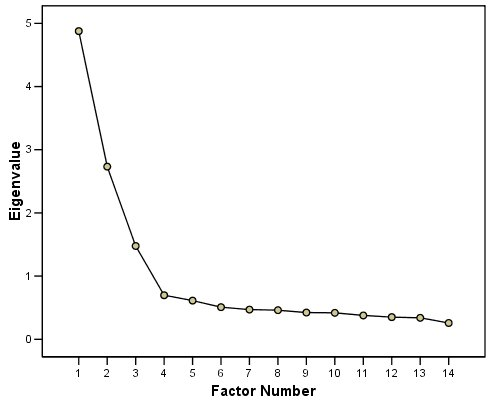

A scree plot provides lots of information. A scree plot has the factor number on the x-axis and the eigenvalue on the y-axis. The eigenvalue is the variance accounted for by a factor; when using a varimax (orthogonal) rotation, an eigenvalue (or factor variance) is calculated as the sum of squared standardized factor (or component) loadings on that factor. An example of a scree plot is in Figure 22.12.

The total variance is equal to the number of variables you have, so one eigenvalue is approximately one variable’s worth of variance. The first factor accounts for the most variance, the second factor accounts for the second-most variance, and so on. The more factors you add, the less variance is explained by the additional factor.
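
These properties of eigenvalues can be verified with a short sketch. As an assumption for illustration, it uses the built-in `ability.cov` covariance matrix (six variables) and computes eigenvalues of the full correlation matrix, which corresponds to a principal component analysis.

```r
# Convert the built-in covariance matrix to a correlation matrix
R  <- cov2cor(ability.cov$cov)
ev <- eigen(R, symmetric = TRUE, only.values = TRUE)$values

round(ev, 2)             # eigenvalues, in decreasing order
sum(ev)                  # equals the number of variables (6)
round(ev / sum(ev), 2)   # proportion of total variance per component

# Scree plot: component number on the x-axis, eigenvalue on the y-axis
plot(ev, type = "b", xlab = "Component number", ylab = "Eigenvalue")
abline(h = 1, lty = 2)   # reference line at one variable's worth of variance
```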

One criterion for how many factors to keep is the Kaiser-Guttman criterion. According to the Kaiser-Guttman criterion (Kaiser, 1960), you should keep any principal components (from PCA) whose eigenvalue is greater than 1 and factors (from factor analysis) whose eigenvalue is greater than 0. That is, in PCA, for the sake of simplicity, parsimony, and data reduction, you should keep any components that explain more than a single variable would explain. According to the Kaiser-Guttman criterion, we would keep three components from Figure 22.12 that have eigenvalues greater than 1. The default in SPSS is to retain factors with eigenvalues greater than 1. However, keeping factors whose eigenvalue is greater than 1 is not an especially accurate rule. If you let SPSS do this, you may get many factors with eigenvalues around 1 (e.g., factors with an eigenvalue ~ 1.0001) that do not add enough to be worth the added complexity. The Kaiser-Guttman criterion usually results in keeping too many factors. Factors with small eigenvalues around 1 could reflect error shared across variables. For instance, factors with small eigenvalues could reflect method variance (i.e., a method factor), such as a self-report factor that turns up as a factor in factor analysis, but that may be useless to you as a conceptual factor of a construct of interest.

Another criterion is Cattell’s scree test (Cattell, 1966), which involves selecting the number of factors from looking at the scree plot. “Scree” refers to the rubble of stones at the bottom of a mountain. According to Cattell’s scree test, you should keep the factors before the last steep drop in eigenvalues (i.e., the factors before the rubble, where the slope approaches zero). The beginning of the scree (or rubble), where the slope approaches zero, is called the “elbow” of a scree plot. Using Cattell’s scree test, you retain the factors that explain the most variance prior to the drop-off in explained variance because, ultimately, you want to include only those factors whose inclusion gains you substantially more explained variance. That is, you would keep the number of factors at the elbow of the scree plot minus one. If the last steep drop occurs from Factor 4 to Factor 5 and the elbow is at Factor 5, we would keep four factors. In Figure 22.12, the last steep drop in eigenvalues occurs from Factor 3 to Factor 4; the elbow of the scree plot occurs at Factor 4. We would keep the number of factors at the elbow minus one. Thus, using Cattell’s scree test, we would keep three factors based on Figure 22.12.

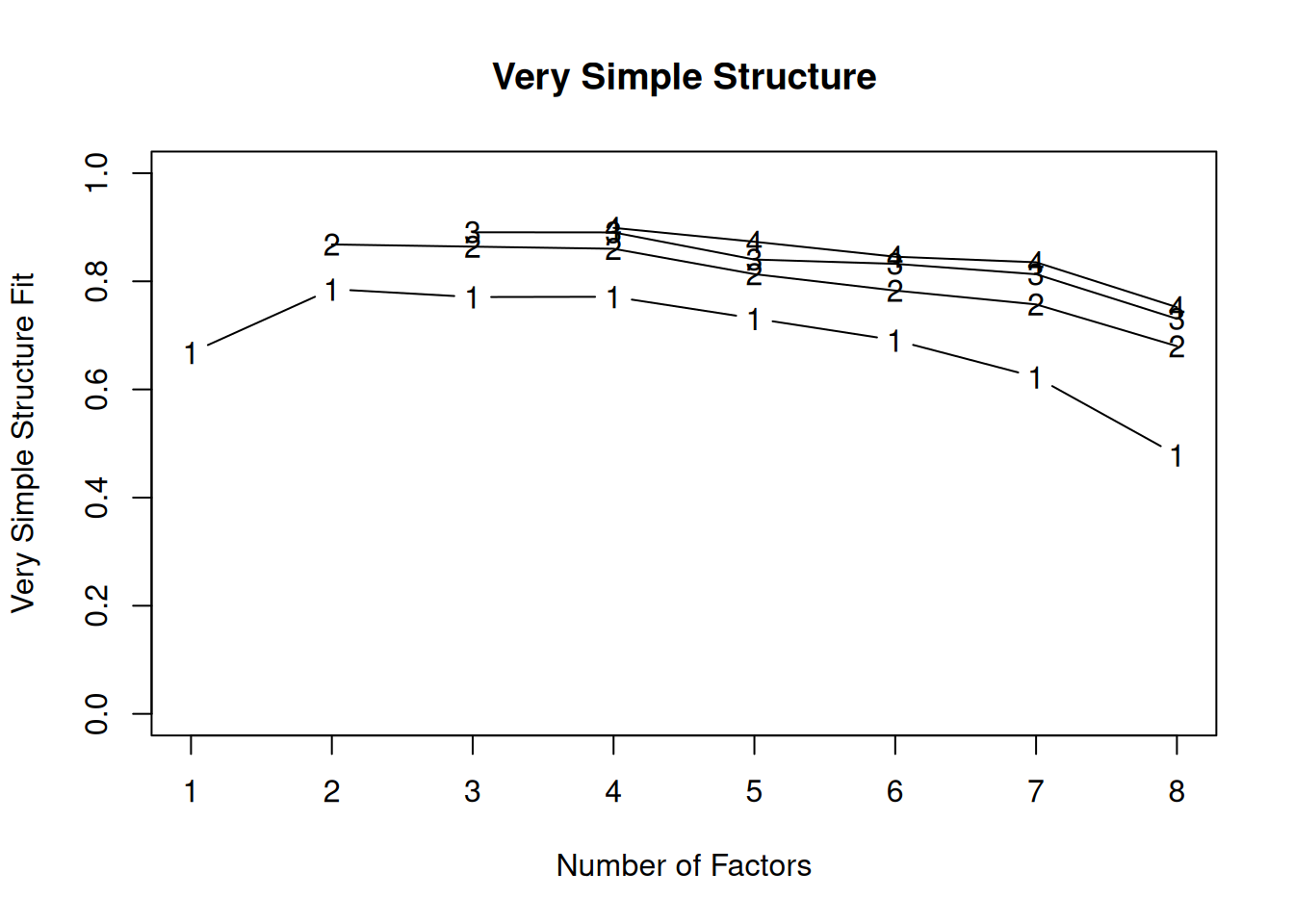

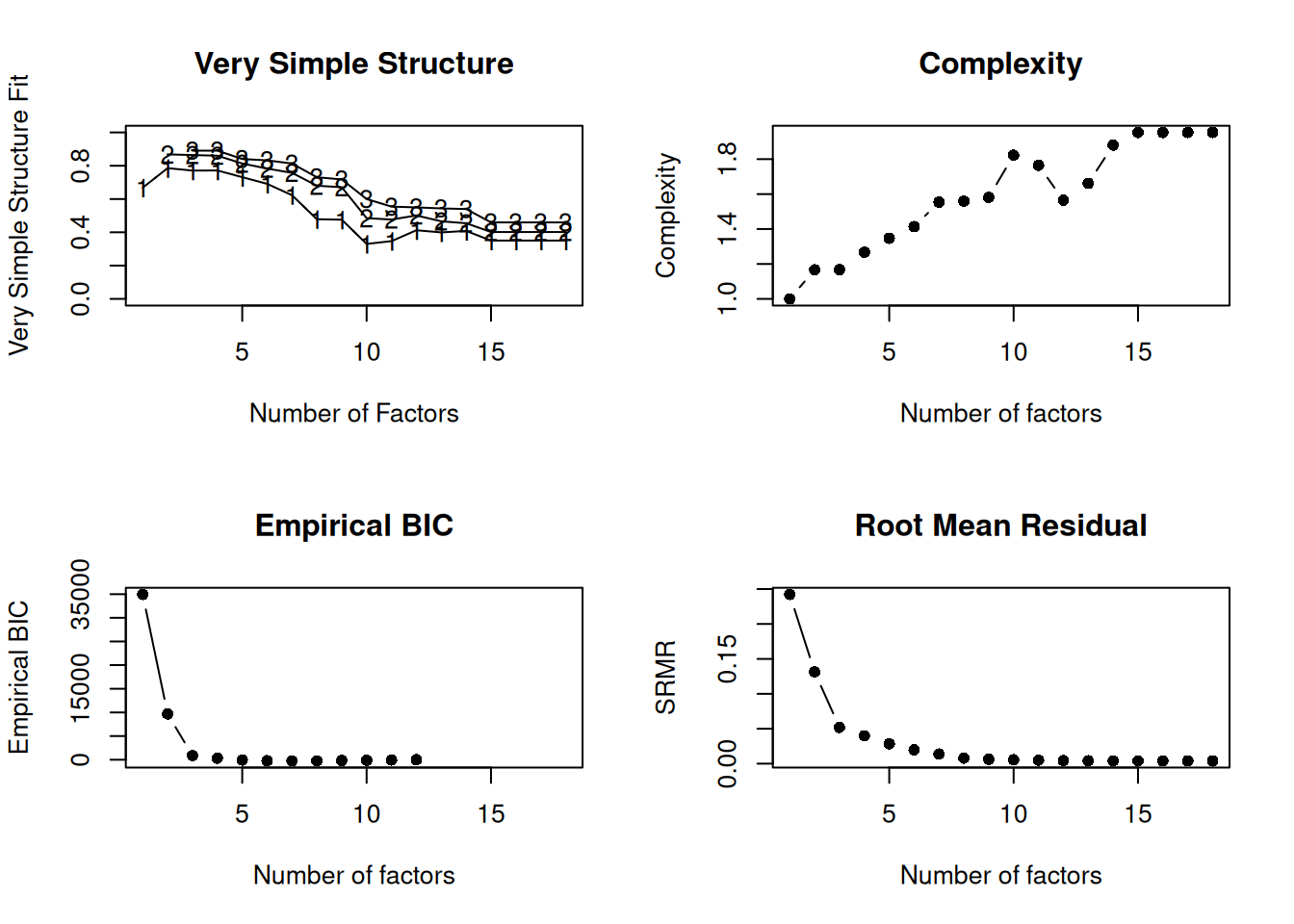

There are more sophisticated ways of using a scree plot, but they usually end up at a similar decision. Examples of more sophisticated tests include parallel analysis and very simple structure (VSS) plots. In a parallel analysis, you examine where the eigenvalues from observed data and random data converge, so you do not retain a factor that explains less variance than would be expected by random chance. Using the VSS criterion, the optimal number of factors to retain is the number of factors that maximizes the VSS criterion (Revelle & Rocklin, 1979). The VSS criterion is evaluated with models in which factor loadings for a given item that are less than the maximum factor loading for that item are suppressed to zero, thus forcing simple structure (i.e., no cross-loadings). The goal is finding a factor structure with interpretability so that factors are clearly distinguishable. Thus, we want to identify the number of factors with the highest VSS criterion.
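
The logic of parallel analysis can be sketched in base R. The version below is a simplified illustration rather than a full implementation: it compares eigenvalues of the observed correlation matrix (i.e., component eigenvalues) against the 95th percentile of eigenvalues from random normal data of the same dimensions. The data are simulated from two factors, and the variable names, the number of simulations, and the percentile are assumptions chosen for the example.

```r
set.seed(10)
n  <- 400
f1 <- rnorm(n)
f2 <- rnorm(n)
dat <- data.frame(
  a1 = 0.8 * f1 + rnorm(n, sd = 0.6),
  a2 = 0.7 * f1 + rnorm(n, sd = 0.7),
  a3 = 0.6 * f1 + rnorm(n, sd = 0.8),
  b1 = 0.8 * f2 + rnorm(n, sd = 0.6),
  b2 = 0.7 * f2 + rnorm(n, sd = 0.7),
  b3 = 0.6 * f2 + rnorm(n, sd = 0.8)
)
p <- ncol(dat)

# Eigenvalues of the observed correlation matrix
obs_ev <- eigen(cor(dat), symmetric = TRUE, only.values = TRUE)$values

# Eigenvalues from random, uncorrelated data with the same n and p
n_sims  <- 200
rand_ev <- replicate(n_sims, {
  random_data <- matrix(rnorm(n * p), nrow = n)
  eigen(cor(random_data), symmetric = TRUE, only.values = TRUE)$values
})
threshold <- apply(rand_ev, 1, quantile, probs = 0.95)

round(rbind(observed = obs_ev, random_95th = threshold), 2)

# Retain the leading components whose eigenvalues exceed the random threshold
exceeds  <- obs_ev > threshold
n_retain <- if (all(exceeds)) p else which(!exceeds)[1] - 1
n_retain
```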

In general, my recommendation is to use Cattell’s scree test, and then test the factor solutions with plus or minus one factor, in addition to examining model fit. You should never accept factors with eigenvalues less than zero (or components from PCA with eigenvalues less than one), because they are likely to be largely composed of error. If you are using maximum likelihood factor analysis, you can compare the fit of various models with model fit criteria to see which model fits best given its parsimony. A model will always fit better when you add additional parameters or factors, so you examine whether there is significant improvement in model fit when adding the additional factor; that is, we keep adding complexity until additional complexity does not buy us much. Always try a factor solution that is one less and one more than suggested by Cattell’s scree test to buffer your final solution because the purpose of factor analysis is to explain things and to have interpretability. Even if all rules or indicators suggest keeping X number of factors, maybe \(\pm\) one factor helps clarify things. Even though factor analysis is empirical, theory and interpretability should also inform decisions.
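
As a sketch of comparing solutions that differ by one factor, the example below fits one- and two-factor maximum likelihood models with base R's `factanal()` and tabulates the chi-square test of whether each number of factors is sufficient. It uses the built-in `ability.cov` covariance matrix as a stand-in for the player data; with six variables, at most two factors leave positive degrees of freedom for the test.

```r
# Fit maximum likelihood factor models with one and two factors
n_factors <- 1:2
fits <- lapply(n_factors, function(k) {
  factanal(factors = k, covmat = ability.cov)
})

# Test of the hypothesis that k factors are sufficient
fit_table <- data.frame(
  factors = n_factors,
  chisq   = sapply(fits, function(f) unname(f$STATISTIC)),
  df      = sapply(fits, function(f) unname(f$dof)),
  p_value = sapply(fits, function(f) unname(f$PVAL))
)
fit_table
```

A significant test suggests that the model with that number of factors shows significant misfit, so a solution with one more factor is worth examining alongside its interpretability.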

22.5.4.1 Model Fit Indices

In factor analysis, we fit a model to observed data, or to the variance-covariance matrix, and we evaluate the degree of model misfit. That is, fit indices evaluate how likely it is that a given causal model gave rise to the observed data. Various model fit indices can be used for evaluating how well a model fits the data and for comparing the fit of two competing models. Fit indices known as absolute fit indices evaluate how well the model fits relative to the best-possible fitting model (i.e., a saturated model). Examples of absolute fit indices include the chi-square test, root mean square error of approximation (RMSEA), and the standardized root mean square residual (SRMR).

The chi-square test evaluates whether the model has a significant degree of misfit relative to the best-possible fitting model (a saturated model that fits as many parameters as possible; i.e., as many parameters as there are degrees of freedom); the null hypothesis of a chi-square test is that there is no difference between the predicted data (i.e., the data that would be observed if the model were true) and the observed data. Thus, a non-significant chi-square test indicates good model fit. However, because the null hypothesis of the chi-square test is that the model-implied covariance matrix is exactly equal to the observed covariance matrix (i.e., a model of perfect fit), this may be an unrealistic comparison. Models are simplifications of reality, and our models are virtually never expected to be a perfect description of reality. Thus, we would say a model is “useful” and partially validated if “it helps us to understand the relation between variables and does a ‘reasonable’ job of matching the data…A perfect fit may be an inappropriate standard, and a high chi-square estimate may indicate what we already know—that the hypothesized model holds approximately, not perfectly.” (Bollen, 1989, p. 268). The power of the chi-square test depends on sample size, and a large sample will likely detect small differences as significantly worse than the best-possible fitting model (Bollen, 1989).

RMSEA is an index of absolute fit. Lower values indicate better fit.

SRMR is an index of absolute fit with no penalty for model complexity. Lower values indicate better fit.

There are also various fit indices known as incremental, comparative, or relative fit indices that compare whether the model fits better than the worst-possible fitting model (i.e., a “baseline” or “null” model). Incremental fit indices include a chi-square difference test, the comparative fit index (CFI), and the Tucker-Lewis index (TLI). Unlike the chi-square test comparing the model to the best-possible fitting model, a significant chi-square difference test comparing the model to the baseline model indicates better fit, i.e., that the model fits better than the worst-possible fitting model.

CFI is another relative fit index that compares the model to the worst-possible fitting model. Higher values indicate better fit.

TLI is another relative fit index. Higher values indicate better fit.
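As a rough illustration of how these indices relate to the chi-square statistics, the sketch below computes RMSEA, CFI, and TLI by hand in base R using their commonly cited formulas. The inputs are the standard (unscaled) test statistics reported for the three-factor ESEM model in Section 22.6. The formulas here use \(N\) in the RMSEA denominator; some software uses \(N - 1\), so small discrepancies across programs are possible.

```r
# Standard (unscaled) statistics copied from the three-factor ESEM output
chisq_model <- 5446.238   # user model chi-square
df_model <- 102
chisq_base <- 42942.078   # baseline (null) model chi-square
df_base <- 153
n <- 1997                 # number of observations

# Estimated noncentrality (misfit beyond what is expected by chance), floored at 0
d_model <- max(chisq_model - df_model, 0)
d_base <- max(chisq_base - df_base, 0)

# RMSEA: misfit per degree of freedom, per observation
rmsea <- sqrt(d_model / (df_model * n))

# CFI: proportional reduction in noncentrality relative to the baseline model
cfi <- 1 - d_model / max(d_base, d_model)

# TLI: compares chi-square/df ratios of the baseline and user models
ratio_base <- chisq_base / df_base
ratio_model <- chisq_model / df_model
tli <- (ratio_base - ratio_model) / (ratio_base - 1)

round(c(rmsea = rmsea, cfi = cfi, tli = tli), 3)
# rmsea 0.162, cfi 0.875, tli 0.813 (the standard values in the reported output)
```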

Parsimony fit indices include information criteria, such as the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). BIC penalizes model complexity more so than AIC. Lower AIC and BIC values indicate better fit.
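The following base R sketch shows how AIC and BIC are built from the log-likelihood and the number of free parameters, using values from the three-factor ESEM output in Section 22.6. The number of free parameters is not copied from the output; it is derived here as the number of sample moments (variances, covariances, and means of the 18 variables) minus the model degrees of freedom.

```r
# Values copied from the three-factor ESEM output
loglik <- -56723.436   # log-likelihood of the user model (H0)
n <- 1997              # number of observations
p <- 18                # number of observed variables
df_model <- 102        # model degrees of freedom

# Free parameters = sample moments (covariance matrix + means) - df
n_moments <- p * (p + 1) / 2 + p   # 171 + 18 = 189
k <- n_moments - df_model          # 87

# AIC penalizes each parameter by 2; BIC penalizes each parameter by log(N)
aic <- -2 * loglik + 2 * k
bic <- -2 * loglik + k * log(n)

round(c(k = k, aic = aic, bic = bic), 1)
```

Because \(\log(1997) \approx 7.6\) is larger than 2, each additional parameter costs more under BIC than under AIC, which is why BIC tends to favor simpler models.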

Chi-square difference tests and CFI can be used to compare two nested models. AIC and BIC can be used to compare two non-nested models.
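A chi-square difference test for two nested models can be sketched in base R as below. The chi-square values are hypothetical and assume standard (unscaled) test statistics. With robust (scaled) statistics, such as those from the MLR estimator, a simple subtraction is not appropriate and a scaled difference test is needed (in lavaan, `lavTestLRT()` is intended for this purpose).

```r
# Hypothetical (unscaled) chi-square values for two nested models
chisq_restricted <- 120.5   # more constrained model (more df)
df_restricted <- 50
chisq_full <- 98.2          # less constrained model (fewer df)
df_full <- 47

# The difference in chi-square is itself chi-square distributed,
# with df equal to the difference in df
chisq_diff <- chisq_restricted - chisq_full
df_diff <- df_restricted - df_full
p_diff <- pchisq(chisq_diff, df = df_diff, lower.tail = FALSE)

c(chisq_diff = chisq_diff, df_diff = df_diff, p_diff = p_diff)
```

A significant difference suggests that the added constraints significantly worsen fit, so the less constrained model would be preferred.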

Criteria for acceptable fit and good fit of SEM models are in Table 22.1. In addition, dynamic fit indexes have been proposed based on simulation to identify fit index cutoffs that are tailored to the characteristics of the specific model and data (McNeish & Wolf, 2023); dynamic fit indexes are available via the dynamic package (Wolf & McNeish, 2022) or with a webapp.

| SEM Fit Index | Acceptable Fit | Good Fit |
|---|---|---|
| RMSEA | \(\leq\) .08 | \(\leq\) .05 |
| CFI | \(\geq\) .90 | \(\geq\) .95 |
| TLI | \(\geq\) .90 | \(\geq\) .95 |
| SRMR | \(\leq\) .10 | \(\leq\) .08 |

However, good model fit does not necessarily indicate a true model.
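The cutoffs in Table 22.1 can be applied programmatically. The sketch below defines a small helper function, `classify_fit()`, which is hypothetical and not part of any package, and applies it to the standard fit indices reported for the three-factor ESEM model in Section 22.6. The label "poor" for values that miss the acceptable cutoff is a label chosen here, not one from the table.

```r
# Hypothetical helper: classify a fit index value against the Table 22.1 cutoffs
classify_fit <- function(value, acceptable, good, lower_is_better = TRUE) {
  if (lower_is_better) {
    if (value <= good) "good" else if (value <= acceptable) "acceptable" else "poor"
  } else {
    if (value >= good) "good" else if (value >= acceptable) "acceptable" else "poor"
  }
}

# Standard fit indices from the three-factor ESEM output
fit <- c(rmsea = 0.162, cfi = 0.875, tli = 0.813, srmr = 0.469)

ratings <- c(
  rmsea = classify_fit(fit[["rmsea"]], acceptable = 0.08, good = 0.05),
  cfi = classify_fit(fit[["cfi"]], acceptable = 0.90, good = 0.95, lower_is_better = FALSE),
  tli = classify_fit(fit[["tli"]], acceptable = 0.90, good = 0.95, lower_is_better = FALSE),
  srmr = classify_fit(fit[["srmr"]], acceptable = 0.10, good = 0.08)
)

ratings
```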

In addition to global fit indices, it can also be helpful to examine evidence of local fit, such as the residual covariance matrix. The residual covariance matrix represents the difference between the observed covariance matrix and the model-implied covariance matrix (the observed covariance matrix minus the model-implied covariance matrix). These difference values are called covariance residuals. Standardizing the residuals, by converting the observed and model-implied covariance matrices to correlation matrices, can be helpful for interpreting the magnitude of any local misfit. The result is known as a residual correlation matrix, which is composed of correlation residuals. Correlation residuals with an absolute value greater than .10 are possible evidence for poor local fit (Kline, 2023). If a correlation residual is positive, it suggests that the model underpredicts the observed association between the two variables (i.e., the observed covariance is greater than the model-implied covariance). If a correlation residual is negative, it suggests that the model overpredicts the observed association between the two variables (i.e., the observed covariance is smaller than the model-implied covariance). If the two variables are connected by only indirect pathways, it may be helpful to respecify the model with direct pathways between the two variables, such as a direct effect (i.e., regression path) or a covariance path. For guidance on evaluating local fit, see Kline (2024).
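The logic of correlation residuals can be sketched in base R with a toy example. The observed correlations and the factor loadings below are hypothetical. For a single-factor model with standardized loadings, the model-implied correlation between two indicators is the product of their loadings. For a fitted lavaan model, the analogous matrix is returned by `residuals()` with `type = "cor.bollen"`, as in the output shown in Section 22.6.

```r
vars <- c("x1", "x2", "x3")

# Hypothetical observed correlation matrix
observed <- matrix(
  c(1.00, 0.56, 0.60,
    0.56, 1.00, 0.35,
    0.60, 0.35, 1.00),
  nrow = 3,
  dimnames = list(vars, vars))

# Hypothetical standardized loadings on a single factor
loadings <- c(x1 = 0.8, x2 = 0.7, x3 = 0.6)

# Model-implied correlations: product of loadings (diagonal fixed to 1)
implied <- outer(loadings, loadings)
diag(implied) <- 1

# Correlation residuals: observed minus model-implied
resid_cor <- observed - implied

# Flag pairs whose absolute residual exceeds .10 (upper triangle only)
flagged <- which(abs(resid_cor) > 0.10 & upper.tri(resid_cor), arr.ind = TRUE)

data.frame(
  var1 = vars[flagged[, "row"]],
  var2 = vars[flagged[, "col"]],
  residual = resid_cor[flagged])
```

In this toy example, the x1-x3 residual is positive (.12), so the model underpredicts their association, whereas the x2-x3 residual is negative (-.07) but does not exceed the threshold.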

22.5.5 5. Interpreting and Using Latent Factors

The next step is interpreting the model and latent factors. One data matrix can lead to many different (correct) models; you must choose one based on the factor structure and theory. Use theory to interpret the model and label the factors. In latent variable models, factors have meaning. You can use them as predictors, mediators, moderators, or outcomes. When possible, it is preferable to use the factors by examining their associations with other variables in the same model. If necessary, you can extract factor scores for use in other analyses. In addition, using latent factors helps disattenuate associations for measurement error, that is, to identify what the association between variables is when random measurement error is removed.
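To give a sense of what disattenuation means, the sketch below applies the classical Spearman correction for attenuation in base R. This is a simpler analogue of what a latent variable model accomplishes, not the computation the model itself performs, and all values are hypothetical.

```r
# Hypothetical observed correlation and reliabilities
r_observed <- 0.30      # correlation between two observed scores
reliability_x <- 0.70   # reliability of measure x
reliability_y <- 0.80   # reliability of measure y

# Classical correction for attenuation:
# estimated correlation if both measures were free of random measurement error
r_disattenuated <- r_observed / sqrt(reliability_x * reliability_y)

round(r_disattenuated, 2)
# 0.4
```

The observed correlation (.30) understates the estimated error-free association (about .40) because random measurement error weakens observed associations.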

22.6 Example of Exploratory Factor Analysis

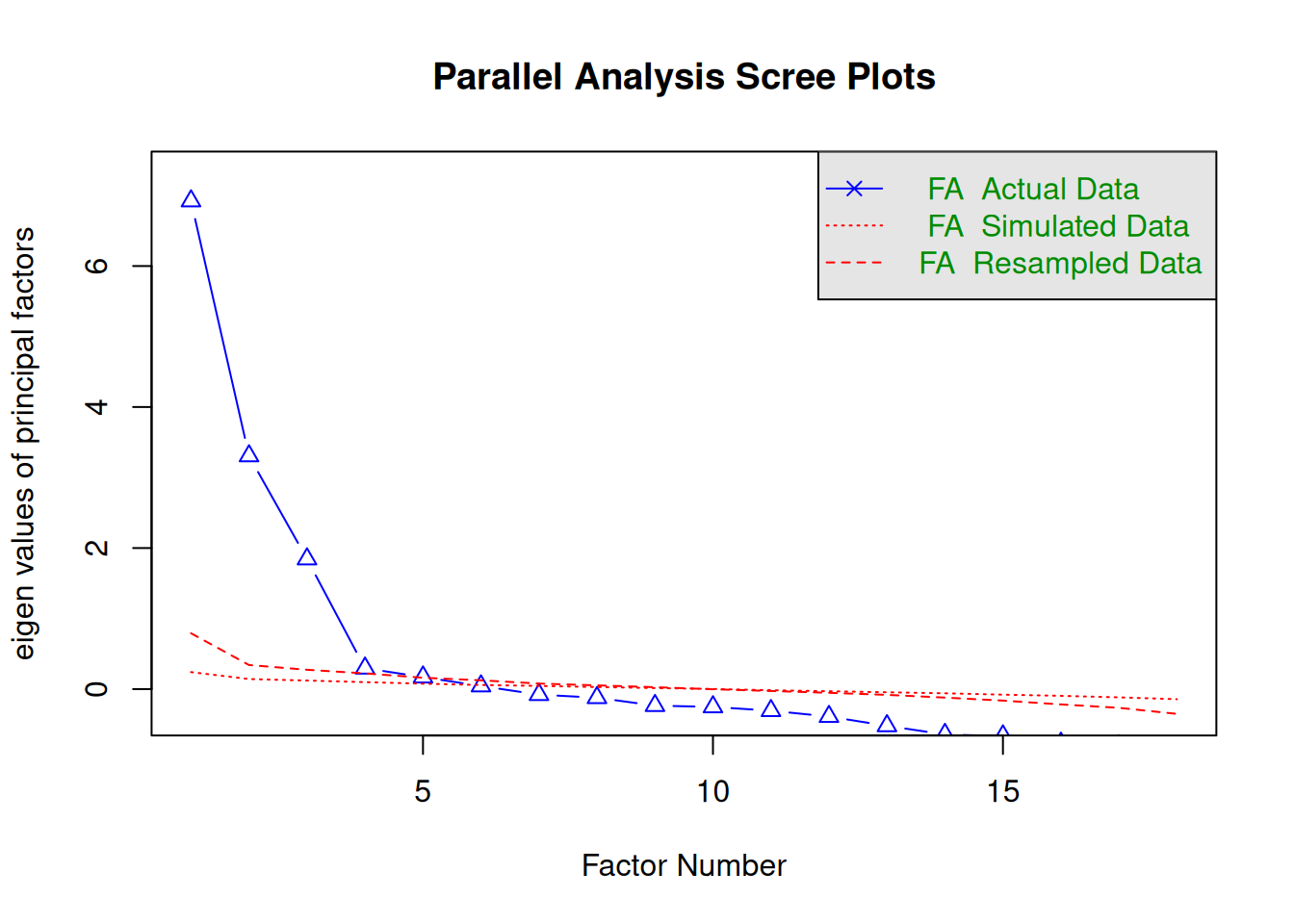

We generated the scree plot in Figure 22.13 using the psych::fa.parallel() function of the psych package (Revelle, 2025). The optimal coordinates and the acceleration factor attempt to operationalize the Cattell scree test: i.e., the “elbow” of the scree plot (Ruscio & Roche, 2012). The optimal coordinates criterion is quantified using a series of linear equations to determine whether observed eigenvalues exceed the predicted values. The acceleration factor is quantified using the acceleration of the curve, that is, the second derivative. The Kaiser-Guttman rule states to keep principal components whose eigenvalues are greater than 1. However, for exploratory factor analysis (as opposed to PCA), the criterion is to keep the factors whose eigenvalues are greater than zero (i.e., not the factors whose eigenvalues are greater than 1) (Dinno, 2014).
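As a small illustration of the Kaiser-Guttman rule, the base R sketch below applies it to the eigenvalues of the full correlation matrix that lavaan::efa() reports later in this section. These are component-style eigenvalues (they sum to the number of variables), so the greater-than-1 rule applies to them; the greater-than-zero rule applies instead to the eigenvalues from the factor model shown in the scree plot.

```r
# Eigenvalues of the correlation matrix, copied from the lavaan::efa() output
ev <- c(
  6.62289, 6.52671, 2.96433, 0.53788, 0.40121, 0.33897,
  0.21006, 0.11017, 0.08214, 0.05588, 0.04133, 0.03158,
  0.02365, 0.02080, 0.01566, 0.00891, 0.00583, 0.00201)

# Kaiser-Guttman rule: count eigenvalues greater than 1
n_kaiser <- sum(ev > 1)

# Cumulative proportion of variance accounted for by the retained components
prop_var <- cumsum(ev) / sum(ev)

c(n_kaiser = n_kaiser, cumulative_variance = round(prop_var[n_kaiser], 3))
```

By this rule, three components would be retained, which together account for about 90% of the total variance in the 18 variables.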

The number of factors to keep would depend on which criteria one uses. Based on the rule to keep factors whose eigenvalues are greater than zero and based on the parallel test, we would keep five factors. However, based on the Cattell scree test (the “elbow” of the scree plot minus one), we would keep three factors. If using the optimal coordinates, we would keep eight factors; if using the acceleration factor, we would keep one factor. Therefore, interpretability of the factors would be important for deciding how many factors to keep.
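The idea behind the parallel test can be sketched in base R with simulated data. This is a simplified, component-based version of parallel analysis, not a reimplementation of psych::fa.parallel(), and the data, loadings, and number of simulations are all assumptions for illustration. Observed eigenvalues are compared against the eigenvalues obtained from random, uncorrelated data of the same dimensions; we keep the leading components whose eigenvalues exceed the random-data threshold.

```r
set.seed(1)

# Simulate six items that reflect two uncorrelated factors (assumed structure)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
make_item <- function(f) 0.8 * f + sqrt(1 - 0.8^2) * rnorm(n)
x <- cbind(
  make_item(f1), make_item(f1), make_item(f1),
  make_item(f2), make_item(f2), make_item(f2))

# Eigenvalues of the observed correlation matrix
observed_ev <- eigen(cor(x), only.values = TRUE)$values

# Eigenvalues from random, uncorrelated data of the same size
n_sims <- 200
random_ev <- replicate(n_sims, {
  noise <- matrix(rnorm(n * ncol(x)), nrow = n)
  eigen(cor(noise), only.values = TRUE)$values
})

# Threshold: 95th percentile of the random eigenvalues at each position
threshold <- apply(random_ev, 1, quantile, probs = 0.95)

# Retain leading components whose eigenvalue exceeds the threshold
n_retain <- sum(cumprod(observed_ev > threshold))

n_retain
```

With this simulated structure, the procedure recovers the two factors that generated the data.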

Code

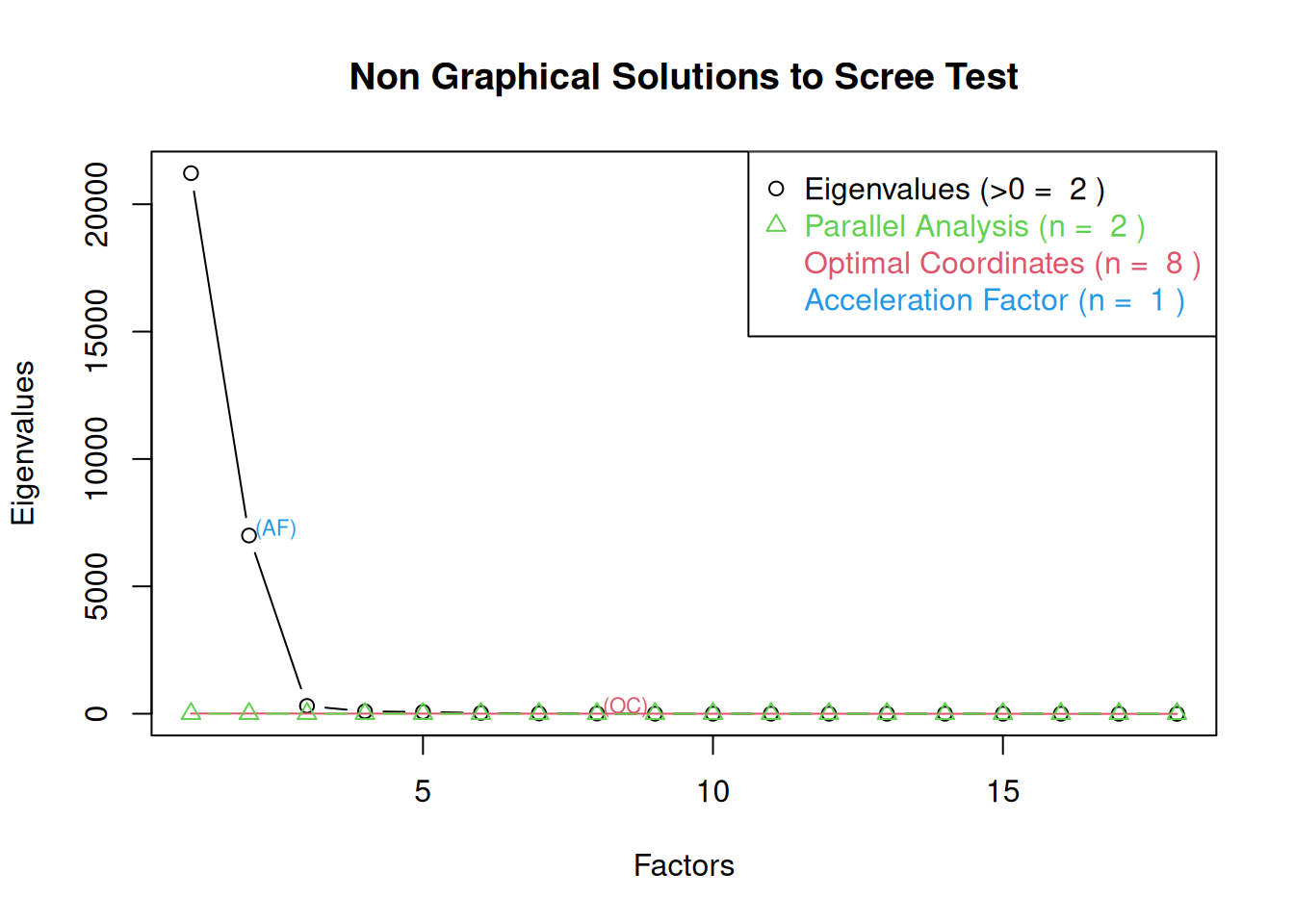

Parallel analysis suggests that the number of factors = 5 and the number of components = NA

We generated the scree plot in Figure 22.14 using the nFactors::nScree() and nFactors::plotnScree() functions of the nFactors package (Raiche & Magis, 2025).

Code

#screeDataEFA <- nFactors::nScree(

# x = cor( # throws error with correlation matrix, so use covariance instead (below)

# dataForFA[faVars],

# use = "pairwise.complete.obs"),

# model = "factors")

screeDataEFA <- nFactors::nScree(

x = cov(

dataForFA[faVars],

use = "pairwise.complete.obs"),

cor = FALSE,

model = "factors")

nFactors::plotnScree(screeDataEFA)

We generated the very simple structure (VSS) plots in Figures 22.15 and 22.16 using the psych::vss() and psych::nfactors() functions of the psych package (Revelle, 2025). In addition to VSS plots, the output provides other criteria by which to determine the optimal number of factors, for each of which lower values are better, including the Velicer minimum average partial (MAP) test, the Bayesian information criterion (BIC), the sample size-adjusted BIC (SABIC), and the root mean square error of approximation (RMSEA). Depending on the criterion, the optimal number of factors extracted varies between 2 and 8 factors.

Very Simple Structure

Call: psych::vss(x = dataForFA[faVars], rotate = "oblimin", fm = "minres")

VSS complexity 1 achieves a maximimum of 0.79 with 2 factors

VSS complexity 2 achieves a maximimum of 0.87 with 2 factors

The Velicer MAP achieves a minimum of 0.13 with 3 factors

BIC achieves a minimum of 121337.1 with 8 factors

Sample Size adjusted BIC achieves a minimum of 121454.6 with 8 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.67 0.00 0.19 135 150953 0 26.4 0.67 0.75 149927 150356 1.0

2 0.79 0.87 0.16 118 137866 0 10.5 0.87 0.76 136969 137344 1.2

3 0.77 0.86 0.13 102 NaN NaN 8.6 0.89 NA NA NA 1.2

4 0.77 0.86 0.14 87 132814 0 8.0 0.90 0.87 132153 132429 1.3

5 0.73 0.81 0.30 73 150832 0 10.0 0.87 1.02 150277 150509 1.3

6 0.69 0.79 0.45 60 NaN NaN 11.6 0.85 NA NA NA 1.4

7 0.62 0.76 1.28 48 NaN NaN 13.1 0.84 NA NA NA 1.6

8 0.48 0.68 NaN 37 121618 0 17.6 0.78 1.28 121337 121455 1.6

eChisq SRMR eCRMS eBIC

1 17950 0.1714 0.182 16924

2 5293 0.0931 0.106 4397

3 830 0.0369 0.045 55

4 489 0.0283 0.038 -172

5 248 0.0201 0.029 -307

6 120 0.0140 0.022 -336

7 58 0.0098 0.017 -307

8 20 0.0057 0.012 -262

Code

Number of factors

Call: vss(x = x, n = n, rotate = rotate, diagonal = diagonal, fm = fm,

n.obs = n.obs, plot = FALSE, title = title, use = use, cor = cor)

VSS complexity 1 achieves a maximimum of 0.79 with 2 factors

VSS complexity 2 achieves a maximimum of 0.87 with 2 factors

The Velicer MAP achieves a minimum of 0.13 with 3 factors

Empirical BIC achieves a minimum of -336.08 with 6 factors

Sample Size adjusted BIC achieves a minimum of 107165.5 with 9 factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex

1 0.67 0.00 0.19 135 150953 0 26.4 0.67 0.75 149927 150356 1.0

2 0.79 0.87 0.16 118 137866 0 10.5 0.87 0.76 136969 137344 1.2

3 0.77 0.86 0.13 102 NaN NaN 8.6 0.89 NA NA NA 1.2

4 0.77 0.86 0.14 87 132814 0 8.0 0.90 0.87 132153 132429 1.3

5 0.73 0.81 0.30 73 150832 0 10.0 0.87 1.02 150277 150509 1.3

6 0.69 0.79 0.45 60 NaN NaN 11.6 0.85 NA NA NA 1.4

7 0.62 0.76 1.28 48 NaN NaN 13.1 0.84 NA NA NA 1.6

8 0.48 0.68 NaN 37 121618 0 17.6 0.78 1.28 121337 121455 1.6

9 0.48 0.67 NaN 27 107285 0 18.7 0.76 1.41 107080 107166 1.6

10 0.33 0.49 NaN 18 135089 0 27.9 0.65 1.94 134953 135010 1.8

11 0.32 0.46 NaN 10 NaN NaN 31.3 0.61 NA NA NA 1.8

12 0.37 0.46 NaN 3 NaN NaN 31.0 0.61 NA NA NA 1.6

13 0.42 0.48 NaN -3 NaN NA 29.7 0.63 NA NA NA 1.7

14 0.42 0.45 NaN -8 NaN NA 30.7 0.61 NA NA NA 1.9

15 0.25 0.33 NaN -12 NaN NA 40.2 0.49 NA NA NA 2.3

16 0.25 0.33 NaN -15 NaN NA 40.2 0.49 NA NA NA 2.3

17 0.25 0.33 NaN -17 NaN NA 40.2 0.49 NA NA NA 2.3

18 0.25 0.33 NA -18 NaN NA 40.2 0.49 NA NA NA 2.3

eChisq SRMR eCRMS eBIC

1 17950.2 0.1714 0.182 16924

2 5293.3 0.0931 0.106 4397

3 830.2 0.0369 0.045 55

4 489.1 0.0283 0.038 -172

5 247.6 0.0201 0.029 -307

6 119.9 0.0140 0.022 -336

7 58.2 0.0098 0.017 -307

8 19.7 0.0057 0.012 -262

9 12.6 0.0045 0.011 -193

10 9.4 0.0039 0.011 -127

11 7.4 0.0035 0.014 -69

12 6.1 0.0032 0.023 -17

13 5.2 0.0029 NA NA

14 4.9 0.0028 NA NA

15 4.9 0.0028 NA NA

16 4.9 0.0028 NA NA

17 4.9 0.0028 NA NA

18 4.9 0.0028 NA NA

We fit EFA models using the lavaan::efa() function of the lavaan package (Rosseel, 2012; Rosseel et al., 2024).

The model fits well according to CFI with 5 or more factors; the model fits well according to RMSEA with 6 or more factors. However, a model with 5 or more factors does not represent much of a simplification (relative to the 18 variables included). Moreover, in the model with four factors, only one variable had a significant loading on Factor 1. Thus, even with four factors, one of the factors does not seem to represent the aggregation of multiple variables. For these reasons, and because the “elbow test” for the scree plot suggested three factors, we decided to retain three factors and to see if we could achieve better fit by making additional model modifications (e.g., correlated residuals). Correlated residuals may be necessary, for example, when variables are correlated for reasons other than the latent factors.

This is lavaan 0.6-21 -- running exploratory factor analysis

Estimator ML

Rotation method GEOMIN OBLIQUE

Geomin epsilon 0.001

Rotation algorithm (rstarts) GPA (30)

Standardized metric TRUE

Row weights None

Number of observations 1997

Number of missing patterns 4

Overview models:

aic bic sabic chisq df pvalue cfi rmsea

nfactors = 1 124823.3 125125.6 124954.1 9257.083 135 0 0.222 0.568

nfactors = 2 117633.1 118030.6 117805.1 5228.488 118 0 0.665 0.403

nfactors = 3 113620.9 114108.0 113831.6 3182.380 102 0 0.730 0.399

nfactors = 4 110556.7 111127.8 110803.8 1451.176 87 0 0.895 0.287

nfactors = 5 109508.1 110157.6 109789.1 746.214 73 0 0.900 0.342

nfactors = 6 109268.7 109991.1 109581.2 502.468 60 0 0.940 0.406

nfactors = 7 108877.6 109667.1 109219.2 474.070 48 0 1.000 0.694

Eigenvalues correlation matrix:

ev1 ev2 ev3 ev4 ev5 ev6 ev7 ev8

6.62289 6.52671 2.96433 0.53788 0.40121 0.33897 0.21006 0.11017

ev9 ev10 ev11 ev12 ev13 ev14 ev15 ev16

0.08214 0.05588 0.04133 0.03158 0.02365 0.02080 0.01566 0.00891

ev17 ev18

0.00583 0.00201

Number of factors: 1

Standardized loadings: (* = significant at 1% level)

f1 unique.var communalities

completionsPerGame 0.987* 0.027 0.973

attemptsPerGame 0.974* 0.051 0.949

passing_yardsPerGame 0.994* 0.012 0.988

passing_tdsPerGame 0.864* 0.254 0.746

passing_air_yardsPerGame 0.692* 0.522 0.478

passing_yards_after_catchPerGame 0.771* 0.406 0.594

passing_first_downsPerGame 0.992* 0.016 0.984

avg_completed_air_yards .* 0.919 0.081

avg_intended_air_yards . 0.977 0.023

aggressiveness 0.997 0.003

max_completed_air_distance 0.472* 0.777 0.223

avg_air_distance 1.000 0.000

max_air_distance 0.367* 0.866 0.134

avg_air_yards_to_sticks .* 0.955 0.045

passing_cpoe .* 0.915 0.085

pass_comp_pct .* 0.914 0.086

passer_rating 0.627* 0.606 0.394

completion_percentage_above_expectation 0.519* 0.731 0.269

f1

Sum of squared loadings 7.057

Proportion of total 1.000

Proportion var 0.392

Cumulative var 0.392

Number of factors: 2

Standardized loadings: (* = significant at 1% level)

f1 f2 unique.var

completionsPerGame 0.986* 0.029

attemptsPerGame 0.974* * 0.050

passing_yardsPerGame 0.996* 0.008

passing_tdsPerGame 0.863* 0.255

passing_air_yardsPerGame 0.723* 0.695* 0.033

passing_yards_after_catchPerGame 0.748* -0.613* 0.029

passing_first_downsPerGame 0.990* 0.019

avg_completed_air_yards 0.965* 0.070

avg_intended_air_yards * 0.998* 0.002

aggressiveness . 0.785* 0.366

max_completed_air_distance .* 0.813* 0.288

avg_air_distance * 0.979* 0.032

max_air_distance .* 0.850* 0.260

avg_air_yards_to_sticks 0.991* 0.017

passing_cpoe 0.317* -0.345* 0.772

pass_comp_pct .* 0.917

passer_rating 0.614* . 0.614

completion_percentage_above_expectation 0.487* . 0.730

communalities

completionsPerGame 0.971

attemptsPerGame 0.950

passing_yardsPerGame 0.992

passing_tdsPerGame 0.745

passing_air_yardsPerGame 0.967

passing_yards_after_catchPerGame 0.971

passing_first_downsPerGame 0.981

avg_completed_air_yards 0.930

avg_intended_air_yards 0.998

aggressiveness 0.634

max_completed_air_distance 0.712

avg_air_distance 0.968

max_air_distance 0.740

avg_air_yards_to_sticks 0.983

passing_cpoe 0.228

pass_comp_pct 0.083

passer_rating 0.386

completion_percentage_above_expectation 0.270

f2 f1 total

Sum of sq (obliq) loadings 6.891 6.620 13.511

Proportion of total 0.510 0.490 1.000

Proportion var 0.383 0.368 0.751

Cumulative var 0.383 0.751 0.751

Factor correlations: (* = significant at 1% level)

f1 f2

f1 1.000

f2 -0.038 1.000

Number of factors: 3

Standardized loadings: (* = significant at 1% level)

f1 f2 f3 unique.var

completionsPerGame 0.978* * 0.025

attemptsPerGame 0.993* * * 0.038

passing_yardsPerGame 0.987* * 0.010

passing_tdsPerGame 0.833* * .* 0.257

passing_air_yardsPerGame 0.791* 0.732* 0.022

passing_yards_after_catchPerGame 0.697* -0.603* 0.031

passing_first_downsPerGame 0.979* * 0.020

avg_completed_air_yards 0.784* 0.332* 0.043

avg_intended_air_yards 0.887* .* 0.002

aggressiveness 0.796* 0.349

max_completed_air_distance .* 0.662* 0.379* 0.181

avg_air_distance * 0.871* .* 0.024

max_air_distance .* 0.806* . 0.219

avg_air_yards_to_sticks 0.861* .* 0.012

passing_cpoe 1.001* 0.021

pass_comp_pct -0.474* 1.055* 0.107

passer_rating * 0.930* 0.090

completion_percentage_above_expectation 0.964* 0.046

communalities

completionsPerGame 0.975

attemptsPerGame 0.962

passing_yardsPerGame 0.990

passing_tdsPerGame 0.743

passing_air_yardsPerGame 0.978

passing_yards_after_catchPerGame 0.969

passing_first_downsPerGame 0.980

avg_completed_air_yards 0.957

avg_intended_air_yards 0.998

aggressiveness 0.651

max_completed_air_distance 0.819

avg_air_distance 0.976

max_air_distance 0.781

avg_air_yards_to_sticks 0.988

passing_cpoe 0.979

pass_comp_pct 0.893

passer_rating 0.910

completion_percentage_above_expectation 0.954

f2 f1 f3 total

Sum of sq (obliq) loadings 6.033 5.746 4.724 16.503

Proportion of total 0.366 0.348 0.286 1.000

Proportion var 0.335 0.319 0.262 0.917

Cumulative var 0.335 0.654 0.917 0.917

Factor correlations: (* = significant at 1% level)

f1 f2 f3

f1 1.000

f2 -0.145* 1.000

f3 0.158* 0.446* 1.000

Number of factors: 4

Standardized loadings: (* = significant at 1% level)

f1 f2 f3 f4

completionsPerGame 0.971* *

attemptsPerGame 1.025*

passing_yardsPerGame .* 0.901*

passing_tdsPerGame 0.381* 0.666*

passing_air_yardsPerGame 0.821* 0.733*

passing_yards_after_catchPerGame .* 0.598* -0.607*

passing_first_downsPerGame .* 0.904* *

avg_completed_air_yards .* 0.960*

avg_intended_air_yards 1.024*

aggressiveness 0.816*

max_completed_air_distance .* .* 0.777*

avg_air_distance 0.995*

max_air_distance .* 0.869*

avg_air_yards_to_sticks 1.003*

passing_cpoe . 0.917*

pass_comp_pct -0.449* 1.065*

passer_rating .* 0.801*

completion_percentage_above_expectation . 0.891*

unique.var communalities

completionsPerGame 0.015 0.985

attemptsPerGame 0.001 0.999

passing_yardsPerGame 0.001 0.999

passing_tdsPerGame 0.206 0.794

passing_air_yardsPerGame 0.015 0.985

passing_yards_after_catchPerGame 0.023 0.977

passing_first_downsPerGame 0.023 0.977

avg_completed_air_yards 0.067 0.933

avg_intended_air_yards 0.003 0.997

aggressiveness 0.322 0.678

max_completed_air_distance 0.293 0.707

avg_air_distance 0.034 0.966

max_air_distance 0.238 0.762

avg_air_yards_to_sticks 0.018 0.982

passing_cpoe 0.037 0.963

pass_comp_pct 0.074 0.926

passer_rating 0.148 0.852

completion_percentage_above_expectation 0.028 0.972

f3 f2 f4 f1 total

Sum of sq (obliq) loadings 6.953 5.369 3.406 0.726 16.454

Proportion of total 0.423 0.326 0.207 0.044 1.000

Proportion var 0.386 0.298 0.189 0.040 0.914

Cumulative var 0.386 0.685 0.874 0.914 0.914

Factor correlations: (* = significant at 1% level)

f1 f2 f3 f4

f1 1.000

f2 0.391* 1.000

f3 -0.006 -0.171* 1.000

f4 0.279* 0.130* 0.433* 1.000

Number of factors: 5

Standardized loadings: (* = significant at 1% level)

f1 f2 f3 f4 f5

completionsPerGame 0.952* * .*

attemptsPerGame 0.979* *

passing_yardsPerGame 0.864* .* *

passing_tdsPerGame 0.646* 0.439*

passing_air_yardsPerGame 0.801* 0.760* *

passing_yards_after_catchPerGame 0.527* 0.301* -0.611* .* *

passing_first_downsPerGame 0.878* .* *

avg_completed_air_yards 1.007* .* .

avg_intended_air_yards 1.005*

aggressiveness . 0.792*

max_completed_air_distance .* . 0.734*

avg_air_distance 0.943* .*

max_air_distance .* 0.662* .* .

avg_air_yards_to_sticks 0.974*

passing_cpoe . 0.899*

pass_comp_pct -0.302* 1.038*

passer_rating 0.320* 0.758*

completion_percentage_above_expectation .* 0.851*

unique.var communalities

completionsPerGame 0.008 0.992

attemptsPerGame 0.005 0.995

passing_yardsPerGame 0.003 0.997

passing_tdsPerGame 0.189 0.811

passing_air_yardsPerGame 0.016 0.984

passing_yards_after_catchPerGame 0.000 1.000

passing_first_downsPerGame 0.018 0.982

avg_completed_air_yards 0.037 0.963

avg_intended_air_yards 0.002 0.998

aggressiveness 0.432 0.568

max_completed_air_distance 0.343 0.657

avg_air_distance 0.048 0.952

max_air_distance 0.300 0.700

avg_air_yards_to_sticks 0.027 0.973

passing_cpoe 0.017 0.983

pass_comp_pct 0.100 0.900

passer_rating 0.136 0.864

completion_percentage_above_expectation 0.039 0.961

f3 f1 f5 f2 f4 total

Sum of sq (obliq) loadings 6.505 5.147 3.356 1.000 0.272 16.280

Proportion of total 0.400 0.316 0.206 0.061 0.017 1.000

Proportion var 0.361 0.286 0.186 0.056 0.015 0.904

Cumulative var 0.361 0.647 0.834 0.889 0.904 0.904

Factor correlations: (* = significant at 1% level)

f1 f2 f3 f4 f5

f1 1.000

f2 0.371* 1.000

f3 -0.121* 0.090 1.000

f4 0.189 0.090 0.020 1.000

f5 0.121* 0.381* 0.446* 0.278 1.000

Number of factors: 6

Standardized loadings: (* = significant at 1% level)

f1 f2 f3 f4 f5

completionsPerGame 0.966*

attemptsPerGame 0.987*

passing_yardsPerGame . 0.881*

passing_tdsPerGame 0.385* 0.678*

passing_air_yardsPerGame 0.835* 0.768*

passing_yards_after_catchPerGame . 0.520* -0.560* .*

passing_first_downsPerGame . 0.899*

avg_completed_air_yards 0.800* . 0.320

avg_intended_air_yards 0.996*

aggressiveness . 0.699* .

max_completed_air_distance . 0.479 0.328

avg_air_distance 0.953*

max_air_distance . 0.732* .

avg_air_yards_to_sticks 0.945*

passing_cpoe .

pass_comp_pct . .

passer_rating .*

completion_percentage_above_expectation .

f6 unique.var communalities

completionsPerGame 0.010 0.990

attemptsPerGame 0.000 1.000

passing_yardsPerGame 0.002 0.998

passing_tdsPerGame 0.199 0.801

passing_air_yardsPerGame . 0.012 0.988

passing_yards_after_catchPerGame 0.000 1.000

passing_first_downsPerGame 0.019 0.981

avg_completed_air_yards 0.000 1.000

avg_intended_air_yards 0.002 0.998

aggressiveness 0.445 0.555

max_completed_air_distance . 0.340 0.660

avg_air_distance 0.048 0.952

max_air_distance . 0.291 0.709

avg_air_yards_to_sticks 0.027 0.973

passing_cpoe 0.895* 0.018 0.982

pass_comp_pct 1.002* 0.094 0.906

passer_rating 0.782* 0.146 0.854

completion_percentage_above_expectation 0.887* 0.038 0.962

f3 f2 f6 f1 f5 f4 total

Sum of sq (obliq) loadings 5.990 5.301 3.503 0.684 0.500 0.332 16.309

Proportion of total 0.367 0.325 0.215 0.042 0.031 0.020 1.000

Proportion var 0.333 0.294 0.195 0.038 0.028 0.018 0.906

Cumulative var 0.333 0.627 0.822 0.860 0.888 0.906 0.906

Factor correlations: (* = significant at 1% level)

f1 f2 f3 f4 f5 f6

f1 1.000

f2 0.384* 1.000

f3 0.054 -0.118 1.000

f4 0.169 0.303 -0.102 1.000

f5 0.396 0.207 0.321 0.045 1.000

f6 0.397* 0.187* 0.438* 0.309 0.071 1.000

Number of factors: 7

Standardized loadings: (* = significant at 1% level)

f1 f2 f3 f4 f5

completionsPerGame 0.959*

attemptsPerGame 0.977*

passing_yardsPerGame 0.866* .* .

passing_tdsPerGame 0.674* 0.425*

passing_air_yardsPerGame 0.774* 0.511* .

passing_yards_after_catchPerGame 0.550* 0.359* -0.481*

passing_first_downsPerGame 0.903* .*

avg_completed_air_yards 0.561 0.442*

avg_intended_air_yards 0.546* 0.496*

aggressiveness .* 0.693*

max_completed_air_distance 0.719

avg_air_distance * 0.591* 0.438*

max_air_distance 0.691*

avg_air_yards_to_sticks 0.453* 0.607*

passing_cpoe

pass_comp_pct -0.476*

passer_rating 0.306*

completion_percentage_above_expectation *

f6 f7 unique.var

completionsPerGame .* 0.010

attemptsPerGame 0.003

passing_yardsPerGame 0.002

passing_tdsPerGame 0.194

passing_air_yardsPerGame 0.008

passing_yards_after_catchPerGame .* 0.000

passing_first_downsPerGame 0.017

avg_completed_air_yards . 0.025

avg_intended_air_yards 0.001

aggressiveness 0.429

max_completed_air_distance 0.958* 0.000

avg_air_distance 0.047

max_air_distance 0.416* 0.162

avg_air_yards_to_sticks 0.028

passing_cpoe 0.942* 0.019

pass_comp_pct 0.950* 0.041

passer_rating 0.735* 0.142

completion_percentage_above_expectation 0.981* 0.046

communalities

completionsPerGame 0.990

attemptsPerGame 0.997

passing_yardsPerGame 0.998

passing_tdsPerGame 0.806

passing_air_yardsPerGame 0.992

passing_yards_after_catchPerGame 1.000

passing_first_downsPerGame 0.983

avg_completed_air_yards 0.975

avg_intended_air_yards 0.999

aggressiveness 0.571

max_completed_air_distance 1.000

avg_air_distance 0.953

max_air_distance 0.838

avg_air_yards_to_sticks 0.972

passing_cpoe 0.981

pass_comp_pct 0.959

passer_rating 0.858

completion_percentage_above_expectation 0.954

f1 f7 f5 f3 f4 f6 f2 total

Sum of sq (obliq) loadings 5.154 3.451 2.472 2.428 1.324 1.009 0.989 16.826

Proportion of total 0.306 0.205 0.147 0.144 0.079 0.060 0.059 1.000

Proportion var 0.286 0.192 0.137 0.135 0.074 0.056 0.055 0.935

Cumulative var 0.286 0.478 0.615 0.750 0.824 0.880 0.935 0.935

Factor correlations: (* = significant at 1% level)

f1 f2 f3 f4 f5 f6 f7

f1 1.000

f2 0.370* 1.000

f3 -0.038 -0.218 1.000

f4 -0.179 -0.251 0.693* 1.000

f5 -0.156 -0.209 0.712* 0.829* 1.000

f6 0.366* 0.348* 0.171 -0.310 -0.030 1.000

f7 0.095 0.348* 0.585* 0.145 0.179 0.452 1.000

A path diagram of the three-factor EFA model in Figure 22.17 was created using the lavaanPlot::lavaanPlot() function of the lavaanPlot package (Lishinski, 2024).

To make the plot interactive for editing, you can use the lavaangui::plot_lavaan() function of the lavaangui package (Karch, 2025b; Karch, 2025a):

Here is the syntax for estimating a three-factor EFA using exploratory structural equation modeling (ESEM). Estimating the model in an ESEM framework allows us to make modifications to the model, such as adding correlated residuals and adding predictors or outcomes of the latent factors. The syntax below represents the same model (with the same fit indices) as the three-factor EFA model above.

Code

efa3factor_syntax <- '

# EFA Factor Loadings

efa("efa1")*f1 +

efa("efa1")*f2 +

efa("efa1")*f3 =~ completionsPerGame + attemptsPerGame + passing_yardsPerGame + passing_tdsPerGame +

passing_air_yardsPerGame + passing_yards_after_catchPerGame + passing_first_downsPerGame +

avg_completed_air_yards + avg_intended_air_yards + aggressiveness + max_completed_air_distance +

avg_air_distance + max_air_distance + avg_air_yards_to_sticks + passing_cpoe + pass_comp_pct +

passer_rating + completion_percentage_above_expectation

'

To fit the ESEM model, we use the lavaan::sem() function of the lavaan package (Rosseel, 2012; Rosseel et al., 2024).
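As a self-contained illustration of fitting an ESEM model with lavaan::sem(), the sketch below uses the `HolzingerSwineford1939` dataset that ships with lavaan rather than the football data, so its results are unrelated to the output in this chapter. The object names `esem_syntax` and `esem_fit` are hypothetical, and the estimator and rotation settings are assumptions chosen to resemble the settings shown in the output (robust ML with an oblique geomin rotation); the chapter's own call may differ, for example in how it handles missing data.

```r
library(lavaan)

# Three EFA factors in one rotation block ("efa1") measured by nine indicators
esem_syntax <- '
  efa("efa1")*f1 +
  efa("efa1")*f2 +
  efa("efa1")*f3 =~ x1 + x2 + x3 + x4 + x5 + x6 + x7 + x8 + x9
'

# Fit the model with robust ML and an oblique geomin rotation (assumed settings)
esem_fit <- sem(
  esem_syntax,
  data = HolzingerSwineford1939,
  estimator = "MLR",
  rotation = "geomin")

# Extract selected global fit indices
fitMeasures(
  esem_fit,
  c("chisq", "df", "cfi", "tli", "rmsea", "srmr"))
```

Because the factors are specified with the `efa()` modifier, all indicators load on all three factors, and the loadings are rotated after estimation. Additional parameters, such as correlated residuals, can then be added to the same syntax.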

The fit indices suggest that the model does not fit the data well and that additional model modifications are necessary. The fit indices are below.

lavaan 0.6-21 ended normally after 230 iterations

Estimator ML

Optimization method NLMINB

Number of model parameters 93

Row rank of the constraints matrix 24

Rotation method GEOMIN OBLIQUE

Geomin epsilon 0.001

Rotation algorithm (rstarts) GPA (30)

Standardized metric TRUE

Row weights None

Number of observations 1997

Number of missing patterns 4

Model Test User Model:

Standard Scaled

Test Statistic 5446.238 3182.380

Degrees of freedom 102 102

P-value (Chi-square) 0.000 0.000

Scaling correction factor 1.711

Yuan-Bentler correction (Mplus variant)

Model Test Baseline Model:

Test statistic 42942.078 23496.779

Degrees of freedom 153 153

P-value 0.000 0.000

Scaling correction factor 1.828

User Model versus Baseline Model:

Comparative Fit Index (CFI) 0.875 0.868

Tucker-Lewis Index (TLI) 0.813 0.802

Robust Comparative Fit Index (CFI) 0.730

Robust Tucker-Lewis Index (TLI) 0.595

Loglikelihood and Information Criteria:

Loglikelihood user model (H0) -56723.436 -56723.436

Scaling correction factor 1.784

for the MLR correction

Loglikelihood unrestricted model (H1) -54000.317 -54000.317

Scaling correction factor 1.745

for the MLR correction

Akaike (AIC) 113620.871 113620.871

Bayesian (BIC) 114108.019 114108.019

Sample-size adjusted Bayesian (SABIC) 113831.616 113831.616

Root Mean Square Error of Approximation:

RMSEA 0.162 0.123

90 Percent confidence interval - lower 0.158 0.120

90 Percent confidence interval - upper 0.166 0.126

P-value H_0: RMSEA <= 0.050 0.000 0.000

P-value H_0: RMSEA >= 0.080 1.000 1.000

Robust RMSEA 0.399

90 Percent confidence interval - lower 0.376

90 Percent confidence interval - upper 0.422

P-value H_0: Robust RMSEA <= 0.050 0.000

P-value H_0: Robust RMSEA >= 0.080 1.000

Standardized Root Mean Square Residual:

SRMR 0.469 0.469

Parameter Estimates:

Standard errors Sandwich

Information bread Observed

Observed information based on Hessian

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

f1 =~ efa1

completinsPrGm 7.843 0.085 91.879 0.000 7.843 0.978

attemptsPerGam 12.462 0.130 95.742 0.000 12.462 0.993

pssng_yrdsPrGm 91.612 0.955 95.881 0.000 91.612 0.987

passng_tdsPrGm 0.574 0.010 56.126 0.000 0.574 0.833

pssng_r_yrdsPG 95.681 2.355 40.623 0.000 95.681 0.791

pssng_yrds__PG 45.672 1.088 41.967 0.000 45.672 0.697

pssng_frst_dPG 4.445 0.048 92.412 0.000 4.445 0.979

avg_cmpltd_r_y 0.016 0.050 0.331 0.741 0.016 0.004

avg_ntndd_r_yr -0.043 0.045 -0.965 0.335 -0.043 -0.009

aggressiveness -0.289 0.382 -0.757 0.449 -0.289 -0.037

mx_cmpltd_r_ds 1.678 0.424 3.953 0.000 1.678 0.156

avg_air_distnc -0.212 0.076 -2.797 0.005 -0.212 -0.048

max_air_distnc 1.683 0.435 3.870 0.000 1.683 0.183

avg_r_yrds_t_s 0.005 0.042 0.129 0.897 0.005 0.001

passing_cpoe -0.486 0.232 -2.093 0.036 -0.486 -0.035

pass_comp_pct 0.000 0.001 0.651 0.515 0.000 0.003

passer_rating 2.776 0.929 2.988 0.003 2.776 0.085

cmpltn_prcnt__ -0.433 0.307 -1.414 0.157 -0.433 -0.036

f2 =~ efa1

completinsPrGm 0.024 0.031 0.769 0.442 0.024 0.003

attemptsPerGam 0.441 0.086 5.134 0.000 0.441 0.035

pssng_yrdsPrGm -0.299 0.295 -1.012 0.312 -0.299 -0.003

passng_tdsPrGm -0.032 0.009 -3.439 0.001 -0.032 -0.047

pssng_r_yrdsPG 88.473 1.904 46.460 0.000 88.473 0.732

pssng_yrds__PG -39.512 0.982 -40.249 0.000 -39.512 -0.603

pssng_frst_dPG -0.029 0.015 -1.960 0.050 -0.029 -0.006

avg_cmpltd_r_y 3.212 0.187 17.167 0.000 3.212 0.784

avg_ntndd_r_yr 4.141 0.205 20.227 0.000 4.141 0.887

aggressiveness 6.305 0.920 6.857 0.000 6.305 0.796

mx_cmpltd_r_ds 7.115 0.802 8.872 0.000 7.115 0.662

avg_air_distnc 3.836 0.223 17.203 0.000 3.836 0.871

max_air_distnc 7.399 0.757 9.773 0.000 7.399 0.806

avg_r_yrds_t_s 4.070 0.241 16.910 0.000 4.070 0.861

passing_cpoe -0.197 0.446 -0.442 0.659 -0.197 -0.014

pass_comp_pct -0.062 0.006 -10.310 0.000 -0.062 -0.474

passer_rating 0.515 0.931 0.553 0.580 0.515 0.016

cmpltn_prcnt__ 0.460 0.543 0.847 0.397 0.460 0.038

f3 =~ efa1

completinsPrGm 0.412 0.051 8.113 0.000 0.412 0.051

attemptsPerGam -0.681 0.078 -8.746 0.000 -0.681 -0.054

pssng_yrdsPrGm 4.081 0.466 8.749 0.000 4.081 0.044

passng_tdsPrGm 0.076 0.009 8.469 0.000 0.076 0.110

pssng_r_yrdsPG -2.190 1.685 -1.299 0.194 -2.190 -0.018

pssng_yrds__PG 0.357 0.496 0.720 0.472 0.357 0.005

pssng_frst_dPG 0.257 0.027 9.467 0.000 0.257 0.057

avg_cmpltd_r_y 1.359 0.238 5.716 0.000 1.359 0.332

avg_ntndd_r_yr 0.975 0.197 4.962 0.000 0.975 0.209

aggressiveness 0.088 0.614 0.144 0.886 0.088 0.011

mx_cmpltd_r_ds 4.079 0.986 4.138 0.000 4.079 0.379

avg_air_distnc 0.920 0.212 4.333 0.000 0.920 0.209

max_air_distnc 1.386 0.812 1.706 0.088 1.386 0.151

avg_r_yrds_t_s 1.148 0.226 5.071 0.000 1.148 0.243

passing_cpoe 13.717 0.598 22.920 0.000 13.717 1.001

pass_comp_pct 0.138 0.005 26.672 0.000 0.138 1.055

passer_rating 30.269 2.324 13.026 0.000 30.269 0.930

cmpltn_prcnt__ 11.669 0.735 15.880 0.000 11.669 0.964

Covariances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

f1 ~~

f2 -0.145 0.028 -5.189 0.000 -0.145 -0.145

f3 0.158 0.036 4.391 0.000 0.158 0.158

f2 ~~

f3 0.446 0.038 11.657 0.000 0.446 0.446

Intercepts:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

.completinsPrGm 13.257 0.179 73.894 0.000 13.257 1.654

.attemptsPerGam 21.899 0.281 77.987 0.000 21.899 1.745

.pssng_yrdsPrGm 148.475 2.078 71.456 0.000 148.475 1.599

.passng_tdsPrGm 0.850 0.015 55.064 0.000 0.850 1.232

.pssng_r_yrdsPG 133.536 2.706 49.349 0.000 133.536 1.104

.pssng_yrds__PG 87.878 1.466 59.924 0.000 87.878 1.341

.pssng_frst_dPG 7.166 0.102 70.504 0.000 7.166 1.578

.avg_cmpltd_r_y 3.688 0.167 22.072 0.000 3.688 0.901

.avg_ntndd_r_yr 5.718 0.166 34.429 0.000 5.718 1.225

.aggressiveness 13.715 0.580 23.659 0.000 13.715 1.731

.mx_cmpltd_r_ds 33.432 0.638 52.365 0.000 33.432 3.109

.avg_air_distnc 19.136 0.175 109.577 0.000 19.136 4.344

.max_air_distnc 42.843 0.662 64.729 0.000 42.843 4.668

.avg_r_yrds_t_s -3.302 0.198 -16.672 0.000 -3.302 -0.699

.passing_cpoe -6.307 0.443 -14.237 0.000 -6.307 -0.460

.pass_comp_pct 0.591 0.003 179.174 0.000 0.591 4.523

.passer_rating 71.677 1.786 40.132 0.000 71.677 2.202

.cmpltn_prcnt__ -5.709 0.521 -10.956 0.000 -5.709 -0.472

Variances:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

.completinsPrGm 1.618 0.117 13.781 0.000 1.618 0.025

.attemptsPerGam 6.034 0.389 15.526 0.000 6.034 0.038

.pssng_yrdsPrGm 87.431 8.391 10.420 0.000 87.431 0.010

.passng_tdsPrGm 0.122 0.006 20.555 0.000 0.122 0.257

.pssng_r_yrdsPG 327.643 30.954 10.585 0.000 327.643 0.022

.pssng_yrds__PG 132.016 8.928 14.787 0.000 132.016 0.031

.pssng_frst_dPG 0.413 0.026 15.887 0.000 0.413 0.020

.avg_cmpltd_r_y 0.717 0.075 9.561 0.000 0.717 0.043

.avg_ntndd_r_yr 0.033 0.013 2.517 0.012 0.033 0.002

.aggressiveness 21.887 2.181 10.035 0.000 21.887 0.349

.mx_cmpltd_r_ds 20.925 1.927 10.858 0.000 20.925 0.181

.avg_air_distnc 0.470 0.032 14.534 0.000 0.470 0.024

.max_air_distnc 18.462 1.911 9.661 0.000 18.462 0.219

.avg_r_yrds_t_s 0.272 0.033 8.154 0.000 0.272 0.012

.passing_cpoe 3.920 1.101 3.559 0.000 3.920 0.021

.pass_comp_pct 0.002 0.000 6.535 0.000 0.002 0.107

.passer_rating 95.570 15.835 6.035 0.000 95.570 0.090

.cmpltn_prcnt__ 6.744 0.908 7.430 0.000 6.744 0.046

f1 1.000 1.000 1.000

f2 1.000 1.000 1.000

f3 1.000 1.000 1.000

R-Square:

Estimate

completinsPrGm 0.975

attemptsPerGam 0.962

pssng_yrdsPrGm 0.990

passng_tdsPrGm 0.743

pssng_r_yrdsPG 0.978

pssng_yrds__PG 0.969

pssng_frst_dPG 0.980

avg_cmpltd_r_y 0.957

avg_ntndd_r_yr 0.998

aggressiveness 0.651

mx_cmpltd_r_ds 0.819

avg_air_distnc 0.976

max_air_distnc 0.781

avg_r_yrds_t_s 0.988

passing_cpoe 0.979

pass_comp_pct 0.893

passer_rating 0.910

cmpltn_prcnt__ 0.954
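Several of the fit indices reported next can be recomputed by hand from the chi-square statistics, which helps clarify what they measure. The sketch below uses the conventional textbook formulas for RMSEA, CFI, and TLI with the unscaled ("standard") test statistics. It assumes the sample size is N = 1997, as reported in the model summary, and the variable names are hypothetical. lavaan's internal computation may differ in small details (for example, dividing by N versus N - 1).

```r
# Values copied from the lavaan output for the three-factor model
chisq          <- 5446.238   # model chi-square (standard, unscaled)
df             <- 102        # model degrees of freedom
baseline_chisq <- 42942.078  # baseline (null) model chi-square
baseline_df    <- 153        # baseline model degrees of freedom
n              <- 1997       # number of observations (assumed from the summary)

# RMSEA: excess misfit per degree of freedom and per observation
rmsea <- sqrt(max(chisq - df, 0) / (df * n))

# CFI: proportional reduction in misfit relative to the baseline model
cfi <- 1 - max(chisq - df, 0) /
  max(baseline_chisq - baseline_df, chisq - df, 0)

# TLI: like CFI, but based on chi-square/df ratios, so it rewards parsimony
tli <- ((baseline_chisq / baseline_df) - (chisq / df)) /
  ((baseline_chisq / baseline_df) - 1)

round(c(rmsea = rmsea, cfi = cfi, tli = tli), 3)
```

Rounded to three decimals, these values agree with the `rmsea`, `cfi`, and `tli` entries in the fit output.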

chisq df pvalue

5446.238 102.000 0.000

chisq.scaled df.scaled pvalue.scaled

3182.380 102.000 0.000

chisq.scaling.factor baseline.chisq baseline.df

1.711 42942.078 153.000

baseline.pvalue rmsea cfi

0.000 0.162 0.875

tli srmr rmsea.robust

0.813 0.469 0.399

cfi.robust tli.robust

0.730 0.595

$type

[1] "cor.bollen"

$cov

cmplPG attmPG pssng_yPG pssng_tPG

completionsPerGame 0.000

attemptsPerGame 0.020 0.000

passing_yardsPerGame -0.004 -0.007 0.000

passing_tdsPerGame -0.022 -0.043 0.014 0.000

passing_air_yardsPerGame 0.003 0.007 0.001 -0.011

passing_yards_after_catchPerGame -0.006 0.001 0.006 0.001

passing_first_downsPerGame 0.000 -0.006 0.003 0.019

avg_completed_air_yards -0.103 -0.084 -0.056 -0.018

avg_intended_air_yards -0.083 -0.073 -0.052 -0.022

aggressiveness -0.044 -0.013 -0.031 -0.021

max_completed_air_distance 0.088 0.111 0.135 0.157

avg_air_distance -0.086 -0.072 -0.054 -0.025

max_air_distance 0.038 0.042 0.053 0.069

avg_air_yards_to_sticks -0.082 -0.070 -0.048 -0.010

passing_cpoe 0.022 0.022 0.027 0.024

pass_comp_pct 0.012 0.009 0.000 -0.016

passer_rating 0.070 0.065 0.105 0.184

completion_percentage_above_expectation 0.000 -0.003 0.000 -0.004

pssng_r_PG p___PG pssng_f_PG avg_c__

completionsPerGame

attemptsPerGame

passing_yardsPerGame

passing_tdsPerGame

passing_air_yardsPerGame 0.000

passing_yards_after_catchPerGame -0.008 0.000

passing_first_downsPerGame -0.003 -0.002 0.000

avg_completed_air_yards -0.071 -0.041 -0.066 0.000

avg_intended_air_yards -0.064 -0.010 -0.058 -0.017

aggressiveness -0.168 0.112 -0.021 -0.120

max_completed_air_distance -0.062 0.226 0.100 -0.185

avg_air_distance -0.085 0.010 -0.065 -0.038

max_air_distance -0.018 0.113 0.037 -0.133

avg_air_yards_to_sticks -0.072 0.003 -0.050 -0.024

passing_cpoe -0.026 0.061 0.025 -0.392

pass_comp_pct 0.003 0.000 0.001 -0.412

passer_rating -0.181 0.288 0.099 -0.601

completion_percentage_above_expectation -0.001 -0.001 0.001 -0.305

avg_n__ aggrss mx_c__ avg_r_ mx_r_d

completionsPerGame

attemptsPerGame

passing_yardsPerGame

passing_tdsPerGame

passing_air_yardsPerGame

passing_yards_after_catchPerGame

passing_first_downsPerGame

avg_completed_air_yards

avg_intended_air_yards 0.000

aggressiveness -0.129 0.000

max_completed_air_distance -0.238 -0.295 0.000

avg_air_distance -0.012 -0.134 -0.214 0.000

max_air_distance -0.090 -0.232 -0.010 -0.080 0.000

avg_air_yards_to_sticks -0.007 -0.122 -0.228 -0.019 -0.100

passing_cpoe -0.332 -0.421 -0.285 -0.345 -0.187

pass_comp_pct -0.341 -0.368 -0.237 -0.361 -0.148

passer_rating -0.582 -0.601 -0.370 -0.592 -0.371

completion_percentage_above_expectation -0.253 -0.298 -0.250 -0.266 -0.137

av____ pssng_ pss_c_ pssr_r cmp___

completionsPerGame

attemptsPerGame

passing_yardsPerGame

passing_tdsPerGame

passing_air_yardsPerGame

passing_yards_after_catchPerGame

passing_first_downsPerGame

avg_completed_air_yards

avg_intended_air_yards

aggressiveness

max_completed_air_distance

avg_air_distance

max_air_distance

avg_air_yards_to_sticks 0.000

passing_cpoe -0.350 0.000

pass_comp_pct -0.368 0.027 0.000

passer_rating -0.591 -0.085 0.079 0.000

completion_percentage_above_expectation -0.274 -0.003 -0.011 -0.132 0.000

$mean

completionsPerGame attemptsPerGame

0.000 0.000

passing_yardsPerGame passing_tdsPerGame

0.000 0.000

passing_air_yardsPerGame passing_yards_after_catchPerGame

0.000 0.000

passing_first_downsPerGame avg_completed_air_yards

0.000 0.423

avg_intended_air_yards aggressiveness

0.298 0.290

max_completed_air_distance avg_air_distance

0.441 0.310

max_air_distance avg_air_yards_to_sticks

0.155 0.361

passing_cpoe pass_comp_pct

0.039 -0.002

passer_rating completion_percentage_above_expectation

0.265 0.020

We can examine the model's modification indices to identify fixed parameters that, if freely estimated, would substantially improve model fit. For this model, the modification indices point to several correlated residuals that would substantially improve fit. However, it is generally not recommended to add parameters based solely on modification indices, because doing so can lead to data dredging and overfitting. Rather, modification indices should be considered in light of theory. Based on the modification indices, we will add several correlated residuals to the model to account for associations among variables that arise for reasons other than their underlying latent factors.
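As a minimal sketch of how modification indices can be requested and filtered, the example below fits a simple confirmatory model to lavaan's built-in `HolzingerSwineford1939` data. This is not the passing model from this chapter, and the object names (`example_model`, `example_fit`, `mi`, `mi_resid`) are hypothetical. It sorts the modification indices from largest to smallest and keeps only the candidate covariances (`~~`), which for this model are correlated residuals.

```r
library(lavaan)

# Illustrative three-factor CFA on lavaan's example data (not the book's data)
example_model <- '
  visual  =~ x1 + x2 + x3
  textual =~ x4 + x5 + x6
  speed   =~ x7 + x8 + x9
'

example_fit <- cfa(example_model, data = HolzingerSwineford1939)

# Modification indices for parameters not in the model, largest first
mi <- modificationIndices(example_fit, sort. = TRUE)

# Keep only candidate (residual) covariances and the most useful columns:
# mi = expected drop in chi-square; epc = expected parameter change
mi_resid <- mi[mi$op == "~~", c("lhs", "op", "rhs", "mi", "epc", "sepc.all")]

head(mi_resid)
```

A large `mi` value is only a statistical hint. Whether the corresponding residual covariance belongs in the model remains a substantive judgment.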

Below are factor scores from the model for the first six players:

f1 f2 f3

[1,] -0.09754726 -1.27792310 0.3402415

[2,] 0.22104567 -1.66217518 -0.9854991

[3,] 0.43811983 -1.83305997 -1.2122425

[4,] 0.06686330 0.08889608 0.7651670

[5,] 0.89608460 1.16088344 0.4625355

[6,] -0.02801636 0.13318347 -0.2137671

A path diagram of the three-factor ESEM model is in Figure 22.18.
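Factor scores like those printed above for the first six players can be extracted from a fitted lavaan model with `lavPredict()`. The sketch below is illustrative only: it uses lavaan's built-in `HolzingerSwineford1939` data rather than the player data, and the object names (`example_model`, `example_fit`, `factor_scores`) are hypothetical.

```r
library(lavaan)

# Illustrative three-factor model on lavaan's example data (not the book's data)
example_model <- '
  visual  =~ x1 + x2 + x3
  textual =~ x4 + x5 + x6
  speed   =~ x7 + x8 + x9
'

# std.lv = TRUE fixes each factor variance to 1, so scores are in
# (approximately) standardized units
example_fit <- cfa(
  example_model,
  data = HolzingerSwineford1939,
  std.lv = TRUE
)

# One row per observation, one column per latent factor
factor_scores <- lavPredict(example_fit)

head(factor_scores)
```

Each row corresponds to one observation in the data, so the scores can be bound back to an identifier column for follow-up analyses.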

To make the plot interactive for editing, you can use the lavaangui::plot_lavaan() function of the lavaangui package (Karch, 2025b; Karch, 2025a):

Below is a modification of the three-factor model that adds correlated residuals. For instance, it makes sense that passing completions and attempts are related to each other even after accounting for the latent factors, because every completion is also an attempt.

Code

efa3factorModified_syntax <- '

# EFA Factor Loadings

efa("efa1")*F1 +

efa("efa1")*F2 +

efa("efa1")*F3 =~ completionsPerGame + attemptsPerGame + passing_yardsPerGame + passing_tdsPerGame +

passing_air_yardsPerGame + passing_yards_after_catchPerGame + passing_first_downsPerGame +

avg_completed_air_yards + avg_intended_air_yards + aggressiveness + max_completed_air_distance +

avg_air_distance + max_air_distance + avg_air_yards_to_sticks + passing_cpoe + pass_comp_pct +

passer_rating + completion_percentage_above_expectation

# Correlated Residuals

completionsPerGame ~~ attemptsPerGame

passing_yardsPerGame ~~ passing_yards_after_catchPerGame

attemptsPerGame ~~ passing_yardsPerGame

attemptsPerGame ~~ passing_air_yardsPerGame

passing_air_yardsPerGame ~~ passing_yards_after_catchPerGame

passing_yards_after_catchPerGame ~~ avg_completed_air_yards

passing_tdsPerGame ~~ passer_rating

completionsPerGame ~~ passing_air_yardsPerGame

max_completed_air_distance ~~ max_air_distance

aggressiveness ~~ completion_percentage_above_expectation

'

lavaan 0.6-21 ended normally after 805 iterations

Estimator ML

Optimization method NLMINB

Number of model parameters 103

Row rank of the constraints matrix 34

Rotation method GEOMIN OBLIQUE

Geomin epsilon 0.001

Rotation algorithm (rstarts) GPA (30)

Standardized metric TRUE

Row weights None

Number of observations 1997

Number of missing patterns 4

Model Test User Model:

Standard Scaled

Test Statistic 1398.863 844.273

Degrees of freedom 92 92

P-value (Chi-square) 0.000 0.000

Scaling correction factor 1.657

Yuan-Bentler correction (Mplus variant)

Model Test Baseline Model:

Test statistic 42942.078 23496.779

Degrees of freedom 153 153

P-value 0.000 0.000

Scaling correction factor 1.828

User Model versus Baseline Model:

Comparative Fit Index (CFI) 0.969 0.968

Tucker-Lewis Index (TLI) 0.949 0.946

Robust Comparative Fit Index (CFI) 0.946

Robust Tucker-Lewis Index (TLI) 0.911

Loglikelihood and Information Criteria:

Loglikelihood user model (H0) -54699.748 -54699.748

Scaling correction factor 1.828

for the MLR correction

Loglikelihood unrestricted model (H1) -54000.317 -54000.317

Scaling correction factor 1.745

for the MLR correction

Akaike (AIC) 109593.497 109593.497

Bayesian (BIC) 110136.639 110136.639

Sample-size adjusted Bayesian (SABIC) 109828.465 109828.465

Root Mean Square Error of Approximation:

RMSEA 0.084 0.064

90 Percent confidence interval - lower 0.080 0.061

90 Percent confidence interval - upper 0.088 0.067

P-value H_0: RMSEA <= 0.050 0.000 0.000

P-value H_0: RMSEA >= 0.080 0.967 0.000

Robust RMSEA 0.191

90 Percent confidence interval - lower 0.163

90 Percent confidence interval - upper 0.218

P-value H_0: Robust RMSEA <= 0.050 0.000

P-value H_0: Robust RMSEA >= 0.080 1.000

Standardized Root Mean Square Residual:

SRMR 0.141 0.141

Parameter Estimates:

Standard errors Sandwich

Information bread Observed

Observed information based on Hessian

Latent Variables:

Estimate Std.Err z-value P(>|z|) Std.lv Std.all

F1 =~ efa1

completinsPrGm 7.797 0.091 85.801 0.000 7.797 0.972

attemptsPerGam 12.288 0.136 90.139 0.000 12.288 0.980

pssng_yrdsPrGm 91.931 1.008 91.163 0.000 91.931 0.990

passng_tdsPrGm 0.589 0.010 56.989 0.000 0.589 0.854

pssng_r_yrdsPG 89.910 3.331 26.990 0.000 89.910 0.744

pssng_yrds__PG 47.857 1.598 29.950 0.000 47.857 0.730

pssng_frst_dPG 4.468 0.051 87.820 0.000 4.468 0.984

avg_cmpltd_r_y 0.012 0.105 0.115 0.909 0.012 0.004

avg_ntndd_r_yr -0.059 0.113 -0.527 0.598 -0.059 -0.017

aggressiveness 0.017 0.251 0.069 0.945 0.017 0.003

mx_cmpltd_r_ds 1.694 0.508 3.331 0.001 1.694 0.200

avg_air_distnc -0.239 0.122 -1.963 0.050 -0.239 -0.073