I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

You can leave a comment at the bottom of the page/chapter, or open an issue or submit a pull request on GitHub: https://github.com/isaactpetersen/Fantasy-Football-Analytics-Textbook

Alternatively, you can leave an annotation using hypothes.is.

To add an annotation, select some text and then click the

symbol on the pop-up menu.

To see the annotations of others, click the

symbol in the upper right-hand corner of the page.

17 Evaluation of Prediction/Forecasting Accuracy

“Nothing ruins fantasy more than reality.” – Renee Miller, Ph.D. (Yahoo! Sports, 2024)

This chapter provides an overview of ways to evaluate the accuracy of predictions. In addition, we evaluate the accuracy of fantasy football projections.

17.1 Getting Started

17.1.1 Load Packages

17.1.2 Load Data

We created the player_stats_weekly.RData and player_stats_seasonal.RData objects in Section 4.4.3. The players_projections_weekly and players_projections_seasonal objects were derived from projected points objects that were created in Section 4.3.27.

17.1.3 Specify Options

17.1.4 Prepare Data

17.1.4.1 Seasonal Projections

To evaluate the accuracy of projections, we must first merge projections with actual performance. Below, we merge seasonal projections with actual performance.

Code

player_stats_seasonal_subset <- player_stats_seasonal %>%

filter(!is.na(player_id))

nfl_playerIDs_subset <- nfl_playerIDs %>%

filter(!is.na(gsis_id)) %>%

distinct(gsis_id, .keep_all = TRUE) %>% # keep only rows that do not have duplicate values of gsis_id

select(-all_of(c("team", "position", "height", "weight", "age")))

players_projectedPoints_seasonal_combined$season <- as.integer(players_projectedPoints_seasonal_combined$season)

players_projections_seasonal_average_merged$season <- as.integer(players_projections_seasonal_average_merged$season)

player_stats_seasonal_subset_IDs <- left_join(

player_stats_seasonal_subset,

nfl_playerIDs_subset,

by = c("player_id" = "gsis_id")

) %>%

filter(!is.na(mfl_id))

projectionsWithActuals_seasonal <- full_join(

player_stats_seasonal_subset_IDs,

players_projectedPoints_seasonal_combined,

by = c("mfl_id" = "id", "season"),

suffix = c("", "_proj"),

)

crowdAveragedProjectionsWithActuals_seasonal <- full_join(

player_stats_seasonal_subset_IDs,

players_projections_seasonal_average_merged %>% filter(avg_type == "average"),

by = c("mfl_id" = "id", "season"),

suffix = c("", "_proj"),

)

projectionsWithActuals_seasonal <- projectionsWithActuals_seasonal %>%

unite(

"player_id_season",

player_id,

season,

remove = FALSE

)

crowdAveragedProjectionsWithActuals_seasonal <- crowdAveragedProjectionsWithActuals_seasonal %>%

unite(

"player_id_season",

player_id,

season,

remove = FALSE

)Players in the projectionsWithActuals_seasonal object are (supposed to be) uniquely identified by player_id-season-data_src:

Code

Players in the crowdAveragedProjectionsWithActuals_seasonal object are (supposed to be) uniquely identified by player_id-season-avg_type:

Code

We save the data object for use in other chapters:

Code

playersWithHighProjectedOrActualPoints <- projectionsWithActuals_seasonal %>%

filter(raw_points > 100 | fantasyPoints > 100) %>%

select(player_id_season) %>%

pull()

projectionsWithActuals_seasonal_qb <- projectionsWithActuals_seasonal %>%

filter(position_group == "QB")

projectionsWithActuals_seasonal_rb <- projectionsWithActuals_seasonal %>%

filter(position_group == "RB")

projectionsWithActuals_seasonal_wr <- projectionsWithActuals_seasonal %>%

filter(position_group == "WR")

projectionsWithActuals_seasonal_te <- projectionsWithActuals_seasonal %>%

filter(position_group == "TE")

projectionsWithActuals_seasonal_k <- projectionsWithActuals_seasonal %>%

filter(position == "K")

projectionsWithActuals_seasonal_dl <- projectionsWithActuals_seasonal %>%

filter(position_group == "DL")

projectionsWithActuals_seasonal_lb <- projectionsWithActuals_seasonal %>%

filter(position_group == "LB")

projectionsWithActuals_seasonal_db <- projectionsWithActuals_seasonal %>%

filter(position_group == "DB")

crowdAveragedProjectionsWithActuals_seasonal_qb <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "QB")

crowdAveragedProjectionsWithActuals_seasonal_rb <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "RB")

crowdAveragedProjectionsWithActuals_seasonal_wr <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "WR")

crowdAveragedProjectionsWithActuals_seasonal_te <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "TE")

crowdAveragedProjectionsWithActuals_seasonal_k <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position == "K")

crowdAveragedProjectionsWithActuals_seasonal_dl <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "DL")

crowdAveragedProjectionsWithActuals_seasonal_lb <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "LB")

crowdAveragedProjectionsWithActuals_seasonal_db <- crowdAveragedProjectionsWithActuals_seasonal %>%

filter(position_group == "DB")17.1.4.2 Weekly Projections

Below, we merge weekly projections with actual performance.

Code

player_stats_weekly_subset <- player_stats_weekly %>%

filter(!is.na(player_id))

nfl_playerIDs_subset <- nfl_playerIDs %>%

filter(!is.na(gsis_id)) %>%

distinct(gsis_id, .keep_all = TRUE) %>% # keep only rows that do not have duplicate values of gsis_id

select(-all_of(c("team", "position", "height", "weight", "age")))

players_projectedPoints_weekly_combined$season <- as.integer(players_projectedPoints_weekly_combined$season)

players_projections_weekly_average_merged$season <- as.integer(players_projections_weekly_average_merged$season)

player_stats_weekly_subset_IDs <- left_join(

player_stats_weekly_subset,

nfl_playerIDs_subset,

by = c("player_id" = "gsis_id")

) %>%

filter(!is.na(mfl_id))

projectionsWithActuals_weekly <- full_join(

player_stats_weekly_subset_IDs,

players_projectedPoints_weekly_combined,

by = c("mfl_id" = "id", "season", "week"),

suffix = c("", "_proj"),

)

crowdAveragedProjectionsWithActuals_weekly <- full_join(

player_stats_weekly_subset_IDs,

players_projections_weekly_average_merged,

by = c("mfl_id" = "id", "season", "week"),

suffix = c("", "_proj"),

)

projectionsWithActuals_weekly <- projectionsWithActuals_weekly %>%

unite(

"player_id_season_week",

player_id,

season,

week,

remove = FALSE

)

crowdAveragedProjectionsWithActuals_weekly <- crowdAveragedProjectionsWithActuals_weekly %>%

unite(

"player_id_season_week",

player_id,

season,

week,

remove = FALSE

)Players in the projectionsWithActuals_weekly object are (supposed to be) uniquely identified by player_id-season-week-data_src:

Code

Players in the crowdAveragedProjectionsWithActuals_weekly object are (supposed to be) uniquely identified by player_id-season-week-avg_type:

Code

We save the data object for use in other chapters:

Code

playersWithHighProjectedOrActualPoints_weekly <- projectionsWithActuals_weekly %>%

filter(raw_points > 6 | fantasyPoints > 6) %>%

select(player_id_season_week) %>%

pull()

projectionsWithActuals_weekly_qb <- projectionsWithActuals_weekly %>%

filter(position_group == "QB")

projectionsWithActuals_weekly_rb <- projectionsWithActuals_weekly %>%

filter(position_group == "RB")

projectionsWithActuals_weekly_wr <- projectionsWithActuals_weekly %>%

filter(position_group == "WR")

projectionsWithActuals_weekly_te <- projectionsWithActuals_weekly %>%

filter(position_group == "TE")

projectionsWithActuals_weekly_k <- projectionsWithActuals_weekly %>%

filter(position == "K")

projectionsWithActuals_weekly_dl <- projectionsWithActuals_weekly %>%

filter(position_group == "DL")

projectionsWithActuals_weekly_lb <- projectionsWithActuals_weekly %>%

filter(position_group == "LB")

projectionsWithActuals_weekly_db <- projectionsWithActuals_weekly %>%

filter(position_group == "DB")

crowdAveragedProjectionsWithActuals_weekly_qb <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "QB")

crowdAveragedProjectionsWithActuals_weekly_rb <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "RB")

crowdAveragedProjectionsWithActuals_weekly_wr <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "WR")

crowdAveragedProjectionsWithActuals_weekly_te <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "TE")

crowdAveragedProjectionsWithActuals_weekly_k <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position == "K")

crowdAveragedProjectionsWithActuals_weekly_dl <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "DL")

crowdAveragedProjectionsWithActuals_weekly_lb <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "LB")

crowdAveragedProjectionsWithActuals_weekly_db <- crowdAveragedProjectionsWithActuals_weekly %>%

filter(position_group == "DB")17.2 Overview

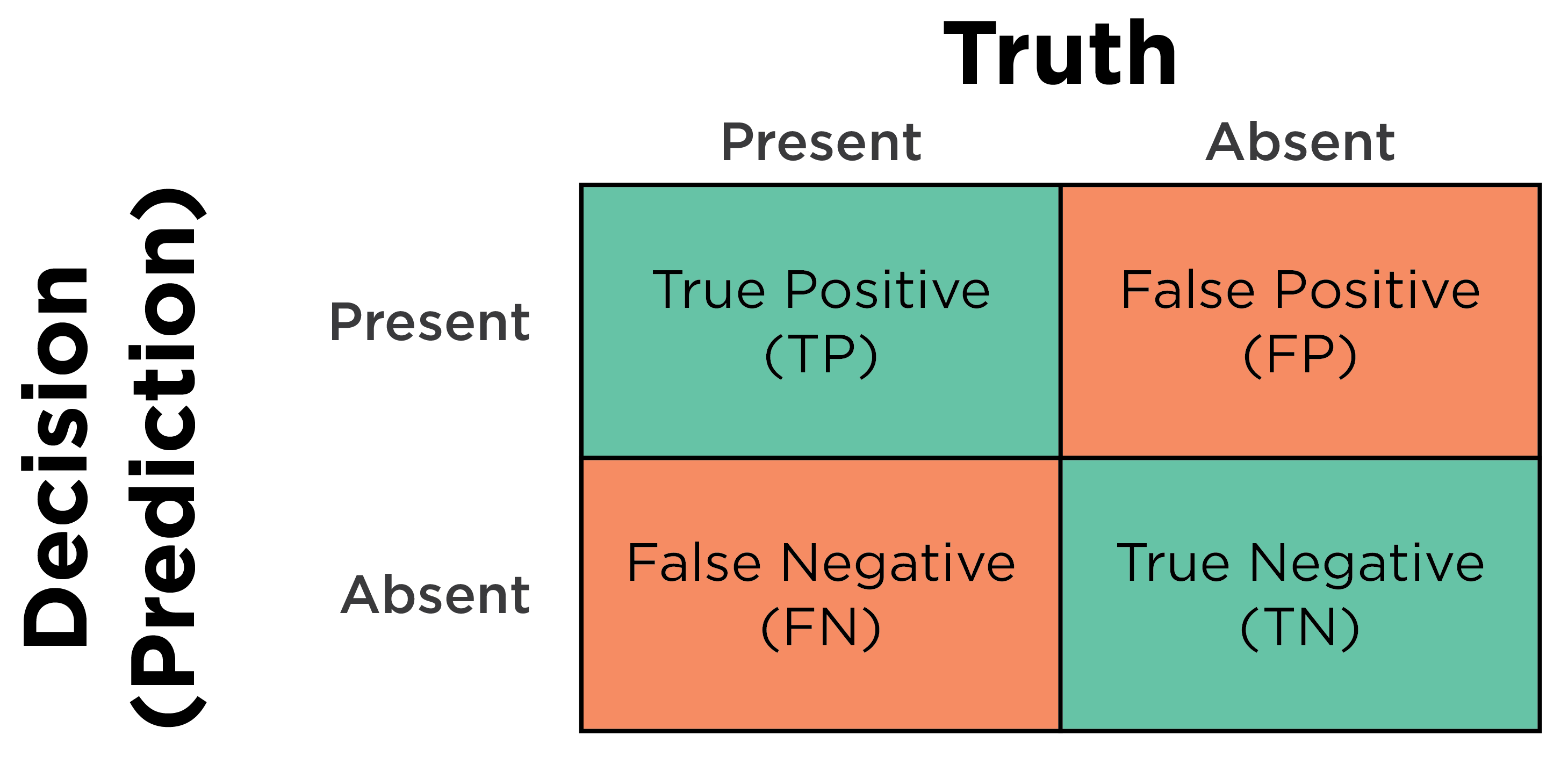

Predictions can come in different types. Some predictions involve categorical data, whereas other predictions involve continuous data. When dealing with a dichotomous (nominal data that are binary) predictor and outcome variable (or continuous data that have been dichotomized using a cutoff), we can evaluate predictions using a 2x2 table known as a confusion matrix (see Figure 17.2), or with logistic regression models. When dealing with a continuous outcome variable (e.g., ordinal, interval, or ratio data), we can evaluate predictions using multiple regression or similar variants such as structural equation modeling and mixed models.

In fantasy football, we most commonly predict continuous outcome variables (e.g., fantasy points, rushing yards). Nevertheless, it is also important to understand principles in the prediction of categorical outcomes variables.

In any domain, it is important to evaluate the accuracy of predictions, so we can know how (in)accurate we are, and we can strive to continually improve our predictions. Fantasy performance—and human behavior more general—is incredibly challenging to predict. Indeed, many things in the world, in particular long-term trends, are unpredictable (Kahneman, 2011). In fantasy football, there is considerable luck/chance/randomness. There are relatively few (i.e. 17) games, and there is a sizeable injury risk for each player in a given game. These and other factors combine to render fantasy football predictions not highly accurate. Domains with high uncertainty and unpredictability are considered “low-validity environments” (Kahneman, 2011, p. 223). But, first, let’s learn about the various ways we can evaluate the accuracy of predictions.

17.3 Types of Accuracy

There are two primary dimensions of accuracy: (1) discrimination and (2) calibration. Discrimination and calibration are distinct forms of accuracy. Just because predictions are high in one form of accuracy does not mean that they will be high in the other form of accuracy. As described by Lindhiem et al. (2020), predictions can follow any of the following configurations (and anywhere in between):

- high discrimination, high calibration

- high discrimination, low calibration

- low discrimination, high calibration

- low discrimination, low calibration

Some general indexes of accuracy combine discrimination and calibration, as described in Section 17.3.3.

In addition, accuracy indices can be threshold-dependent or -independent and can be scale-dependent or -independent. Threshold-dependent accuracy indices differ based on the cutoff (i.e., threshold), whereas threshold-independent accuracy indices do not. Thus, raising or lowering the cutoff will change threshold-dependent accuracy indices. Scale-dependent accuracy indices depend on the metric/scale of the data, whereas scale-independent accuracy indices do not. Thus, scale-dependent accuracy indices cannot be directly compared when using measures of differing scales, whereas scale-independent accuracy indices can be compared across data of differing scales.

17.3.1 Discrimination

When dealing with a categorical outcome, discrimination is the ability to separate events from non-events. When dealing with a continuous outcome, discrimination is the strength of the association between the predictor and the outcome. Aspects of discrimination at a particular cutoff (e.g., sensitivity, specificity, area under the ROC curve) are described in Section 17.6.

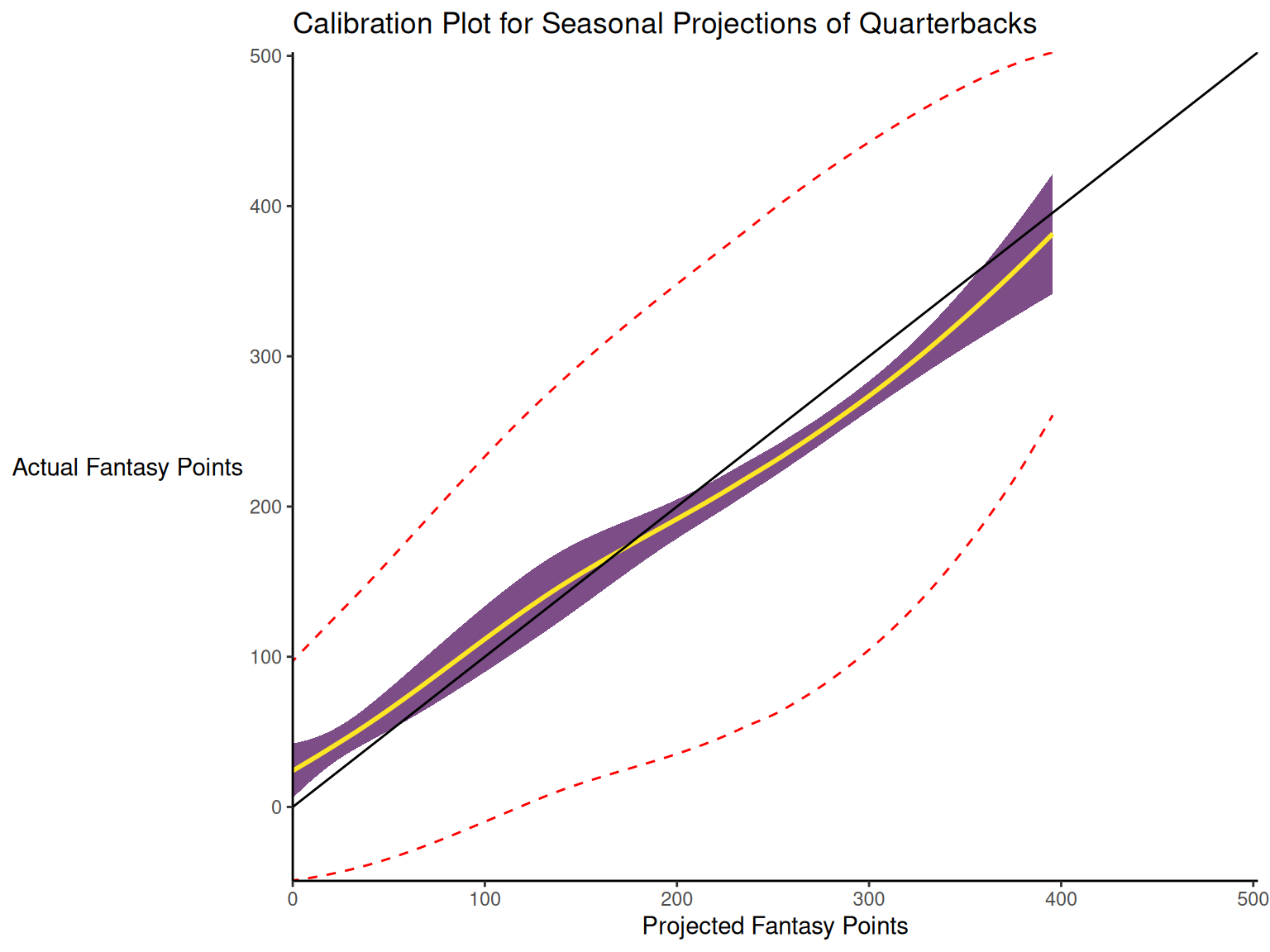

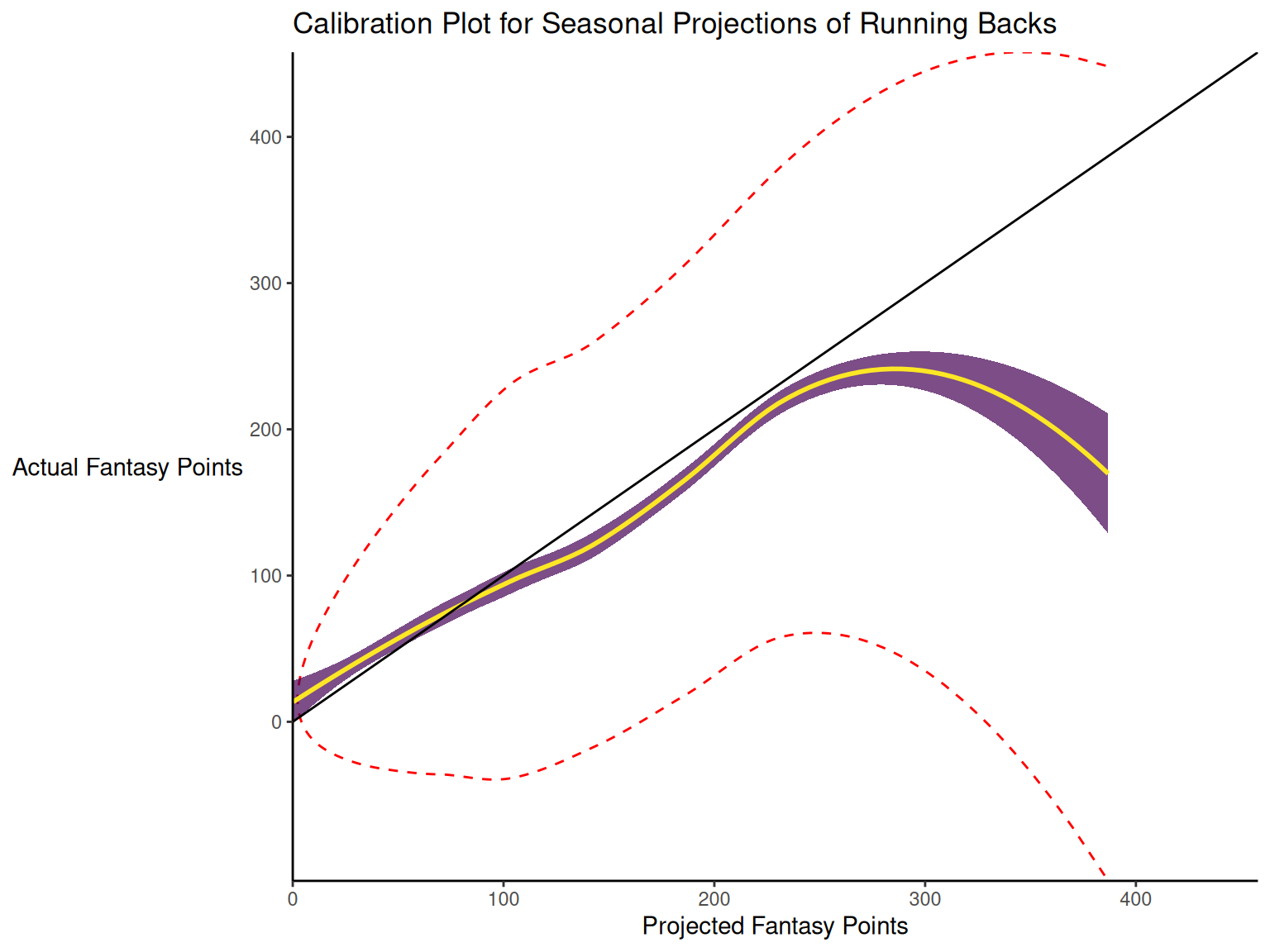

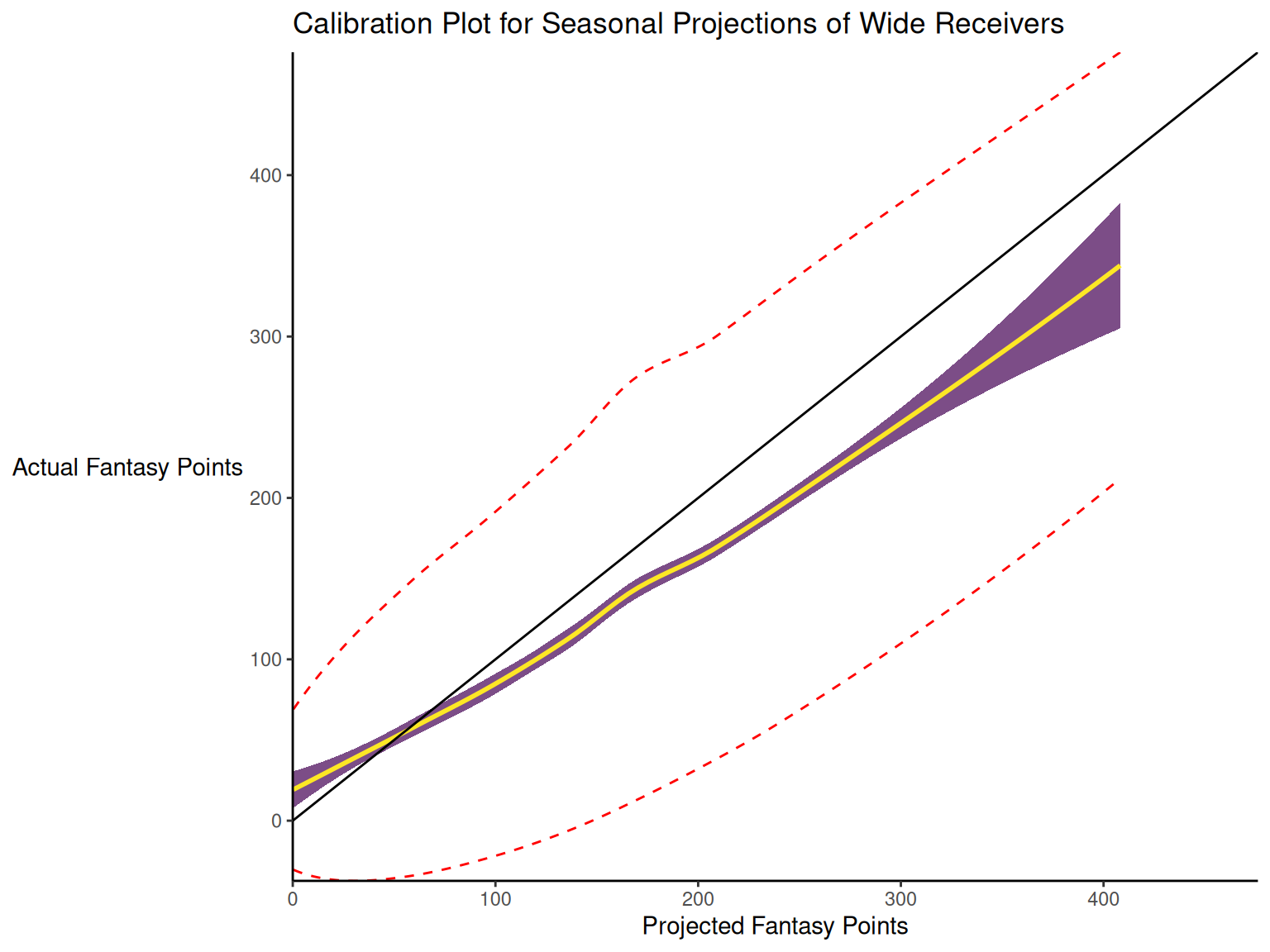

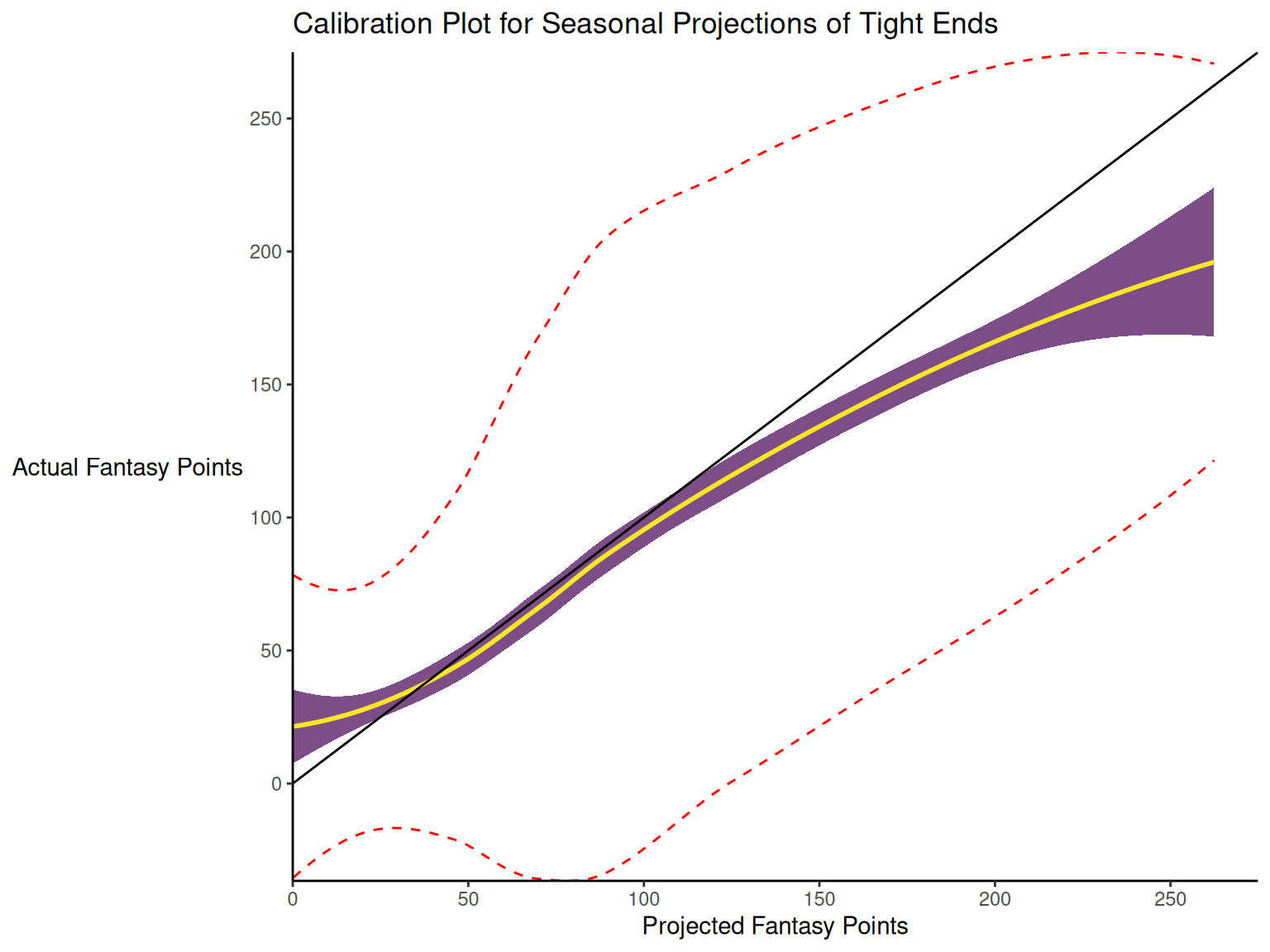

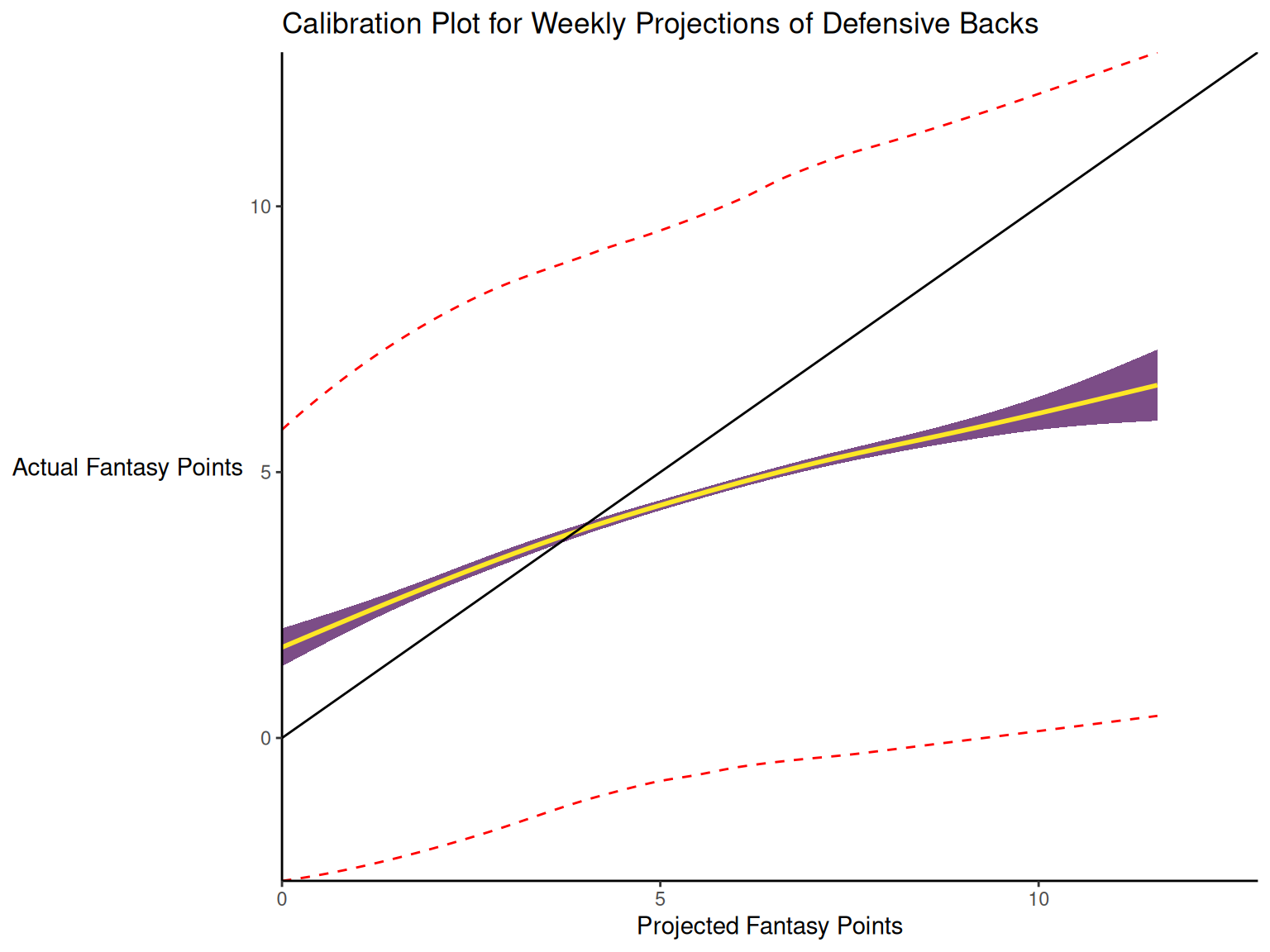

17.3.2 Calibration

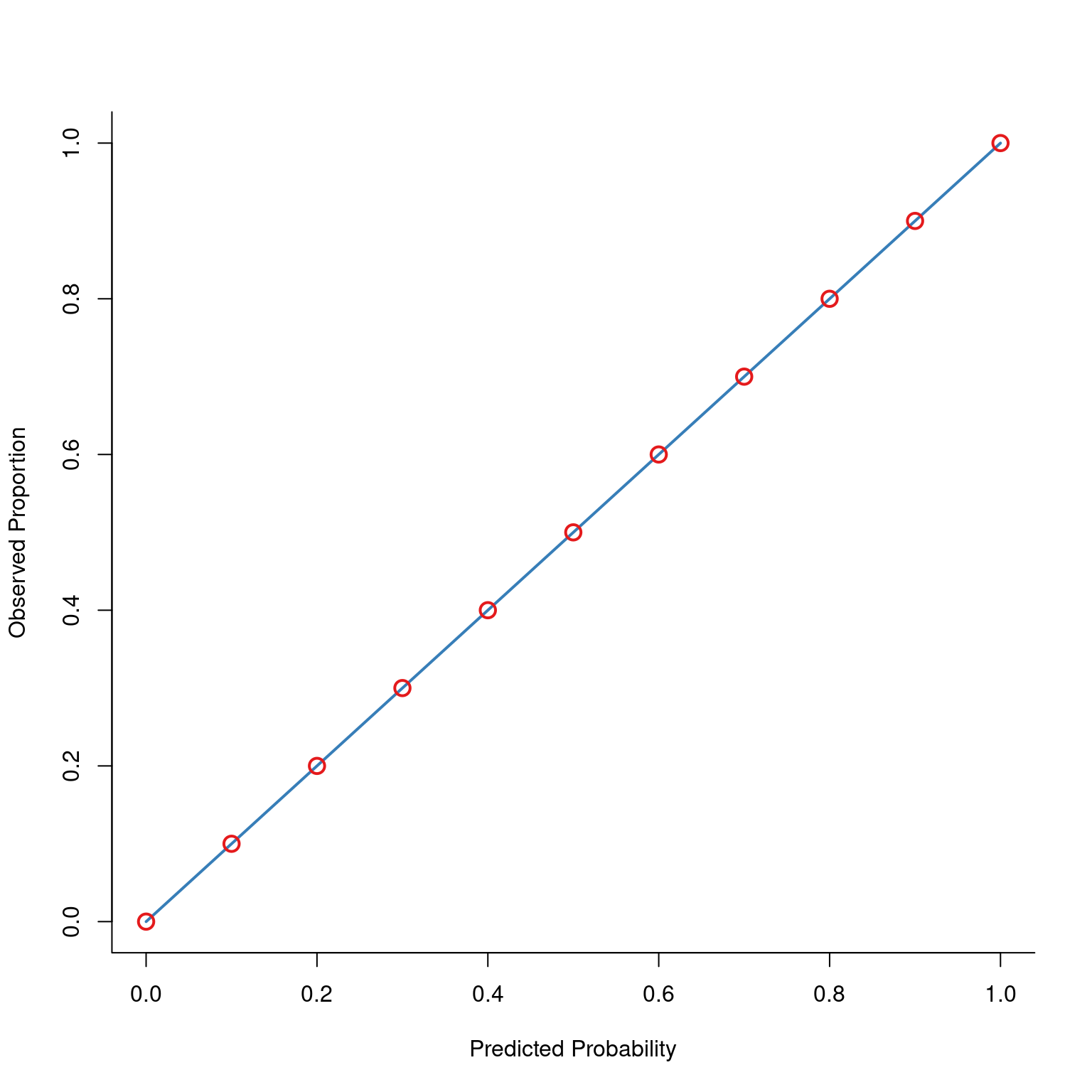

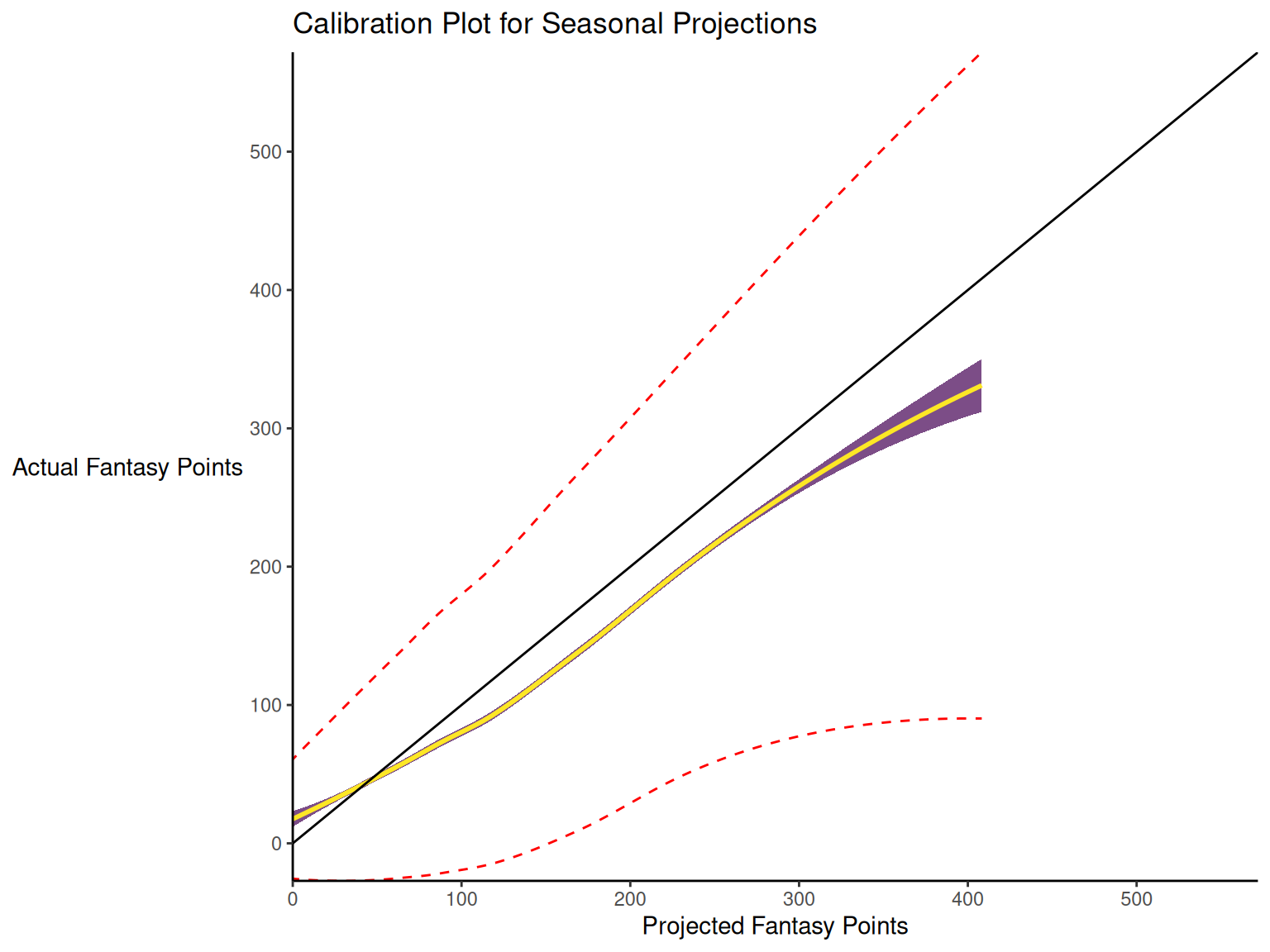

When dealing with a categorical outcome, calibration is the degree to which a probabilistic estimate of an event reflects the true underlying probability of the event. When dealing with a continuous outcome, calibration is the degree to which the predicted values are close in value to the outcome values. The importance of examining calibration (in addition to discrimination) is described by Lindhiem et al. (2020).

Calibration is relevant to all kinds of predictions, including weather forecasts. For instance, on the days that the meteorologist says there is a 60% chance of rain, it should rain about 60% of the time. Calibration is also important for fantasy football predictions. When projections state that a group of players is each expected to score 200 points, their projections would be miscalibrated if those players scored only 150 points on average.

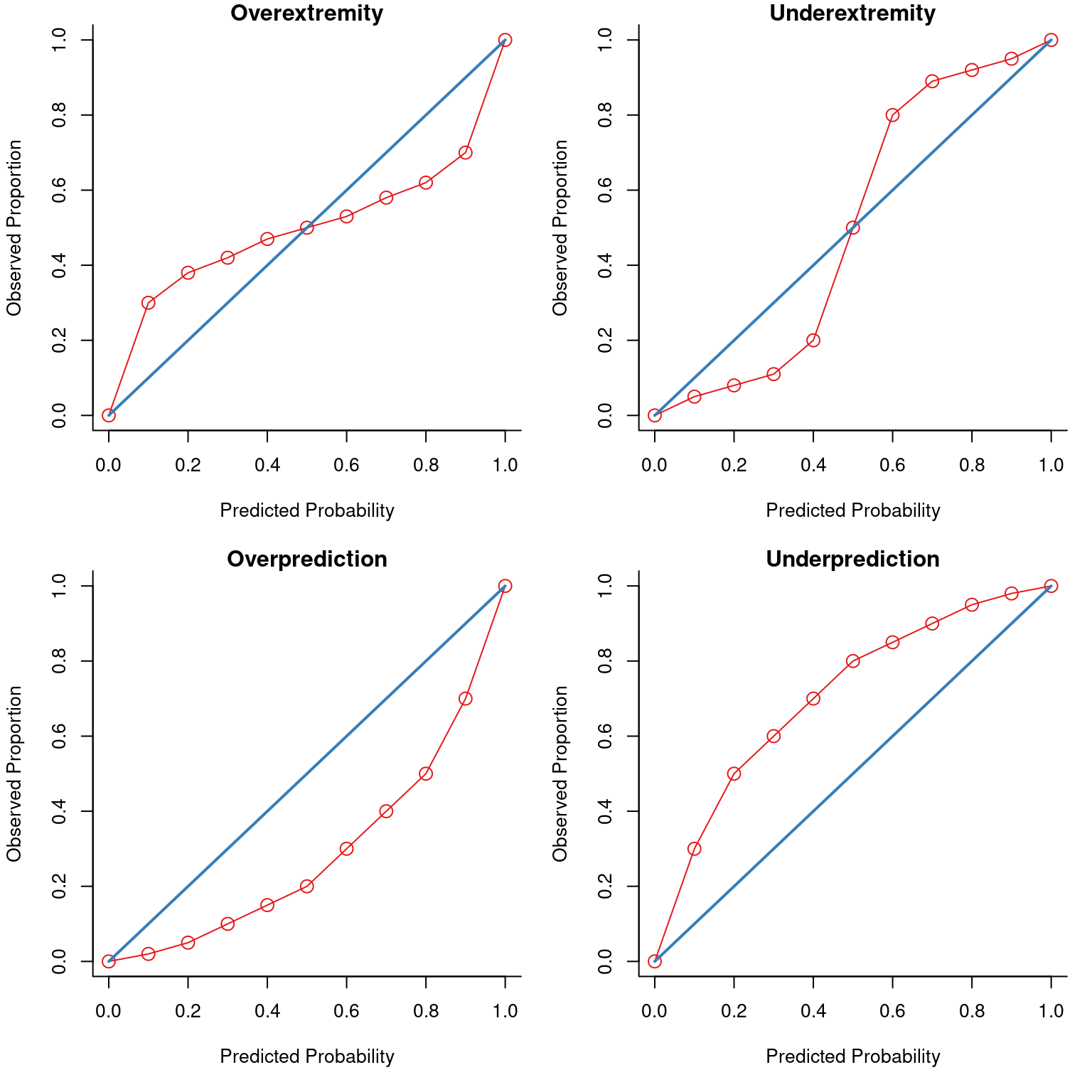

There are four general patterns of miscalibration: overextremity, underextremity, overprediction, and underprediction (see Figure 17.15). Overextremity exists when the predicted probabilities are too close to the extremes (zero or one). Underextremity exists when the predicted probabilities are too far away from the extremes. Overprediction exists when the predicted probabilities are consistently greater than the observed probabilities. Underprediction exists when the predicted probabilities are consistently less than the observed probabilities. For a more thorough description of these types of miscalibration, see Lindhiem et al. (2020).

Indices for evaluating calibration are described in Section 17.7.3.

17.3.3 General Accuracy

General accuracy indices combine estimates of discrimination and calibration.

17.4 Prediction of Categorical Outcomes

To evaluate the accuracy of our predictions for categorical outcome variables (e.g., binary, dichotomous, or nominal data), we can use either threshold-dependent or threshold-independent accuracy indices.

17.5 Prediction of Continuous Outcomes

To evaluate the accuracy of our predictions for continuous outcome variables (e.g., ordinal, interval, or ratio data), the outcome variable does not have cutoffs, so we would use threshold-independent accuracy indices.

17.6 Threshold-Dependent Accuracy Indices

17.6.1 Decision Outcomes

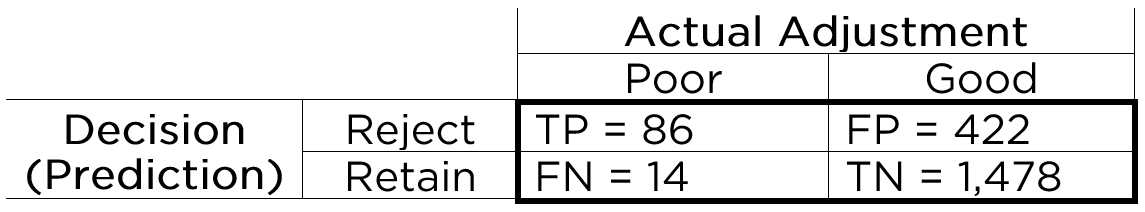

To consider how we can evaluate the accuracy of predictions for a categorical outcome, consider an example adapted from Meehl & Rosen (1955). The military conducts a test of its prospective members to screen out applicants who would likely fail basic training. To evaluate the accuracy of our predictions using the test, we can examine a confusion matrix. A confusion matrix is a matrix that presents the predicted outcome on one dimension and the actual outcome (truth) on the other dimension. If the predictions and outcomes are dichotomous, the confusion matrix is a 2x2 matrix with two rows and two columns that represent four possible predicted-actual combinations (decision outcomes), as in Figure 17.2: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

When discussing the four decision outcomes, “true” means an accurate judgment, whereas “false” means an inaccurate judgment. “Positive” means that the judgment was that the person has the characteristic of interest, whereas “negative” means that the judgment was that the person does not have the characteristic of interest. A true positive is a correct judgment (or prediction) where the judgment was that the person has (or will have) the characteristic of interest, and, in truth, they actually have (or will have) the characteristic. A true negative is a correct judgment (or prediction) where the judgment was that the person does not have (or will not have) the characteristic of interest, and, in truth, they actually do not have (or will not have) the characteristic. A false positive is an incorrect judgment (or prediction) where the judgment was that the person has (or will have) the characteristic of interest, and, in truth, they actually do not have (or will not have) the characteristic. A false negative is an incorrect judgment (or prediction) where the judgment was that the person does not have (or will not have) the characteristic of interest, and, in truth, they actually do have (or will have) the characteristic.

An example of a confusion matrix is in Figure 17.3.

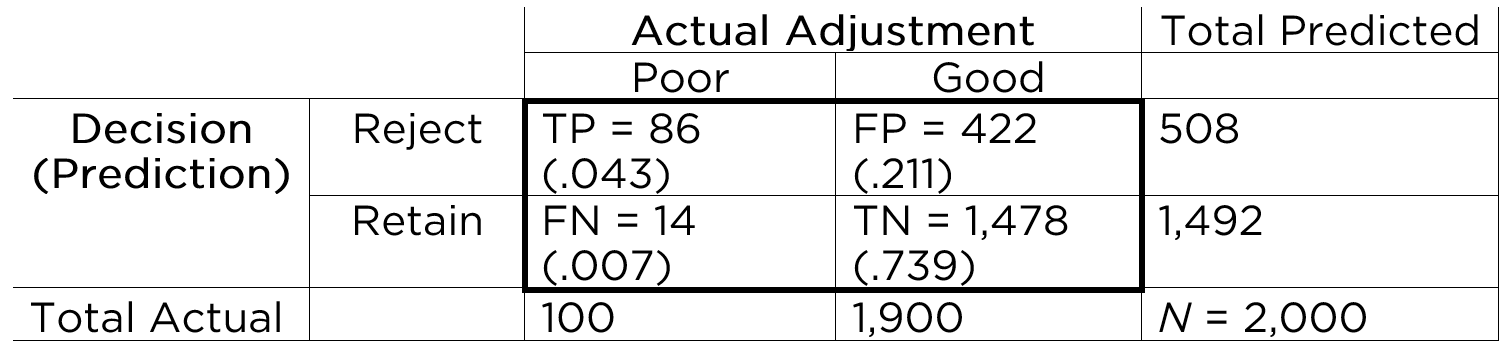

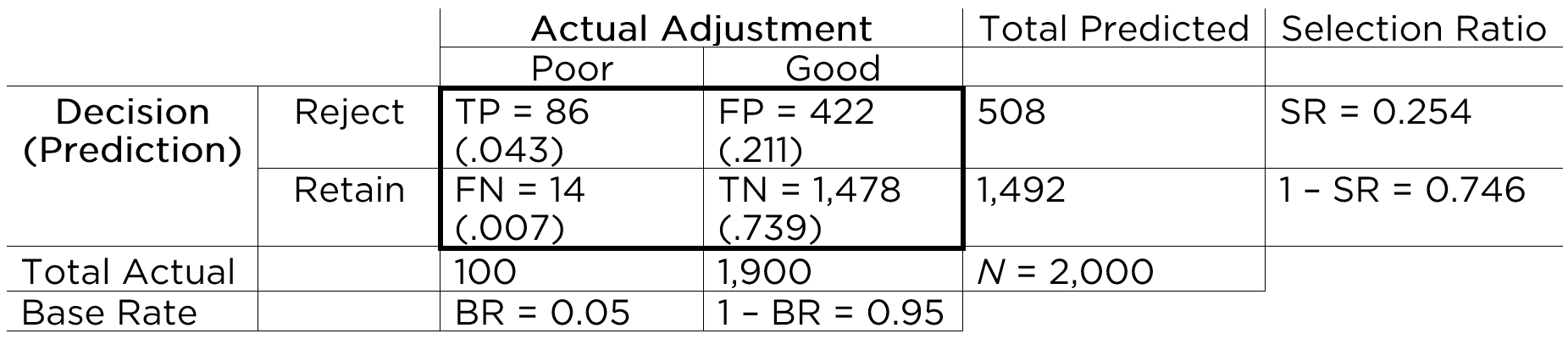

With the information in the confusion matrix, we can calculate the marginal sums and the proportion of people in each cell (in parentheses), as depicted in Figure 17.4.

That is, we can sum across the rows and columns to identify how many people actually showed poor adjustment (\(n = 100\)) versus good adjustment (\(n = 1,900\)), and how many people were selected to reject (\(n = 508\)) versus retain (\(n = 1,492\)). If we sum the column of predicted marginal sums (\(508 + 1,492\)) or the row of actual marginal sums (\(100 + 1,900\)), we get the total number of people (\(N = 2,000\)).

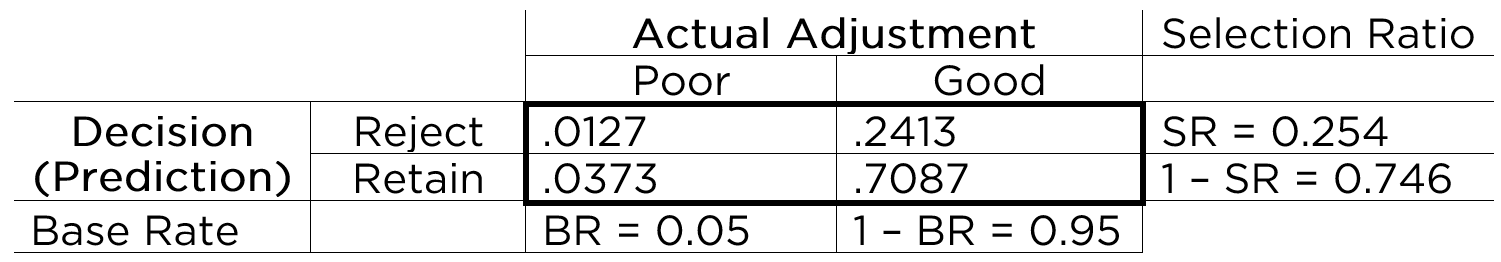

Based on the marginal sums, we can compute the marginal probabilities, as depicted in Figure 17.5.

The marginal probability of the person having the characteristic of interest (i.e., showing poor adjustment) is called the base rate (BR). That is, the base rate is the proportion of people who have the characteristic. It is calculated by dividing the number of people with poor adjustment (\(n = 100\)) by the total number of people (\(N = 2,000\)): \(BR = \frac{FN + TP}{N}\). Here, the base rate reflects the prevalence of poor adjustment. In this case, the base rate is .05, so there is a 5% chance that an applicant will be poorly adjusted. The marginal probability of good adjustment is equal to 1 minus the base rate of poor adjustment.

The marginal probability of predicting that a person has the characteristic (i.e., rejecting a person) is called the selection ratio (SR). The selection ratio is the proportion of people who will be selected (in this case, rejected rather than retained); i.e., the proportion of people who are identified as having the characteristic. The selection ratio is calculated by dividing the number of people selected to reject (\(n = 508\)) by the total number of people (\(N = 2,000\)): \(SR = \frac{TP + FP}{N}\). In this case, the selection ratio is .25, so 25% of people are rejected. The marginal probability of not selecting someone to reject (i.e., the marginal probability of retaining) is equal to 1 minus the selection ratio.

The selection ratio might be something that the test dictates according to its cutoff score. Or, the selection ratio might be imposed by external factors that place limits on how many people you can assign a positive test value. For instance, when deciding whether to treat a client, the selection ratio may depend on how many therapists are available and how many cases can be treated.

17.6.2 Percent Accuracy

Based on the confusion matrix, we can calculate the prediction accuracy based on the percent accuracy of the predictions. The percent accuracy is the number of correct predictions divided by the total number of predictions, and multiplied by 100. In the context of a confusion matrix, this is calculated as: \(100\% \times \frac{\text{TP} + \text{TN}}{N}\). In this case, our percent accuracy was 78%—that is, 78% of our predictions were accurate, and 22% of our predictions were inaccurate.

17.6.3 Percent Accuracy by Chance

78% sounds pretty accurate. And it is much higher than 50%, so we are doing a pretty good job, right? Well, it is important to compare our accuracy to what accuracy we would expect to get by chance alone, if predictions were made by a random process rather than using a test’s scores. Our selection ratio was 25.4%. How accurate would we be if we randomly selected 25.4% of people to reject? To determine what accuracy we could get by chance alone given the selection ratio and the base rate, we can calculate the chance probability of true positives and the chance probability of true negatives. The probability of a given cell in the confusion matrix is a joint probability—the probability of two events occurring simultaneously. To calculate a joint probability, we multiply the probability of each event.

So, to get the chance expectancies of true positives, we would multiply the respective marginal probabilities, as in Equation 17.1:

\[ \begin{aligned} P(TP) &= P(\text{Poor adjustment}) \times P(\text{Reject})\\ &= BR \times SR \\ &= .05 \times .254 \\ &= .0127 \end{aligned} \tag{17.1}\]

To get the chance expectancies of true negatives, we would multiply the respective marginal probabilities, as in Equation 17.2:

\[ \begin{aligned} P(TN) &= P(\text{Good adjustment}) \times P(\text{Retain})\\ &= (1 - BR) \times (1 - SR) \\ &= .95 \times .746 \\ &= .7087 \end{aligned} \tag{17.2}\]

To get the percent accuracy by chance, we sum the chance expectancies for the correct predictions (TP and TN): \(.0127 + .7087 = .7214\). Thus, the percent accuracy you can get by chance alone is 72%. This is because most of our predictions are to retain people, and the base rate of poor adjustment is quite low (.05). Our measure with 78% accuracy provides only a 6% increment in correct predictions. Thus, you cannot judge how good your judgment or prediction is until you know how you would do by random chance.

The chance expectancies for each cell of the confusion matrix are in Figure 17.6.

17.6.4 Predicting from the Base Rate

Now, let us consider how well you would do if you were to predict from the base rate. Predicting from the base rate is also called “betting from the base rate”, and it involves setting the selection ratio by taking advantage of the base rate so that you go with the most likely outcome in every prediction. Because the base rate is quite low (.05), we could predict from the base rate by selecting no one to reject (i.e., setting the selection ratio at zero). Our percent accuracy by chance if we predict from the base rate would be calculated by multiplying the marginal probabilities, as we did above, but with a new selection ratio, as in Equation 17.3:

\[ \begin{aligned} P(TP) &= P(\text{Poor adjustment}) \times P(\text{Reject})\\ &= BR \times SR \\ &= .05 \times 0 \\ &= 0 \\ \\ P(TN) &= P(\text{Good adjustment}) \times P(\text{Retain})\\ &= (1 - BR) \times (1 - SR) \\ &= .95 \times 1 \\ &= .95 \end{aligned} \tag{17.3}\]

We sum the chance expectancies for the correct predictions (TP and TN): \(0 + .95 = .95\). Thus, our percent accuracy by predicting from the base rate is 95%. This is damning to our measure because it is a much higher accuracy than the accuracy of our measure. That is, we can be much more accurate than our measure simply by predicting from the base rate and selecting no one to reject.

Going with the most likely outcome in every prediction (predicting from the base rate) can be highly accurate (in terms of percent accuracy) as noted by Meehl & Rosen (1955), especially when the base rate is very low or very high. This should serve as an important reminder that we need to compare the accuracy of our measures to the accuracy by (1) random chance and (2) predicting from the base rate. There are several important implications of the impact of base rates on prediction accuracy. One implication is that using the same test in different settings with different base rates will markedly change the accuracy of the test. Oftentimes, using a test will actually decrease the predictive accuracy when the base rate deviates greatly from .50. But percent accuracy is not everything. Percent accuracy treats different kinds of errors as if they are equally important. However, the value we place on different kinds of errors may be different, as described next.

17.6.5 Different Kinds of Errors Have Different Costs

Some errors have a high cost, and some errors have a low cost. Among the four decision outcomes, there are two types of errors: false positives and false negatives. The extent to which false positives and false negatives are costly depends on the prediction problem. So, even though you can often be most accurate by going with the base rate, it may be advantageous to use a screening instrument despite lower overall accuracy because of the huge difference in costs of false positives versus false negatives in some cases.

Consider the example of a screening instrument for HIV. False positives would be cases where we said that someone is at high risk of HIV when they are not, whereas false negatives are cases where we said that someone is not at high risk when they actually are. The costs of false positives include a shortage of blood, some follow-up testing, and potentially some anxiety, but that is about it. The costs of false negatives may be people getting HIV. In this case, the costs of false negatives greatly outweigh the costs of false positives, so we use a screening instrument to try to identify the cases at high risk for HIV because of the important consequences of failing to do so, even though using the screening instrument will lower our overall accuracy level.

Another example is when the Central Intelligence Agency (CIA) used a screen for protective typists during wartime to try to detect spies. False positives would be cases where the CIA believes that a person is a spy when they are not, and the CIA does not hire them. False negatives would be cases where the CIA believes that a person is not a spy when they actually are, and the CIA hires them. In this case, a false positive would be fine, but a false negative would be really bad.

How you weigh the costs of different errors depends considerably on the domain and context. Possible costs of false positives to society include: unnecessary and costly treatment with side effects and sending an innocent person to jail (despite our presumption of innocence in the United States criminal justice system that a person is innocent until proven guilty). Possible costs of false negatives to society include: setting a guilty person free, failing to detect a bomb or tumor, and preventing someone from getting treatment who needs it.

The differential costs of different errors also depend on how much flexibility you have in the selection ratio in being able to set a stringent versus loose selection ratio. Consider if there is a high cost of getting rid of people during the selection process. For example, if you must hire 100 people and only 100 people apply for the position, you cannot lose people, so you need to hire even high-risk people. However, if you do not need to hire many people, then you can hire more conservatively.

Any time the selection ratio differs from the base rate, you will make errors. For example, if you reject 25% of applicants, and the base rate of poor adjustment is 5%, then you are making errors of over-rejecting (false positives). By contrast, if you reject 1% of applicants and the base rate of poor adjustment is 5%, then you are making errors of under-rejecting or over-accepting (false negatives).

17.6.6 Difficulty Predicting Low Base Rate Events

A low base rate makes it harder to make predictions, and tends to lead to less accurate predictions. For instance, it is very challenging to predict low base rate behaviors, including suicide (Kessler et al., 2020). For this reason, it is likely much more challenging to predict touchdowns—which happen relatively less often—than it is to predict passing/rushing/receiving yards—which are more frequent and continuously distributed.

Here is the accuracy of the prediction of passing touchdowns versus passing yards among Quarterbacks:

Code

Code

The accuracy for predicting passing yards (\(R^2 = .19\)) is higher than the accuracy for predicting passing touchdowns (\(R^2 = .10\)).

Here is the accuracy of the prediction of rushing touchdowns versus rushing yards among Running Backs:

Code

Code

The accuracy for predicting rushing yards (\(R^2 = .44\)) is higher than the accuracy for predicting rushing touchdowns (\(R^2 = .12\)).

Here is the accuracy of the prediction of receiving touchdowns versus receiving yards among Wide Receivers:

Code

Code

The accuracy for predicting receiving yards (\(R^2 = .31\)) is higher than the accuracy for predicting rushing touchdowns (\(R^2 = .02\)) among Wide Receivers.

Here is the accuracy of the prediction of receiving touchdowns versus receiving yards among Tight Ends:

Code

Code

The accuracy for predicting receiving yards (\(R^2 = .26\)) is higher than the accuracy for predicting rushing touchdowns (\(R^2 = -.01\)) among Tight Ends.

In sum, the proportion of variance explained by the prediction (i.e., \(R^2\)) is much higher for passing/rushing/receiving yards than it is for touchdowns. This is consistent with the notion that prediction accuracy tends to be lower for lower base rate events (like touchdowns) compared to higher base rate events.

17.6.7 Sensitivity, Specificity, PPV, and NPV

As described earlier, percent accuracy is not the only important aspect of accuracy. Percent accuracy can be misleading because it is highly influenced by base rates. You can have a high percent accuracy by predicting from the base rate and saying that no one has the condition (if the base rate is low) or that everyone has the condition (if the base rate is high). Thus, it is also important to consider other aspects of accuracy, including sensitivity (SN), specificity (SP), positive predictive value (PPV), and negative predictive value (NPV). We want our predictions to be sensitive to be able to detect the characteristic but also to be specific so that we classify only people actually with the characteristic as having the characteristic.

Let us return to the confusion matrix in Figure 17.5. If we know the frequency of each of the four predicted-actual combinations of the confusion matrix (TP, TN, FP, FN), we can calculate sensitivity, specificity, PPV, and NPV.

Sensitivity is the proportion of those with the characteristic (\(\text{TP} + \text{FN}\)) that we identified with our measure (\(\text{TP}\)): \(\frac{\text{TP}}{\text{TP} + \text{FN}} = \frac{86}{86 + 14} = .86\). Specificity is the proportion of those who do not have the characteristic (\(\text{TN} + \text{FP}\)) that we correctly classify as not having the characteristic (\(\text{TN}\)): \(\frac{\text{TN}}{\text{TN} + \text{FP}} = \frac{1,478}{1,478 + 422} = .78\). PPV is the proportion of those who we classify as having the characteristic (\(\text{TP} + \text{FP}\)) who actually have the characteristic (\(\text{TP}\)): \(\frac{\text{TP}}{\text{TP} + \text{FP}} = \frac{86}{86 + 422} = .17\). NPV is the proportion of those we classify as not having the characteristic (\(\text{TN} + \text{FN}\)) who actually do not have the characteristic (\(\text{TN}\)): \(\frac{\text{TN}}{\text{TN} + \text{FN}} = \frac{1,478}{1,478 + 14} = .99\).

Sensitivity, specificity, PPV, and NPV are proportions, and their values therefore range from 0 to 1, where higher values reflect greater accuracy. With sensitivity, specificity, PPV, and NPV, we have a good snapshot of how accurate the measure is at a given cutoff. In our case, our measure is good at finding whom to reject (high sensitivity), but it is rejecting too many people who do not need to be rejected (lower PPV due to many FPs). Most people whom we classify as having the characteristic do not actually have the characteristic. However, the fact that we are over-rejecting could be okay depending on our goals, for instance, if we do not care about over-dropping (i.e., the PPV being low).

17.6.7.1 Some Accuracy Estimates Depend on the Cutoff

Sensitivity, specificity, PPV, and NPV differ based on the cutoff (i.e., threshold) for classification. Consider the following example. Aliens visit Earth, and they develop a test to determine whether a berry is edible or inedible.

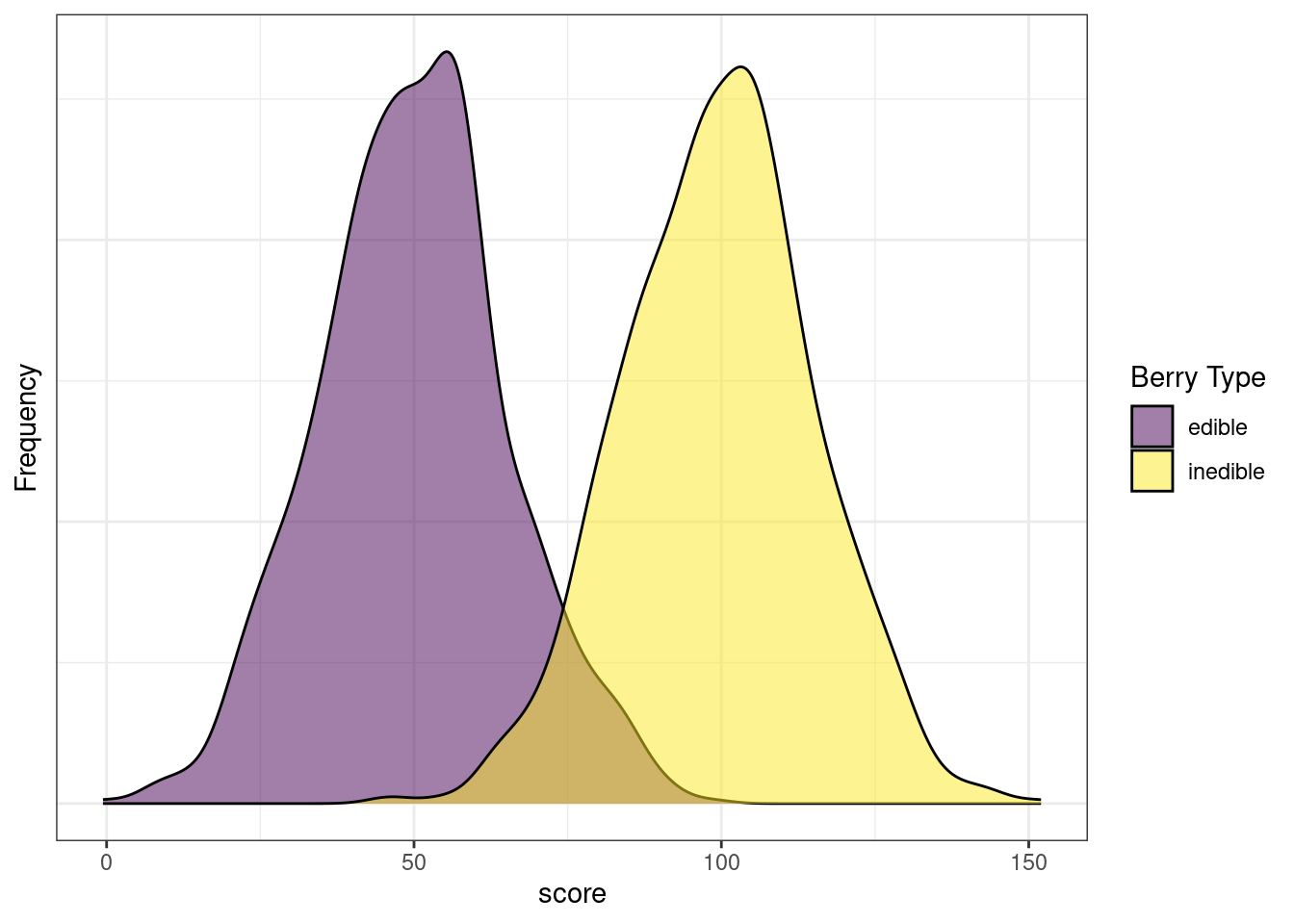

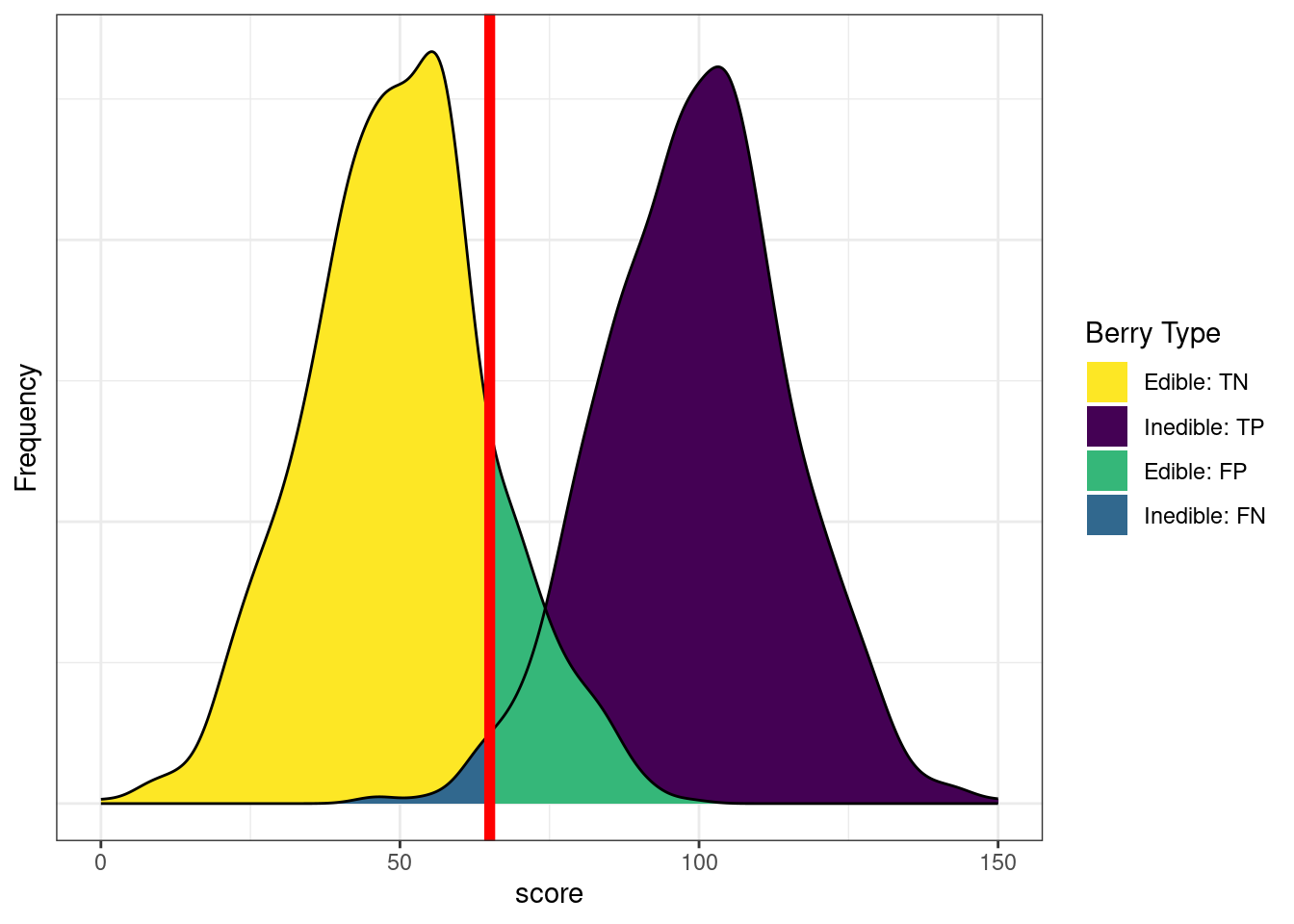

Figure 17.7 depicts the distributions of scores by berry type. Note how there are clearly two distinct distributions. However, the distributions overlap to some degree. Thus, any cutoff will have at least some inaccurate classifications. The extent of overlap of the distributions reflects the amount of measurement error of the measure with respect to the characteristic of interest.

Code

#No Cutoff

sampleSize <- 1000

edibleScores <- rnorm(sampleSize, 50, 15)

inedibleScores <- rnorm(sampleSize, 100, 15)

edibleData <- data.frame(

score = c(

edibleScores,

inedibleScores),

type = c(

rep("edible", sampleSize),

rep("inedible", sampleSize)))

cutoff <- 75

hist_edible <- density(

edibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x >= cutoff)

hist_edible$type[hist_edible$area == TRUE] <- "edible_FP"

hist_edible$type[hist_edible$area == FALSE] <- "edible_TN"

hist_inedible <- density(

inedibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x < cutoff)

hist_inedible$type[hist_inedible$area == TRUE] <- "inedible_FN"

hist_inedible$type[hist_inedible$area == FALSE] <- "inedible_TP"

density_data <- bind_rows(

hist_edible,

hist_inedible)

density_data$type <- factor(

density_data$type,

levels = c(

"edible_TN",

"inedible_TP",

"edible_FP",

"inedible_FN"))

ggplot(

data = edibleData,

aes(

x = score,

ymin = 0,

fill = type)) +

geom_density(alpha = .5) +

scale_fill_manual(

name = "Berry Type",

values = c(

viridis::viridis(2)[1],

viridis::viridis(2)[2])) +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(

axis.text.y = element_blank(),

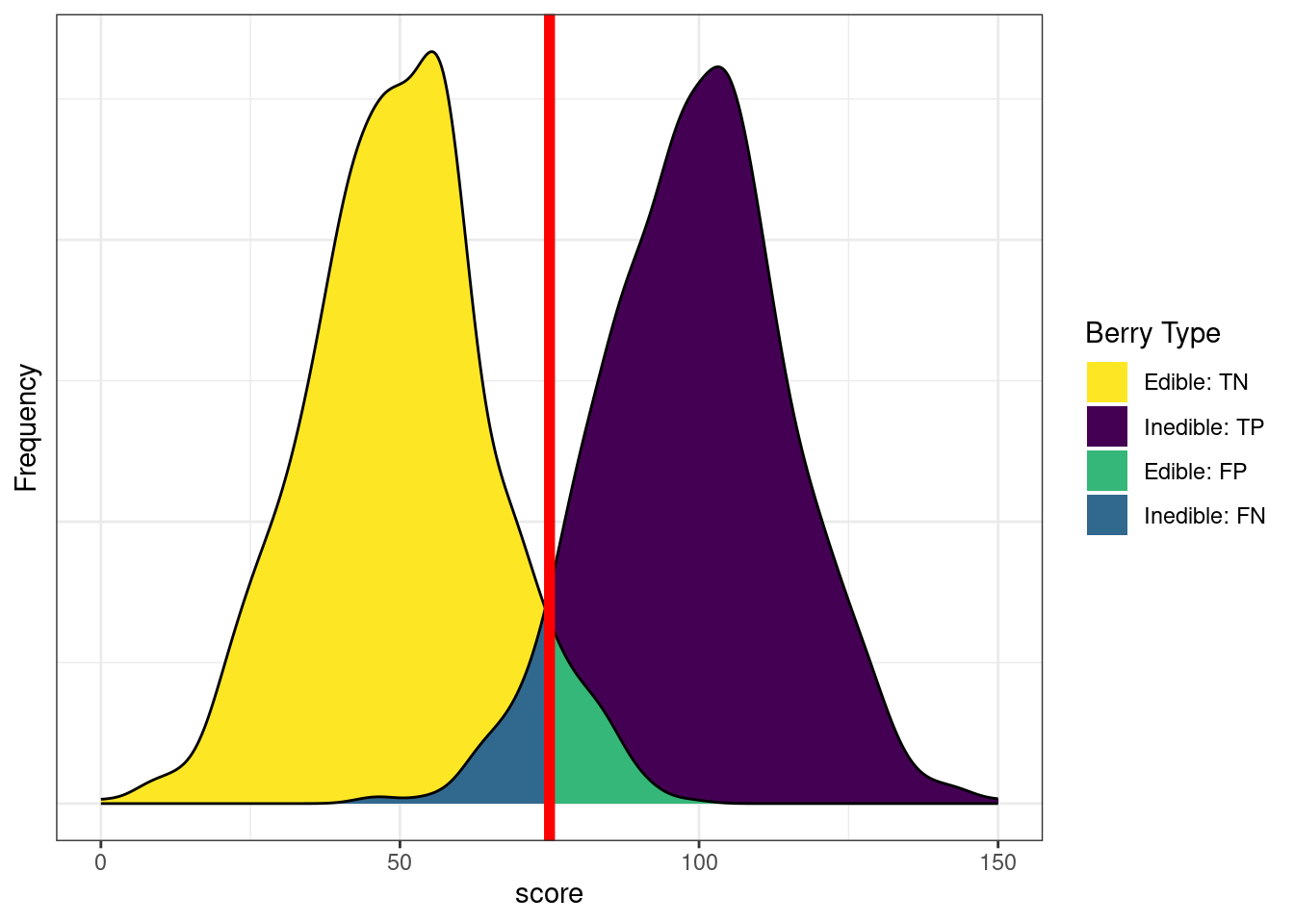

axis.ticks.y = element_blank())Figure 17.8 depicts the distributions of scores by berry type with a cutoff. The red line indicates the cutoff—the level above which berries are classified by the test as inedible. There are errors on each side of the cutoff. Below the cutoff, there are some false negatives (blue): inedible berries that are inaccurately classified as edible. Above the cutoff, there are some false positives (green): edible berries that are inaccurately classified as inedible. Costs of false negatives could include sickness or death from eating the inedible berries. Costs of false positives could include taking longer to find food, finding insufficient food, and starvation.

Code

#Standard Cutoff

ggplot(

data = density_data,

aes(

x = x,

ymin = 0,

ymax = y,

fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(

name = "Berry Type",

values = c(

viridis::viridis(4)[4],

viridis::viridis(4)[1],

viridis::viridis(4)[3],

viridis::viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(

xintercept = cutoff,

color = "red",

linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(

axis.text.y = element_blank(),

axis.ticks.y = element_blank())Based on our assessment goals, we might use a different selection ratio by changing the cutoff. Figure 17.9 depicts the distributions of scores by berry type when we raise the cutoff. There are now more false negatives (blue) and fewer false positives (green). If we raise the cutoff (to be more conservative), the number of false negatives increases and the number of false positives decreases. Consequently, as the cutoff increases, sensitivity and NPV decrease (because we have more false negatives), whereas specificity and PPV increase (because we have fewer false positives). A higher cutoff could be optimal if the costs of false positives are considered greater than the costs of false negatives. For instance, if the aliens cannot risk eating the inedible berries because the berries are fatal, and there are sufficient edible berries that can be found to feed the alien colony.

Code

#Raise the cutoff

cutoff <- 85

hist_edible <- density(

edibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x >= cutoff)

hist_edible$type[hist_edible$area == TRUE] <- "edible_FP"

hist_edible$type[hist_edible$area == FALSE] <- "edible_TN"

hist_inedible <- density(

inedibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x < cutoff)

hist_inedible$type[hist_inedible$area == TRUE] <- "inedible_FN"

hist_inedible$type[hist_inedible$area == FALSE] <- "inedible_TP"

density_data <- bind_rows(

hist_edible,

hist_inedible)

density_data$type <- factor(

density_data$type,

levels = c(

"edible_TN",

"inedible_TP",

"edible_FP",

"inedible_FN"))

ggplot(

data = density_data,

aes(

x = x,

ymin = 0,

ymax = y,

fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(

name = "Berry Type",

values = c(

viridis::viridis(4)[4],

viridis::viridis(4)[1],

viridis::viridis(4)[3],

viridis::viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(

xintercept = cutoff,

color = "red",

linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(

axis.text.y = element_blank(),

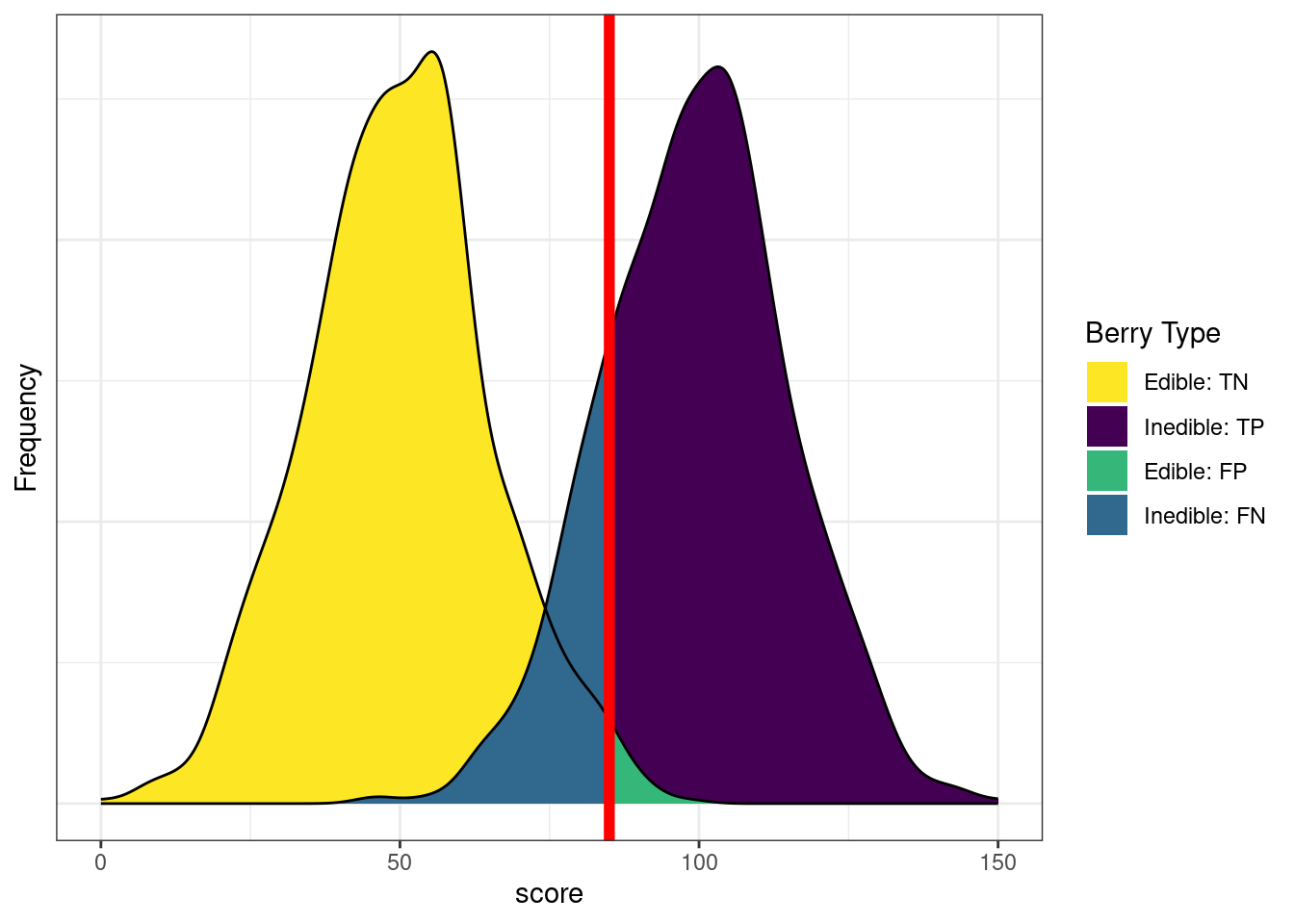

axis.ticks.y = element_blank())Figure 17.10 depicts the distributions of scores by berry type when we lower the cutoff. There are now fewer false negatives (blue) and more false positives (green). If we lower the cutoff (to be more liberal), the number of false negatives decreases and the number of false positives increases. Consequently, as the cutoff decreases, sensitivity and NPV increase (because we have fewer false negatives), whereas specificity and PPV decrease (because we have more false positives). A lower cutoff could be optimal if the costs of false negatives are considered greater than the costs of false positives. For instance, if the aliens cannot risk missing edible berries because they are in short supply relative to the size of the alien colony, and eating the inedible berries would, at worst, lead to minor, temporary discomfort.

Code

#Lower the cutoff

cutoff <- 65

hist_edible <- density(

edibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x >= cutoff)

hist_edible$type[hist_edible$area == TRUE] <- "edible_FP"

hist_edible$type[hist_edible$area == FALSE] <- "edible_TN"

hist_inedible <- density(

inedibleScores,

from = 0,

to = 150) %$% # exposition pipe magrittr::`%$%`

data.frame(

x = x,

y = y) %>%

mutate(area = x < cutoff)

hist_inedible$type[hist_inedible$area == TRUE] <- "inedible_FN"

hist_inedible$type[hist_inedible$area == FALSE] <- "inedible_TP"

density_data <- bind_rows(

hist_edible,

hist_inedible)

density_data$type <- factor(

density_data$type,

levels = c(

"edible_TN",

"inedible_TP",

"edible_FP",

"inedible_FN"))

ggplot(

data = density_data,

aes(

x = x,

ymin = 0,

ymax = y,

fill = type)) +

geom_ribbon(alpha = 1) +

scale_fill_manual(

name = "Berry Type",

values = c(

viridis::viridis(4)[4],

viridis::viridis(4)[1],

viridis::viridis(4)[3],

viridis::viridis(4)[2]),

breaks = c("edible_TN","inedible_TP","edible_FP","inedible_FN"),

labels = c("Edible: TN","Inedible: TP","Edible: FP","Inedible: FN")) +

geom_line(aes(y = y)) +

geom_vline(

xintercept = cutoff,

color = "red",

linewidth = 2) +

scale_x_continuous(name = "score") +

scale_y_continuous(name = "Frequency") +

theme_bw() +

theme(

axis.text.y = element_blank(),

axis.ticks.y = element_blank())In sum, sensitivity and specificity differ based on the cutoff for classification. If we raise the cutoff, sensitivity and PPV increase (due to fewer false positives), whereas sensitivity and NPV decrease (due to more false negatives). If we lower the cutoff, sensitivity and NPV increase (due to fewer false negatives), whereas specificity and PPV decrease (due to more false positives). Thus, the optimal cutoff depends on how costly each type of error is: false negatives and false positives. If false negatives are more costly than false positives, we would set a low cutoff. If false positives are more costly than false negatives, we would set a high cutoff.

17.6.8 Set a Cutoff/Threshold

For evaluating sensitivity, specificity, positive predictive value, negative predictive value, and the receiver operating characteristic (ROC) curve, we need to make a dichotomous decision/prediction. For this example, our goal is to predict which Running Backs or Wide Receivers will score 300 or more fantasy points. We will predict a player as scoring 300 or more fantasy points for a given projection source if that projection source projected the player to score 300 or more fantasy points. Thus, our cutoff or threshold is 300 or more projected points. The same player has multiple rows in the data file—one for each projection source.

Code

projectionsWithActuals_seasonal_subset <- projectionsWithActuals_seasonal %>%

filter(position_group %in% c("RB","WR")) %>%

filter(season == 2024)

projectionsWithActuals_seasonal_subset$prediction <- ifelse(projectionsWithActuals_seasonal_subset$raw_points >= 300, 1, 0)

projectionsWithActuals_seasonal_subset$truth <- ifelse(projectionsWithActuals_seasonal_subset$fantasyPoints >= 300, 1, 0)

projectionsWithActuals_seasonal_subset$predictionFactor <- factor(

projectionsWithActuals_seasonal_subset$prediction,

levels = c(1,0),

labels = c("Prediction: Will Score 300+ Points", "Prediction: Will Not Score 300+ Points"))

projectionsWithActuals_seasonal_subset$truthFactor <- factor(

projectionsWithActuals_seasonal_subset$truth,

levels = c(1,0),

labels = c("Truth: Scored 300+ Points", "Truth: Did Not Score 300+ Points")

)

table(projectionsWithActuals_seasonal_subset$predictionFactor)

Prediction: Will Score 300+ Points Prediction: Will Not Score 300+ Points

57 2115

Truth: Scored 300+ Points Truth: Did Not Score 300+ Points

81 2129 17.6.9 Signal Detection Theory

Signal detection theory (SDT) is a probability-based theory for the detection of a given stimulus (signal) from a stimulus set that includes non-target stimuli (noise). SDT arose through the development of radar (RAdio Detection And Ranging) and sonar (SOund Navigation And Ranging) in World War II based on research on sensory-perception research. The military wanted to determine which objects on radar/sonar were enemy aircraft/submarines, and which were noise (e.g., different object in the environment or even just the weather itself). SDT allowed determining how many errors operators made (how accurate they were) and decomposing errors into different kinds of errors. SDT distinguishes between sensitivity and bias. In SDT, sensitivity (or discriminability) is how well an assessment distinguishes between a target stimulus and non-target stimuli (i.e., how well the assessment detects the target stimulus amid non-target stimuli). Bias is the extent to which the probability of a selection decision from the assessment is higher or lower than the true rate of the target stimulus.

Some radar/sonar operators were not as sensitive to the differences between signal and noise, due to factors such as age, ability to distinguish gradations of a signal, etc. People who showed low sensitivity (i.e., who were not as successful at distinguishing between signal and noise) were screened out because the military perceived sensitivity as a skill that was not easily taught. By contrast, other operators could distinguish signal from noise, but their threshold was too low or high—they could take in information, but their decisions tended to be wrong due to systematic bias or poor calibration. That is, they systematically over-rejected or under-rejected stimuli. Over-rejecting leads to many false negatives (i.e., saying that a stimulus is safe when it is not). Under-rejecting leads to many false positives (i.e., saying that a stimulus is harmful when it is not). A person who showed good sensitivity but systematic bias was considered more teach-able than a person who showed low sensitivity. Thus, radar and sonar operators were selected based on their sensitivity to distinguish signal from noise, and then were trained to improve the calibration so they reduce their systematic bias and do not systematically over- or under-reject.

Although SDT was originally developed for use in World War II, it now plays an important role in many areas of science and medicine. A medical application of SDT is tumor detection in radiology. Another application of SDT in society is using x-ray to detect bombs or other weapons. An example of applying SDT to fantasy football could be in the prediction (and evaluation) of whether or not a player scores a touchdown in a game.

SDT metrics of sensitivity include \(d'\) (“\(d\)-prime”), \(A\) (or \(A'\)), and the area under the receiver operating characteristic (ROC) curve. SDT metrics of bias include \(\beta\) (beta), \(c\), and \(b\).

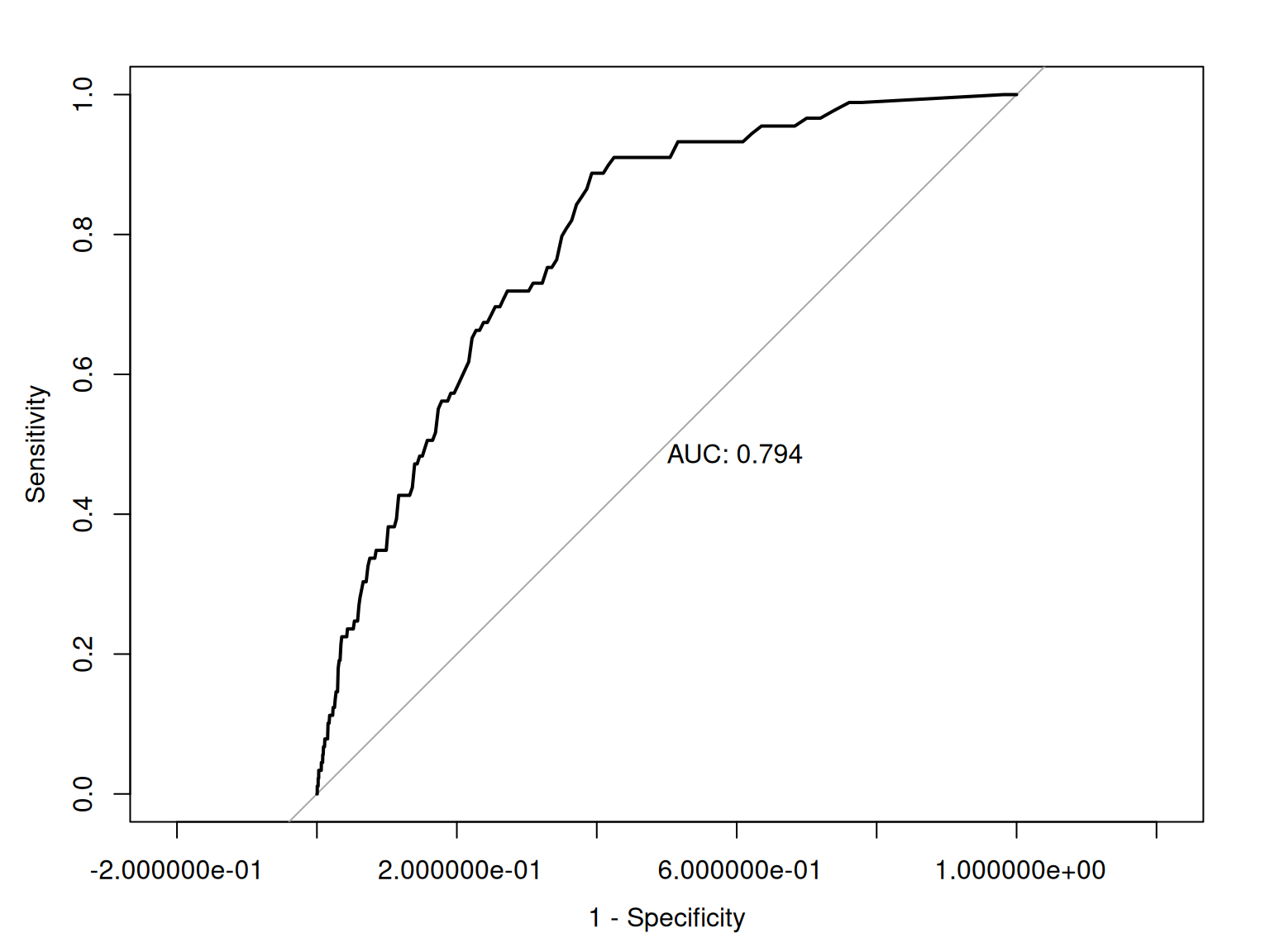

17.6.9.1 Receiver Operating Characteristic (ROC) Curve

The x-axis of the ROC curve is the false alarm rate or false positive rate (\(1 -\) specificity). The y-axis is the hit rate or true positive rate (sensitivity). We can trace the ROC curve as the combination between sensitivity and specificity at every possible cutoff. At a cutoff of zero (top right of ROC curve), we calculate sensitivity (1.0) and specificity (0) and plot it. At a cutoff of zero, the assessment tells us to make an action for every stimulus (i.e., it is the most liberal). We then gradually increase the cutoff, and plot sensitivity and specificity at each cutoff. As the cutoff increases, sensitivity decreases and specificity increases. We end at the highest possible cutoff, where the sensitivity is 0 and the specificity is 1.0 (i.e., we never make an action; i.e., it is the most conservative). Each point on the ROC curve corresponds to a pair of hit and false alarm rates (sensitivity and specificity) resulting from a specific cutoff value. Then, we can draw lines or a curve to connect the points.

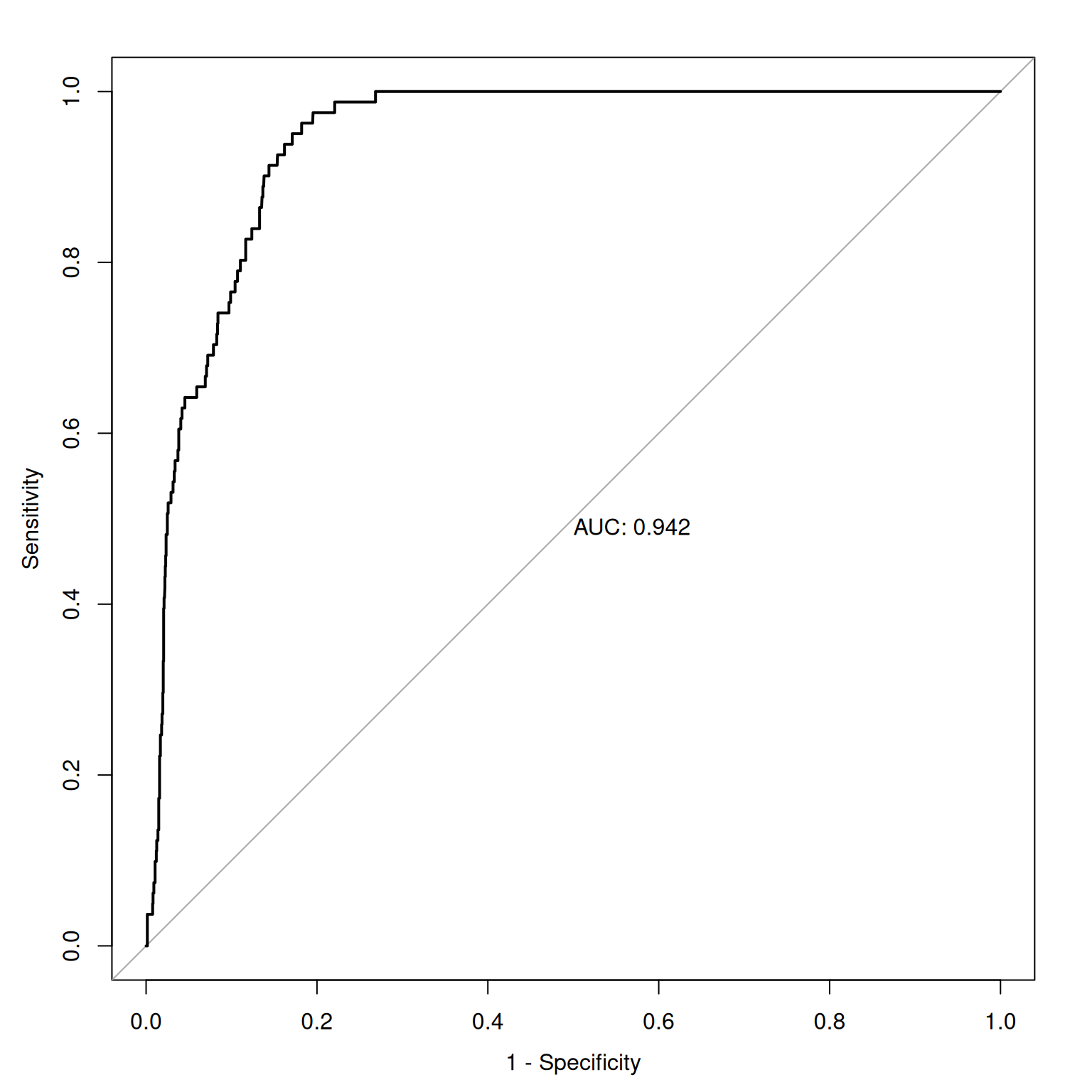

Figure 17.11 depicts an empirical ROC plot where lines are drawn to connect the hit and false alarm rates, in predicting Running Backs and Wide Receivers who score 300 or more fantasy points.

Code

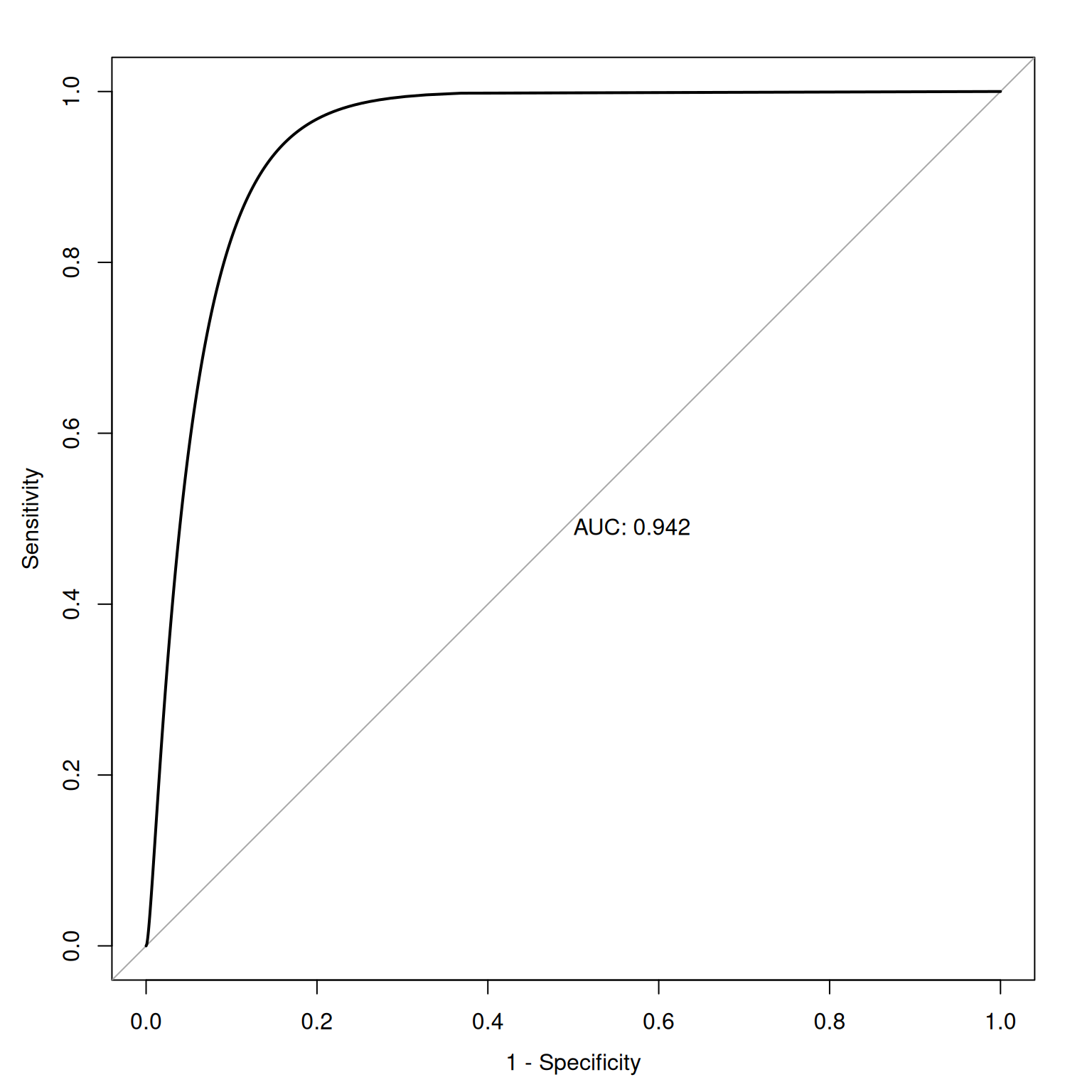

Figure 17.12 depicts an ROC curve where a smoothed and fitted curve is drawn to connect the hit and false alarm rates, in predicting Running Backs and Wide Receivers who score 300 or more fantasy points.

Code

17.6.9.1.1 Area Under the ROC Curve

ROC methods can be used to compare and compute the discriminative power of measurement devices free from the influence of selection ratios, base rates, and costs and benefits. An ROC analysis yields a quantitative index of how well an index predicts a signal of interest or can discriminate between different signals. ROC analysis can help tell us how often our assessment would be correct. If we randomly pick two observations, and we were right once and wrong once, we were 50% accurate. But this would be a useless measure because it reflects chance responding.

The geometrical area under the ROC curve reflects the discriminative accuracy of the measure. The index is called the area under the curve (AUC) of an ROC curve. AUC quantifies the discriminative power of an assessment. AUC is the probability that a randomly selected target and a randomly selected non-target is ranked correctly by the assessment method. AUC values range from 0.0 to 1.0, where chance accuracy is 0.5 as indicated by diagonal line in the ROC curve. That is, a measure can be useful to the extent that its ROC curve is above the diagonal line (i.e., its discriminative accuracy is above chance).

AUC is a threshold-independent accuracy index that applies across all possible cutoff values.

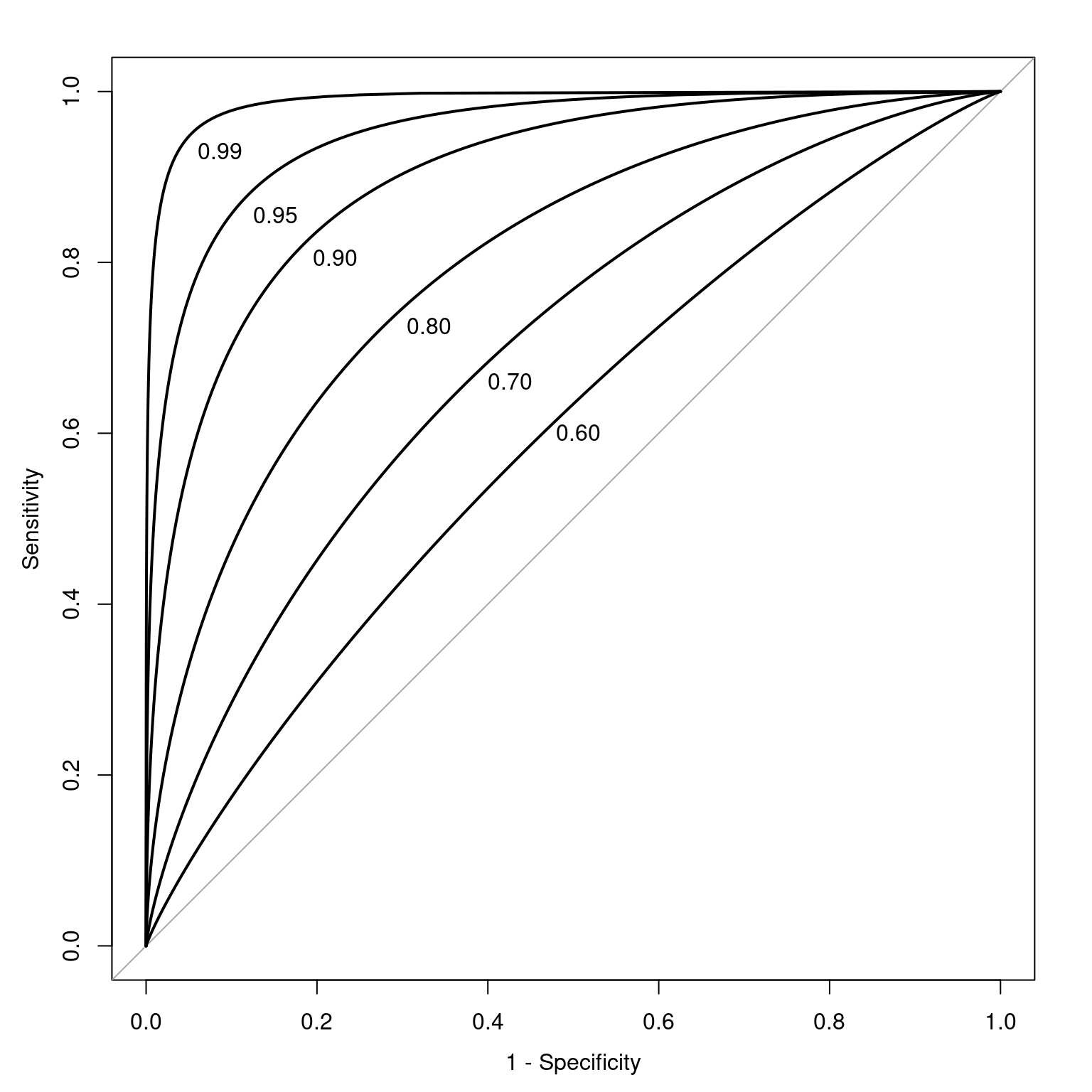

Figure 17.13 depicts ROC curves with a range of AUC values.

Code

set.seed(52242)

auc60 <- petersenlab::simulateAUC(.60, 50000)

auc70 <- petersenlab::simulateAUC(.70, 50000)

auc80 <- petersenlab::simulateAUC(.80, 50000)

auc90 <- petersenlab::simulateAUC(.90, 50000)

auc95 <- petersenlab::simulateAUC(.95, 50000)

auc99 <- petersenlab::simulateAUC(.99, 50000)

plot(

pROC::roc(

y ~ x,

auc60,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .52,

print.auc.y = .61,

print.auc.pattern = "%.2f")

plot(

pROC::roc(

y ~ x,

auc70,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .6,

print.auc.y = .67,

print.auc.pattern = "%.2f",

add = TRUE)

plot(

pROC::roc(

y ~ x,

auc80,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .695,

print.auc.y = .735,

print.auc.pattern = "%.2f",

add = TRUE)

plot(

pROC::roc(

y ~ x,

auc90,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .805,

print.auc.y = .815,

print.auc.pattern = "%.2f",

add = TRUE)

plot(

pROC::roc(

y ~ x,

auc95,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .875,

print.auc.y = .865,

print.auc.pattern = "%.2f",

add = TRUE)

plot(

pROC::roc(

y ~ x,

auc99,

smooth = TRUE),

legacy.axes = TRUE,

print.auc = TRUE,

print.auc.x = .94,

print.auc.y = .94,

print.auc.pattern = "%.2f",

add = TRUE)As an example, given an AUC of .75, this says that the overall score of an individual who has the characteristic in question will be higher 75% of the time than the overall score of an individual who does not have the characteristic. In lay terms, AUC provides the probability that we will classify correctly based on our instrument if we were to randomly pick one good and one bad outcome. AUC is a stronger index of accuracy than percent accuracy, because you can have high percent accuracy just by going with the base rate. AUC tells us how much better than chance a measure is at discriminating outcomes. AUC is useful as a measure of general discriminative accuracy, and it tells us how accurate a measure is at all possible cutoffs. Knowing the accuracy of a measure at all possible cutoffs can be helpful for selecting the optimal cutoff, given the goals of the assessment. In reality, however, we may not be interested in all cutoffs because not all errors are equal in their costs.

If we lower the base rate, we would need a larger sample to get enough people to classify into each group. SDT/ROC methods are traditionally about dichotomous decisions (yes/no), not graded judgments. SDT/ROC methods can get messy with ordinal data that are more graded because you would have an AUC curve for each ordinal grouping.

17.6.10 Accuracy Indices

There are various accuracy indices we can use to evaluate the accuracy of predictions for categorical outcome variables. We have already described several accuracy indices, including percent accuracy, sensitivity, specificity, positive predictive value, negative predictive value, and area under the ROC curve. We describe these and other indices in greater detail below.

The petersenlab package (Petersen, 2025a) contains the petersenlab::accuracyAtCutoff() function that computes many accuracy indices for the prediction of categorical outcome variables. In the example below, we compute the accuracy of predictions for which Running Backs or Wide Receivers will score 300 or more fantasy points, using a cutoff for projected fantasy points of 300.

Code

The petersenlab package (Petersen, 2025a) contains the petersenlab::accuracyAtEachCutoff() function that computes many accuracy indices for the prediction of categorical outcome variables at each possible cutoff. In the example below, we compute the accuracy of predictions for which Running Backs or Wide Receivers will score 300 or more fantasy points, at each possible cutoff for projected fantasy points.

Code

There are also test calculators available online:

- http://araw.mede.uic.edu/cgi-bin/testcalc.pl [Schwartz (2006); archived at https://perma.cc/X8TF-7YBX]

- https://dlrs.shinyapps.io/shinyDLRs (Goodman et al., 2022)

17.6.10.1 Confusion Matrix aka 2x2 Accuracy Table aka Cross-Tabulation aka Contingency Table

A confusion matrix (aka 2x2 accuracy table, cross-tabulation table, or contigency table) is a matrix for categorical data that presents the predicted outcome on one dimension and the actual outcome (truth) on the other dimension. If the predictions and outcomes are dichotomous, the confusion matrix is a 2x2 matrix with two rows and two columns that represent four possible predicted-actual combinations (decision outcomes). In such a case, the confusion matrix provides a tabular count of each type of accurate cases (true positives and true negatives) versus the number of each type of error (false positives and false negatives), as shown in Figure 17.2. An example of a confusion matrix is in Figure 17.3.

Here is our 2x2 confusion matrix, predicting which Running Backs or Wide Receivers will score 300 or more fantasy points.

Code

projectionsWithActuals_seasonal_subset <- projectionsWithActuals_seasonal %>%

filter(position_group %in% c("RB","WR")) %>%

filter(season == 2024)

projectionsWithActuals_seasonal_subset$prediction <- ifelse(projectionsWithActuals_seasonal_subset$raw_points >= 300, 1, 0)

projectionsWithActuals_seasonal_subset$truth <- ifelse(projectionsWithActuals_seasonal_subset$fantasyPoints >= 300, 1, 0)

projectionsWithActuals_seasonal_subset$predictionFactor <- factor(

projectionsWithActuals_seasonal_subset$prediction,

levels = c(1,0),

labels = c("Prediction: Will Score 300+ Points", "Prediction: Will Not Score 300+ Points"))

projectionsWithActuals_seasonal_subset$truthFactor <- factor(

projectionsWithActuals_seasonal_subset$truth,

levels = c(1,0),

labels = c("Truth: Scored 300+ Points", "Truth: Did Not Score 300+ Points")

)

table(projectionsWithActuals_seasonal_subset$predictionFactor)

Prediction: Will Score 300+ Points Prediction: Will Not Score 300+ Points

57 2115

Truth: Scored 300+ Points Truth: Did Not Score 300+ Points

81 2129 17.6.10.1.1 Number

Code

Truth: Scored 300+ Points

Prediction: Will Score 300+ Points 20

Prediction: Will Not Score 300+ Points 61

Truth: Did Not Score 300+ Points

Prediction: Will Score 300+ Points 37

Prediction: Will Not Score 300+ Points 205417.6.10.1.2 Number With Margins Added

Code

Truth: Scored 300+ Points

Prediction: Will Score 300+ Points 20

Prediction: Will Not Score 300+ Points 61

Sum 81

Truth: Did Not Score 300+ Points Sum

Prediction: Will Score 300+ Points 37 57

Prediction: Will Not Score 300+ Points 2054 2115

Sum 2091 217217.6.10.1.3 Proportions

Code

Truth: Scored 300+ Points

Prediction: Will Score 300+ Points 0.009208103

Prediction: Will Not Score 300+ Points 0.028084715

Truth: Did Not Score 300+ Points

Prediction: Will Score 300+ Points 0.017034991

Prediction: Will Not Score 300+ Points 0.94567219217.6.10.1.4 Proportions With Margins Added

Code

Truth: Scored 300+ Points

Prediction: Will Score 300+ Points 0.009208103

Prediction: Will Not Score 300+ Points 0.028084715

Sum 0.037292818

Truth: Did Not Score 300+ Points

Prediction: Will Score 300+ Points 0.017034991

Prediction: Will Not Score 300+ Points 0.945672192

Sum 0.962707182

Sum

Prediction: Will Score 300+ Points 0.026243094

Prediction: Will Not Score 300+ Points 0.973756906

Sum 1.00000000017.6.10.2 True Positives (TP)

True positives (TPs) are instances in which a positive classification (e.g., stating that a disease is present for a person) is correct—that is, the test says that a classification is present, and the classification is present. True positives are also called valid positives (VPs) or hits. Higher values reflect greater accuracy. The formula for true positives is in Equation 17.4:

\[ \begin{aligned} \text{TP} &= \text{BR} \times \text{SR} \times N \end{aligned} \tag{17.4}\]

17.6.10.3 True Negatives (TN)

True negatives (TNs) are instances in which a negative classification (e.g., stating that a disease is absent for a person) is correct—that is, the test says that a classification is not present, and the classification is actually not present. True negatives are also called valid negatives (VNs) or correct rejections. Higher values reflect greater accuracy. The formula for true negatives is in Equation 17.5:

\[ \begin{aligned} \text{TN} &= (1 - \text{BR}) \times (1 - \text{SR}) \times N \end{aligned} \tag{17.5}\]

17.6.10.4 False Positives (FP)

False positives (FPs) are instances in which a positive classification (e.g., stating that a disease is present for a person) is incorrect—that is, the test says that a classification is present, and the classification is not present. False positives are also called false alarms (FAs). Lower values reflect greater accuracy. The formula for false positives is in Equation Equation 17.6:

\[ \begin{aligned} \text{FP} &= (1 - \text{BR}) \times \text{SR} \times N \end{aligned} \tag{17.6}\]

17.6.10.5 False Negatives (FN)

False negatives (FNs) are instances in which a negative classification (e.g., stating that a disease is absent for a person) is incorrect—that is, the test says that a classification is not present, and the classification is present. False negatives are also called misses. Lower values reflect greater accuracy. The formula for false negatives is in Equation 17.7:

\[ \begin{aligned} \text{FN} &= \text{BR} \times (1 - \text{SR}) \times N \end{aligned} \tag{17.7}\]

17.6.10.6 Selection Ratio (SR)

The selection ratio (SR) is the marginal probability of selection, independent of other things: \(P(R_i)\). It is not an index of accuracy, per se. In medicine, the selection ratio is the proportion of people who test positive for the disease. In fantasy football, the selection ratio is the proportion of players who you predict will show a given outcome. For example, if you are trying to predict the players who will score a touchdown in a game, the selection ratio is the proportion of players who you predict will score a touchdown. The formula for calculating the selection ratio is in Equation 17.8.

\[ \begin{aligned} \text{SR} &= P(R_i) \\ &= \frac{\text{TP} + \text{FP}}{N} \end{aligned} \tag{17.8}\]

17.6.10.7 Base Rate (BR)

The base rate (BR) of a classification is its marginal probability, independent of other things: \(P(C_i)\). It is not an index of accuracy, per se. In medicine, the base rate of a disease is its prevalence in the population, as in Equation 17.9. Without additional information, the base rate is used as the initial pretest probability. In fantasy football, the base rate is the proportion of players who actually show the particular outcome. For example, if you are trying to predict the players who will score a touchdown in a game, the base rate is the proportion of players who actually score a touchdown in the game. The formula for calculating the selection ratio is in Equation 17.9.

\[ \begin{aligned} \text{BR} &= P(C_i) \\ &= \frac{\text{TP} + \text{FN}}{N} \end{aligned} \tag{17.9}\]

17.6.10.8 Pretest Odds

The pretest odds of a classification can be estimated using the pretest probability (i.e., base rate). To convert a probability to odds, divide the probability by one minus that probability, as in Equation 17.10.

\[ \begin{aligned} \text{pretest odds} &= \frac{\text{pretest probability}}{1 - \text{pretest probability}} \\ \end{aligned} \tag{17.10}\]

17.6.10.9 Percent Accuracy

Percent Accuracy is also called overall accuracy. Higher values reflect greater accuracy. The formula for percent accuracy is in Equation 17.11. Percent accuracy has several problems. First, it treats all errors (FP and FN) as equally important. However, in practice, it is rarely the case that false positives and false negatives are equally important. Second, percent accuracy can be misleading because it is highly influenced by base rates. You can have a high percent accuracy by predicting from the base rate and saying that no one has the characteristic (if the base rate is low) or that everyone has the characteristic (if the base rate is high). Thus, it is also important to consider other aspects of accuracy.

\[ \text{Percent Accuracy} = 100\% \times \frac{\text{TP} + \text{TN}}{N} \tag{17.11}\]

17.6.10.10 Percent Accuracy by Chance

The formula for calculating percent accuracy by chance is in Equation 17.12.

\[ \begin{aligned} \text{Percent Accuracy by Chance} &= 100\% \times [P(\text{TP}) + P(\text{TN})] \\ &= 100\% \times \{(\text{BR} \times {\text{SR}}) + [(1 - \text{BR}) \times (1 - \text{SR})]\} \end{aligned} \tag{17.12}\]

17.6.10.11 Percent Accuracy Predicting from the Base Rate

Predicting from the base rate is going with the most likely outcome in every prediction. If the base rate is less than .50, it would involve predicting that the condition is absent for every case. If the base rate is .50 or above, it would involve predicting that the condition is present for every case. Predicting from the base rate is a special case of percent accuracy by chance when the selection ratio is set to either one (if the base rate \(\geq\) .5) or zero (if the base rate < .5).

17.6.10.12 Relative Improvement Over Chance (RIOC)

Relative improvement over chance (RIOC) is a prediction’s improvement over chance as a proportion of the maximum possible improvement over chance, as described by Farrington & Loeber (1989). Higher values reflect greater accuracy. The formula for calculating RIOC is in Equation 17.13.

\[ \begin{aligned} \text{relative improvement over chance (RIOC)} &= \frac{\text{total correct} - \text{chance correct}}{\text{maximum correct} - \text{chance correct}} \\ \end{aligned} \tag{17.13}\]

17.6.10.13 Relative Improvement Over Predicting from the Base Rate

Relative improvement over predicting from the base rate is a prediction’s improvement over predicting from the base rate as a proportion of the maximum possible improvement over predicting from the base rate. Higher values reflect greater accuracy. The formula for calculating relative improvement over predicting from the base rate is in Equation 17.14.

\[ \scriptsize \begin{aligned} \text{relative improvement over predicting from base rate} &= \frac{\text{total correct} - \text{correct by predicting from base rate}}{\text{maximum correct} - \text{correct by predicting from base rate}} \\ \end{aligned} \tag{17.14}\]

17.6.10.14 Sensitivity (SN)

Sensitivity (SN) is also called true positive rate (TPR), hit rate (HR), or recall. Sensitivity is the conditional probability of a positive test given that the person has the condition: \(P(R|C)\). Higher values reflect greater accuracy. The formula for calculating sensitivity is in Equation 17.15. As described in Section 17.6.7.1, as the cutoff increases (becomes more conservative), sensitivity decreases. As the cutoff decreases, sensitivity increases.

\[ \begin{aligned} \text{sensitivity (SN)} &= P(R|C) \\ &= \frac{\text{TP}}{\text{TP} + \text{FN}} = \frac{\text{TP}}{N \times \text{BR}} = 1 - \text{FNR} \end{aligned} \tag{17.15}\]

17.6.10.15 Specificity (SP)

Specificity (SP) is also called true negative rate (TNR) or selectivity. Specificity is the conditional probability of a negative test given that the person does not have the condition: \(P(\text{not } R|\text{not } C)\). Higher values reflect greater accuracy. The formula for calculating specificity is in Equation 17.16. As described in Section 17.6.7.1, as the cutoff increases (becomes more conservative), specificity increases. As the cutoff decreases, specificity decreases.

\[ \begin{aligned} \text{specificity (SP)} &= P(\text{not } R|\text{not } C) \\ &= \frac{\text{TN}}{\text{TN} + \text{FP}} = \frac{\text{TN}}{N (1 - \text{BR})} = 1 - \text{FPR} \end{aligned} \tag{17.16}\]

17.6.10.16 False Negative Rate (FNR)

The false negative rate (FNR) is also called the miss rate. The false negative rate is the conditional probability of a negative test given that the person has the condition: \(P(\text{not } R|C)\). Lower values reflect greater accuracy. The formula for calculating false negative rate is in Equation 17.17.

\[ \begin{aligned} \text{false negative rate (FNR)} &= P(\text{not } R|C) \\ &= \frac{\text{FN}}{\text{FN} + \text{TP}} = \frac{\text{FN}}{N \times \text{BR}} = 1 - \text{TPR} \end{aligned} \tag{17.17}\]

17.6.10.17 False Positive Rate (FPR)

The false positive rate (FPR) is also called the false alarm rate (FAR) or fall-out. The false positive rate is the conditional probability of a positive test given that the person does not have the condition: \(P(R|\text{not } C)\). Lower values reflect greater accuracy. The formula for calculating false positive rate is in Equation 17.18:

\[ \begin{aligned} \text{false positive rate (FPR)} &= P(R|\text{not } C) \\ &= \frac{\text{FP}}{\text{FP} + \text{TN}} = \frac{\text{FP}}{N (1 - \text{BR})} = 1 - \text{TNR} \end{aligned} \tag{17.18}\]

17.6.10.18 Positive Predictive Value (PPV)

The positive predictive value (PPV) is also called the positive predictive power (PPP) or precision. Many people confuse sensitivity (\(P(R|C)\)) with its inverse conditional probability, PPV (\(P(C|R)\)). PPV is the conditional probability of having the condition given a positive test: \(P(C|R)\). Higher values reflect greater accuracy. The formula for calculating positive predictive value is in Equation 17.19.

PPV can be low even when sensitivity is high because it depends not only on sensitivity, but also on specificity and the base rate. Because PPV depends on the base rate, PPV is not an intrinsic property of a measure. The same measure will have a different PPV in different contexts with different base rates (Treat & Viken, 2023). As described in Section 17.6.7.1, as the base rate increases, PPV increases. As the base rate decreases, PPV decreases. PPV also differs as a function of the cutoff. As described in Section 17.6.7.1, as the cutoff increases (becomes more conservative), PPV increases. As the cutoff decreases (becomes more liberal), PPV decreases.

\[ \small \begin{aligned} \text{positive predictive value (PPV)} &= P(C|R) \\ &= \frac{\text{TP}}{\text{TP} + \text{FP}} = \frac{\text{TP}}{N \times \text{SR}}\\ &= \frac{\text{sensitivity} \times {\text{BR}}}{\text{sensitivity} \times {\text{BR}} + [(1 - \text{specificity}) \times (1 - \text{BR})]} \end{aligned} \tag{17.19}\]

17.6.10.19 Negative Predictive Value (NPV)

The negative predictive value (NPV) is also called the negative predictive power (NPP). Many people confuse specificity (\(P(\text{not } R|\text{not } C)\)) with its inverse conditional probability, NPV (\(P(\text{not } C| \text{not } R)\)). NPV is the conditional probability of not having the condition given a negative test: \(P(\text{not } C| \text{not } R)\). Higher values reflect greater accuracy. The formula for calculating negative predictive value is in Equation 17.20.

NPV can be low even when specificity is high because it depends not only on specificity, but also on sensitivity and the base rate. Because NPV depends on the base rate, NPV is not an intrinsic property of a measure. The same measure will have a different NPV in different contexts with different base rates (Treat & Viken, 2023). As described in Section 17.6.7.1, as the base rate increases, NPV decreases. As the base rate decreases, NPV increases. NPV also differs as a function of the cutoff. As described in Section 17.6.7.1, as the cutoff increases (becomes more conservative), NPV decreases. As the cutoff decreases (becomes more liberal), NPV decreases.

\[ \small \begin{aligned} \text{negative predictive value (NPV)} &= P(\text{not } C|\text{not } R) \\ &= \frac{\text{TN}}{\text{TN} + \text{FN}} = \frac{\text{TN}}{N(\text{1 - SR})}\\ &= \frac{\text{specificity} \times (1-{\text{BR}})}{\text{specificity} \times (1-{\text{BR}}) + [(1 - \text{sensitivity}) \times \text{BR})]} \end{aligned} \tag{17.20}\]

17.6.10.20 False Discovery Rate (FDR)

Many people confuse the false positive rate (\(P(R|\text{not } C)\)) with its inverse conditional probability, the false discovery rate (\(P(\text{not } C| R)\)). The false discovery rate (FDR) is the conditional probability of not having the condition given a positive test: \(P(\text{not } C| R)\). Lower values reflect greater accuracy. The formula for calculating false discovery rate is in Equation 17.21.

\[ \begin{aligned} \text{false discovery rate (FDR)} &= P(\text{not } C|R) \\ &= \frac{\text{FP}}{\text{FP} + \text{TP}} = 1 - \text{PPV} \end{aligned} \tag{17.21}\]

17.6.10.21 False Omission Rate (FOR)

Many people confuse the false negative rate (\(P(\text{not } R|C)\)) with its inverse conditional probability, the false omission rate (\(P(C|\text{not } R)\)). The false omission rate (FOR) is the conditional probability of having the condition given a negative test: \(P(C|\text{not } R)\). Lower values reflect greater accuracy. The formula for calculating false omission rate is in Section 17.6.10.21.

\[ \begin{aligned} \text{false omission rate (FOR)} &= P(C|\text{not } R) \\ &= \frac{\text{FN}}{\text{FN} + \text{TN}} = 1 - \text{NPV} \end{aligned} \tag{17.22}\]

17.6.10.22 Youden’s J Statistic

Youden’s J statistic is also called Youden’s Index or informedness. Youden’s J statistic is the sum of sensitivity and specificity (and subtracting one). Higher values reflect greater accuracy. The formula for calculating Youden’s J statistic is in Equation 17.23.

\[ \begin{aligned} \text{Youden's J statistic} &= \text{sensitivity} + \text{specificity} - 1 \end{aligned} \tag{17.23}\]

17.6.10.23 Balanced Accuracy

Balanced accuracy is the average of sensitivity and specificity. Higher values reflect greater accuracy. The formula for calculating balanced accuracy is in Equation 17.24.

\[ \begin{aligned} \text{balanced accuracy} &= \frac{\text{sensitivity} + \text{specificity}}{2} \end{aligned} \tag{17.24}\]

17.6.10.24 F-Score

The F-score combines precision (positive predictive value) and recall (sensitivity), where \(\beta\) indicates how many times more important sensitivity is than the positive predictive value. If sensitivity and the positive predictive value are equally important, \(\beta = 1\), and the F-score is called the \(F_1\) score. Higher values reflect greater accuracy. The formula for calculating the F-score is in Equation 17.25.

\[ \begin{aligned} F_\beta &= (1 + \beta^2) \cdot \frac{\text{positive predictive value} \cdot \text{sensitivity}}{(\beta^2 \cdot \text{positive predictive value}) + \text{sensitivity}} \\ &= \frac{(1 + \beta^2) \cdot \text{TP}}{(1 + \beta^2) \cdot \text{TP} + \beta^2 \cdot \text{FN} + \text{FP}} \end{aligned} \tag{17.25}\]

The formula for calculating the \(F_1\) score is in Equation 17.26.

\[ \begin{aligned} F_1 &= \frac{2 \cdot \text{positive predictive value} \cdot \text{sensitivity}}{(\text{positive predictive value}) + \text{sensitivity}} \\ &= \frac{2 \cdot \text{TP}}{2 \cdot \text{TP} + \text{FN} + \text{FP}} \end{aligned} \tag{17.26}\]

17.6.10.25 Matthews Correlation Coefficient (MCC)

The Matthews correlation coefficient (MCC) is also called the phi coefficient. It is a correlation coefficient between predicted and observed values from a binary classification. Higher values reflect greater accuracy. The formula for calculating the MCC is in Equation 17.27.

\[ \begin{aligned} \text{MCC} &= \frac{\text{TP} \times \text{TN} - \text{FP} \times \text{FN}}{\sqrt{(\text{TP} + \text{FP})(\text{TP} + \text{FN})(\text{TN} + \text{FP})(\text{TN} + \text{FN})}} \end{aligned} \tag{17.27}\]

17.6.10.26 Diagnostic Odds Ratio

The diagnostic odds ratio is the odds of a positive test among people with the condition relative to the odds of a positive test among people without the condition. Higher values reflect greater accuracy. The formula for calculating the diagnostic odds ratio is in Equation 17.28. If the predictor is bad, the diagnostic odds ratio could be less than one, and values can go up from there. If the diagnostic odds ratio is greater than 2, we take the odds ratio seriously because we are twice as likely to predict accurately than inaccurately. However, the diagnostic odds ratio ignores/hides base rates. When interpreting the diagnostic odds ratio, it is important to keep in mind the practical significance, because otherwise it is not very meaningful. Consider a risk factor that has a diagnostic odds ratio of 3 for tuberculosis, i.e., it puts you at 3 times as likely to develop tuberculosis. The prevalence of tuberculosis is relatively low. Assuming the prevalence of tuberculosis is less than 1/10th of 1%, your risk of developing tuberculosis is still very low even if the risk factor (with a diagnostic odds ratio of 3) is present.

\[ \begin{aligned} \text{diagnostic odds ratio} &= \frac{\text{TP} \times \text{TN}}{\text{FP} \times \text{FN}} \\ &= \frac{\text{sensitivity} \times \text{specificity}}{(1 - \text{sensitivity}) \times (1 - \text{specificity})} \\ &= \frac{\text{PPV} \times \text{NPV}}{(1 - \text{PPV}) \times (1 - \text{NPV})} \\ &= \frac{\text{LR+}}{\text{LR}-} \end{aligned} \tag{17.28}\]

17.6.10.27 Diagnostic Likelihood Ratio

The diagnostic likelihood ratio is described in Section 16.8.2.1. There are two types of diagnostic likelihood ratios: the positive likelihood ratio and the negative likelihood ratio.

17.6.10.27.1 Positive Likelihood Ratio (LR+)

The positive likelihood ratio (LR+) is described in Section 16.8.2.1.1. The formula for calculating the positive likelihood ratio is in Equation 16.22.

17.6.10.27.2 Negative Likelihood Ratio (LR−)

The negative likelihood ratio (LR−) is described in Section 16.8.2.1.2. The formula for calculating the negative likelihood ratio is in Equation 16.22.

17.6.10.28 Posttest Odds

As presented in Equation 16.21, the posttest (or posterior) odds are equal to the pretest odds multiplied by the likelihood ratio. The posttest odds and posttest probability can be useful to calculate when the pretest probability is different from the pretest probability (or prevalence) of the classification. For instance, you might use a different pretest probability if a test result is already known and you want to know the updated posttest probability after conducting a second test. The formula for calculating posttest odds is in Equation 17.29.

\[ \begin{aligned} \text{posttest odds} &= \text{pretest odds} \times \text{likelihood ratio} \\ \end{aligned} \tag{17.29}\]

For calculating the posttest odds of a true positive compared to a false positive, we use the positive likelihood ratio below. We would use the negative likelihood ratio if we wanted to calculate the posttest odds of a false negative compared to a true negative.

17.6.10.29 Posttest Probability

The posttest probability is the probability of having the characteristic given a test result. When the base rate is used as the pretest probability, the posttest probability given a positive test is equal to positive predictive value. To convert odds to a probability, divide the odds by one plus the odds, as is in Equation 17.30.

\[ \begin{aligned} \text{posttest probability} &= \frac{\text{posttest odds}}{1 + \text{posttest odds}} \end{aligned} \tag{17.30}\]

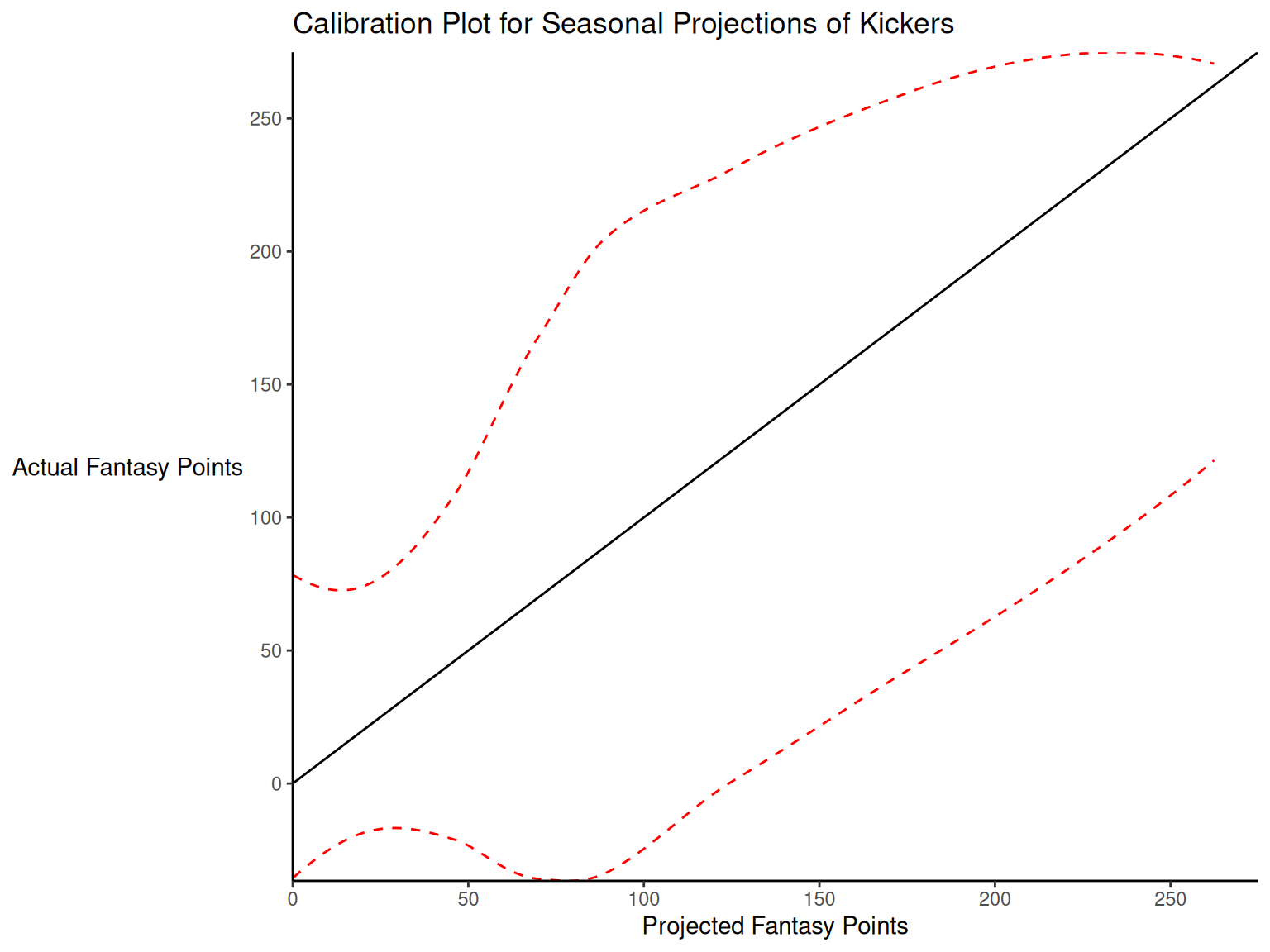

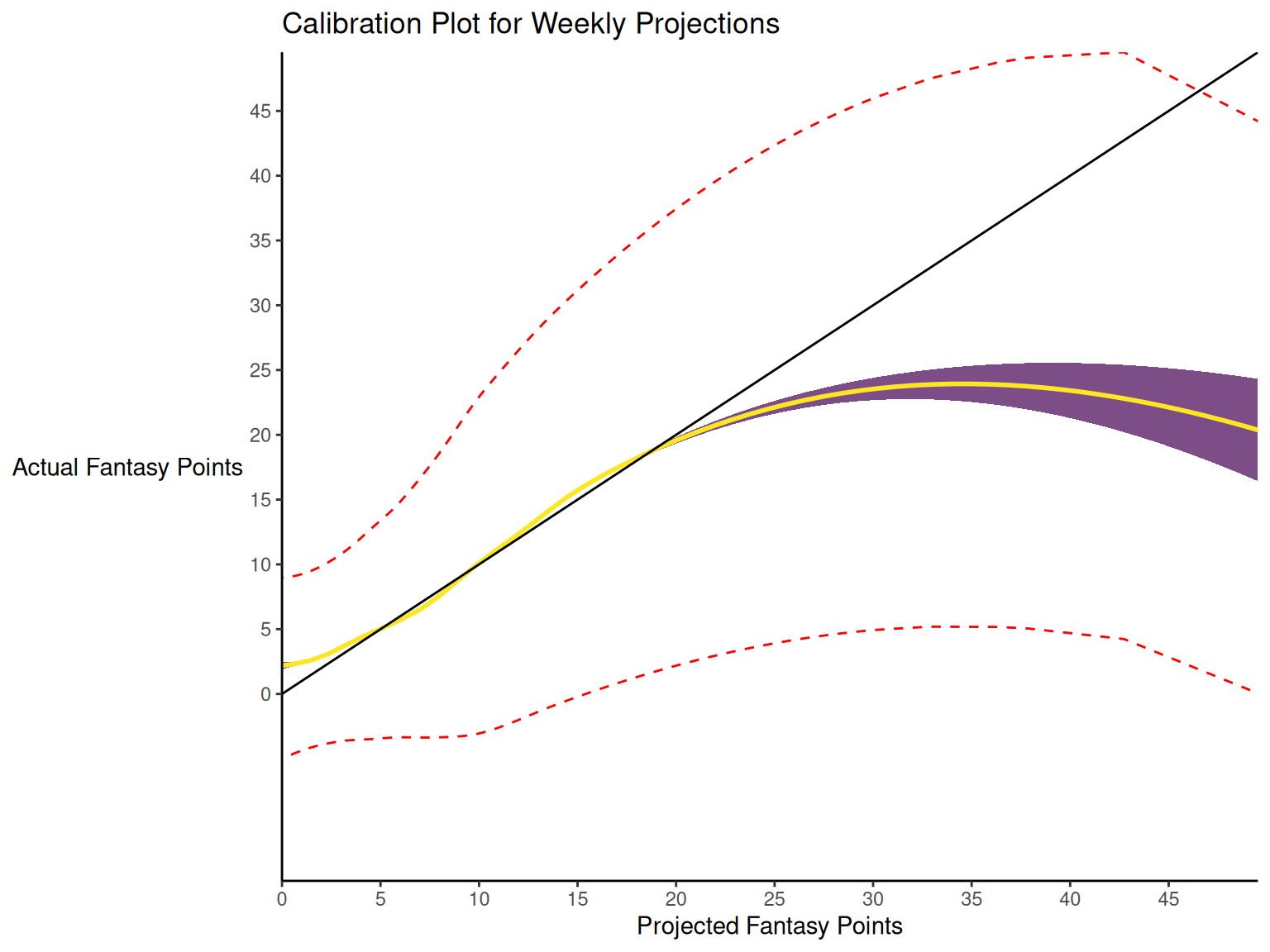

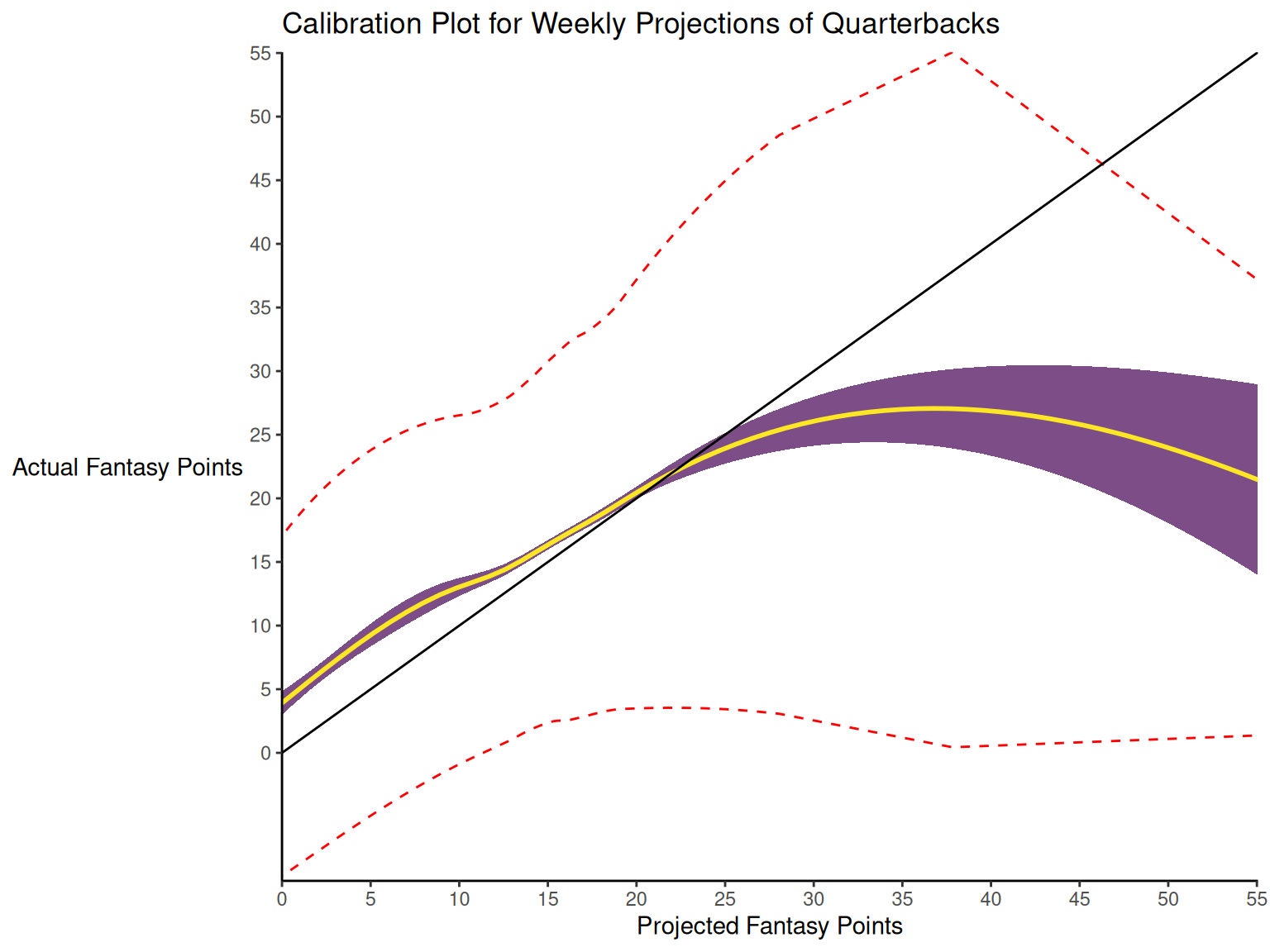

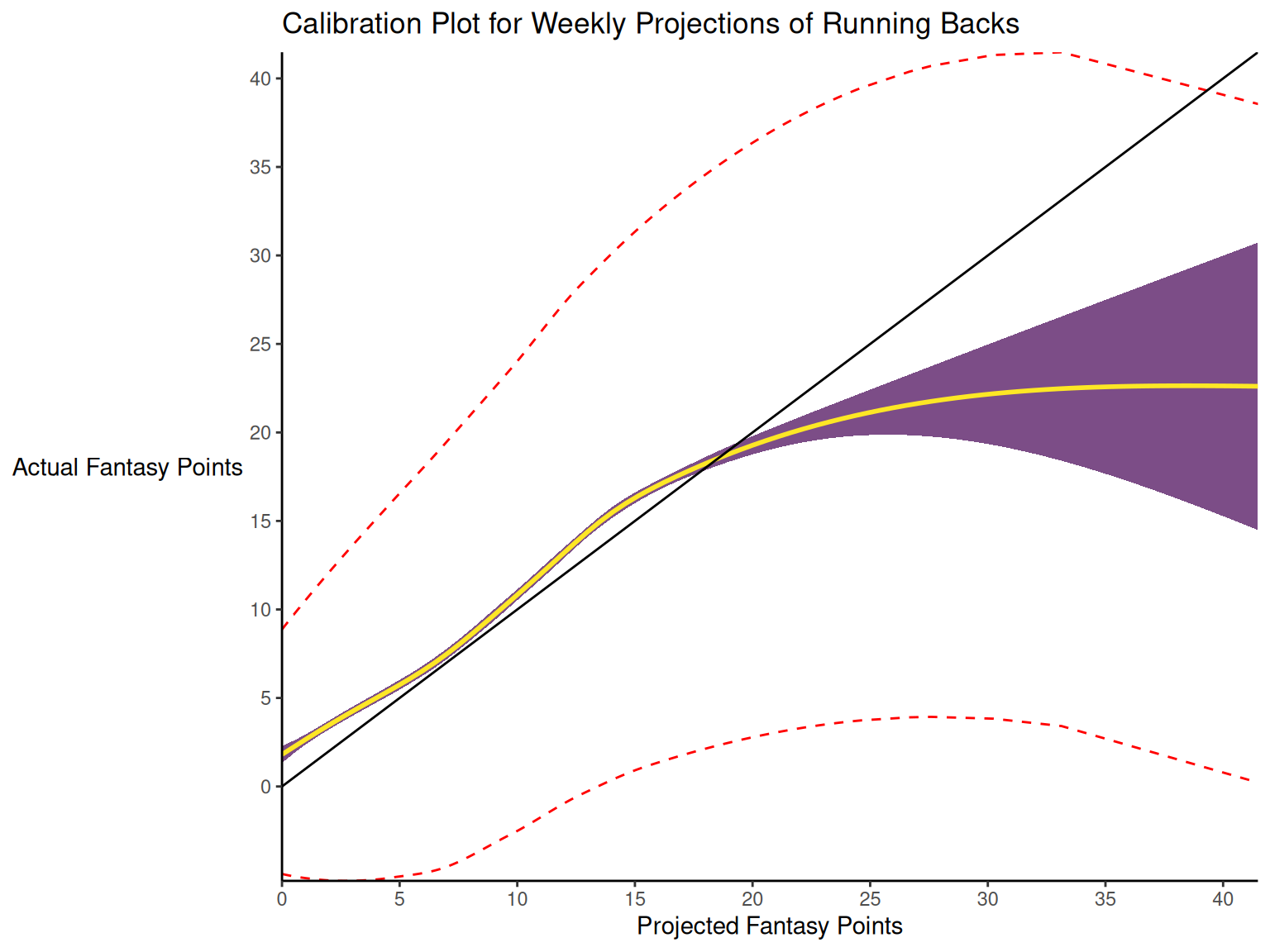

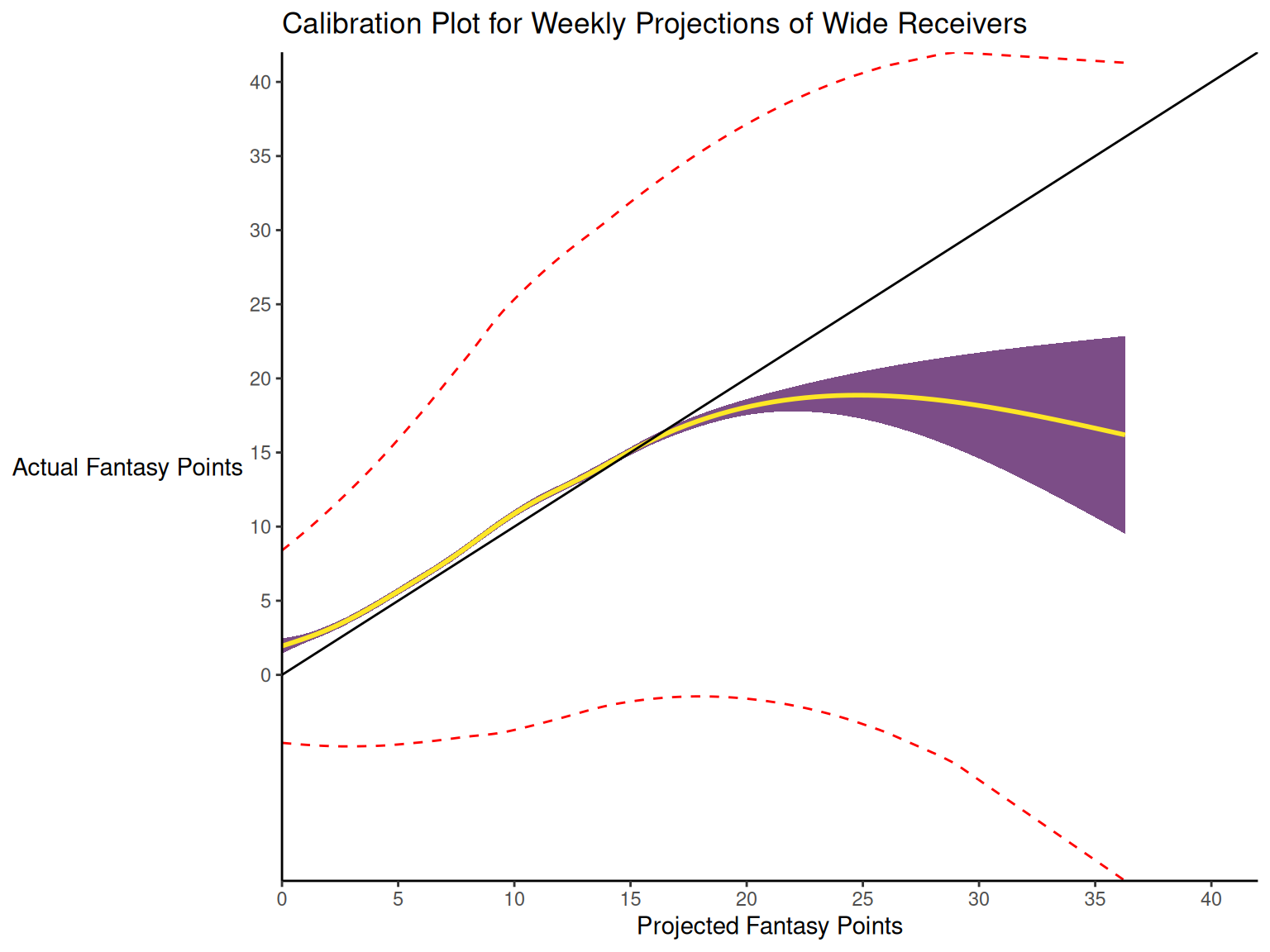

17.6.10.30 Mean Difference Between Predicted and Observed Values