I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

You can leave a comment at the bottom of the page/chapter, or open an issue or submit a pull request on GitHub: https://github.com/isaactpetersen/Fantasy-Football-Analytics-Textbook


19 Machine Learning

This chapter provides an overview of machine learning.

19.1 Getting Started

19.1.1 Load Packages

19.1.2 Load Data

Code

# Downloaded Data - Processed

load(file = "./data/nfl_players.RData")

load(file = "./data/nfl_teams.RData")

load(file = "./data/nfl_rosters.RData")

load(file = "./data/nfl_rosters_weekly.RData")

load(file = "./data/nfl_schedules.RData")

load(file = "./data/nfl_combine.RData")

load(file = "./data/nfl_draftPicks.RData")

load(file = "./data/nfl_depthCharts.RData")

#load(file = "./data/nfl_pbp.RData")

#load(file = "./data/nfl_4thdown.RData")

#load(file = "./data/nfl_participation.RData")

#load(file = "./data/nfl_actualFantasyPoints_weekly.RData")

load(file = "./data/nfl_injuries.RData")

load(file = "./data/nfl_snapCounts.RData")

load(file = "./data/nfl_espnQBR_seasonal.RData")

load(file = "./data/nfl_espnQBR_weekly.RData")

load(file = "./data/nfl_nextGenStats_weekly.RData")

load(file = "./data/nfl_advancedStatsPFR_seasonal.RData")

load(file = "./data/nfl_advancedStatsPFR_weekly.RData")

load(file = "./data/nfl_playerContracts.RData")

load(file = "./data/nfl_ftnCharting.RData")

load(file = "./data/nfl_playerIDs.RData")

load(file = "./data/nfl_rankings_draft.RData")

load(file = "./data/nfl_rankings_weekly.RData")

load(file = "./data/nfl_expectedFantasyPoints_weekly.RData")

#load(file = "./data/nfl_expectedFantasyPoints_pbp.RData")

# Calculated Data - Processed

load(file = "./data/nfl_actualStats_player_career.RData")

load(file = "./data/nfl_actualStats_seasonal.RData")

load(file = "./data/player_stats_weekly.RData")

load(file = "./data/player_stats_seasonal.RData")

We created the player_stats_weekly.RData and player_stats_seasonal.RData objects in Section 4.4.3.

19.1.3 Specify Options

19.2 Overview of Machine Learning

Machine learning is a class of algorithmic approaches that are used to identify patterns in data. Machine learning takes us away from focusing on causal inference. Machine learning does not care about which processes are causal (i.e., which processes influence the outcome). Instead, machine learning cares about prediction: it cares about a predictor variable to the extent that it increases predictive accuracy, regardless of whether it is causally related to the outcome. Nevertheless, association is necessary (despite being insufficient) for causality, as described in Section 13.4. Thus, achieving strong prediction is important (even if insufficient) for the model to be useful. If a model explains only a small portion of variance, it is difficult for it to be useful.

Machine learning can be useful for leveraging big data and many predictor variables to develop predictive models with greater accuracy. However, many machine learning techniques are black boxes: it is often unclear how or why certain predictions are made, which can make it difficult to interpret the model’s decisions and understand the underlying relationships between variables. Machine learning tends to be a data-driven, atheoretical technique. This can result in overfitting. Thus, when estimating machine learning models, it is common to keep a hold-out sample for use in cross-validation to evaluate the extent of shrinkage of model coefficients. The data that the model is trained on is known as the “training data”. The data that the model was not trained on but is then independently tested on (i.e., the hold-out sample) is the “test data”. Shrinkage occurs when predictor variables explain some random error variance in the original model. When the model is applied to an independent sample (i.e., the test data), the predictive model will likely not perform quite as well, and the regression coefficients will tend to get smaller (i.e., shrink).

If the test data were collected as part of the same processes as the original data and were merely held out for purposes of analysis, this is called internal cross-validation. If the test data were collected separately from the original data used to train the model, this is called external cross-validation.
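
To make the idea of shrinkage concrete, the following minimal sketch uses simulated data rather than the NFL data. One predictor is truly related to the outcome and 15 predictors are pure noise; the model is fit on the training rows and then evaluated on the held-out rows. All object names (sim, train, test) are hypothetical and chosen only for illustration.

```r
# Simulated data: one real predictor plus 15 pure-noise predictors
set.seed(1)
n <- 100
sim <- data.frame(matrix(rnorm(n * 16), nrow = n))  # columns X1..X16
sim$y <- 0.5 * sim$X1 + rnorm(n)                     # only X1 is related to y

# Hold out 30% of rows as test data
test_rows <- sample(seq_len(n), size = 30)
train <- sim[-test_rows, ]
test  <- sim[test_rows, ]

# Fit the model on the training data only
fit <- lm(y ~ ., data = train)

# Variance explained in the training data vs. the hold-out data
r2_train <- summary(fit)$r.squared
r2_test  <- cor(predict(fit, newdata = test), test$y)^2

c(train = r2_train, test = r2_test)
```

Because the noise predictors can fit random error in the training rows, the training R-squared will typically be larger than the test R-squared; the size of the gap is one way to gauge overfitting.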

Although machine learning tends to be data-driven in its execution, theory should still inform which variables are included in the model.

Most machine learning methods were developed with cross-sectional data in mind. That is, they assume that each person has only one observation on the outcome variable. However, with longitudinal data, each person has multiple observations on the outcome variable.

When performing machine learning, various approaches may help address this:

- transform data from long to wide form, so that each person has only one row

- when designing the training and test sets, keep all measurements from the same person in the same data object (either the training or test set); do not have some measurements from a given person in the training set and other measurements from the same person in the test set

- use a machine learning approach that accounts for the clustered/nested nature of the data
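
As an illustration of the first approach, the sketch below reshapes a small, hypothetical long-form data set (one row per player-season) into wide form (one row per player) using base R. The column names and values are invented for the example.

```r
# Hypothetical long-form data: one row per player-season
long <- data.frame(
  gsis_id = c("A", "A", "B", "B", "C"),
  season  = c(2022, 2023, 2022, 2023, 2023),
  points  = c(250, 270, 120, 90, 180)
)

# Wide form: one row per player, one points column per season
wide <- reshape(
  long,
  idvar     = "gsis_id",
  timevar   = "season",
  direction = "wide"
)

wide
```

Players without a row for a given season (here, player C in 2022) receive a missing value in the corresponding column, so wide-form data often need to be paired with an approach for handling missingness.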

19.3 Types of Machine Learning

There are many approaches to machine learning. This chapter discusses several key ones:

- supervised learning

- continuous outcome (i.e., regression)

- categorical outcome (i.e., classification)

- logistic regression

- support vector machine

- random forest

- tree-based methods

- unsupervised learning

- semi-supervised learning

- reinforcement learning

- deep learning / neural network

- ensemble

19.3.1 Supervised Learning

Supervised learning involves learning from data where the correct classification or outcome is known (and the classification is thus part of the data). For instance, predicting how many points a player will score is a supervised learning task, because there is a ground truth—the actual number of points scored—that can be used to train and evaluate the model. If the outcome variable is categorical, the approach involves classification. If the outcome variable is continuous, the approach involves regression.

Unlike linear and logistic regression, various machine learning techniques can handle multicollinearity, including LASSO regression, ridge regression, and elastic net regression via regularization. Regularization involves penalizing model complexity to avoid overfitting (Ramasubramanian & Singh, 2016). Least absolute shrinkage and selection operator (LASSO) regression performs selection of which predictor variables to keep in the model by shrinking some coefficients to zero, effectively removing them from the model. Ridge regression shrinks the coefficients of predictor variables toward zero, but not to zero, so it does not perform selection of which predictor variables to retain; this allows it to yield stable estimates for multiple correlated predictor variables in the context of multicollinearity. Elastic net involves a combination of LASSO and ridge regression; it performs selection of which predictor variables to keep by shrinking the coefficients of some predictor variables to zero (like LASSO, for variable selection), and it shrinks the coefficients of some predictor variables toward zero (like ridge, for handling multicollinearity among correlated predictors).
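
The shrinkage performed by ridge regression can be seen in a small sketch. Ridge regression has a closed-form solution, so the example below computes it directly in base R for two highly correlated simulated predictors. The data, the helper function ridge_coef(), and the penalty value of 50 are all assumptions made for illustration; in practice, the penalty is usually tuned by cross-validation, and LASSO and elastic net (which have no closed form) are typically fit with a dedicated package such as glmnet.

```r
set.seed(2)
n  <- 200
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.1)   # x2 is nearly a copy of x1 (multicollinearity)
y  <- x1 + x2 + rnorm(n)

# Standardize the predictors and center the outcome so no intercept is needed
X  <- scale(cbind(x1, x2))
yc <- y - mean(y)

# Closed-form ridge estimate: (X'X + lambda * I)^(-1) X'y
ridge_coef <- function(X, y, lambda) {
  drop(solve(crossprod(X) + lambda * diag(ncol(X)), crossprod(X, y)))
}

b_ols   <- ridge_coef(X, yc, lambda = 0)    # lambda = 0 is ordinary least squares
b_ridge <- ridge_coef(X, yc, lambda = 50)   # the penalty pulls coefficients toward zero

rbind(ols = b_ols, ridge = b_ridge)
```

With a positive penalty, the ridge coefficients are smaller in overall magnitude than the least-squares coefficients but are not set exactly to zero, which matches the description above.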

Unless interactions or nonlinear terms are specified, linear, logistic, LASSO, ridge, and elastic net regression assume additive and linear associations between the predictors and outcome. That is, they do not automatically account for interactions among the predictor variables or for nonlinear associations between the predictor variables and the outcome variable (unless interaction terms or nonlinear transformations are explicitly included). By contrast, random forests and tree boosting methods automatically account for interactions and nonlinear associations between predictors and the outcome variable. These models recursively partition the data in ways that capture complex patterns without the need to manually specify interaction or polynomial terms.
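
The following sketch illustrates why interaction terms must be specified explicitly in a regression model. The simulated outcome depends on the product of two predictors; an additive model misses that pattern, whereas a model with an explicit interaction term captures it. The data and object names are hypothetical.

```r
set.seed(3)
n  <- 300
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- x1 * x2 + rnorm(n, sd = 0.5)   # outcome depends on the product of x1 and x2

fit_additive    <- lm(y ~ x1 + x2)   # additive, linear terms only
fit_interaction <- lm(y ~ x1 * x2)   # adds the x1:x2 interaction term

r2_additive    <- summary(fit_additive)$r.squared
r2_interaction <- summary(fit_interaction)$r.squared

c(additive = r2_additive, interaction = r2_interaction)
```

Tree-based methods do not require this step, because their recursive splits can approximate such patterns without the analyst naming the interaction in advance.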

19.3.2 Unsupervised Learning

Unsupervised learning involves learning from data without known classifications. Unsupervised learning is used to discover hidden patterns, groupings, or structures in the data. For instance, if we want to identify different subtypes of Wide Receivers based on their playing style or performance metrics, or uncover underlying dimensions in a large dataset, we would use an unsupervised learning approach.
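
As a minimal sketch of unsupervised learning, the example below applies k-means clustering (one of several possible methods) to simulated receiver statistics. The two variables, their values, and the choice of two clusters are assumptions for illustration only; no outcome variable is supplied to the algorithm.

```r
set.seed(4)
# Hypothetical per-game averages for 40 receivers drawn from two simulated profiles
receivers <- data.frame(
  targets       = c(rnorm(20, mean = 9, sd = 1), rnorm(20, mean = 4, sd = 1)),
  yards_per_rec = c(rnorm(20, mean = 10, sd = 1), rnorm(20, mean = 16, sd = 1))
)

# k-means with two clusters on standardized variables
clusters <- kmeans(scale(receivers), centers = 2, nstart = 10)

table(clusters$cluster)
```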

We describe cluster analysis in Chapter 21. We describe factor analysis in Chapter 22. We describe principal component analysis in Chapter 23.

19.3.3 Semi-supervised Learning

Semi-supervised learning combines supervised learning and unsupervised learning by training the model on some data for which the classification is known and some data for which the classification is not known.

19.3.4 Reinforcement Learning

Reinforcement learning involves an agent learning to make decisions by interacting with the environment. Through trial and error, the agent receives feedback in the form of rewards or penalties and learns a strategy that maximizes the cumulative reward over time.

19.3.5 Ensemble Learning

Ensemble machine learning methods combine multiple machine learning approaches, with the goal of achieving more accurate predictions than any single method could achieve on its own.

19.4 Data Processing

Several data processing steps are necessary to get the data in the form necessary for machine learning.

19.4.1 Prepare Data for Merging

First, we apply several steps. We subset to the positions and variables of interest. We also rename columns and change variable types to make sure they match the column names and types across objects, which will be important later when we merge the data.

Code

# Prepare data for merging

#nfl_actualFantasyPoints_player_weekly <- nfl_actualFantasyPoints_player_weekly %>%

# rename(gsis_id = player_id)

#

#nfl_actualFantasyPoints_player_seasonal <- nfl_actualFantasyPoints_player_seasonal %>%

# rename(gsis_id = player_id)

player_stats_seasonal_offense <- player_stats_seasonal %>%

filter(position_group %in% c("QB","RB","WR","TE")) %>%

rename(gsis_id = player_id)

player_stats_weekly_offense <- player_stats_weekly %>%

filter(position_group %in% c("QB","RB","WR","TE")) %>%

rename(gsis_id = player_id)

## Rename other variables to ensure common names

## Ensure variables with the same name have the same type

nfl_players <- nfl_players %>%

mutate(

birth_date = as.Date(birth_date),

jersey_number = as.character(jersey_number),

nfl_id = as.character(nfl_id),

years_of_experience = as.integer(years_of_experience))

player_stats_seasonal_offense <- player_stats_seasonal_offense %>%

mutate(

birth_date = as.Date(birth_date),

jersey_number = as.character(jersey_number))

nfl_rosters <- nfl_rosters %>%

mutate(

draft_number = as.integer(draft_number))

nfl_rosters_weekly <- nfl_rosters_weekly %>%

mutate(

draft_number = as.integer(draft_number))

nfl_depthCharts <- nfl_depthCharts %>%

mutate(

season = as.integer(season))

nfl_expectedFantasyPoints_weekly <- nfl_expectedFantasyPoints_weekly %>%

rename(gsis_id = player_id) %>%

mutate(

season = as.integer(season),

receptions = as.integer(receptions)) %>%

distinct(gsis_id, season, week, .keep_all = TRUE) # drop duplicated rows

## Rename variables

nfl_draftPicks <- nfl_draftPicks %>%

rename(

games_career = games,

pass_completions_career = pass_completions,

pass_attempts_career = pass_attempts,

pass_yards_career = pass_yards,

pass_tds_career = pass_tds,

pass_ints_career = pass_ints,

rush_atts_career = rush_atts,

rush_yards_career = rush_yards,

rush_tds_career = rush_tds,

receptions_career = receptions,

rec_yards_career = rec_yards,

rec_tds_career = rec_tds,

def_solo_tackles_career = def_solo_tackles,

def_ints_career = def_ints,

def_sacks_career = def_sacks

)

## Subset variables

nfl_expectedFantasyPoints_weekly <- nfl_expectedFantasyPoints_weekly %>%

select(gsis_id:position, contains("_exp"), contains("_diff"), contains("_team")) #drop "raw stats" variables (e.g., rec_yards_gained) so they don't get coalesced with actual stats

# Check duplicate ids

player_stats_seasonal_offense %>%

group_by(gsis_id, season) %>%

filter(n() > 1) %>%

head()

Below, we identify shared variable names across objects to be merged to make sure we account for them in merging:

[1] "gsis_id" "position"

[1] 24532

[1] 2855

[1] "gsis_id" "season" "team" "pfr_id" "age"

[1] 14874

[1] 10511

[1] 14873

[1] 10511

[1] 14874

[1] 10444

[1] "gsis_id" "season" "week" "position" "full_name"

[1] 844984

[1] 105903

[1] 844984

[1] 105903

[1] 844951

[1] 103446

[1] 844968

[1] 103446

19.4.2 Merge Data

To perform machine learning, we need all of the predictor variables and the outcome variable in the same data file. Thus, we must merge data files. To merge data, we use the powerjoin package (Fabri, 2022), which allows coalescing variables with the same name from two different objects. We specify coalesce_xy, which means that—for variables that have the same name across both objects—it keeps the value from object 1 (if present); if not, it keeps the value from object 2. We first merge variables from objects that have the same structure—player data (i.e., id form), seasonal data (i.e., id-season form), or weekly data (i.e., id-season-week form).
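
The coalescing rule described above can be illustrated without powerjoin. The sketch below uses base R merge() on two small, hypothetical data frames that share a position column; it is meant to show the rule (keep the value from object 1 unless it is missing), not to reproduce how powerjoin implements it.

```r
# Two hypothetical objects that share the "position" column
a <- data.frame(
  gsis_id  = c("A", "B", "C"),
  position = c("QB", NA, "WR"),
  stringsAsFactors = FALSE
)
b <- data.frame(
  gsis_id  = c("B", "C", "D"),
  position = c("RB", "TE", "QB"),
  height   = c(70, 76, 74),
  stringsAsFactors = FALSE
)

# Full join: shared non-key columns get the suffixes .x (object 1) and .y (object 2)
merged <- merge(a, b, by = "gsis_id", all = TRUE, suffixes = c(".x", ".y"))

# Coalesce: use the value from object 1 unless it is NA, then fall back to object 2
merged$position <- ifelse(is.na(merged$position.x), merged$position.y, merged$position.x)
merged <- merged[, c("gsis_id", "position", "height")]

merged
```

Player C keeps "WR" from the first object even though the second object says "TE", whereas players B and D receive their position from the second object because the first has no value for them.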

Code

# Create lists of objects to merge, depending on data structure: id; or id-season; or id-season-week

playerListToMerge <- list(

nfl_players %>% filter(!is.na(gsis_id)),

nfl_draftPicks %>% filter(!is.na(gsis_id)) %>% select(-season)

)

playerSeasonListToMerge <- list(

player_stats_seasonal_offense %>% filter(!is.na(gsis_id), !is.na(season)),

nfl_advancedStatsPFR_seasonal %>% filter(!is.na(gsis_id), !is.na(season))

)

playerSeasonWeekListToMerge <- list(

nfl_rosters_weekly %>% filter(!is.na(gsis_id), !is.na(season), !is.na(week)),

#nfl_actualStats_offense_weekly,

nfl_expectedFantasyPoints_weekly %>% filter(!is.na(gsis_id), !is.na(season), !is.na(week))

#nfl_advancedStatsPFR_weekly,

)

playerSeasonWeekPositionListToMerge <- list(

nfl_depthCharts %>% filter(!is.na(gsis_id), !is.na(season), !is.na(week))

)

# Merge data

playerMerged <- playerListToMerge %>%

reduce(

powerjoin::power_full_join,

by = c("gsis_id"),

conflict = powerjoin::coalesce_xy) # where the objects have the same variable name (e.g., position), keep the values from object 1, unless it's NA, in which case use the relevant value from object 2

playerSeasonMerged <- playerSeasonListToMerge %>%

reduce(

powerjoin::power_full_join,

by = c("gsis_id","season"),

conflict = powerjoin::coalesce_xy) # where the objects have the same variable name (e.g., team), keep the values from object 1, unless it's NA, in which case use the relevant value from object 2

playerSeasonWeekMerged <- playerSeasonWeekListToMerge %>%

reduce(

powerjoin::power_full_join,

by = c("gsis_id","season","week"),

conflict = powerjoin::coalesce_xy) # where the objects have the same variable name (e.g., position), keep the values from object 1, unless it's NA, in which case use the relevant value from object 2

To prepare for merging player data with seasonal data, we identify shared variable names across the objects:

[1] "gsis_id" "position"

[3] "position_group" "display_name"

[5] "common_first_name" "first_name"

[7] "last_name" "short_name"

[9] "football_name" "suffix"

[11] "esb_id" "nfl_id"

[13] "pff_id" "otc_id"

[15] "espn_id" "smart_id"

[17] "birth_date" "ngs_position_group"

[19] "ngs_position" "height"

[21] "weight" "headshot"

[23] "college_name" "college_conference"

[25] "jersey_number" "rookie_season"

[27] "last_season" "status"

[29] "ngs_status" "ngs_status_short_description"

[31] "pff_position" "pff_status"

[33] "draft_year" "draft_round"

[35] "draft_pick" "draft_team"

[37] "years_of_experience" "pfr_player_name"

[39] "team" "pfr_id"

[41] "age"

Then we merge the player data with the seasonal data:

Code

seasonalData <- powerjoin::power_full_join(

playerSeasonMerged,

playerMerged %>% select(-age, -years_of_experience, -team, -latest_team, -last_season, -pff_status), # drop variables from id objects that change from year to year (and thus are not necessarily accurate for a given season)

by = "gsis_id",

conflict = powerjoin::coalesce_xy # where the objects have the same variable name (e.g., position), keep the values from object 1, unless it's NA, in which case use the relevant value from object 2

) %>%

filter(!is.na(season)) %>%

select(gsis_id, season, player_display_name, position, team, games, everything())

To prepare for merging player and seasonal data with weekly data, we identify shared variable names across the objects:

[1] "gsis_id" "season" "week" "team"

[5] "jersey_number" "status" "first_name" "last_name"

[9] "birth_date" "height" "weight" "college"

[13] "espn_id" "pff_id" "pfr_id" "headshot_url"

[17] "ngs_position" "football_name" "esb_id" "smart_id"

[21] "position"

Then we merge the player and seasonal data with the weekly data:

Code

seasonalAndWeeklyData <- powerjoin::power_full_join(

playerSeasonWeekMerged,

seasonalData,

by = c("gsis_id","season"),

conflict = powerjoin::coalesce_xy # where the objects have the same variable name (e.g., position), keep the values from object 1, unless it's NA, in which case use the relevant value from object 2

) %>%

filter(!is.na(week)) %>%

select(gsis_id, season, week, full_name, position, team, everything())

19.4.3 Additional Processing

For purposes of machine learning, we set all character and logical columns to factors.
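
A minimal base R sketch of this conversion is shown below on a small, hypothetical data frame; the chapter's own code may differ.

```r
# Hypothetical data frame with mixed column types
df <- data.frame(
  position = c("QB", "RB", "WR"),
  rookie   = c(TRUE, FALSE, FALSE),
  points   = c(310.2, 198.5, 240.1),
  stringsAsFactors = FALSE
)

# Convert character and logical columns to factors; leave other columns unchanged
df[] <- lapply(df, function(col) {
  if (is.character(col) || is.logical(col)) factor(col) else col
})

sapply(df, class)
```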

19.4.4 Fill in Missing Data for Static Variables

For variables that are not expected to change, such as a player’s name and position, we fill in missing values by using a player’s value on those variables from other rows in the data.
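
One way to do this is sketched below in base R. The helper fill_static() is hypothetical (it is not from the chapter or from any package); within each player, it replaces missing values with the first non-missing value observed for that player.

```r
# Hypothetical player-season rows with a missing position for player "A"
d <- data.frame(
  gsis_id  = c("A", "A", "A", "B", "B"),
  season   = c(2021, 2022, 2023, 2022, 2023),
  position = c("QB", NA, "QB", NA, NA),
  stringsAsFactors = FALSE
)

# Within each player, replace NA with the first non-missing value for that player
fill_static <- function(values, ids) {
  for (id in unique(ids)) {
    rows  <- ids == id
    known <- values[rows & !is.na(values)]
    if (length(known) > 0) {
      values[rows & is.na(values)] <- known[1]
    }
  }
  values
}

d$position <- fill_static(d$position, d$gsis_id)
d
```

Player B has no observed position in any row, so those values remain missing; filling only works when at least one row for the player carries the information.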

19.4.5 Create New Data Object for Merging with Later Predictions

We create a new data object that contains the latest seasonal data, for merging with later predictions.

19.4.6 Lag Fantasy Points

To develop a machine learning model that uses a player’s performance metrics in a given season for predicting the player’s fantasy points in the subsequent season, we need to include the player’s fantasy points from the subsequent season in the same row as the previous season’s performance metrics. Thus, we need to create a lagged variable for fantasy points. That way, 2024 fantasy points are in the same row as 2023 performance metrics, 2023 fantasy points are in the same row as 2022 performance metrics, and so on. We call this the lagged fantasy points variable (fantasyPoints_lag). We also retain the original same-year fantasy points variable (fantasyPoints) so it can be used as a predictor of the player’s subsequent-year fantasy points.
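
The sketch below shows one way such a variable could be constructed in base R, assuming one row per player-season. The data are hypothetical, and the approach (a self-merge on player and season) is an illustration rather than the chapter's own code.

```r
# Hypothetical seasonal data: one row per player-season
d <- data.frame(
  gsis_id       = c("A", "A", "A", "B", "B"),
  season        = c(2021, 2022, 2023, 2021, 2023),
  fantasyPoints = c(200, 250, 270, 90, 120)
)

# Copy each row's points, relabelled as belonging to the previous season
next_season <- data.frame(
  gsis_id           = d$gsis_id,
  season            = d$season - 1,
  fantasyPoints_lag = d$fantasyPoints
)

# Attach next-season points to each player-season row
# (NA when the player has no row for the following season)
d_lag <- merge(d, next_season, by = c("gsis_id", "season"), all.x = TRUE)
d_lag
```

Matching on season (rather than simply shifting rows within a player) avoids pairing non-consecutive seasons: player B has no 2022 row, so the 2021 row receives a missing value instead of the 2023 points.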

19.4.7 Subset to Predictor Variables and Outcome Variable

Then, we drop variables that we do not want to include in the model as our predictor or outcome variable. Thus, all of the variables in the object are our predictor and outcome variables.

Code

dropVars <- c(

"birth_date", "player_display_name", "team", "player_name", "headshot_url", "season_type", "fg_made_list", "fg_missed_list", "fg_blocked_list", "gwfg_distance_list", "pff_status", "startdate", "pos", "merge_name", "pfr_player_id", "cfb_player_id", "hof", "category", "side", "college", "car_av", "display_name", "common_first_name", "first_name", "last_name", "short_name", "football_name", "suffix", "esb_id", "nfl_id", "pff_id", "otc_id", "espn_id", "smart_id", "ngs_position_group", "ngs_position", "headshot", "college_name", "college_conference", "jersey_number", "status", "ngs_status", "ngs_status_short_description", "pff_position", "draft_team", "pfr_player_name", "pfr_id")

seasonalData_lag_subset <- seasonalData_lag %>%

dplyr::select(-any_of(dropVars))

19.4.8 Separate by Position

Then, we separate the objects by position, so we can develop different machine learning models for each position.

Code

seasonalData_lag_subsetQB <- seasonalData_lag_subset %>%

filter(position == "QB") %>%

select(

gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic,

height, weight, rookie_season, draft_pick,

fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag,

completions:rushing_2pt_conversions, special_teams_tds, contains(".pass"), contains(".rush"))

seasonalData_lag_subsetRB <- seasonalData_lag_subset %>%

filter(position == "RB") %>%

select(

gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic,

height, weight, rookie_season, draft_pick,

fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag,

carries:special_teams_tds, contains(".rush"), contains(".rec"))

seasonalData_lag_subsetWR <- seasonalData_lag_subset %>%

filter(position == "WR") %>%

select(

gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic,

height, weight, rookie_season, draft_pick,

fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag,

carries:special_teams_tds, contains(".rush"), contains(".rec"))

seasonalData_lag_subsetTE <- seasonalData_lag_subset %>%

filter(position == "TE") %>%

select(

gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic,

height, weight, rookie_season, draft_pick,

fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag,

carries:special_teams_tds, contains(".rush"), contains(".rec"))

19.4.9 Split into Test and Training Data

Because machine learning can leverage many predictors, it is at high risk of overfitting—explaining error variance that would not generalize to new data, such as data for new players or future seasons. Thus, it is important to develop and tune the machine learning model so as not to overfit the model. In machine learning, it is common to use cross-validation where we train the model on a subset of the observations, and we evaluate how well the model generalizes to unseen (e.g., “hold-out”) observations. Then, we select the model parameters by how well the model generalizes to the hold-out data, so we are selecting a model that maximizes accuracy and generalizability (i.e., parsimony).

For internal cross-validation, it is common to divide the data into three subsets:

- training data

- validation data

- test data

The training set is used to fit the model. It is usually the largest portion of the data. We fit various models to the training set based on which parameters we want to evaluate (e.g., how many trees to use in a gradient tree boosting model).

The models fit with the training set are then evaluated using the unseen observations in the validation set. The validation set is used to tune the model parameters and prevent overfitting. We select the model parameters that yield the greatest accuracy in the validation set. In k-fold cross-validation, the validation set rotates across folds, thus replacing the need for a separate validation set.

The test set is used after model training and tuning to evaluate the model’s generalizability to unseen data.
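
The rotation of the validation set in k-fold cross-validation can be sketched in a few lines of base R. The example below uses simulated data and a simple linear model; the number of folds (five) and all object names are assumptions for illustration.

```r
set.seed(5)
n <- 100
d <- data.frame(x = rnorm(n))
d$y <- 2 * d$x + rnorm(n)

k     <- 5
folds <- sample(rep(seq_len(k), length.out = n))   # random fold label for each row

# For each fold: train on the other folds, evaluate on the held-out fold
rmse_by_fold <- sapply(seq_len(k), function(fold) {
  fit  <- lm(y ~ x, data = d[folds != fold, ])
  pred <- predict(fit, newdata = d[folds == fold, ])
  sqrt(mean((d$y[folds == fold] - pred)^2))
})

mean(rmse_by_fold)   # cross-validated estimate of prediction error
```

Each row serves as validation data exactly once and as training data in the remaining folds, which is why a separate validation set is not required.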

Below, we split the data into test and training data. Our ultimate goal is to predict next year’s fantasy points. However, to do that effectively, we must first develop a model whose accuracy we can evaluate against historical fantasy points (because we do not yet know how many points players will score in the future). We want to include all current/active players in our training data, so that their prior data inform the model’s predictions of their future performance. Thus, we use retired players as our hold-out (test) data. We split the players into 80% training data and 20% testing data. The testing data thus include only retired players, but not all retired players are in the testing data.

Then, for the analysis, we can either a) use rotating folds (as is the case for k-fold and leave-one-out [LOO] cross-validation), for which a separate validation set (from the training set) is not needed, as we do in Section 19.6, or b) subdivide the training set into an inner training set and validation set, as we do in Section 19.8.5.4.
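
The logic of the player-level split can be previewed on a small, hypothetical data set. The sketch below treats players without a row in the most recent season as retired and samples the hold-out players from that group only. The chapter's code uses a %ni% operator whose definition is not shown in this section; the sketch assumes it is the negation of %in% and defines it accordingly.

```r
# Assumed helper: the negation of %in%
`%ni%` <- Negate(`%in%`)

# Hypothetical player-season data
d <- data.frame(
  gsis_id = rep(c("A", "B", "C", "D", "E"), each = 2),
  season  = rep(c(2022, 2023), times = 5)
)
d <- d[!(d$gsis_id %in% c("D", "E") & d$season == 2023), ]   # D and E have no latest-season row

# Players with a row in the most recent season are treated as active
active  <- unique(d$gsis_id[d$season == max(d$season)])
retired <- setdiff(unique(d$gsis_id), active)

# Hold out 20% of all players, sampled only from retired players
set.seed(6)
n_players   <- length(unique(d$gsis_id))
holdout_ids <- sample(retired, size = ceiling(0.2 * n_players))

train <- d[d$gsis_id %ni% holdout_ids, ]
test  <- d[d$gsis_id %in% holdout_ids, ]
```

Because the split is made on player IDs rather than on rows, all seasons for a given player end up in the same set, which keeps repeated observations of one player from appearing in both the training and the test data.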

Code

seasonalData_lag_qb_all <- seasonalData_lag_subsetQB

seasonalData_lag_rb_all <- seasonalData_lag_subsetRB

seasonalData_lag_wr_all <- seasonalData_lag_subsetWR

seasonalData_lag_te_all <- seasonalData_lag_subsetTE

set.seed(52242) # for reproducibility (to keep the same train/holdout players)

activeQBs <- unique(seasonalData_lag_qb_all$gsis_id[which(seasonalData_lag_qb_all$season == max(seasonalData_lag_qb_all$season, na.rm = TRUE))])

retiredQBs <- unique(seasonalData_lag_qb_all$gsis_id[which(seasonalData_lag_qb_all$gsis_id %ni% activeQBs)])

numQBs <- length(unique(seasonalData_lag_qb_all$gsis_id))

qbHoldoutIDs <- sample(retiredQBs, size = ceiling(.2 * numQBs)) # holdout 20% of players

activeRBs <- unique(seasonalData_lag_rb_all$gsis_id[which(seasonalData_lag_rb_all$season == max(seasonalData_lag_rb_all$season, na.rm = TRUE))])

retiredRBs <- unique(seasonalData_lag_rb_all$gsis_id[which(seasonalData_lag_rb_all$gsis_id %ni% activeRBs)])

numRBs <- length(unique(seasonalData_lag_rb_all$gsis_id))

rbHoldoutIDs <- sample(retiredRBs, size = ceiling(.2 * numRBs)) # holdout 20% of players

set.seed(52242) # for reproducibility (to keep the same train/holdout players); added here to prevent a downstream error with predict.missRanger() due to missingness; this suggests that an error can arise from including a player in the holdout sample who has missingness in particular variables; would be good to identify which player(s) in the holdout sample evoke that error to identify the kinds of missingness that yield the error

activeWRs <- unique(seasonalData_lag_wr_all$gsis_id[which(seasonalData_lag_wr_all$season == max(seasonalData_lag_wr_all$season, na.rm = TRUE))])

retiredWRs <- unique(seasonalData_lag_wr_all$gsis_id[which(seasonalData_lag_wr_all$gsis_id %ni% activeWRs)])

numWRs <- length(unique(seasonalData_lag_wr_all$gsis_id))

wrHoldoutIDs <- sample(retiredWRs, size = ceiling(.2 * numWRs)) # holdout 20% of players

activeTEs <- unique(seasonalData_lag_te_all$gsis_id[which(seasonalData_lag_te_all$season == max(seasonalData_lag_te_all$season, na.rm = TRUE))])

retiredTEs <- unique(seasonalData_lag_te_all$gsis_id[which(seasonalData_lag_te_all$gsis_id %ni% activeTEs)])

numTEs <- length(unique(seasonalData_lag_te_all$gsis_id))

teHoldoutIDs <- sample(retiredTEs, size = ceiling(.2 * numTEs)) # holdout 20% of players

seasonalData_lag_qb_train <- seasonalData_lag_qb_all %>%

filter(gsis_id %ni% qbHoldoutIDs)

seasonalData_lag_qb_test <- seasonalData_lag_qb_all %>%

filter(gsis_id %in% qbHoldoutIDs)

seasonalData_lag_rb_train <- seasonalData_lag_rb_all %>%

filter(gsis_id %ni% rbHoldoutIDs)

seasonalData_lag_rb_test <- seasonalData_lag_rb_all %>%

filter(gsis_id %in% rbHoldoutIDs)

seasonalData_lag_wr_train <- seasonalData_lag_wr_all %>%

filter(gsis_id %ni% wrHoldoutIDs)

seasonalData_lag_wr_test <- seasonalData_lag_wr_all %>%

filter(gsis_id %in% wrHoldoutIDs)

seasonalData_lag_te_train <- seasonalData_lag_te_all %>%

filter(gsis_id %ni% teHoldoutIDs)

seasonalData_lag_te_test <- seasonalData_lag_te_all %>%

filter(gsis_id %in% teHoldoutIDs)

19.4.10 Impute the Missing Data

Many of the machine learning approaches described in this chapter require no missing observations in order for a case to be included in the analysis. In this section, we demonstrate one approach to imputing missing data. Here is a vignette demonstrating how to impute missing data using missForest(): https://rpubs.com/lmorgan95/MissForest (archived at: https://perma.cc/6GB4-2E22). Below, we impute the training data (and all data) separately by position. We then use the imputed training data to make out-of-sample predictions to fill in the missing data for the testing data. We do not want to impute the training and testing data together so that we can keep them separate for the purposes of cross-validation. However, we impute all data (training and test data together) for purposes of making out-of-sample predictions from the machine learning models to predict players’ performance next season (when actuals are not yet available for evaluating their accuracy). To impute data, we use the missRanger package (Mayer, 2024).
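
The principle of fitting the imputation on the training data and then applying it to the test data can be shown with a deliberately simplified sketch. The example below substitutes mean imputation for the random-forest imputation performed by missRanger, purely to keep the code short and free of package dependencies; the data are hypothetical.

```r
# Hypothetical training and test sets with missing values in weight
train <- data.frame(height = c(72, 75, 70, 74), weight = c(210, NA, 195, 225))
test  <- data.frame(height = c(73, 71),         weight = c(NA, 200))

# "Fit" the imputation on the training data only
train_mean_weight <- mean(train$weight, na.rm = TRUE)

# Apply the same imputation value to both sets
train$weight[is.na(train$weight)] <- train_mean_weight
test$weight[is.na(test$weight)]   <- train_mean_weight
```

The test data never contribute to the imputation value, so information from the hold-out players does not leak into the training process.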

Note: the following code takes a while to run.

Code

Skip constant features for imputation: special_teams_tds

Variables to impute: fantasy_points, fantasy_points_ppr, special_teams_tds, passing_epa, pacr, rushing_epa, draft_pick, fantasyPoints_lag, passing_cpoe, gs, pass_attempts.pass, throwaways.pass, spikes.pass, drops.pass, bad_throws.pass, times_blitzed.pass, times_hurried.pass, times_hit.pass, times_pressured.pass, batted_balls.pass, on_tgt_throws.pass, rpo_plays.pass, rpo_yards.pass, rpo_pass_att.pass, rpo_pass_yards.pass, rpo_rush_att.pass, rpo_rush_yards.pass, pa_pass_att.pass, pa_pass_yards.pass, intended_air_yards.pass, intended_air_yards_per_pass_attempt.pass, completed_air_yards.pass, completed_air_yards_per_completion.pass, completed_air_yards_per_pass_attempt.pass, pass_yards_after_catch.pass, pass_yards_after_catch_per_completion.pass, scrambles.pass, scramble_yards_per_attempt.pass, att.rush, yds.rush, td.rush, x1d.rush, ybc.rush, yac.rush, brk_tkl.rush, att_br.rush, drop_pct.pass, bad_throw_pct.pass, on_tgt_pct.pass, pressure_pct.pass, ybc_att.rush, yac_att.rush, pocket_time.pass

Variables used to impute: gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic, height, weight, rookie_season, draft_pick, fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag, completions, attempts, passing_yards, passing_tds, passing_interceptions, sacks_suffered, sack_yards_lost, sack_fumbles, sack_fumbles_lost, passing_air_yards, passing_yards_after_catch, passing_first_downs, passing_epa, passing_cpoe, passing_2pt_conversions, pacr, carries, rushing_yards, rushing_tds, rushing_fumbles, rushing_fumbles_lost, rushing_first_downs, rushing_epa, rushing_2pt_conversions, pocket_time.pass, pass_attempts.pass, throwaways.pass, spikes.pass, drops.pass, bad_throws.pass, times_blitzed.pass, times_hurried.pass, times_hit.pass, times_pressured.pass, batted_balls.pass, on_tgt_throws.pass, rpo_plays.pass, rpo_yards.pass, rpo_pass_att.pass, rpo_pass_yards.pass, rpo_rush_att.pass, rpo_rush_yards.pass, pa_pass_att.pass, pa_pass_yards.pass, intended_air_yards.pass, intended_air_yards_per_pass_attempt.pass, completed_air_yards.pass, completed_air_yards_per_completion.pass, completed_air_yards_per_pass_attempt.pass, pass_yards_after_catch.pass, pass_yards_after_catch_per_completion.pass, scrambles.pass, scramble_yards_per_attempt.pass, drop_pct.pass, bad_throw_pct.pass, on_tgt_pct.pass, pressure_pct.pass, ybc_att.rush, yac_att.rush, att.rush, yds.rush, td.rush, x1d.rush, ybc.rush, yac.rush, brk_tkl.rush, att_br.rush

fntsy_ fnts__ spcl__ pssng_p pacr rshng_ drft_p fntsP_ pssng_c gs pss_t. thrww. spks.p drps.p bd_th. tms_b. tms_hr. tms_ht. tms_p. bttd_. on_tgt_t. rp_pl. rp_yr. rp_pss_t. rp_pss_y. rp_rsh_t. rp_rsh_y. p_pss_t. p_pss_y. int__. i_____ cmp__. c____. c_____ ps___. p_____ scrmb. sc___. att.rs yds.rs td.rsh x1d.rs ybc.rs yc.rsh brk_t. att_b. drp_p. bd_t_. on_tgt_p. prss_. ybc_t. yc_tt. pckt_.

iter 1: 0.0058 0.0026 0.0000 0.1904 0.7606 0.3600 0.6075 0.4712 0.4347 0.0133 0.0041 0.2898 0.7738 0.1277 0.0481 0.0701 0.1768 0.1835 0.0368 0.3073 0.0232 0.2624 0.1595 0.0802 0.0771 0.2590 0.2650 0.1652 0.1026 0.0529 0.1108 0.0330 0.1044 0.1453 0.0250 0.1103 0.1096 0.1182 0.0410 0.0499 0.1919 0.0398 0.0523 0.1677 0.3414 0.3708 0.7752 0.4660 0.0858 0.7115 0.3391 0.5726 0.7565

iter 2: 0.0044 0.0043 0.0000 0.1953 0.7965 0.3608 0.6987 0.4835 0.4251 0.0132 0.0041 0.2780 0.7537 0.0713 0.0334 0.0667 0.1246 0.1340 0.0302 0.2671 0.0074 0.0537 0.0699 0.0686 0.0911 0.2005 0.2822 0.0598 0.0751 0.0118 0.0818 0.0215 0.0861 0.1618 0.0228 0.1139 0.0915 0.1117 0.0261 0.0322 0.1949 0.0380 0.0491 0.1173 0.2361 0.3435 0.7364 0.4414 0.0829 0.6882 0.3195 0.5835 0.7556

iter 3: 0.0043 0.0043 0.0000 0.1957 0.7922 0.3622 0.6797 0.4820 0.4231 0.0134 0.0042 0.2784 0.7391 0.0711 0.0339 0.0670 0.1238 0.1339 0.0312 0.2683 0.0088 0.0542 0.0653 0.0686 0.0929 0.2035 0.2616 0.0583 0.0733 0.0124 0.0761 0.0210 0.0879 0.1547 0.0229 0.1098 0.0907 0.1171 0.0269 0.0283 0.2024 0.0385 0.0484 0.1170 0.2397 0.3694 0.7323 0.4258 0.0819 0.6666 0.3198 0.5825 0.7500

iter 4: 0.0046 0.0044 0.0000 0.1953 0.7895 0.3560 0.6855 0.4787 0.4309 0.0133 0.0038 0.2719 0.7516 0.0693 0.0349 0.0668 0.1272 0.1346 0.0301 0.2715 0.0079 0.0533 0.0713 0.0679 0.0939 0.2013 0.2733 0.0563 0.0737 0.0116 0.0740 0.0205 0.0840 0.1686 0.0203 0.1168 0.0904 0.1136 0.0283 0.0306 0.2090 0.0403 0.0473 0.1098 0.2276 0.3507 0.7073 0.4352 0.0821 0.6643 0.3233 0.5815 0.7552

iter 5: 0.0044 0.0044 0.0000 0.1945 0.7824 0.3462 0.6896 0.4831 0.4114 0.0133 0.0040 0.2795 0.7413 0.0723 0.0343 0.0670 0.1261 0.1362 0.0303 0.2657 0.0077 0.0545 0.0708 0.0716 0.0912 0.2091 0.2729 0.0579 0.0775 0.0111 0.0716 0.0207 0.0868 0.1687 0.0221 0.1155 0.0873 0.1149 0.0277 0.0316 0.1914 0.0387 0.0476 0.1194 0.2377 0.3611 0.6914 0.4513 0.0806 0.6571 0.3277 0.5873 0.7595

iter 6: 0.0045 0.0043 0.0000 0.1962 0.7877 0.3524 0.6905 0.4813 0.4150 0.0135 0.0040 0.2727 0.7381 0.0710 0.0339 0.0666 0.1249 0.1333 0.0298 0.2646 0.0081 0.0519 0.0722 0.0686 0.0918 0.2154 0.2737 0.0571 0.0771 0.0117 0.0739 0.0204 0.0887 0.1626 0.0254 0.1088 0.0971 0.1164 0.0292 0.0304 0.2011 0.0393 0.0504 0.1164 0.2412 0.3561 0.7288 0.4336 0.0862 0.6589 0.3259 0.5868 0.7431

missRanger object. Extract imputed data via $data

- best iteration: 5

- best average OOB imputation error: 0.205817

Code

data_all_qb <- seasonalData_lag_qb_all_imp$data

data_all_qb$fantasyPointsMC_lag <- scale(data_all_qb$fantasyPoints_lag, scale = FALSE) # mean-centered

data_all_qb_matrix <- data_all_qb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

newData_qb <- data_all_qb %>%

filter(season == max(season, na.rm = TRUE)) %>%

select(-fantasyPoints_lag, -fantasyPointsMC_lag)

newData_qb_matrix <- data_all_qb_matrix[

data_all_qb_matrix[, "season"] == max(data_all_qb_matrix[, "season"], na.rm = TRUE), # keep only rows with the most recent season

, # all columns

drop = FALSE]

dropCol_qb <- which(colnames(newData_qb_matrix) %in% c("fantasyPoints_lag","fantasyPointsMC_lag"))

newData_qb_matrix <- newData_qb_matrix[, -dropCol_qb, drop = FALSE]

seasonalData_lag_qb_train_imp <- missRanger::missRanger(

seasonalData_lag_qb_train,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

Skip constant features for imputation: special_teams_tds

Variables to impute: fantasy_points, fantasy_points_ppr, special_teams_tds, passing_epa, pacr, rushing_epa, draft_pick, fantasyPoints_lag, passing_cpoe, gs, pass_attempts.pass, throwaways.pass, spikes.pass, drops.pass, bad_throws.pass, times_blitzed.pass, times_hurried.pass, times_hit.pass, times_pressured.pass, batted_balls.pass, on_tgt_throws.pass, rpo_plays.pass, rpo_yards.pass, rpo_pass_att.pass, rpo_pass_yards.pass, rpo_rush_att.pass, rpo_rush_yards.pass, pa_pass_att.pass, pa_pass_yards.pass, intended_air_yards.pass, intended_air_yards_per_pass_attempt.pass, completed_air_yards.pass, completed_air_yards_per_completion.pass, completed_air_yards_per_pass_attempt.pass, pass_yards_after_catch.pass, pass_yards_after_catch_per_completion.pass, scrambles.pass, scramble_yards_per_attempt.pass, att.rush, yds.rush, td.rush, x1d.rush, ybc.rush, yac.rush, brk_tkl.rush, att_br.rush, drop_pct.pass, bad_throw_pct.pass, on_tgt_pct.pass, pressure_pct.pass, ybc_att.rush, yac_att.rush, pocket_time.pass

Variables used to impute: gsis_id, season, games, gs, years_of_experience, age, ageCentered20, ageCentered20Quadratic, height, weight, rookie_season, draft_pick, fantasy_points, fantasy_points_ppr, fantasyPoints, fantasyPoints_lag, completions, attempts, passing_yards, passing_tds, passing_interceptions, sacks_suffered, sack_yards_lost, sack_fumbles, sack_fumbles_lost, passing_air_yards, passing_yards_after_catch, passing_first_downs, passing_epa, passing_cpoe, passing_2pt_conversions, pacr, carries, rushing_yards, rushing_tds, rushing_fumbles, rushing_fumbles_lost, rushing_first_downs, rushing_epa, rushing_2pt_conversions, pocket_time.pass, pass_attempts.pass, throwaways.pass, spikes.pass, drops.pass, bad_throws.pass, times_blitzed.pass, times_hurried.pass, times_hit.pass, times_pressured.pass, batted_balls.pass, on_tgt_throws.pass, rpo_plays.pass, rpo_yards.pass, rpo_pass_att.pass, rpo_pass_yards.pass, rpo_rush_att.pass, rpo_rush_yards.pass, pa_pass_att.pass, pa_pass_yards.pass, intended_air_yards.pass, intended_air_yards_per_pass_attempt.pass, completed_air_yards.pass, completed_air_yards_per_completion.pass, completed_air_yards_per_pass_attempt.pass, pass_yards_after_catch.pass, pass_yards_after_catch_per_completion.pass, scrambles.pass, scramble_yards_per_attempt.pass, drop_pct.pass, bad_throw_pct.pass, on_tgt_pct.pass, pressure_pct.pass, ybc_att.rush, yac_att.rush, att.rush, yds.rush, td.rush, x1d.rush, ybc.rush, yac.rush, brk_tkl.rush, att_br.rush

fntsy_ fnts__ spcl__ pssng_p pacr rshng_ drft_p fntsP_ pssng_c gs pss_t. thrww. spks.p drps.p bd_th. tms_b. tms_hr. tms_ht. tms_p. bttd_. on_tgt_t. rp_pl. rp_yr. rp_pss_t. rp_pss_y. rp_rsh_t. rp_rsh_y. p_pss_t. p_pss_y. int__. i_____ cmp__. c____. c_____ ps___. p_____ scrmb. sc___. att.rs yds.rs td.rsh x1d.rs ybc.rs yc.rsh brk_t. att_b. drp_p. bd_t_. on_tgt_p. prss_. ybc_t. yc_tt. pckt_.

iter 1: 0.0063 0.0030 0.0000 0.1884 0.5315 0.3607 0.6076 0.4595 0.4128 0.0133 0.0040 0.2897 0.7744 0.1320 0.0481 0.0704 0.1810 0.1809 0.0393 0.3086 0.0254 0.2643 0.1720 0.0785 0.0755 0.2482 0.2632 0.1759 0.1001 0.0521 0.1117 0.0327 0.1165 0.1335 0.0233 0.1162 0.1193 0.1233 0.0423 0.0505 0.2058 0.0430 0.0543 0.1706 0.3530 0.3645 0.7312 0.4605 0.0970 0.7020 0.3538 0.5905 0.7721

iter 2: 0.0049 0.0049 0.0000 0.1983 0.5655 0.3632 0.6840 0.4728 0.3973 0.0136 0.0040 0.2748 0.7489 0.0741 0.0348 0.0661 0.1269 0.1361 0.0311 0.2756 0.0079 0.0502 0.0700 0.0725 0.0927 0.2026 0.2808 0.0622 0.0760 0.0109 0.0776 0.0203 0.0803 0.1760 0.0219 0.1136 0.0905 0.1153 0.0295 0.0344 0.2034 0.0429 0.0484 0.1162 0.2378 0.3729 0.7683 0.4079 0.0916 0.6689 0.3539 0.6132 0.7710

iter 3: 0.0049 0.0048 0.0000 0.2010 0.5571 0.3533 0.7022 0.4691 0.4033 0.0136 0.0041 0.2758 0.7556 0.0774 0.0354 0.0685 0.1273 0.1375 0.0306 0.2700 0.0090 0.0476 0.0703 0.0718 0.0904 0.2130 0.2749 0.0598 0.0782 0.0108 0.0802 0.0194 0.0833 0.1637 0.0223 0.1120 0.0930 0.1178 0.0282 0.0325 0.2131 0.0418 0.0470 0.1196 0.2344 0.3464 0.7793 0.4142 0.0958 0.6861 0.3424 0.6006 0.7603

iter 4: 0.0051 0.0049 0.0000 0.1938 0.5664 0.3622 0.7074 0.4673 0.4133 0.0140 0.0041 0.2781 0.7628 0.0762 0.0366 0.0671 0.1247 0.1359 0.0319 0.2796 0.0084 0.0554 0.0740 0.0658 0.0906 0.2068 0.2757 0.0618 0.0785 0.0118 0.0771 0.0189 0.0832 0.1654 0.0249 0.1097 0.0911 0.1155 0.0277 0.0322 0.2033 0.0409 0.0484 0.1169 0.2505 0.3568 0.7225 0.4062 0.0915 0.6787 0.3497 0.5692 0.7516

iter 5: 0.0052 0.0050 0.0000 0.1933 0.5606 0.3490 0.6907 0.4633 0.3962 0.0137 0.0042 0.2803 0.7482 0.0729 0.0359 0.0681 0.1260 0.1367 0.0313 0.2760 0.0082 0.0518 0.0750 0.0695 0.0952 0.2059 0.2830 0.0617 0.0744 0.0126 0.0812 0.0200 0.0856 0.1440 0.0255 0.1188 0.0945 0.1144 0.0285 0.0296 0.2001 0.0421 0.0469 0.1172 0.2555 0.3732 0.7372 0.4198 0.0859 0.6769 0.3526 0.5873 0.7653

missRanger object. Extract imputed data via $data

- best iteration: 4

- best average OOB imputation error: 0.2036231

Code

data_train_qb <- seasonalData_lag_qb_train_imp$data

data_train_qb$fantasyPointsMC_lag <- scale(data_train_qb$fantasyPoints_lag, scale = FALSE) # mean-centered

data_train_qb_matrix <- data_train_qb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

seasonalData_lag_qb_test_imp <- predict(

object = seasonalData_lag_qb_train_imp,

newdata = seasonalData_lag_qb_test,

seed = 52242)

data_test_qb <- seasonalData_lag_qb_test_imp

data_test_qb_matrix <- data_test_qb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

Code

# RBs

seasonalData_lag_rb_all_imp <- missRanger::missRanger(

seasonalData_lag_rb_all,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_rb_all_imp

data_all_rb <- seasonalData_lag_rb_all_imp$data

data_all_rb$fantasyPointsMC_lag <- scale(data_all_rb$fantasyPoints_lag, scale = FALSE) # mean-centered

data_all_rb_matrix <- data_all_rb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

newData_rb <- data_all_rb %>%

filter(season == max(season, na.rm = TRUE)) %>%

select(-fantasyPoints_lag, -fantasyPointsMC_lag)

newData_rb_matrix <- data_all_rb_matrix[

data_all_rb_matrix[, "season"] == max(data_all_rb_matrix[, "season"], na.rm = TRUE), # keep only rows with the most recent season

, # all columns

drop = FALSE]

dropCol_rb <- which(colnames(newData_rb_matrix) %in% c("fantasyPoints_lag","fantasyPointsMC_lag"))

newData_rb_matrix <- newData_rb_matrix[, -dropCol_rb, drop = FALSE]

seasonalData_lag_rb_train_imp <- missRanger::missRanger(

seasonalData_lag_rb_train,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_rb_train_imp

data_train_rb <- seasonalData_lag_rb_train_imp$data

data_train_rb$fantasyPointsMC_lag <- scale(data_train_rb$fantasyPoints_lag, scale = FALSE) # mean-centered

data_train_rb_matrix <- data_train_rb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

seasonalData_lag_rb_test_imp <- predict(

object = seasonalData_lag_rb_train_imp,

newdata = seasonalData_lag_rb_test,

seed = 52242)

data_test_rb <- seasonalData_lag_rb_test_imp

data_test_rb_matrix <- data_test_rb %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

Code

# WRs

seasonalData_lag_wr_all_imp <- missRanger::missRanger(

seasonalData_lag_wr_all,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_wr_all_imp

data_all_wr <- seasonalData_lag_wr_all_imp$data

data_all_wr$fantasyPointsMC_lag <- scale(data_all_wr$fantasyPoints_lag, scale = FALSE) # mean-centered

data_all_wr_matrix <- data_all_wr %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

newData_wr <- data_all_wr %>%

filter(season == max(season, na.rm = TRUE)) %>%

select(-fantasyPoints_lag, -fantasyPointsMC_lag)

newData_wr_matrix <- data_all_wr_matrix[

data_all_wr_matrix[, "season"] == max(data_all_wr_matrix[, "season"], na.rm = TRUE), # keep only rows with the most recent season

, # all columns

drop = FALSE]

dropCol_wr <- which(colnames(newData_wr_matrix) %in% c("fantasyPoints_lag","fantasyPointsMC_lag"))

newData_wr_matrix <- newData_wr_matrix[, -dropCol_wr, drop = FALSE]

seasonalData_lag_wr_train_imp <- missRanger::missRanger(

seasonalData_lag_wr_train,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_wr_train_imp

data_train_wr <- seasonalData_lag_wr_train_imp$data

data_train_wr$fantasyPointsMC_lag <- scale(data_train_wr$fantasyPoints_lag, scale = FALSE) # mean-centered

data_train_wr_matrix <- data_train_wr %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

seasonalData_lag_wr_test_imp <- predict(

object = seasonalData_lag_wr_train_imp,

newdata = seasonalData_lag_wr_test,

seed = 52242)

data_test_wr <- seasonalData_lag_wr_test_imp

data_test_wr_matrix <- data_test_wr %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

Code

# TEs

seasonalData_lag_te_all_imp <- missRanger::missRanger(

seasonalData_lag_te_all,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_te_all_imp

data_all_te <- seasonalData_lag_te_all_imp$data

data_all_te$fantasyPointsMC_lag <- scale(data_all_te$fantasyPoints_lag, scale = FALSE) # mean-centered

data_all_te_matrix <- data_all_te %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

newData_te <- data_all_te %>%

filter(season == max(season, na.rm = TRUE)) %>%

select(-fantasyPoints_lag, -fantasyPointsMC_lag)

newData_te_matrix <- data_all_te_matrix[

data_all_te_matrix[, "season"] == max(data_all_te_matrix[, "season"], na.rm = TRUE), # keep only rows with the most recent season

, # all columns

drop = FALSE]

dropCol_te <- which(colnames(newData_te_matrix) %in% c("fantasyPoints_lag","fantasyPointsMC_lag"))

newData_te_matrix <- newData_te_matrix[, -dropCol_te, drop = FALSE]

seasonalData_lag_te_train_imp <- missRanger::missRanger(

seasonalData_lag_te_train,

pmm.k = 5,

verbose = 2,

seed = 52242,

keep_forests = TRUE)

seasonalData_lag_te_train_imp

data_train_te <- seasonalData_lag_te_train_imp$data

data_train_te$fantasyPointsMC_lag <- scale(data_train_te$fantasyPoints_lag, scale = FALSE) # mean-centered

data_train_te_matrix <- data_train_te %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

seasonalData_lag_te_test_imp <- predict(

object = seasonalData_lag_te_train_imp,

newdata = seasonalData_lag_te_test,

seed = 52242)

data_test_te <- seasonalData_lag_te_test_imp

data_test_te_matrix <- data_test_te %>%

mutate(across(where(is.factor), ~ as.numeric(as.integer(.)))) %>%

as.matrix()

19.5 Identify Cores for Parallel Processing

We use the future package (Bengtsson, 2025) for parallel (faster) processing.
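As a minimal sketch of this step, the code below counts the available cores and registers a parallel plan. The names `num_cores` and `num_workers`, and the choice to leave one core free, are illustrative assumptions and not necessarily the setup used for the analyses in this chapter.

```r
# Count the logical cores on this machine (base R's parallel package);
# detectCores() can return NA on some platforms, so fall back to 1
num_cores <- parallel::detectCores(logical = TRUE)
if (is.na(num_cores)) num_cores <- 1L

# Hypothetical choice: leave one core free for the operating system
num_workers <- max(1L, num_cores - 1L)

# Register a parallel backend with the future package, if it is installed
if (requireNamespace("future", quietly = TRUE)) {
  future::plan(future::multisession, workers = num_workers)
  # ... run the parallel work here ...
  future::plan(future::sequential) # restore sequential processing afterwards
}

num_workers
```

Leaving a core unused is a common courtesy to other processes on the machine; on a dedicated machine, using every core may be reasonable.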

19.6 Set up the Cross-Validation Folds

In the examples below, we predict the future fantasy points of Quarterbacks. However, the examples could be applied to any of the positions. There are various approaches to cross-validation. In the examples below, we use k-fold cross-validation. However, we also provide the code to apply leave-one-out (LOO) cross-validation. k-fold and LOO cross-validation are both forms of internal cross-validation.

19.6.1 k-Fold Cross-Validation

k-fold cross-validation partitions the data into k folds (subsets). In each of the k iterations, the model is trained on \(k - 1\) folds and is evaluated on the remaining fold. For example, in a 10-fold cross-validation (i.e., \(k = 10\)), as used below, the model is trained 10 times, each time leaving out a different 10% of the data for validation. k-fold cross-validation is widely used because it tends to yield stable estimates of model performance, by balancing bias and variance. It is also computationally efficient, requiring only k model fits to evaluate model performance.

We set up the k folds using the rsample::group_vfold_cv() function of the rsample package (Frick, Chow, et al., 2025).
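The sketch below illustrates grouped k-fold cross-validation on simulated data. The toy data frame, the `player_id` grouping variable, and the use of 5 folds are assumptions made for illustration; they are not the chapter's actual fold setup.

```r
set.seed(52242)

# Toy data (hypothetical): 20 players, each observed in 3 seasons
toy_data <- data.frame(
  player_id = rep(sprintf("P%02d", 1:20), each = 3),
  season = rep(2021:2023, times = 20),
  fantasyPoints = rnorm(60, mean = 200, sd = 50)
)

# 5 folds; all rows from a given player are kept in the same fold
toy_folds <- rsample::group_vfold_cv(
  toy_data,
  group = player_id,
  v = 5)

# Inspect the first split: rows used for training versus validation
first_split <- toy_folds$splits[[1]]
train_ids <- unique(rsample::analysis(first_split)$player_id)
valid_ids <- unique(rsample::assessment(first_split)$player_id)

# Number of players appearing in both sets
length(intersect(train_ids, valid_ids))
```

Grouping matters when the same player contributes multiple seasons: if a player's rows were spread across folds, the model could be validated on a player it had already seen, which would tend to make performance estimates too optimistic.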

19.6.2 Leave-One-Out (LOO) Cross-Validation

Leave-one-out (LOO) cross-validation partitions the data into n folds, where n is the sample size. In each of the n iterations, the model is trained on \(n - 1\) observations and is evaluated on the one left out. For example, in a LOO cross-validation with 100 players, the model is trained 100 times, each time leaving out a different player for validation. LOO cross-validation is a special case of k-fold cross-validation where \(k = n\). LOO cross-validation is especially useful when the dataset is small—too small to form reliable training sets in k-fold cross-validation (e.g., with \(k = 5\) or \(k = 10\), which divide the sample into 5 or 10 folds, respectively). However, LOO tends to be less computationally efficient because it requires more model fits than k-fold cross-validation. LOO tends to have low bias, producing performance estimates closer to those obtained when fitting the model to the full dataset, because each model is trained on nearly all the data. However, LOO also tends to have high variance in its error estimates, because each validation fold contains only a single observation, making those estimates more sensitive to individual data points.

We set up the LOO folds using the rsample::loo_cv() function of the rsample package (Frick, Chow, et al., 2025).
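To make the mechanics concrete, here is a hand-rolled sketch of LOO cross-validation in base R with a simple linear model on simulated data. It is not the rsample implementation; the data and object names are hypothetical.

```r
set.seed(52242)

# Simulated data (hypothetical): 30 players with one predictor and one outcome
n <- 30
toy_data <- data.frame(x = rnorm(n))
toy_data$y <- 2 * toy_data$x + rnorm(n)

# Leave-one-out: fit on n - 1 rows, then predict the single held-out row
loo_pred <- numeric(n)
for (i in seq_len(n)) {
  fit_i <- lm(y ~ x, data = toy_data[-i, ])
  loo_pred[i] <- predict(fit_i, newdata = toy_data[i, , drop = FALSE])
}

# Aggregate the n held-out errors into a single performance estimate
loo_rmse <- sqrt(mean((toy_data$y - loo_pred)^2))
loo_rmse
```

The loop fits the model n times, which is why LOO becomes expensive for larger samples or slower models.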

19.7 Fitting the Traditional Linear Regression Models

We describe linear regression in Chapter 11.

19.7.1 Regression with One Predictor

Below, we fit a linear regression model with one predictor and evaluate it with cross-validation. We also evaluate its accuracy on the hold-out (test) data. We fit and evaluate each model using the tidymodels ecosystem of packages (Kuhn & Wickham, 2020, 2025). Modeling using tidymodels is described in Kuhn & Silge (2023): https://www.tmwr.org. We specify our model formula using the recipes::recipe() function of the recipes package (Kuhn, Wickham, et al., 2025). We define the model using the parsnip::linear_reg(), parsnip::set_engine(), and parsnip::set_mode() functions of the parsnip package (Kuhn & Vaughan, 2025). We specify the workflow using the workflows::workflow(), workflows::add_recipe(), and workflows::add_model() functions of the workflows package (Vaughan & Couch, 2025). We fit the cross-validation model using the tune::fit_resamples() function of the tune package (Kuhn, 2025). We specify the accuracy metrics to evaluate using the yardstick::metric_set() function of the yardstick package (Kuhn, Vaughan, et al., 2025). We fit the final model using the workflows::fit() function of the workflows package (Vaughan & Couch, 2025). We evaluate the accuracy of the model’s predictions on the test data using the petersenlab::accuracyOverall() function of the petersenlab package (Petersen, 2025a).

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ fantasyPoints,

data = data_train_qb)

# Define Model

lm_spec <- parsnip::linear_reg() %>%

parsnip::set_engine("lm") %>%

parsnip::set_mode("regression")

# Workflow

lm_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(lm_spec)

# Fit Model with Cross-Validation

cv_results <- tune::fit_resamples(

lm_wf,

resamples = folds,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_resamples(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

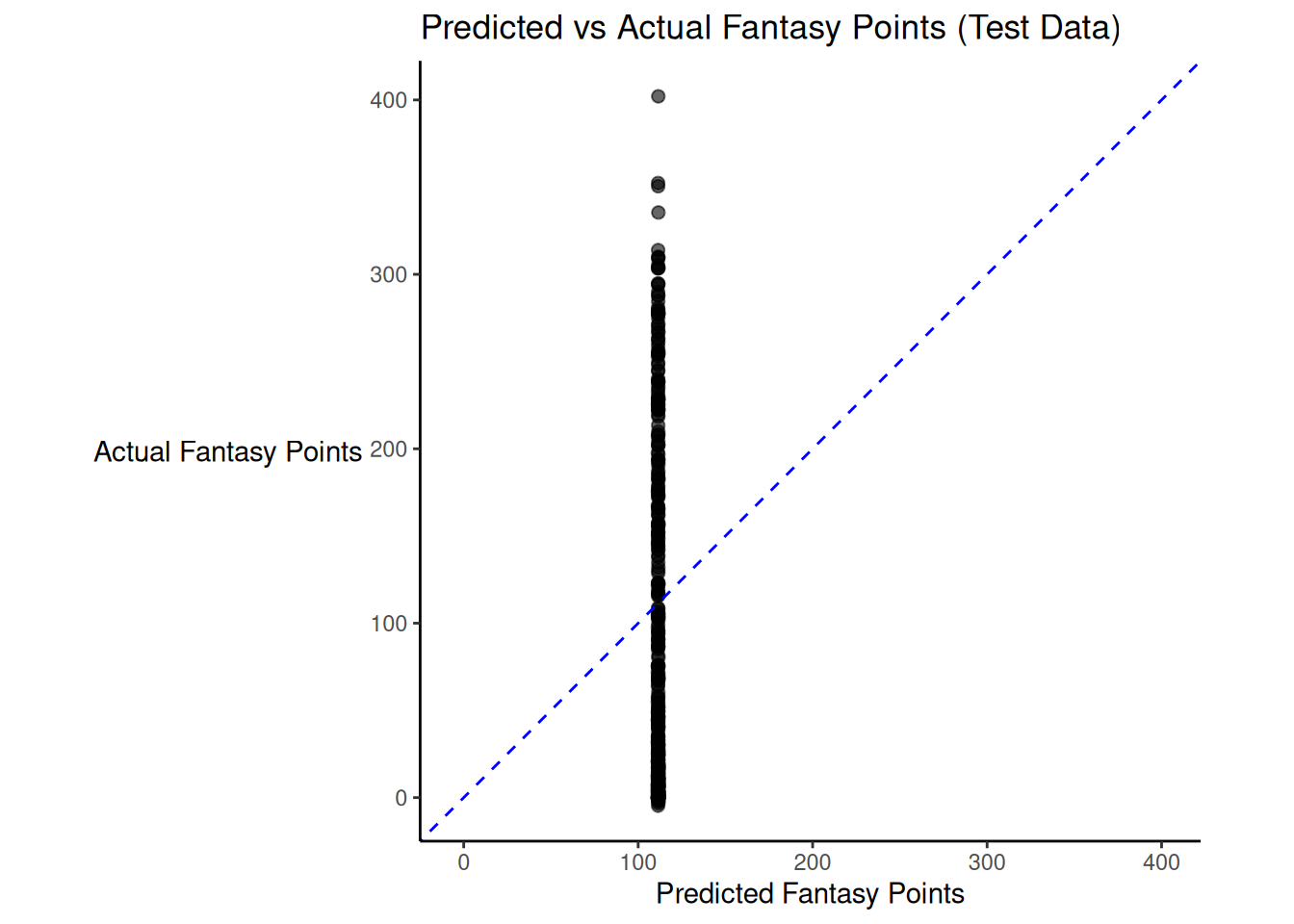

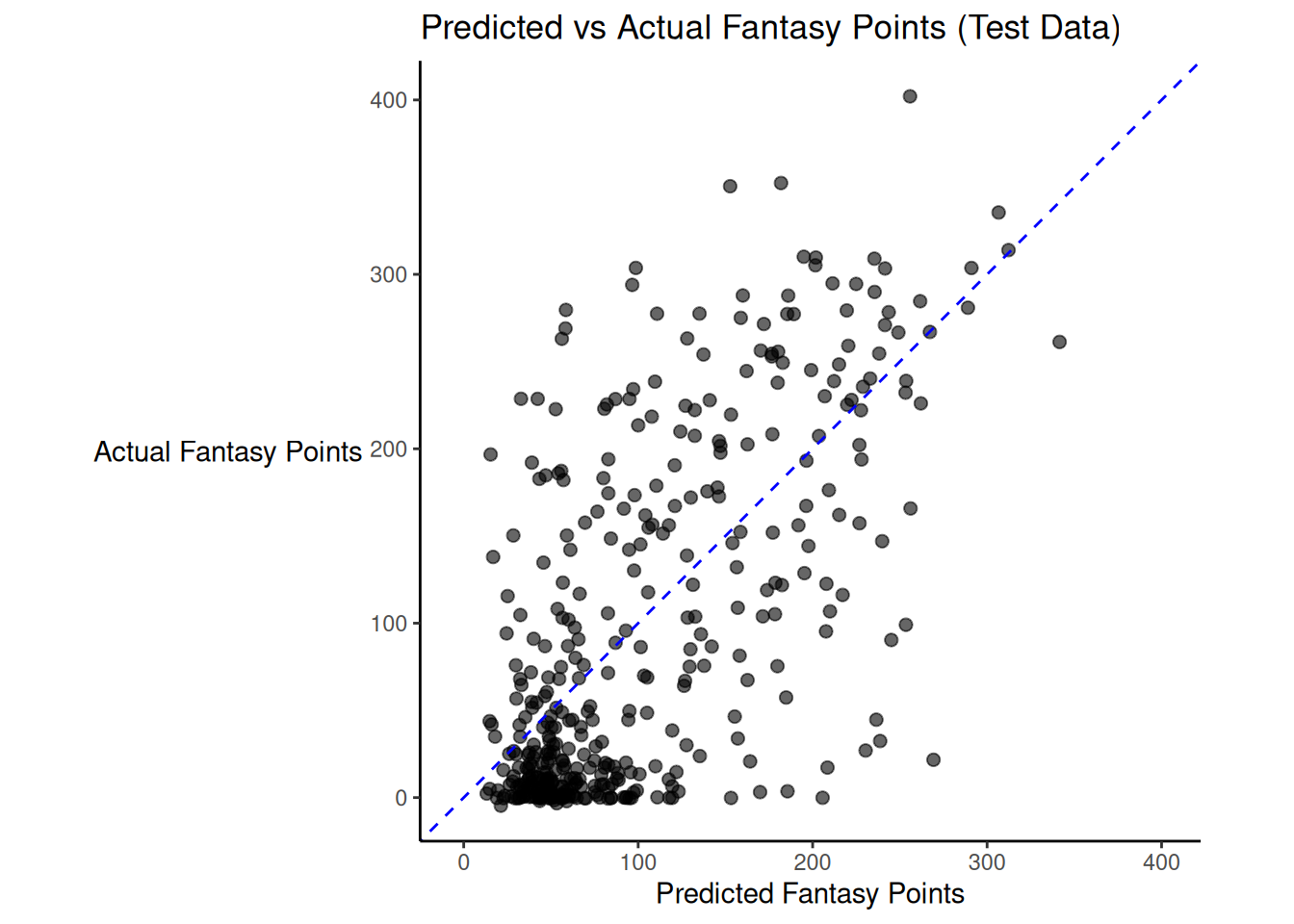

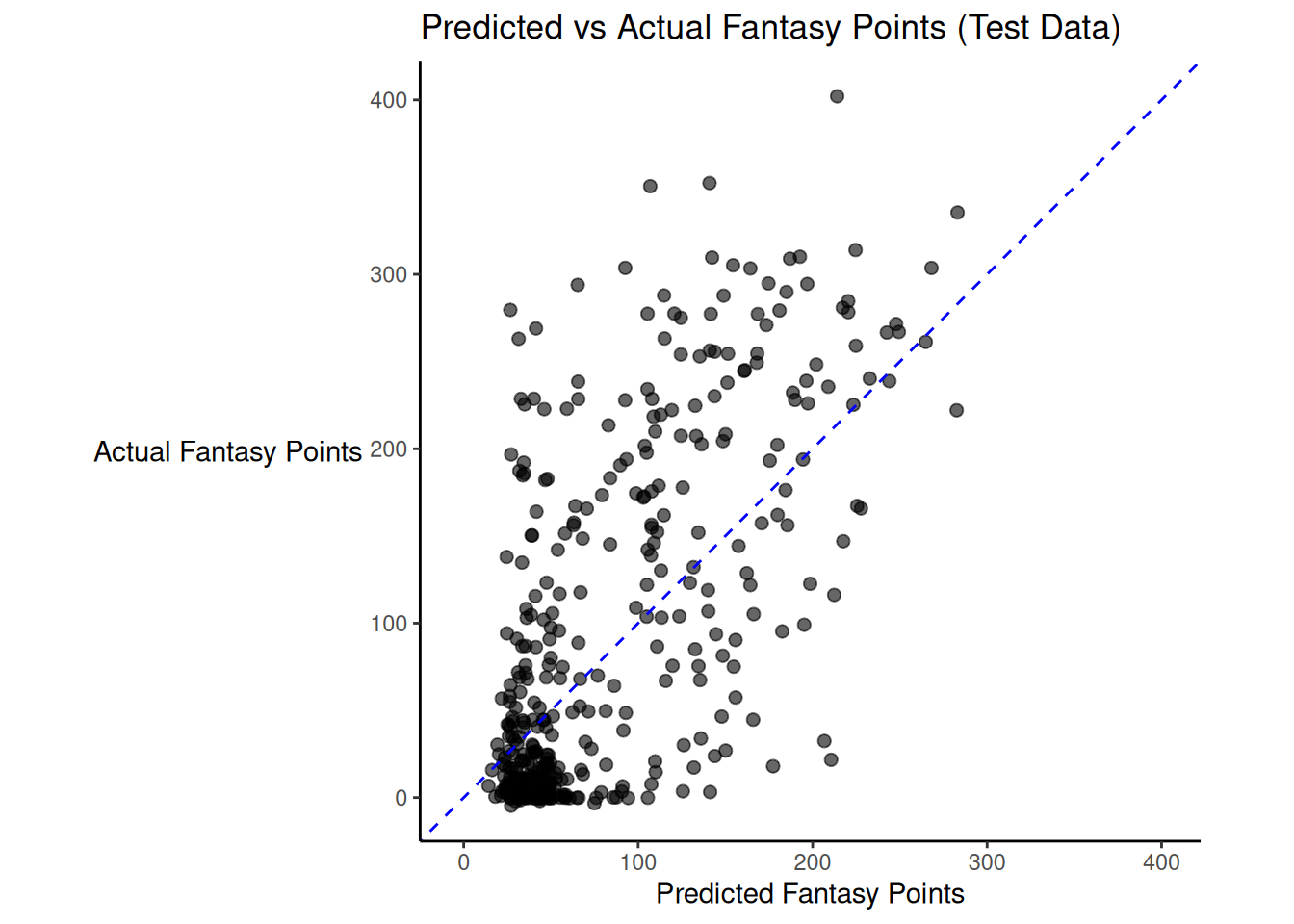

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.46; the \(R^2\) for the same model applied to the test data was 0.42.
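The comparison of training and test \(R^2\) can be illustrated with a small base R sketch on simulated data. The data-generating values, the 80/20 split, and the helper `rsq()` are assumptions for illustration; they do not reproduce the values reported above.

```r
set.seed(52242)

# Simulated data (hypothetical): next-season points depend partly on current-season points
n <- 400
toy_data <- data.frame(fantasyPoints = rnorm(n, mean = 200, sd = 60))
toy_data$fantasyPoints_lag <- 60 + 0.7 * toy_data$fantasyPoints + rnorm(n, sd = 45)

# Random 80/20 split into training and test rows
train_rows <- sample(seq_len(n), size = 0.8 * n)
toy_train <- toy_data[train_rows, ]
toy_test <- toy_data[-train_rows, ]

# Fit the model on the training data only
toy_fit <- lm(fantasyPoints_lag ~ fantasyPoints, data = toy_train)

# R-squared as the squared correlation between actual and predicted values
rsq <- function(actual, predicted) cor(actual, predicted)^2

rsq_train <- rsq(toy_train$fantasyPoints_lag, predict(toy_fit, newdata = toy_train))
rsq_test <- rsq(toy_test$fantasyPoints_lag, predict(toy_fit, newdata = toy_test))

c(train = rsq_train, test = rsq_test)
```

With a single random split, the test value can land slightly above or below the training value by chance; shrinkage refers to the typical drop in accuracy when a model is applied to data it was not fit to.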

Figure 19.1 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

19.7.2 Regression with Multiple Predictors

Below, we fit a linear regression model with multiple predictors and evaluate it with cross-validation. We also evaluate its accuracy on the hold-out (test) data.

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ ., # use all predictors

data = data_train_qb %>% select(-gsis_id, -fantasyPointsMC_lag))

# Define Model

lm_spec <- parsnip::linear_reg() %>%

parsnip::set_engine("lm") %>%

parsnip::set_mode("regression")

# Workflow

lm_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(lm_spec)

# Fit Model with Cross-Validation

cv_results <- tune::fit_resamples(

lm_wf,

resamples = folds,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_resamples(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

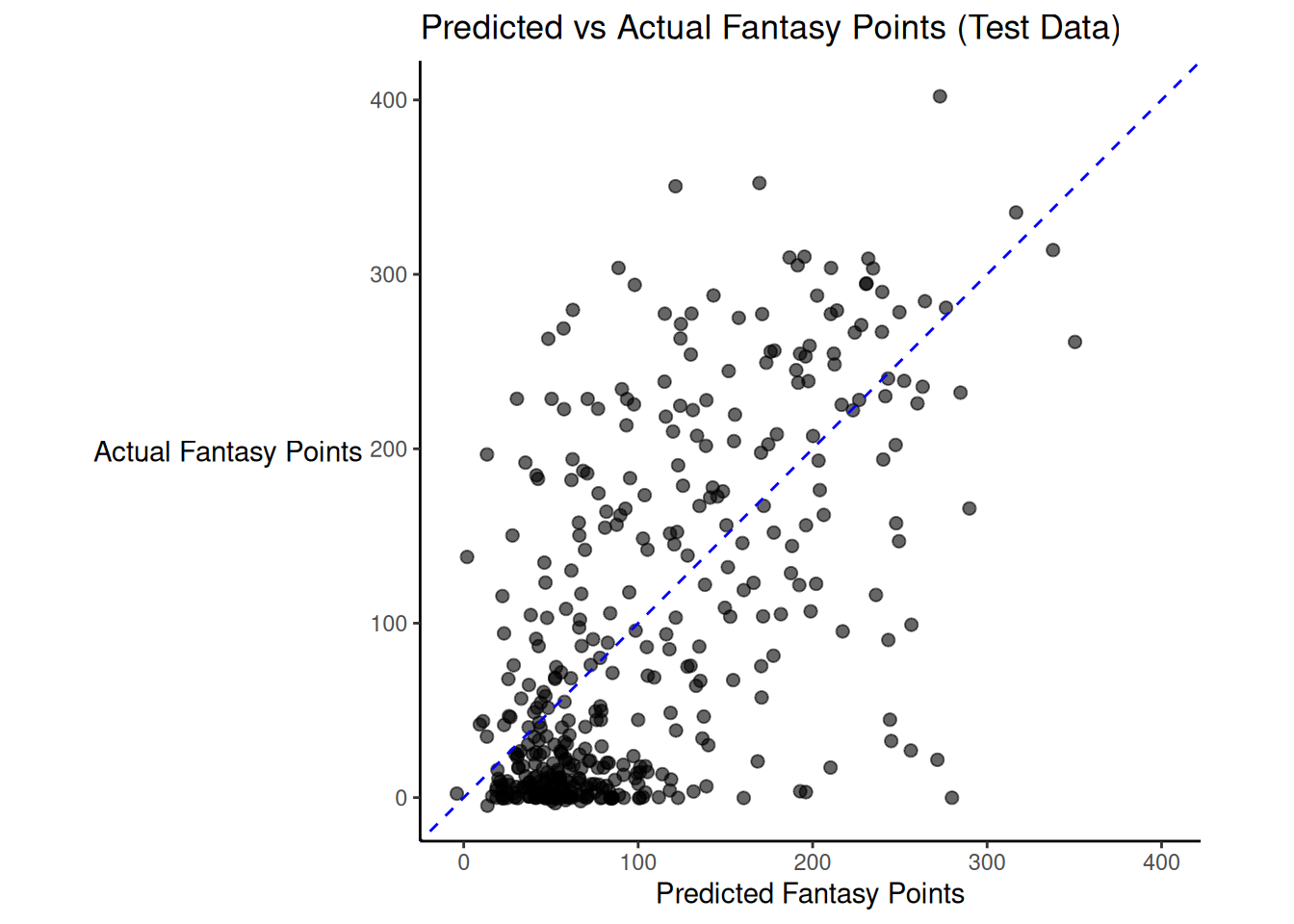

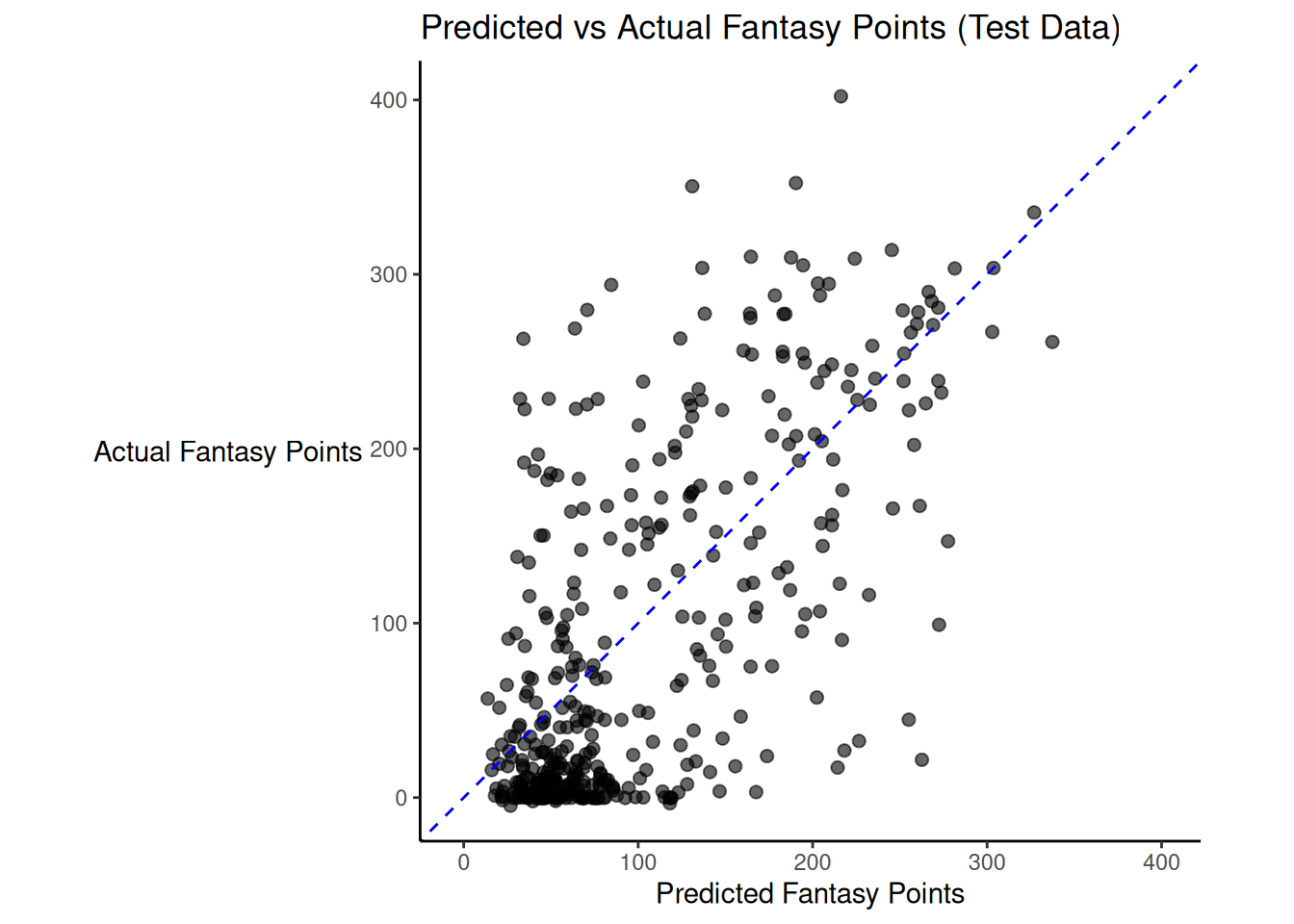

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.45; the \(R^2\) for the same model applied to the test data was 0.40.

Figure 19.2 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

19.8 Fitting the Machine Learning Models

19.8.1 Least Absolute Shrinkage and Selection Operator (LASSO)

Below, we fit a LASSO model. We evaluate it and tune its penalty parameter with cross-validation. The penalty parameter in a LASSO model controls the strength of regularization applied to the model coefficients. Smaller penalty values result in less regularization, allowing the model to retain more predictors with larger (nonzero) coefficients. This typically reduces bias but increases variance of the model’s predictions, as the model may overfit to the training data by including irrelevant or weak predictors. Larger penalty values apply stronger regularization, shrinking more coefficients exactly to zero. This encourages a sparser model that may increase bias (by excluding useful predictors) but reduces variance and improves generalizability by simplifying the model and reducing overfitting.
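The way a larger penalty pushes more coefficients exactly to zero can be seen in a simplified setting. When predictors are orthonormal (a simplifying assumption made only for this sketch), the LASSO estimate of each coefficient is its least-squares estimate pulled toward zero by the penalty and set to zero if it does not exceed the penalty ("soft-thresholding"). The coefficient values and penalties below are hypothetical, and the penalty scale is not directly comparable to the one used by glmnet.

```r
# Soft-thresholding: the LASSO solution for one coefficient when predictors are
# orthonormal (a simplifying assumption made for this sketch)
soft_threshold <- function(beta_ols, penalty) {
  sign(beta_ols) * pmax(abs(beta_ols) - penalty, 0)
}

# Hypothetical least-squares coefficients: two strong and three weak predictors
beta_ols <- c(3.0, -2.0, 0.4, -0.2, 0.05)

# Apply a range of penalties, from none to strong
penalties <- c(0, 0.1, 0.5, 2.5)
lasso_path <- sapply(penalties, function(p) soft_threshold(beta_ols, p))
colnames(lasso_path) <- paste0("penalty_", penalties)
lasso_path

# Number of coefficients set exactly to zero at each penalty
colSums(lasso_path == 0)
```

The weak predictors drop out first, and the strong predictors remain in the model (with smaller coefficients) until the penalty becomes large.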

After tuning the model, we evaluate its accuracy on the hold-out (test) data.

The LASSO models were fit using the glmnet package (Friedman et al., 2010, 2025; Tay et al., 2023).

For the machine learning models, we perform the parameter tuning using the tune::tune(), tune::tune_grid(), tune::select_best(), and tune::finalize_workflow() functions of the tune package (Kuhn, 2025). We specify the grid of possible parameter values using the dials::grid_regular() function of the dials package (Kuhn & Frick, 2025).
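Because dials::penalty() is specified on the log10 scale, a range of -4 to -1 (as used in the tuning grids in this chapter) should correspond to penalties between 0.0001 and 0.1. The base R sketch below reconstructs what a regular grid of 20 evenly spaced values on that scale would look like; it is an approximation for illustration, and the object names are hypothetical.

```r
# Evenly spaced values on the log10 scale, back-transformed to the penalty scale
log10_penalty <- seq(from = -4, to = -1, length.out = 20)
penalty_values <- 10^log10_penalty

# Smallest and largest penalty, and the number of candidate values
range(penalty_values)
length(penalty_values)
```

Spacing the candidates on a log scale places many values near zero, where small changes in the penalty tend to matter most.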

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ ., # use all predictors

data = data_train_qb %>% select(-gsis_id, -fantasyPointsMC_lag))

# Define Model

lasso_spec <-

parsnip::linear_reg(

penalty = tune::tune(),

mixture = 1) %>%

parsnip::set_engine("glmnet")

# Workflow

lasso_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(lasso_spec)

# Define grid of penalties to try (log scale is typical)

penalty_grid <- dials::grid_regular(

dials::penalty(range = c(-4, -1)),

levels = 20)

# Tune the Penalty Parameter

cv_results <- tune::tune_grid(

lasso_wf,

resamples = folds,

grid = penalty_grid,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_grid(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

Code

# Select Best Penalty (lowest mean absolute error)

best_penalty <- tune::select_best(cv_results, metric = "mae")

# Finalize Workflow with Best Penalty

final_wf <- tune::finalize_workflow(

lasso_wf,

best_penalty)

# Fit Final Model on Training Data

final_model <- workflows::fit(

final_wf,

data = data_train_qb)

# View Coefficients

final_model %>%

workflows::extract_fit_parsnip() %>%

broom::tidy()

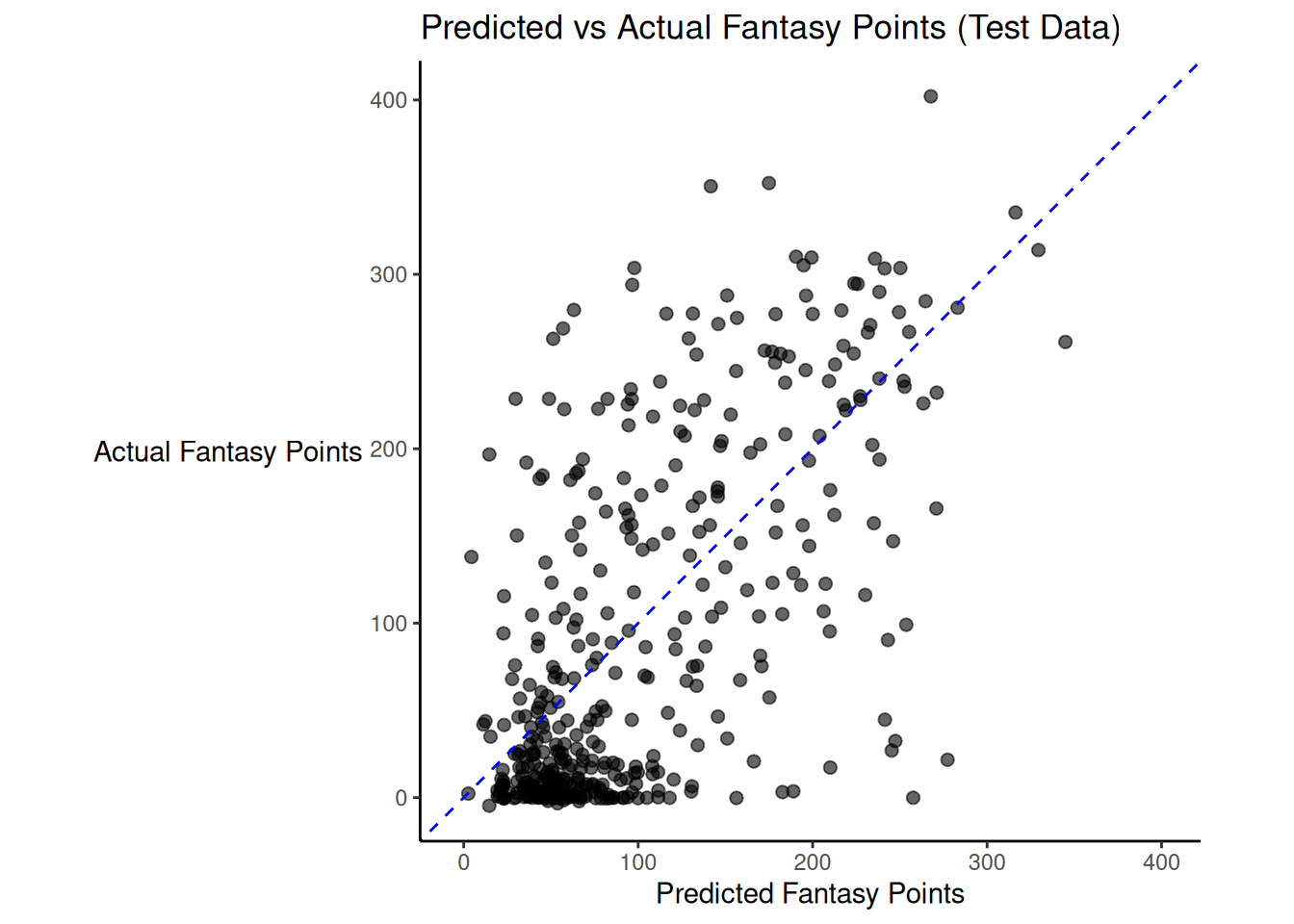

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.46; the \(R^2\) for the same model applied to the test data was 0.41.

Figure 19.3 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

19.8.2 Ridge Regression

Below, we fit a ridge regression model. We evaluate it and tune its penalty parameter with cross-validation. The penalty parameter in a ridge regression model controls the amount of regularization applied to the model’s coefficients. Smaller penalty values allow coefficients to remain large, resulting in a model that closely fits the training data. This may reduce bias but increases the risk of overfitting, especially in the presence of multicollinearity or many weak predictors. Larger penalty values shrink coefficients toward zero (though not exactly to zero), which reduces model complexity. This typically increases bias slightly but reduces variance of the model’s predictions, making the model more stable and better suited for generalization to new data.
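The contrast with LASSO (shrinkage toward zero without reaching exactly zero) can be sketched with the closed-form ridge estimate on simulated data. The data, the true coefficients, and the penalty values are hypothetical, and the penalty scale here is not directly comparable to the one used by glmnet.

```r
set.seed(52242)

# Simulated data (hypothetical): 100 observations, 4 standardized predictors
n <- 100
X <- scale(matrix(rnorm(n * 4), nrow = n, ncol = 4))
y <- as.numeric(X %*% c(3, -2, 0.5, 0) + rnorm(n))
y <- y - mean(y) # center the outcome so no intercept is needed

# Closed-form ridge estimate: (X'X + penalty * I)^(-1) X'y
ridge_coef <- function(X, y, penalty) {
  p <- ncol(X)
  as.numeric(solve(crossprod(X) + penalty * diag(p), crossprod(X, y)))
}

# Coefficients across a range of penalties (penalty = 0 is ordinary least squares)
penalties <- c(0, 10, 100, 1000)
ridge_path <- sapply(penalties, function(p) ridge_coef(X, y, p))
colnames(ridge_path) <- paste0("penalty_", penalties)
round(ridge_path, 3)

# Overall size of the coefficient vector at each penalty
coef_norms <- sqrt(colSums(ridge_path^2))
coef_norms
```

As the penalty grows, every coefficient moves toward zero and the overall size of the coefficient vector shrinks, but no predictor is removed from the model.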

After tuning the model, we also evaluate its accuracy on the hold-out (test) data.

The ridge regression models were fit using the glmnet package (Friedman et al., 2010, 2025; Tay et al., 2023).

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ ., # use all predictors

data = data_train_qb %>% select(-gsis_id, -fantasyPointsMC_lag))

# Define Model

ridge_spec <-

parsnip::linear_reg(

penalty = tune::tune(),

mixture = 0) %>%

parsnip::set_engine("glmnet")

# Workflow

ridge_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(ridge_spec)

# Define grid of penalties to try (log scale is typical)

penalty_grid <- dials::grid_regular(

dials::penalty(range = c(-4, -1)),

levels = 20)

# Tune the Penalty Parameter

cv_results <- tune::tune_grid(

ridge_wf,

resamples = folds,

grid = penalty_grid,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_grid(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

Code

# Select Best Penalty (lowest mean absolute error)

best_penalty <- tune::select_best(cv_results, metric = "mae")

# Finalize Workflow with Best Penalty

final_wf <- tune::finalize_workflow(

ridge_wf,

best_penalty)

# Fit Final Model on Training Data

final_model <- workflows::fit(

final_wf,

data = data_train_qb)

# View Coefficients

final_model %>%

workflows::extract_fit_parsnip() %>%

broom::tidy()

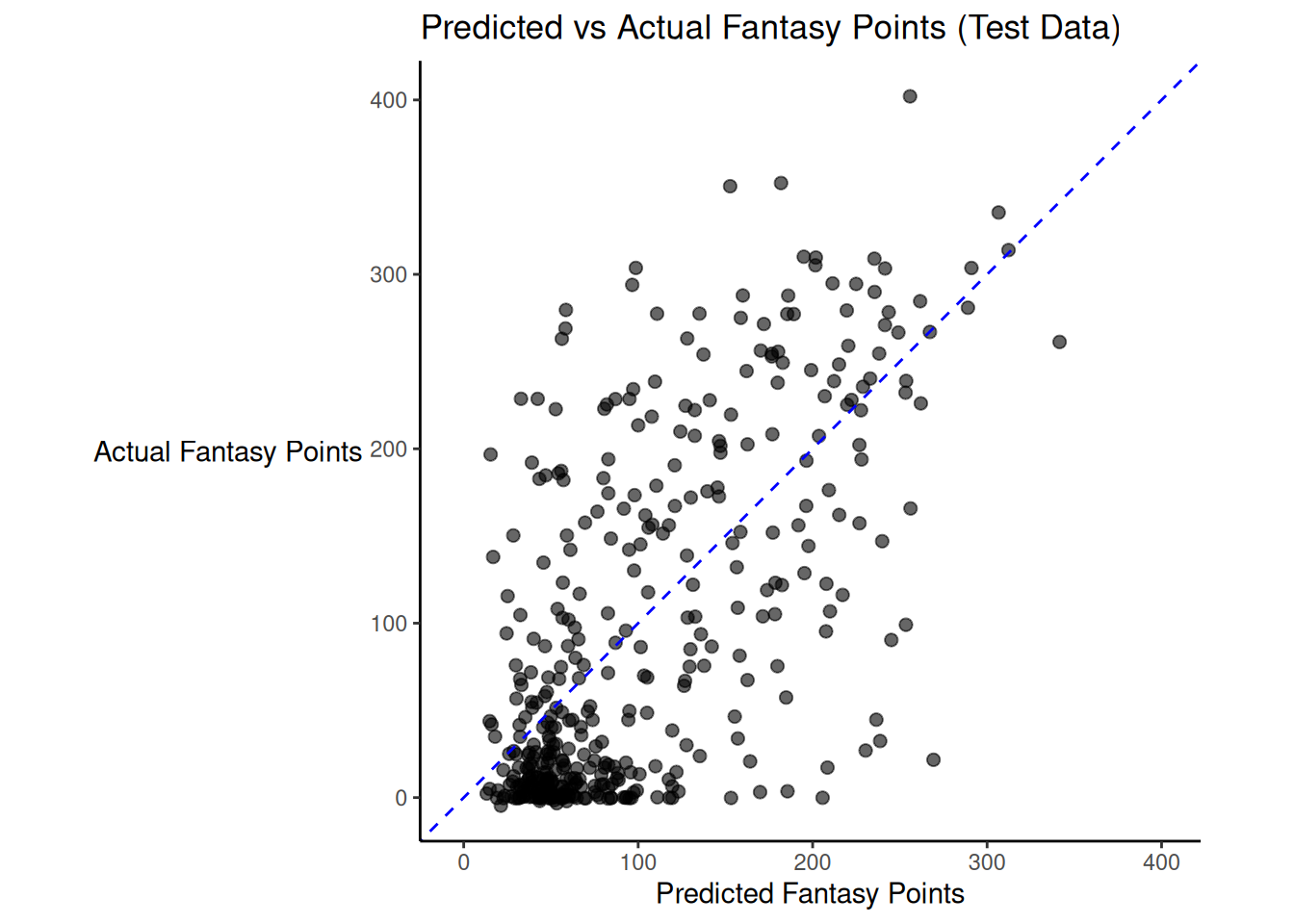

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.47; the \(R^2\) for the same model applied to the test data was 0.42.

Figure 19.4 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

19.8.3 Elastic Net

Below, we fit an elastic net model. We evaluate it and tune its penalty and mixture parameters with cross-validation.

The penalty parameter controls the overall strength of regularization applied to the model’s coefficients. Smaller penalty values allow coefficients to remain large, which can reduce bias but increase variance of the model’s predictions and can increase the risk of overfitting. Larger penalty values shrink coefficients more aggressively, leading to simpler models with potentially higher bias but lower variance of predictions. This regularization helps prevent overfitting, especially when the model includes many predictors or multicollinearity.

The mixture parameter controls the balance between LASSO and ridge regularization. It ranges from 0 to 1: A value of 0 applies pure ridge regression, which shrinks all coefficients but keeps them in the model. A value of 1 applies pure LASSO, which can shrink some coefficients exactly to zero, effectively performing variable selection. Values between 0 and 1 apply a combination: ridge-like smoothing and LASSO-like sparsity. Smaller mixture values favor shrinkage without variable elimination, whereas larger values favor sparser solutions by excluding weak predictors.
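The roles of the two parameters can be made concrete by writing out the elastic net penalty term as glmnet parameterizes it: the penalty multiplied by a weighted blend of the ridge term (half the sum of squared coefficients) and the LASSO term (the sum of absolute coefficients). The helper `enet_penalty()` and the coefficient values are hypothetical and only illustrate the formula.

```r
# Elastic net penalty term as parameterized by glmnet:
# penalty * ((1 - mixture) / 2 * sum(beta^2) + mixture * sum(abs(beta)))
enet_penalty <- function(beta, penalty, mixture) {
  ridge_part <- (1 - mixture) / 2 * sum(beta^2)
  lasso_part <- mixture * sum(abs(beta))
  penalty * (ridge_part + lasso_part)
}

beta <- c(3, -2, 0.5) # hypothetical coefficients

enet_penalty(beta, penalty = 0.1, mixture = 0)   # ridge term only
enet_penalty(beta, penalty = 0.1, mixture = 1)   # LASSO term only
enet_penalty(beta, penalty = 0.1, mixture = 0.5) # equal blend of the two
```

Tuning both parameters therefore means searching over how strongly to penalize (penalty) and which kind of penalty to emphasize (mixture).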

After tuning the model, we also evaluate its accuracy on the hold-out (test) data.

The elastic net models were fit using the glmnet package (Friedman et al., 2010, 2025; Tay et al., 2023).

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ ., # use all predictors

data = data_train_qb %>% select(-gsis_id, -fantasyPointsMC_lag))

# Define Model

enet_spec <-

parsnip::linear_reg(

penalty = tune::tune(),

mixture = tune::tune()) %>%

parsnip::set_engine("glmnet")

# Workflow

enet_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(enet_spec)

# Define a regular grid for both penalty and mixture

grid_enet <- dials::grid_regular(

dials::penalty(range = c(-4, -1)),

dials::mixture(range = c(0, 1)),

levels = c(20, 5) # 20 penalty values × 5 mixture values

)

# Tune the Grid

cv_results <- tune::tune_grid(

enet_wf,

resamples = folds,

grid = grid_enet,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_grid(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

Code

# Select Best Penalty and Mixture (lowest mean absolute error)

best_penalty <- tune::select_best(cv_results, metric = "mae")

# Finalize Workflow with Best Penalty and Mixture

final_wf <- tune::finalize_workflow(

enet_wf,

best_penalty)

# Fit Final Model on Training Data

final_model <- workflows::fit(

final_wf,

data = data_train_qb)

# View Coefficients

final_model %>%

workflows::extract_fit_parsnip() %>%

broom::tidy()

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.47; the \(R^2\) for the same model applied to the test data was 0.42.

Figure 19.5 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

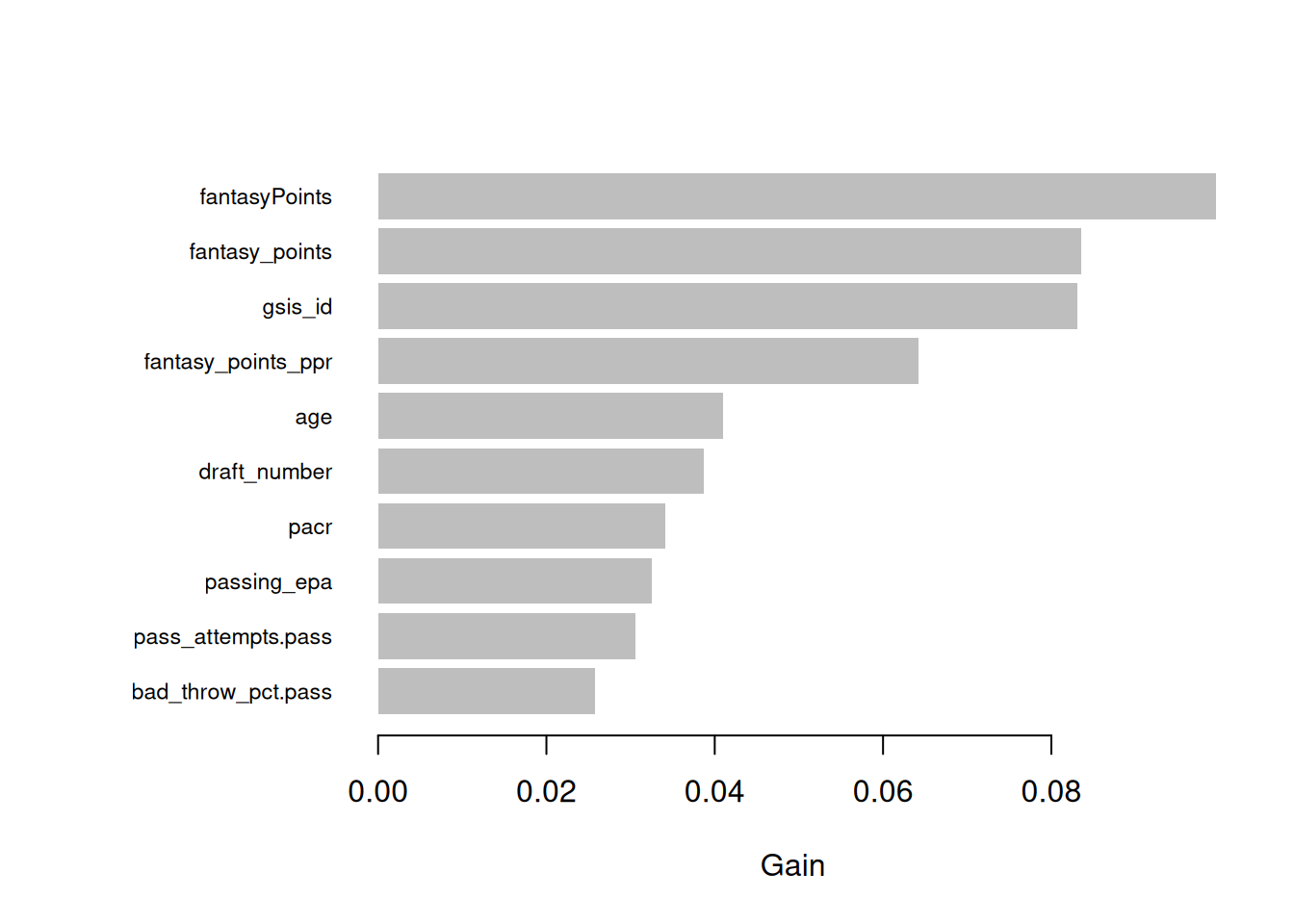

19.8.4 Random Forest Machine Learning

A random forest model combines the results of many decision trees. A decision tree splits the data into groups based on the values of the predictors, and then predicts the outcome within each group. A given decision tree can be noisy and prone to overfitting, so random forests build many trees, where each tree is trained on a random subset of the data and considers a random subset of predictors at each split. The random forest then averages the predictions of all the decision trees, to improve overall predictive accuracy.
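The description above can be sketched in base R. This is a deliberately simplified illustration, not how the ranger package works internally: each "tree" here makes only a single split (a so-called stump), the data are simulated, and every function name (`best_split`, `fit_stump`, `predict_stump`) is hypothetical. It shows the three ingredients named above: a bootstrap sample of rows per tree, a random subset of predictors per split, and averaging across trees.

```r
set.seed(52242)

# Simulated data (hypothetical): the outcome depends on x1 and x2; x3 is noise
n <- 300
X <- data.frame(x1 = runif(n), x2 = runif(n), x3 = runif(n))
y <- 10 * (X$x1 > 0.5) + 5 * (X$x2 > 0.5) + rnorm(n)

# Find the best single split on one predictor (minimizes the sum of squared errors)
best_split <- function(x, y) {
  xs <- sort(unique(x))
  cuts <- (head(xs, -1) + tail(xs, -1)) / 2 # midpoints between adjacent values
  sse <- vapply(cuts, function(cut) {
    left <- y[x <= cut]
    right <- y[x > cut]
    sum((left - mean(left))^2) + sum((right - mean(right))^2)
  }, numeric(1))
  list(cut = cuts[which.min(sse)], sse = min(sse))
}

# Fit a one-split tree that considers a random subset of `mtry` predictors
fit_stump <- function(X, y, mtry) {
  candidates <- sample(names(X), mtry)
  splits <- lapply(candidates, function(v) best_split(X[[v]], y))
  best <- which.min(vapply(splits, function(s) s$sse, numeric(1)))
  v <- candidates[best]
  cut <- splits[[best]]$cut
  list(var = v, cut = cut,
       left = mean(y[X[[v]] <= cut]),   # prediction for the left group
       right = mean(y[X[[v]] > cut]))   # prediction for the right group
}

predict_stump <- function(stump, newdata) {
  ifelse(newdata[[stump$var]] <= stump$cut, stump$left, stump$right)
}

# Grow many stumps, each on a bootstrap sample of the rows
n_trees <- 200
forest <- lapply(seq_len(n_trees), function(b) {
  rows <- sample(n, replace = TRUE)
  fit_stump(X[rows, ], y[rows], mtry = 2)
})

# The forest prediction is the average of the individual tree predictions
tree_preds <- vapply(forest, predict_stump, numeric(n), newdata = X)
forest_pred <- rowMeans(tree_preds)

# Compare a single stump with the averaged forest (training-data RMSE)
rmse <- function(pred) sqrt(mean((y - pred)^2))
c(single_tree = rmse(tree_preds[, 1]), forest = rmse(forest_pred))
```

A real random forest grows much deeper trees and repeats the random predictor sampling at every split, but the averaging step is the same.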

19.8.4.1 Assuming Independent Observations

Below, we fit a random forest model. We evaluate it and tune two parameters with cross-validation. The first parameter is mtry, which controls the number of predictors randomly sampled as candidates at each split in a decision tree. Smaller mtry values increase tree diversity by forcing trees to consider different subsets of predictors. This typically reduces the variance of the overall model’s predictions (because the final prediction is averaged over more diverse trees) but may increase bias if strong predictors are frequently excluded. Larger mtry values allow more predictors to be considered at each split, making trees more similar to each other. This can reduce bias but often increases the variance of the model’s predictions, because the trees are more correlated and less effective at error cancellation when averaged.
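The point about correlated trees and error cancellation can be illustrated numerically. The sketch below assumes an idealized setting in which every tree's prediction has the same variance (`sigma2`) and every pair of trees has the same correlation (`rho`); under that assumption, the variance of the average of `B` predictions is `rho * sigma2 + (1 - rho) * sigma2 / B`. The function names and the particular values of `rho` are hypothetical, and the sketch addresses only the variance side of the trade-off, not bias.

```r
# Variance of the average of B predictions, each with variance sigma2
# and pairwise correlation rho (result for equally correlated variables)
var_of_average <- function(rho, sigma2, B) {
  rho * sigma2 + (1 - rho) * sigma2 / B
}

# With 500 trees, nearly all of the remaining variance comes from the correlation term
var_low_cor <- var_of_average(rho = 0.1, sigma2 = 1, B = 500)  # less similar trees
var_high_cor <- var_of_average(rho = 0.6, sigma2 = 1, B = 500) # more similar trees

# Check the formula by simulation: each "tree" shares a common component
set.seed(52242)
simulate_average <- function(rho, B, n_reps = 5000) {
  shared <- rnorm(n_reps)                                  # common to all trees
  unique_part <- matrix(rnorm(n_reps * B), nrow = n_reps)  # specific to each tree
  trees <- sqrt(rho) * shared + sqrt(1 - rho) * unique_part
  var(rowMeans(trees))
}
sim_low_cor <- simulate_average(rho = 0.1, B = 500)
sim_high_cor <- simulate_average(rho = 0.6, B = 500)

round(c(formula_low = var_low_cor, simulated_low = sim_low_cor,
        formula_high = var_high_cor, simulated_high = sim_high_cor), 3)
```

Adding more trees shrinks only the second term of the formula, which is why reducing the correlation among trees matters more than the number of trees once the forest is reasonably large.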

The second parameter is min_n, which controls the minimum number of observations that must be present in a node for a split to be attempted. Smaller min_n values allow trees to grow deeper and capture more fine-grained patterns in the training data. This typically reduces bias but increases variance of the overall model’s predictions, because deeper trees are more likely to overfit to noise in the training data. Larger min_n values restrict the depth of the trees by requiring more data to justify a split. This can reduce variance by producing simpler, more generalizable trees—but may increase bias if the trees are unable to capture important structure in the data.
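The effect of the minimum node size can be seen with a single decision tree. The sketch below uses the rpart package and the built-in `mtcars` data rather than the ranger forest and quarterback data used in this chapter; rpart's `minsplit` argument plays a role analogous to min_n. The helper names (`n_leaves`, `fit_tree`) are hypothetical.

```r
library(rpart) # recommended package included with standard R installations

# Count terminal nodes (leaves) in a fitted rpart tree
n_leaves <- function(tree) sum(tree$frame$var == "<leaf>")

# Fit a single regression tree with a given minimum node size for splitting
fit_tree <- function(min_n) {
  rpart(
    mpg ~ .,
    data = mtcars,
    control = rpart.control(
      minsplit = min_n, # minimum observations in a node to attempt a split
      minbucket = 1,    # allow leaves of any size so that only minsplit varies
      cp = 0,           # keep splits even if the improvement is small
      xval = 0))        # skip rpart's internal cross-validation
}

tree_small_min_n <- fit_tree(min_n = 2)
tree_large_min_n <- fit_tree(min_n = 20)

# A smaller min_n yields a deeper tree with more leaves
leaves <- c(small_min_n = n_leaves(tree_small_min_n),
            large_min_n = n_leaves(tree_large_min_n))

# Error on the training data (not a measure of accuracy on new data)
rmse <- function(tree) sqrt(mean((mtcars$mpg - predict(tree, mtcars))^2))
train_rmse <- c(small_min_n = rmse(tree_small_min_n),
                large_min_n = rmse(tree_large_min_n))

leaves
train_rmse
```

The deeper tree fits the training data more closely, which is exactly why it is more prone to overfitting; whether it predicts new data better has to be judged with cross-validation or a test set.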

After tuning the model, we evaluate its accuracy on the hold-out (test) data.

The random forest models were fit using the ranger package (Wright, 2024; Wright & Ziegler, 2017). We specify the grid of possible parameter values using the dials::grid_random(), dials::update.parameters(), dials::mtry(), and dials::min_n() functions of the dials package (Kuhn & Frick, 2025) and the hardhat::extract_parameter_set_dials() function of the hardhat package (Frick, Vaughan, et al., 2025).
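For intuition about what a random grid of tuning values looks like, here is a base R sketch. It is not how the dials package generates its grid internally; it only draws candidate values from the same ranges used in the code below (mtry from 1 to the number of predictors, min_n from 2 to 10). The value of `n_predictors` and the object name `random_grid` are assumptions for illustration.

```r
set.seed(52242)

n_predictors <- 82 # assumed number of predictors, for illustration only
grid_size <- 15    # number of candidate combinations to try

# Draw each parameter independently from its allowed range
random_grid <- data.frame(
  mtry = sample(seq_len(n_predictors), size = grid_size, replace = TRUE),
  min_n = sample(2:10, size = grid_size, replace = TRUE)
)

# Drop duplicated combinations, if any
random_grid <- unique(random_grid)

random_grid
```

Each row is one candidate combination that cross-validation would evaluate. A random grid covers the parameter space with far fewer model fits than a full regular grid over the same ranges.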

Code

# Set seed for reproducibility

set.seed(52242)

# Set up Cross-Validation

folds <- folds_kFold

# Define Recipe (Formula)

rec <- recipes::recipe(

fantasyPoints_lag ~ ., # use all predictors

data = data_train_qb %>% select(-gsis_id, -fantasyPointsMC_lag))

# Define Model

rf_spec <-

parsnip::rand_forest(

mtry = tune::tune(),

min_n = tune::tune(),

trees = 500) %>%

parsnip::set_mode("regression") %>%

parsnip::set_engine(

"ranger",

importance = "impurity")

# Workflow

rf_wf <- workflows::workflow() %>%

workflows::add_recipe(rec) %>%

workflows::add_model(rf_spec)

# Create Grid

n_predictors <- recipes::prep(rec) %>%

recipes::juice() %>%

dplyr::select(-fantasyPoints_lag) %>%

ncol()

# Dynamically define ranges based on data

rf_params <- hardhat::extract_parameter_set_dials(rf_spec) %>%

dials:::update.parameters(

mtry = dials::mtry(range = c(1L, n_predictors)),

min_n = dials::min_n(range = c(2L, 10L))

)

rf_grid <- dials::grid_random(rf_params, size = 15) #dials::grid_regular(rf_params, levels = 5)

# Tune the Grid

cv_results <- tune::tune_grid(

rf_wf,

resamples = folds,

grid = rf_grid,

metrics = yardstick::metric_set(rmse, mae, rsq),

control = tune::control_grid(save_pred = TRUE)

)

# View Cross-Validation metrics

tune::collect_metrics(cv_results)

Code

best_penalty <- tune::select_best(cv_results, metric = "mae")

# Finalize Workflow with Best Tuning Parameters (mtry and min_n)

final_wf <- tune::finalize_workflow(

rf_wf,

best_penalty)

# Fit Final Model on Training Data

final_model <- workflows::fit(

final_wf,

data = data_train_qb)

# View Feature Importance

rf_fit <- final_model %>%

workflows::extract_fit_parsnip()

rf_fit

parsnip model object

Ranger result

Call:

ranger::ranger(x = maybe_data_frame(x), y = y, mtry = min_cols(~25L, x), num.trees = ~500, min.node.size = min_rows(~7L, x), importance = ~"impurity", num.threads = 1, verbose = FALSE, seed = sample.int(10^5, 1))

Type: Regression

Number of trees: 500

Sample size: 1574

Number of independent variables: 82

Mtry: 25

Target node size: 7

Variable importance mode: impurity

Splitrule: variance

OOB prediction error (MSE): 6400.27

R squared (OOB): 0.516673

| Predictor | Importance (impurity) |
|---|---|
| season | 126058.37 |
| games | 145371.26 |
| gs | 115643.53 |
| years_of_experience | 124165.84 |
| age | 261273.00 |
| ageCentered20 | 249756.51 |
| ageCentered20Quadratic | 264252.29 |
| height | 98275.91 |
| weight | 168219.58 |
| rookie_season | 152332.14 |
| draft_pick | 375471.24 |
| fantasy_points | 2252446.36 |
| fantasy_points_ppr | 2781075.74 |
| fantasyPoints | 2350652.02 |
| completions | 356112.51 |
| attempts | 270139.82 |
| passing_yards | 898614.04 |
| passing_tds | 1045526.93 |
| passing_interceptions | 116222.75 |
| sacks_suffered | 96816.11 |
| sack_yards_lost | 150004.07 |
| sack_fumbles | 71114.87 |
| sack_fumbles_lost | 47403.37 |
| passing_air_yards | 146557.88 |
| passing_yards_after_catch | 231279.15 |
| passing_first_downs | 833827.76 |
| passing_epa | 389165.51 |
| passing_cpoe | 199682.63 |
| passing_2pt_conversions | 34807.66 |
| pacr | 152377.33 |
| carries | 131095.26 |
| rushing_yards | 134541.18 |
| rushing_tds | 46833.60 |
| rushing_fumbles | 44241.44 |
| rushing_fumbles_lost | 28658.66 |
| rushing_first_downs | 84886.81 |
| rushing_epa | 187593.41 |
| rushing_2pt_conversions | 17970.66 |
| special_teams_tds | 0.00 |
| pocket_time.pass | 108996.50 |
| pass_attempts.pass | 370405.04 |
| throwaways.pass | 116999.57 |
| spikes.pass | 66463.00 |
| drops.pass | 114051.36 |
| bad_throws.pass | 111942.38 |
| times_blitzed.pass | 246287.74 |
| times_hurried.pass | 104082.02 |
| times_hit.pass | 100322.48 |
| times_pressured.pass | 140375.87 |
| batted_balls.pass | 71550.05 |
| on_tgt_throws.pass | 189969.34 |
| rpo_plays.pass | 111892.33 |
| rpo_yards.pass | 126295.30 |
| rpo_pass_att.pass | 107233.50 |
| rpo_pass_yards.pass | 159839.11 |
| rpo_rush_att.pass | 55075.26 |
| rpo_rush_yards.pass | 121620.30 |
| pa_pass_att.pass | 106613.48 |
| pa_pass_yards.pass | 90108.12 |
| intended_air_yards.pass | 33466.06 |
| intended_air_yards_per_pass_attempt.pass | 14938.19 |
| completed_air_yards.pass | 25280.22 |
| completed_air_yards_per_completion.pass | 30909.39 |
| completed_air_yards_per_pass_attempt.pass | 50542.56 |
| pass_yards_after_catch.pass | 42382.33 |
| pass_yards_after_catch_per_completion.pass | 28017.44 |
| scrambles.pass | 41709.70 |
| scramble_yards_per_attempt.pass | 33929.75 |
| drop_pct.pass | 174200.49 |
| bad_throw_pct.pass | 218832.91 |
| on_tgt_pct.pass | 199077.00 |
| pressure_pct.pass | 174895.43 |
| ybc_att.rush | 214004.24 |
| yac_att.rush | 134678.43 |
| att.rush | 140700.93 |
| yds.rush | 129462.71 |
| td.rush | 44929.33 |
| x1d.rush | 68177.23 |
| ybc.rush | 154748.87 |
| yac.rush | 124351.88 |
| brk_tkl.rush | 50896.64 |
| att_br.rush | 49786.86 |

Code

There was modest shrinkage from the training model to the test model: the \(R^2\) for the model on the training data was 0.47; the \(R^2\) for the same model applied to the test data was 0.42.

Figure 19.6 depicts the predicted versus actual fantasy points for the model on the test data.

Code

# Calculate combined range for axes

axis_limits <- range(c(df$pred, df$fantasyPoints_lag), na.rm = TRUE)

ggplot(

df,

aes(

x = pred,

y = fantasyPoints_lag)) +

geom_point(

size = 2,

alpha = 0.6) +

geom_abline(

slope = 1,

intercept = 0,

color = "blue",

linetype = "dashed") +

coord_equal(

xlim = axis_limits,

ylim = axis_limits) +

labs(

title = "Predicted vs Actual Fantasy Points (Test Data)",

x = "Predicted Fantasy Points",

y = "Actual Fantasy Points"

) +

theme_classic() +

theme(axis.title.y = element_text(angle = 0, vjust = 0.5)) # horizontal y-axis title

Below are the model predictions for next year’s fantasy points:

Code

Now we can stop the parallel backend:

19.8.4.2 Accounting for Longitudinal Data

The above approaches to machine learning assume that the observations are independent across rows. However, in our case, this assumption does not hold because the data are longitudinal—each player has multiple seasons, and each row corresponds to a unique player–season combination. In the approaches below, using a random forest model (this section) and a gradient tree boosting model (in Section 19.8.5), we address this by explicitly accounting for the nesting of longitudinal observations within players.
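To see why rows from the same player are not independent, consider the following minimal sketch with simulated player-season data (not the data used in this chapter). It assumes each player has a stable underlying level of performance plus season-to-season noise, and estimates the intraclass correlation (ICC): the share of variance in the outcome that is due to stable differences between players. All names and numeric values are hypothetical.

```r
set.seed(52242)

# Simulated player-season data (hypothetical): 200 players with 5 seasons each
n_players <- 200
n_seasons <- 5
player_effect <- rnorm(n_players, sd = 40) # stable differences between players

dat <- data.frame(
  player_id = rep(seq_len(n_players), each = n_seasons),
  season = rep(seq_len(n_seasons), times = n_players)
)
dat$points <- 150 + player_effect[dat$player_id] + rnorm(nrow(dat), sd = 30)

# One-way ANOVA estimate of the intraclass correlation
player_mean <- ave(dat$points, dat$player_id) # each row's own player mean
grand_mean <- mean(dat$points)

ms_between <- sum((player_mean - grand_mean)^2) / (n_players - 1)
ms_within <- sum((dat$points - player_mean)^2) / (n_players * (n_seasons - 1))

icc <- (ms_between - ms_within) / (ms_between + (n_seasons - 1) * ms_within)
round(icc, 2)
```

An ICC well above zero means that knowing one of a player's seasons tells you something about that player's other seasons, which is the dependence that models assuming independent rows ignore.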

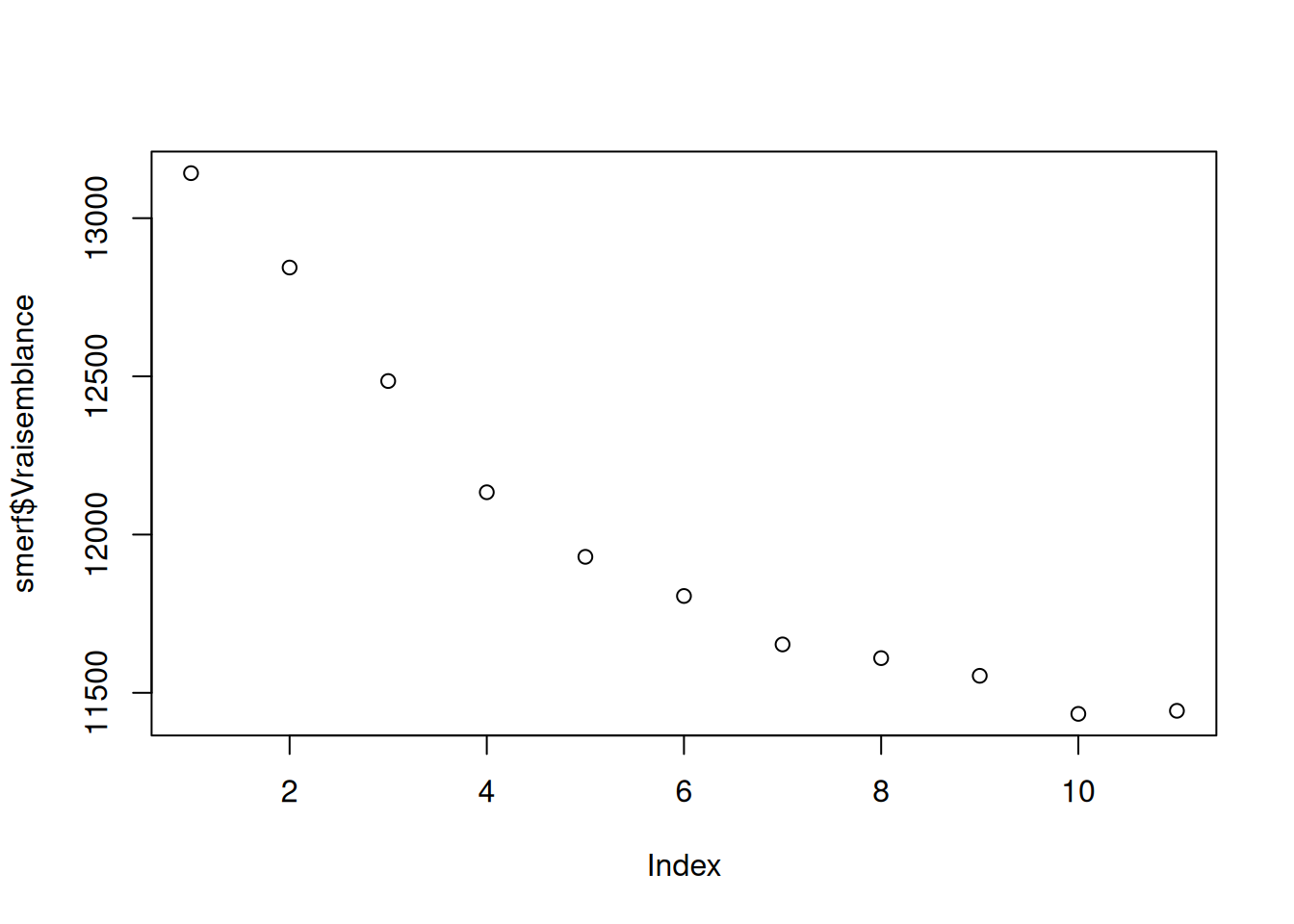

Approaches to estimating random forest models with longitudinal data are described by Hu & Szymczak (2023). Below, we fit longitudinal random forest models using the LongituRF::MERF() function of the LongituRF package (Capitaine, 2020).
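As a rough guide to the arguments in the code below, a mixed-effects random forest with a stochastic process can be written as the following model. This is a sketch of the general model form; the symbols are introduced here for illustration and are not notation used elsewhere in this chapter.

\[
Y_{ij} = f(X_{ij}) + Z_{ij} b_i + \omega_i(t_{ij}) + \varepsilon_{ij}
\]

Here, \(i\) indexes players (the id argument) and \(j\) indexes a player's seasons. \(f(X_{ij})\) is the unknown function of the fixed-effects predictors (X) that the random forest estimates, \(Z_{ij} b_i\) is the contribution of the player-specific random effects (Z), \(\omega_i(t_{ij})\) is a player-specific stochastic process evaluated at the time variable (time), and \(\varepsilon_{ij}\) is residual error. Setting sto = "BM" requests a Brownian motion for \(\omega_i\). The model is estimated iteratively, alternating between fitting the forest to the outcome with the current random-effects estimates removed and updating those estimates, until a convergence criterion is met.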

Code

smerf <- LongituRF::MERF(

X = data_train_qb %>% dplyr::select(season:att_br.rush) %>% as.matrix(), # predictors of the fixed effects

Y = data_train_qb[,c("fantasyPoints_lag")] %>% as.matrix(), # outcome variable

Z = data_train_qb %>% dplyr::mutate(constant = 1) %>% dplyr::select(constant, passing_yards, passing_tds, passing_interceptions, passing_epa, pacr) %>% as.matrix(), # predictors of the random effects

id = data_train_qb[,c("gsis_id")] %>% as.matrix(), # player ID (for nesting)

time = data_train_qb[,c("ageCentered20")] %>% as.matrix(), # time variable

ntree = 500,

sto = "BM")

[1] "stopped after 18 iterations."

Call:

randomForest(x = X, y = ystar, ntree = ntree, mtry = mtry, importance = TRUE)

Type of random forest: regression

Number of trees: 500

No. of variables tried at each split: 28