I want your feedback to make the book better for you and other readers. If you find typos, errors, or places where the text may be improved, please let me know. The best ways to provide feedback are by GitHub or hypothes.is annotations.

You can leave a comment at the bottom of the page/chapter, or open an issue or submit a pull request on GitHub: https://github.com/isaactpetersen/Fantasy-Football-Analytics-Textbook

Alternatively, you can leave an annotation using hypothes.is.


23 Data Reduction: Principal Component Analysis

This chapter provides an overview of principal component analysis as a useful technique for data reduction.

23.1 Getting Started

23.1.1 Load Packages

23.1.2 Load Data

We created the player_stats_weekly.RData object in Section 4.4.3.

23.1.3 Prepare Data

23.1.3.1 Merge Data

23.1.3.2 Specify Variables

Code

# Variables to include in the PCA
pcaVars <- c(
  "completions","attempts","passing_yards","passing_tds","passing_interceptions",
  "sacks_suffered","sack_yards_lost","sack_fumbles","sack_fumbles_lost",
  "passing_air_yards","passing_yards_after_catch","passing_first_downs",
  "passing_epa","passing_cpoe","passing_2pt_conversions","pacr","pass_40_yds",
  "pass_inc","pass_comp_pct","fumbles","two_pts",
  "avg_time_to_throw","avg_completed_air_yards","avg_intended_air_yards",
  "avg_air_yards_differential","aggressiveness","max_completed_air_distance",
  "avg_air_yards_to_sticks","passer_rating", #,"completion_percentage"
  "expected_completion_percentage","completion_percentage_above_expectation",
  "avg_air_distance","max_air_distance")

23.1.3.3 Standardize Variables

23.2 Overview of Principal Component Analysis

Principal component analysis (PCA) is used if you want to reduce your data matrix. PCA composites represent the variances of an observed measure in as economical a fashion as possible, with no latent underlying variables. The goal of PCA is to identify a smaller number of components that explain as much variance in a set of variables as possible. It is an atheoretical way to decompose a matrix. PCA involves decomposition of a data matrix into a set of eigenvectors, which are transformations of the old variables.

The eigenvectors attempt to simplify the data in the matrix. PCA takes the data matrix and identifies the weighted sum of all variables that does the best job at explaining variance: these are the principal components, also called eigenvectors. Principal components reflect optimally weighted sums.
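As a minimal sketch of the idea that principal components are optimally weighted sums, the following base R code decomposes the correlation matrix of six simulated variables. The simulated data and all object names (`X`, `Z`, `eig`, `pc1_scores`) are assumptions for illustration only; they are not the chapter's data, and the chapter's own analyses use the psych package rather than `eigen()` or `prcomp()`.

```r
# Hypothetical data: six variables that share one common source of variance
set.seed(1)
n <- 500
latent <- rnorm(n)
X <- sapply(1:6, function(j) 0.7 * latent + rnorm(n, sd = 0.7))
colnames(X) <- paste0("x", 1:6)

# Standardize the variables and decompose their correlation matrix
Z <- scale(X)
eig <- eigen(cor(X))

# The first eigenvector holds the weights for the first principal component
weights_pc1 <- eig$vectors[, 1]

# The first component is a weighted sum of the standardized variables
pc1_scores <- as.vector(Z %*% weights_pc1)

# The variance of the component scores equals the largest eigenvalue
var(pc1_scores)
eig$values[1]

# stats::prcomp() yields the same component (possibly with the sign flipped)
pca <- prcomp(X, center = TRUE, scale. = TRUE)
abs(cor(pc1_scores, pca$x[, 1]))
```

The sign of an eigenvector is arbitrary, which is why the comparison with `prcomp()` uses the absolute value of the correlation.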

PCA can decompose the data matrix into any number of components, up to as many as there are variables; extracting as many components as there are variables always accounts for all of the variance. After the PCA is performed, you can look at the results and discard the components that likely reflect error variance. Judgments about which components to retain are based on empirical criteria in conjunction with theory, with the aim of selecting a parsimonious number of components that account for the majority of variance.

The eigenvalue reflects the amount of variance explained by the component (eigenvector). When using a varimax (orthogonal) rotation, an eigenvalue for a component is calculated as the sum of squared standardized component loadings on that component. When using oblique rotation, however, the items explain more variance than is attributable to their component loadings because the components are correlated.
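The relation between eigenvalues and squared loadings can be checked numerically. The sketch below uses simulated data (an assumption, not the chapter's data) and unrotated loadings, computed as each eigenvector scaled by the square root of its eigenvalue; the object names are hypothetical.

```r
# Hypothetical data: six variables with a shared source of variance
set.seed(2)
n <- 500
latent <- rnorm(n)
X <- sapply(1:6, function(j) 0.6 * latent + rnorm(n, sd = 0.8))

eig <- eigen(cor(X))

# Unrotated loadings: each eigenvector scaled by the square root of its eigenvalue
loadings <- eig$vectors %*% diag(sqrt(eig$values))

# Summing the squared loadings within each component recovers the eigenvalues
ss_loadings <- colSums(loadings^2)
round(cbind(eigenvalue = eig$values, ss_loadings = ss_loadings), 3)
```

This corresponds to the "SS loadings" row that `psych::principal()` prints, although the rotated values reported there need not match the unrotated eigenvalues component by component.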

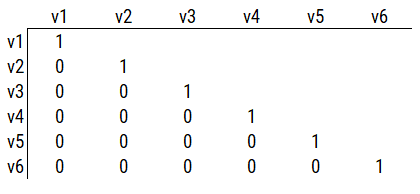

PCA pulls out the first principal component (i.e., the eigenvector that explains the most variance) and creates a new data matrix (i.e., a new correlation matrix) from what is left over. PCA then pulls out the component that explains the next most variance (i.e., the eigenvector with the next largest eigenvalue), and it continues until it has extracted as many components as there are variables. For instance, if there are six variables, it will iteratively extract components up to a maximum of six. If you extract all six components, the data matrix left over will be the same as the correlation matrix in Figure 23.1. That is, the remaining variables (as part of the leftover data matrix) will be entirely uncorrelated with each other, because six components explain 100% of the variance from six variables. In other words, you can explain six variables with six new things!

However, it does no good if you have to use all six components because there is no data reduction from the original number of variables. When the goal is data reduction (as in PCA), the hope is that the first few components will explain most of the variance, so we can explain the variability in the data with fewer components than there are variables.
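The following sketch illustrates the extraction process on simulated data (an assumption for illustration). It computes what is left of the correlation matrix after removing the first k components, for k from one to six, along with the cumulative proportion of variance explained. The helper `residual_after()` is a hypothetical name, not a function from any package.

```r
# Hypothetical data: six variables with a shared source of variance
set.seed(3)
n <- 500
latent <- rnorm(n)
X <- sapply(1:6, function(j) 0.7 * latent + rnorm(n, sd = 0.7))

R <- cor(X)
eig <- eigen(R)
loadings <- eig$vectors %*% diag(sqrt(eig$values))

# What remains of the correlation matrix after removing the first k components
residual_after <- function(k) {
  L <- loadings[, seq_len(k), drop = FALSE]
  R - L %*% t(L)
}

# Largest absolute leftover value after extracting 1, 2, ..., 6 components
max_resid <- sapply(1:6, function(k) max(abs(residual_after(k))))
round(max_resid, 3)

# Cumulative proportion of variance explained by the first k components
cum_var <- cumsum(eig$values) / sum(eig$values)
round(cum_var, 3)
```

With all six components extracted, nothing is left over and the cumulative proportion reaches 1, which is why data reduction only occurs when fewer components are retained.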

When the PCA is based on a correlation matrix (i.e., standardized variables), the sum of all eigenvalues is equal to the number of variables in the analysis. PCA does not have the same assumptions as factor analysis, which assumes that measures reflect a combination of common variance and error. But if you estimate six eigenvectors and keep only two, the model is a two-component model, and whatever is left becomes error. Thus, although PCA does not share the assumptions of factor analysis, it often ends up in the same place.
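To make the two-component example concrete, the sketch below keeps two of six components from simulated data (an assumption for illustration) and computes, for each variable, the variance reproduced by the retained components (`h2`) and the variance left over (`u2`). These names mirror the `h2` and `u2` columns in the `psych::principal()` output, but the calculation here uses unrotated loadings from base R.

```r
# Hypothetical data: two sets of three variables, each set sharing its own source
set.seed(4)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
X <- cbind(
  sapply(1:3, function(j) 0.8 * f1 + rnorm(n, sd = 0.6)),
  sapply(1:3, function(j) 0.8 * f2 + rnorm(n, sd = 0.6))
)

eig <- eigen(cor(X))

# For a correlation matrix, the eigenvalues sum to the number of variables
sum(eig$values)

# Keep only the first two components
loadings <- eig$vectors %*% diag(sqrt(eig$values))
kept <- loadings[, 1:2]

# Variance in each variable reproduced by the retained components
h2 <- rowSums(kept^2)

# Variance in each variable that the two-component model leaves over
u2 <- 1 - h2
round(cbind(h2, u2), 3)
```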

23.3 Decisions in Principal Component Analysis

There are four primary decisions to make in PCA:

- what variables to include in the model and how to scale them

- whether and how to rotate components

- how many components to retain

- how to interpret and use the components

As in factor analysis, the answer you get can differ considerably depending on the decisions you make. We provide guidance on each of these decisions below and in Section 22.5.

23.3.1 1. Variables to Include and their Scaling

As in factor analysis, the first decision when conducting a PCA is which variables to include and how to scale those variables. Guidance on which variables to include is in Section 22.5.1.

In addition, before performing a PCA, it is important to ensure that the variables included in the PCA are on the same scale. PCA seeks to identify components that explain variance in the data, so if the variables are not on the same scale, some variables may contribute considerably more variance than others. A common way of ensuring that variables are on the same scale is to standardize them using, for example, z scores.
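The effect of scaling can be seen in a small simulated example. The variables below (`yards`, `tds`, `ints`) and their values are hypothetical and chosen only so that one variable has a much larger variance than the others; they are not taken from the chapter's data.

```r
# Hypothetical variables on very different scales
set.seed(5)
n <- 300
yards <- rnorm(n, mean = 250, sd = 80)
tds <- 0.01 * yards + rnorm(n, sd = 0.8)
ints <- rnorm(n, mean = 1, sd = 0.9)
dat <- data.frame(yards, tds, ints)

# z scores: each variable has a mean of 0 and a standard deviation of 1
z <- scale(dat)

# PCA on the raw variables versus the standardized variables
pca_raw <- prcomp(dat, center = TRUE, scale. = FALSE)
pca_std <- prcomp(dat, center = TRUE, scale. = TRUE)

# Proportion of variance explained by each component
prop_raw <- pca_raw$sdev^2 / sum(pca_raw$sdev^2)
prop_std <- pca_std$sdev^2 / sum(pca_std$sdev^2)
round(rbind(raw = prop_raw, standardized = prop_std), 3)

# Without standardization, the first component is essentially just yards
round(abs(pca_raw$rotation[, 1]), 3)
```

In the unstandardized analysis, the first component is dominated by the variable with the largest variance, which reflects the units of measurement rather than shared structure.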

23.3.2 2. Component Rotation

Similar considerations as in factor analysis can be used to determine whether and how to rotate components in PCA. The considerations for determining whether and how to rotate factors in factor analysis are described in Section 22.5.3.
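As a rough illustration of orthogonal versus oblique rotation, the sketch below rotates two unrotated components from simulated data (an assumption for illustration). It uses `stats::varimax()` for an orthogonal rotation and `stats::promax()` for an oblique rotation. Note that promax is swapped in here because it ships with base R; the examples in this chapter use oblimin via the psych package, which may give different loadings.

```r
# Hypothetical data: two sets of three variables, each set sharing its own source
set.seed(6)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
X <- cbind(
  sapply(1:3, function(j) 0.8 * f1 + rnorm(n, sd = 0.6)),
  sapply(1:3, function(j) 0.8 * f2 + rnorm(n, sd = 0.6))
)
colnames(X) <- paste0("x", 1:6)

# Unrotated loadings for the first two components
eig <- eigen(cor(X))
unrotated <- eig$vectors[, 1:2] %*% diag(sqrt(eig$values[1:2]))
rownames(unrotated) <- colnames(X)

# Orthogonal rotation (components stay uncorrelated)
rot_orth <- varimax(unrotated)
L_varimax <- unclass(rot_orth$loadings)

# Oblique rotation (components are allowed to correlate)
rot_obl <- promax(unrotated)
L_promax <- unclass(rot_obl$loadings)

# An orthogonal rotation redistributes variance across components
# but leaves each variable's communality unchanged
h2_unrotated <- rowSums(unrotated^2)
h2_varimax <- rowSums(L_varimax^2)

# Component correlations implied by the oblique rotation
tmat <- solve(rot_obl$rotmat)
phi <- tmat %*% t(tmat)

round(L_varimax, 2)
round(L_promax, 2)
round(phi, 2)
```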

23.3.3 3. Determining the Number of Components to Retain

Similar criteria as in factor analysis can be used to determine the number of components to retain in PCA. The criteria for determining the number of factors to retain in factor analysis are described in Section 22.5.4.

23.3.4 4. Interpreting and Using PCA Components

The next step is interpreting the PCA components. Use theory to interpret and label the components.
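One way to use retained components is to compute component scores and to inspect how strongly each variable correlates with each component. The sketch below does this with `stats::prcomp()` on simulated data; the data and the variable names (`a1` to `a4`, `b1`, `b2`) are hypothetical, and no rotation is applied.

```r
# Hypothetical data: four variables sharing one source, two sharing another
set.seed(7)
n <- 400
f1 <- rnorm(n)
f2 <- rnorm(n)
X <- cbind(
  sapply(1:4, function(j) 0.8 * f1 + rnorm(n, sd = 0.6)),
  sapply(1:2, function(j) 0.8 * f2 + rnorm(n, sd = 0.6))
)
colnames(X) <- c("a1", "a2", "a3", "a4", "b1", "b2")

pca <- prcomp(X, center = TRUE, scale. = TRUE)

# Component scores for the first two components (one row per observation)
scores <- pca$x[, 1:2]

# The same scores computed by hand as weighted sums of the z scores
manual_scores <- scale(X) %*% pca$rotation[, 1:2]

# Correlations between variables and components help with labeling:
# variables that correlate strongly with a component suggest what it reflects
loadings <- cor(X, scores)
round(loadings, 2)

# Unrotated component scores are uncorrelated with each other
score_cor <- cor(scores)[1, 2]
```

The component scores can then be used in place of the original variables in later analyses.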

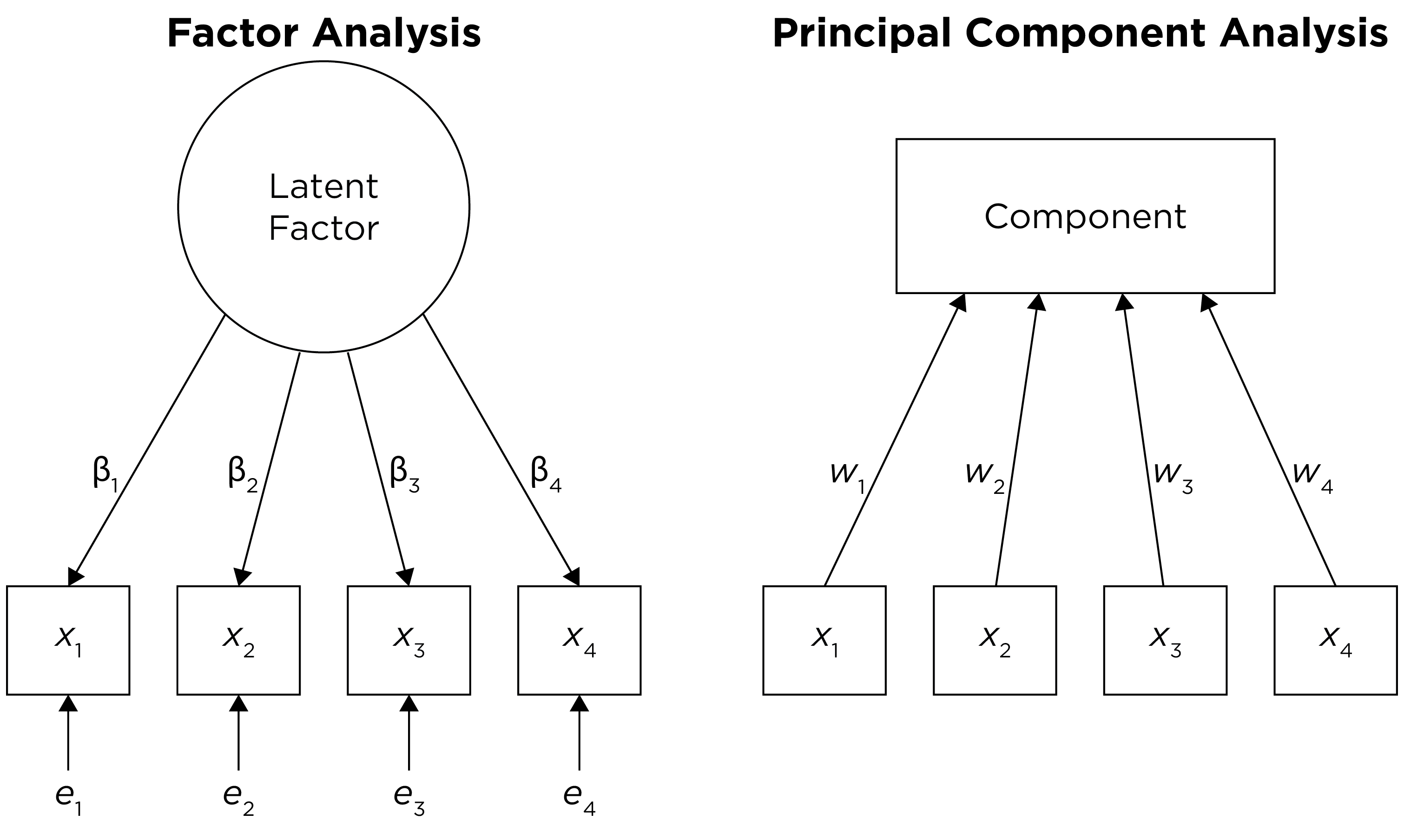

23.4 PCA Versus Factor Analysis

Both factor analysis and PCA can be used for data reduction. The key distinction between factor analysis and PCA is depicted in Figure 23.2.

There are several differences between factor analysis and PCA. Factor analysis is more sophisticated than PCA, but greater sophistication often comes with stronger assumptions. Factor analysis does not always work; the data may not fit a factor analysis model. By contrast, PCA can decompose any data matrix; it always works. PCA uses the total variance of the variables (not just the common variance) and assumes that the measures have no measurement error, so it does not account for measurement error. PCA is fine if you are not interested in the factor structure and just want to form a linear composite and perform data reduction. However, if you are interested in the factor structure, use factor analysis, which estimates a latent variable that accounts for the common variance and discards error variance. Factor analysis handles error better than PCA: it assumes that each variable reflects a combination of common construct variance and error. Factor analysis is useful for identifying latent constructs (i.e., underlying dimensions or factors that explain, or cause, observed scores).
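The difference in how the two approaches treat error can be illustrated with simulated data in which each variable is a mix of one common source and unique error. This is a sketch under those assumptions, using `stats::factanal()` for a one-factor maximum likelihood factor analysis; the data and object names are hypothetical.

```r
# Hypothetical data: each variable = common variance (loading 0.7) + unique error
set.seed(8)
n <- 1000
latent <- rnorm(n)
X <- sapply(1:6, function(j) 0.7 * latent + rnorm(n, sd = sqrt(1 - 0.49)))
colnames(X) <- paste0("x", 1:6)

# PCA: loadings on the first component, which draws on total variance
eig <- eigen(cor(X))
pca_loadings <- abs(eig$vectors[, 1] * sqrt(eig$values[1]))

# Factor analysis: loadings on one factor, which draws on common variance only
fa <- factanal(X, factors = 1)
fa_loadings <- abs(unclass(fa$loadings)[, 1])

# Factor analysis also estimates the unique (error) variance of each variable
round(fa$uniquenesses, 2)

round(cbind(pca = pca_loadings, fa = fa_loadings), 2)
```

In data like these, the PCA loadings tend to be larger than the factor loadings because the component also absorbs some of each variable's unique variance.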

23.5 Example of Principal Component Analysis

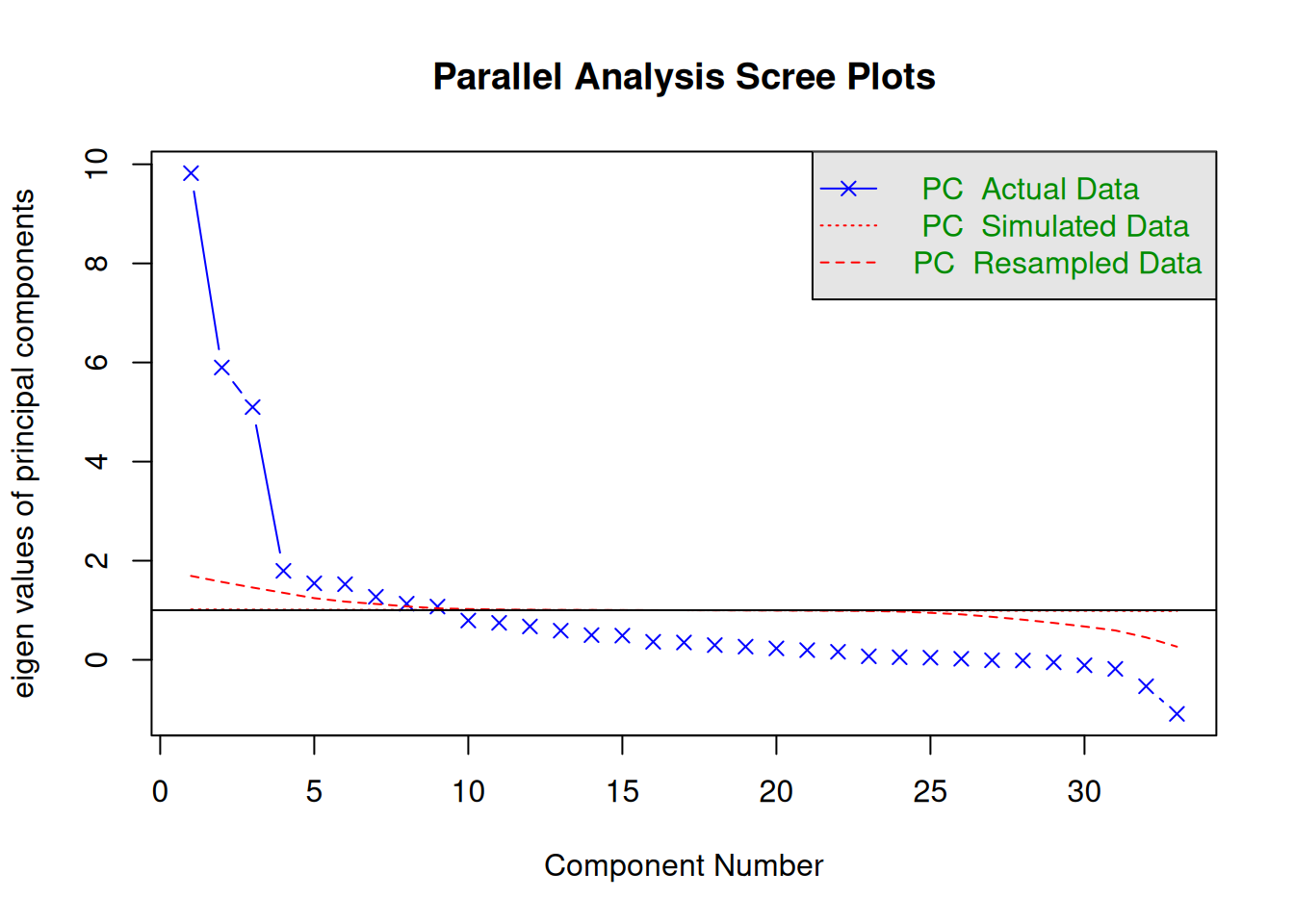

We generated the scree plot in Figure 23.3 using the psych::fa.parallel() function of the psych package (Revelle, 2025).

The number of components to keep would depend on which criteria one uses. Based on the rule to keep components whose eigenvalues are greater than one and based on the parallel test, we would keep nine components. However, based on the Cattell scree test (the “elbow” of the scree plot minus one) (Cattell, 1966), we would keep three components. If using the optimal coordinates, we would keep four components; if using the acceleration factor, we would keep one component. Therefore, interpretability of the components would be important for deciding how many components to keep.
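To show the logic behind two of these criteria, the sketch below applies the eigenvalue-greater-than-one rule and a simplified parallel analysis to simulated data. It is an assumption-laden illustration in base R, not the procedure implemented by `psych::fa.parallel()` or the nFactors package; in particular, the choice of 200 random data sets and the 95th percentile threshold are assumptions made here.

```r
# Hypothetical data: two sets of three variables, each set sharing its own source
set.seed(9)
n <- 500
f1 <- rnorm(n)
f2 <- rnorm(n)
X <- cbind(
  sapply(1:3, function(j) 0.8 * f1 + rnorm(n, sd = 0.6)),
  sapply(1:3, function(j) 0.8 * f2 + rnorm(n, sd = 0.6))
)
p <- ncol(X)

observed <- eigen(cor(X))$values

# Rule 1: keep components whose eigenvalues are greater than one
n_kaiser <- sum(observed > 1)

# Rule 2 (simplified parallel analysis): keep leading components whose
# eigenvalues exceed those obtained from random, uncorrelated data
n_sims <- 200
random_eigs <- replicate(
  n_sims,
  eigen(cor(matrix(rnorm(n * p), nrow = n, ncol = p)))$values
)
threshold <- apply(random_eigs, 1, quantile, probs = 0.95)

exceeds <- observed > threshold
n_parallel <- if (all(exceeds)) p else which(!exceeds)[1] - 1

round(rbind(observed, threshold), 2)
c(kaiser = n_kaiser, parallel = n_parallel)
```

With real data, as in the example above, different criteria can disagree, so the counts from such rules are a starting point rather than a final answer.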

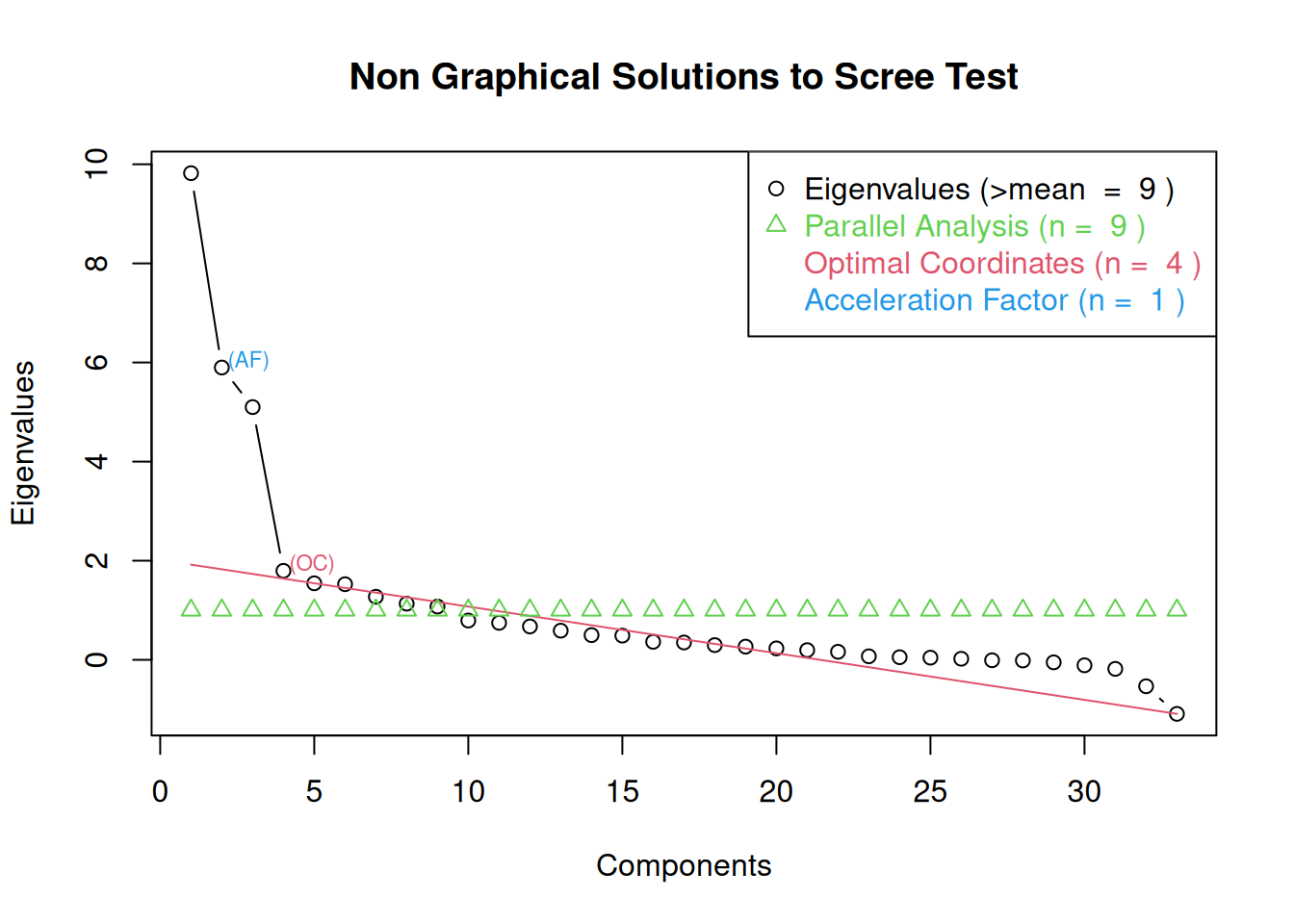

Parallel analysis suggests that the number of factors = NA and the number of components = 9

We generated the scree plot in Figure 23.4 using the nFactors::nScree() and nFactors::plotnScree() functions of the nFactors package (Raiche & Magis, 2025).


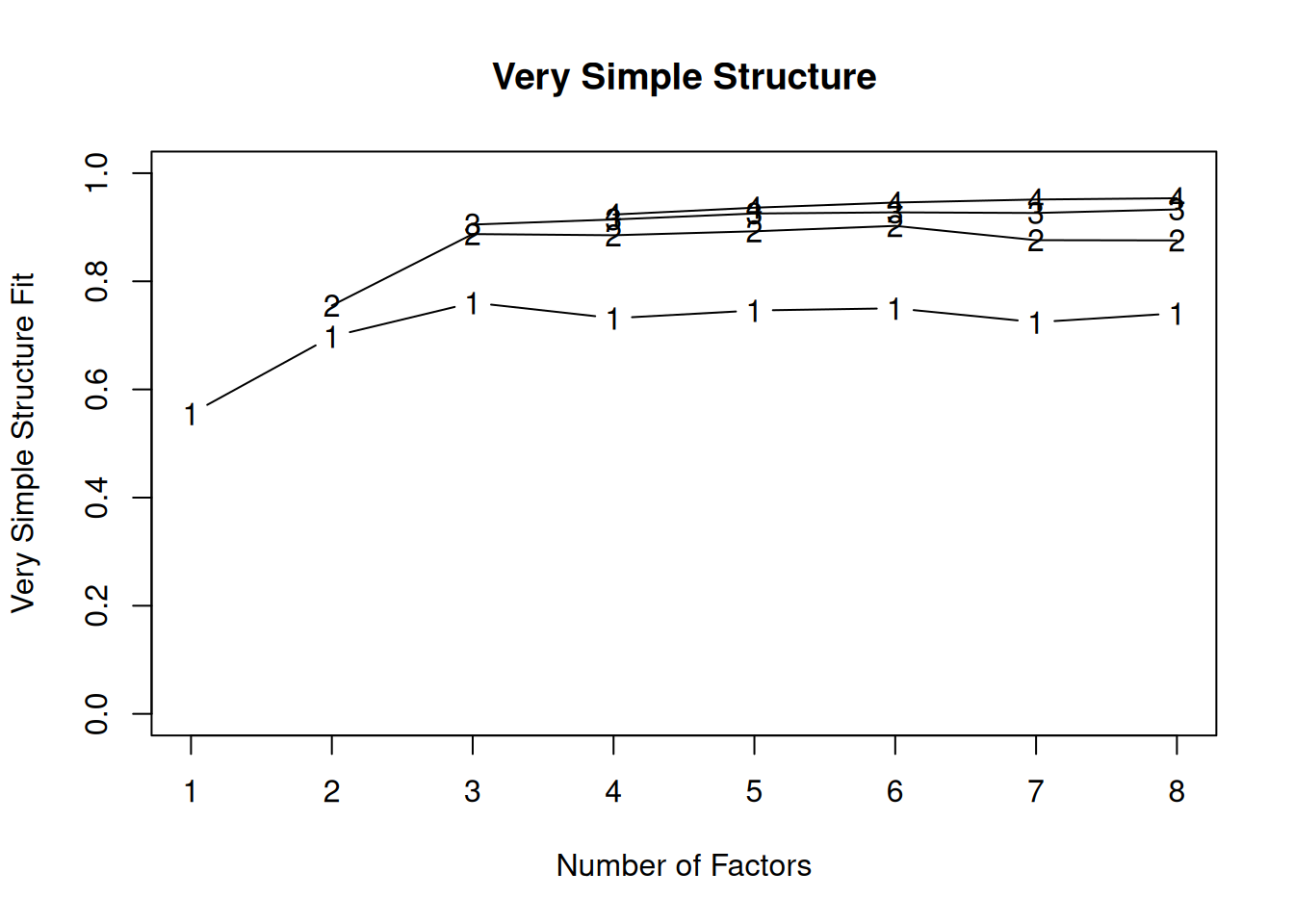

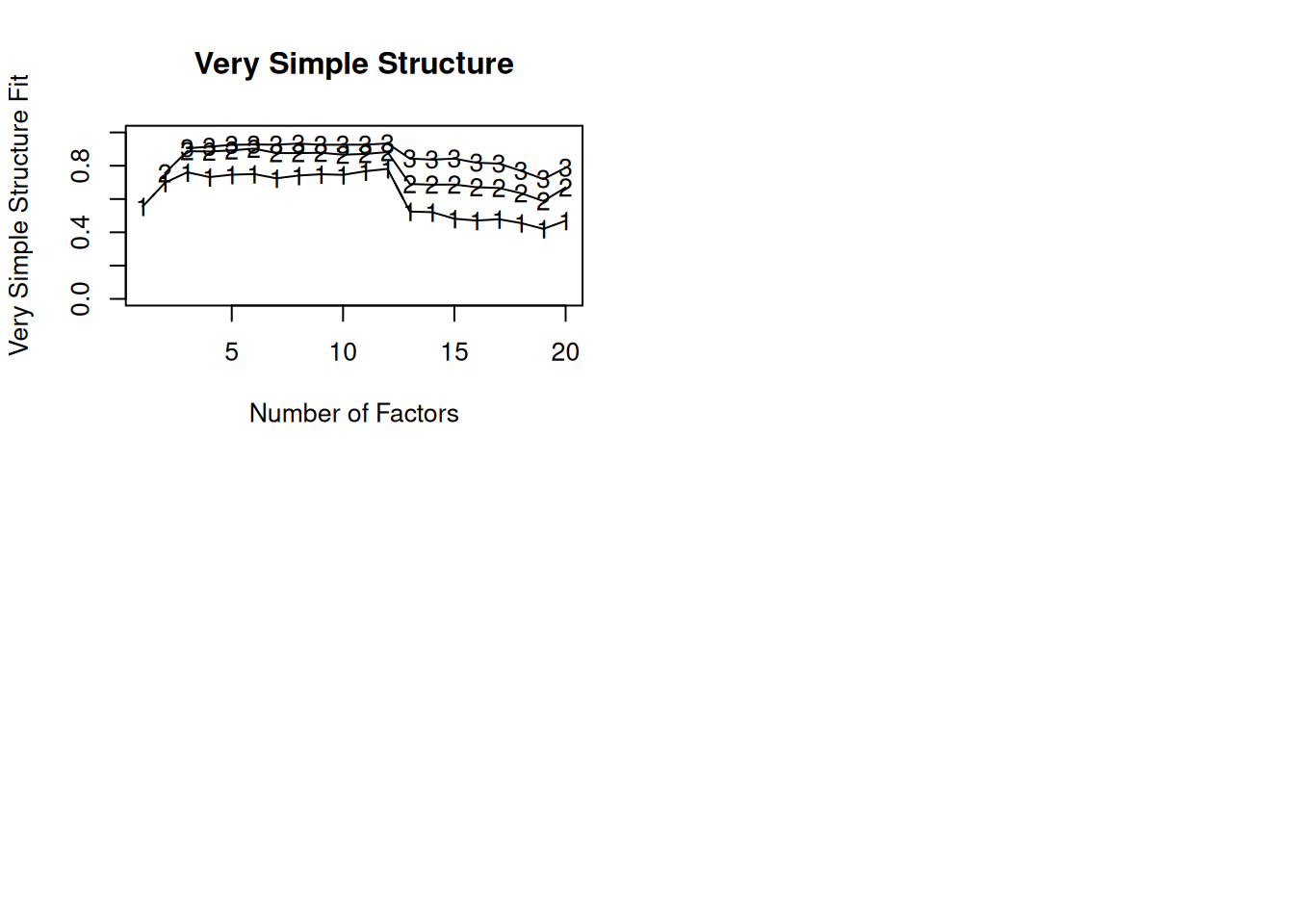

We generated the very simple structure (VSS) plots in Figures 23.5 and 23.6 using the psych::vss() and psych::nfactors() functions of the psych package (Revelle, 2025). The optimal number of components based on the VSS criterion is three components.

Very Simple Structure

Call: psych::vss(x = dataForPCA[pcaVars], rotate = "oblimin", fm = "pc")

VSS complexity 1 achieves a maximimum of 0.76 with 3 factors

VSS complexity 2 achieves a maximimum of 0.9 with 3 factors

The Velicer MAP achieves a minimum of Inf with factors

BIC achieves a minimum of Inf with factors

Sample Size adjusted BIC achieves a minimum of Inf with factors

Statistics by number of factors

vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex eChisq SRMR

1 0.56 0.00 NaN 0 NA NA 77.3 0.56 NA NA NA NA NA NA

2 0.70 0.76 NaN 0 NA NA 42.5 0.76 NA NA NA NA NA NA

3 0.76 0.89 NaN 0 NA NA 16.5 0.91 NA NA NA NA NA NA

4 0.73 0.89 NaN 0 NA NA 13.3 0.92 NA NA NA NA NA NA

5 0.75 0.89 NaN 0 NA NA 10.9 0.94 NA NA NA NA NA NA

6 0.75 0.90 NaN 0 NA NA 8.6 0.95 NA NA NA NA NA NA

7 0.72 0.88 NaN 0 NA NA 7.0 0.96 NA NA NA NA NA NA

8 0.74 0.87 NaN 0 NA NA 5.7 0.97 NA NA NA NA NA NA

eCRMS eBIC

1 NA NA

2 NA NA

3 NA NA

4 NA NA

5 NA NA

6 NA NA

7 NA NA

8 NA NA

Error in `plot.window()`:

! need finite 'ylim' values

Code

# Fit PCA models with one to nine components, each with an oblique (oblimin) rotation
pca1ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 1,
  rotate = "oblimin")

pca2ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 2,
  rotate = "oblimin")

pca3ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 3,
  rotate = "oblimin")

pca4ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 4,
  rotate = "oblimin")

pca5ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 5,
  rotate = "oblimin")

pca6ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 6,
  rotate = "oblimin")

pca7ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 7,
  rotate = "oblimin")

pca8ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 8,
  rotate = "oblimin")

pca9ComponentOblique <- psych::principal(
  dataForPCA[pcaVars],
  nfactors = 9,
  rotate = "oblimin")

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 1, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

PC1 h2 u2 com

completions 0.98 0.95698 0.0430 1

attempts 0.96 0.92853 0.0715 1

passing_yards 1.00 1.00569 -0.0057 1

passing_tds 0.83 0.69387 0.3061 1

passing_interceptions 0.65 0.42141 0.5786 1

sacks_suffered 0.81 0.64813 0.3519 1

sack_yards_lost -0.78 0.60950 0.3905 1

sack_fumbles 0.57 0.32568 0.6743 1

sack_fumbles_lost 0.46 0.21421 0.7858 1

passing_air_yards 0.84 0.70333 0.2967 1

passing_yards_after_catch 0.90 0.80559 0.1944 1

passing_first_downs 0.98 0.96528 0.0347 1

passing_epa 0.15 0.02385 0.9761 1

passing_cpoe 0.17 0.02995 0.9701 1

passing_2pt_conversions 0.26 0.06876 0.9312 1

pacr 0.09 0.00787 0.9921 1

pass_40_yds 0.30 0.09243 0.9076 1

pass_inc 0.89 0.79656 0.2034 1

pass_comp_pct 0.23 0.05437 0.9456 1

fumbles 0.50 0.25081 0.7492 1

two_pts 0.20 0.03959 0.9604 1

avg_time_to_throw -0.06 0.00331 0.9967 1

avg_completed_air_yards 0.11 0.01191 0.9881 1

avg_intended_air_yards -0.20 0.04121 0.9588 1

avg_air_yards_differential 0.08 0.00646 0.9935 1

aggressiveness -0.02 0.00060 0.9994 1

max_completed_air_distance 0.20 0.03905 0.9610 1

avg_air_yards_to_sticks 0.05 0.00287 0.9971 1

passer_rating 0.16 0.02508 0.9749 1

expected_completion_percentage 0.03 0.00069 0.9993 1

completion_percentage_above_expectation 0.16 0.02602 0.9740 1

avg_air_distance 0.03 0.00067 0.9993 1

max_air_distance 0.15 0.02140 0.9786 1

PC1

SS loadings 9.82

Proportion Var 0.30

Mean item complexity = 1

Test of the hypothesis that 1 component is sufficient.

The root mean square of the residuals (RMSR) is 0.16

with the empirical chi square 12494827 with prob < 0

Fit based upon off diagonal values = 0.8

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 2, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC2 h2 u2 com

completions 0.96 0.23 0.992 0.0082 1.1

attempts 0.97 -0.02 0.932 0.0675 1.0

passing_yards 0.99 0.14 1.014 -0.0137 1.0

passing_tds 0.82 0.18 0.714 0.2860 1.1

passing_interceptions 0.67 -0.23 0.491 0.5092 1.2

sacks_suffered 0.81 -0.10 0.667 0.3332 1.0

sack_yards_lost -0.79 0.10 0.627 0.3728 1.0

sack_fumbles 0.58 -0.08 0.336 0.6639 1.0

sack_fumbles_lost 0.47 -0.07 0.222 0.7779 1.0

passing_air_yards 0.87 -0.40 0.900 0.1003 1.4

passing_yards_after_catch 0.87 0.30 0.872 0.1280 1.2

passing_first_downs 0.97 0.17 0.980 0.0196 1.1

passing_epa 0.12 0.40 0.179 0.8210 1.2

passing_cpoe 0.13 0.55 0.329 0.6708 1.1

passing_2pt_conversions 0.26 -0.02 0.070 0.9300 1.0

pacr 0.04 0.66 0.444 0.5558 1.0

pass_40_yds 0.30 0.01 0.092 0.9076 1.0

pass_inc 0.92 -0.37 0.970 0.0304 1.3

pass_comp_pct 0.18 0.70 0.533 0.4671 1.1

fumbles 0.51 -0.07 0.259 0.7413 1.0

two_pts 0.20 -0.03 0.041 0.9590 1.0

avg_time_to_throw -0.03 -0.36 0.134 0.8665 1.0

avg_completed_air_yards 0.14 -0.36 0.142 0.8576 1.3

avg_intended_air_yards -0.15 -0.69 0.501 0.4985 1.1

avg_air_yards_differential 0.03 0.62 0.381 0.6192 1.0

aggressiveness 0.01 -0.46 0.216 0.7843 1.0

max_completed_air_distance 0.21 -0.11 0.054 0.9458 1.6

avg_air_yards_to_sticks 0.11 -0.71 0.515 0.4848 1.0

passer_rating 0.11 0.66 0.446 0.5544 1.1

expected_completion_percentage -0.03 0.75 0.562 0.4379 1.0

completion_percentage_above_expectation 0.12 0.51 0.281 0.7195 1.1

avg_air_distance 0.09 -0.76 0.574 0.4256 1.0

max_air_distance 0.18 -0.47 0.252 0.7481 1.3

TC1 TC2

SS loadings 9.81 5.91

Proportion Var 0.30 0.18

Cumulative Var 0.30 0.48

Proportion Explained 0.62 0.38

Cumulative Proportion 0.62 1.00

With component correlations of

TC1 TC2

TC1 1.00 0.03

TC2 0.03 1.00

Mean item complexity = 1.1

Test of the hypothesis that 2 components are sufficient.

The root mean square of the residuals (RMSR) is 0.12

with the empirical chi square 6693080 with prob < 0

Fit based upon off diagonal values = 0.89

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 3, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC2 TC3 h2 u2 com

completions 0.95 -0.16 0.19 0.993 0.0068 1.1

attempts 0.98 -0.04 -0.04 0.953 0.0468 1.0

passing_yards 0.94 0.10 0.36 1.076 -0.0764 1.3

passing_tds 0.75 0.12 0.45 0.834 0.1659 1.7

passing_interceptions 0.72 -0.02 -0.36 0.611 0.3887 1.5

sacks_suffered 0.86 -0.11 -0.25 0.775 0.2249 1.2

sack_yards_lost -0.84 0.11 0.25 0.735 0.2649 1.2

sack_fumbles 0.62 -0.11 -0.22 0.418 0.5821 1.3

sack_fumbles_lost 0.50 -0.08 -0.18 0.276 0.7245 1.3

passing_air_yards 0.84 0.46 -0.04 0.931 0.0686 1.5

passing_yards_after_catch 0.87 -0.24 0.19 0.879 0.1212 1.3

passing_first_downs 0.93 0.01 0.30 1.003 -0.0028 1.2

passing_epa -0.01 0.17 0.83 0.700 0.2998 1.1

passing_cpoe 0.03 -0.07 0.77 0.608 0.3922 1.0

passing_2pt_conversions 0.26 0.02 0.00 0.070 0.9299 1.0

pacr 0.04 -0.58 0.33 0.457 0.5428 1.6

pass_40_yds 0.22 0.30 0.40 0.307 0.6930 2.5

pass_inc 0.97 0.13 -0.39 1.058 -0.0582 1.4

pass_comp_pct 0.11 -0.32 0.71 0.637 0.3634 1.4

fumbles 0.54 -0.08 -0.18 0.312 0.6884 1.3

two_pts 0.20 0.04 0.01 0.041 0.9589 1.1

avg_time_to_throw -0.04 0.37 -0.12 0.153 0.8469 1.2

avg_completed_air_yards 0.02 0.76 0.37 0.689 0.3111 1.4

avg_intended_air_yards -0.23 0.89 0.01 0.830 0.1699 1.1

avg_air_yards_differential -0.02 -0.32 0.56 0.431 0.5686 1.6

aggressiveness 0.01 0.42 -0.21 0.226 0.7735 1.5

max_completed_air_distance 0.11 0.49 0.42 0.425 0.5754 2.1

avg_air_yards_to_sticks 0.03 0.93 0.03 0.873 0.1274 1.0

passer_rating -0.03 -0.02 0.99 0.973 0.0274 1.0

expected_completion_percentage -0.01 -0.74 0.27 0.627 0.3731 1.3

completion_percentage_above_expectation 0.00 0.03 0.83 0.690 0.3100 1.0

avg_air_distance 0.02 0.91 -0.06 0.844 0.1564 1.0

max_air_distance 0.13 0.60 0.01 0.387 0.6134 1.1

TC1 TC2 TC3

SS loadings 9.73 5.58 5.52

Proportion Var 0.29 0.17 0.17

Cumulative Var 0.29 0.46 0.63

Proportion Explained 0.47 0.27 0.26

Cumulative Proportion 0.47 0.74 1.00

With component correlations of

TC1 TC2 TC3

TC1 1.00 0.02 0.08

TC2 0.02 1.00 -0.04

TC3 0.08 -0.04 1.00

Mean item complexity = 1.3

Test of the hypothesis that 3 components are sufficient.

The root mean square of the residuals (RMSR) is 0.07

with the empirical chi square 2015026 with prob < 0

Fit based upon off diagonal values = 0.97

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 4, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC2 TC3 TC4 h2 u2

completions 0.95 -0.18 0.12 0.07 1.00 -0.0046

attempts 0.97 -0.07 -0.13 0.07 0.98 0.0204

passing_yards 0.96 0.08 0.27 0.02 1.08 -0.0819

passing_tds 0.83 0.09 0.32 -0.09 0.85 0.1510

passing_interceptions 0.65 -0.04 -0.37 0.16 0.62 0.3792

sacks_suffered 0.63 -0.06 -0.11 0.46 0.81 0.1911

sack_yards_lost -0.61 0.06 0.11 -0.46 0.77 0.2277

sack_fumbles 0.21 0.02 0.13 0.78 0.73 0.2683

sack_fumbles_lost 0.11 0.05 0.17 0.75 0.60 0.4006

passing_air_yards 0.83 0.44 -0.11 0.08 0.94 0.0627

passing_yards_after_catch 0.91 -0.28 0.09 -0.02 0.91 0.0890

passing_first_downs 0.94 -0.01 0.22 0.04 1.01 -0.0090

passing_epa 0.31 0.10 0.54 -0.56 0.79 0.2059

passing_cpoe -0.03 0.00 0.87 0.14 0.72 0.2805

passing_2pt_conversions 0.41 -0.04 -0.17 -0.25 0.16 0.8392

pacr 0.03 -0.56 0.35 0.02 0.46 0.5412

pass_40_yds 0.39 0.26 0.22 -0.28 0.34 0.6574

pass_inc 0.94 0.08 -0.47 0.09 1.10 -0.1042

pass_comp_pct 0.08 -0.27 0.76 0.07 0.68 0.3208

fumbles 0.20 0.03 0.11 0.65 0.52 0.4776

two_pts 0.34 -0.03 -0.16 -0.25 0.12 0.8770

avg_time_to_throw -0.14 0.40 -0.03 0.17 0.18 0.8153

avg_completed_air_yards 0.04 0.78 0.37 -0.01 0.71 0.2867

avg_intended_air_yards -0.24 0.91 0.04 0.03 0.86 0.1432

avg_air_yards_differential 0.00 -0.29 0.57 -0.02 0.44 0.5583

aggressiveness -0.06 0.43 -0.16 0.11 0.23 0.7653

max_completed_air_distance 0.23 0.47 0.31 -0.20 0.43 0.5719

avg_air_yards_to_sticks 0.07 0.92 -0.02 -0.07 0.87 0.1269

passer_rating 0.10 -0.01 0.91 -0.20 0.98 0.0217

expected_completion_percentage 0.07 -0.75 0.20 -0.15 0.65 0.3538

completion_percentage_above_expectation -0.04 0.10 0.92 0.10 0.80 0.2047

avg_air_distance 0.02 0.92 -0.06 0.01 0.85 0.1503

max_air_distance 0.22 0.57 -0.10 -0.15 0.40 0.5989

com

completions 1.1

attempts 1.1

passing_yards 1.2

passing_tds 1.3

passing_interceptions 1.8

sacks_suffered 1.9

sack_yards_lost 2.0

sack_fumbles 1.2

sack_fumbles_lost 1.1

passing_air_yards 1.6

passing_yards_after_catch 1.2

passing_first_downs 1.1

passing_epa 2.6

passing_cpoe 1.1

passing_2pt_conversions 2.1

pacr 1.7

pass_40_yds 3.3

pass_inc 1.5

pass_comp_pct 1.3

fumbles 1.3

two_pts 2.3

avg_time_to_throw 1.6

avg_completed_air_yards 1.4

avg_intended_air_yards 1.1

avg_air_yards_differential 1.5

aggressiveness 1.4

max_completed_air_distance 2.7

avg_air_yards_to_sticks 1.0

passer_rating 1.1

expected_completion_percentage 1.2

completion_percentage_above_expectation 1.1

avg_air_distance 1.0

max_air_distance 1.5

TC1 TC2 TC3 TC4

SS loadings 9.03 5.50 5.00 3.08

Proportion Var 0.27 0.17 0.15 0.09

Cumulative Var 0.27 0.44 0.59 0.69

Proportion Explained 0.40 0.24 0.22 0.14

Cumulative Proportion 0.40 0.64 0.86 1.00

With component correlations of

TC1 TC2 TC3 TC4

TC1 1.00 0.03 0.12 0.30

TC2 0.03 1.00 -0.06 -0.07

TC3 0.12 -0.06 1.00 -0.23

TC4 0.30 -0.07 -0.23 1.00

Mean item complexity = 1.5

Test of the hypothesis that 4 components are sufficient.

The root mean square of the residuals (RMSR) is 0.06

with the empirical chi square 1666806 with prob < 0

Fit based upon off diagonal values = 0.97

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 5, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC2 TC3 TC4 TC5 h2

completions 0.94 -0.18 0.12 0.07 0.05 1.01

attempts 0.96 -0.08 -0.13 0.07 0.04 0.99

passing_yards 0.97 0.08 0.26 0.02 0.01 1.09

passing_tds 0.82 0.09 0.32 -0.09 0.06 0.85

passing_interceptions 0.66 -0.04 -0.38 0.16 -0.01 0.63

sacks_suffered 0.62 -0.06 -0.11 0.47 0.02 0.81

sack_yards_lost -0.60 0.06 0.11 -0.47 -0.02 0.77

sack_fumbles 0.19 0.03 0.14 0.80 0.01 0.75

sack_fumbles_lost 0.09 0.06 0.18 0.77 0.01 0.62

passing_air_yards 0.82 0.43 -0.11 0.08 0.05 0.94

passing_yards_after_catch 0.91 -0.28 0.08 -0.02 0.03 0.92

passing_first_downs 0.93 -0.01 0.22 0.05 0.05 1.01

passing_epa 0.29 0.09 0.55 -0.55 0.07 0.79

passing_cpoe -0.05 0.00 0.89 0.16 0.01 0.73

passing_2pt_conversions 0.03 0.00 0.00 -0.02 0.89 0.80

pacr 0.06 -0.56 0.34 0.00 -0.06 0.46

pass_40_yds 0.45 0.24 0.19 -0.32 -0.11 0.38

pass_inc 0.94 0.08 -0.48 0.09 0.04 1.11

pass_comp_pct 0.07 -0.26 0.77 0.09 0.03 0.69

fumbles 0.18 0.03 0.12 0.67 0.02 0.54

two_pts -0.05 0.02 0.02 -0.02 0.91 0.80

avg_time_to_throw -0.17 0.41 -0.01 0.19 0.06 0.20

avg_completed_air_yards 0.06 0.77 0.36 -0.02 -0.03 0.71

avg_intended_air_yards -0.24 0.91 0.04 0.03 -0.01 0.86

avg_air_yards_differential 0.03 -0.30 0.56 -0.04 -0.08 0.44

aggressiveness -0.05 0.44 -0.16 0.11 -0.01 0.23

max_completed_air_distance 0.30 0.46 0.28 -0.24 -0.13 0.45

avg_air_yards_to_sticks 0.06 0.92 -0.01 -0.06 0.04 0.87

passer_rating 0.10 -0.01 0.92 -0.20 0.01 0.98

expected_completion_percentage 0.08 -0.76 0.20 -0.15 -0.01 0.65

completion_percentage_above_expectation -0.04 0.10 0.92 0.11 -0.01 0.80

avg_air_distance 0.01 0.92 -0.06 0.01 0.01 0.85

max_air_distance 0.25 0.56 -0.12 -0.18 -0.05 0.41

u2 com

completions -0.0072 1.1

attempts 0.0140 1.1

passing_yards -0.0881 1.2

passing_tds 0.1502 1.4

passing_interceptions 0.3705 1.8

sacks_suffered 0.1910 2.0

sack_yards_lost 0.2275 2.0

sack_fumbles 0.2525 1.2

sack_fumbles_lost 0.3811 1.1

passing_air_yards 0.0610 1.6

passing_yards_after_catch 0.0815 1.2

passing_first_downs -0.0103 1.1

passing_epa 0.2058 2.6

passing_cpoe 0.2658 1.1

passing_2pt_conversions 0.1991 1.0

pacr 0.5394 1.7

pass_40_yds 0.6218 3.0

pass_inc -0.1148 1.5

pass_comp_pct 0.3115 1.3

fumbles 0.4646 1.2

two_pts 0.1964 1.0

avg_time_to_throw 0.8025 1.9

avg_completed_air_yards 0.2865 1.4

avg_intended_air_yards 0.1408 1.1

avg_air_yards_differential 0.5570 1.6

aggressiveness 0.7651 1.4

max_completed_air_distance 0.5468 3.3

avg_air_yards_to_sticks 0.1256 1.0

passer_rating 0.0198 1.1

expected_completion_percentage 0.3517 1.2

completion_percentage_above_expectation 0.1956 1.1

avg_air_distance 0.1495 1.0

max_air_distance 0.5890 1.7

TC1 TC2 TC3 TC4 TC5

SS loadings 8.80 5.49 4.99 3.15 1.74

Proportion Var 0.27 0.17 0.15 0.10 0.05

Cumulative Var 0.27 0.43 0.58 0.68 0.73

Proportion Explained 0.36 0.23 0.21 0.13 0.07

Cumulative Proportion 0.36 0.59 0.80 0.93 1.00

With component correlations of

TC1 TC2 TC3 TC4 TC5

TC1 1.00 0.03 0.12 0.29 0.21

TC2 0.03 1.00 -0.06 -0.08 0.01

TC3 0.12 -0.06 1.00 -0.22 0.00

TC4 0.29 -0.08 -0.22 1.00 0.07

TC5 0.21 0.01 0.00 0.07 1.00

Mean item complexity = 1.5

Test of the hypothesis that 5 components are sufficient.

The root mean square of the residuals (RMSR) is 0.06

with the empirical chi square 1487215 with prob < 0

Fit based upon off diagonal values = 0.98

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 6, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC2 TC3 TC4 TC6 TC5

completions 0.95 -0.17 0.19 0.04 -0.06 0.03

attempts 0.98 -0.07 -0.06 0.05 -0.09 0.03

passing_yards 0.93 0.07 0.14 0.05 0.26 0.03

passing_tds 0.79 0.09 0.23 -0.07 0.22 0.08

passing_interceptions 0.67 -0.05 -0.31 0.15 -0.13 -0.02

sacks_suffered 0.63 -0.05 -0.05 0.45 -0.10 0.01

sack_yards_lost -0.61 0.05 0.04 -0.46 0.11 -0.01

sack_fumbles 0.17 0.03 0.08 0.82 0.07 0.02

sack_fumbles_lost 0.06 0.06 0.10 0.80 0.10 0.02

passing_air_yards 0.84 0.44 -0.01 0.05 -0.13 0.03

passing_yards_after_catch 0.90 -0.28 0.07 -0.02 0.07 0.03

passing_first_downs 0.92 -0.01 0.20 0.05 0.09 0.06

passing_epa 0.26 0.09 0.42 -0.53 0.30 0.09

passing_cpoe -0.03 0.05 0.94 0.09 -0.03 -0.01

passing_2pt_conversions 0.02 0.00 -0.04 -0.01 -0.03 0.90

pacr -0.03 -0.59 0.01 0.11 0.54 0.00

pass_40_yds 0.37 0.21 -0.12 -0.22 0.56 -0.05

pass_inc 0.95 0.07 -0.42 0.09 -0.10 0.03

pass_comp_pct 0.11 -0.21 0.93 -0.01 -0.19 -0.01

fumbles 0.16 0.03 0.05 0.69 0.09 0.03

two_pts -0.05 0.01 -0.03 0.00 -0.02 0.91

avg_time_to_throw -0.19 0.40 -0.08 0.22 0.08 0.07

avg_completed_air_yards -0.03 0.75 0.05 0.08 0.53 0.03

avg_intended_air_yards -0.24 0.91 0.06 0.02 -0.02 -0.01

avg_air_yards_differential -0.09 -0.34 0.09 0.10 0.79 0.01

aggressiveness -0.04 0.44 -0.10 0.09 -0.12 -0.02

max_completed_air_distance 0.21 0.43 -0.01 -0.15 0.52 -0.08

avg_air_yards_to_sticks 0.07 0.93 0.02 -0.08 -0.04 0.03

passer_rating 0.05 0.00 0.72 -0.17 0.40 0.04

expected_completion_percentage 0.07 -0.76 0.16 -0.15 0.08 -0.01

completion_percentage_above_expectation -0.02 0.14 0.95 0.05 0.02 -0.03

avg_air_distance 0.02 0.92 -0.01 0.00 -0.07 0.00

max_air_distance 0.28 0.57 -0.02 -0.21 -0.13 -0.07

h2 u2 com

completions 1.03 -0.0332 1.2

attempts 1.00 0.0045 1.0

passing_yards 1.10 -0.0969 1.2

passing_tds 0.85 0.1492 1.4

passing_interceptions 0.63 0.3704 1.6

sacks_suffered 0.81 0.1903 1.9

sack_yards_lost 0.77 0.2268 2.0

sack_fumbles 0.77 0.2296 1.1

sack_fumbles_lost 0.65 0.3528 1.1

passing_air_yards 0.96 0.0398 1.6

passing_yards_after_catch 0.92 0.0807 1.2

passing_first_downs 1.01 -0.0124 1.1

passing_epa 0.79 0.2055 3.2

passing_cpoe 0.84 0.1611 1.0

passing_2pt_conversions 0.81 0.1895 1.0

pacr 0.64 0.3585 2.1

pass_40_yds 0.52 0.4788 2.5

pass_inc 1.12 -0.1152 1.4

pass_comp_pct 0.89 0.1091 1.2

fumbles 0.56 0.4402 1.2

two_pts 0.81 0.1853 1.0

avg_time_to_throw 0.21 0.7851 2.3

avg_completed_air_yards 0.85 0.1486 1.9

avg_intended_air_yards 0.86 0.1395 1.1

avg_air_yards_differential 0.78 0.2214 1.5

aggressiveness 0.24 0.7628 1.4

max_completed_air_distance 0.56 0.4441 2.6

avg_air_yards_to_sticks 0.88 0.1203 1.0

passer_rating 0.98 0.0191 1.7

expected_completion_percentage 0.65 0.3516 1.2

completion_percentage_above_expectation 0.90 0.1041 1.1

avg_air_distance 0.86 0.1444 1.0

max_air_distance 0.44 0.5616 1.9

TC1 TC2 TC3 TC4 TC6 TC5

SS loadings 8.69 5.47 4.11 3.09 2.57 1.74

Proportion Var 0.26 0.17 0.12 0.09 0.08 0.05

Cumulative Var 0.26 0.43 0.55 0.65 0.73 0.78

Proportion Explained 0.34 0.21 0.16 0.12 0.10 0.07

Cumulative Proportion 0.34 0.55 0.71 0.83 0.93 1.00

With component correlations of

TC1 TC2 TC3 TC4 TC6 TC5

TC1 1.00 0.04 0.11 0.31 0.08 0.21

TC2 0.04 1.00 -0.10 -0.08 0.02 0.01

TC3 0.11 -0.10 1.00 -0.16 0.35 0.04

TC4 0.31 -0.08 -0.16 1.00 -0.13 0.06

TC6 0.08 0.02 0.35 -0.13 1.00 0.04

TC5 0.21 0.01 0.04 0.06 0.04 1.00

Mean item complexity = 1.5

Test of the hypothesis that 6 components are sufficient.

The root mean square of the residuals (RMSR) is 0.05

with the empirical chi square 1200862 with prob < 0

Fit based upon off diagonal values = 0.98

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 7, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC3 TC7 TC2 TC6 TC4

completions 0.95 0.20 -0.06 -0.15 -0.01 0.04

attempts 0.98 -0.05 -0.06 -0.05 -0.05 0.04

passing_yards 0.90 0.15 0.28 0.03 0.12 0.07

passing_tds 0.77 0.23 0.25 0.05 0.09 -0.05

passing_interceptions 0.71 -0.28 -0.18 0.10 0.00 0.09

sacks_suffered 0.64 -0.04 -0.16 0.03 0.00 0.42

sack_yards_lost -0.61 0.04 0.16 -0.02 0.00 -0.43

sack_fumbles 0.11 0.05 0.04 -0.03 0.04 0.86

sack_fumbles_lost -0.01 0.07 0.08 -0.05 0.03 0.84

passing_air_yards 0.83 0.02 0.08 0.27 -0.30 0.04

passing_yards_after_catch 0.86 0.06 0.08 -0.31 0.06 0.03

passing_first_downs 0.92 0.21 0.07 0.04 0.08 0.03

passing_epa 0.23 0.42 0.38 -0.04 0.07 -0.48

passing_cpoe -0.03 0.94 -0.03 -0.01 -0.03 0.09

passing_2pt_conversions 0.03 -0.05 -0.07 -0.01 0.00 0.00

pacr 0.02 0.00 0.07 -0.12 0.77 0.04

pass_40_yds 0.25 -0.14 0.74 -0.11 0.09 -0.08

pass_inc 0.97 -0.39 -0.07 0.14 -0.07 0.05

pass_comp_pct 0.10 0.91 -0.16 -0.28 -0.09 0.01

fumbles 0.11 0.03 0.06 -0.02 0.05 0.72

two_pts -0.05 -0.04 -0.06 -0.01 -0.01 0.01

avg_time_to_throw -0.22 -0.08 0.20 0.21 -0.14 0.25

avg_completed_air_yards -0.04 0.08 0.58 0.63 0.12 0.06

avg_intended_air_yards -0.31 0.07 0.40 0.41 -0.51 0.09

avg_air_yards_differential -0.04 0.09 0.30 0.12 0.88 0.03

aggressiveness 0.12 -0.02 -0.29 0.78 0.06 -0.11

max_completed_air_distance 0.09 -0.02 0.79 0.00 -0.04 0.00

avg_air_yards_to_sticks 0.03 0.05 0.34 0.52 -0.48 -0.05

passer_rating 0.00 0.70 0.39 -0.11 0.21 -0.11

expected_completion_percentage -0.03 0.10 0.07 -0.85 0.13 0.00

completion_percentage_above_expectation 0.03 0.98 -0.09 0.26 0.09 -0.03

avg_air_distance -0.02 0.01 0.33 0.48 -0.51 0.04

max_air_distance 0.13 -0.03 0.45 -0.13 -0.67 -0.02

TC5 h2 u2 com

completions 0.03 1.03 -0.0333 1.2

attempts 0.02 1.00 0.0029 1.0

passing_yards 0.04 1.10 -0.0977 1.3

passing_tds 0.08 0.85 0.1466 1.5

passing_interceptions -0.03 0.65 0.3516 1.5

sacks_suffered 0.00 0.81 0.1893 1.9

sack_yards_lost 0.00 0.77 0.2262 2.0

sack_fumbles 0.02 0.81 0.1948 1.1

sack_fumbles_lost 0.03 0.69 0.3052 1.0

passing_air_yards 0.03 0.97 0.0349 1.5

passing_yards_after_catch 0.03 0.93 0.0674 1.3

passing_first_downs 0.05 1.02 -0.0231 1.1

passing_epa 0.11 0.79 0.2054 3.6

passing_cpoe -0.01 0.84 0.1608 1.0

passing_2pt_conversions 0.90 0.81 0.1890 1.0

pacr -0.01 0.67 0.3267 1.1

pass_40_yds -0.02 0.58 0.4183 1.4

pass_inc 0.03 1.13 -0.1293 1.4

pass_comp_pct -0.01 0.90 0.1038 1.3

fumbles 0.03 0.58 0.4159 1.1

two_pts 0.92 0.82 0.1838 1.0

avg_time_to_throw 0.08 0.22 0.7785 5.0

avg_completed_air_yards 0.05 0.88 0.1179 2.1

avg_intended_air_yards 0.01 0.88 0.1223 3.7

avg_air_yards_differential 0.00 0.85 0.1487 1.3

aggressiveness -0.04 0.57 0.4312 1.4

max_completed_air_distance -0.04 0.63 0.3681 1.0

avg_air_yards_to_sticks 0.05 0.88 0.1203 2.8

passer_rating 0.05 0.98 0.0155 1.9

expected_completion_percentage 0.00 0.84 0.1613 1.1

completion_percentage_above_expectation -0.04 0.96 0.0364 1.2

avg_air_distance 0.03 0.86 0.1424 2.7

max_air_distance -0.03 0.64 0.3624 1.9

TC1 TC3 TC7 TC2 TC6 TC4 TC5

SS loadings 8.51 4.05 3.20 3.26 3.16 3.02 1.75

Proportion Var 0.26 0.12 0.10 0.10 0.10 0.09 0.05

Cumulative Var 0.26 0.38 0.48 0.58 0.67 0.76 0.82

Proportion Explained 0.32 0.15 0.12 0.12 0.12 0.11 0.06

Cumulative Proportion 0.32 0.47 0.58 0.71 0.82 0.94 1.00

With component correlations of

TC1 TC3 TC7 TC2 TC6 TC4 TC5

TC1 1.00 0.09 0.10 -0.02 0.02 0.37 0.21

TC3 0.09 1.00 0.27 -0.11 0.25 -0.13 0.06

TC7 0.10 0.27 1.00 0.23 -0.05 -0.11 0.09

TC2 -0.02 -0.11 0.23 1.00 -0.33 0.02 0.02

TC6 0.02 0.25 -0.05 -0.33 1.00 -0.04 -0.01

TC4 0.37 -0.13 -0.11 0.02 -0.04 1.00 0.06

TC5 0.21 0.06 0.09 0.02 -0.01 0.06 1.00

Mean item complexity = 1.7

Test of the hypothesis that 7 components are sufficient.

The root mean square of the residuals (RMSR) is 0.05

with the empirical chi square 1055346 with prob < 0

Fit based upon off diagonal values = 0.98

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 8, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC3 TC2 TC7 TC6 TC4

completions 0.92 0.17 -0.10 -0.13 -0.02 0.08

attempts 0.97 -0.02 -0.05 -0.05 -0.05 0.05

passing_yards 0.87 0.12 0.22 0.02 0.15 0.11

passing_tds 0.72 0.09 0.09 0.07 0.05 0.09

passing_interceptions 0.77 -0.06 0.04 0.03 0.08 -0.08

sacks_suffered 0.65 0.05 -0.06 0.01 0.02 0.34

sack_yards_lost -0.62 -0.06 0.06 -0.01 -0.02 -0.35

sack_fumbles 0.07 -0.03 -0.03 -0.01 -0.01 0.89

sack_fumbles_lost -0.05 -0.02 0.02 -0.02 -0.02 0.88

passing_air_yards 0.84 0.09 0.21 0.23 -0.23 0.00

passing_yards_after_catch 0.82 -0.01 -0.04 -0.28 0.03 0.12

passing_first_downs 0.89 0.16 0.00 0.06 0.07 0.09

passing_epa 0.15 0.12 0.07 0.03 -0.01 -0.21

passing_cpoe -0.02 0.98 0.10 -0.05 0.04 -0.01

passing_2pt_conversions 0.02 -0.02 -0.05 -0.02 0.01 -0.01

pacr 0.04 0.12 0.07 -0.17 0.82 -0.07

pass_40_yds 0.22 -0.20 0.63 -0.15 0.16 -0.02

pass_inc 0.98 -0.31 -0.01 0.12 -0.05 0.03

pass_comp_pct 0.09 0.88 -0.14 -0.26 -0.08 -0.01

fumbles 0.07 -0.05 -0.01 0.00 0.01 0.76

two_pts -0.05 -0.01 -0.03 -0.03 0.01 -0.01

avg_time_to_throw -0.19 0.05 0.38 0.13 -0.03 0.11

avg_completed_air_yards -0.05 0.07 0.61 0.55 0.22 0.05

avg_intended_air_yards -0.31 0.04 0.49 0.37 -0.43 0.09

avg_air_yards_differential -0.06 0.07 0.16 0.09 0.89 0.04

aggressiveness 0.10 -0.09 -0.30 0.81 -0.02 -0.04

max_completed_air_distance 0.10 0.03 0.84 -0.10 0.11 -0.06

avg_air_yards_to_sticks 0.03 0.02 0.43 0.47 -0.41 -0.03

passer_rating -0.07 0.46 0.14 -0.07 0.16 0.07

expected_completion_percentage -0.04 0.08 -0.05 -0.83 0.12 0.02

completion_percentage_above_expectation 0.02 0.92 -0.06 0.25 0.10 -0.04

avg_air_distance -0.01 0.03 0.47 0.42 -0.42 0.01

max_air_distance 0.15 0.02 0.59 -0.19 -0.54 -0.07

TC8 TC5 h2 u2 com

completions 0.11 0.03 1.03 -0.0341 1.2

attempts 0.00 0.03 1.00 0.0029 1.0

passing_yards 0.18 0.04 1.10 -0.0978 1.4

passing_tds 0.44 0.06 0.90 0.0999 1.8

passing_interceptions -0.51 0.02 0.78 0.2174 1.8

sacks_suffered -0.30 0.02 0.82 0.1759 2.0

sack_yards_lost 0.30 -0.02 0.79 0.2130 2.1

sack_fumbles -0.03 0.00 0.85 0.1469 1.0

sack_fumbles_lost -0.01 0.00 0.74 0.2579 1.0

passing_air_yards -0.12 0.05 0.98 0.0201 1.5

passing_yards_after_catch 0.24 0.02 0.95 0.0522 1.5

passing_first_downs 0.20 0.05 1.03 -0.0281 1.2

passing_epa 0.87 0.06 0.97 0.0306 1.3

passing_cpoe -0.11 0.01 0.95 0.0453 1.1

passing_2pt_conversions 0.01 0.90 0.81 0.1868 1.0

pacr -0.20 0.01 0.79 0.2144 1.3

pass_40_yds 0.32 -0.04 0.58 0.4182 2.4

pass_inc -0.16 0.04 1.13 -0.1300 1.3

pass_comp_pct 0.05 0.00 0.92 0.0785 1.3

fumbles 0.00 0.01 0.62 0.3767 1.0

two_pts 0.00 0.92 0.82 0.1802 1.0

avg_time_to_throw -0.32 0.11 0.29 0.7090 3.2

avg_completed_air_yards 0.10 0.05 0.89 0.1091 2.4

avg_intended_air_yards 0.06 0.01 0.88 0.1214 3.8

avg_air_yards_differential 0.14 0.00 0.86 0.1403 1.2

aggressiveness 0.08 -0.05 0.62 0.3752 1.4

max_completed_air_distance 0.04 -0.03 0.70 0.2955 1.1

avg_air_yards_to_sticks 0.09 0.05 0.88 0.1195 3.1

passer_rating 0.66 0.01 1.04 -0.0410 2.1

expected_completion_percentage 0.12 0.00 0.84 0.1599 1.1

completion_percentage_above_expectation 0.11 -0.03 0.98 0.0211 1.2

avg_air_distance -0.05 0.03 0.86 0.1394 3.0

max_air_distance -0.05 -0.01 0.66 0.3355 2.4

TC1 TC3 TC2 TC7 TC6 TC4 TC8 TC5

SS loadings 8.33 3.40 3.23 2.99 2.84 2.89 2.66 1.75

Proportion Var 0.25 0.10 0.10 0.09 0.09 0.09 0.08 0.05

Cumulative Var 0.25 0.36 0.45 0.54 0.63 0.72 0.80 0.85

Proportion Explained 0.30 0.12 0.12 0.11 0.10 0.10 0.09 0.06

Cumulative Proportion 0.30 0.42 0.53 0.64 0.74 0.84 0.94 1.00

With component correlations of

TC1 TC3 TC2 TC7 TC6 TC4 TC8 TC5

TC1 1.00 0.08 0.09 -0.03 0.02 0.41 0.03 0.21

TC3 0.08 1.00 0.12 -0.10 0.24 0.01 0.38 0.02

TC2 0.09 0.12 1.00 0.31 -0.14 -0.02 0.19 0.06

TC7 -0.03 -0.10 0.31 1.00 -0.28 -0.01 -0.04 0.04

TC6 0.02 0.24 -0.14 -0.28 1.00 0.03 0.18 -0.02

TC4 0.41 0.01 -0.02 -0.01 0.03 1.00 -0.10 0.11

TC8 0.03 0.38 0.19 -0.04 0.18 -0.10 1.00 0.03

TC5 0.21 0.02 0.06 0.04 -0.02 0.11 0.03 1.00

Mean item complexity = 1.7

Test of the hypothesis that 8 components are sufficient.

The root mean square of the residuals (RMSR) is 0.04

with the empirical chi square 889323.1 with prob < 0

Fit based upon off diagonal values = 0.99

Principal Components Analysis

Call: psych::principal(r = dataForPCA[pcaVars], nfactors = 9, rotate = "oblimin")

Standardized loadings (pattern matrix) based upon correlation matrix

TC1 TC3 TC4 TC2 TC6 TC8

completions 0.90 0.18 0.09 -0.03 -0.01 0.10

attempts 0.95 -0.01 0.06 -0.05 0.02 -0.01

passing_yards 0.86 0.11 0.11 0.15 0.21 0.19

passing_tds 0.73 0.06 0.06 0.05 0.01 0.50

passing_interceptions 0.79 -0.05 -0.09 0.09 0.02 -0.50

sacks_suffered 0.69 0.04 0.31 0.01 -0.14 -0.24

sack_yards_lost -0.66 -0.04 -0.32 -0.01 0.14 0.24

sack_fumbles 0.06 -0.02 0.90 -0.01 0.00 -0.04

sack_fumbles_lost -0.06 -0.01 0.89 -0.02 0.04 -0.02

passing_air_yards 0.85 0.09 -0.01 -0.21 0.17 -0.09

passing_yards_after_catch 0.81 -0.02 0.12 0.02 0.02 0.23

passing_first_downs 0.90 0.15 0.08 0.06 -0.02 0.23

passing_epa 0.14 0.10 -0.23 -0.01 0.04 0.90

passing_cpoe -0.01 0.98 -0.01 0.04 0.06 -0.10

passing_2pt_conversions 0.01 -0.01 0.00 0.01 -0.02 0.00

pacr 0.03 0.13 -0.05 0.81 0.08 -0.23

pass_40_yds 0.16 -0.19 0.02 0.19 0.68 0.26

pass_inc 0.99 -0.31 0.02 -0.05 -0.01 -0.14

pass_comp_pct 0.07 0.89 0.00 -0.09 -0.06 0.03

fumbles 0.06 -0.04 0.76 0.01 0.00 0.00

two_pts -0.06 0.00 0.00 0.01 0.00 -0.01

avg_time_to_throw -0.01 -0.06 -0.02 -0.02 -0.14 -0.07

avg_completed_air_yards 0.00 0.04 0.01 0.25 0.35 0.19

avg_intended_air_yards -0.28 0.02 0.08 -0.40 0.32 0.11

avg_air_yards_differential -0.06 0.07 0.04 0.88 0.09 0.16

aggressiveness 0.02 -0.02 0.03 -0.01 -0.06 -0.04

max_completed_air_distance 0.01 0.06 0.00 0.16 0.91 -0.06

avg_air_yards_to_sticks 0.06 0.00 -0.06 -0.38 0.26 0.16

passer_rating -0.08 0.44 0.06 0.16 0.09 0.69

expected_completion_percentage -0.06 0.06 0.02 0.10 0.03 0.08

completion_percentage_above_expectation 0.00 0.93 -0.03 0.10 -0.03 0.10

avg_air_distance 0.02 0.01 -0.01 -0.39 0.30 0.02

max_air_distance 0.07 0.05 -0.02 -0.50 0.72 -0.15

TC7 TC9 TC5 h2 u2 com

completions -0.08 -0.17 0.04 1.03 -0.0349 1.2

attempts 0.00 -0.13 0.04 1.00 0.0014 1.1

passing_yards 0.00 0.04 0.03 1.10 -0.0978 1.4

passing_tds 0.00 0.10 0.04 0.93 0.0704 1.9

passing_interceptions 0.02 0.06 0.02 0.78 0.2170 1.8

sacks_suffered -0.05 0.14 0.00 0.84 0.1638 1.9

sack_yards_lost 0.05 -0.13 0.00 0.80 0.2022 2.0

sack_fumbles 0.02 -0.02 0.00 0.86 0.1378 1.0

sack_fumbles_lost 0.01 -0.02 0.01 0.76 0.2446 1.0

passing_air_yards 0.18 0.16 0.05 0.98 0.0200 1.5

passing_yards_after_catch -0.24 -0.17 0.02 0.95 0.0507 1.5

passing_first_downs 0.02 0.01 0.04 1.03 -0.0338 1.2

passing_epa -0.03 -0.01 0.04 1.00 0.0011 1.2

passing_cpoe -0.07 0.09 0.01 0.96 0.0436 1.1

passing_2pt_conversions 0.00 -0.01 0.90 0.82 0.1802 1.0

pacr -0.14 -0.09 0.02 0.79 0.2071 1.3

pass_40_yds -0.09 -0.05 -0.02 0.61 0.3853 1.8

pass_inc 0.11 0.02 0.04 1.13 -0.1306 1.3

pass_comp_pct -0.21 -0.15 0.01 0.93 0.0744 1.2

fumbles 0.01 0.00 0.02 0.63 0.3728 1.0

two_pts 0.00 0.00 0.92 0.83 0.1720 1.0

avg_time_to_throw -0.25 0.90 0.01 0.71 0.2855 1.2

avg_completed_air_yards 0.36 0.56 0.01 0.91 0.0940 3.3

avg_intended_air_yards 0.24 0.43 -0.01 0.88 0.1184 4.6

avg_air_yards_differential 0.05 0.06 -0.01 0.86 0.1403 1.1

aggressiveness 0.95 -0.27 0.00 0.81 0.1861 1.2

max_completed_air_distance -0.01 0.00 0.00 0.83 0.1734 1.1

avg_air_yards_to_sticks 0.33 0.44 0.02 0.89 0.1103 4.0

passer_rating -0.11 0.03 0.00 1.05 -0.0520 2.0

expected_completion_percentage -0.74 -0.29 0.00 0.84 0.1574 1.4

completion_percentage_above_expectation 0.26 0.00 -0.02 0.99 0.0074 1.2

avg_air_distance 0.29 0.44 0.01 0.86 0.1353 3.6

max_air_distance -0.07 -0.08 0.02 0.77 0.2344 2.0

TC1 TC3 TC4 TC2 TC6 TC8 TC7 TC9 TC5

SS loadings 8.31 3.37 2.85 2.70 2.68 2.68 2.47 2.37 1.74

Proportion Var 0.25 0.10 0.09 0.08 0.08 0.08 0.07 0.07 0.05

Cumulative Var 0.25 0.35 0.44 0.52 0.60 0.68 0.76 0.83 0.88

Proportion Explained 0.28 0.12 0.10 0.09 0.09 0.09 0.08 0.08 0.06

Cumulative Proportion 0.28 0.40 0.50 0.59 0.68 0.77 0.86 0.94 1.00

With component correlations of

TC1 TC3 TC4 TC2 TC6 TC8 TC7 TC9 TC5

TC1 1.00 0.07 0.42 0.02 0.13 0.04 -0.02 -0.02 0.22

TC3 0.07 1.00 0.01 0.24 0.13 0.37 -0.13 -0.05 0.01

TC4 0.42 0.01 1.00 0.04 -0.04 -0.09 -0.04 0.00 0.11

TC2 0.02 0.24 0.04 1.00 -0.10 0.18 -0.25 -0.19 -0.01

TC6 0.13 0.13 -0.04 -0.10 1.00 0.23 0.23 0.28 0.05

TC8 0.04 0.37 -0.09 0.18 0.23 1.00 -0.02 0.02 0.04

TC7 -0.02 -0.13 -0.04 -0.25 0.23 -0.02 1.00 0.30 0.00

TC9 -0.02 -0.05 0.00 -0.19 0.28 0.02 0.30 1.00 0.02

TC5 0.22 0.01 0.11 -0.01 0.05 0.04 0.00 0.02 1.00

Mean item complexity = 1.7

Test of the hypothesis that 9 components are sufficient.

The root mean square of the residuals (RMSR) is 0.04

with the empirical chi square 792053.6 with prob < 0

Fit based upon off diagonal values = 0.99

Based on the component solutions, the three-component solution maps onto our three-factor solution from factor analysis. The three-component solution explains more than half of the variance in the variables. The fourth component in a four-component solution is not particularly interpretable, explains relatively little variance, and seems to be related to sacks: sacks suffered, sack yards lost, sack fumbles, and sack fumbles lost. However, two of the sack-related variables (sacks suffered and sack yards lost) load more strongly onto the first component, suggesting that these sack-related variables are better captured by another component. Moreover, sacks might be considered a scoring methodological component rather than a particular concept of interest. For these reasons, we prefer the three-component solution and choose to retain three components.
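When weighing how many components to retain, it can help to tabulate how much variance each additional component adds. The following is a minimal sketch in base R using simulated data; the object names (`toyData`, `varianceTable`) and the simulated variables are hypothetical and are not the chapter's data. It uses the eigenvalues of the correlation matrix, which describe the unrotated components, so it is only an analogue of the rotated `psych::principal()` output shown above.

```r
# Simulate a small data set with two underlying dimensions plus noise
# (hypothetical values for illustration only; not the chapter's data)
set.seed(123)
n <- 200
dimension1 <- rnorm(n)
dimension2 <- rnorm(n)

toyData <- data.frame(
  v1 = dimension1 + rnorm(n, sd = 0.5),
  v2 = dimension1 + rnorm(n, sd = 0.5),
  v3 = dimension1 + rnorm(n, sd = 0.5),
  v4 = dimension2 + rnorm(n, sd = 0.5),
  v5 = dimension2 + rnorm(n, sd = 0.5),
  v6 = rnorm(n)
)

# Eigenvalues of the correlation matrix: the variance captured by each
# unrotated principal component of the standardized variables
eigenvalues <- eigen(
  cor(toyData),
  symmetric = TRUE,
  only.values = TRUE
)$values

# Proportion and cumulative proportion of total variance by number of components
varianceTable <- data.frame(
  nComponents = seq_along(eigenvalues),
  eigenvalue = eigenvalues,
  proportionVar = eigenvalues / sum(eigenvalues),
  cumulativeVar = cumsum(eigenvalues) / sum(eigenvalues)
)

print(round(varianceTable, 2))
```

Reading down the `cumulativeVar` column shows where adding another component stops contributing much additional variance, which is one input (alongside interpretability) into the choice of how many components to keep.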

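Here are the variables that had a standardized component loading of 0.4 or greater in absolute value on each component (variables with negative loadings are noted as "reversed" in the descriptions that follow):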

Code

component1vars <- c(

"completions","attempts","passing_yards","passing_tds","passing_interceptions",

"sacks_suffered","sack_yards_lost","sack_fumbles","sack_fumbles_lost",

"passing_air_yards","passing_yards_after_catch","passing_first_downs",

#"passing_epa","passing_cpoe","passing_2pt_conversions","pacr","pass_40_yds",

"pass_inc","fumbles")#,"two_pts","pass_comp_pct",

#"avg_time_to_throw","avg_completed_air_yards","avg_intended_air_yards",

#"avg_air_yards_differential","aggressiveness","max_completed_air_distance",

#"avg_air_yards_to_sticks","passer_rating", #,"completion_percentage"

#"expected_completion_percentage","completion_percentage_above_expectation",

#"avg_air_distance","max_air_distance")

component2vars <- c(

#"completions","attempts","passing_yards","passing_tds","passing_interceptions",

#"sacks_suffered","sack_yards_lost","sack_fumbles","sack_fumbles_lost",

"passing_air_yards",#"passing_yards_after_catch","passing_first_downs",

"pacr",#"passing_epa","passing_cpoe","passing_2pt_conversions","pass_40_yds",

#"pass_inc","pass_comp_pct","fumbles","two_pts",

"avg_completed_air_yards","avg_intended_air_yards",#"avg_time_to_throw",

"aggressiveness","max_completed_air_distance",#"avg_air_yards_differential",

"avg_air_yards_to_sticks",#"passer_rating", #,"completion_percentage"

"expected_completion_percentage",#"completion_percentage_above_expectation",

"avg_air_distance","max_air_distance")

component3vars <- c(

"passing_tds",#"completions","attempts","passing_yards","passing_interceptions",

#"sacks_suffered","sack_yards_lost","sack_fumbles","sack_fumbles_lost",

#"passing_air_yards","passing_yards_after_catch","passing_first_downs",

"passing_epa","passing_cpoe","pass_40_yds",#"passing_2pt_conversions","pacr",

"pass_comp_pct",#"fumbles","two_pts","pass_inc",

"avg_air_yards_differential","max_completed_air_distance",

"avg_time_to_throw","avg_completed_air_yards","avg_intended_air_yards",

#"aggressiveness",

"passer_rating",#"avg_air_yards_to_sticks", #,"completion_percentage"

"completion_percentage_above_expectation")#,"expected_completion_percentage",

#"avg_air_distance","max_air_distance")

The variables that loaded most strongly onto component 1 appear to reflect Quarterback usage: completions, incompletions, passing attempts, passing yards, passing touchdowns, interceptions thrown, fumbles, times sacked, sack yards lost (“reversed”—i.e., negatively associated with the component), sack fumbles, sack fumbles lost, passing air yards (total horizontal distance the ball travels on all pass attempts), passing yards after the catch, and first downs gained by passing. Quarterbacks who tend to throw more tend to have higher levels on those variables. Thus, we label component 1 as “Usage”, which reflects total Quarterback involvement, regardless of efficiency or outcome.

The variables that loaded most strongly onto component 2 appear to reflect Quarterback aggressiveness: passing air yards, passing air conversion ratio (reversed; ratio of passing yards to passing air yards), average air yards on completed passes, average air yards on all attempted passes, aggressiveness (percentage of passing attempts thrown into tight windows, where there is a defender within one yard or less of the receiver at the time of the completion or incompletion), expected completion percentage (reversed; based on air distance, receiver separation, Quarterback/Wide Receiver movement, pass location, whether there was pressure on the Quarterback when throwing, the throw angle and trajectory, receiver and defender positioning at the catch point, and defensive coverage scheme), average amount of air yards ahead of or behind the first down marker on passing attempts, average air distance (the true three-dimensional distance the ball travels in the air), maximum air distance, and maximum air distance on completed passes. Quarterbacks who throw the ball farther and into tighter windows tend to have higher values on those variables. Thus, we label component 2 as “Aggressiveness”, which reflects throwing longer, more difficult passes with a tight window.

The variables that loaded most strongly onto component 3 appear to reflect Quarterback performance: passing touchdowns, passing expected points added, passing completion percentage above expectation, passes completed of 40 yards or more, pass completion percentage, air yards differential (completed air yards \(-\) intended air yards; more negative values indicate that he is on average attempting deeper passes than he completes), maximum completed air distance, average time to throw, average completed air yards, average intended air yards, and passer rating. Quarterbacks who perform better tend to have higher values on those variables. Thus, we label component 3 as “Performance”.
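The variable lists above were typed out by hand from the loadings. As a minimal sketch of how the same selection could be done programmatically, the following base R code picks, for each component, the variables whose absolute loading meets the threshold. The loading matrix, variable names, and object names (`toyLoadings`, `salientVars`) are made up for illustration and are not the chapter's results.

```r
# Hypothetical pattern matrix of standardized loadings
# (made-up values for illustration; not the chapter's results)
toyLoadings <- matrix(
  c( 0.92,  0.05,  0.10,
     0.88, -0.02,  0.15,
    -0.62,  0.06, -0.08,
     0.10,  0.81, -0.05,
     0.03, -0.83,  0.12,
     0.09,  0.45,  0.61,
     0.02,  0.10,  0.95),
  ncol = 3,
  byrow = TRUE,
  dimnames = list(
    c("var_a", "var_b", "var_c", "var_d", "var_e", "var_f", "var_g"),
    c("TC1", "TC2", "TC3")
  )
)

# Threshold on the absolute loading, so that "reversed" (negative) loadings count
loadingThreshold <- 0.4

# For each component, keep the variable names whose |loading| meets the threshold
salientVars <- lapply(
  colnames(toyLoadings),
  function(component) {
    rownames(toyLoadings)[abs(toyLoadings[, component]) >= loadingThreshold]
  }
)
names(salientVars) <- colnames(toyLoadings)

print(salientVars)
```

A variable can appear under more than one component when it cross-loads (here, the hypothetical `var_f`), which mirrors how several variables appear in more than one of the lists above. With a fitted `psych::principal()` object, the loadings are held in its `loadings` element and would likely need to be converted to a plain matrix (for example with `unclass()`) before being indexed this way.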

Below are component scores from the PCA for the first six players:

TC1 TC2 TC3

[1,] 5.622429 0.1201789 -1.3394571

[2,] 4.008880 1.9561016 0.8404175

[3,] 8.419386 -0.4013563 -2.8549298

[4,] 4.188369 1.0737128 1.7687052

[5,] 5.802752 1.3808326 -0.5749930

[6,] 6.157540 -0.3573887 0.3901416

Here are the players and weeks that showed the highest levels of Quarterback “Usage”:

Code

Here are the players and weeks that showed the lowest levels of Quarterback “Usage”:

Code

Here are the players and weeks that showed the highest levels of Quarterback “Aggressiveness”:

Code

Here are the players and weeks that showed the lowest levels of Quarterback “Aggressiveness”:

Code

Here are the players and weeks that showed the highest levels of Quarterback “Performance”:

Code

Here are the players and weeks that showed the lowest levels of Quarterback “Performance”:
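Rankings like these can be obtained by sorting the rows on a component score. The following is a minimal sketch in base R; the data frame, its column names, the player labels, the score values, and the helper `rankByComponent()` are all hypothetical and are not the chapter's data or code.

```r
# Hypothetical player-week component scores
# (made-up values for illustration; not real results)
toyScores <- data.frame(
  player = c("Player A", "Player B", "Player C", "Player D", "Player E"),
  week = c(1, 1, 2, 2, 3),
  usage = c(5.6, 4.0, 8.4, -1.2, 0.3),
  aggressiveness = c(0.1, 2.0, -0.4, 1.1, -1.5),
  performance = c(-1.3, 0.8, -2.9, 1.8, 0.4)
)

# Hypothetical helper: return the n rows with the highest (or lowest)
# scores on the named component
rankByComponent <- function(data, component, n = 3, highest = TRUE) {
  rowOrder <- order(data[[component]], decreasing = highest)
  head(data[rowOrder, c("player", "week", component)], n)
}

# Two player-weeks with the highest "usage" scores
highestUsage <- rankByComponent(toyScores, "usage", n = 2, highest = TRUE)

# Two player-weeks with the lowest "performance" scores
lowestPerformance <- rankByComponent(toyScores, "performance", n = 2, highest = FALSE)

print(highestUsage)
print(lowestPerformance)
```

The same pattern would apply to each component in turn by changing the `component` argument and the `highest` flag.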

23.6 Conclusion

Principal component analysis (PCA) is a technique used for data reduction: reducing a large set of variables down to a smaller set of components that capture most of the variance in the larger set. As with factor analysis, there are many decisions to make in PCA, such as how many components to retain and how to rotate them. These decisions can have important impacts on the resulting solution. Thus, it can be helpful for theory and interpretability to guide decision-making when conducting PCA. There are several differences between factor analysis and PCA. Unlike factor analysis, which estimates the latent factors as the common variance among the variables that load onto that factor and discards the remaining variance as “error”, PCA uses all variance of variables and assumes variables have no error. Thus, PCA does not account for measurement error. Using PCA, we were able to identify three components that accounted for considerable variance in the variables we examined, pertaining to Quarterbacks: 1) usage; 2) aggressiveness; 3) performance. We were then able to determine which players were highest and lowest on each of these components.

23.7 Session Info

R version 4.5.2 (2025-10-31)

Platform: x86_64-pc-linux-gnu

Running under: Ubuntu 24.04.3 LTS

Matrix products: default

BLAS: /usr/lib/x86_64-linux-gnu/openblas-pthread/libblas.so.3

LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.26.so; LAPACK version 3.12.0

locale:

[1] LC_CTYPE=C.UTF-8 LC_NUMERIC=C LC_TIME=C.UTF-8

[4] LC_COLLATE=C.UTF-8 LC_MONETARY=C.UTF-8 LC_MESSAGES=C.UTF-8

[7] LC_PAPER=C.UTF-8 LC_NAME=C LC_ADDRESS=C

[10] LC_TELEPHONE=C LC_MEASUREMENT=C.UTF-8 LC_IDENTIFICATION=C

time zone: UTC

tzcode source: system (glibc)

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] lubridate_1.9.5 forcats_1.0.1 stringr_1.6.0 dplyr_1.2.0

[5] purrr_1.2.1 readr_2.1.6 tidyr_1.3.2 tibble_3.3.1

[9] ggplot2_4.0.2 tidyverse_2.0.0 nFactors_2.4.1.2 psych_2.6.1

loaded via a namespace (and not attached):

[1] gtable_0.3.6 jsonlite_2.0.0 compiler_4.5.2

[4] tidyselect_1.2.1 parallel_4.5.2 scales_1.4.0

[7] yaml_2.3.12 fastmap_1.2.0 lattice_0.22-7

[10] R6_2.6.1 generics_0.1.4 knitr_1.51

[13] htmlwidgets_1.6.4 tzdb_0.5.0 pillar_1.11.1

[16] RColorBrewer_1.1-3 rlang_1.1.7 stringi_1.8.7

[19] xfun_0.56 S7_0.2.1 otel_0.2.0

[22] timechange_0.4.0 cli_3.6.5 withr_3.0.2

[25] magrittr_2.0.4 digest_0.6.39 grid_4.5.2

[28] hms_1.1.4 lifecycle_1.0.5 nlme_3.1-168

[31] vctrs_0.7.1 mnormt_2.1.2 evaluate_1.0.5

[34] glue_1.8.0 GPArotation_2025.3-1 farver_2.1.2

[37] rmarkdown_2.30 tools_4.5.2 pkgconfig_2.0.3

[40] htmltools_0.5.9